Lecture 7 Logistic Regression

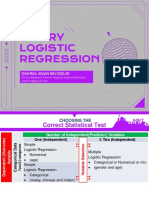

The document compares linear regression and logistic regression. It highlights that linear regression is suited to continuous outcomes, while logistic regression is appropriate for binary outcomes. It explains the logistic function and how to interpret the model coefficients, and it gives examples of modeling binary outcomes with logistic regression. It also discusses variable selection methods for multiple regression, including forward selection, backward elimination, and stepwise selection.
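The summary mentions the logistic function and how to interpret coefficients. The Python below is a minimal sketch and is not taken from the lecture itself. It assumes a single predictor `x` and made-up coefficients `b0 = -3.0` and `b1 = 0.5`, and the helper names (`logistic`, `predicted_probability`, `odds`) are hypothetical. It shows how the logistic function maps the linear predictor `b0 + b1*x` to a probability, and why `exp(b1)` is read as the odds ratio for a one-unit increase in `x`.

```python
import math


def logistic(z: float) -> float:
    """Standard logistic (sigmoid) function, mapping any real z into (0, 1).

    Written in two branches so math.exp never receives a large positive
    argument, which avoids overflow for very negative or very positive z.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predicted_probability(b0: float, b1: float, x: float) -> float:
    """P(Y = 1 | x) for a simple logistic regression with log-odds b0 + b1*x."""
    return logistic(b0 + b1 * x)


def odds(p: float) -> float:
    """Convert a probability p in (0, 1) to odds p / (1 - p)."""
    return p / (1.0 - p)


# Hypothetical coefficients, chosen only for illustration
b0, b1 = -3.0, 0.5

p_at_4 = predicted_probability(b0, b1, 4.0)
p_at_5 = predicted_probability(b0, b1, 5.0)

# A one-unit increase in x multiplies the odds by exp(b1): the odds ratio
odds_ratio = odds(p_at_5) / odds(p_at_4)

print(f"P(Y=1 | x=4) = {p_at_4:.4f}")
print(f"P(Y=1 | x=5) = {p_at_5:.4f}")
print(f"odds ratio = {odds_ratio:.4f}, exp(b1) = {math.exp(b1):.4f}")
```

Because the log-odds are linear in `x`, the ratio of the odds at `x + 1` to the odds at `x` equals `exp(b1)` whatever the starting value of `x`. The probabilities themselves do not change by a constant amount, which is why coefficients are usually interpreted on the odds scale.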

Uploaded by NURASYIKIN shamsuddin

Copyright © All Rights Reserved

