NLP Class X AI (36 pages)

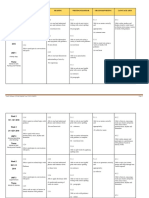

The document provides an overview of Natural Language Processing (NLP), a sub-field of AI focused on enabling computers to understand human languages. It discusses various applications of NLP, including automatic summarization, sentiment analysis, text classification, and virtual assistants like chatbots. Additionally, it addresses challenges in processing natural language and outlines data processing techniques such as text normalization, stemming, lemmatization, and the Bag of Words algorithm.
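The text normalization and Bag of Words steps named above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the document's own method. The stopword list, the regular expression used for normalization, and the helper names `normalize` and `bag_of_words` are hypothetical. Stemming and lemmatization are deliberately left out.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list for illustration only;
# real NLP toolkits ship much longer lists.
STOPWORDS = {"a", "an", "and", "the", "is", "are", "to", "of", "in", "on"}


def normalize(text: str) -> list[str]:
    """Text normalization sketch: convert to lower case, replace anything
    that is not a letter or whitespace with a space, tokenize on
    whitespace, and drop stopwords."""
    text = text.lower()
    text = re.sub(r"[^a-z\s]", " ", text)  # removes punctuation and digits
    return [tok for tok in text.split() if tok not in STOPWORDS]


def bag_of_words(documents: list[str]) -> tuple[list[str], list[list[int]]]:
    """Build a sorted vocabulary (dictionary) over all documents, then
    return one count vector per document. Word order is discarded;
    only how often each vocabulary word occurs is kept."""
    token_lists = [normalize(doc) for doc in documents]
    vocabulary = sorted({tok for tokens in token_lists for tok in tokens})
    vectors = []
    for tokens in token_lists:
        counts = Counter(tokens)  # missing words count as 0
        vectors.append([counts[word] for word in vocabulary])
    return vocabulary, vectors


if __name__ == "__main__":
    docs = [
        "The cat sat on the mat.",
        "The dog sat on 2 logs!",
        "A cat and a dog.",
    ]
    vocab, vecs = bag_of_words(docs)
    print(vocab)  # ['cat', 'dog', 'logs', 'mat', 'sat']
    for vec in vecs:
        print(vec)  # [1, 0, 0, 1, 1] then [0, 1, 1, 0, 1] then [1, 1, 0, 0, 0]
```

Note that "logs" stays a separate vocabulary entry here. A stemming or lemmatization step, which this sketch omits, would reduce it to "log" so that different forms of the same word share one column.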

Uploaded by swastiksambhu10

Copyright © All Rights Reserved