Product Availability Update

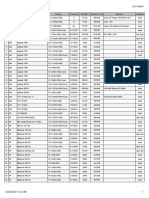

Product C1060 M1060 S1070-400 Inventory 200 units 500 units 50 units Leadtime for big orders 8 weeks 8 weeks 10 weeks Notes Build to order Build to order Build to order

S1070-500

M2050 S2050 C2050 M2070 C2070 M2070-Q

25 units+ 75 being built

Shipping now Building 20K for Q2 Shipping now Building 200 for Q2 Sept 2010

10 weeks

8 weeks 8 weeks

Build to order

Sold out through mid-July Sold out through mid-July

Processamento Paralelo em 2000 units 8 weeks Will maintain inventory GPUs na Arquitetura Fermi

Arnaldo Tavares

Get PO in now to get priority

1

Sept-Oct 2010 - for LatinGet PO in now to get priority Tesla Sales Manager America Oct 2010 -

Quadro or Tesla?

Computer Aided Design

e.g. CATIA, SolidWorks, Siemens NX

Numerical Analytics

e.g. MATLAB, Mathematica

3D Modeling / Animation

e.g. 3ds, Maya, Softimage

Computational Biology

e.g. AMBER, NAMD, VMD

Video Editing / FX

e.g. Adobe CS5, Avid

Computer Aided Engineering

e.g. ANSYS, SIMULIA/ABAQUS

GPU Computing

CPU + GPU Co-Processing

4 cores

CPU

48 GigaFlops (DP)

GPU

515 GigaFlops (DP)

(Average efficiency in Linpack: 50%)

146X: Medical Imaging, U of Utah
36X: Molecular Dynamics, U of Illinois, Urbana
18X: Video Transcoding, Elemental Tech
50X: Matlab Computing, AccelerEyes
100X: Astrophysics, RIKEN
149X: Financial simulation, Oxford
47X: Linear Algebra, Universidad Jaime
20X: 3D Ultrasound, Techniscan
130X: Quantum Chemistry, U of Illinois, Urbana
30X: Gene Sequencing, U of Maryland

Increasing Number of Professional CUDA Apps

Available Now

CUDA C/C++

Tools

Future

Parallel Nsight Vis Studio IDE; ParaTools VampirTrace; MAGMA (LAPACK); StoneRidge RTM; MATLAB; PGI CUDA x86; TotalView Debugger

PGI Accelerators; CAPS HMPP; EMPhotonics CULAPACK; OpenGeoSolutions OpenSEIS; VSG Open Inventor; NAMD; LAMMPS; MUMmerGPU; GPU-HMMR; Autodesk Moldflow

Platform LSF Cluster Mgr; Bright Cluster Manager; Thrust C++ Template Lib; GeoStar Seismic Suite; Seismic City RTM; HOOMD; VMD; CUDA-MEME; CUDA-EC; Prometech Particleworks

TauCUDA Perf Tools; Allinea DDT Debugger; NVIDIA NPP Perf Primitives; Acceleware RTM Solver; Tsunami RTM; TeraChem; GAMESS; PIPER Docking

PGI CUDA Fortran; CUDA FFT; CUDA BLAS; Headwave Suite; ffA SVI Pro; AMBER

AccelerEyes Jacket MATLAB; Wolfram Mathematica; NVIDIA Video Libraries; RNG & SPARSE CUDA Libraries; Paradigm RTM; Paradigm SKUA; Panorama Tech

Libraries

Oil & Gas

BigDFT; ABINIT; CP2K

Acellera ACEMD

DL-POLY

Bio-Chemistry

GROMACS; CUDA-BLASTP; CUDA SW++ SmithWaterm; ACUSIM AcuSolve 1.8

Announced

OpenEye ROCS

BioInformatics

HEX Protein Docking

Remcom XFdtd 7.0; ANSYS Mechanical; LSTC LS-DYNA 971; Metacomp CFD++; FluiDyna OpenFOAM; MSC.Software Marc 2010.2

CAE

Available

Increasing Number of Professional CUDA Apps

Available Now

Adobe Premiere Pro CS5; MainConcept CUDA Encoder; Bunkspeed Shot (iray); ARRI Various Apps; GenArts Sapphire; Fraunhofer JPEG2000; Random Control Arion; TDVision TDVCodec; Cinnafilm Pixel Strings; ILM Plume; Black Magic Da Vinci; Assimilate SCRATCH; Autodesk 3ds Max; Cebas finalRender; Chaos Group V-Ray GPU; Works Zebra Zeany; The Foundry Kronos

Future

Video

Elemental Video

Refractive SW Octane

Rendering

mental images iray (OEM)

NAG RNG

Finance

NVIDIA OptiX (SDK)

Numerix Risk; Hanweck Options Analy; CST Microwave; SPEAG SEMCAD X

Caustic Graphics

SciComp SciFinance; Murex MACS; Agilent ADS SPICE; Gauda OPC

Weta Digital PantaRay

RMS Risk Mgt Solutions

Lightworks Artisan

Aquimin AlphaVision; Agilent EMPro 2010; Synopsys TCAD

EDA

Acceleware FDTD Solver; Acceleware EM Solution

Rocketick Verilog Sim

Siemens 4D Ultrasound

Other

Digisens Medical

Manifold GIS

Schrodinger Core Hopping

Useful Progress Med

MVTec Machine Vis

MotionDSP Ikena Video

Announced

Dalsa Machine Vision; Digital Anarchy Photo

Available

3 of Top5 Supercomputers

[Chart: Gigaflops and Megawatts for Tianhe-1A, Jaguar, Nebulae, Tsubame, Hopper II, and Tera 100]


What if Every Supercomputer Had Fermi?

[Chart: Linpack Teraflops of the Top 500 Supercomputers (Nov 2009)]

450 GPUs, 110 TeraFlops, $2.2 M: Top 50
225 GPUs, 55 TeraFlops, $1.1 M: Top 100
150 GPUs, 37 TeraFlops, $740K: Top 150

Hybrid ExaScale Trajectory

2008: 1 TFLOP, 7.5 KWatts
2010: 1.27 PFLOPS, 2.55 MWatts
2017*: 2 EFLOPS, 10 MWatts

* This is a projection based on Moore's law and does not represent a committed roadmap.

Tesla Roadmap

The March of the GPUs

[Chart, first panel: Peak Double Precision FP (GFlops/sec), 2007 to 2012. Series: NVIDIA GPU (T10, T20, T20A) and x86 CPU (Nehalem 3 GHz, Westmere 3 GHz, 8-core Sandy Bridge 3 GHz)]

[Chart, second panel: Peak Memory Bandwidth (GBytes/sec), 2007 to 2012. Series: NVIDIA GPU with ECC off (T10, T20, T20A) and x86 CPU (Nehalem 3 GHz, Westmere 3 GHz, 8-core Sandy Bridge 3 GHz)]

Project Denver

Expected Tesla Roadmap with Project Denver

Workstation / Data Center Solutions

Workstations: up to 4x Tesla C2050/70 GPUs
OEM CPU Server + Tesla S2050/70: 4 Tesla GPUs in 2U
Integrated CPU-GPU Server: 2x Tesla M2050/70 GPUs in 1U

Tesla C-Series Workstation GPUs

Specification | Tesla C2050 | Tesla C2070
Processors | Tesla 20-series GPU | Tesla 20-series GPU
Number of Cores | 448 | 448
Caches | 64 KB L1 cache + Shared Memory / 32 cores; 768 KB L2 cache | 64 KB L1 cache + Shared Memory / 32 cores; 768 KB L2 cache
Floating Point Peak Performance | 1030 Gigaflops (single), 515 Gigaflops (double) | 1030 Gigaflops (single), 515 Gigaflops (double)
GPU Memory | 3 GB (2.625 GB with ECC on) | 6 GB (5.25 GB with ECC on)
Memory Bandwidth | 144 GB/s (GDDR5) | 144 GB/s (GDDR5)
System I/O | PCIe x16 Gen2 | PCIe x16 Gen2
Power | 238 W (max) | 238 W (max)
Available | Shipping Now | Shipping Now

How is the GPU Used?

Basic Component: Streaming Multiprocessor (SM)

SIMD: Single Instruction, Multiple Data
Same instruction for all cores, but each core can operate on different data
SIMD at the SM level, MIMD at the GPU chip level

Source: Presentation from Felipe A. Cruz, Nagasaki University

The Use of GPUs and Bottleneck Analysis

Source: Presentation from Takayuki Aoki, Tokyo Institute of Technology

The Fermi Architecture

3 billion transistors
16 x Streaming Multiprocessors (SMs)
6 x 64-bit Memory Partitions = 384-bit Memory Interface
Host Interface: connects the GPU to the CPU via PCI-Express
GigaThread global scheduler: distributes thread blocks to SM thread schedulers

SM Architecture

32 CUDA cores per SM (512 total)
16 x Load/Store Units: source and destination addresses calculated for 16 threads per clock
4 x Special Function Units (sine, cosine, square root, etc.)
64 KB of RAM for shared memory and L1 cache (configurable)
Dual Warp Scheduler

[Diagram: SM with Instruction Cache, 2 x Scheduler, 2 x Dispatch, Register File, 32 x Core, 16 x Load/Store Units, 4 x Special Func Units, Interconnect Network, 64K Configurable Cache/Shared Mem, Uniform Cache]

Dual Warp Scheduler

1 Warp = 32 parallel threads
2 Warps issued and executed concurrently
Each Warp goes to 16 CUDA Cores
Most instructions can be dual issued (exception: Double Precision instructions)
Dual-Issue Model allows near peak hardware performance

CUDA Core Architecture

New IEEE 754-2008 floating-point standard, surpassing even the most advanced CPUs
Newly designed integer ALU optimized for 64-bit and extended precision operations
Fused multiply-add (FMA) instruction for both 32-bit single and 64-bit double precision

[Diagram: CUDA Core with Dispatch Port, Operand Collector, FP Unit, INT Unit, and Result Queue, shown inside the SM]

Fused Multiply-Add Instruction (FMA)

GigaThread™ Hardware Thread Scheduler (HTS)

Hierarchically manages thousands of simultaneously active threads
10x faster application context switching (each program receives a time slice of processing resources)
Concurrent kernel execution

GigaThread Hardware Thread Scheduler

Concurrent Kernel Execution + Faster Context Switch

[Diagram: Kernels 1 to 5 over time, Serial Kernel Execution versus Parallel Kernel Execution]

GigaThread Streaming Data Transfer (SDT) Engine

Dual DMA engines
Simultaneous CPU→GPU and GPU→CPU data transfer
Fully overlapped with CPU and GPU processing time

[Diagram: Activity Snapshot showing the CPU, SDT0, GPU, and SDT1 stages of Kernels 0 to 3 overlapping in time]

Cached Memory Hierarchy

First GPU architecture to support a true cache hierarchy in combination with on-chip shared memory

Shared/L1 Cache per SM (64KB): improves bandwidth and reduces latency
Unified L2 Cache (768 KB): fast, coherent data sharing across all cores in the GPU
Global Memory (up to 6GB)

CUDA: Compute Unified Device Architecture

NVIDIA's Parallel Computing Architecture
Software Development Platform aimed at the GPU Architecture

Device-level APIs
Applications Using DirectX: HLSL; DirectX 11 Compute
Applications Using OpenCL: OpenCL C; OpenCL Driver
Applications Using the CUDA Driver API: C for CUDA; CUDA Driver

Language Integration
Applications Using C, C++, Fortran, Java, Python, ...: C for CUDA; C Runtime for CUDA

Lower layers
PTX (ISA)
CUDA Support in Kernel Level Driver
CUDA Parallel Compute Engines inside GPU

Thread Hierarchy

Kernels (simple C programs) are executed by threads
Threads are grouped into Blocks
Threads in a Block can synchronize execution
Blocks are grouped in a Grid
Blocks are independent (they must be able to execute in any order)

Source: Presentation from Felipe A. Cruz, Nagasaki University

Memory and Hardware Hierarchy

Threads access Registers
CUDA Cores execute Threads
Threads within a Block can share data/results via Shared Memory
Streaming Multiprocessors (SMs) execute Blocks
Grids use Global Memory for result sharing (after kernel-wide global synchronization)
GPU executes Grids

Source: Presentation from Felipe A. Cruz, Nagasaki University

Full View of the Hierarchy Model

CUDA | Hardware Level | Memory Access
Thread | CUDA Core | Registers
Block | SM | Shared Memory
Grid | GPU | Global Memory
Device | Node | Host Memory

IDs and Dimensions

Threads: 3D IDs, unique within a block
Blocks: 2D IDs, unique within a grid
Dimensions are set at launch time and can be unique for each grid
Built-in variables: threadIdx, blockIdx, blockDim, gridDim

[Diagram: Device running Grid 1 with Blocks (0, 0) to (2, 1); Block (1, 1) expanded into Threads (0, 0) to (4, 2)]

Compiling C for CUDA Applications

void serial_function( ) {
    ...
}
void other_function(int ... ) {
    ...
}
void saxpy_serial(float ... ) {
    for (int i = 0; i < n; ++i)
        y[i] = a*x[i] + y[i];
}
void main( ) {
    float x;
    saxpy_serial(..);
    ...
}

Key kernels: modify into parallel CUDA code, then compile with NVCC (Open64) into CUDA object files
Rest of C application: compile with the CPU compiler into CPU object files
Linker: combines CUDA object files and CPU object files into a CPU-GPU executable

C for CUDA: C with a few keywords

Standard C Code

void saxpy_serial(int n, float a, float *x, float *y)
{
    for (int i = 0; i < n; ++i)
        y[i] = a*x[i] + y[i];
}
// Invoke serial SAXPY kernel
saxpy_serial(n, 2.0, x, y);

Parallel C Code

__global__ void saxpy_parallel(int n, float a, float *x, float *y)
{
    int i = blockIdx.x*blockDim.x + threadIdx.x;
    if (i < n) y[i] = a*x[i] + y[i];
}
// Invoke parallel SAXPY kernel with 256 threads/block
int nblocks = (n + 255) / 256;
saxpy_parallel<<<nblocks, 256>>>(n, 2.0, x, y);

Software Programming

Source: Presentation from Andreas Klöckner, NYU


CUDA C/C++ Leadership

2007
CUDA Toolkit 1.0 (July 07): C Compiler; C Extensions; Single Precision; BLAS; FFT; SDK 40 examples
CUDA Toolkit 1.1 (Nov 07): Win XP 64; Atomics support; Multi-GPU support

2008
April 08: CUDA Visual Profiler 2.2; cuda-gdb HW Debugger
CUDA Toolkit 2.0 (Aug 08): Double Precision; Compiler Optimizations; Vista 32/64; Mac OSX; 3D Textures; HW Interpolation

2009
CUDA Toolkit 2.3 (July 09): DP FFT; 16-32 Conversion intrinsics; Performance enhancements
Parallel Nsight Beta (Nov 09)

2010
CUDA Toolkit 3.0 (Mar 10): C++ inheritance; Fermi arch support; Tools updates; Driver / RT interop

Why should I choose Tesla over consumer cards?

Features

Feature: 4x higher double precision (on 20-series)
Benefit: Higher performance for scientific CUDA applications

Feature: ECC only on Tesla & Quadro (on 20-series)
Benefit: Data reliability inside the GPU and on DRAM memories

Feature: Bi-directional PCI-E communication (Tesla has Dual DMA Engines, GeForce has only 1 DMA Engine)
Benefit: Higher performance for CUDA applications (by overlapping communication & computation)

Feature: Larger memory for larger data sets, 3GB and 6GB products
Benefit: Higher performance on a wide range of applications (medical, oil & gas, manufacturing, FEA, CAE)

Feature: Cluster management software tools available on Tesla only
Benefit: Needed for GPU monitoring and job scheduling in data center deployments

Feature: TCC (Tesla Compute Cluster) driver supported for Windows OS only on Tesla
Benefit: Higher performance for CUDA applications due to lower kernel launch overhead. TCC adds support for RDP and Services

Feature: Integrated OEM workstations and servers
Benefit: Trusted, reliable systems built for Tesla products

Feature: Professional ISVs will certify CUDA applications only on Tesla
Benefit: Bug reproduction, support, feature requests for Tesla only

Quality & Warranty

Feature: 2 to 4 day stress testing & memory burn-in for reliability. Added margin in memory and core clocks for added reliability
Benefit: Built for 24/7 computing in data center and workstation environments

Feature: Manufactured & guaranteed by NVIDIA
Benefit: No changes in key components like GPU and memory without notice. Always the same clocks for known, reliable performance

Feature: 3-year warranty from HP
Benefit: Reliable, long life products

Support & Lifecycle

Feature: Enterprise support, higher priority for CUDA bugs and requests
Benefit: Ability to influence CUDA and GPU roadmap. Get early access to feature requests

Feature: 18-24 months availability + 6-month EOL notice
Benefit: Reliable product supply