0% found this document useful (0 votes)

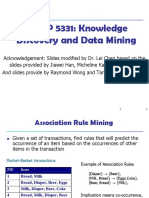

Data Mining Chapter 4 Association Analysis

A note on data mining for the IOE exam, TU KEC

KANTIPUR ENGINEERING COLLEGE, DHAPAKHEL

Binod Wosti

Uploaded by akafle99

Copyright © All Rights Reserved
