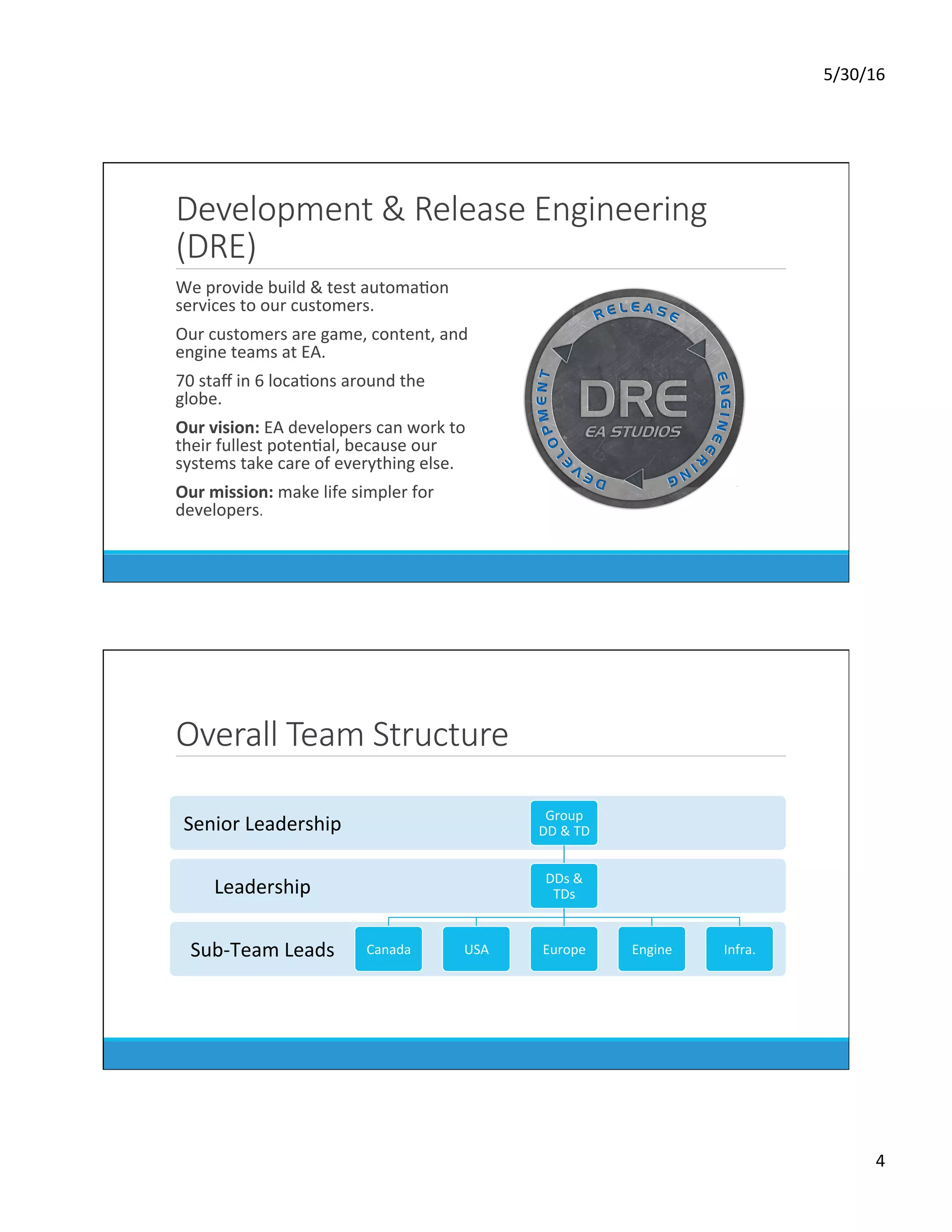

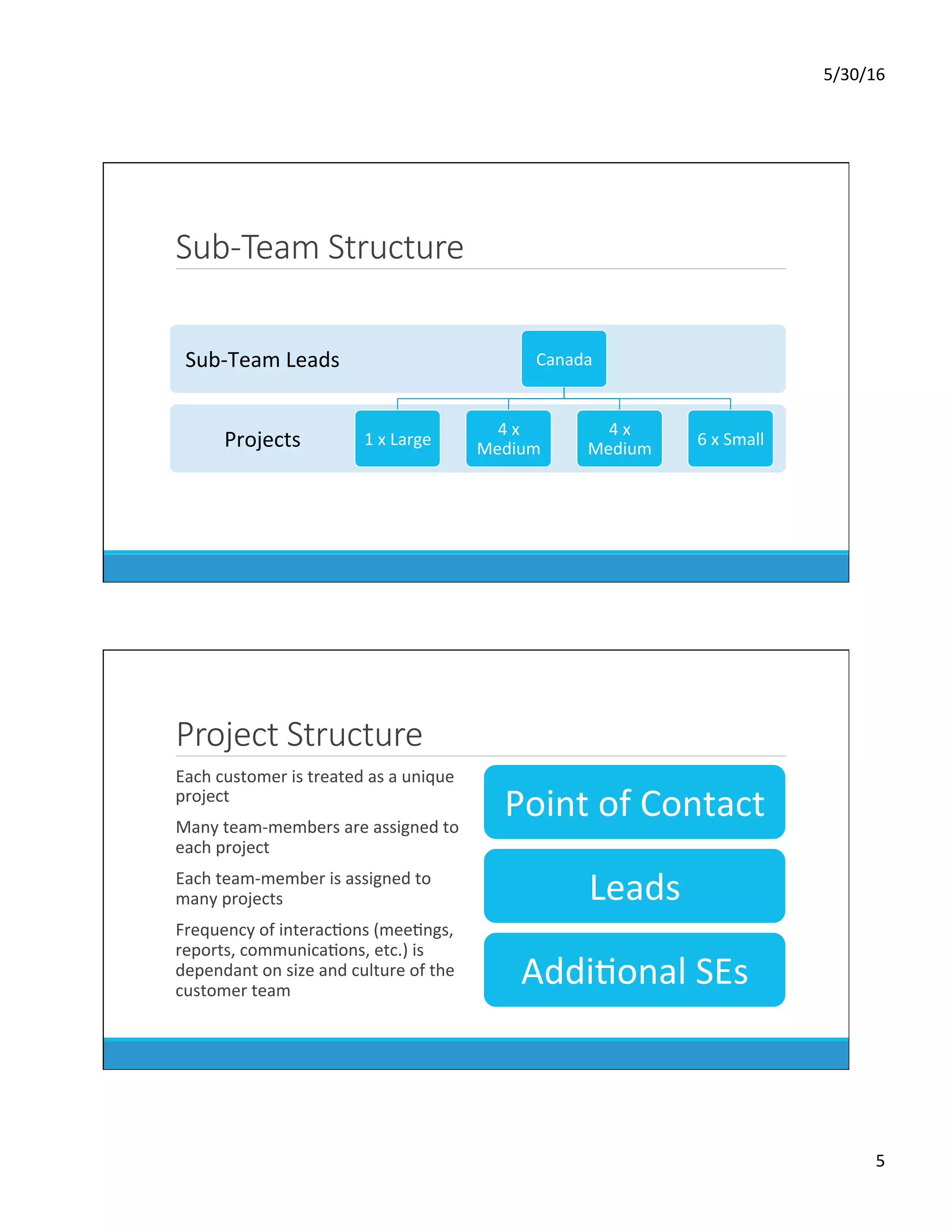

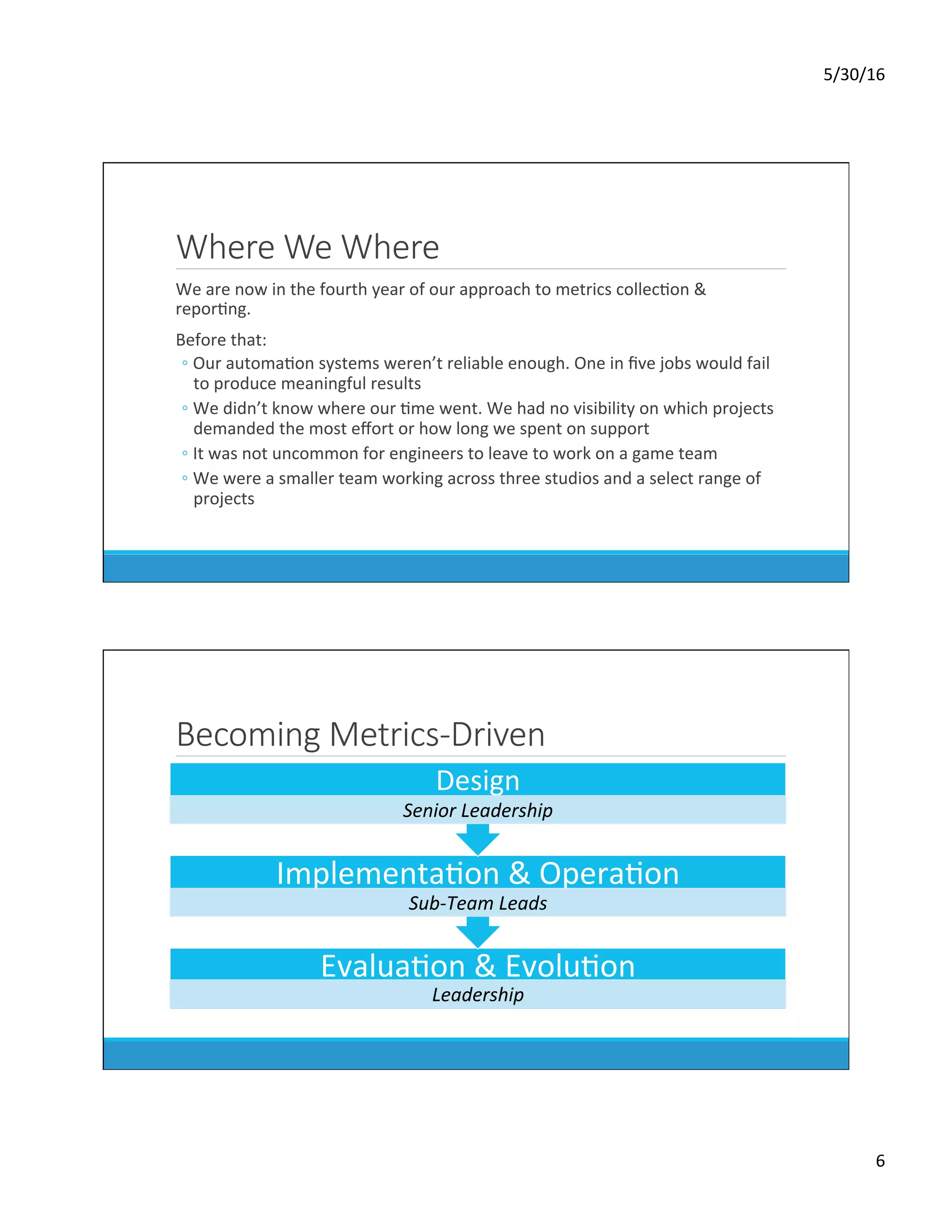

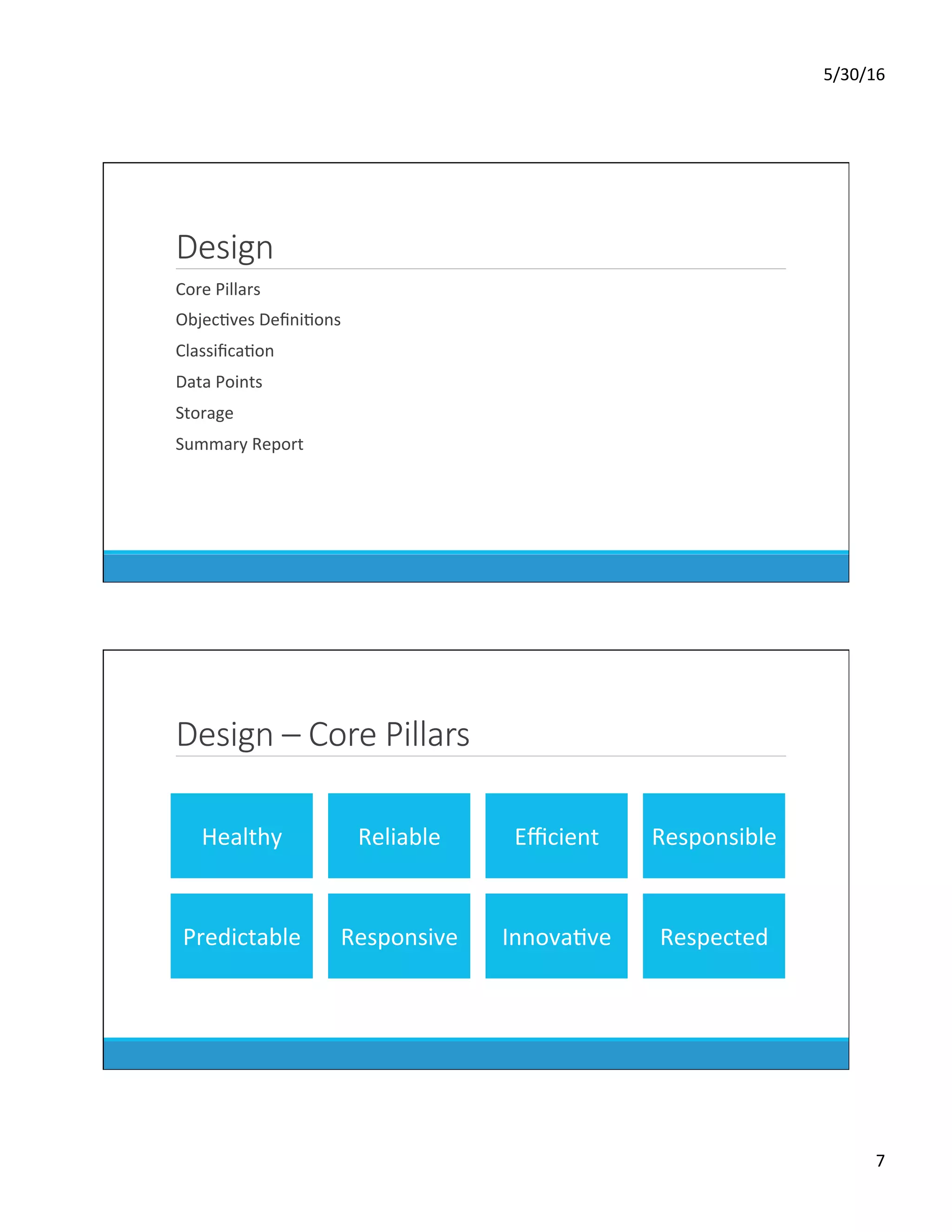

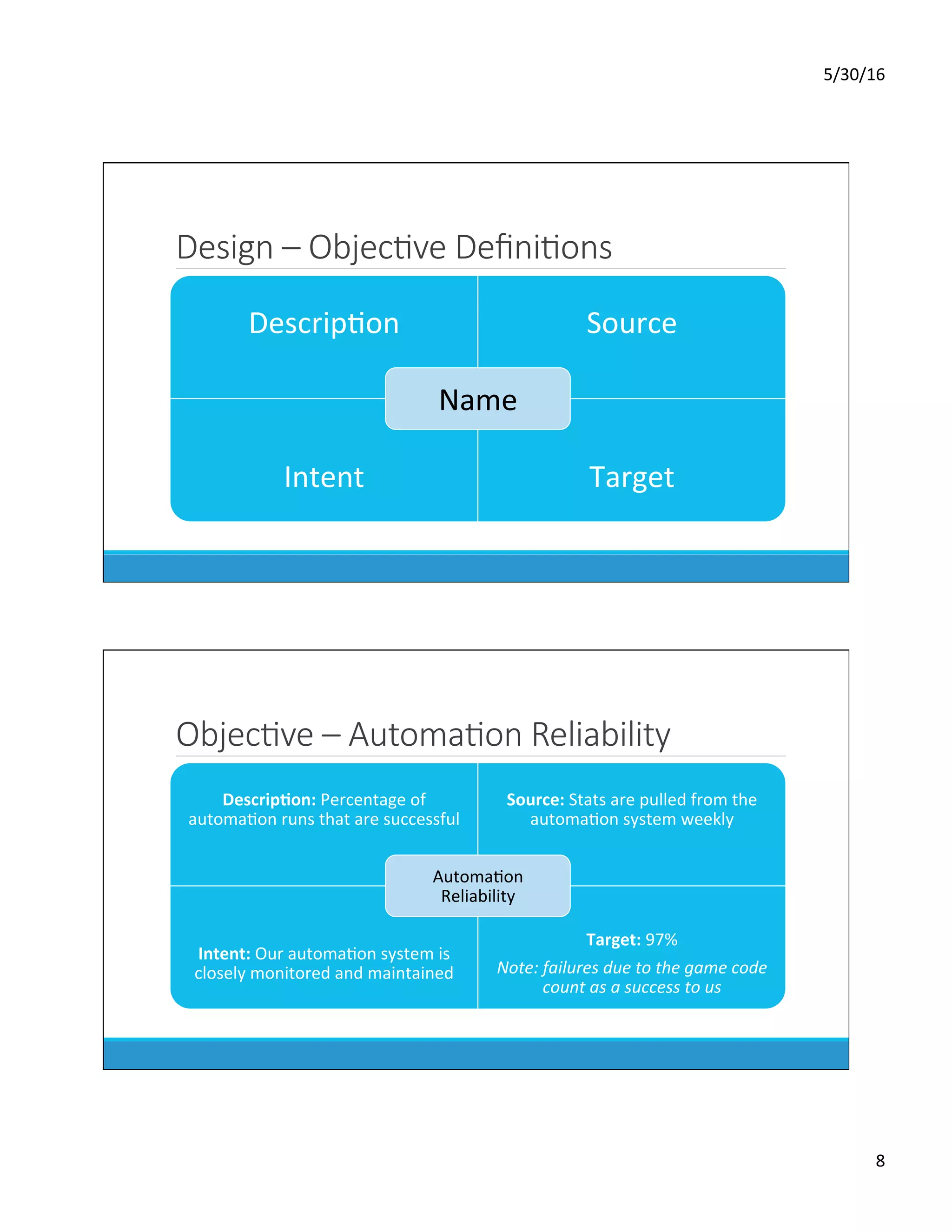

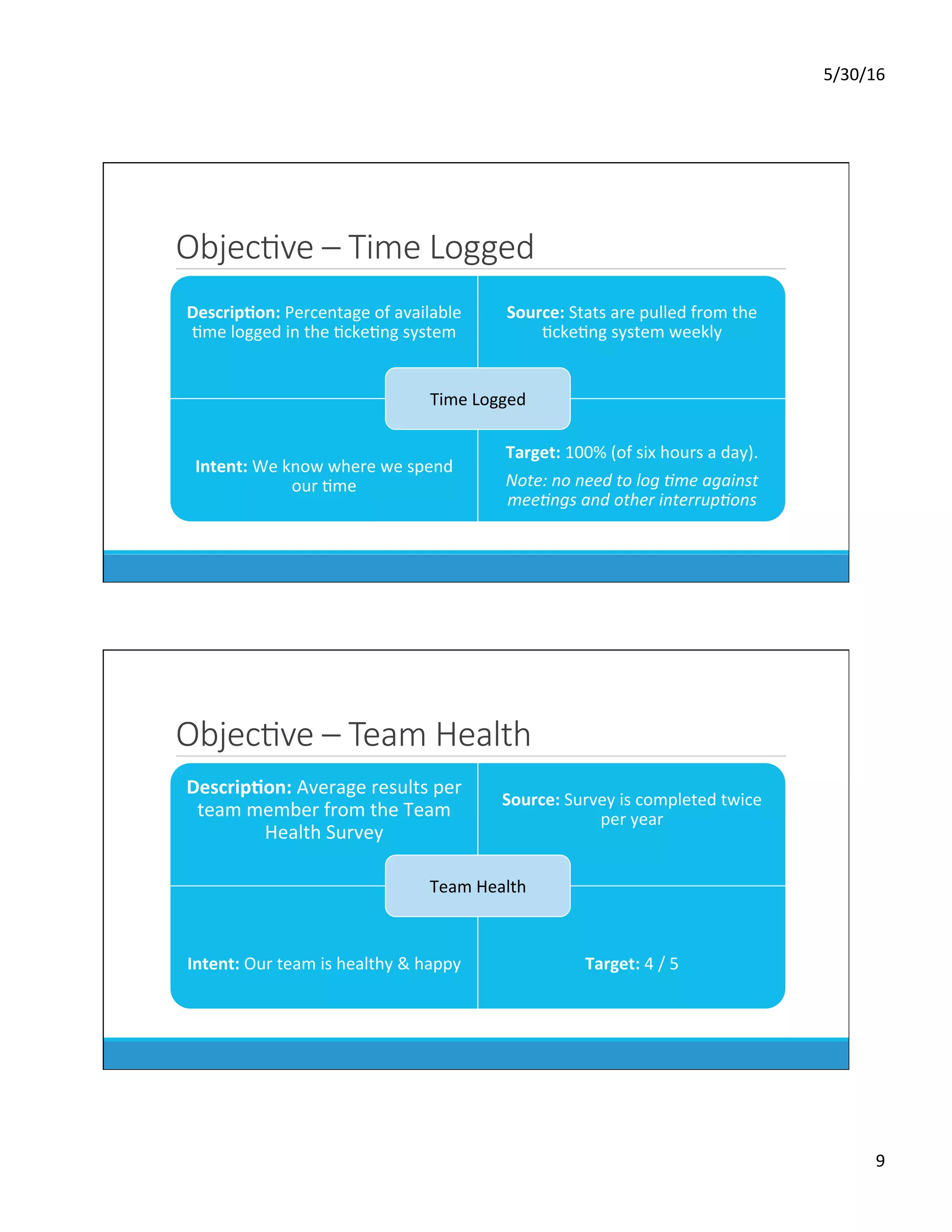

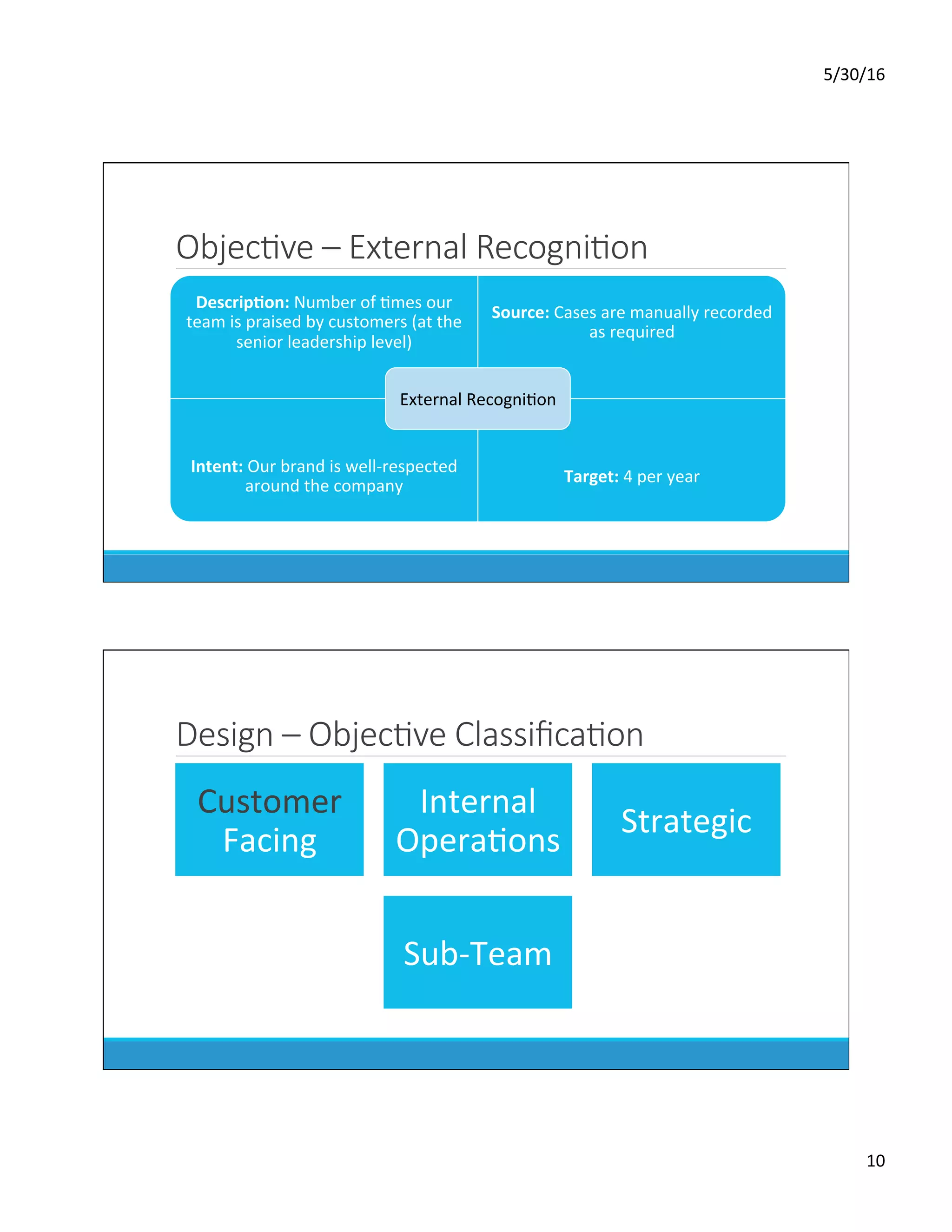

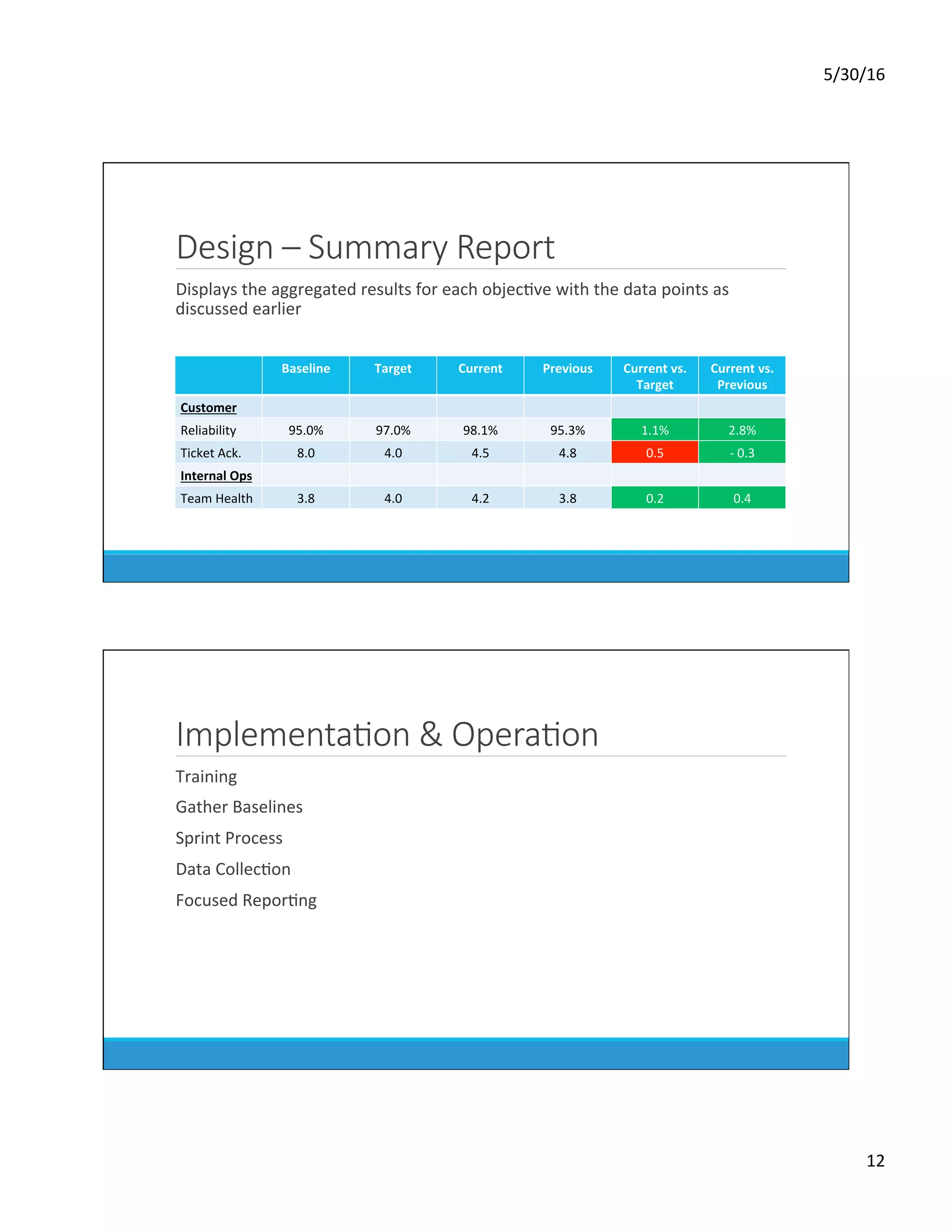

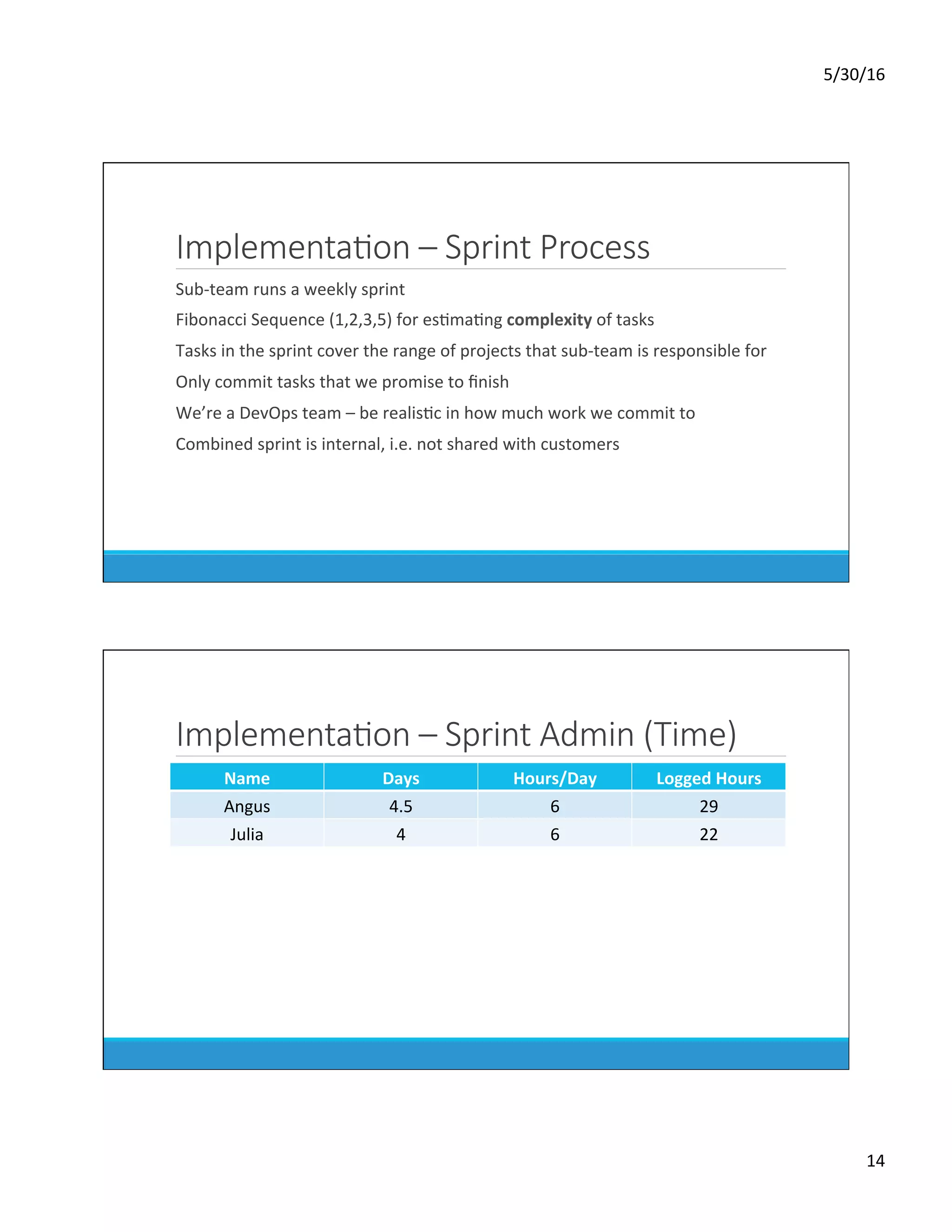

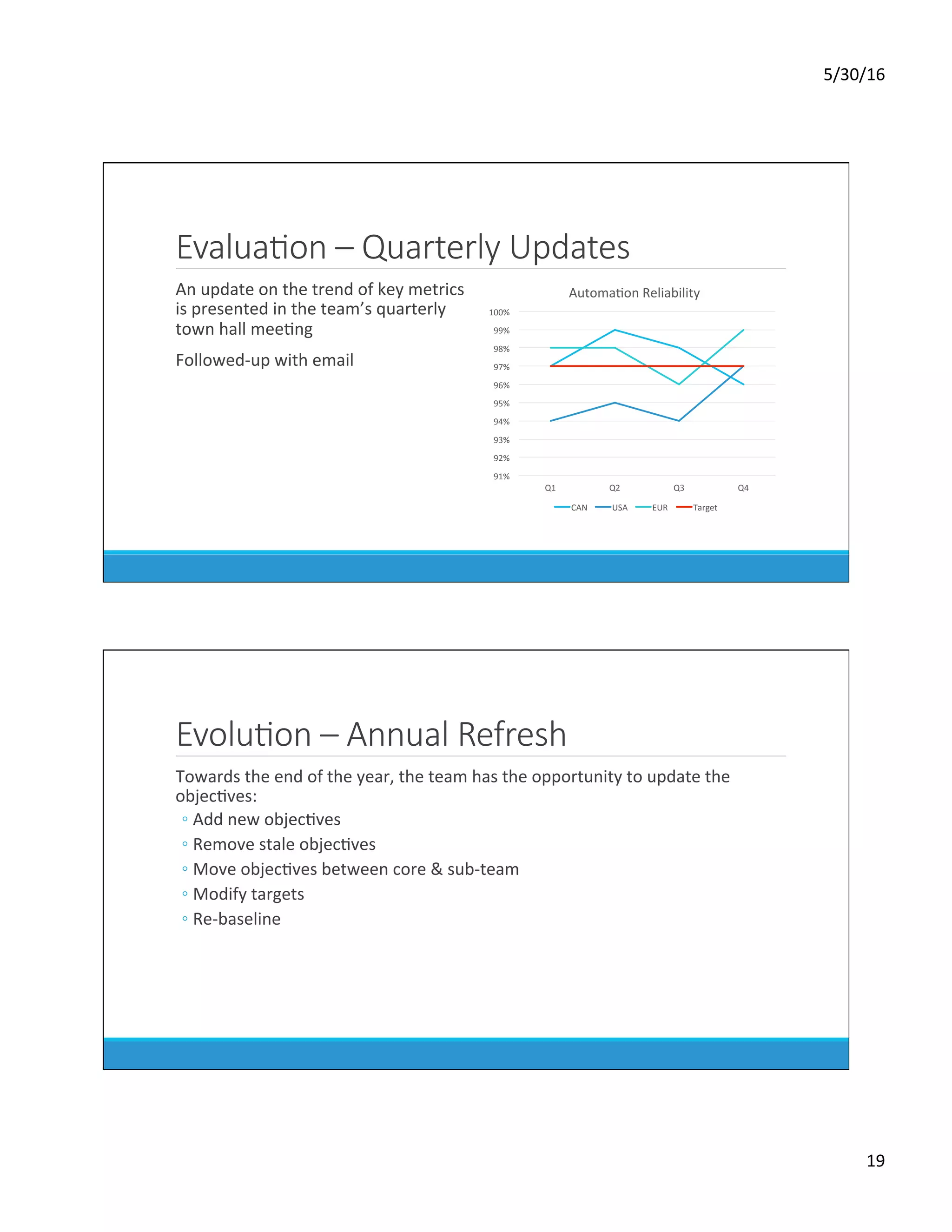

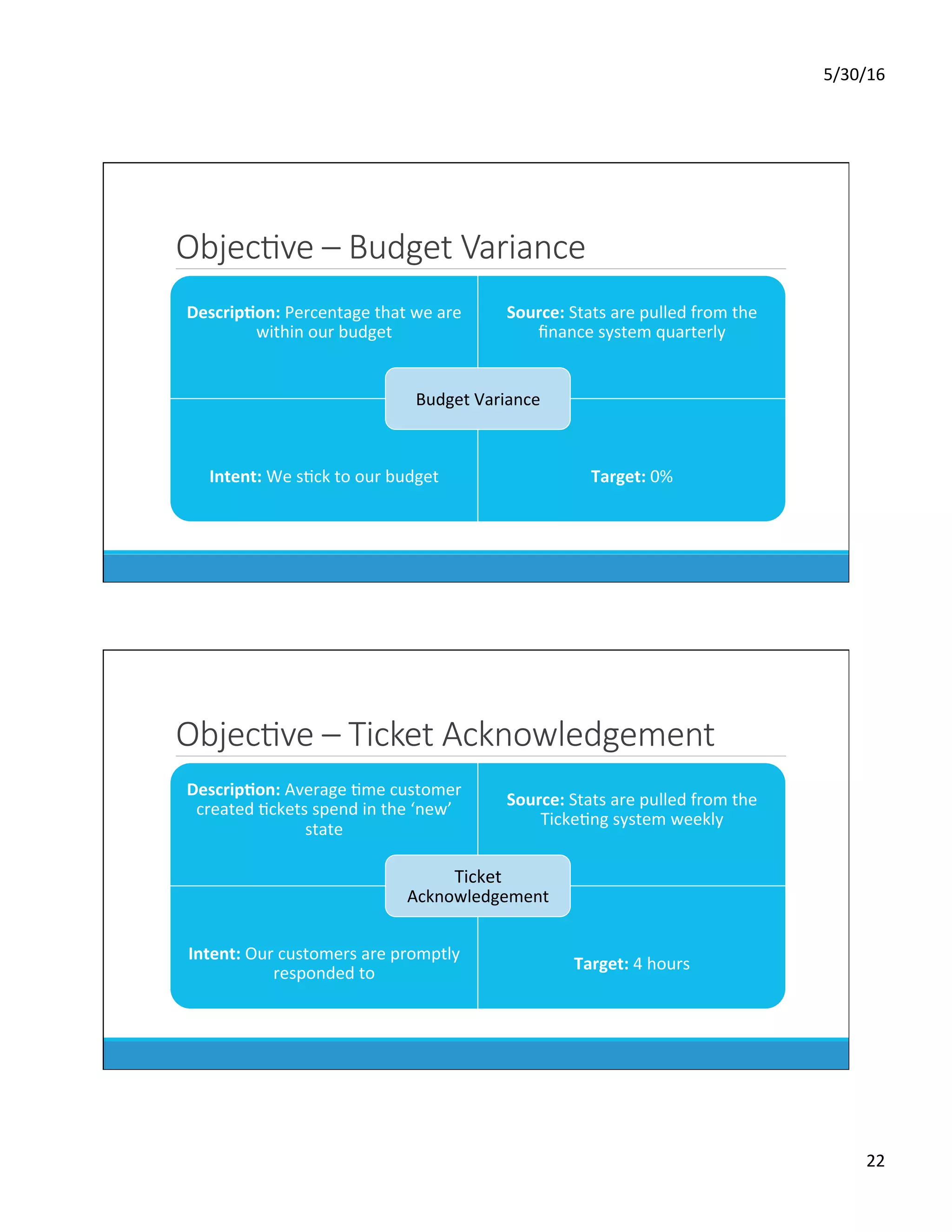

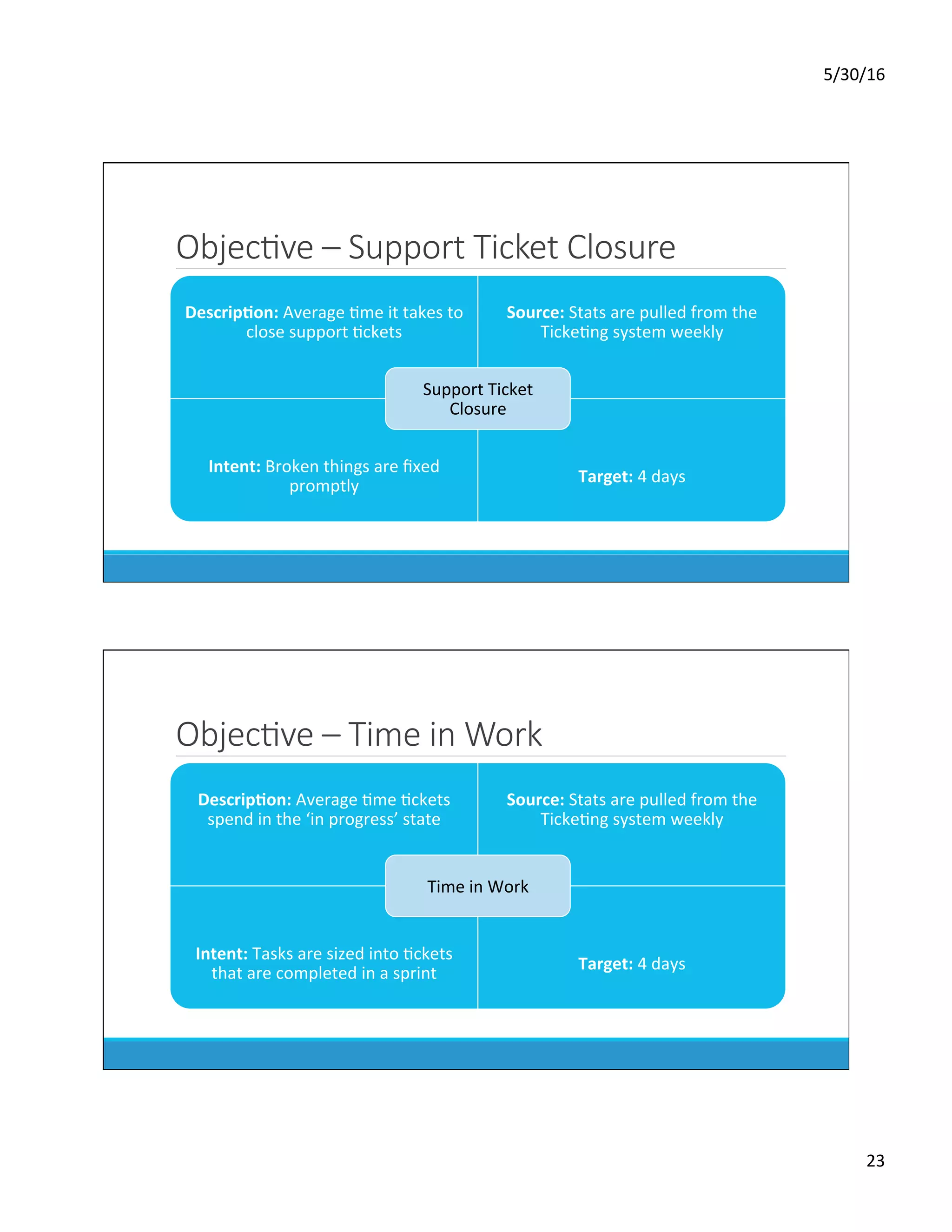

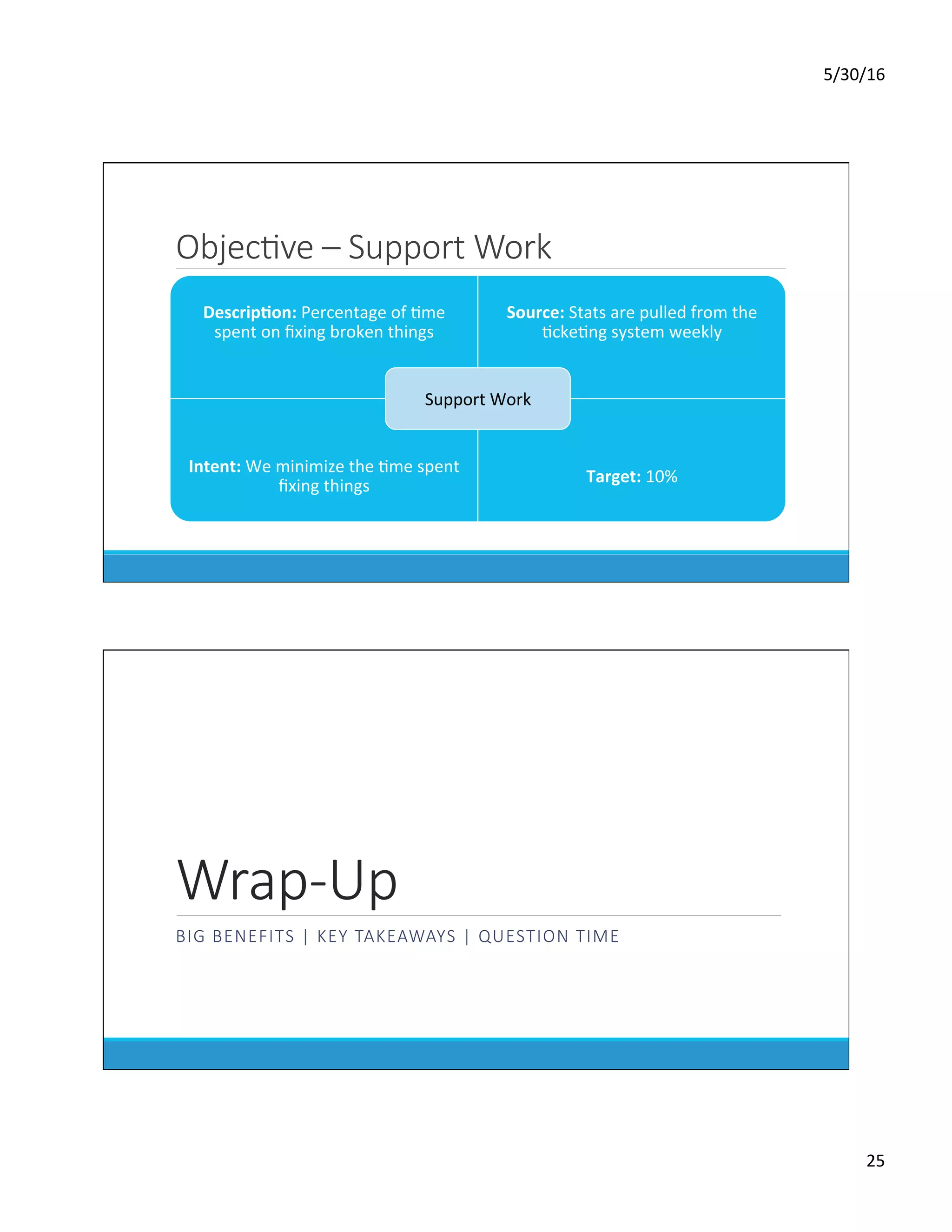

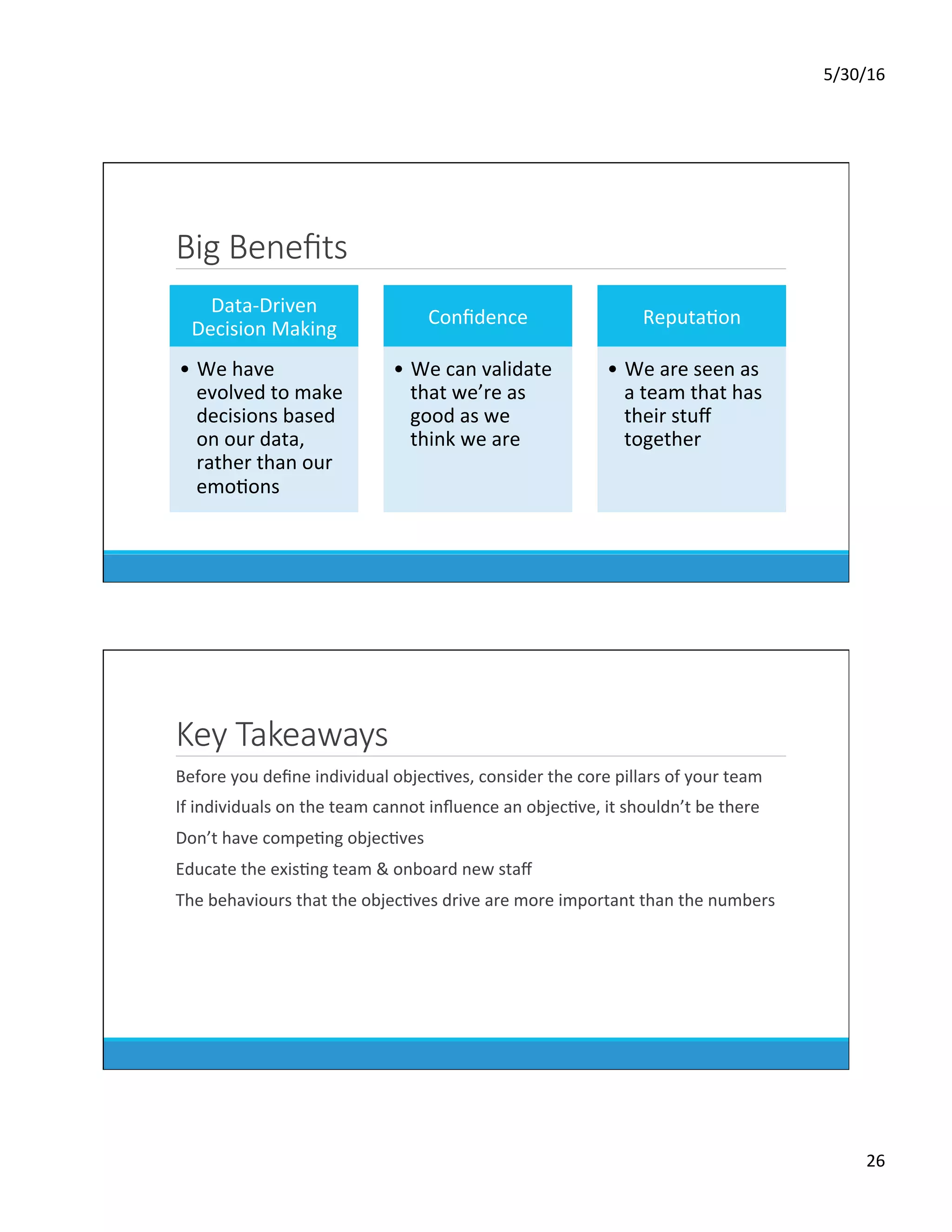

The document presents a case study by Ben Vining of Electronic Arts on using metrics-driven DevOps to improve a team's performance, reputation, and happiness. It describes the move from unreliable automation systems to a data-driven approach and outlines objectives such as automation reliability, time logged, and team health. The key takeaways are to define objectives with input from team members and to prioritize desired behaviors over purely numerical targets.