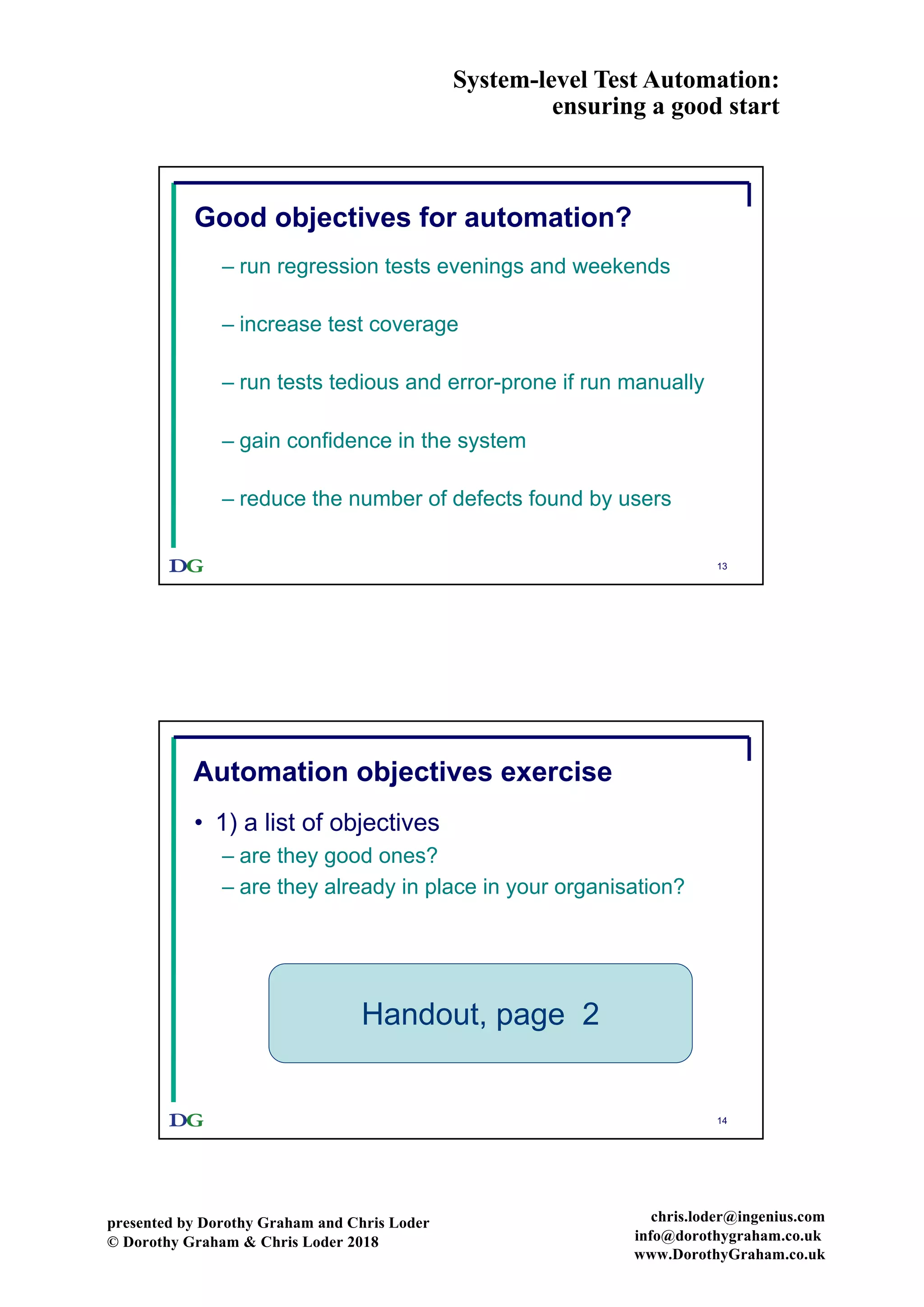

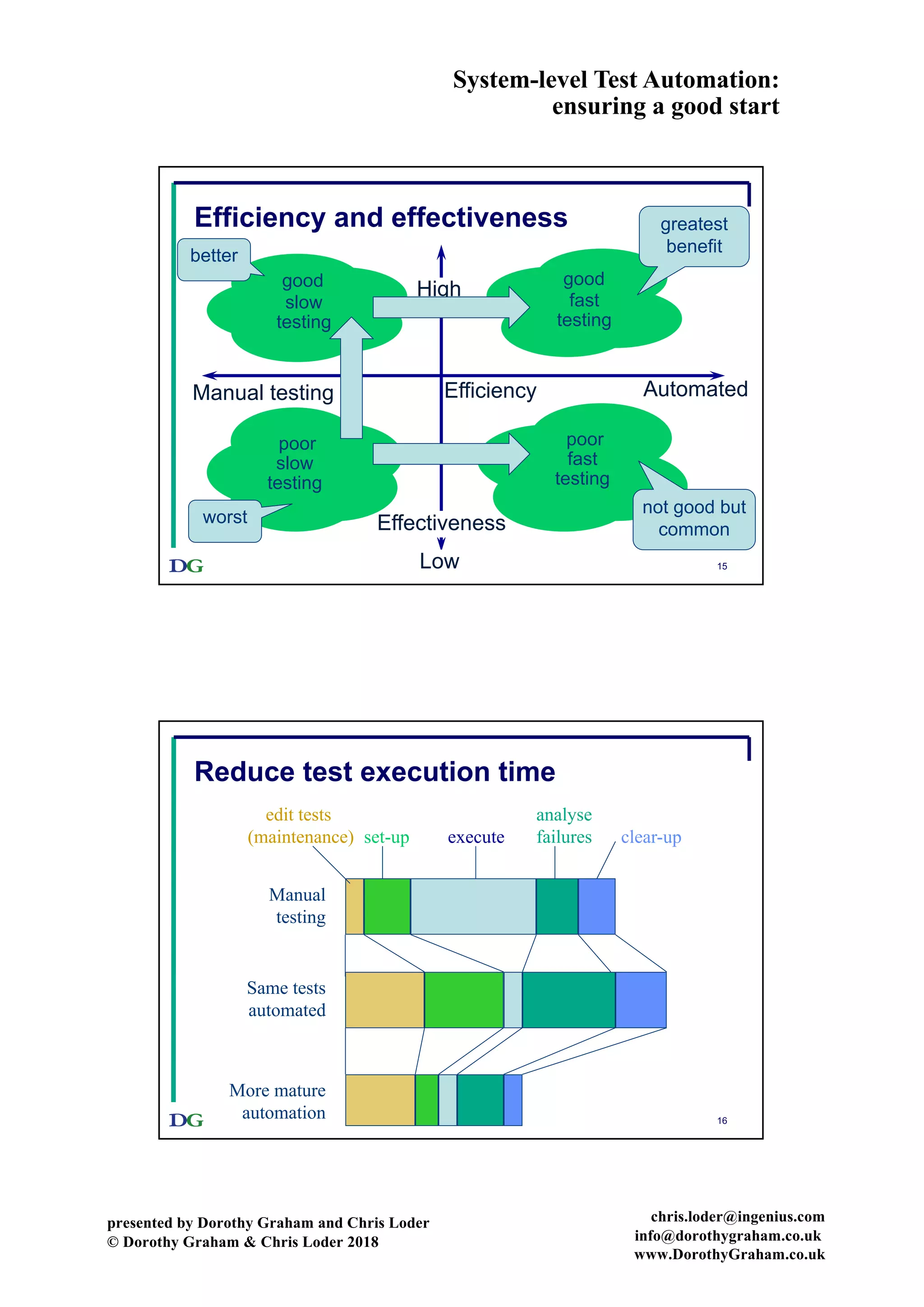

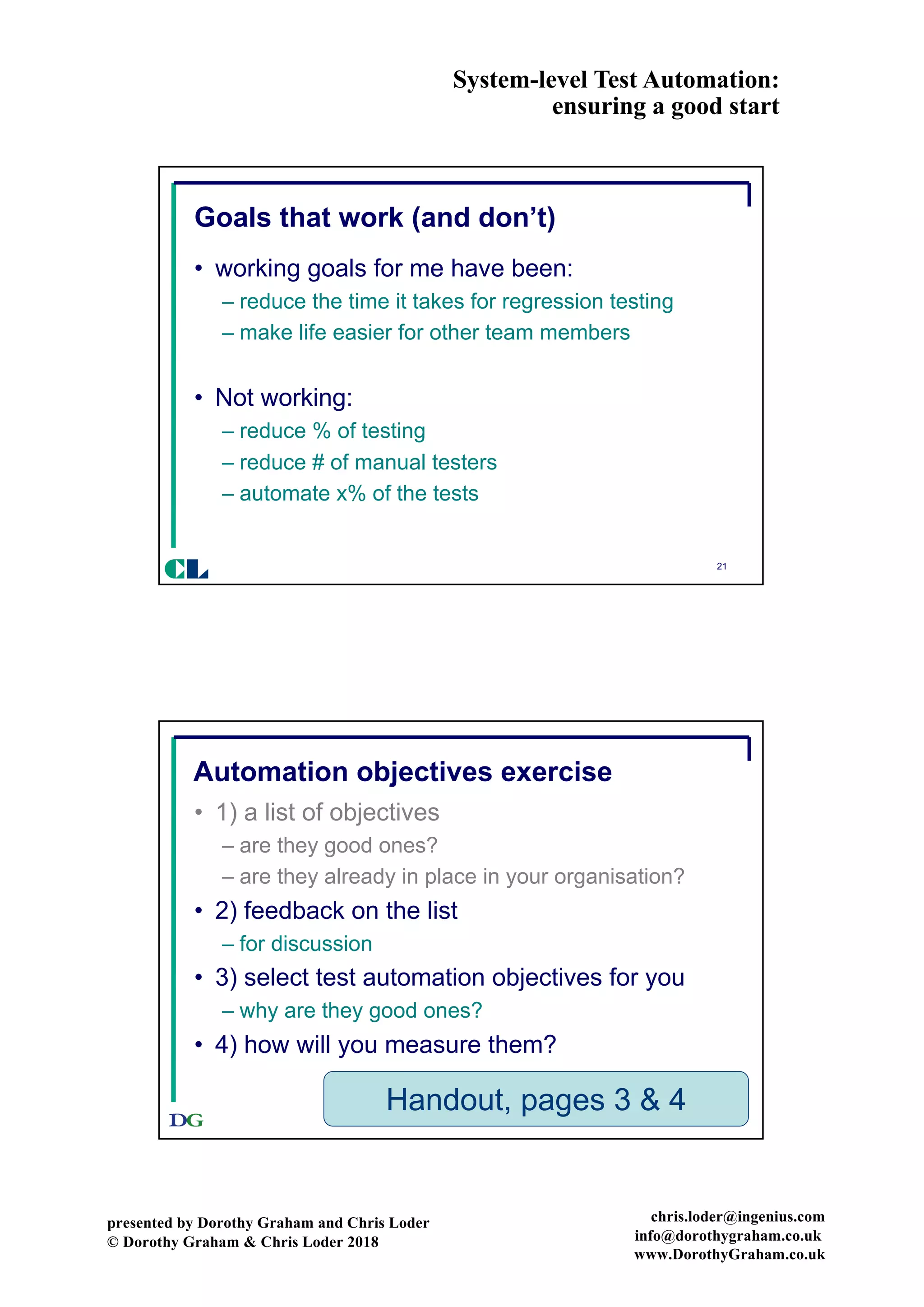

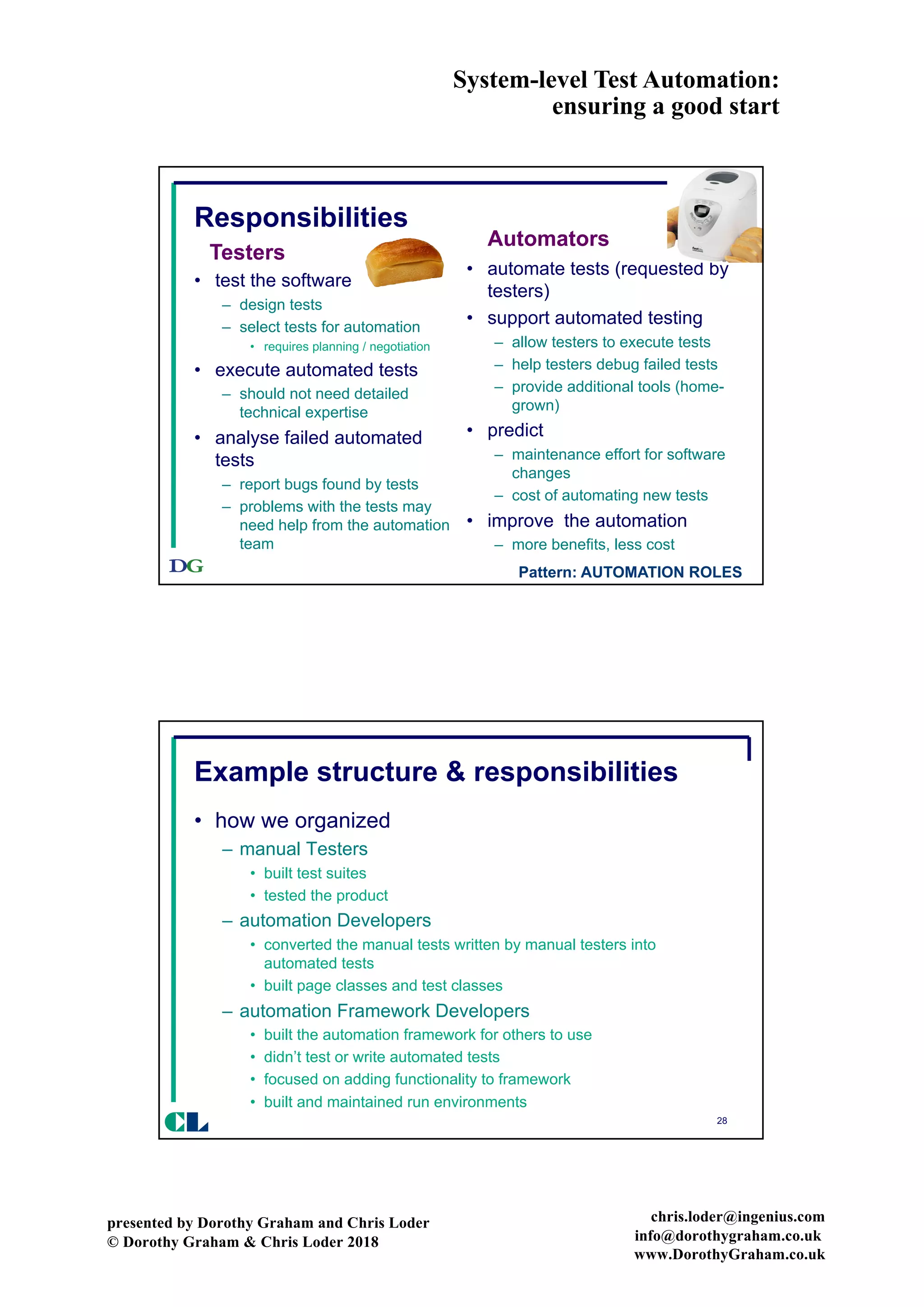

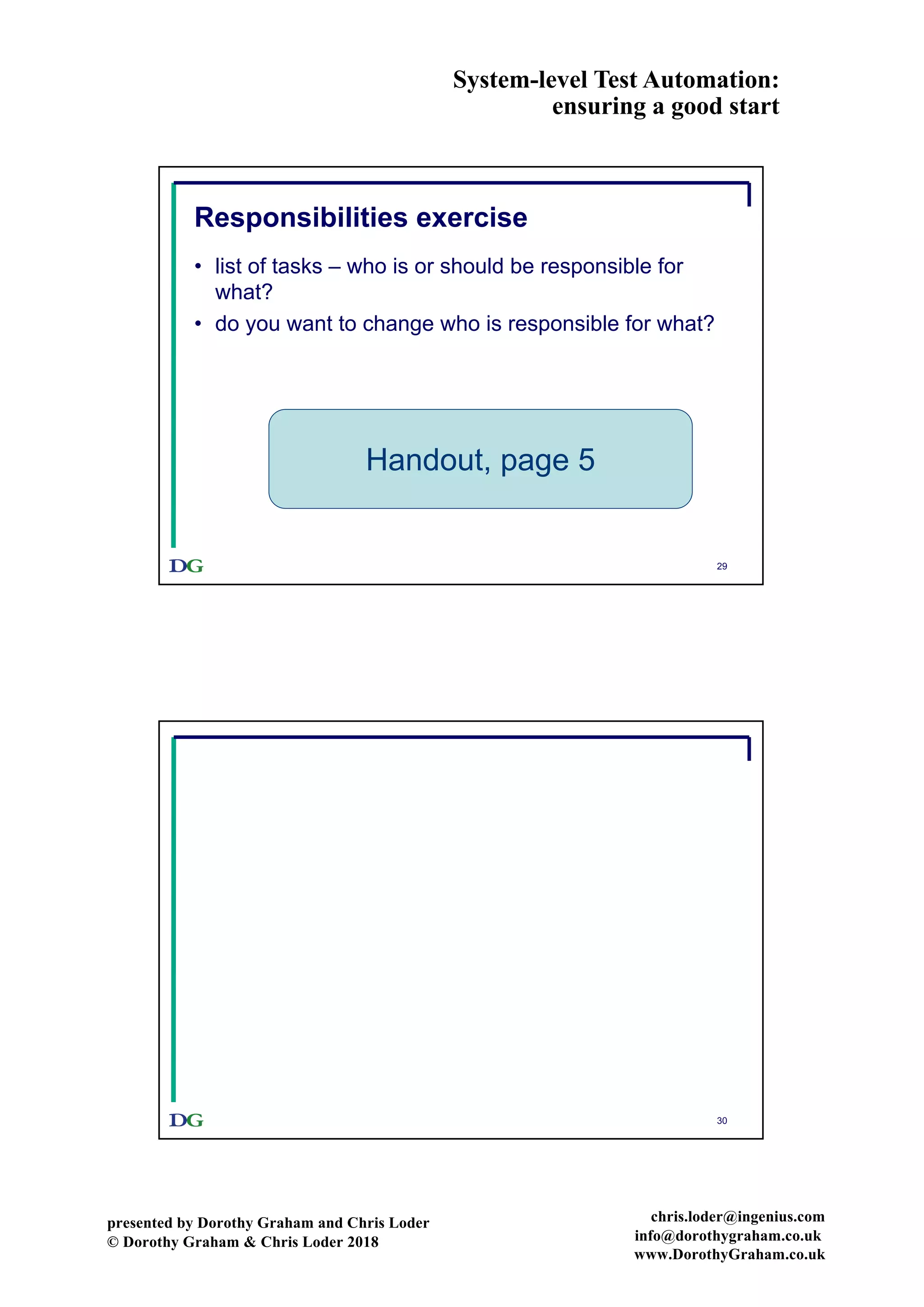

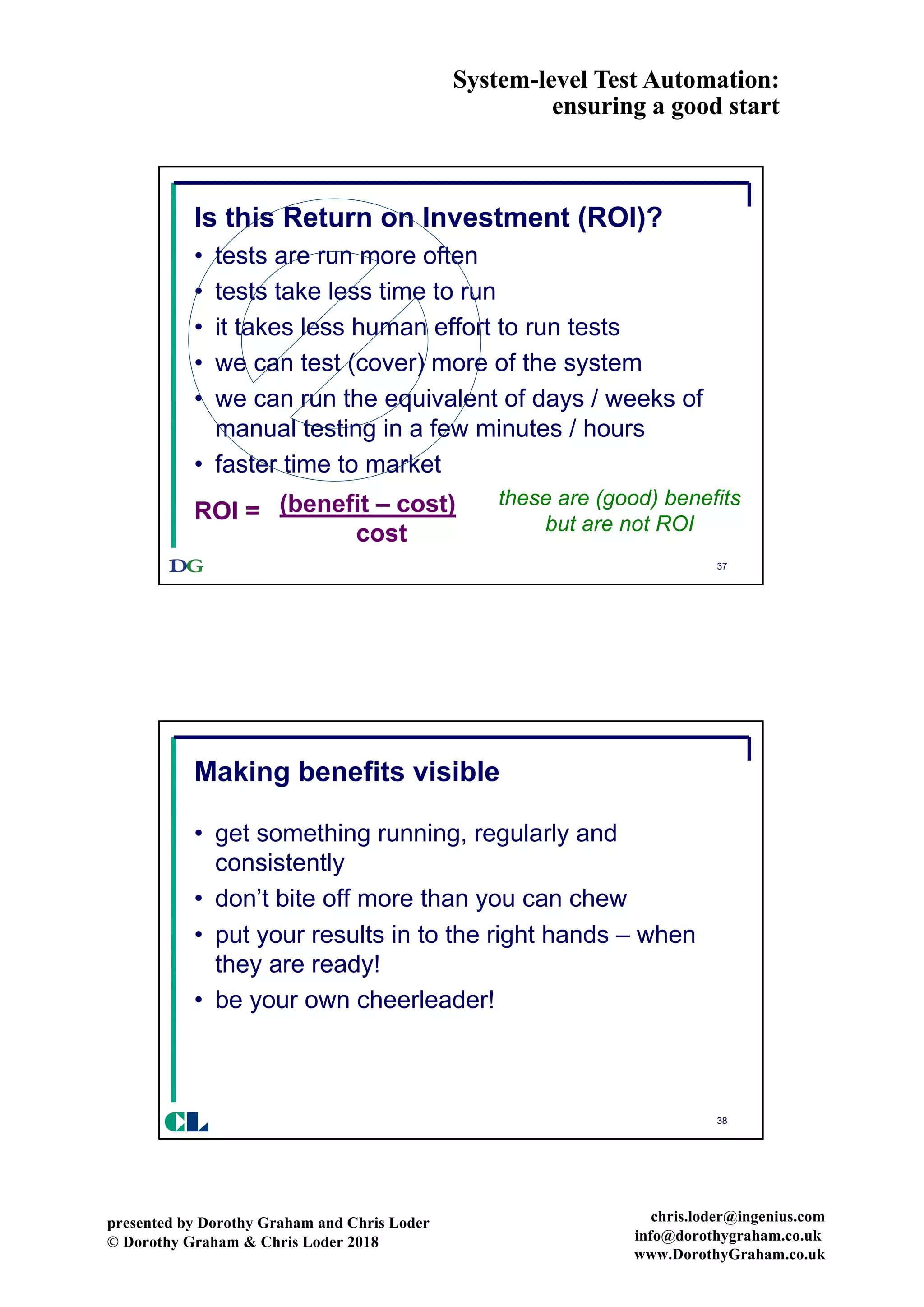

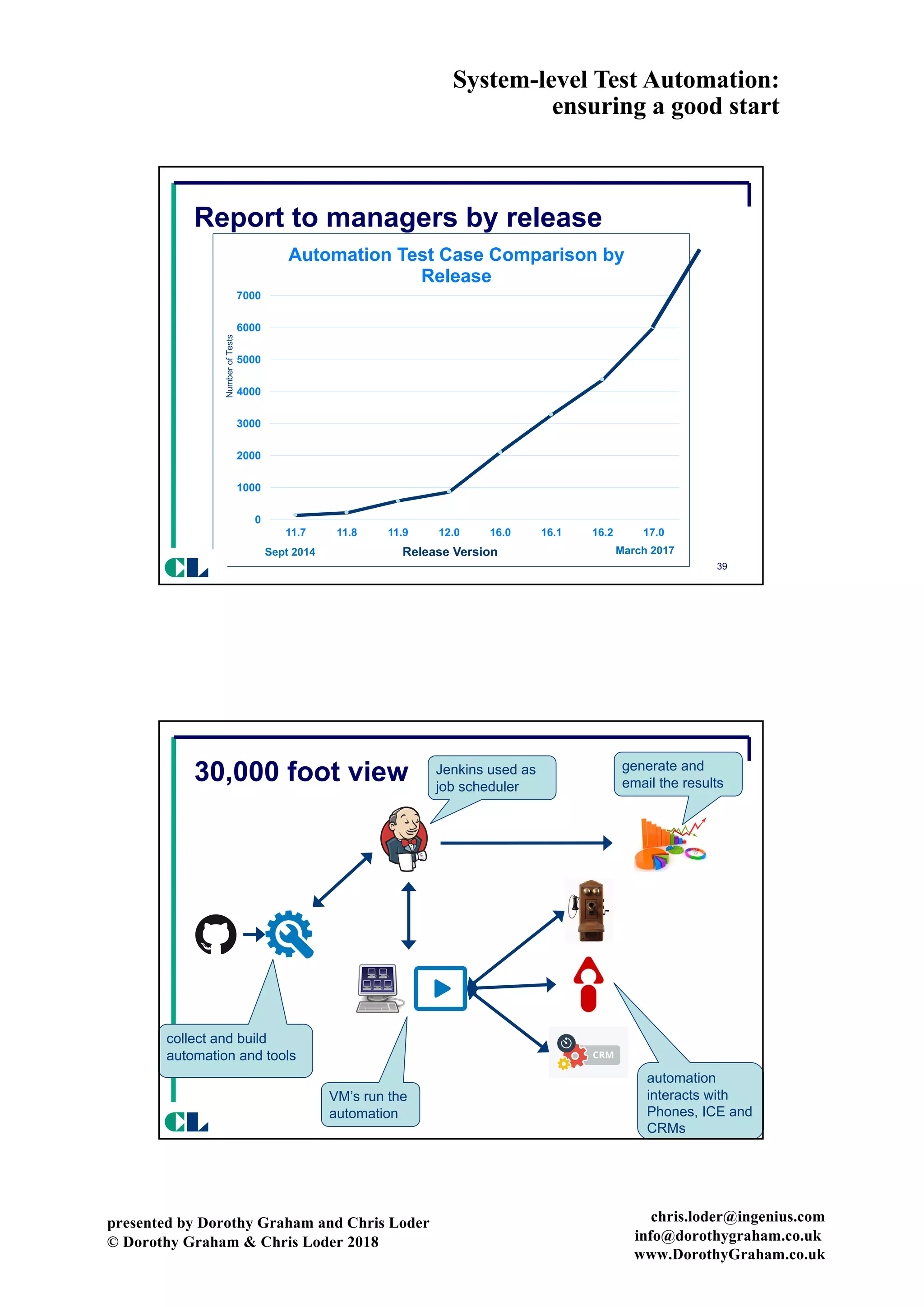

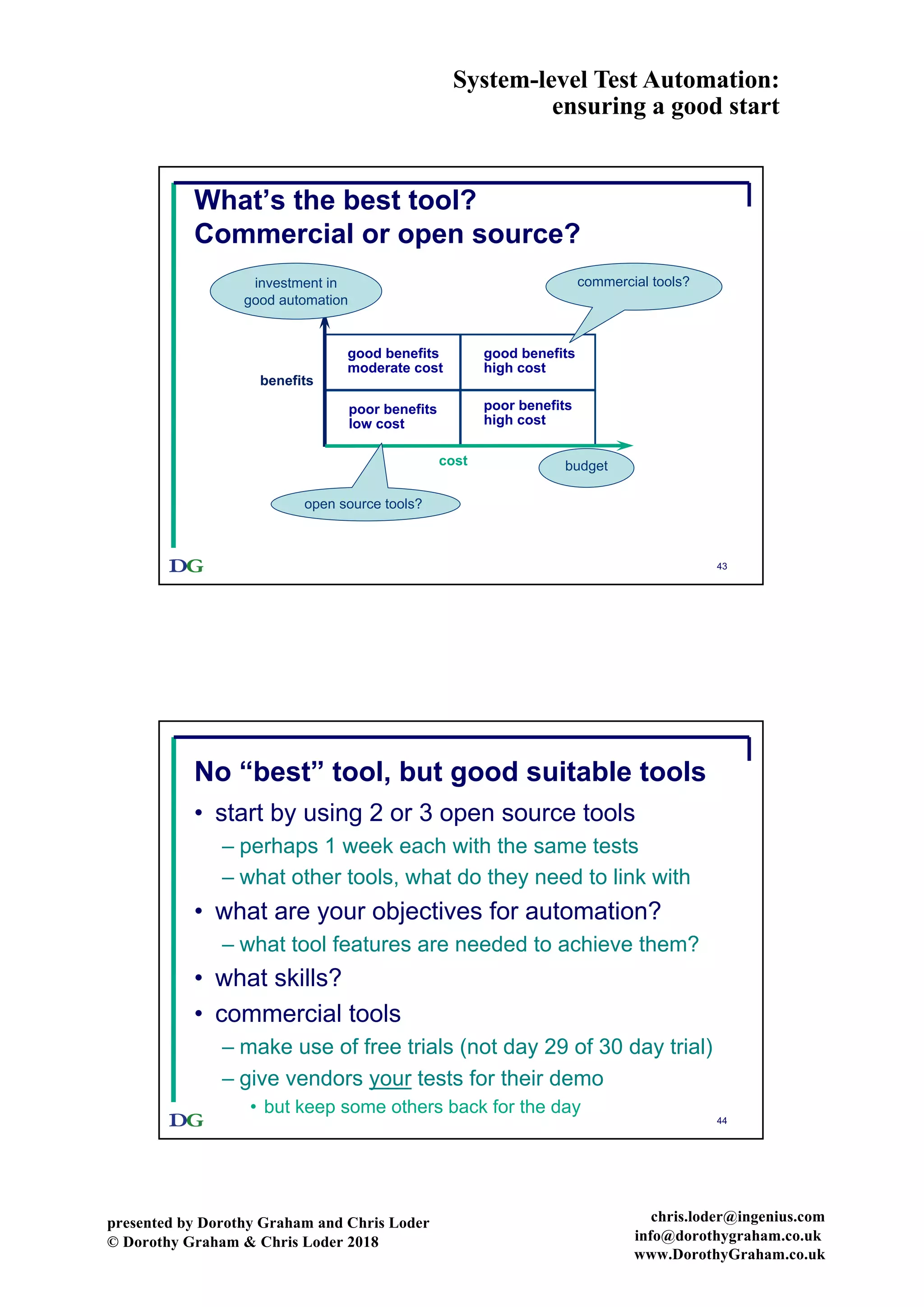

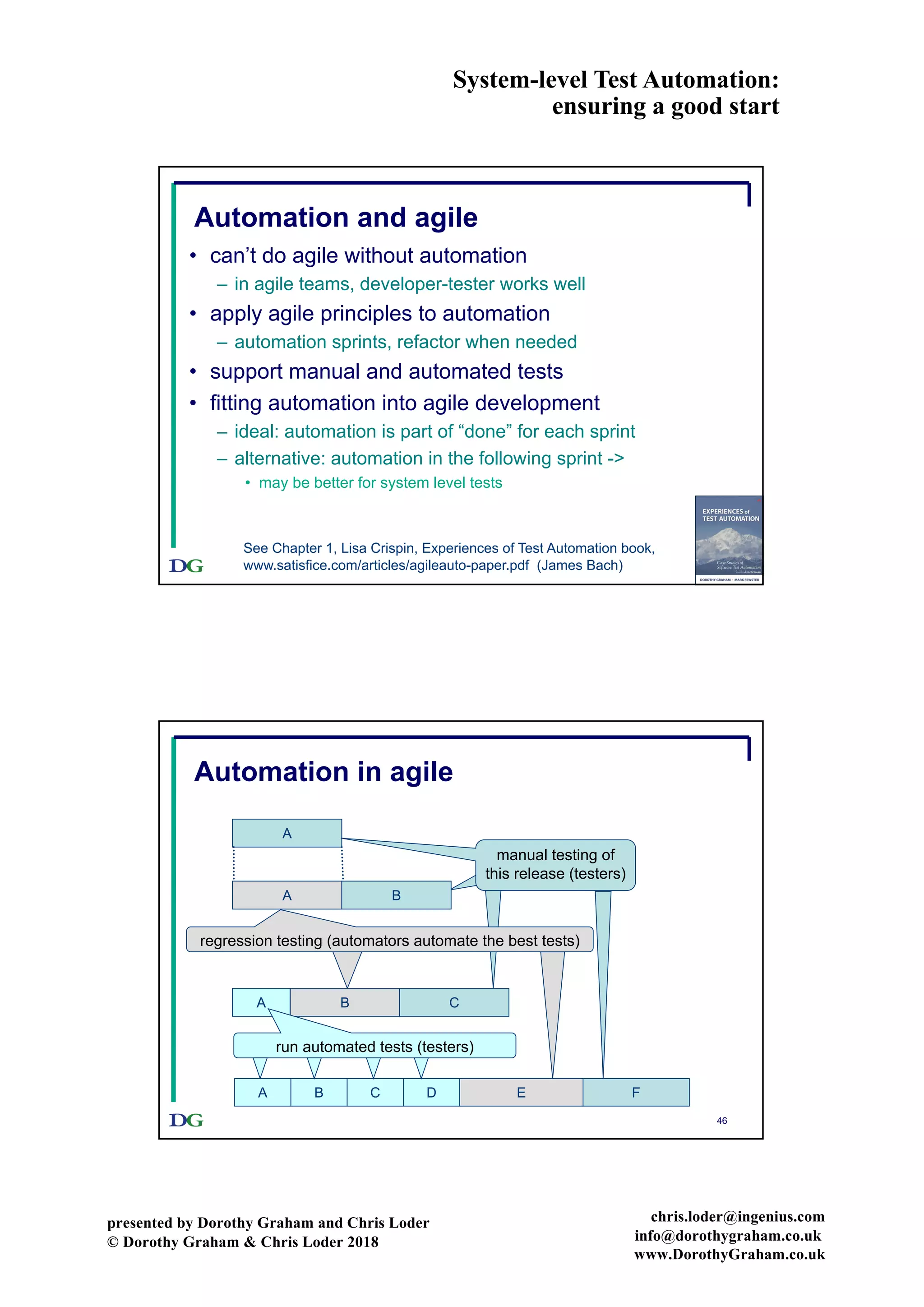

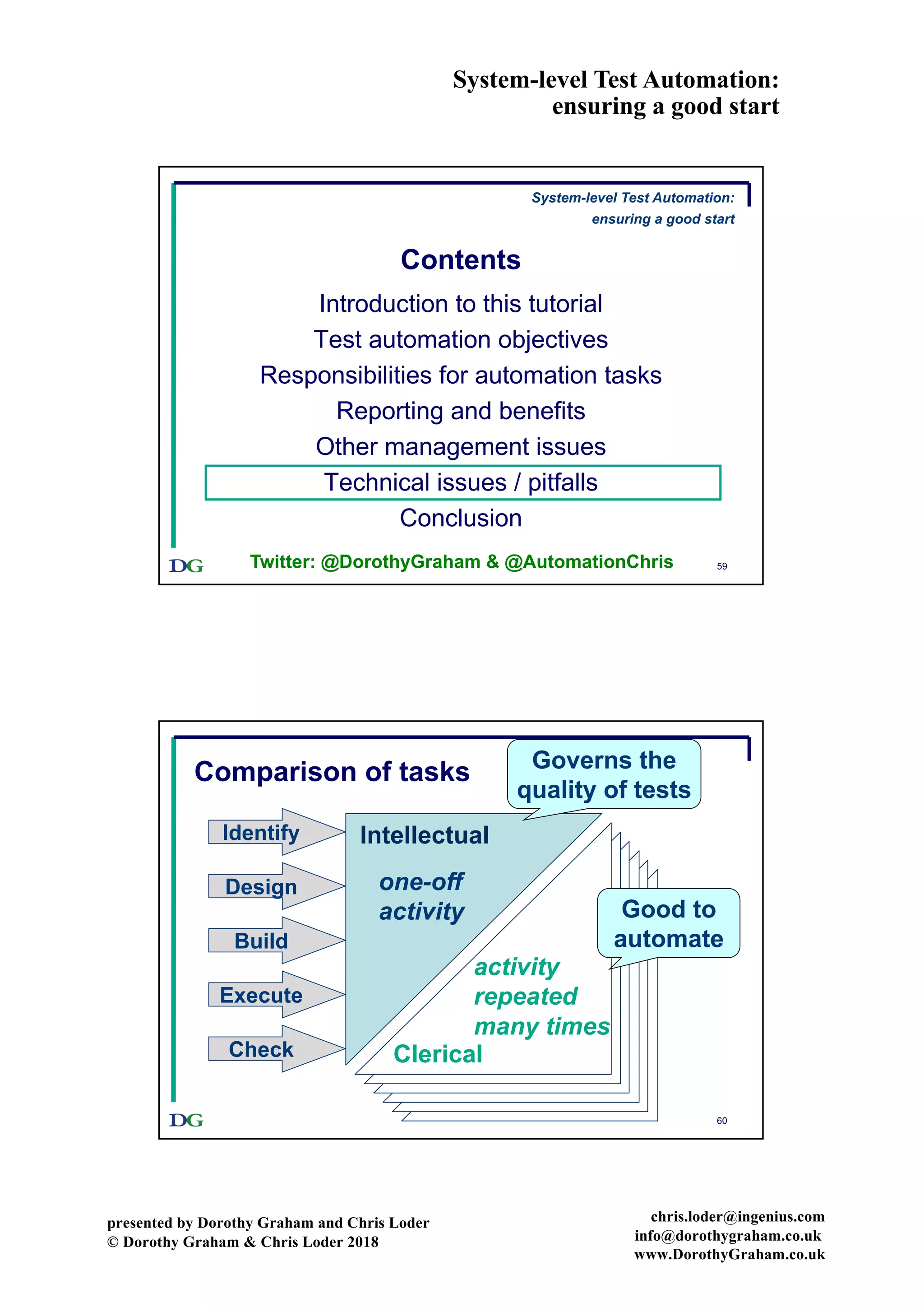

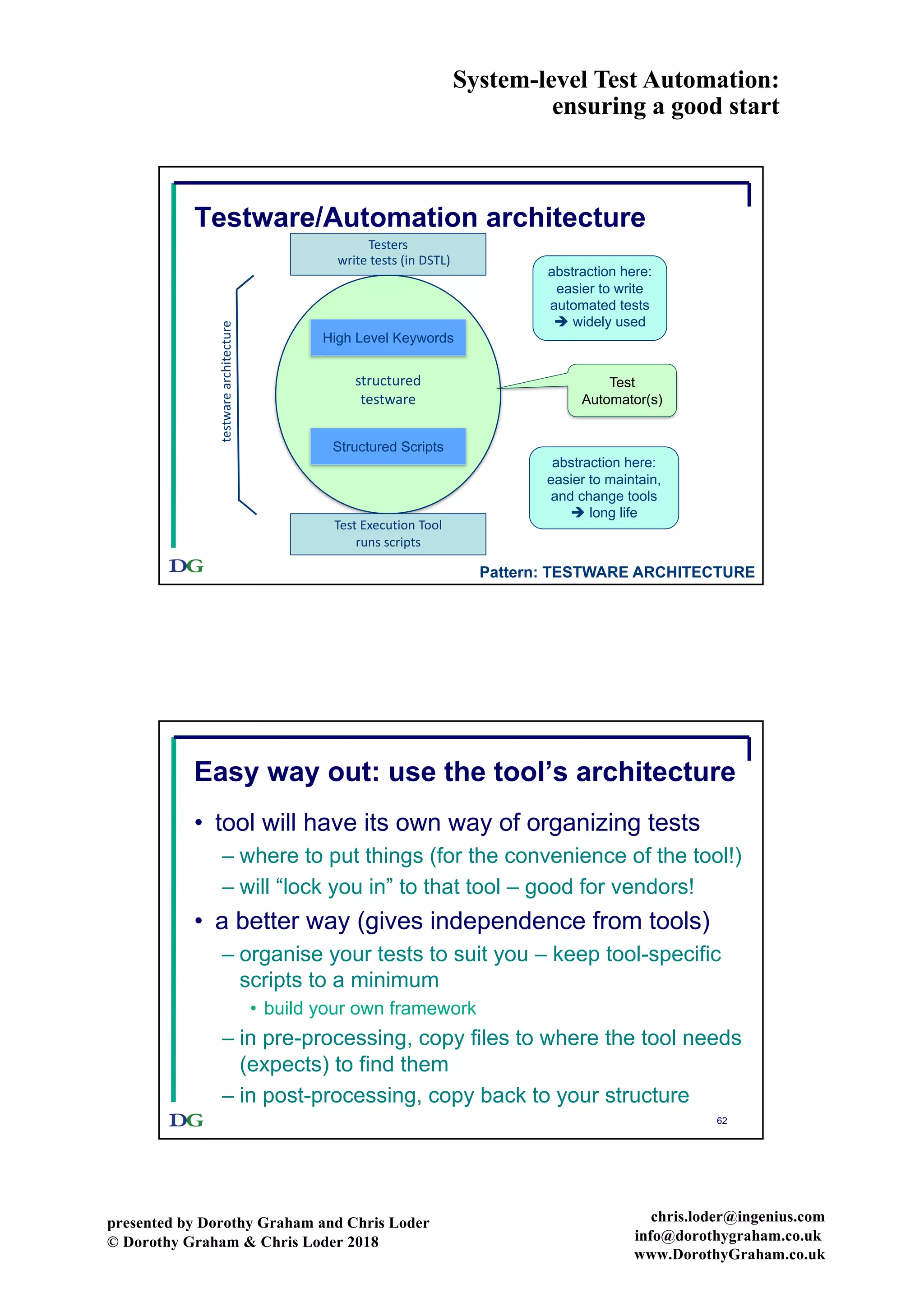

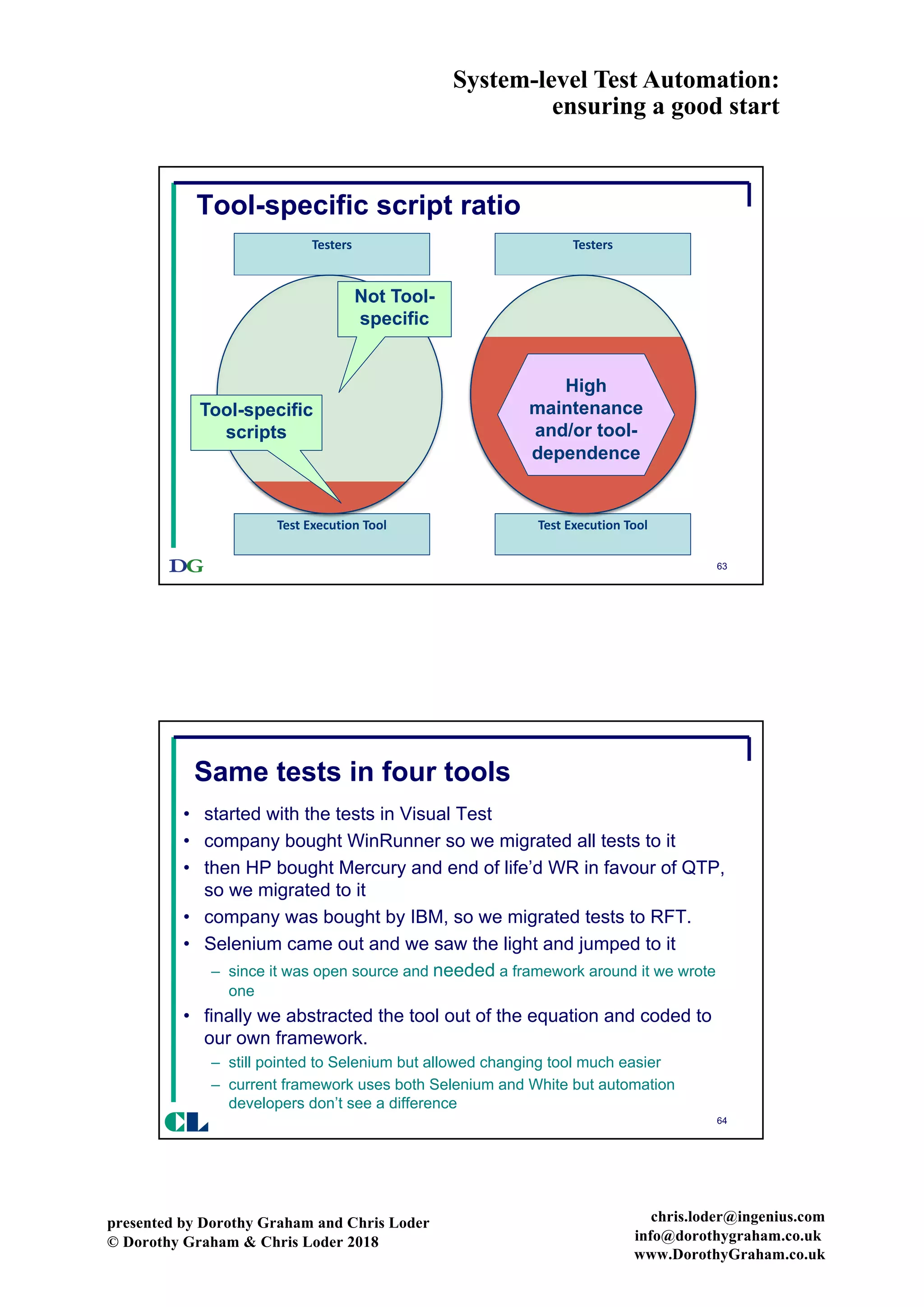

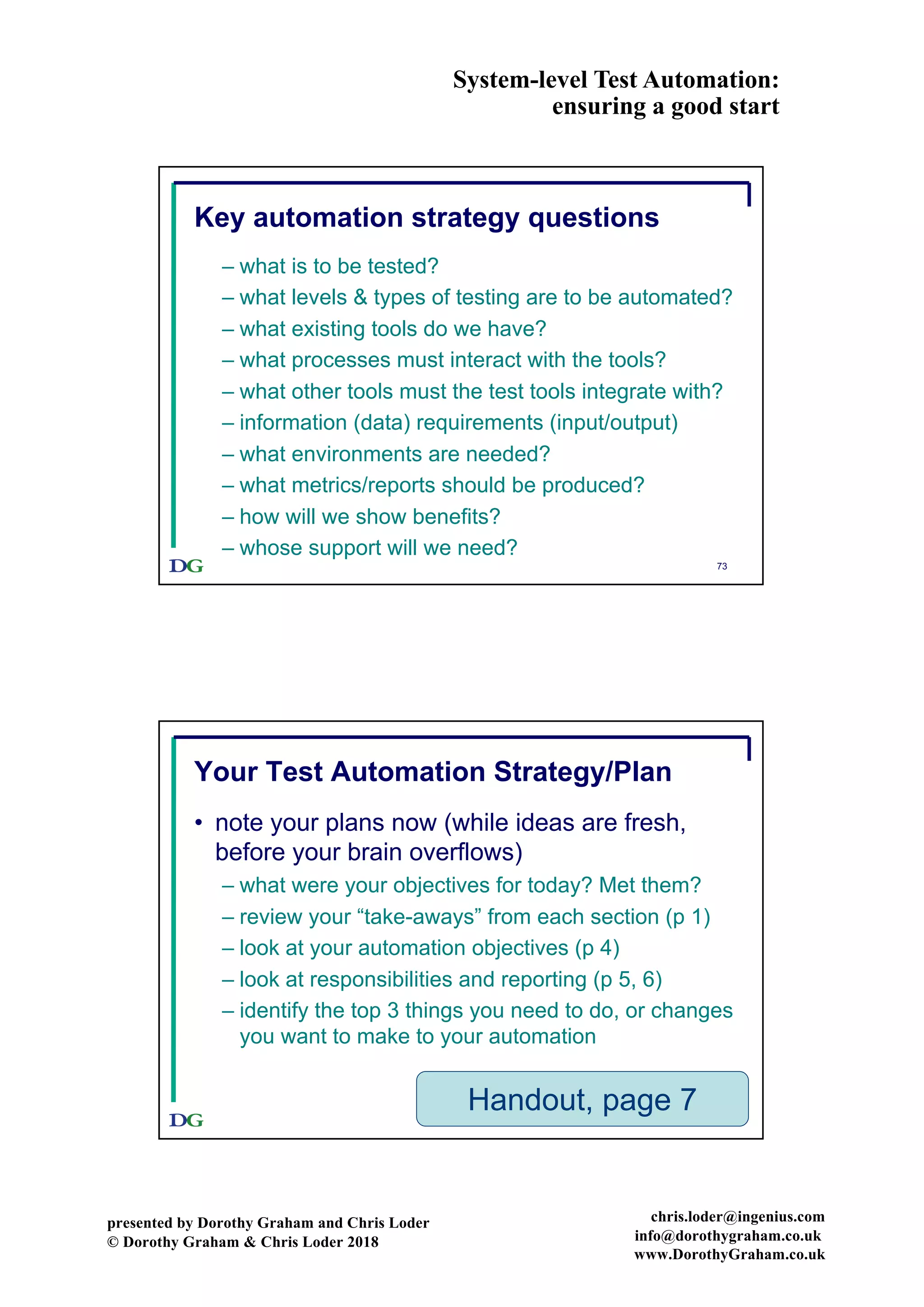

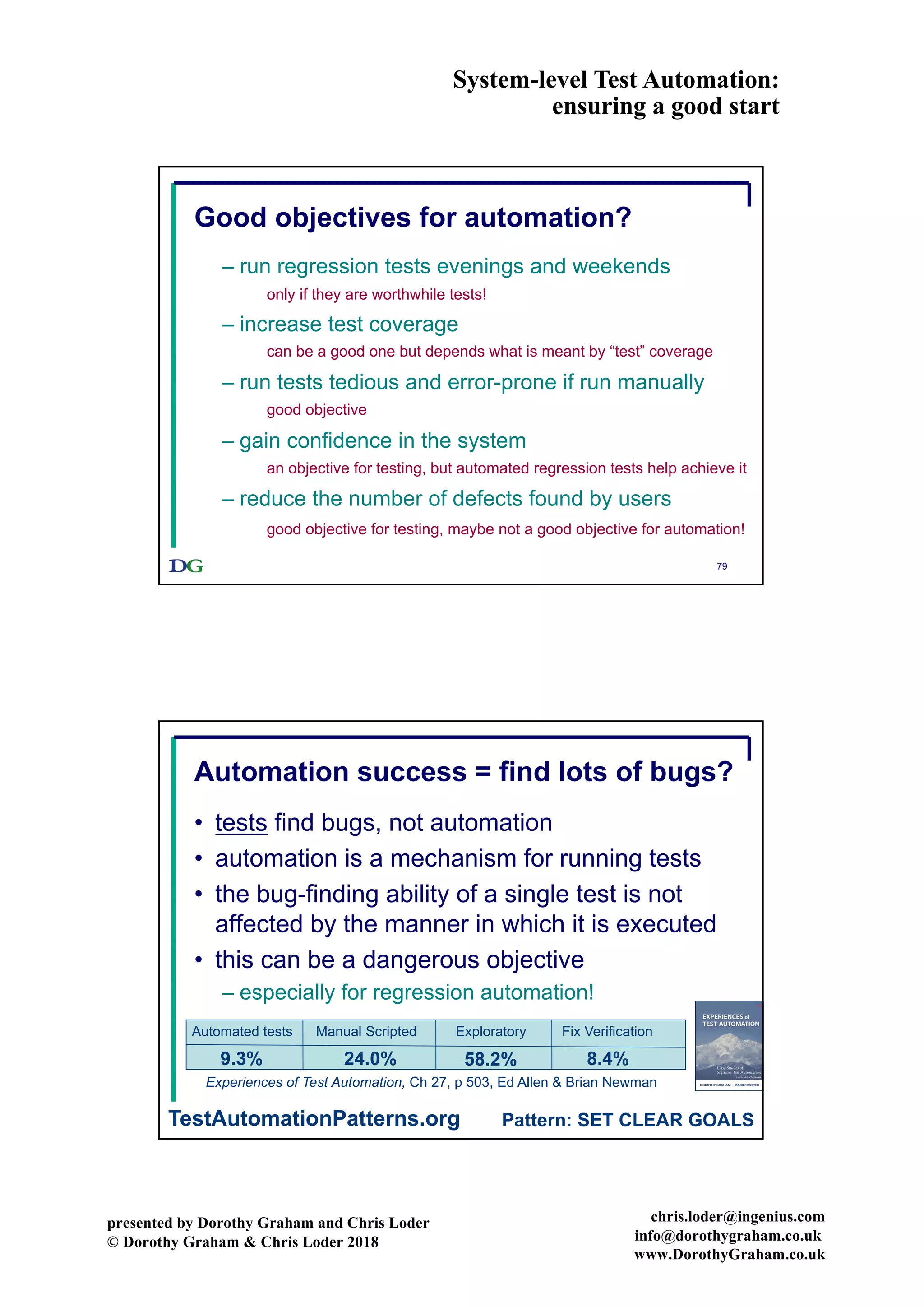

This tutorial on system-level test automation by Dorothy Graham and Chris Loder covers key strategies for starting and managing automation efforts in an organization. It emphasizes setting clear objectives, defining responsibilities, and understanding the management and technical issues that can affect automation success. Participants will learn how to develop a tailored automation strategy based on shared experiences and best practices.