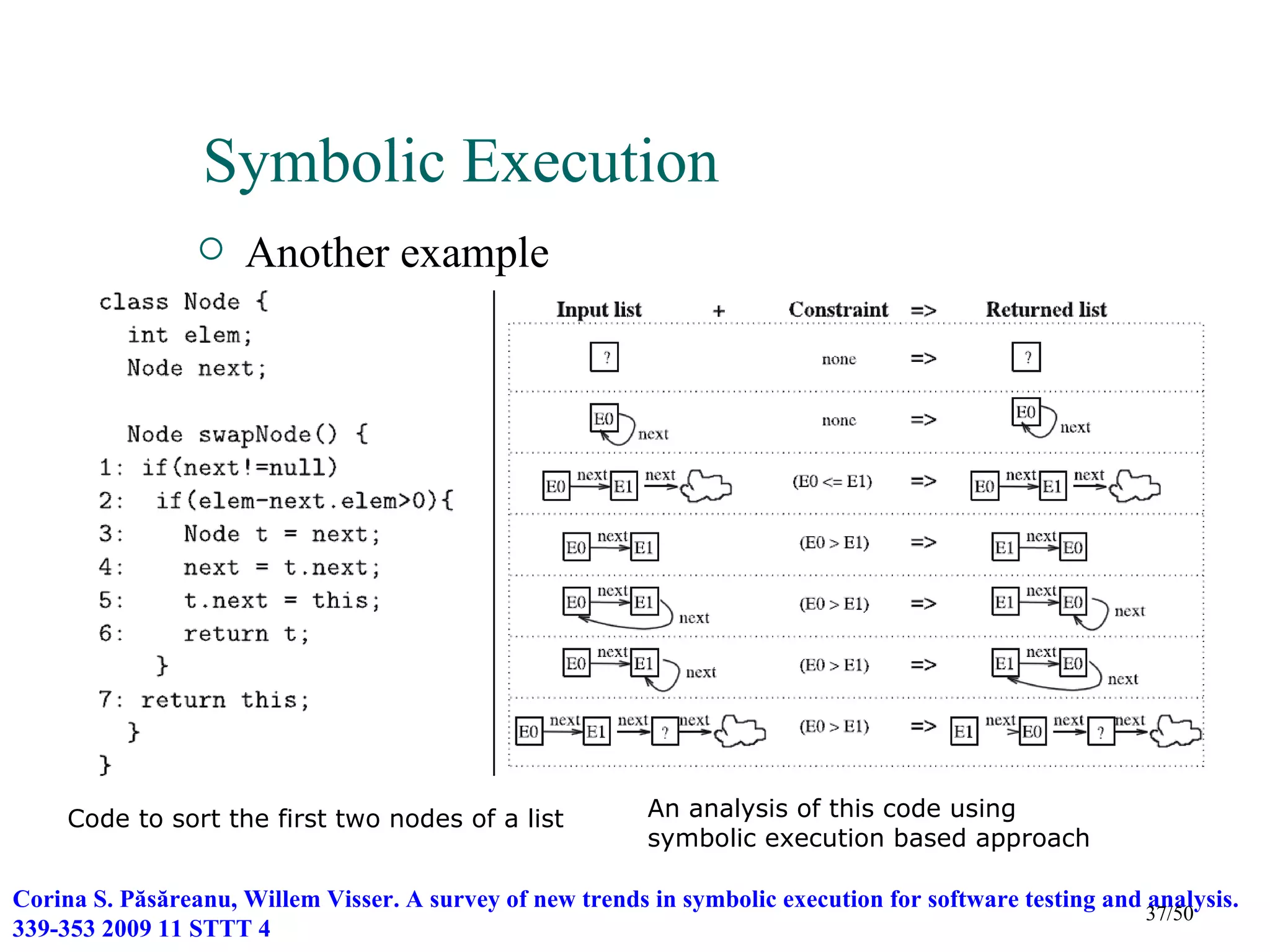

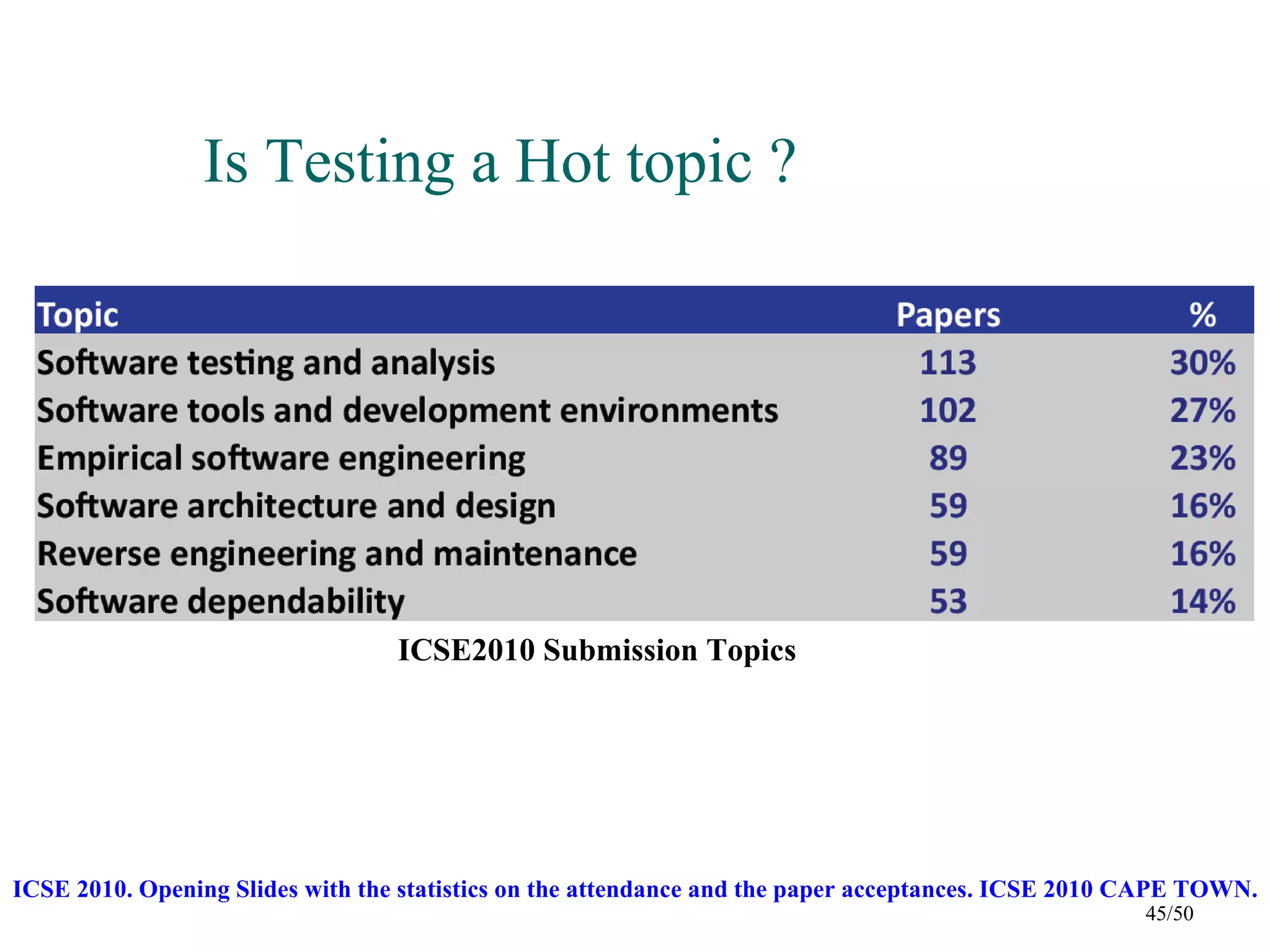

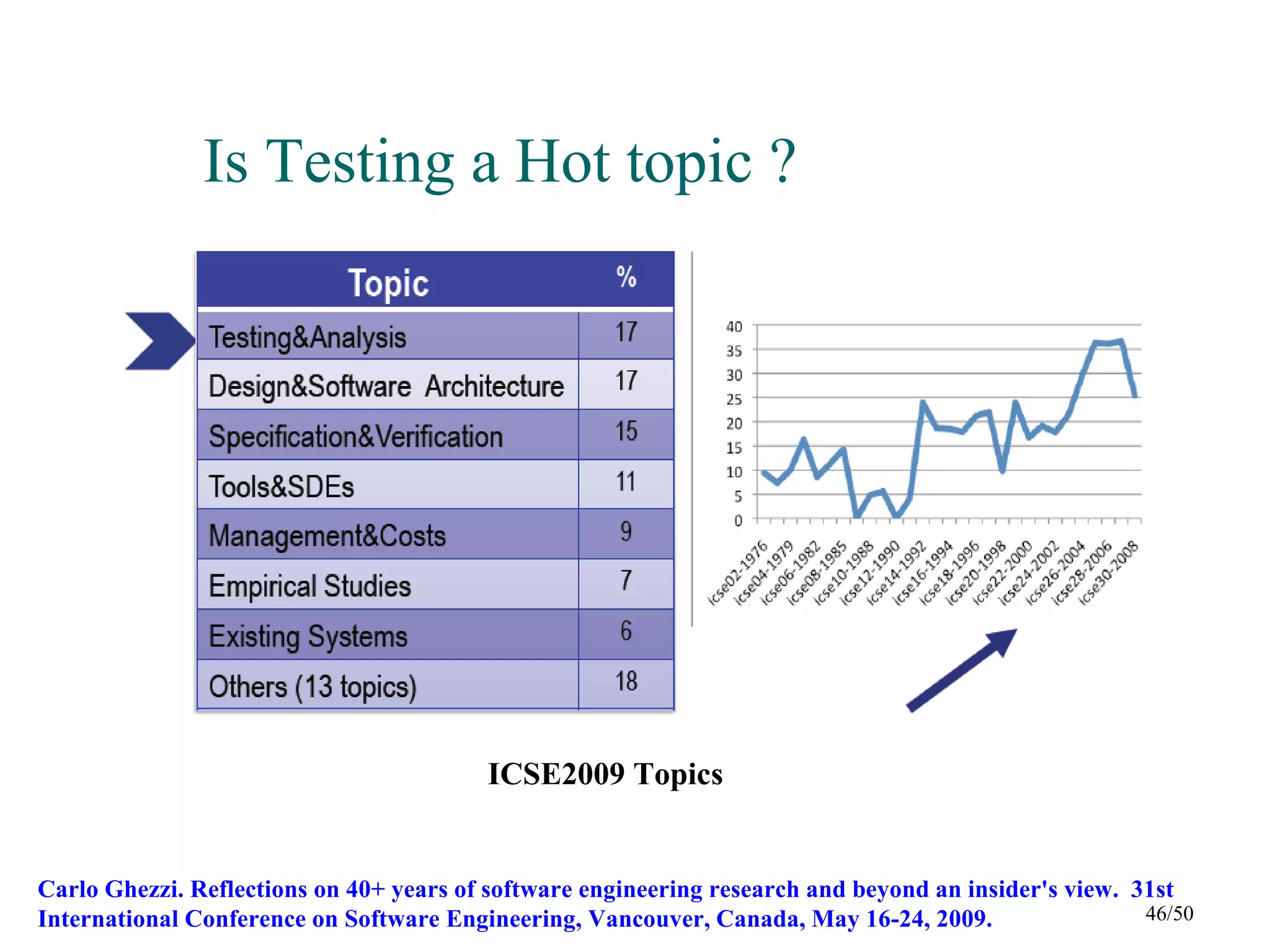

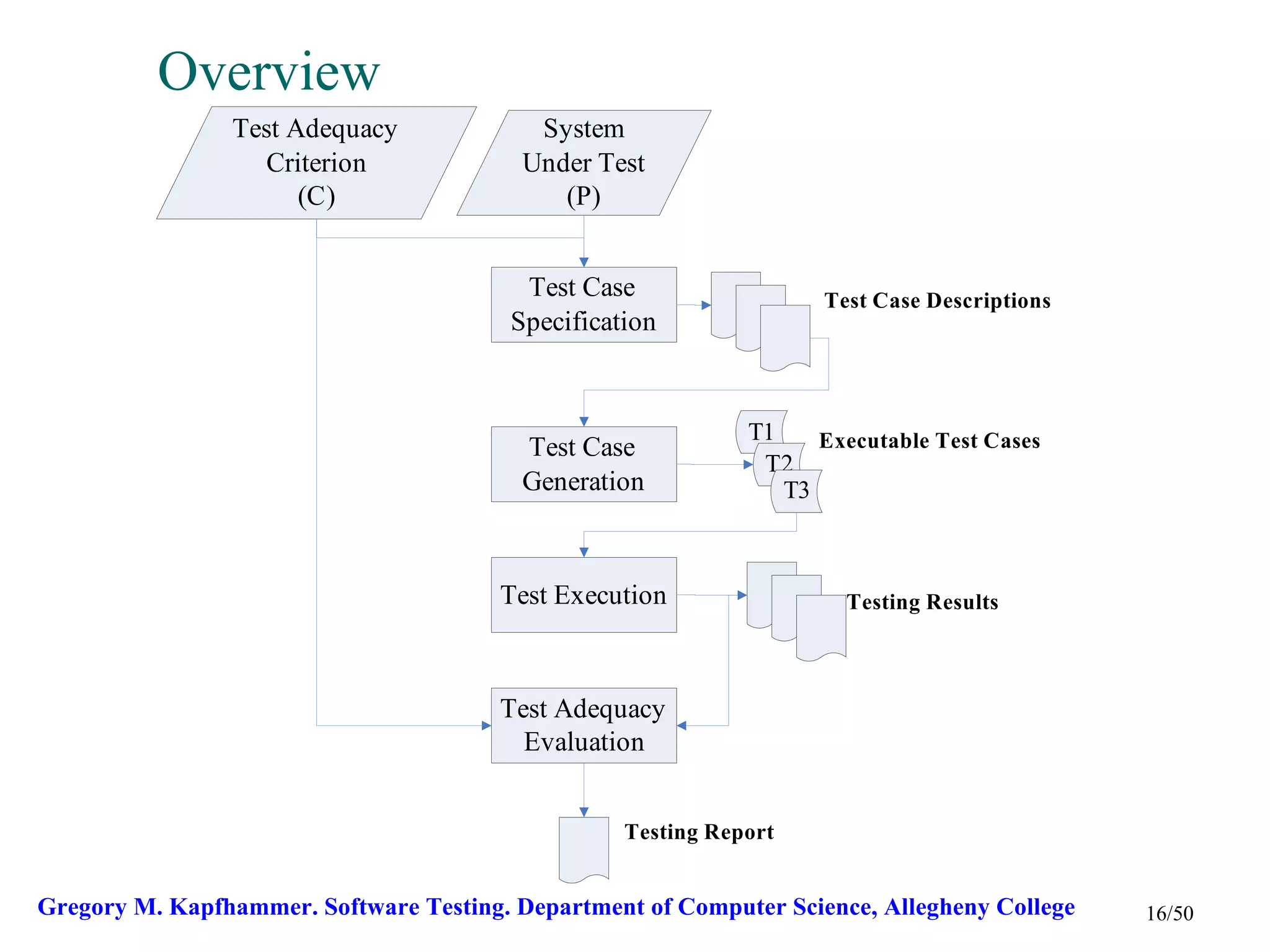

This document gives an overview of software testing. It covers the background of the field (definitions, terminology, and history) and presents a framework that describes testing techniques in terms of their inputs, outputs, and processes. It then discusses test case generation, test execution, and evaluation, along with some of the challenges of automation. Finally, it touches on a taxonomy of testing techniques and on benchmarks for evaluating automated ones.

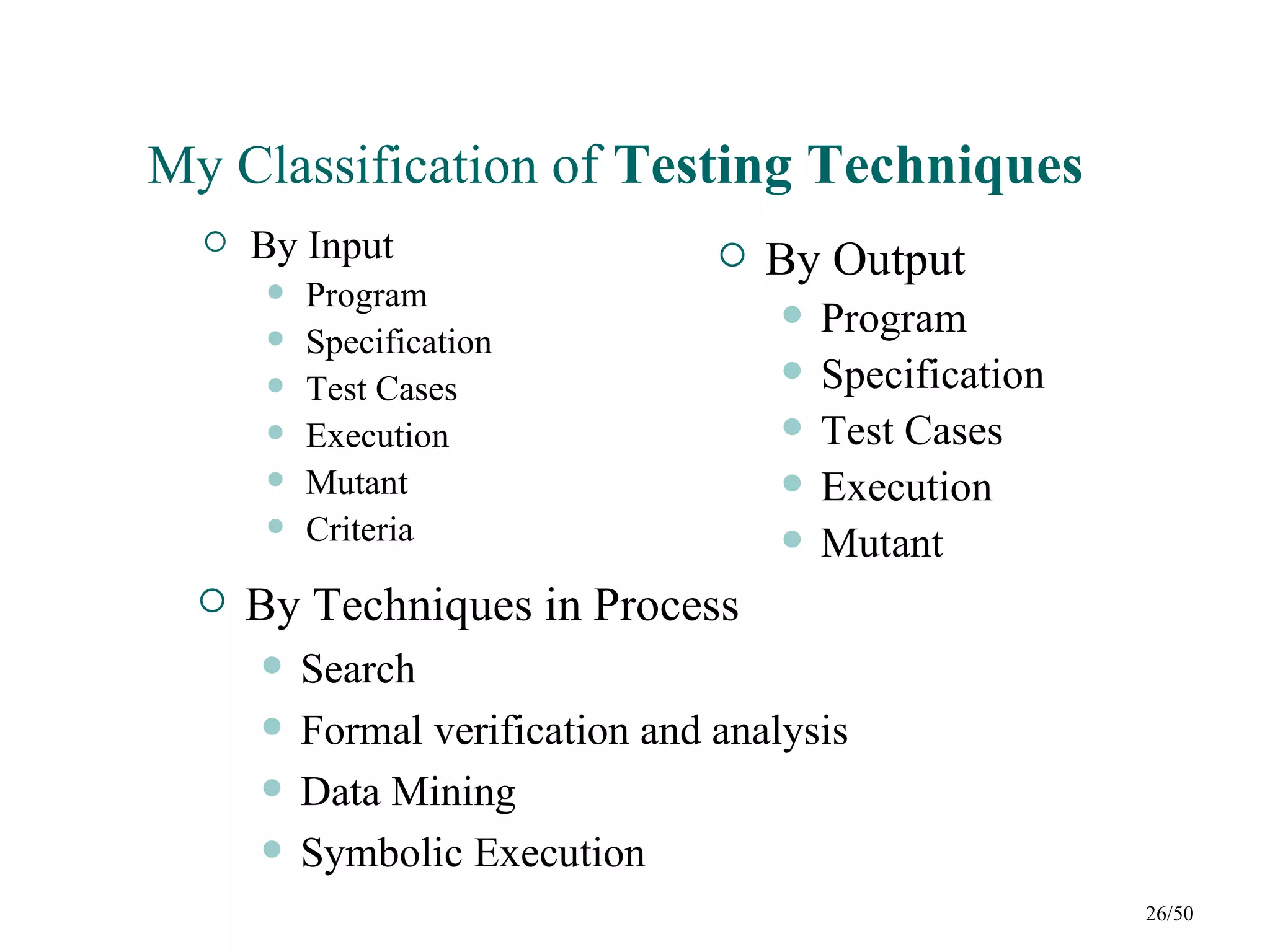

![My Classification of Testing Techniques (cont’)

e.g.

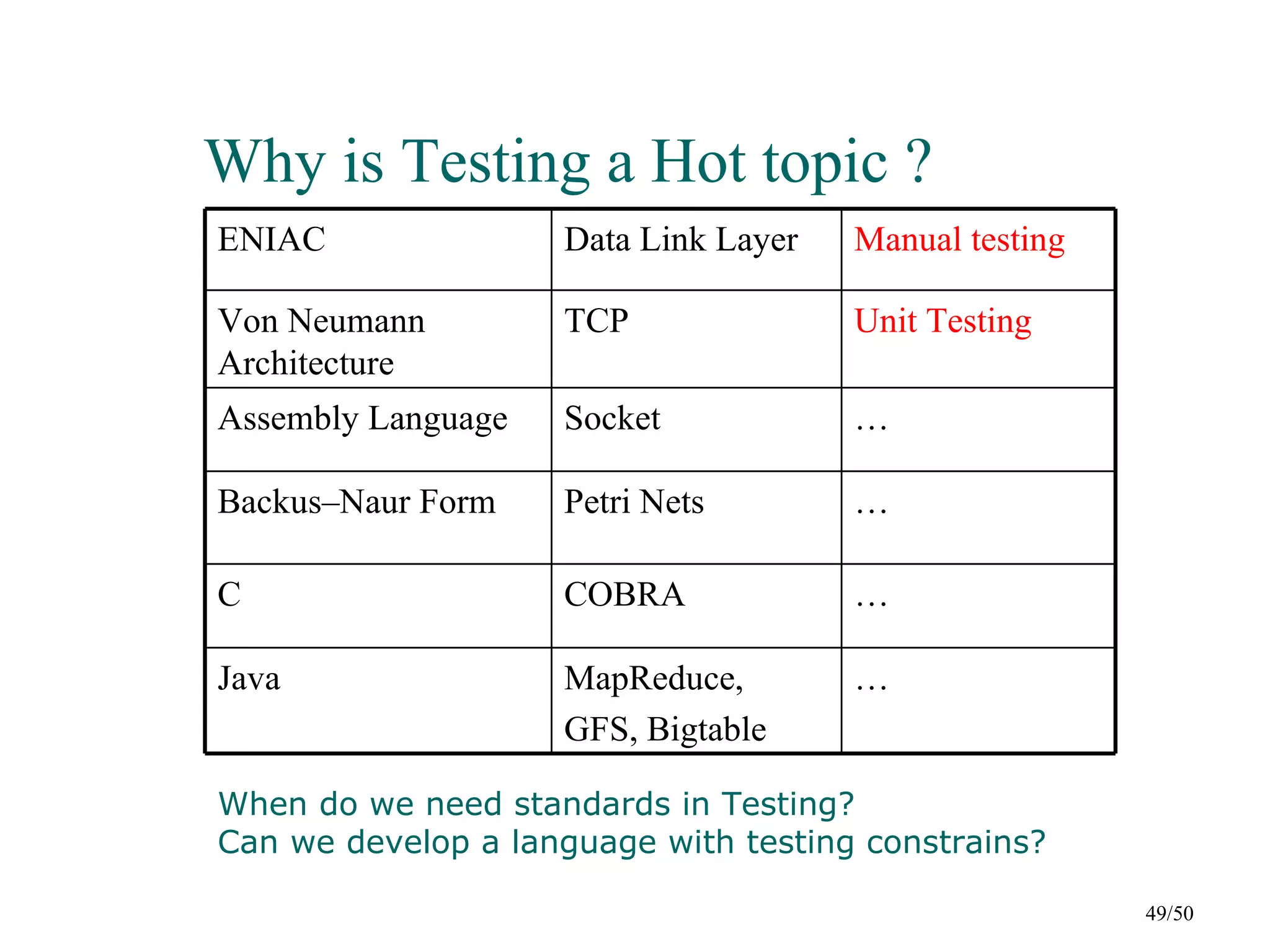

Paper Input Output Process

[GGJ+10] Specification Test Cases Search

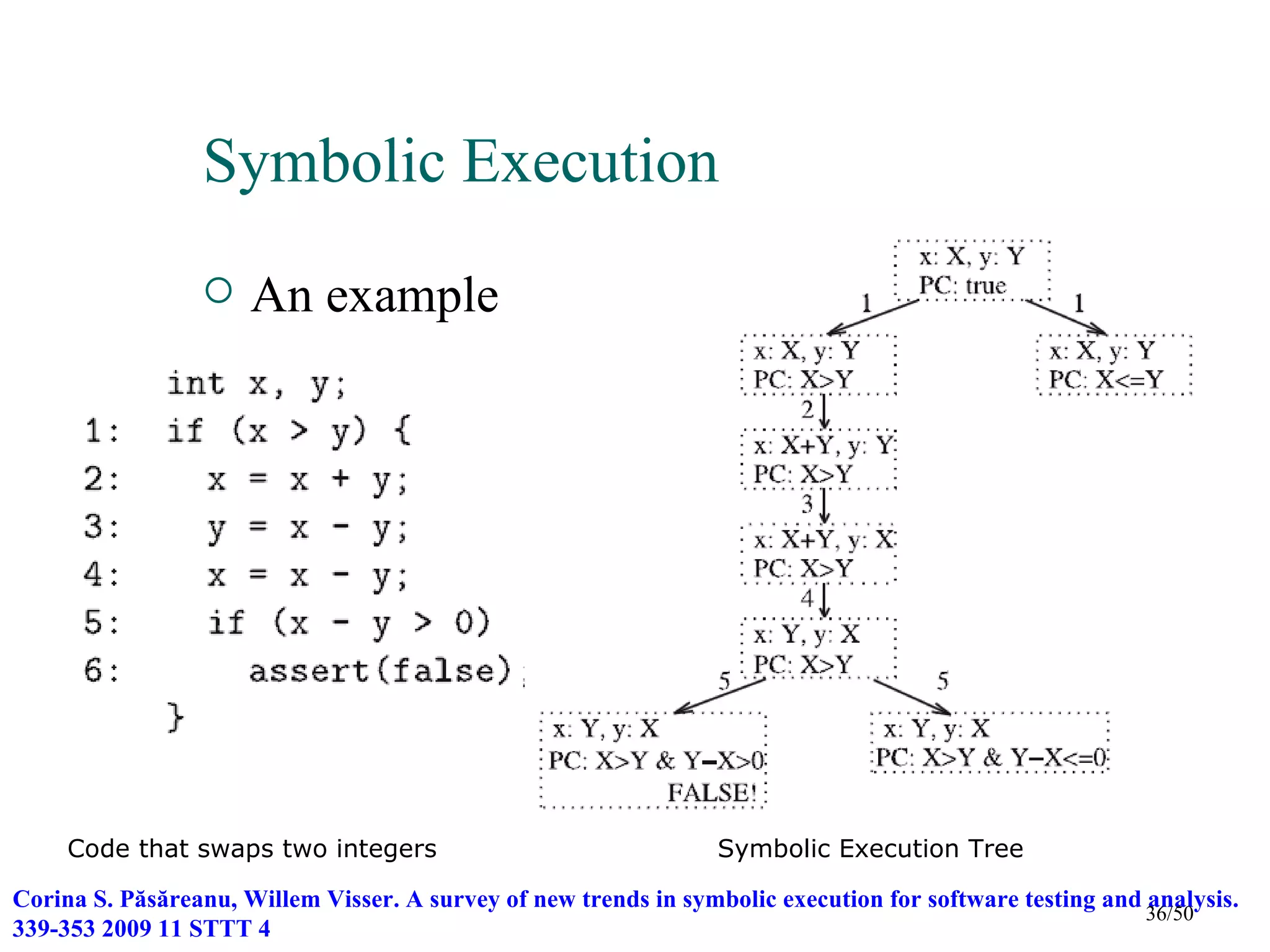

[DGM10] Test Cases Test Cases Symbolic Execution

[HO09] Test Cases Test Cases ILP solvers

[GGJ+10] Milos Gligoric, Tihomir Gvero, Vilas Jagannath, Sarfraz Khurshid, Viktor Kuncak, Darko Marinov. Test

generation through programming in UDITA. Proceedings of the 32nd ACM/IEEE International Conference on

Software Engineering - Volume 1, ICSE 2010, Cape Town, South Africa, 1-8 May 2010.

[DGM10] Daniel, B., Gvero, T., and Marinov, D. 2010. On test repair using symbolic execution. In Proceedings of the

19th international Symposium on Software Testing and Analysis (Trento, Italy, July 12 - 16, 2010). ISSTA '10. ACM,

New York, NY, 207-218.

[HO09] Hwa-You Hsu; Orso, A.; , "MINTS: A general framework and tool for supporting test-suite minimization,"

Software Engineering, 2009. ICSE 2009. IEEE 31st International Conference on , vol., no., pp.419-429, 16-24 May

2009 27/50](https://image.slidesharecdn.com/asurveyofsoftwaretesting-120318205607-phpapp02/75/A-survey-of-software-testing-27-2048.jpg)
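The Input/Output/Process classification above can be read as a small record type. The following is only an illustrative sketch of that reading, not something taken from the slides: the `Technique` class and the `group_by_io` helper are hypothetical names, and the three rows are copied from the classification as given.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Technique:
    """One row of the classification (hypothetical record type)."""
    paper: str    # citation key, e.g. "GGJ+10"
    input: str    # what the technique consumes
    output: str   # what the technique produces
    process: str  # how it gets from input to output


# The three example rows from the classification.
TECHNIQUES = [
    Technique("GGJ+10", "Specification", "Test Cases", "Search"),
    Technique("DGM10", "Test Cases", "Test Cases", "Symbolic Execution"),
    Technique("HO09", "Test Cases", "Test Cases", "ILP solvers"),
]


def group_by_io(techniques):
    """Group citation keys by their (input, output) pair."""
    groups = defaultdict(list)
    for t in techniques:
        groups[(t.input, t.output)].append(t.paper)
    return dict(groups)


if __name__ == "__main__":
    for (src, dst), papers in group_by_io(TECHNIQUES).items():
        print(f"{src} -> {dst}: {', '.join(papers)}")
```

Grouping by the (input, output) pair makes it visible that [DGM10] and [HO09] both transform existing test cases and differ only in the process they use, while [GGJ+10] starts from a specification.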