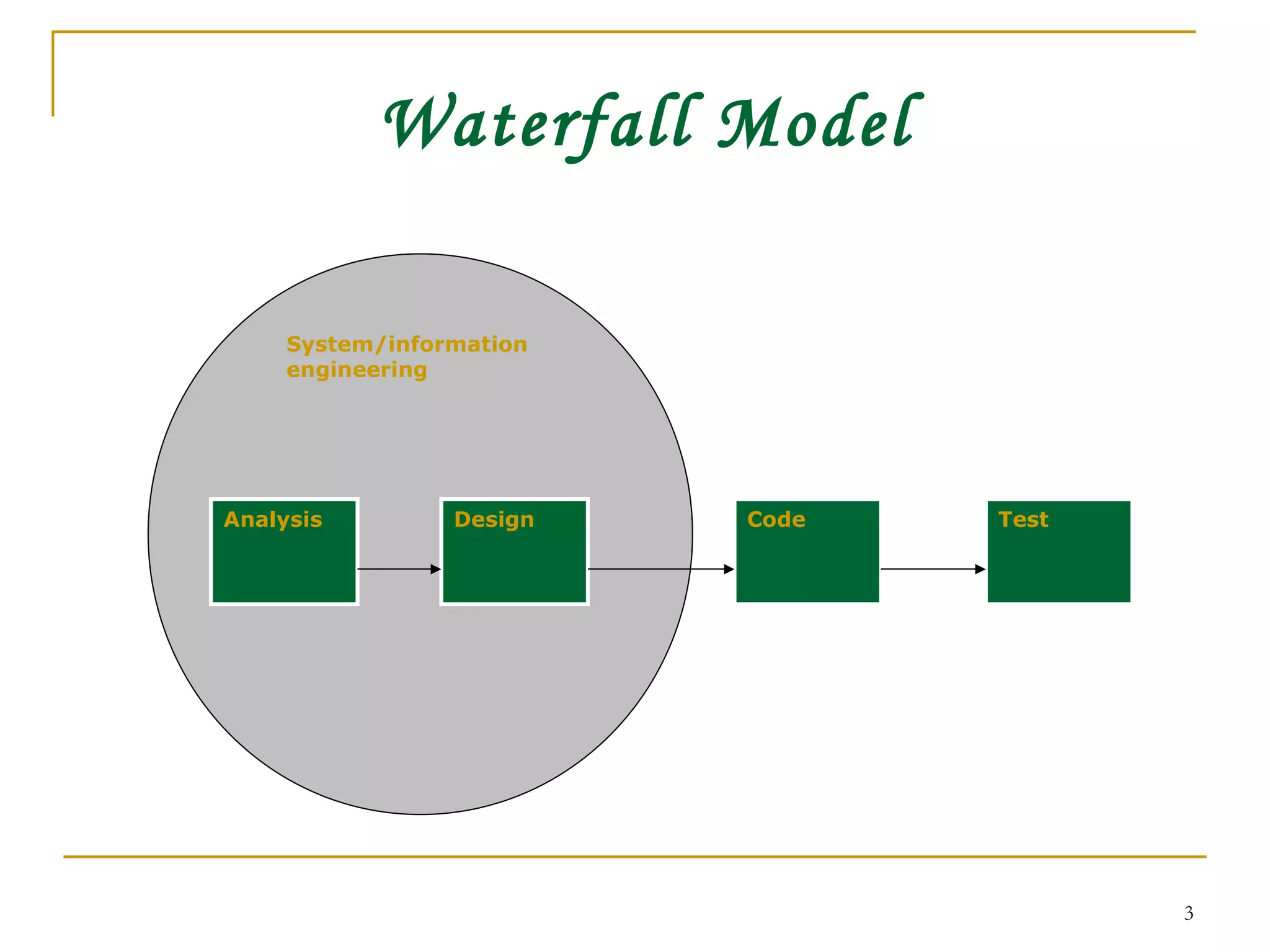

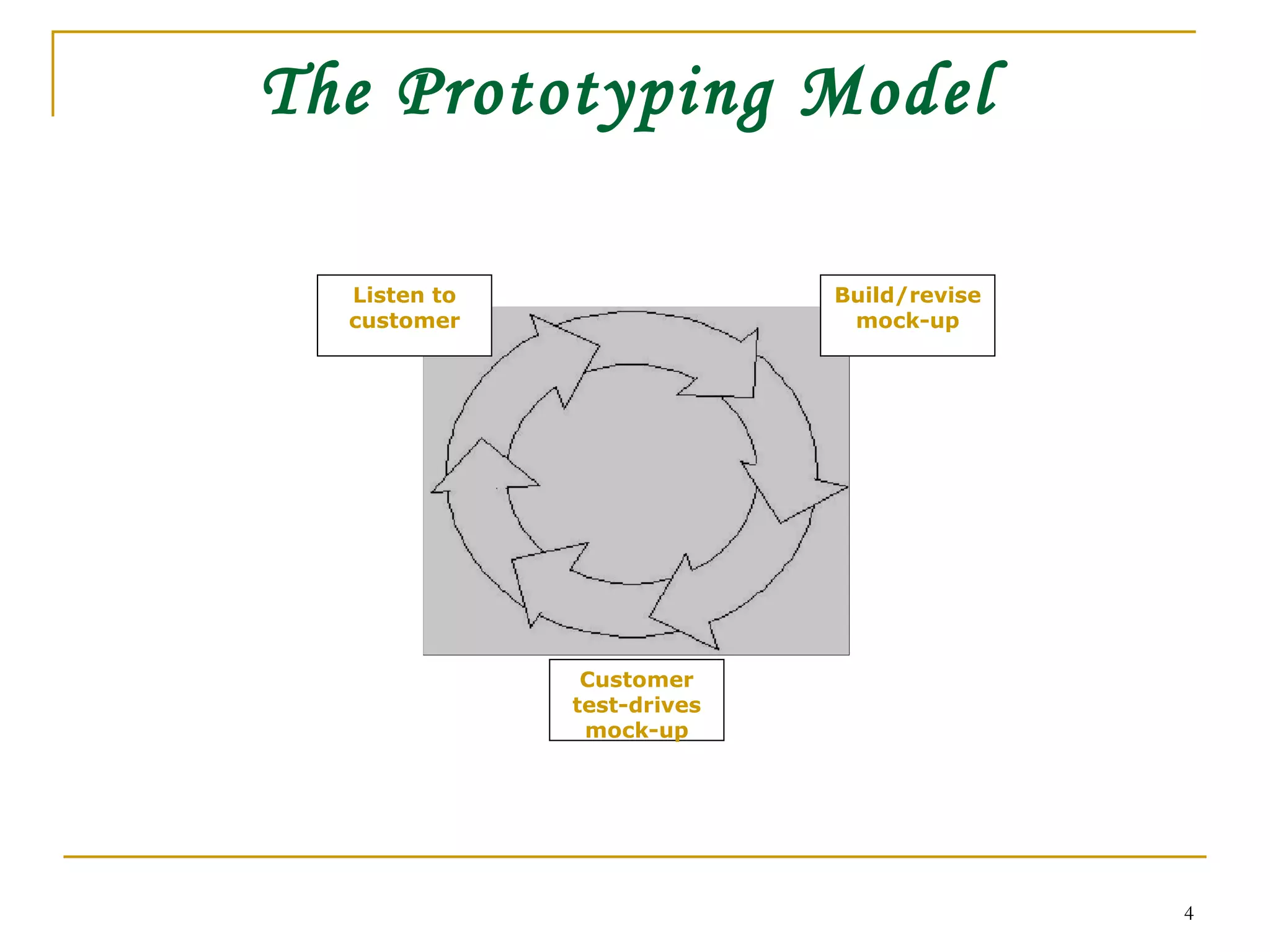

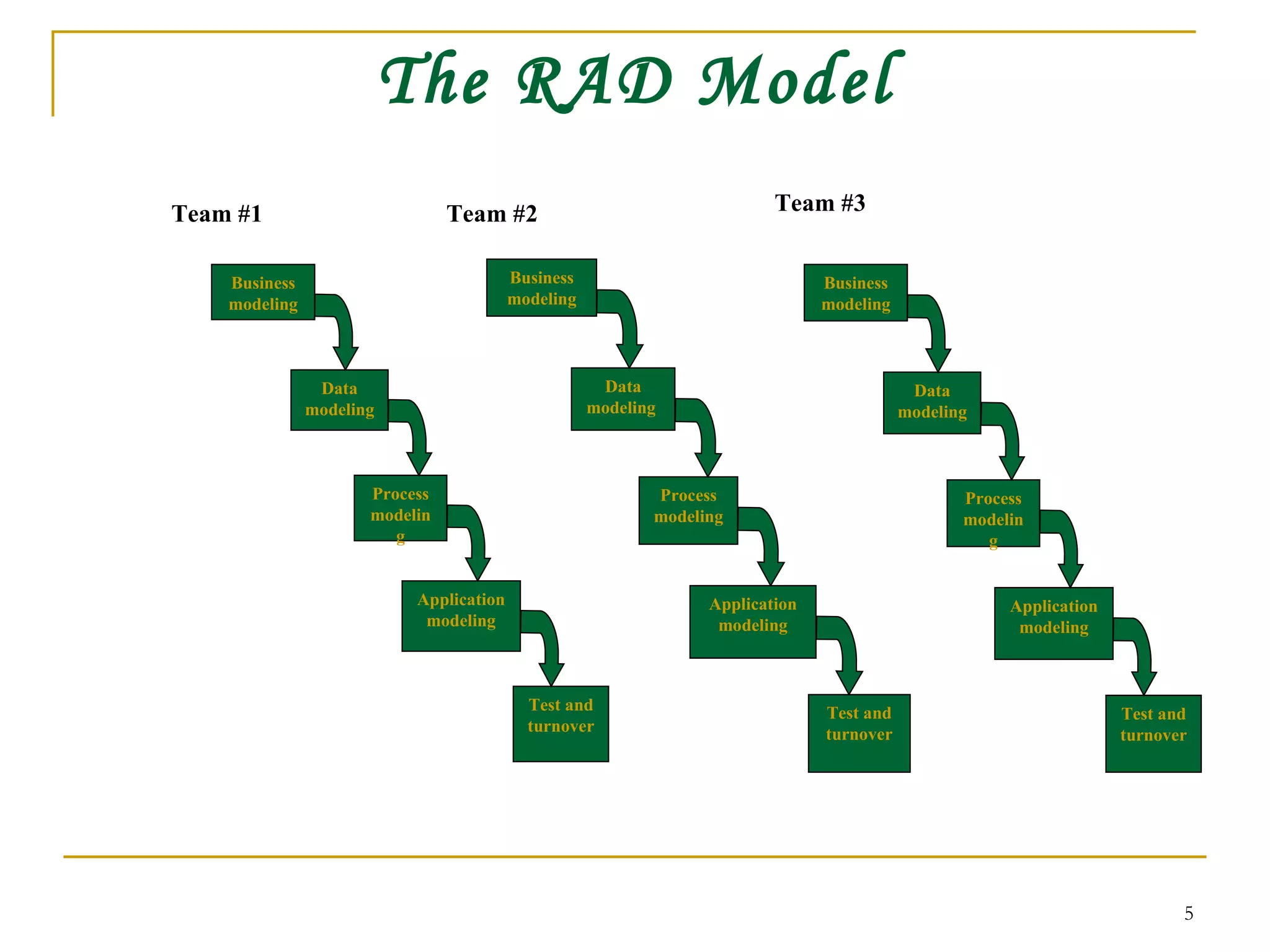

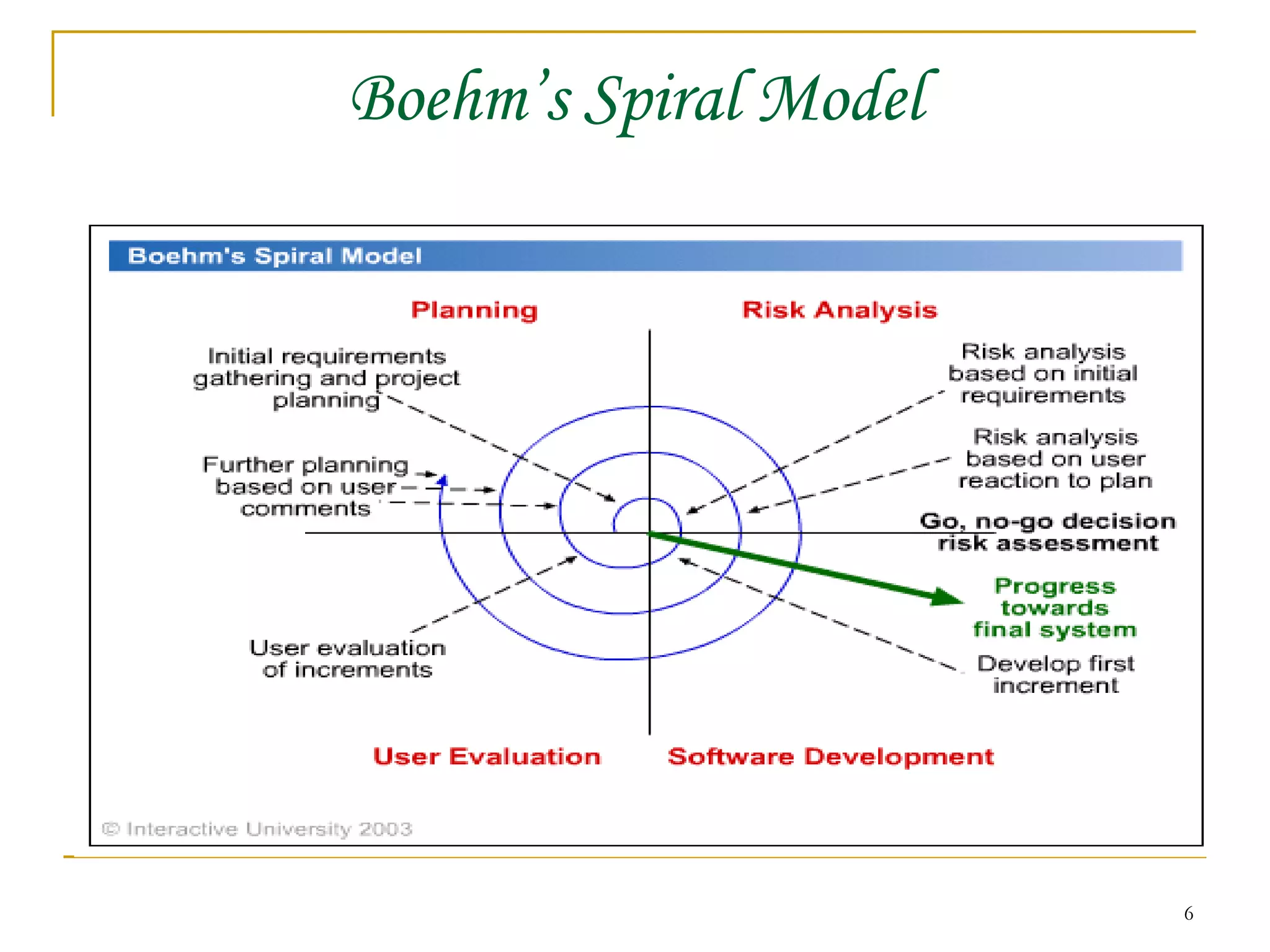

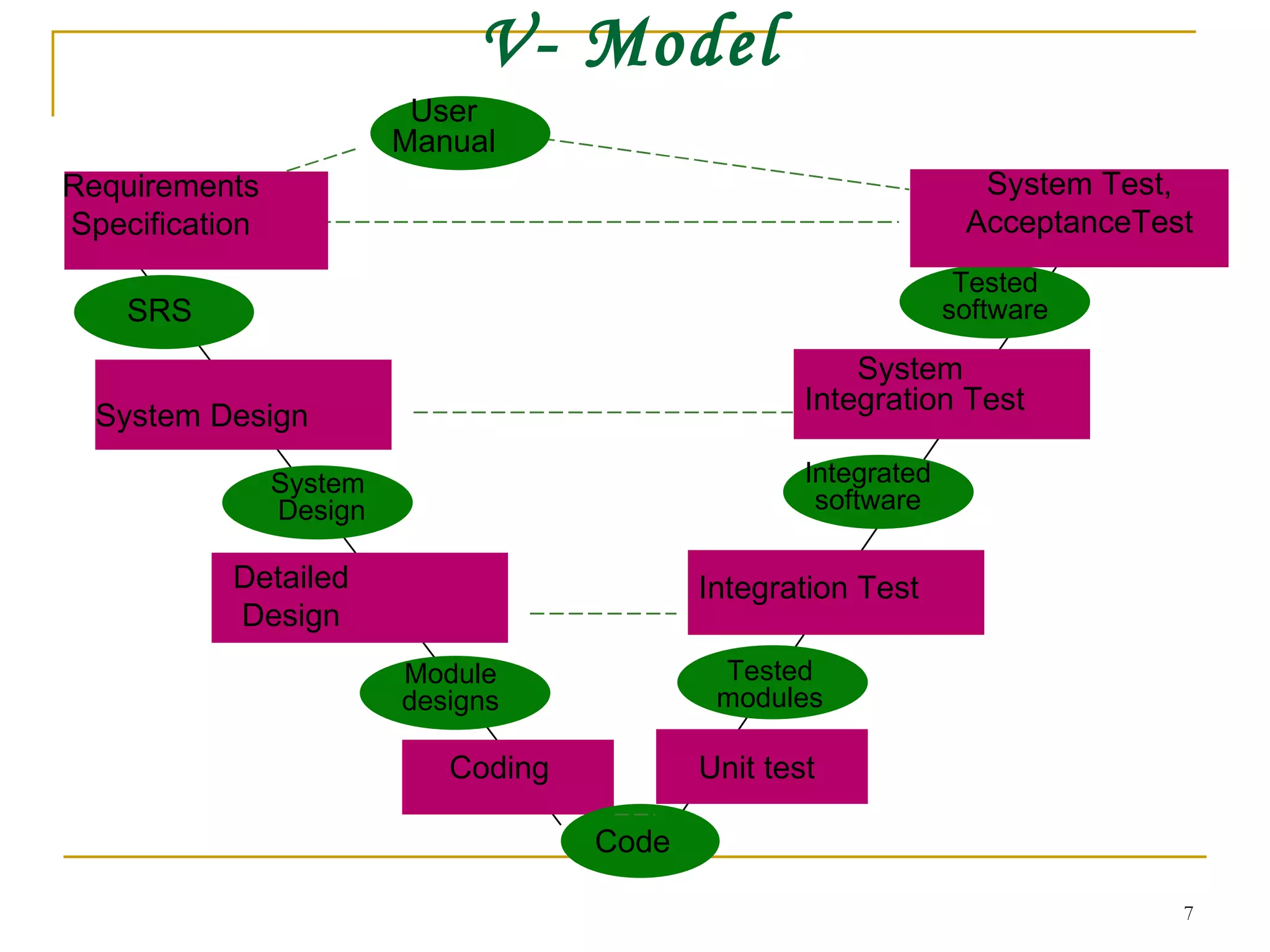

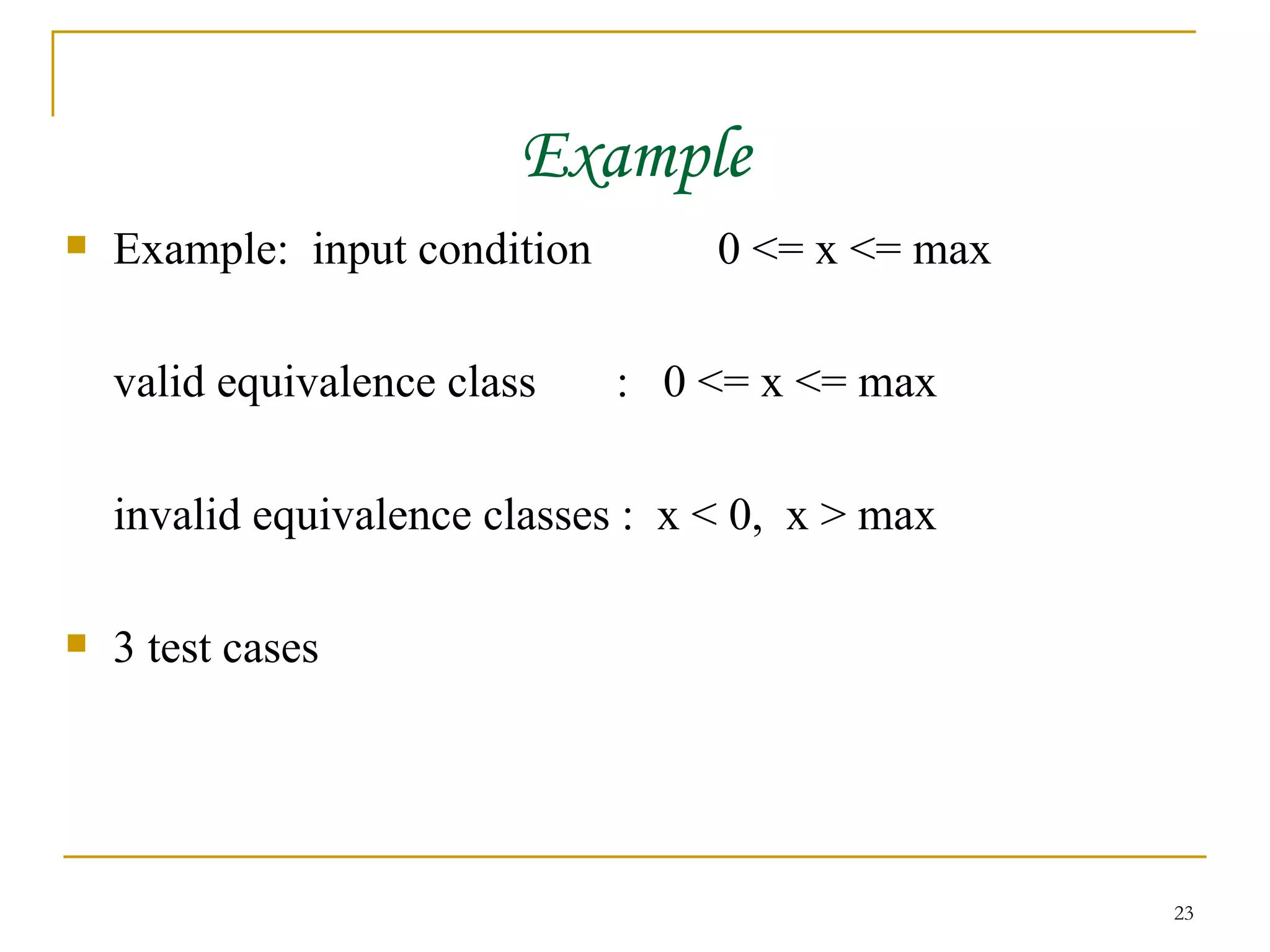

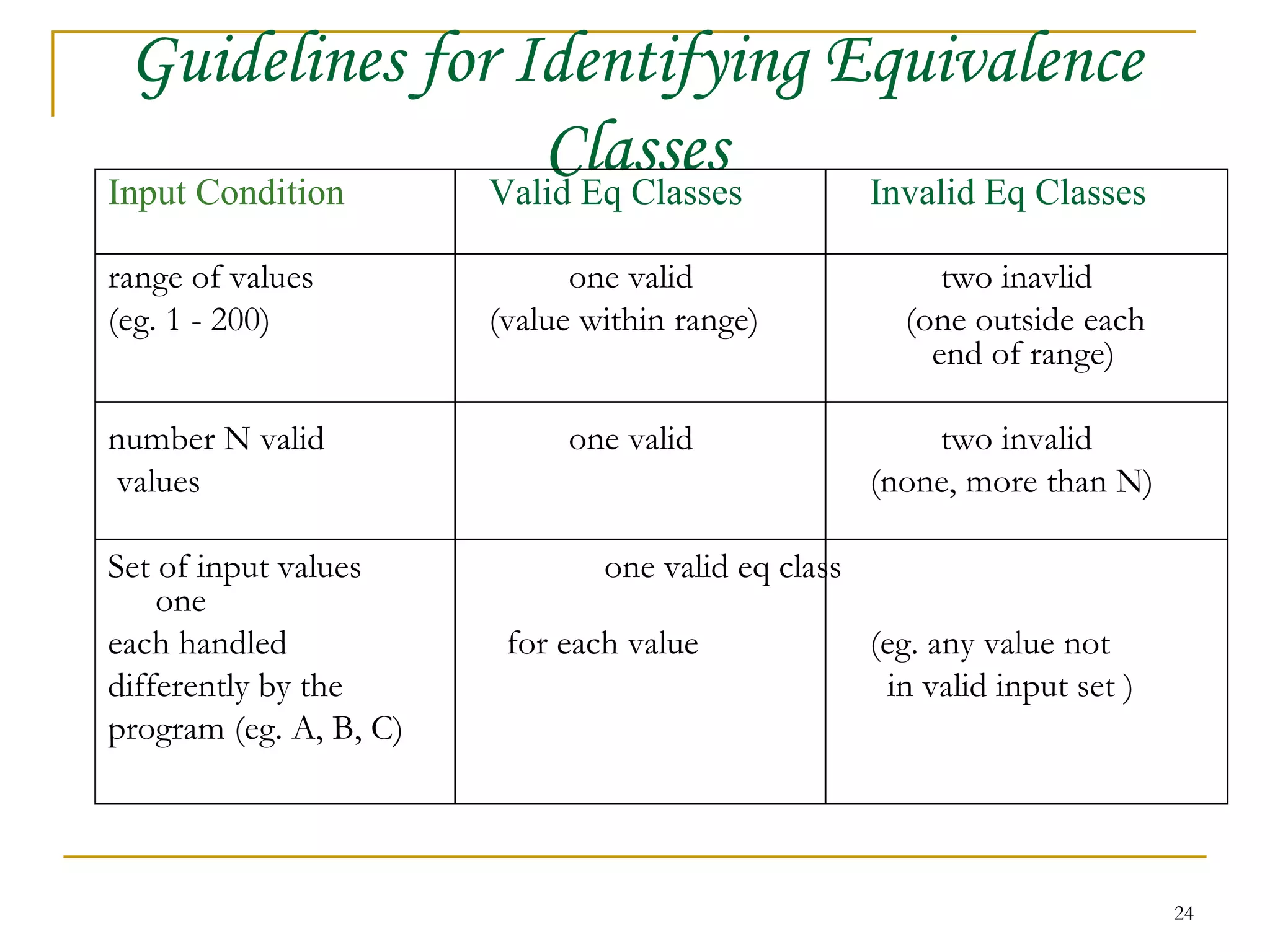

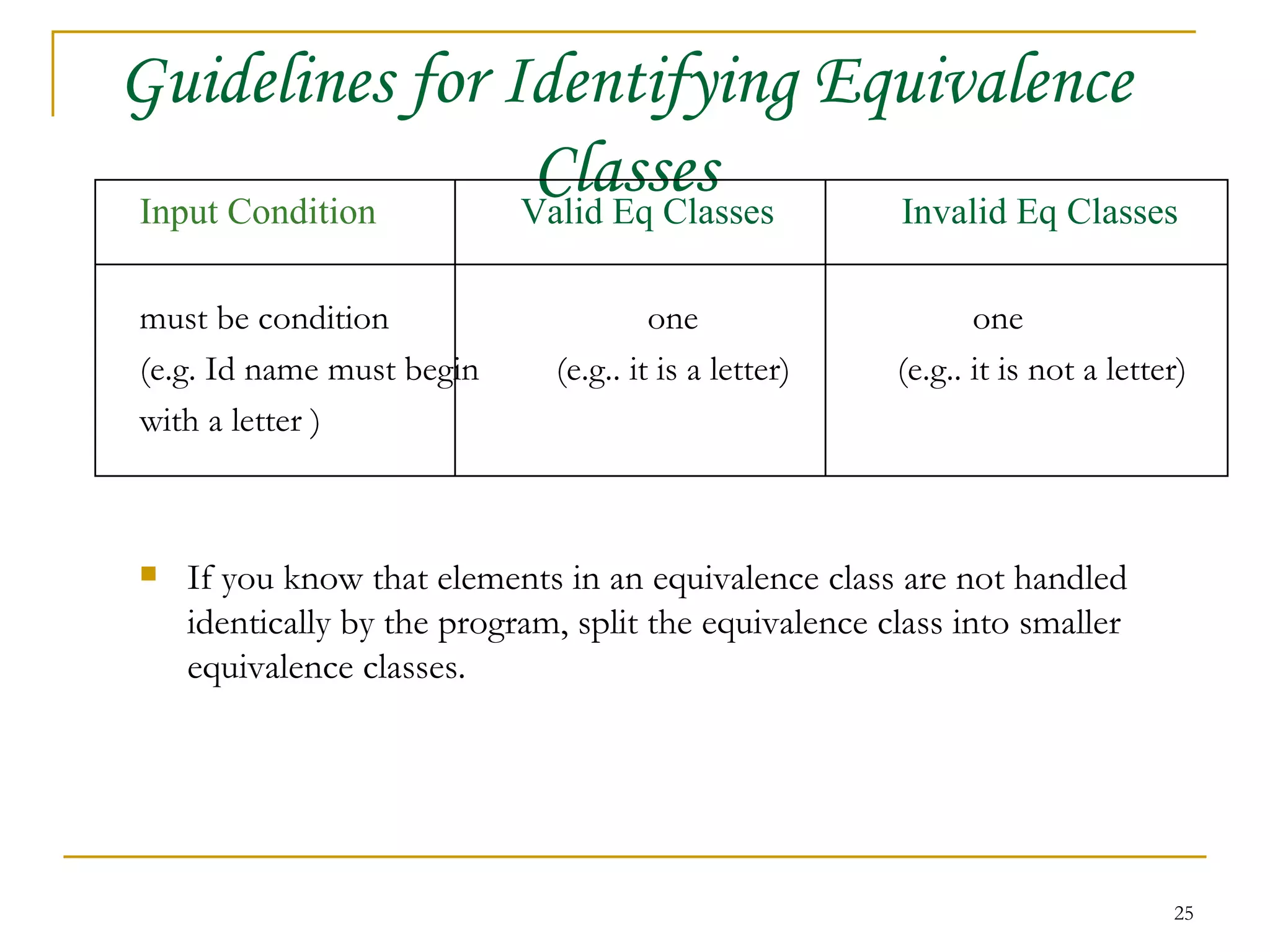

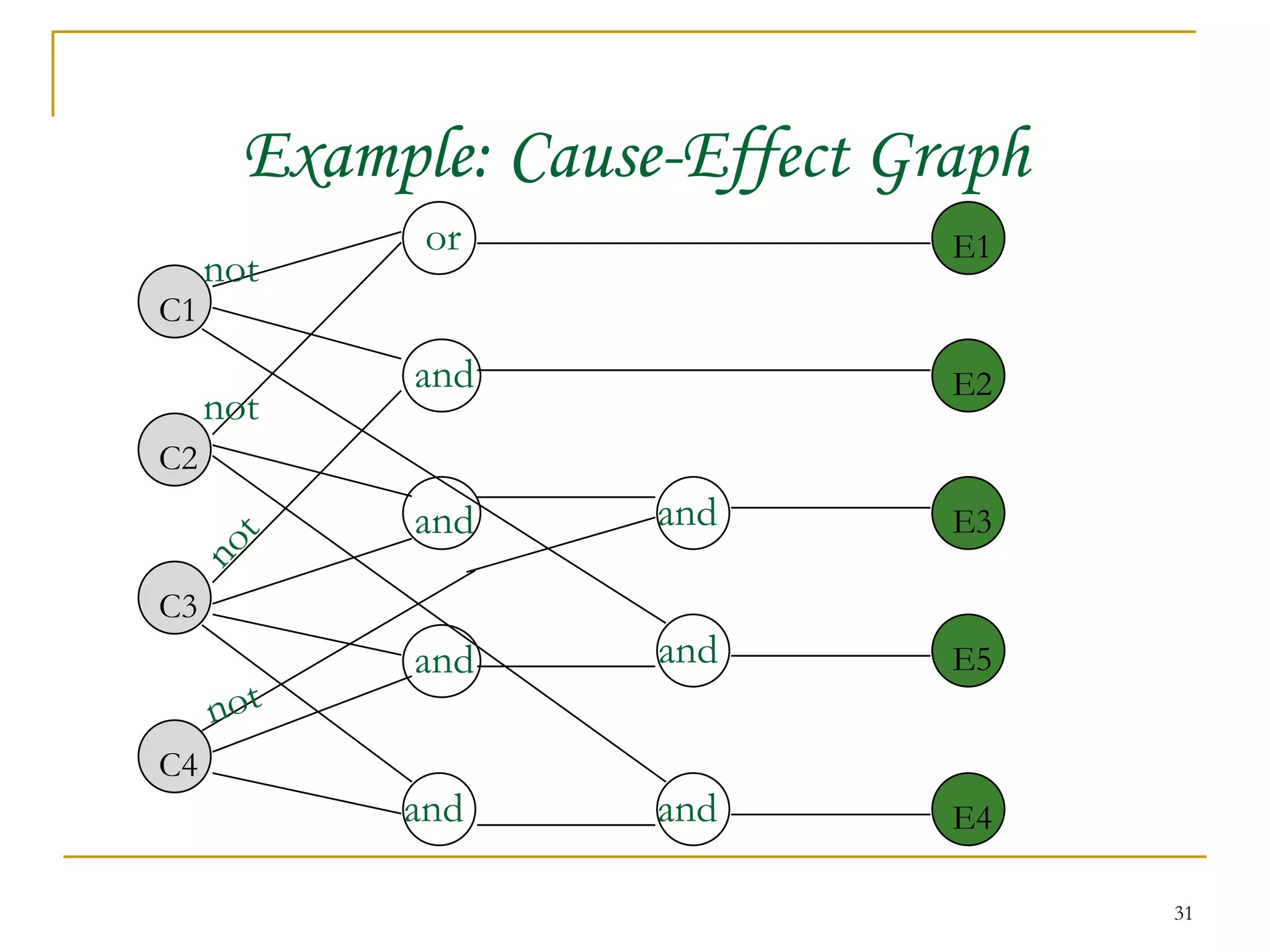

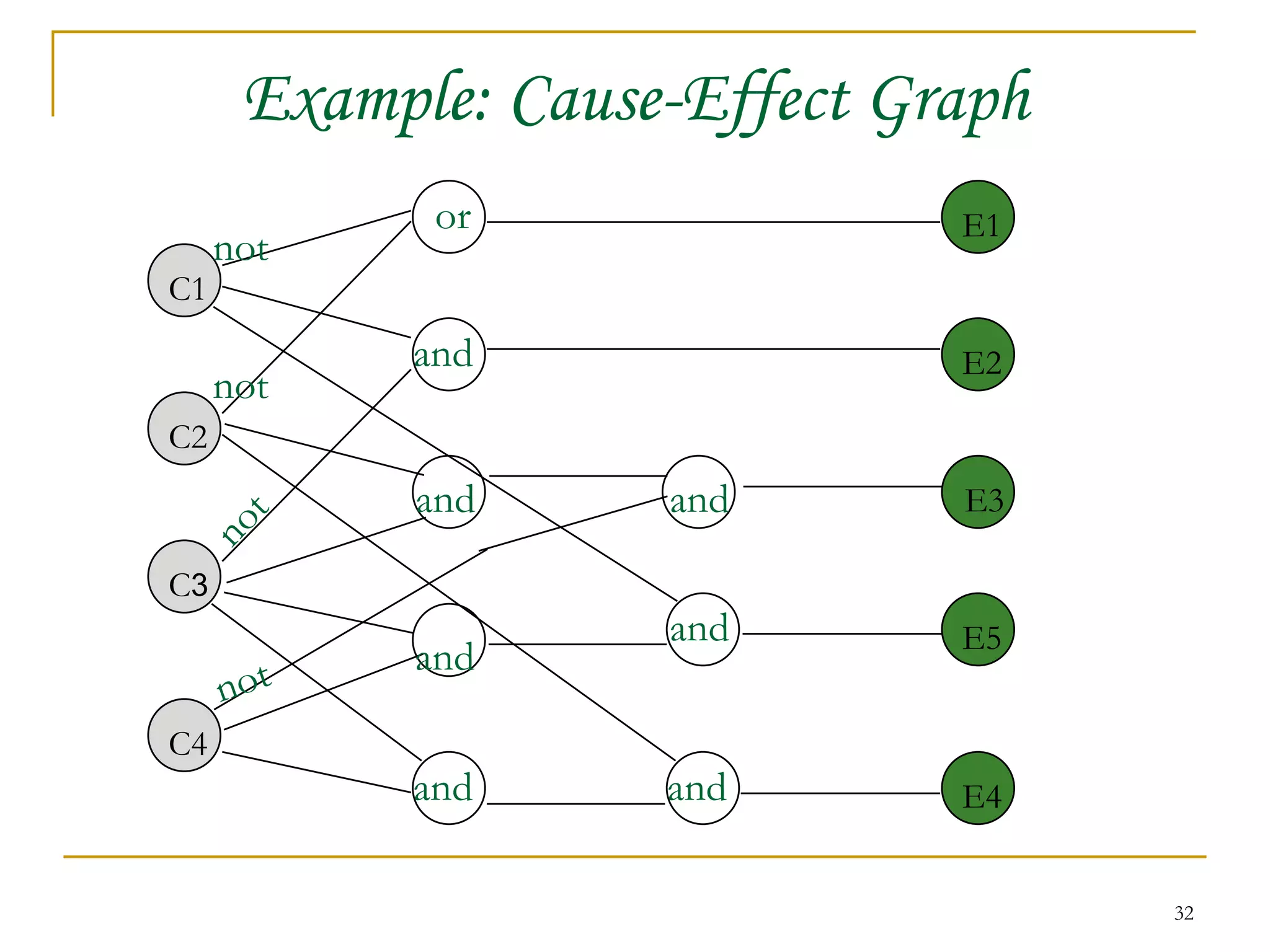

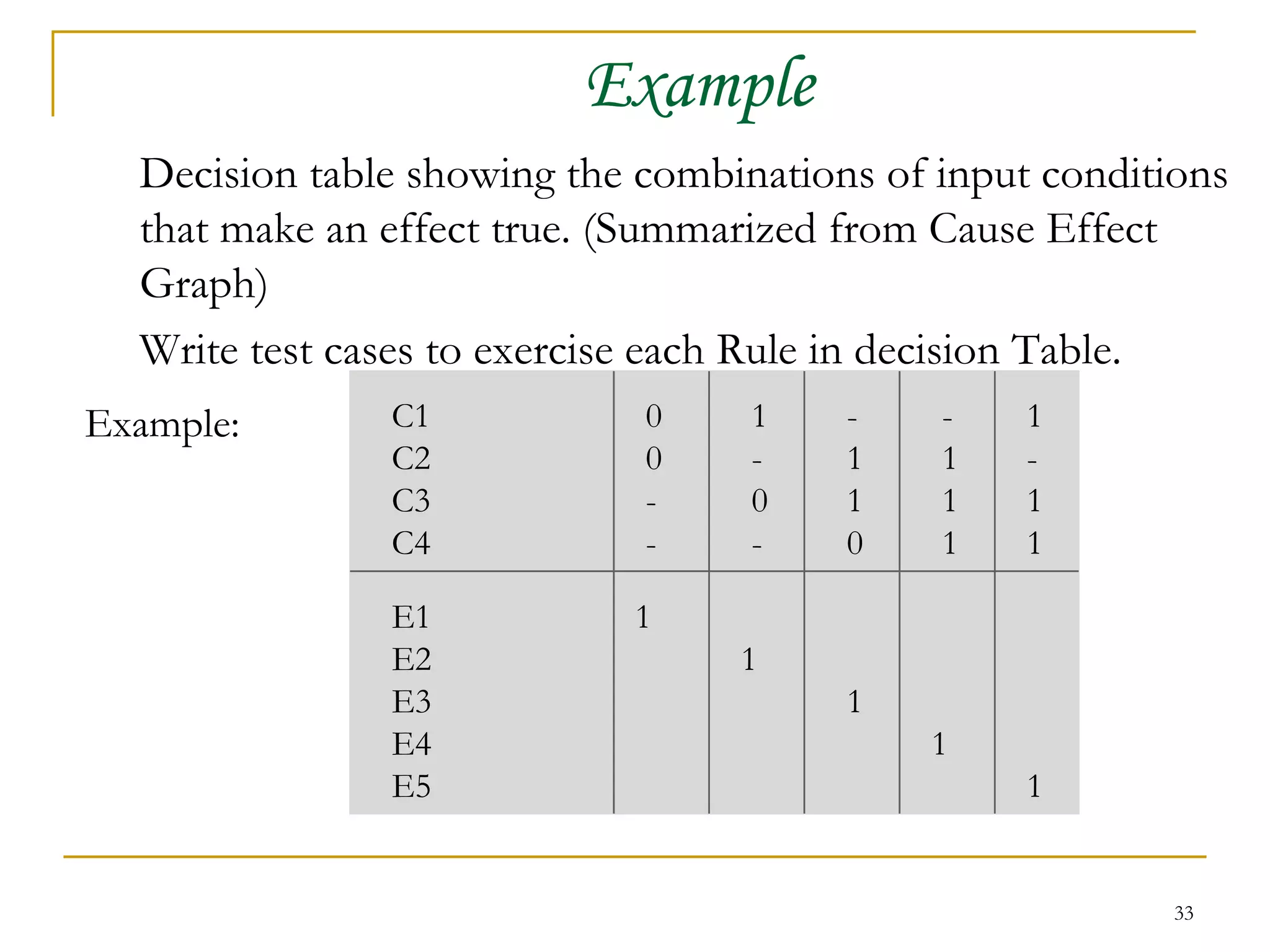

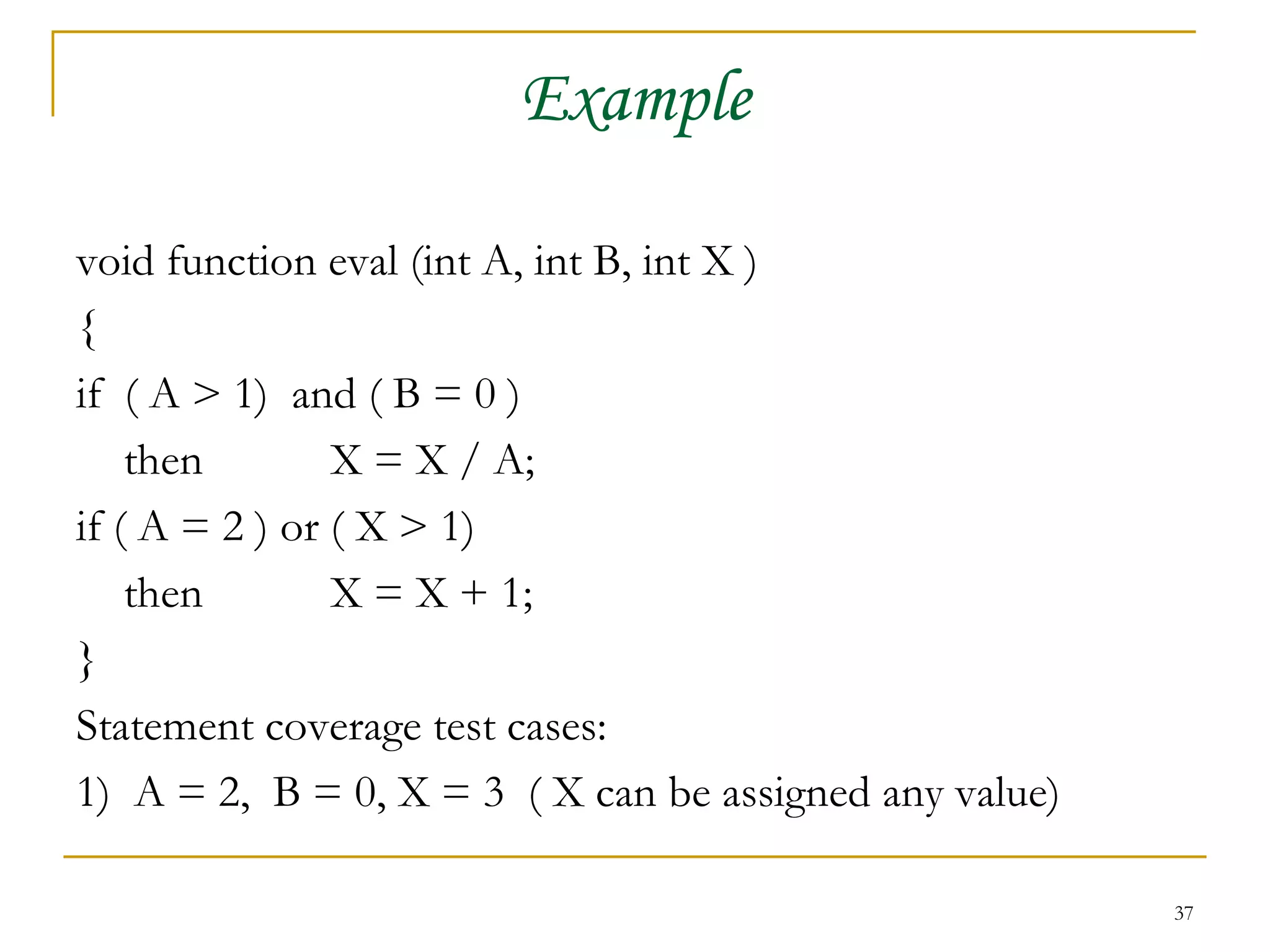

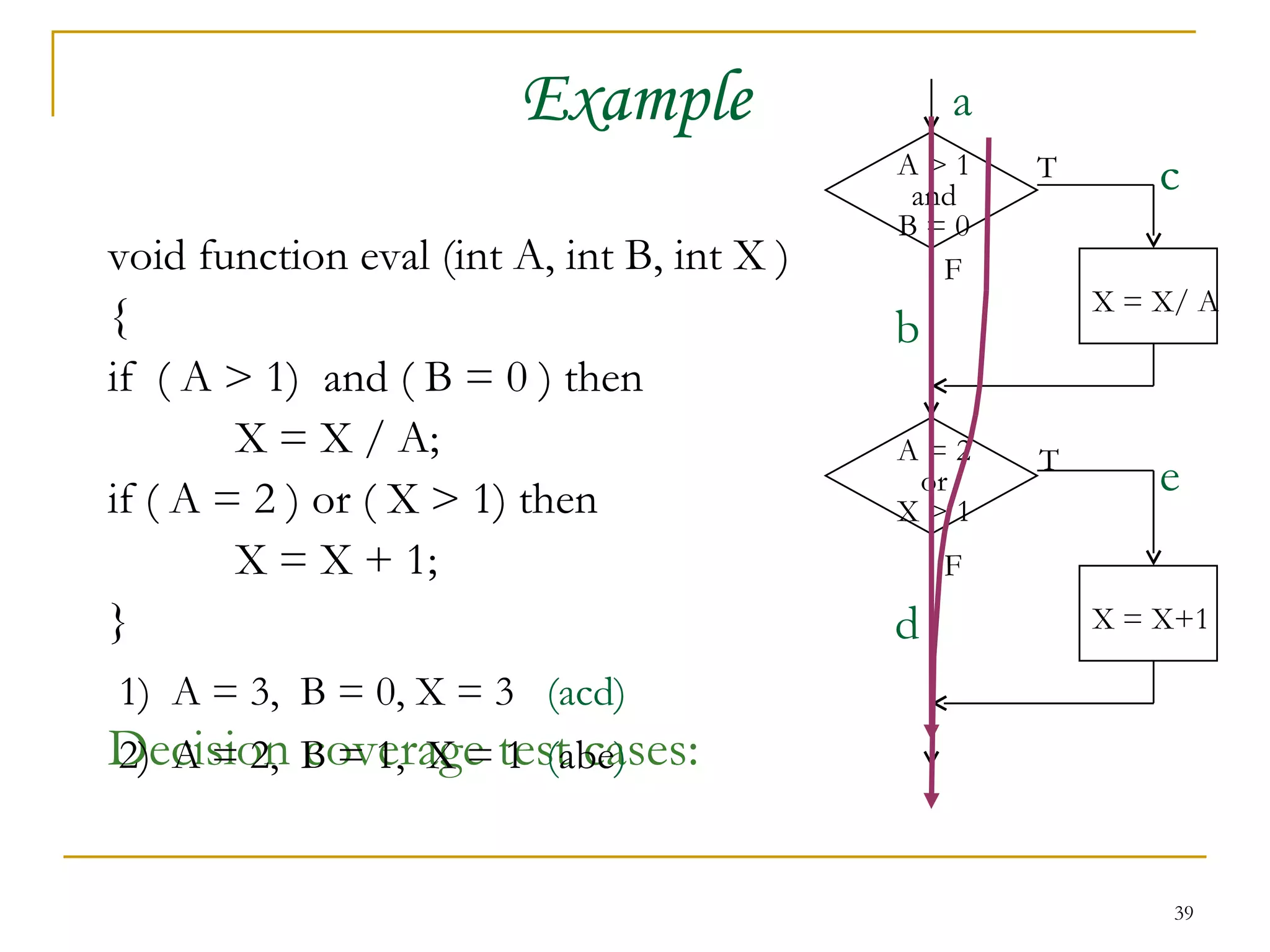

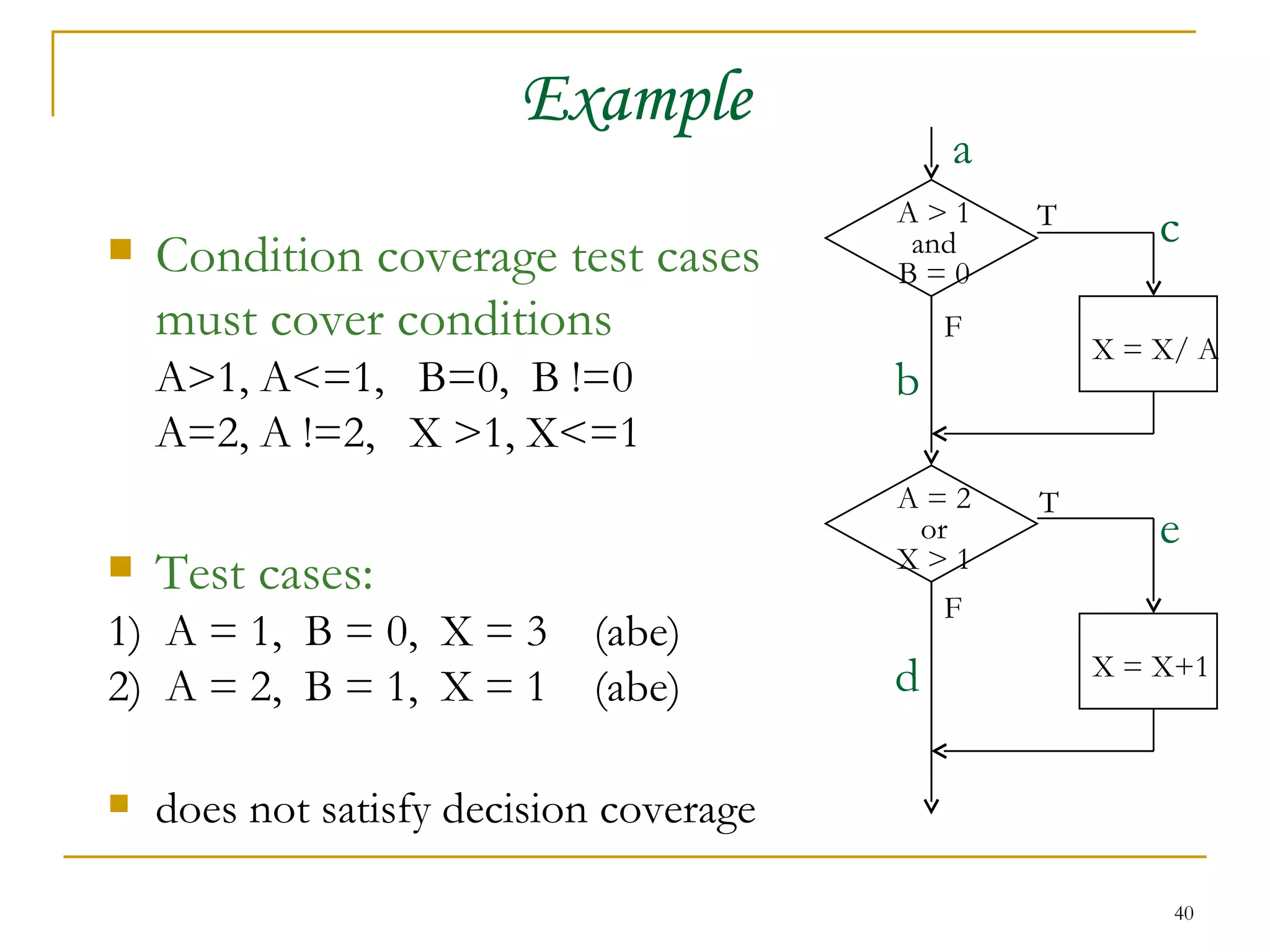

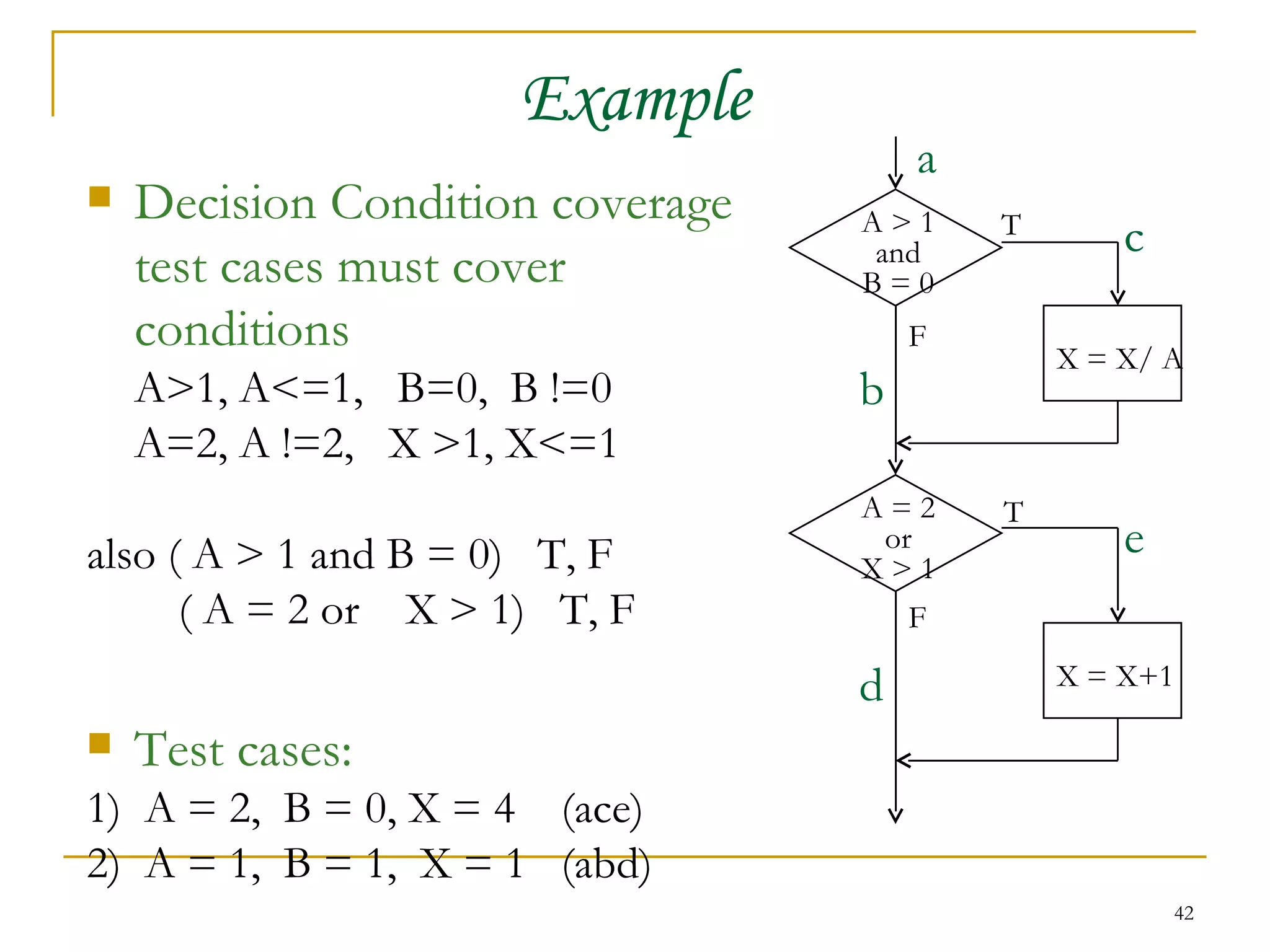

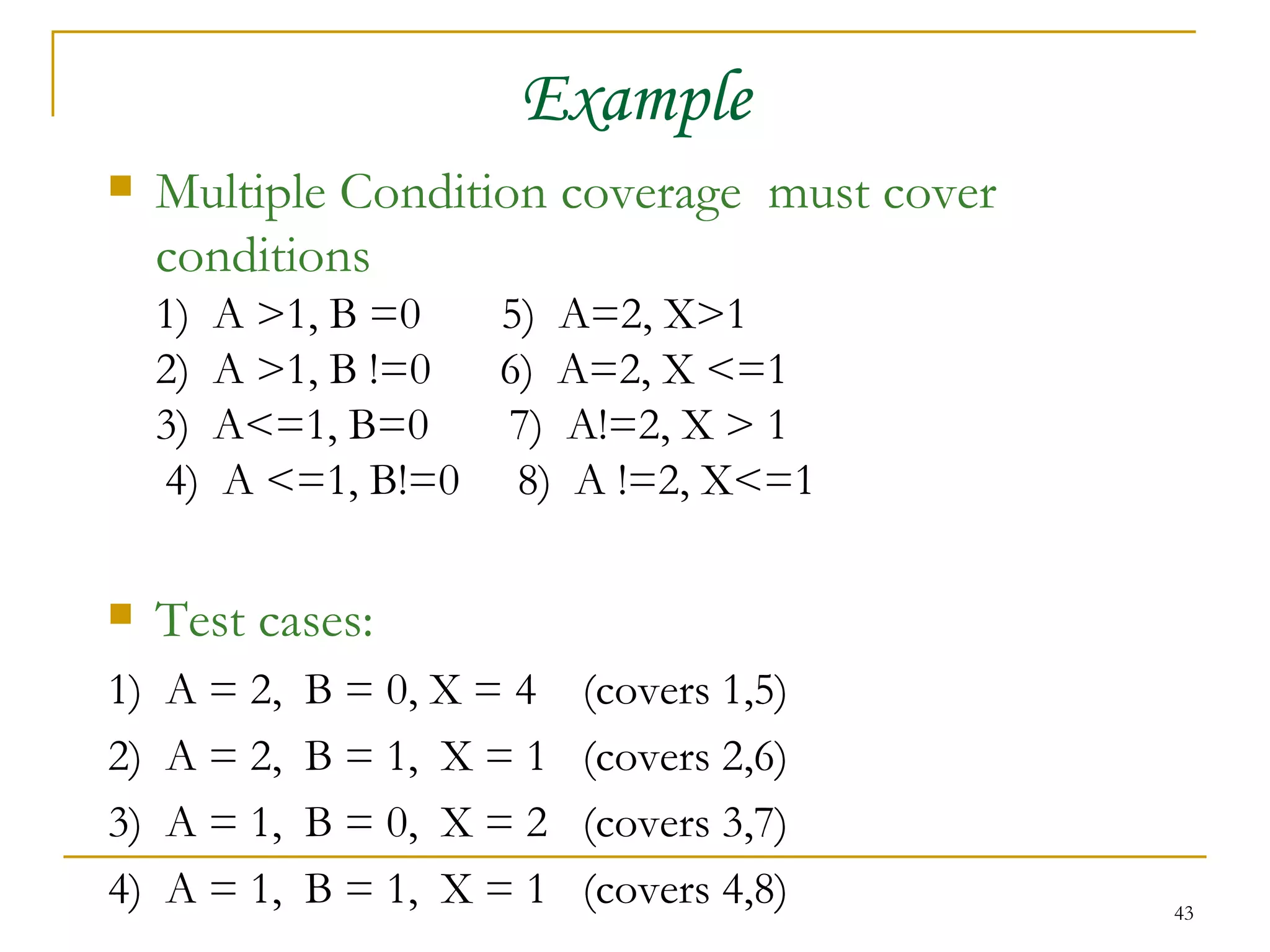

This document discusses the software testing techniques used in the software development lifecycle (SDLC). It begins by describing common SDLC models: waterfall, prototyping, rapid application development (RAD), spiral, and the V-model. It then explains why testing matters at each stage of the SDLC and contrasts the main types of testing, such as static vs. dynamic, black-box vs. white-box, and unit vs. integration testing. The rest of the document elaborates on specific black-box techniques (equivalence partitioning, boundary value analysis, cause-effect graphing) and white-box techniques (statement coverage, basis path testing).
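Equivalence partitioning and boundary value analysis can be sketched in a few lines. The example below assumes a hypothetical unit under test, `accepts`, which takes an integer that is valid on the closed range `[lo, hi]`. The helper names `equivalence_partitions` and `boundary_values` are illustrative and do not come from the slides.

Equivalence partitioning picks one representative value from each class (below, inside, and above the range). Boundary value analysis, in its "robust" form, tests just below, on, and just above each edge.

```python
from typing import List, Tuple


def equivalence_partitions(lo: int, hi: int) -> List[Tuple[str, int, bool]]:
    """One representative per equivalence class for an integer input valid on
    [lo, hi], as (class name, value, expected validity). Every member of a class
    is assumed to behave the same, so these particular picks are arbitrary."""
    return [
        ("below range", lo - 10, False),
        ("in range", (lo + hi) // 2, True),
        ("above range", hi + 10, False),
    ]


def boundary_values(lo: int, hi: int) -> List[Tuple[int, bool]]:
    """Robust boundary value analysis: values just below, on, and just above
    each edge, paired with the expected validity. Assumes hi - lo >= 2 so the
    six values are distinct."""
    return [
        (lo - 1, False),
        (lo, True),
        (lo + 1, True),
        (hi - 1, True),
        (hi, True),
        (hi + 1, False),
    ]


def accepts(value: int, lo: int = 1, hi: int = 100) -> bool:
    """Hypothetical unit under test: accepts integers in the closed range [lo, hi]."""
    return lo <= value <= hi


if __name__ == "__main__":
    lo, hi = 1, 100
    # Run each equivalence-class representative and check the expected outcome.
    for name, value, expected in equivalence_partitions(lo, hi):
        assert accepts(value, lo, hi) == expected, f"partition '{name}' failed"
    # Run each boundary value and check the expected outcome.
    for value, expected in boundary_values(lo, hi):
        assert accepts(value, lo, hi) == expected, f"boundary {value} failed"
    print("all partition and boundary cases pass")
```

Note that the expected results are written out explicitly instead of being recomputed with the same comparison the unit uses. Recomputing them that way would make the tests pass trivially. An off-by-one bug, such as writing `lo < value` instead of `lo <= value`, would be caught by the boundary case at `lo` but missed by the equivalence-class representatives.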