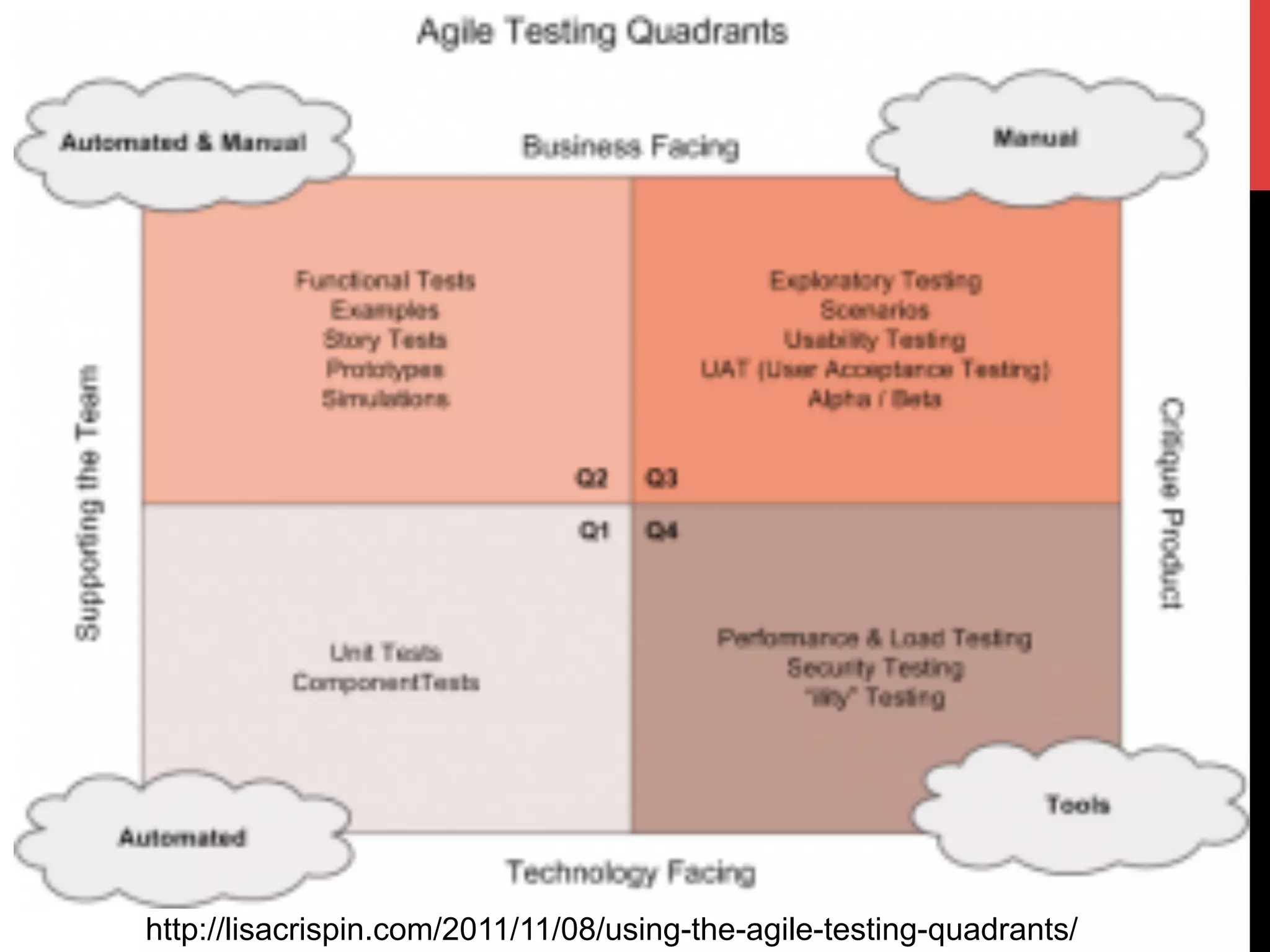

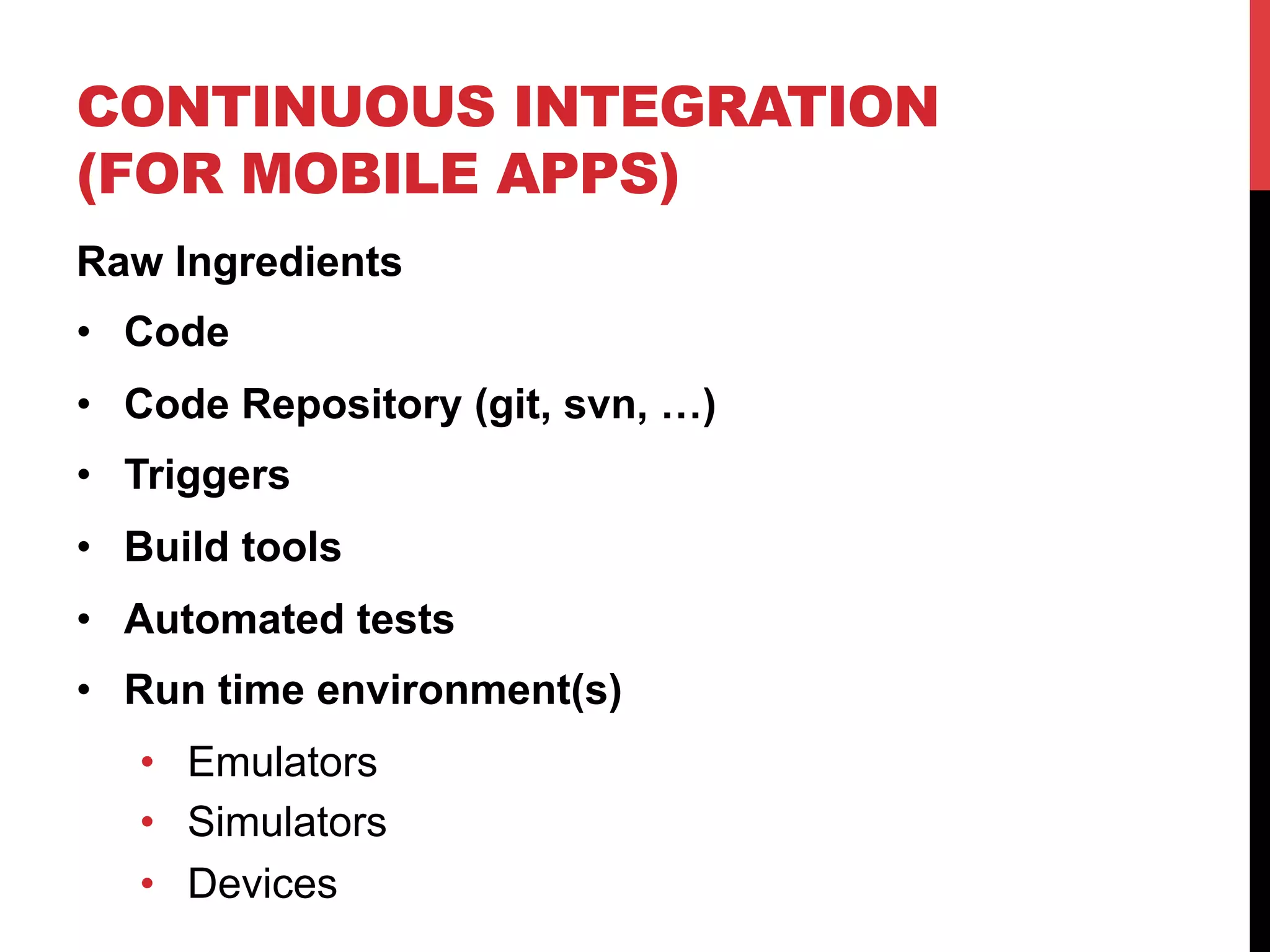

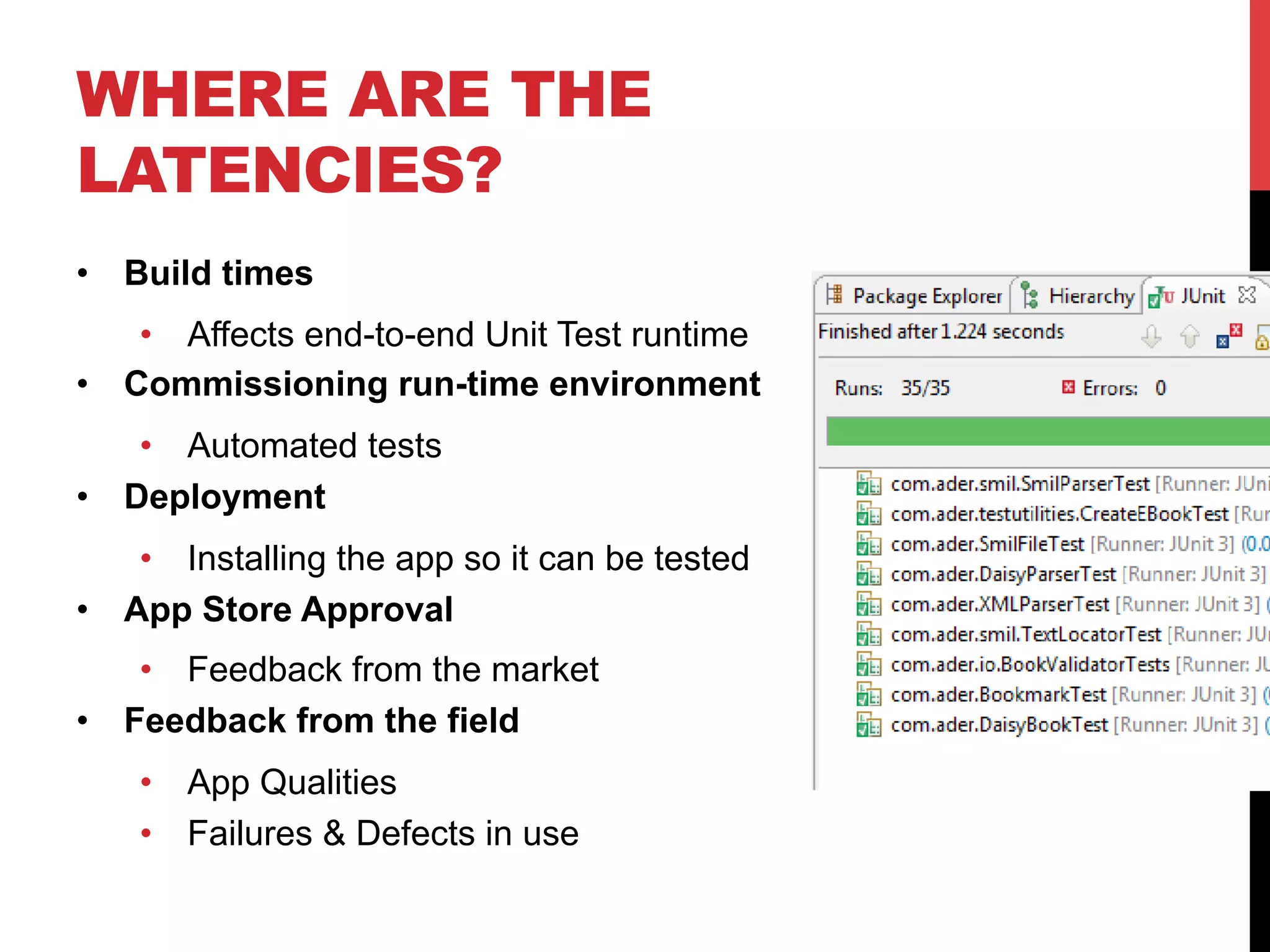

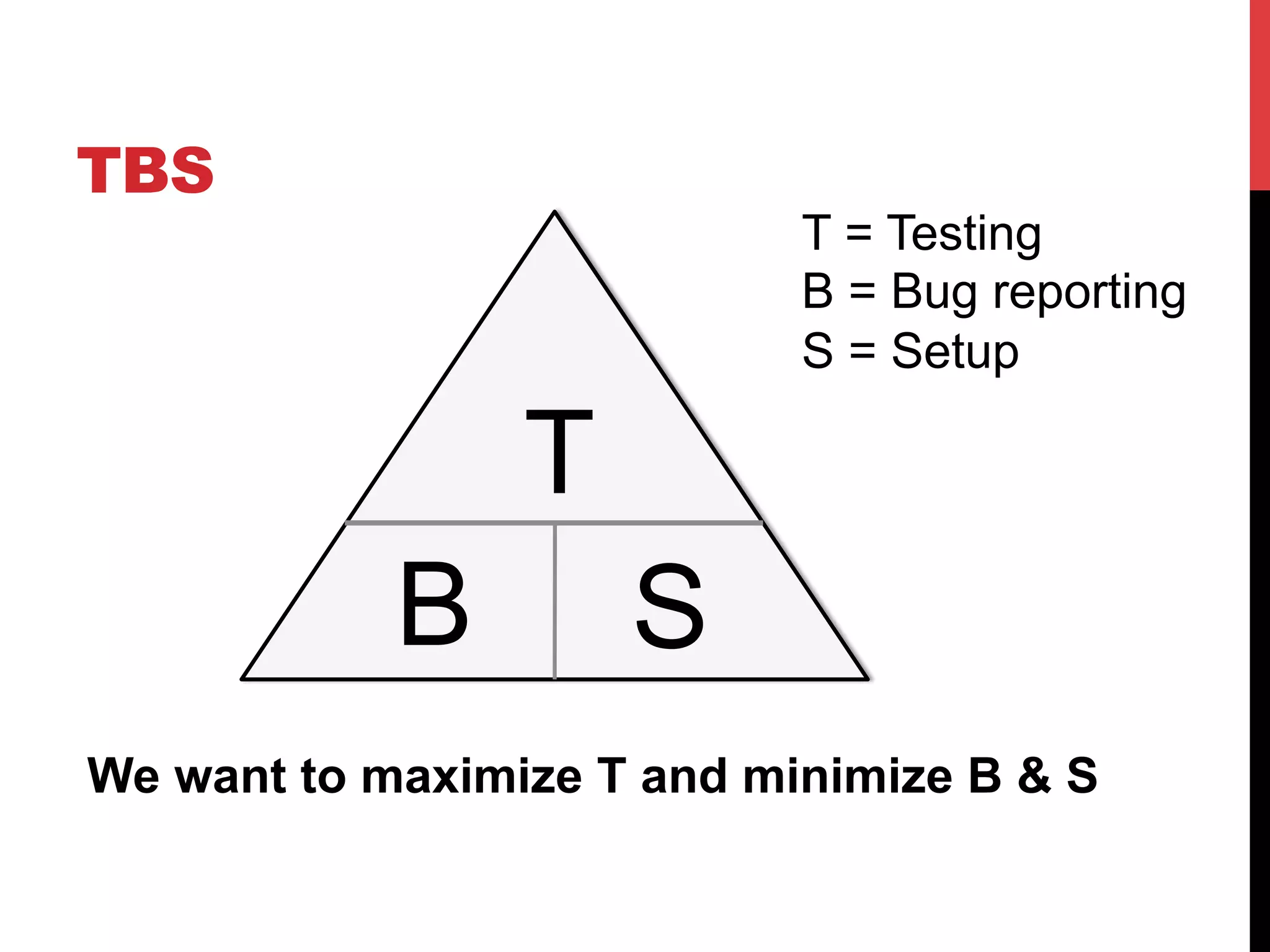

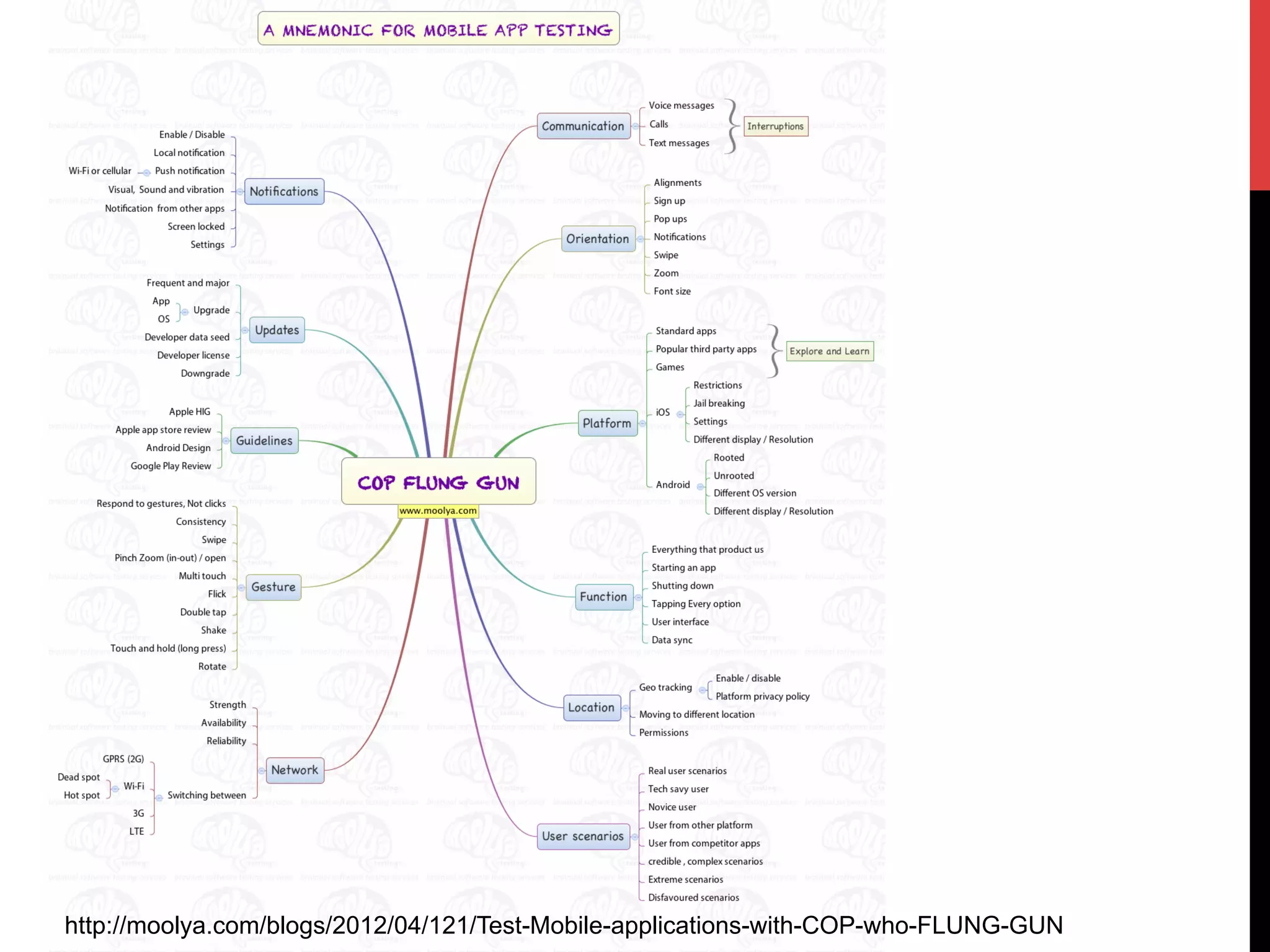

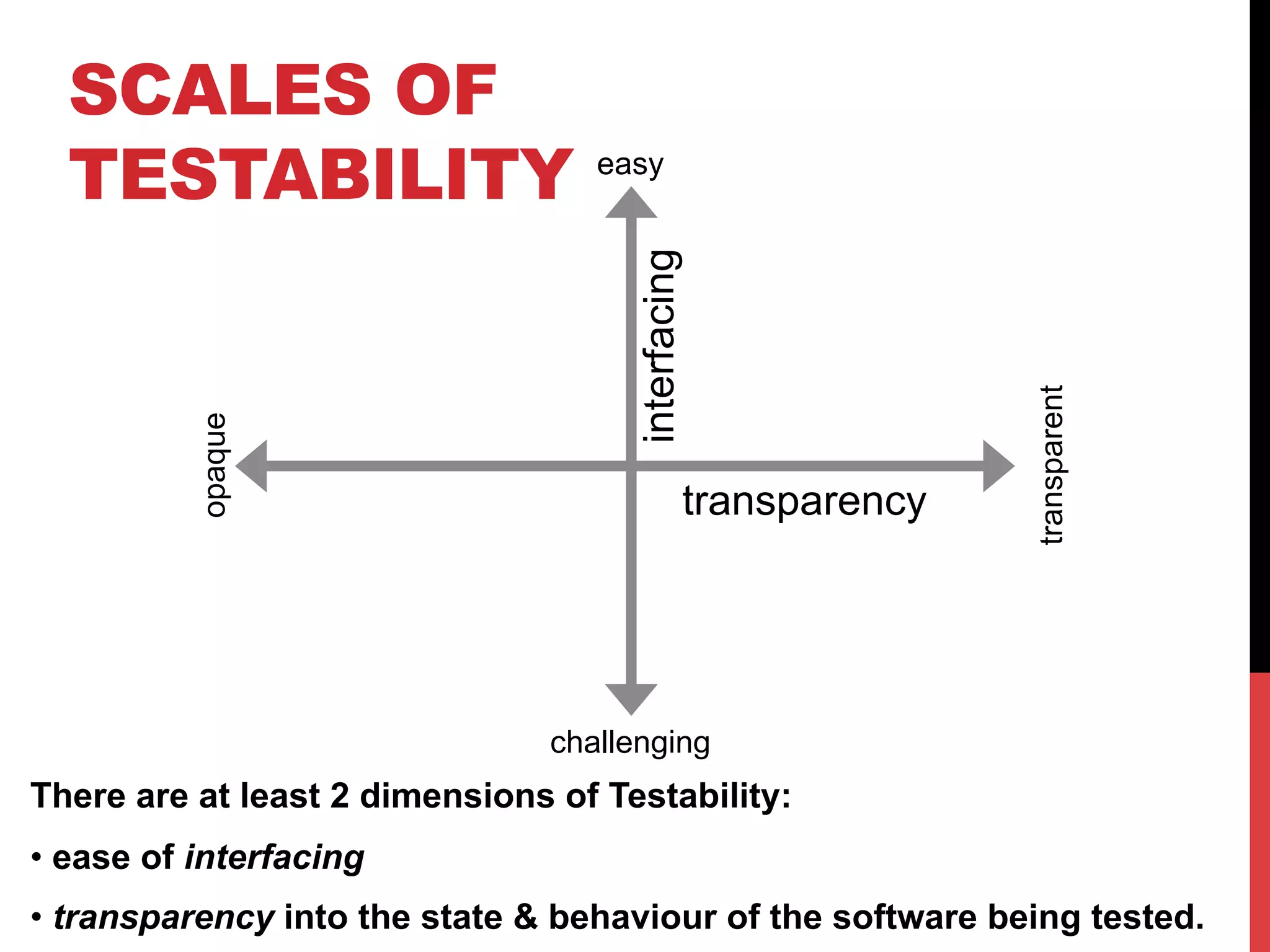

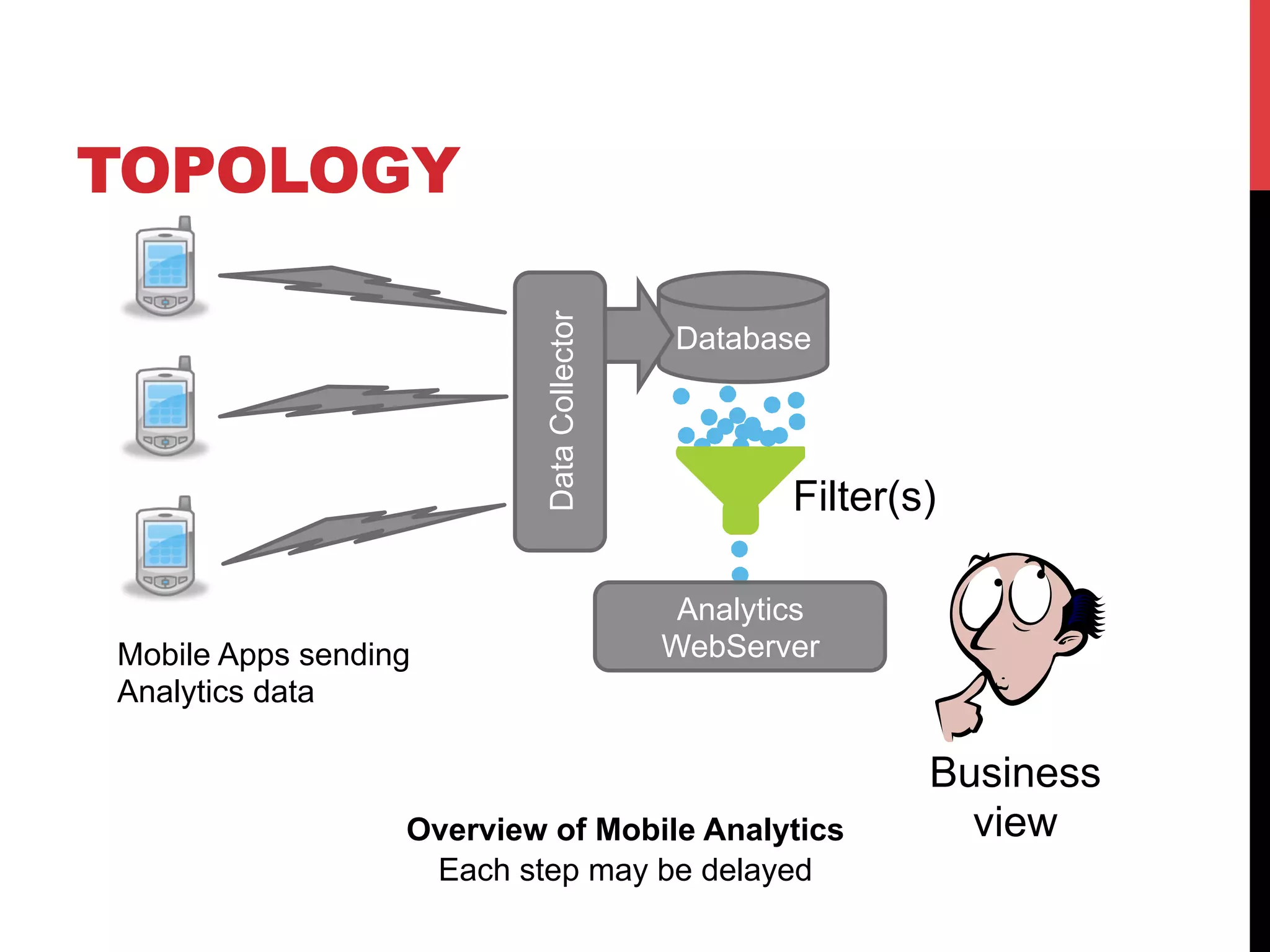

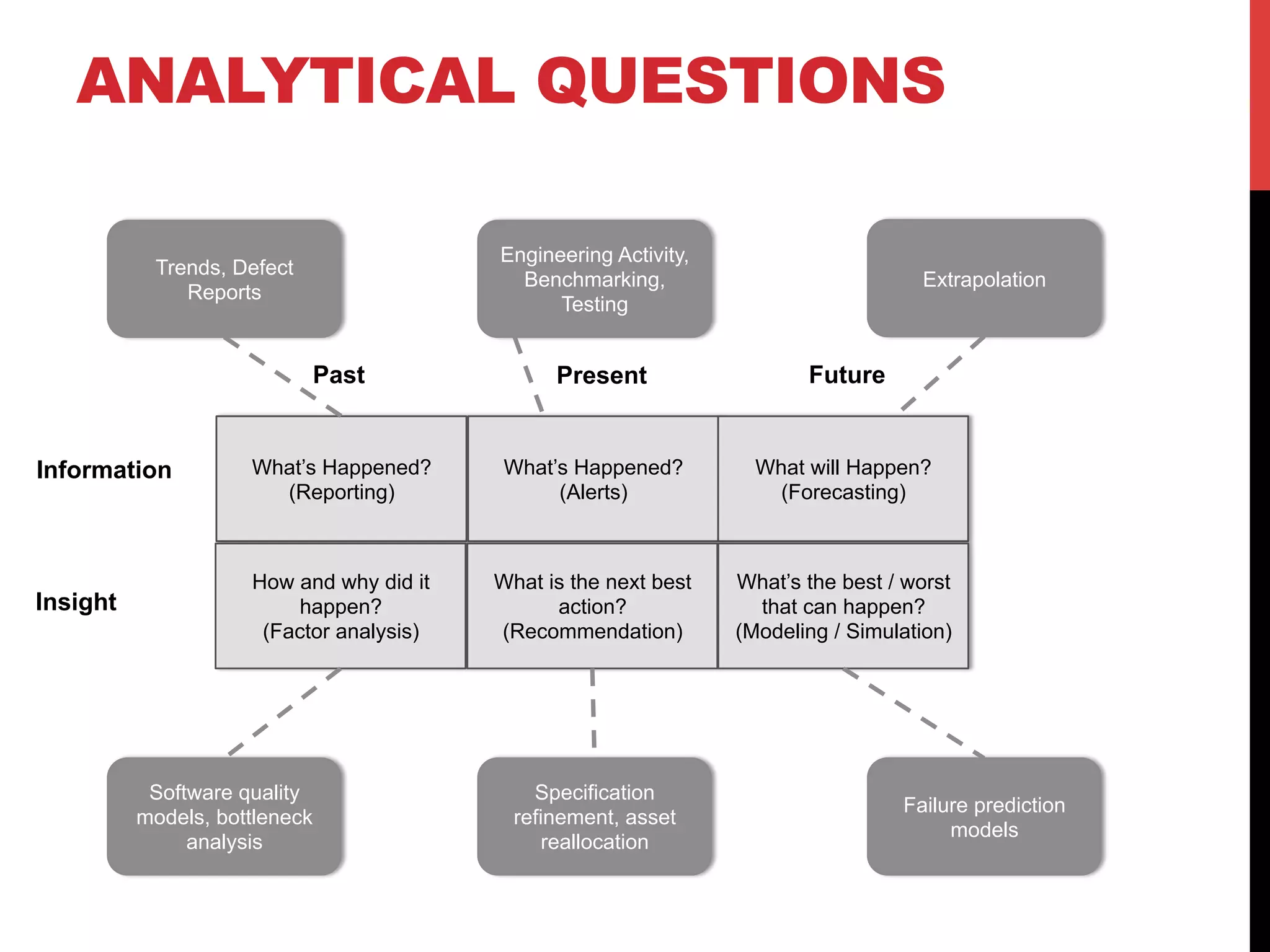

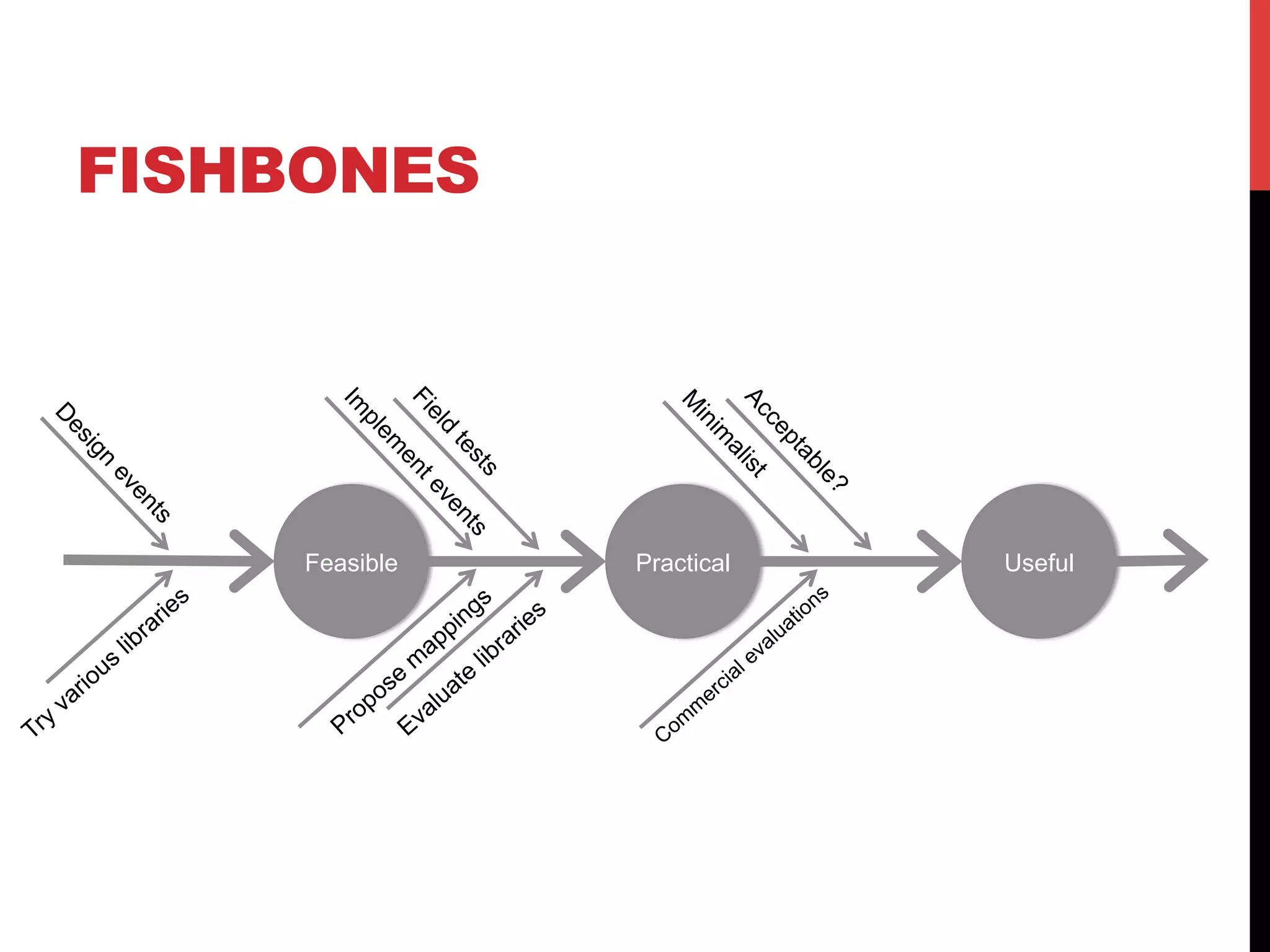

The document discusses the importance of agile mobile testing and offers methods for improving testability in software design, emphasizing timely feedback and effective use of resources. It highlights the need for continuous integration and for separation of concerns in code to aid maintainability, alongside alternatives to traditional testing such as crowdsourcing and analytics. Key considerations include balancing development costs with maintenance and leveraging analytics to better understand software performance and user feedback.