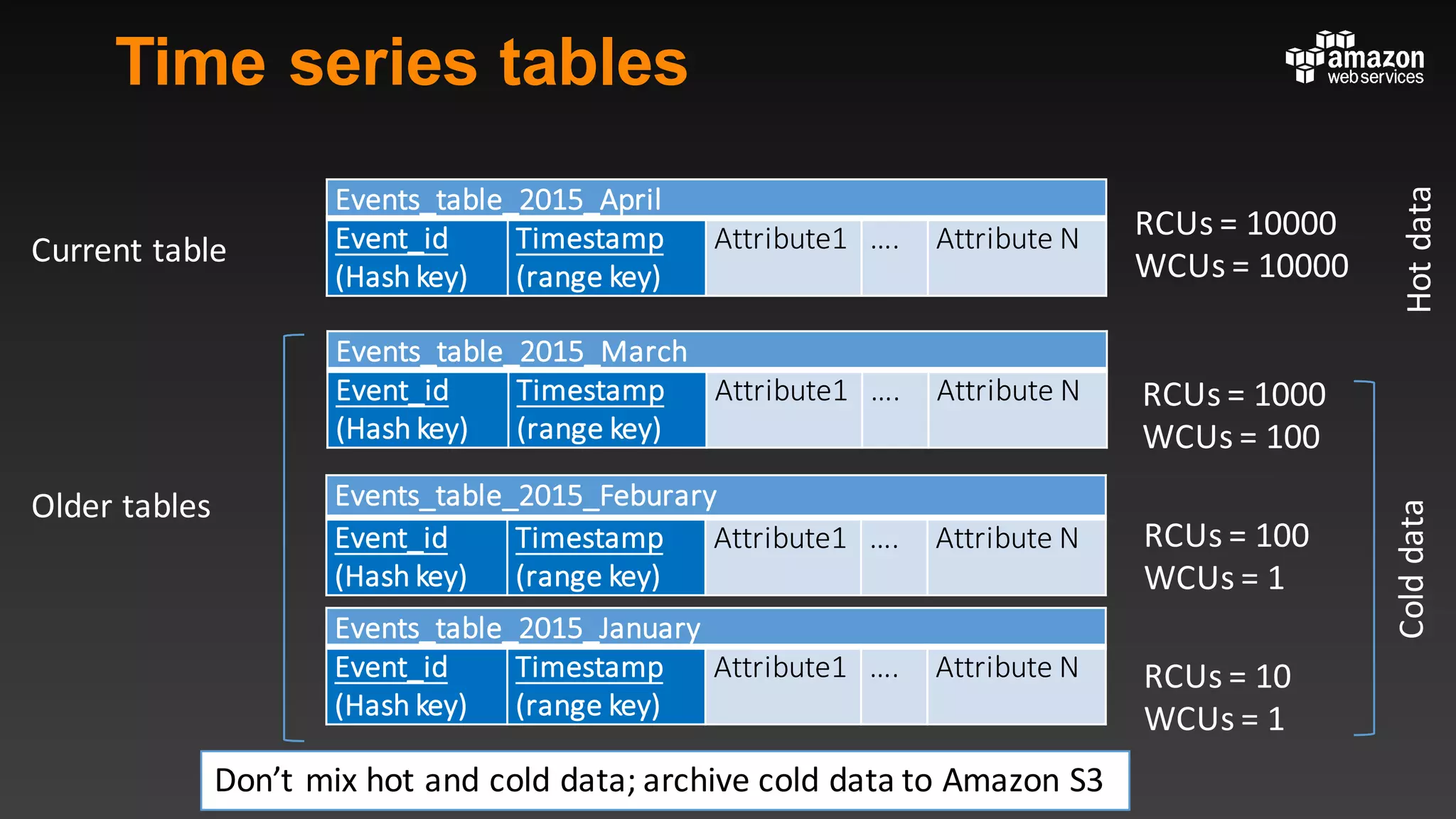

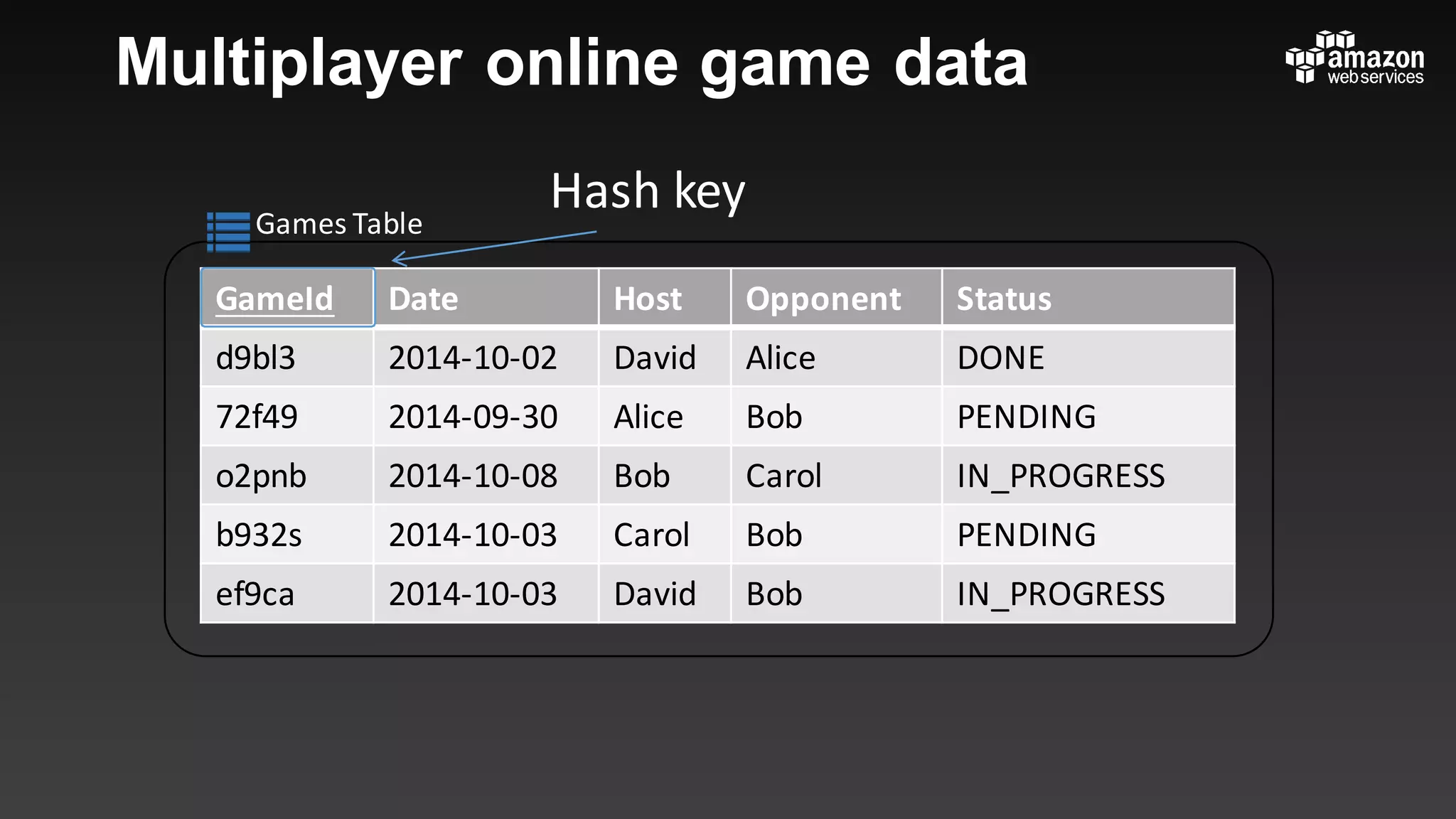

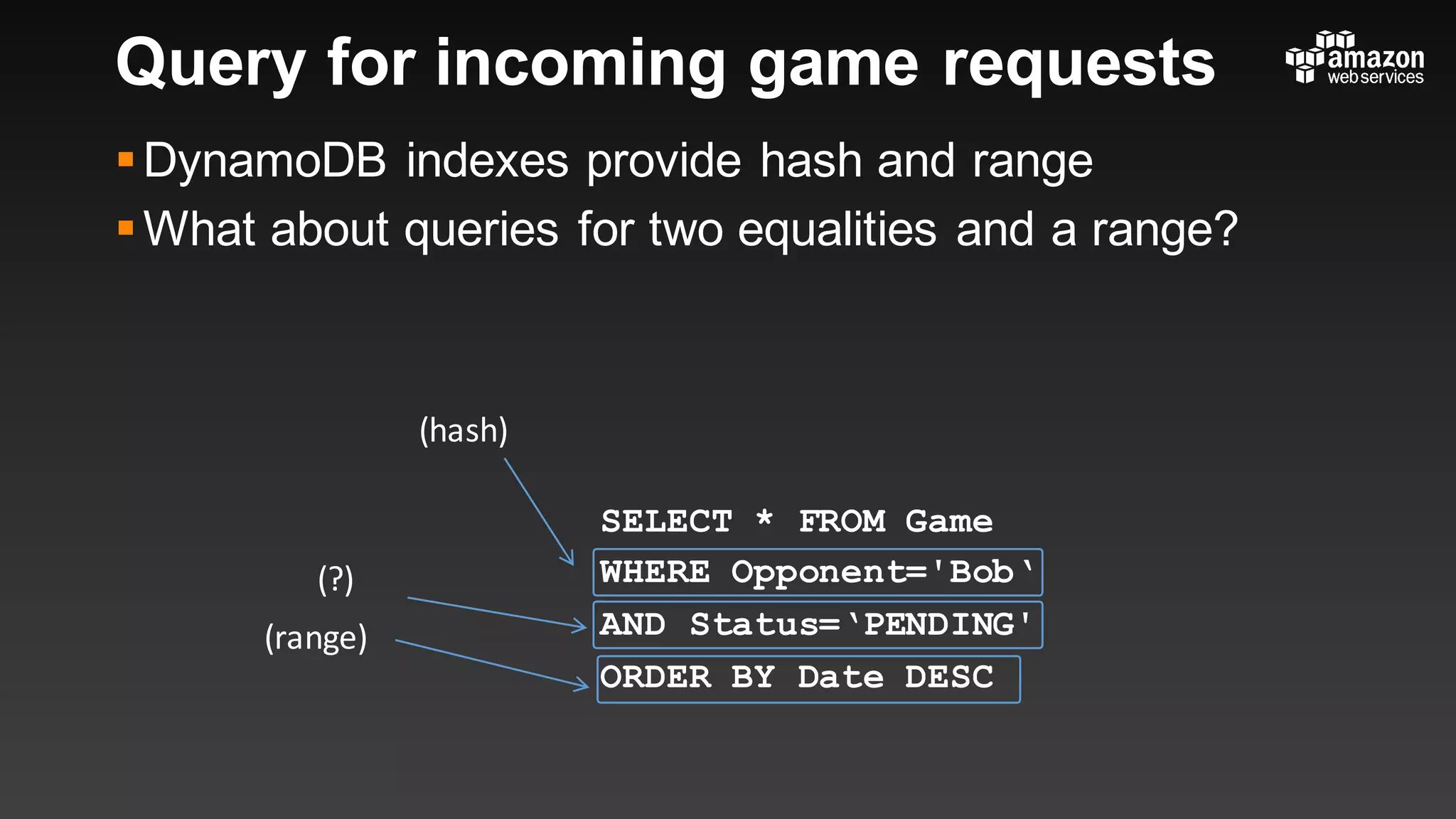

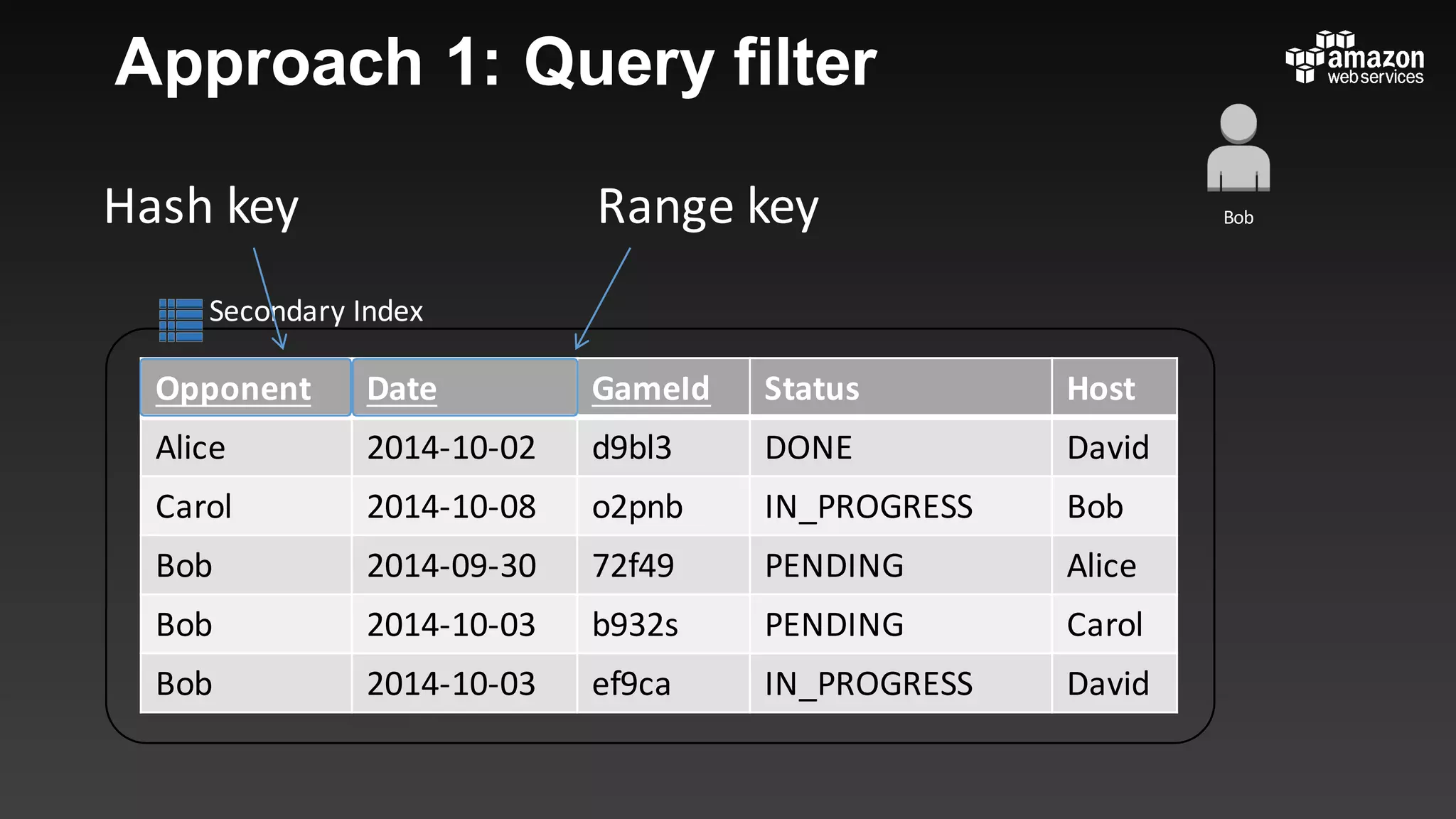

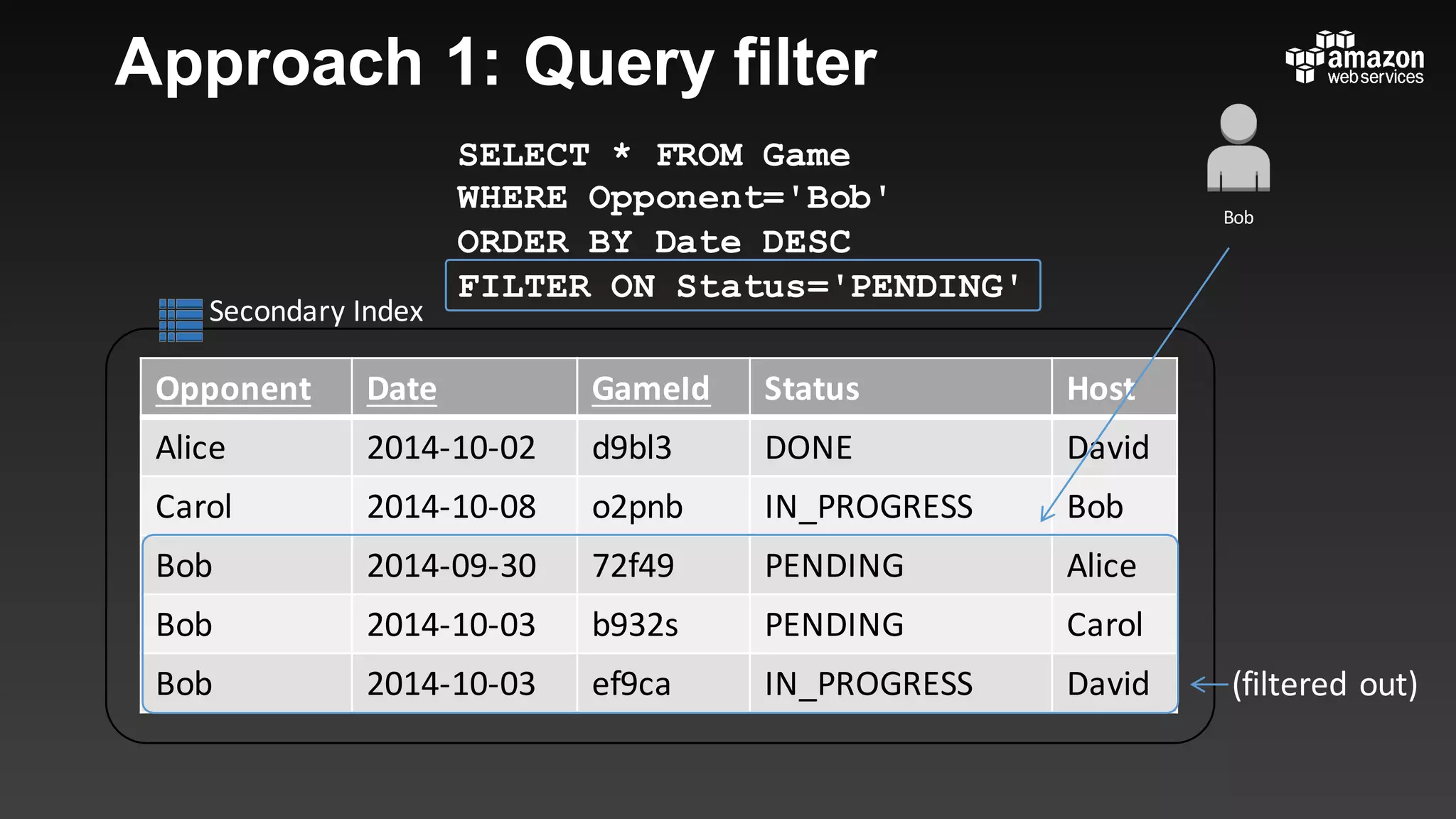

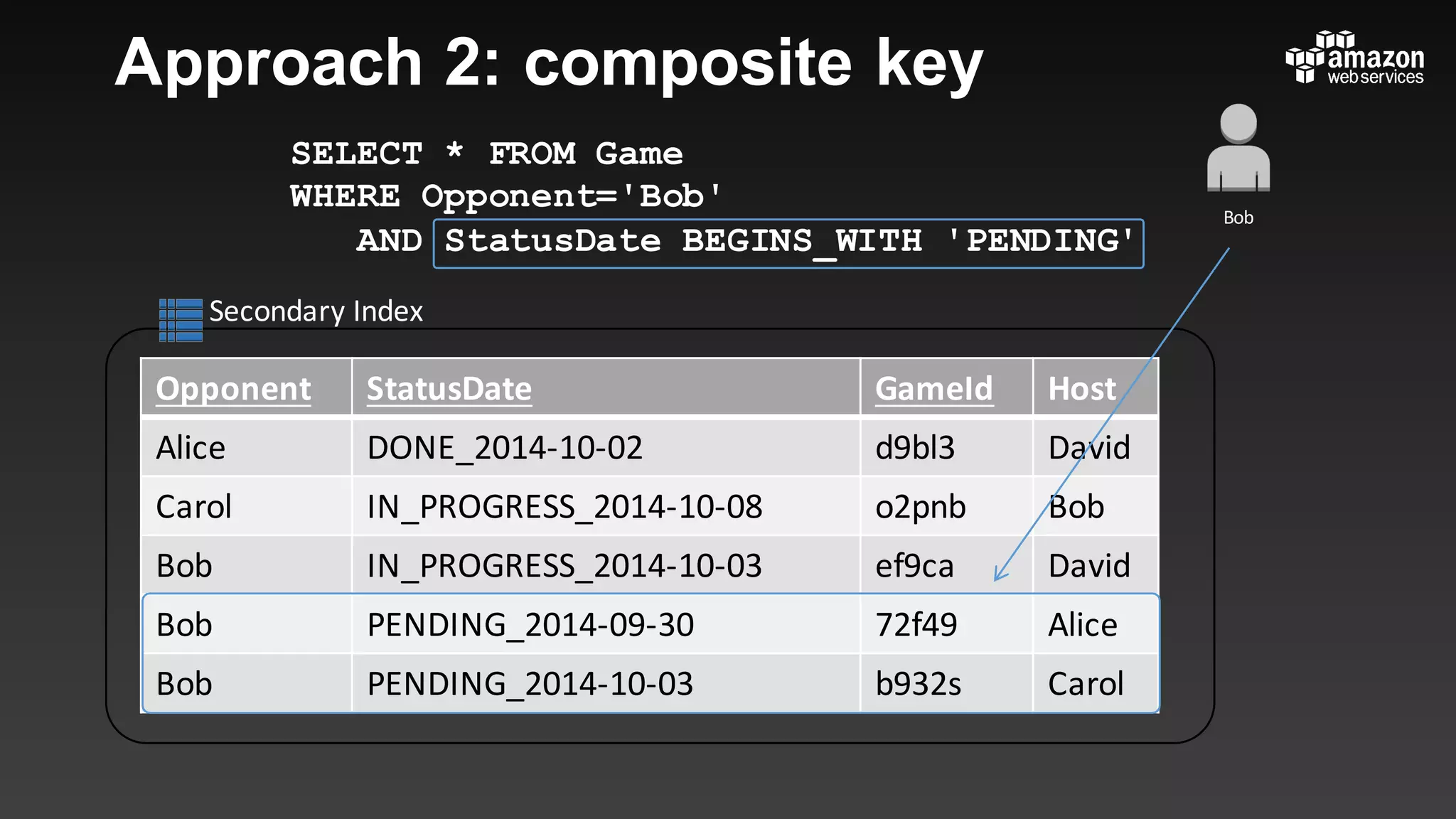

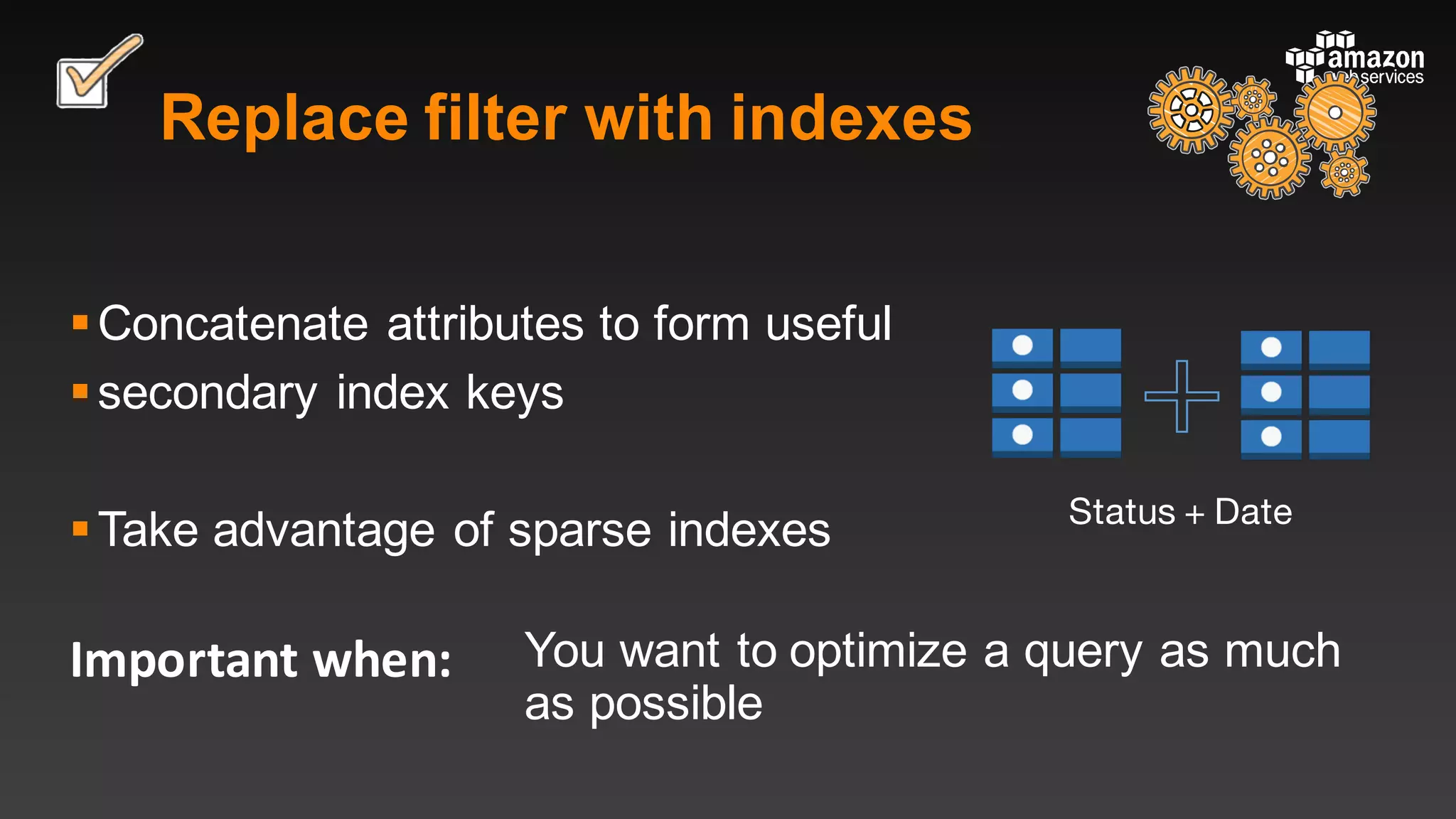

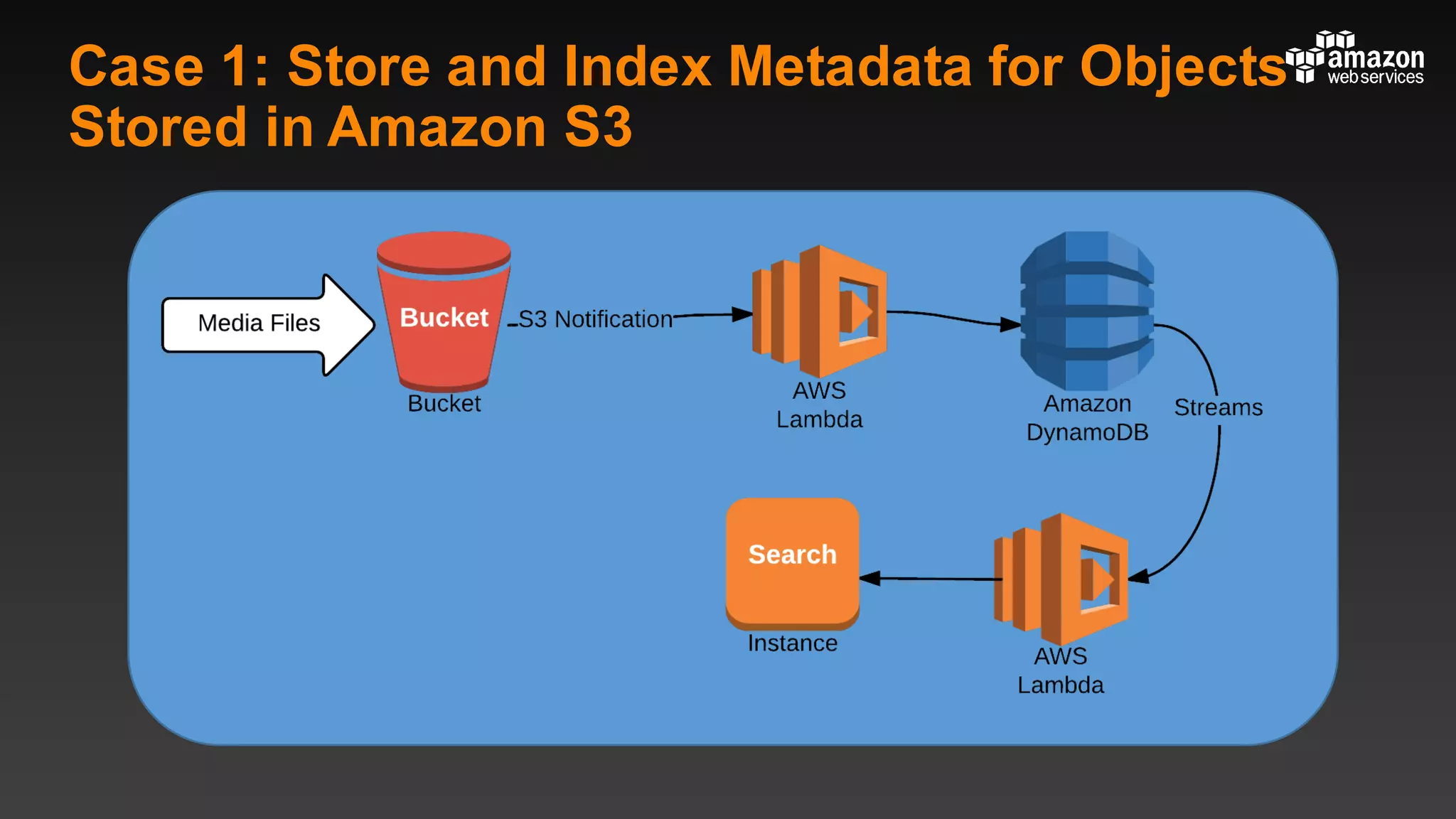

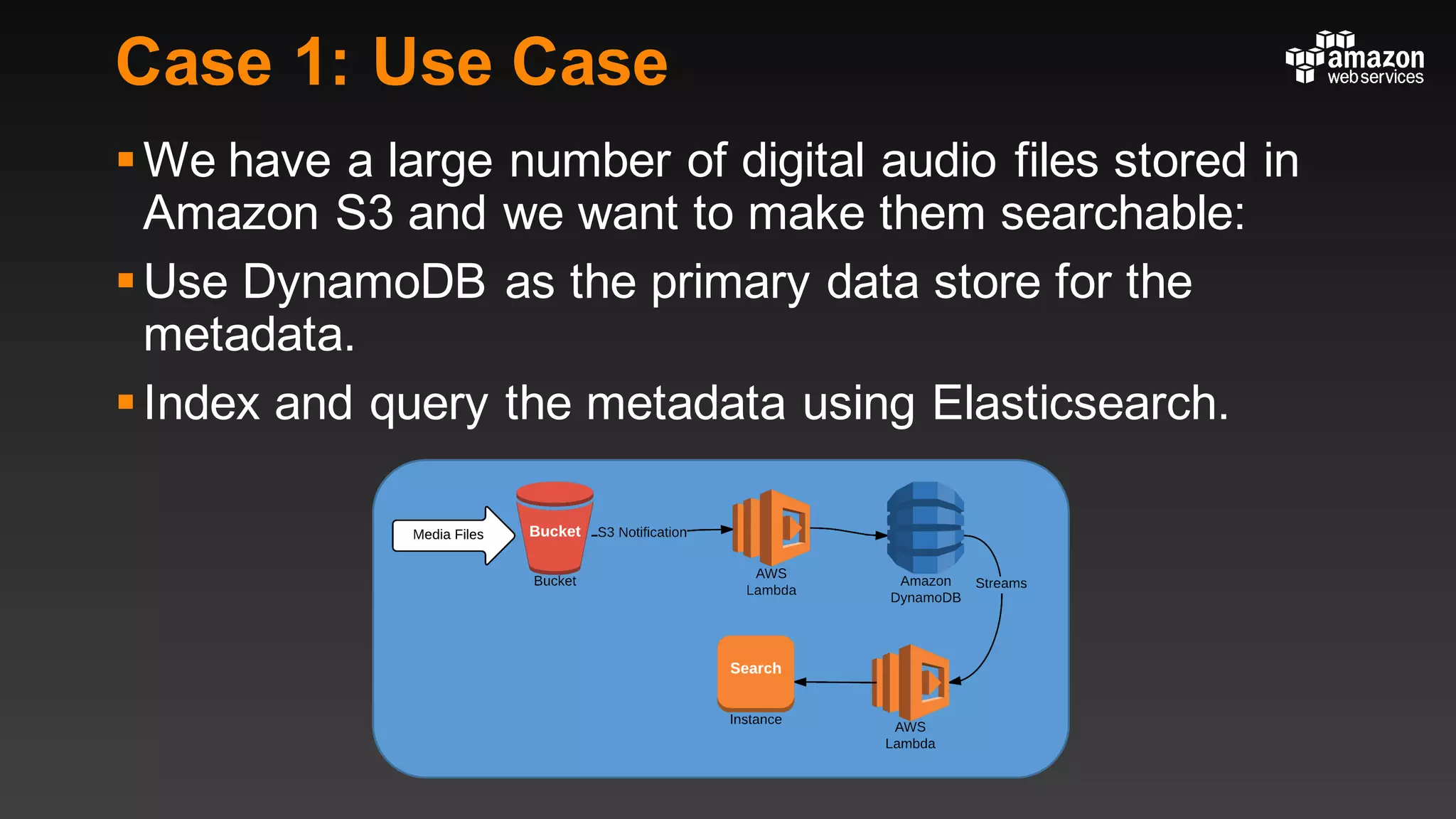

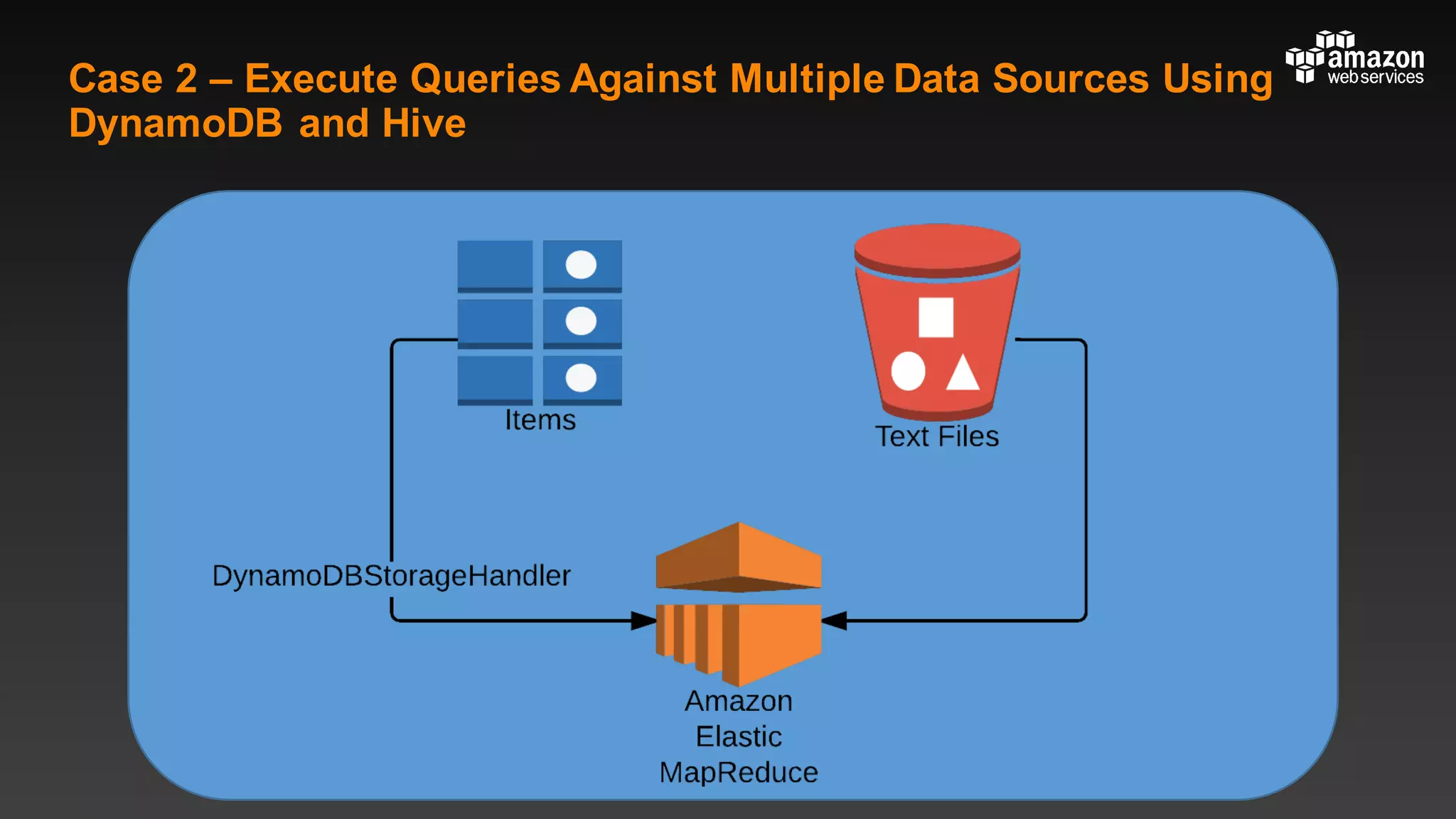

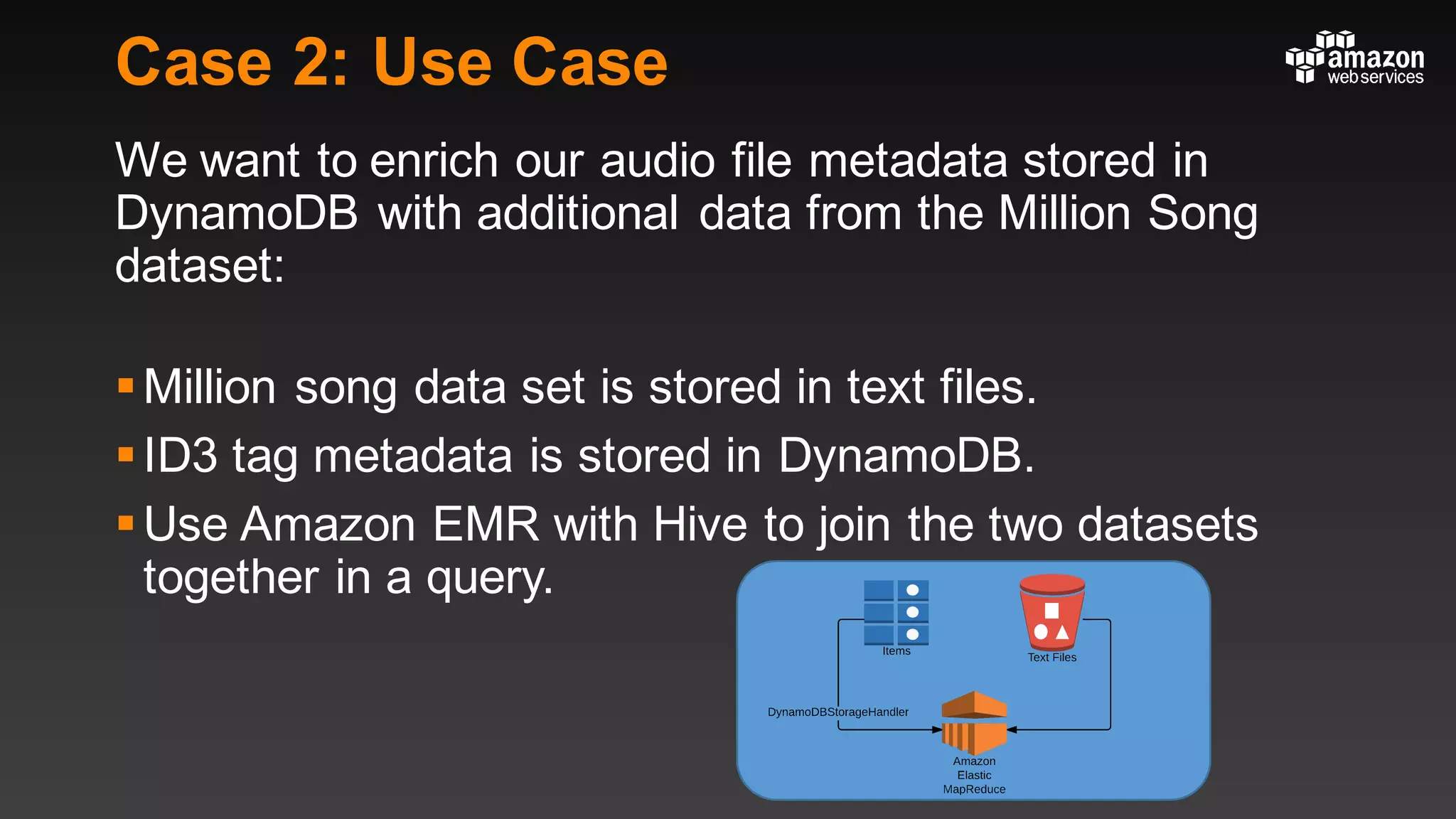

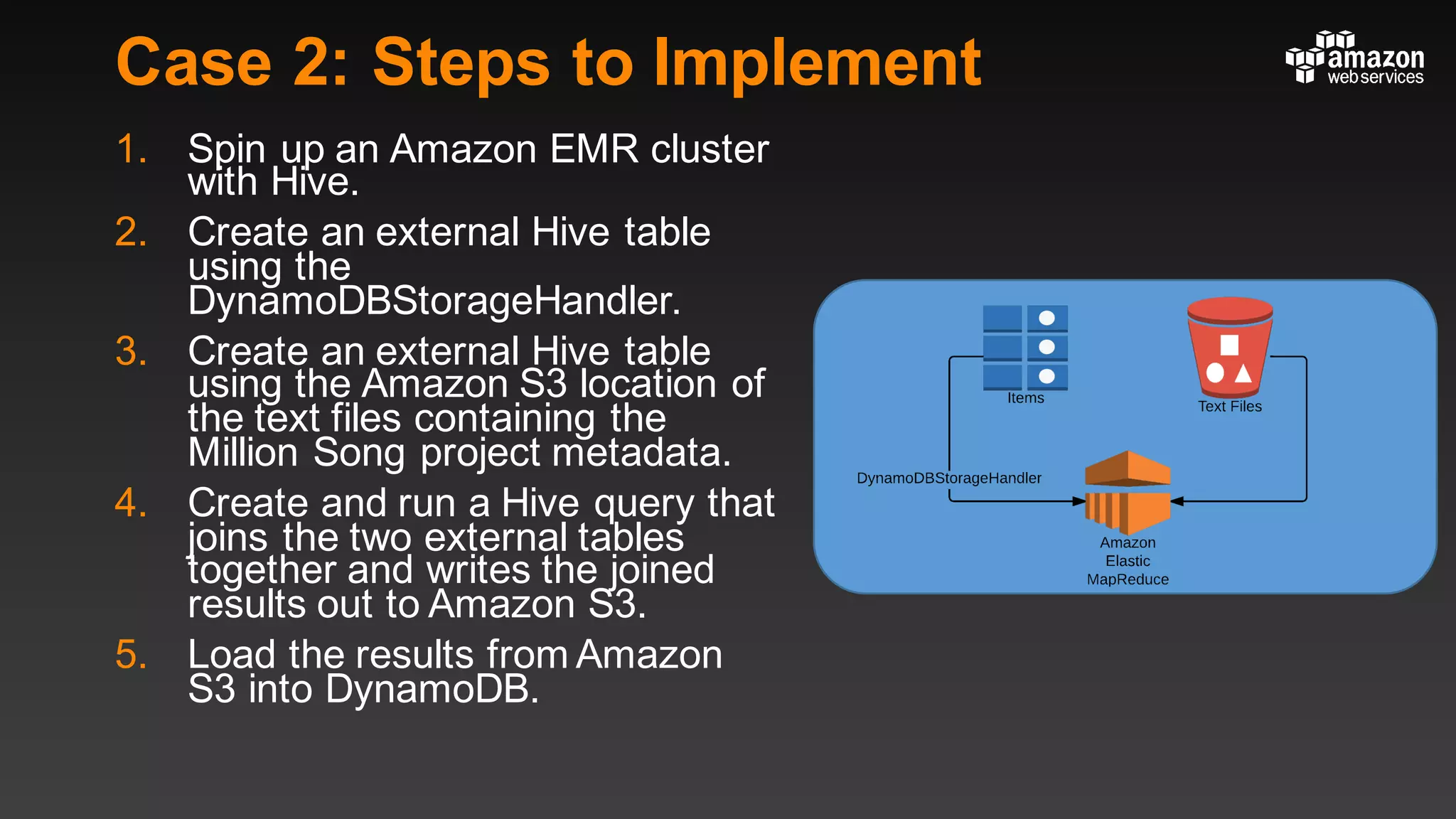

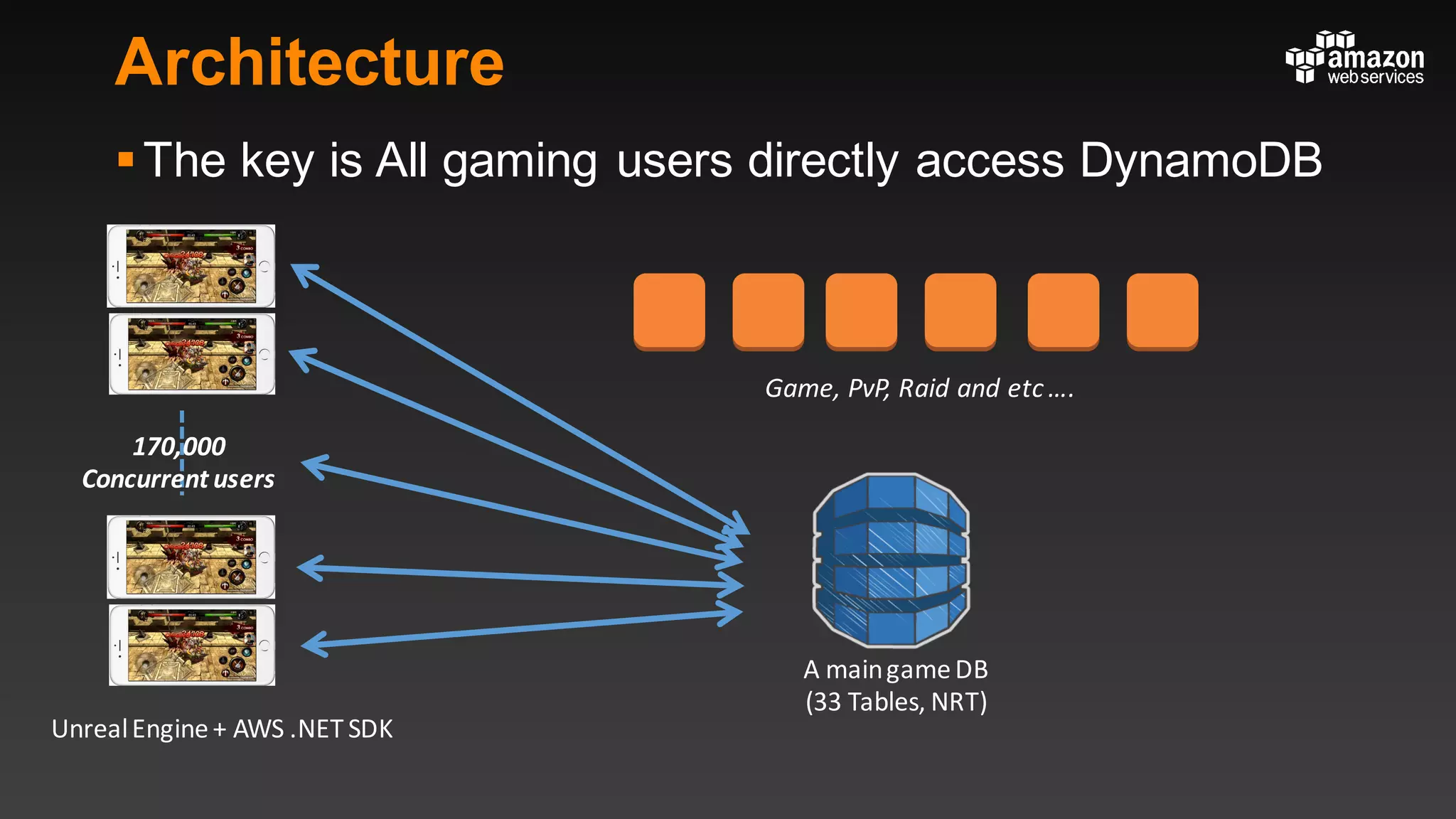

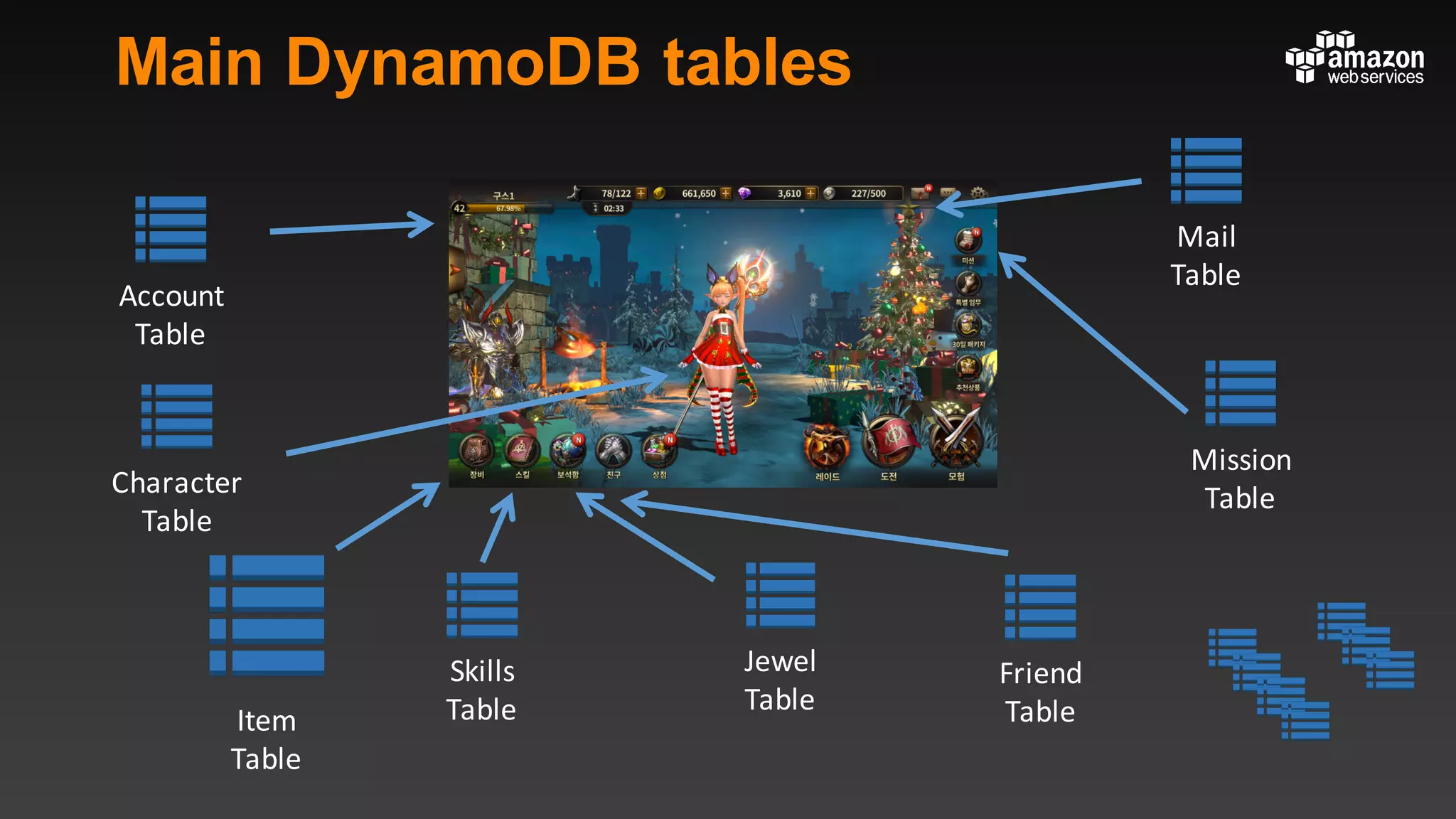

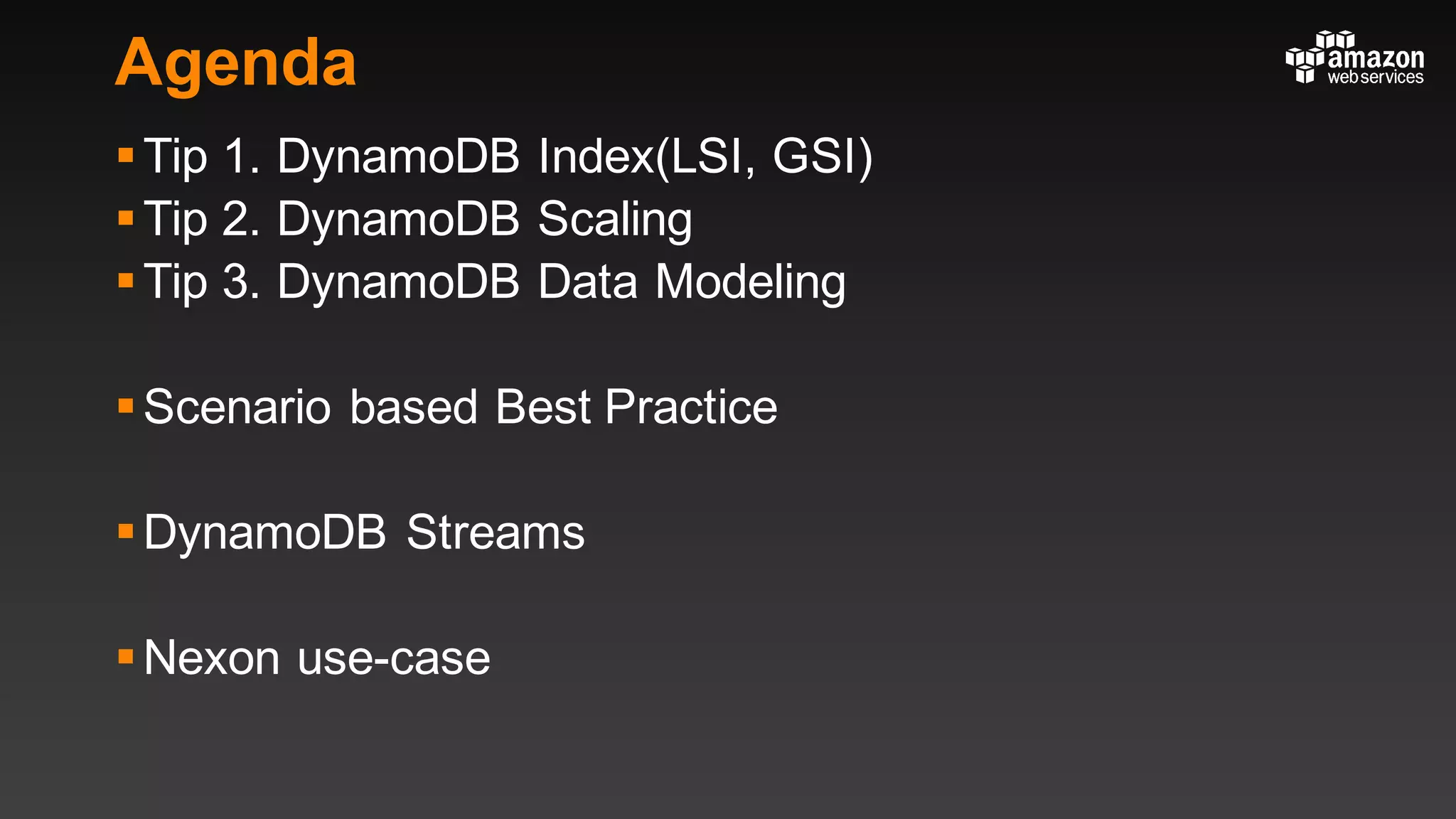

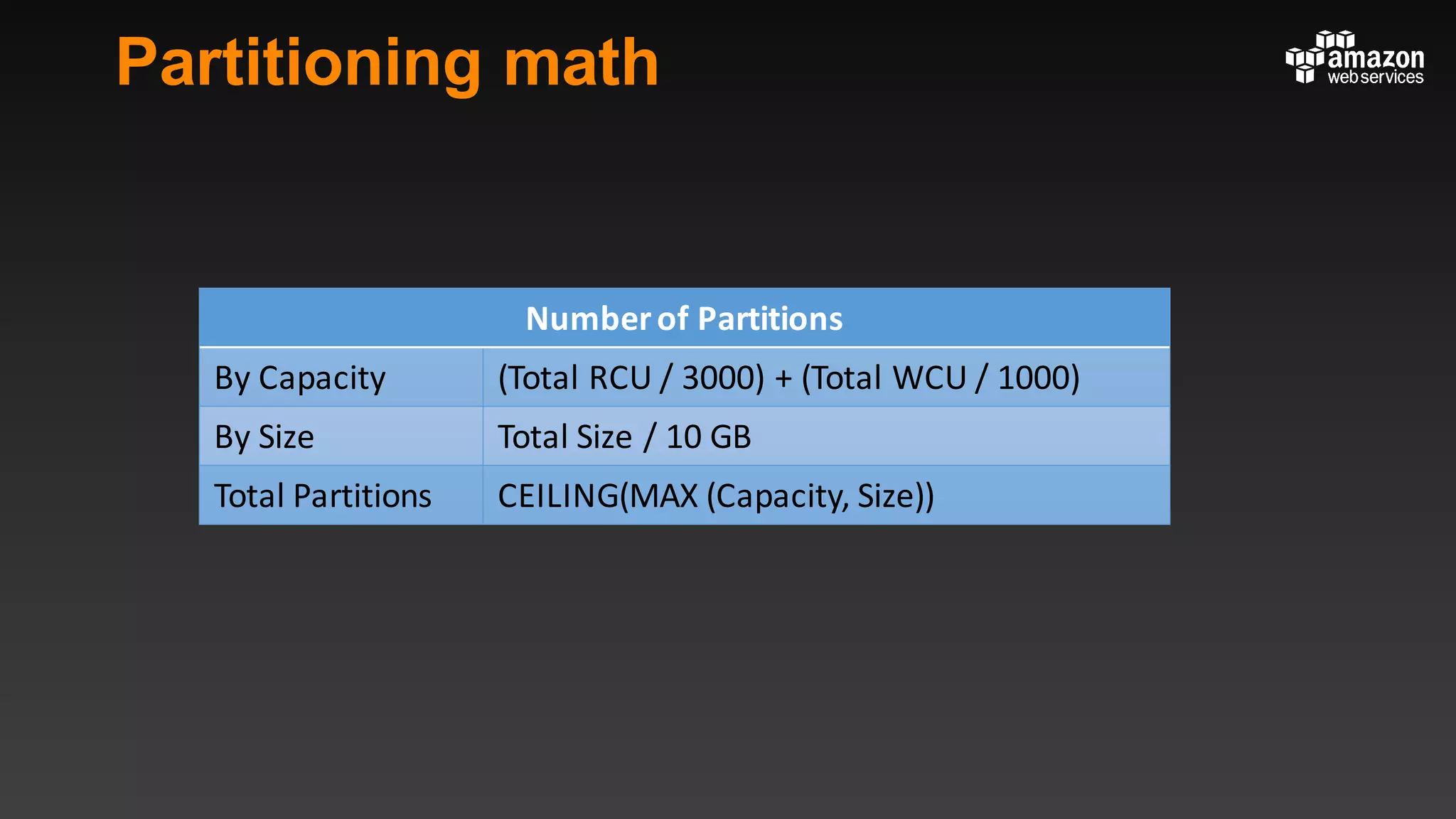

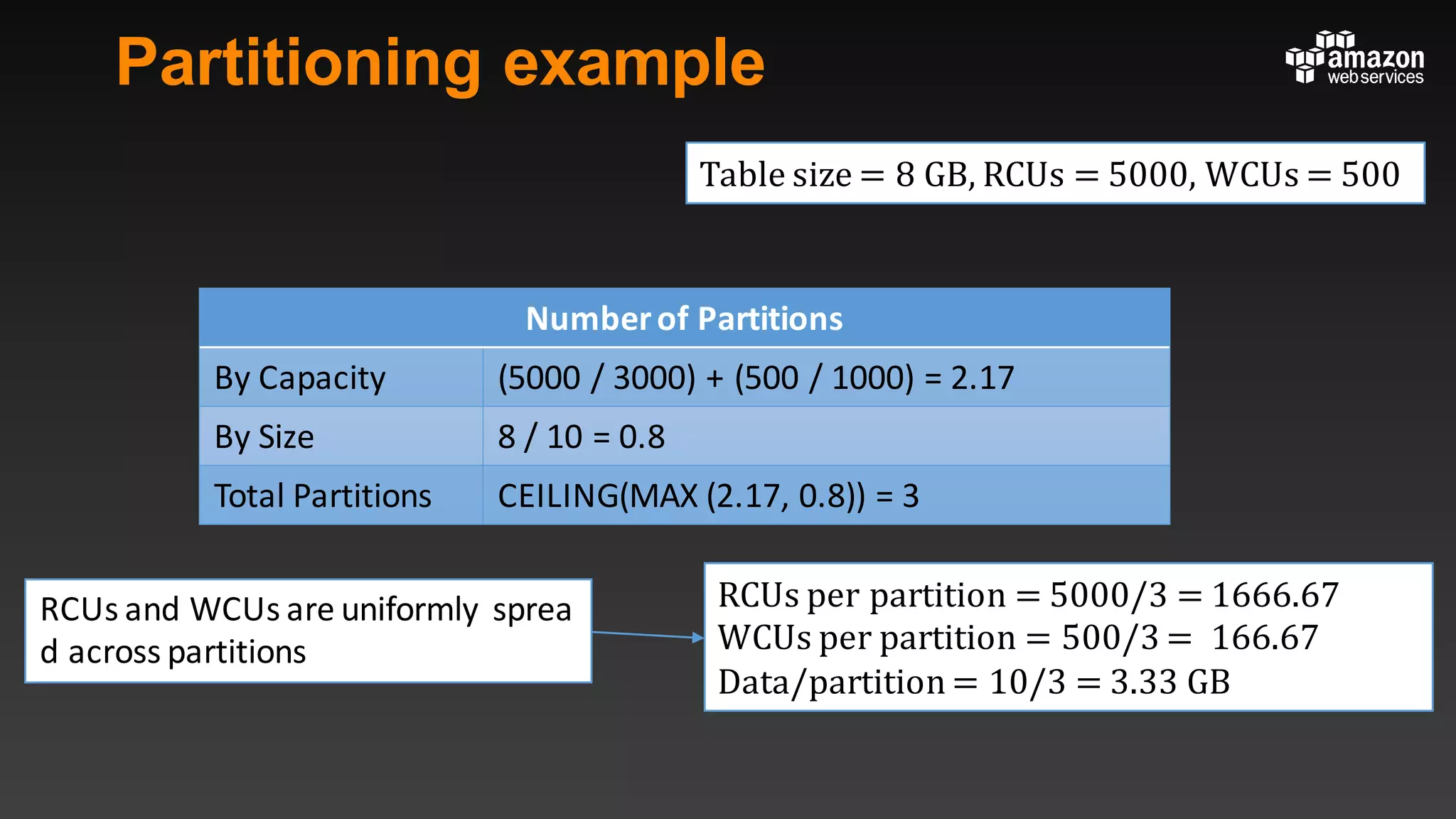

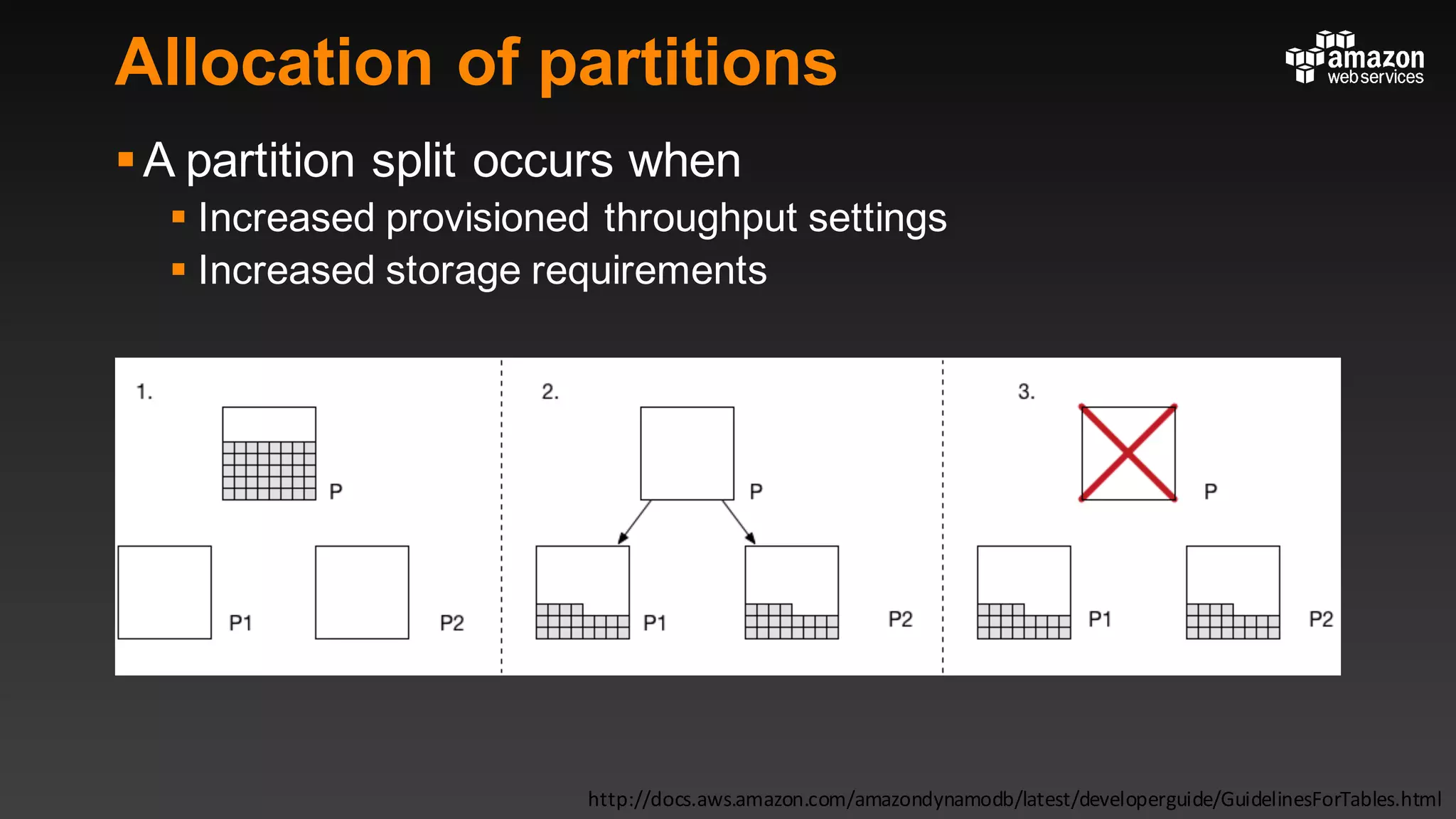

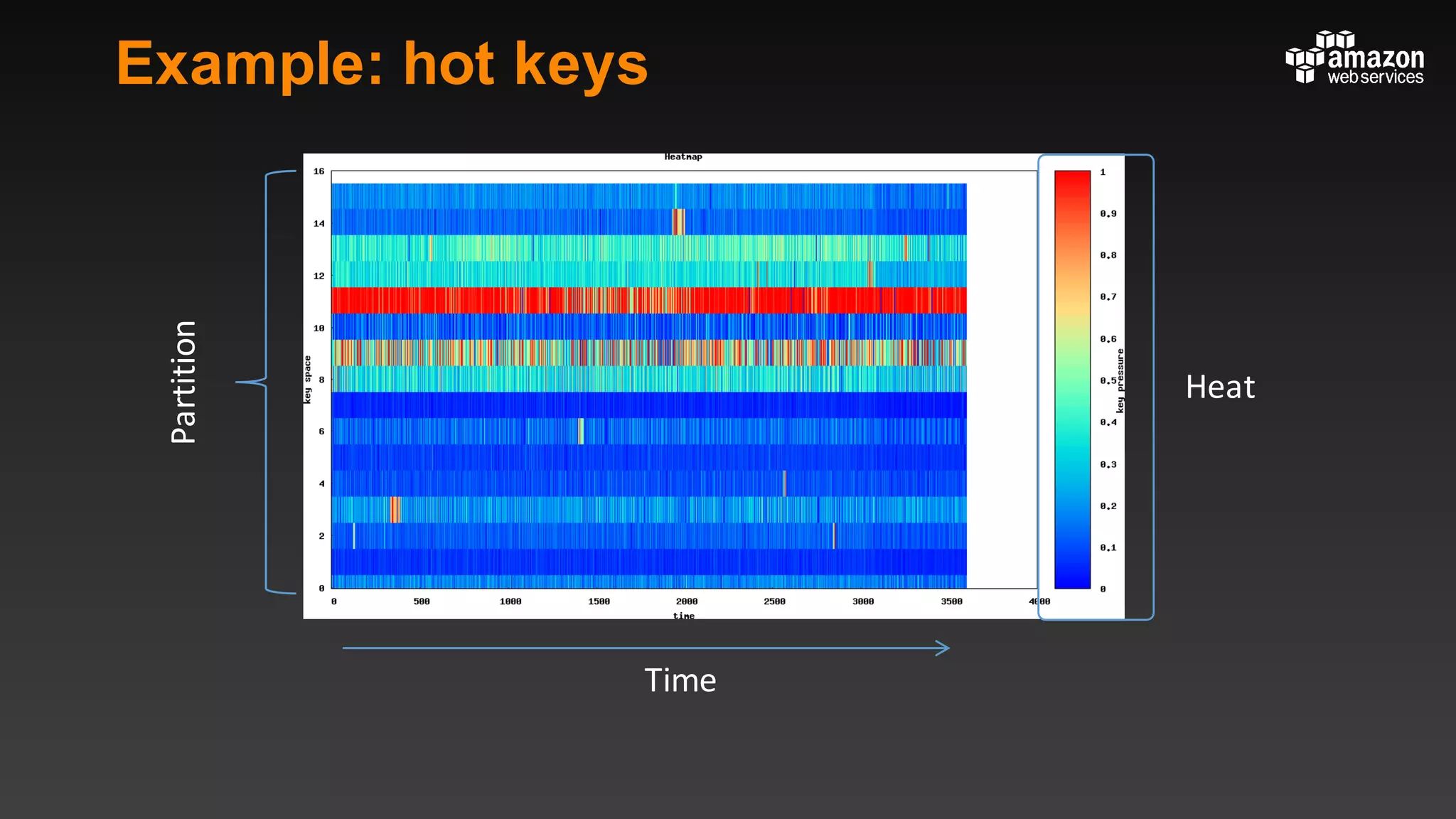

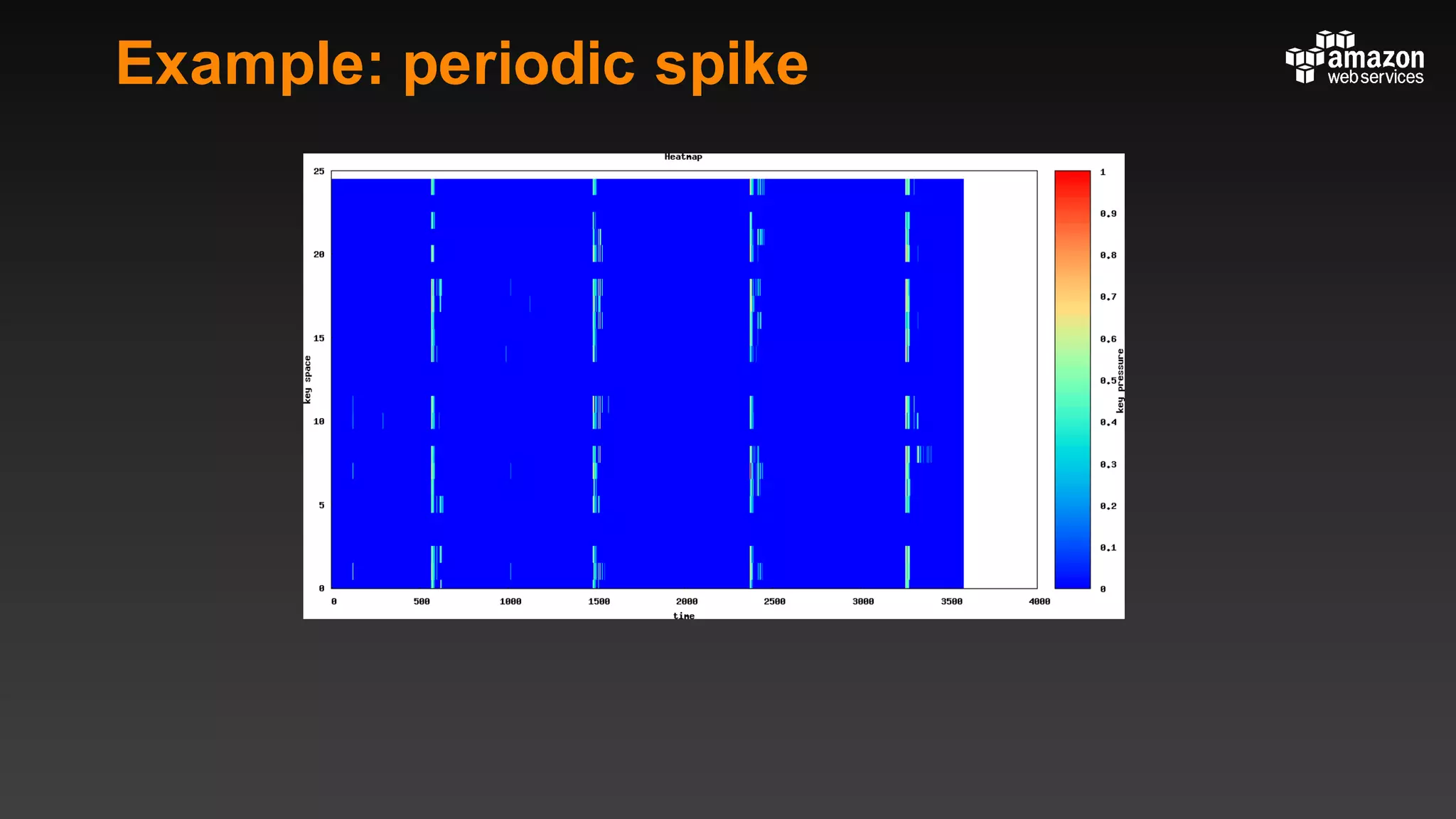

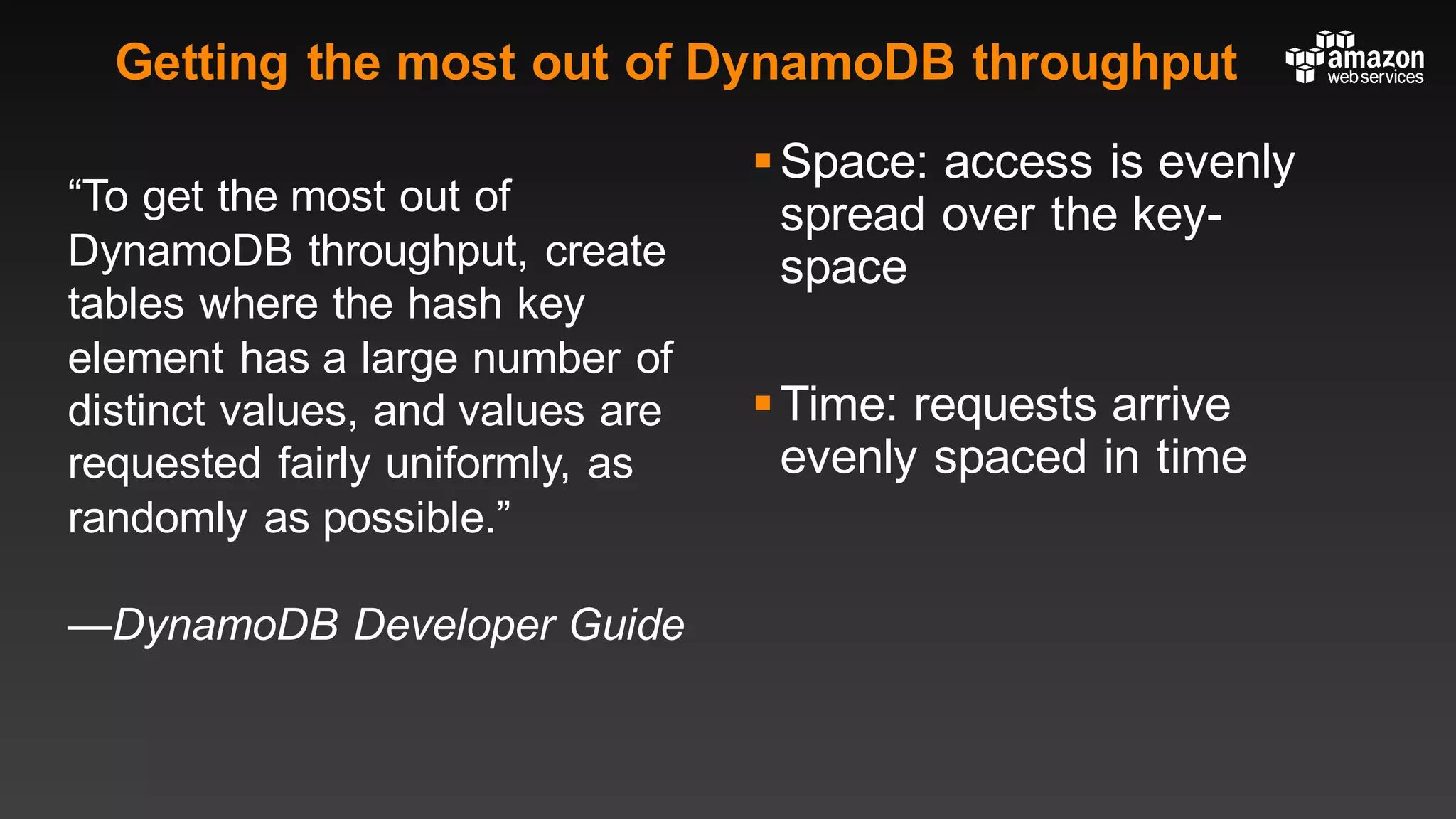

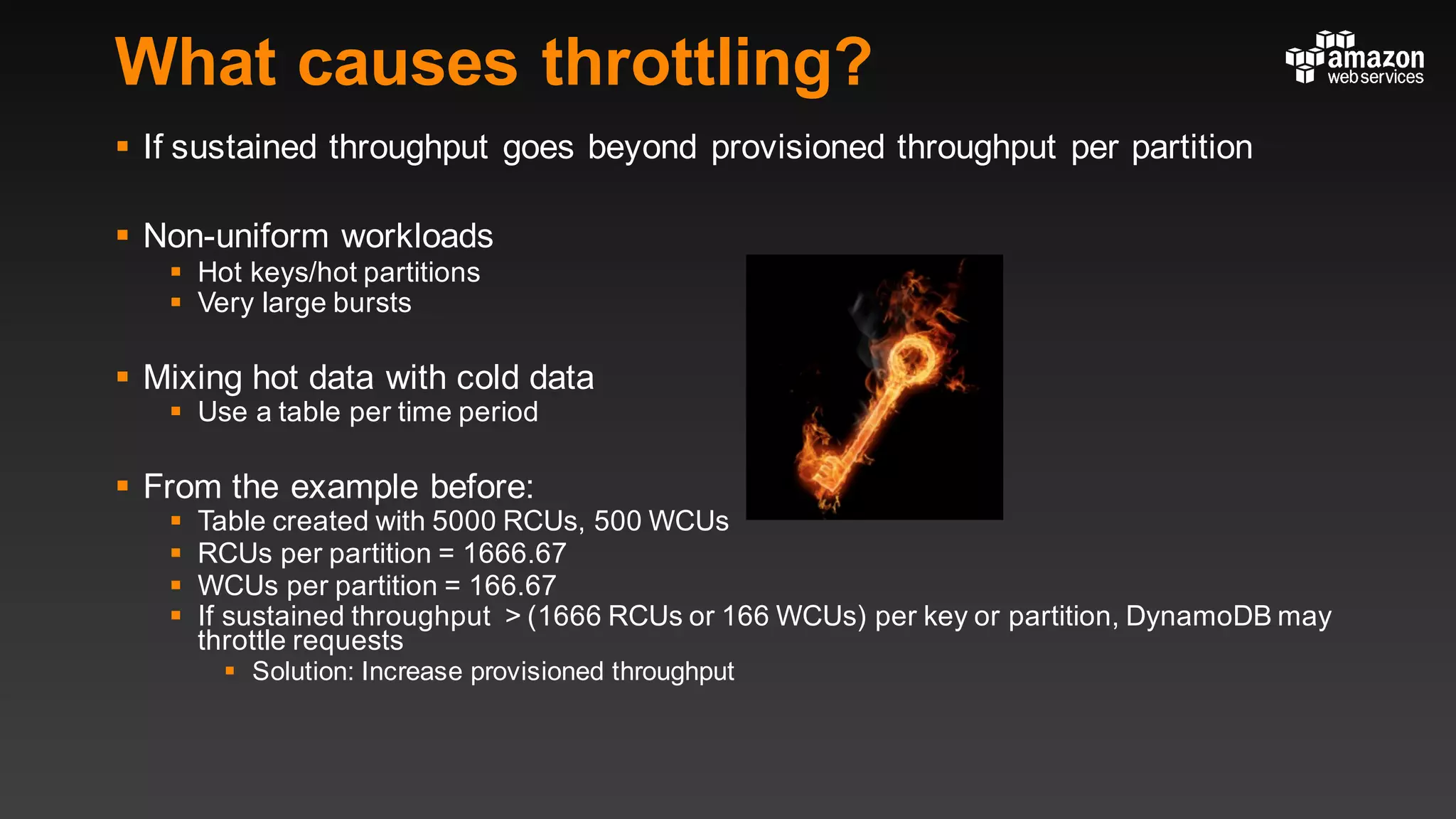

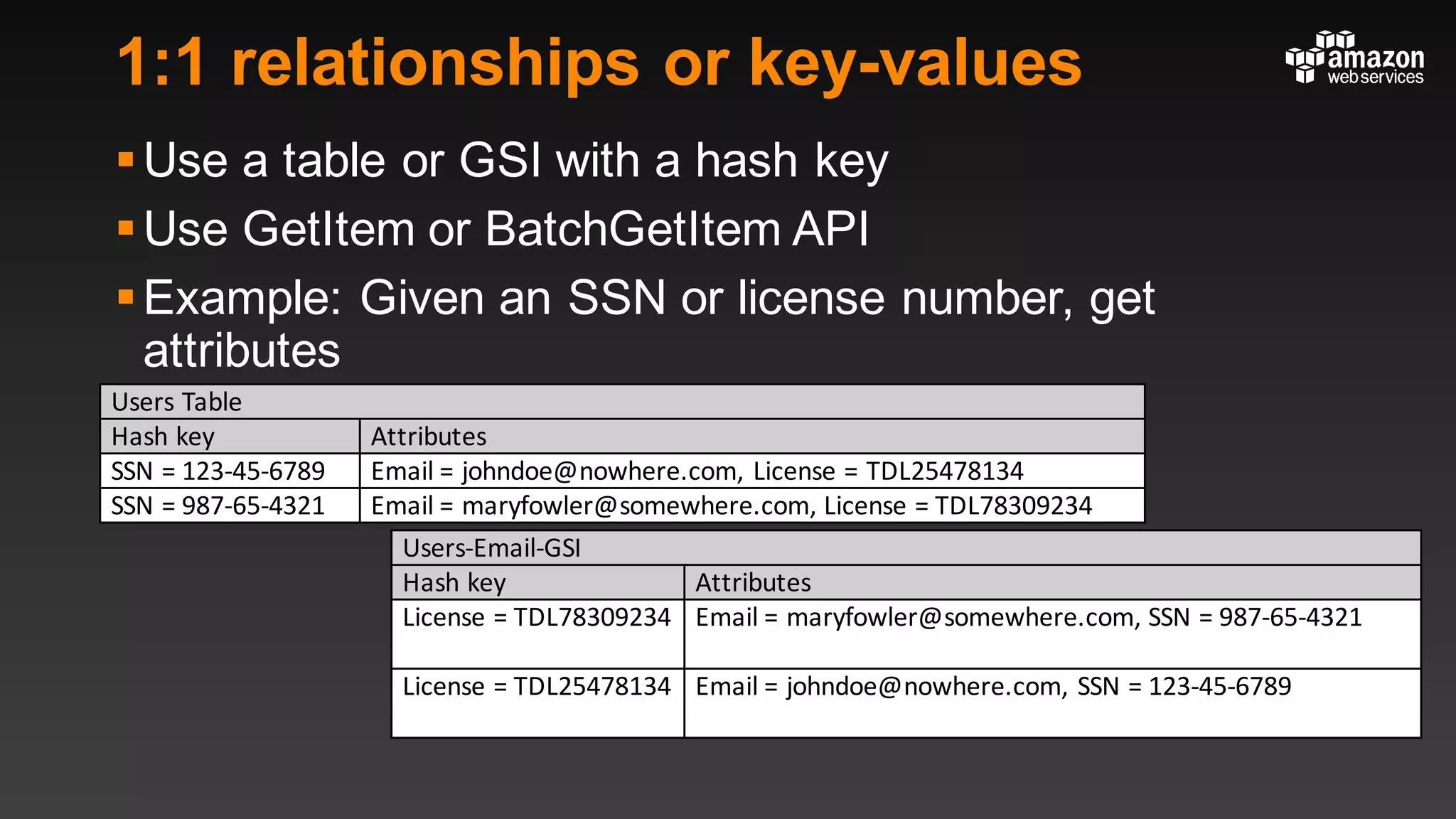

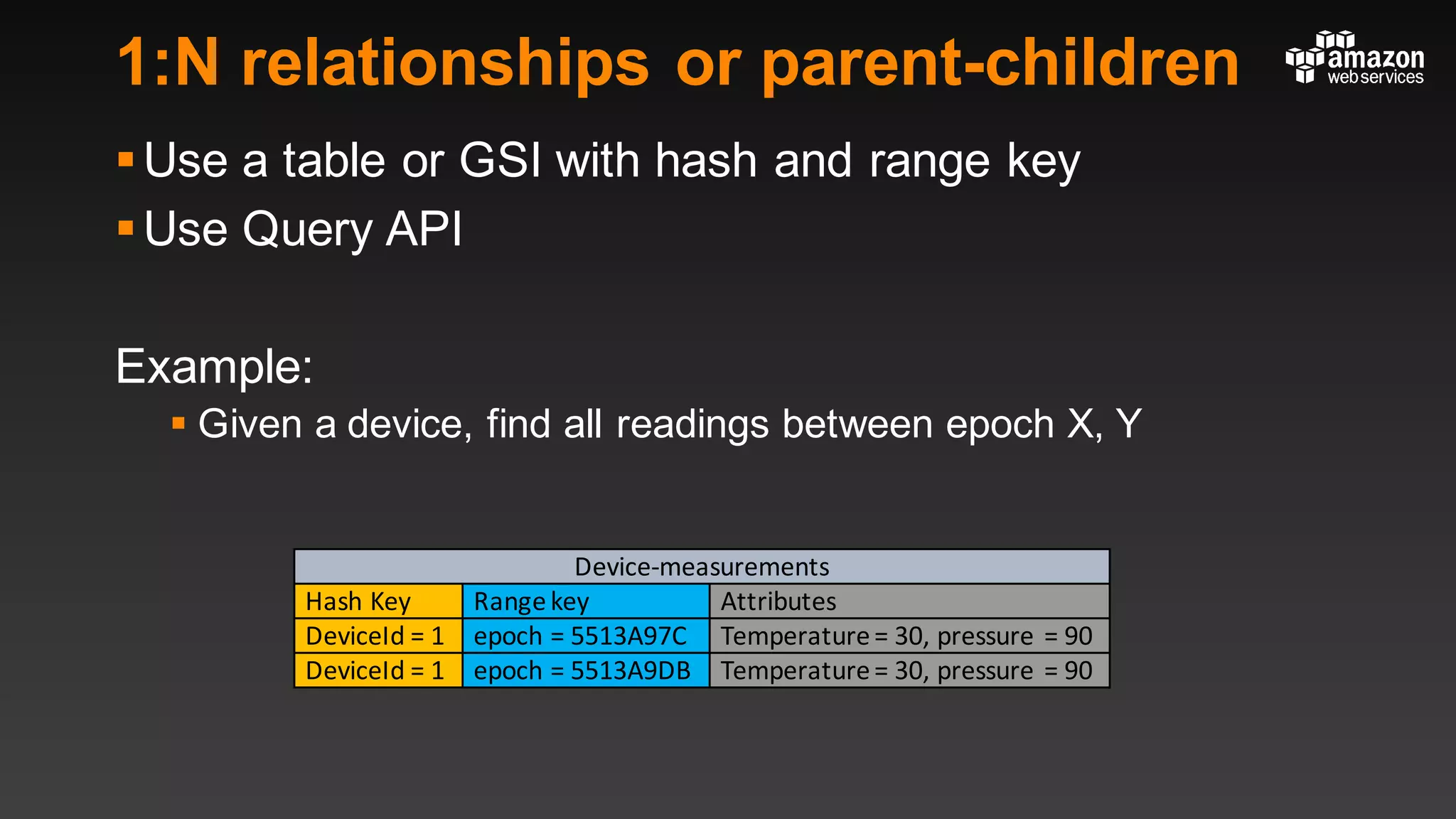

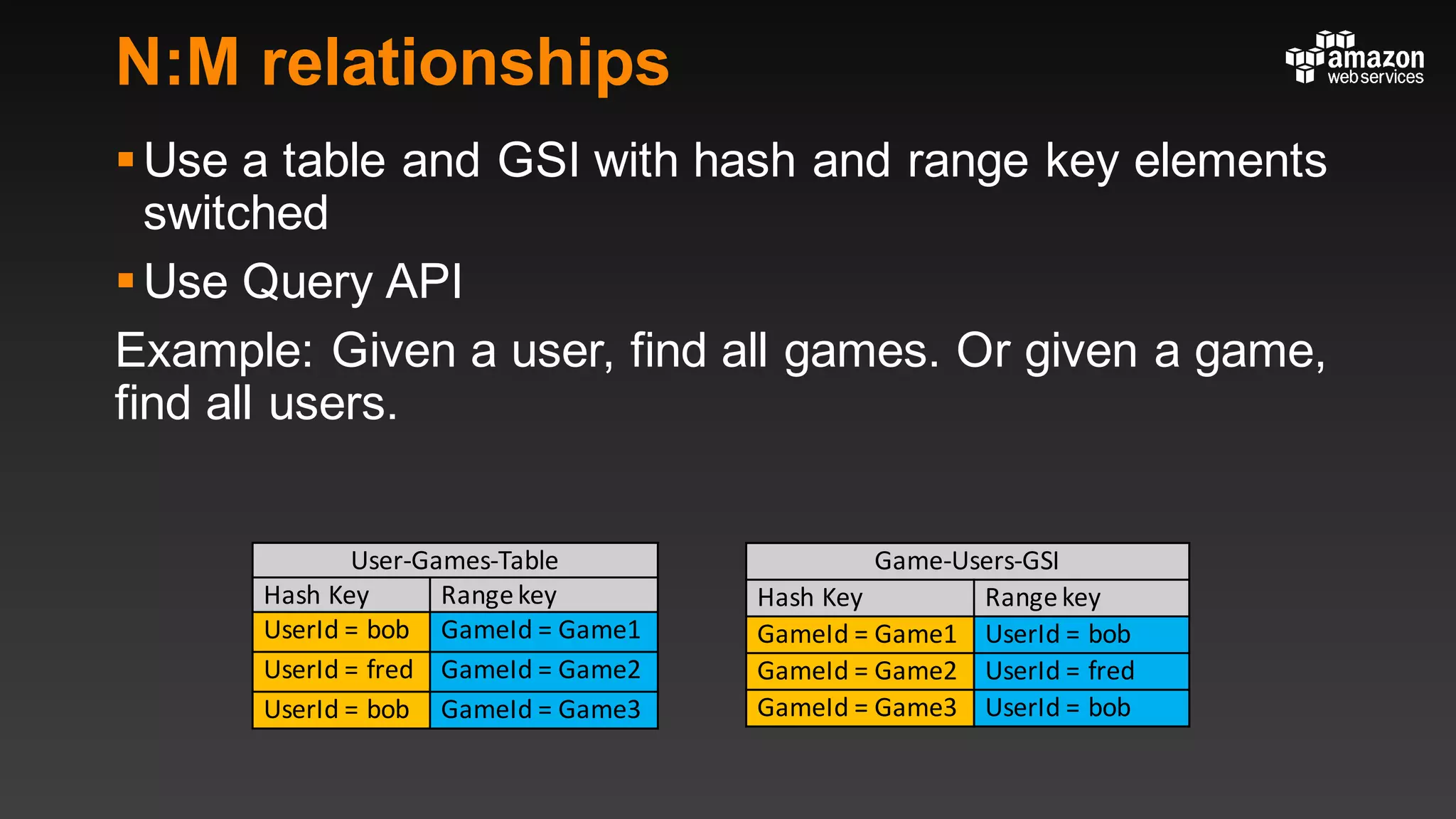

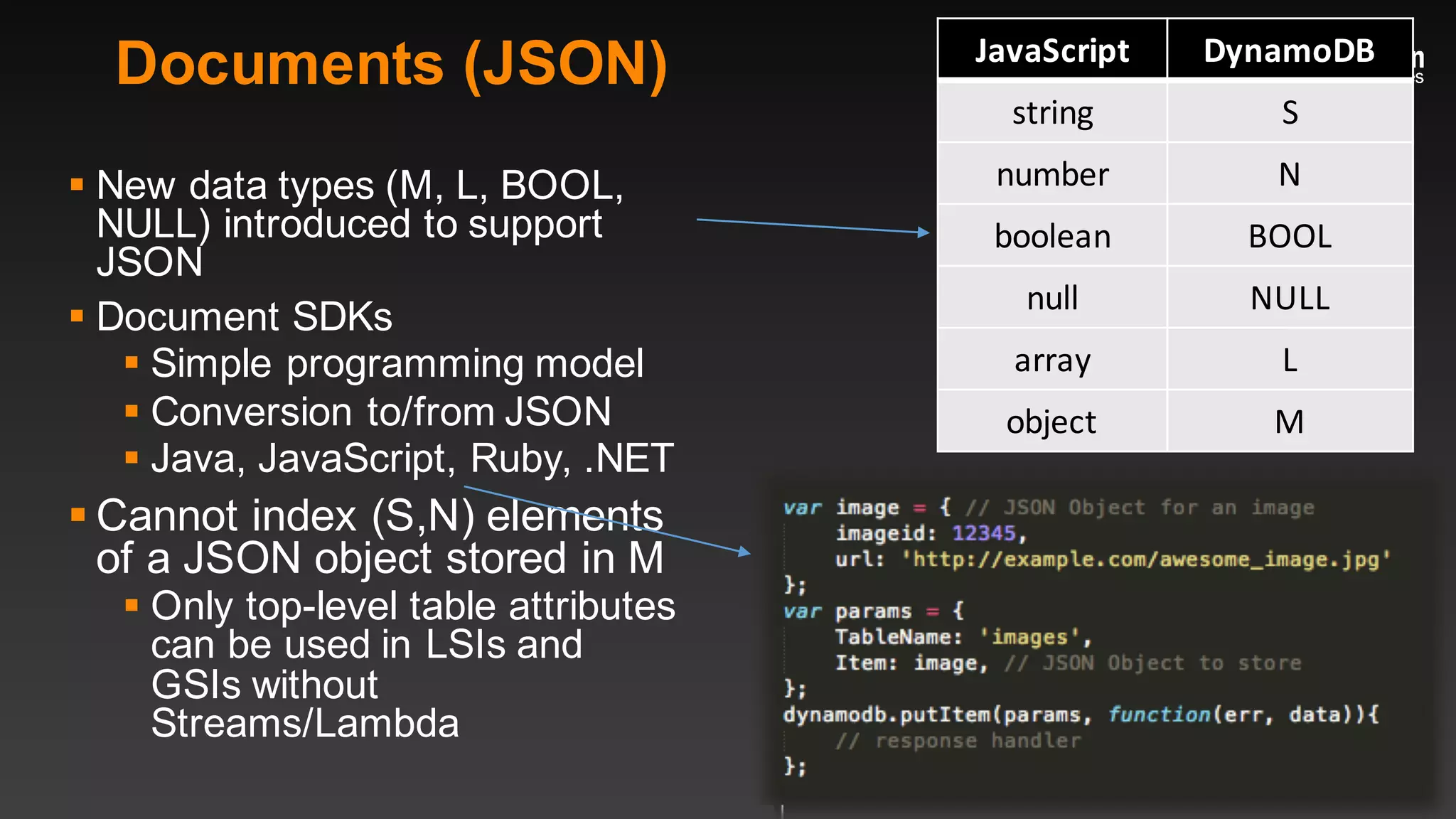

This document summarizes tips and best practices for using DynamoDB. It discusses using local secondary indexes (LSIs) and global secondary indexes (GSIs) to query data, and covers scaling DynamoDB tables through partitioning and provisioned throughput. It presents common data modeling patterns for one-to-one, one-to-many, and many-to-many relationships, along with best practices for time series data, caching frequently accessed items, and optimizing queries. Examples of using DynamoDB for game analytics and metadata storage in S3 are also included.

![Rich expressions

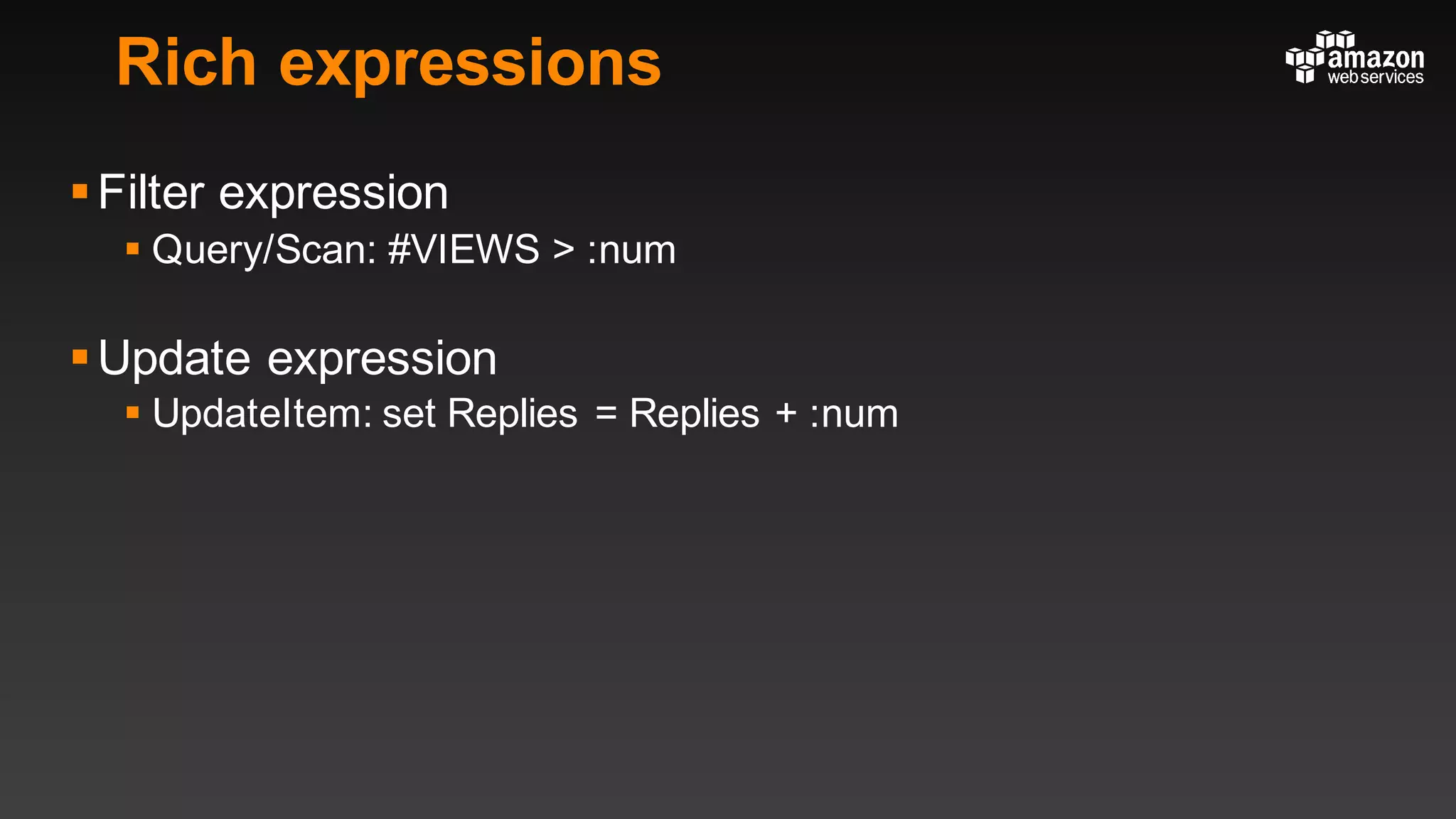

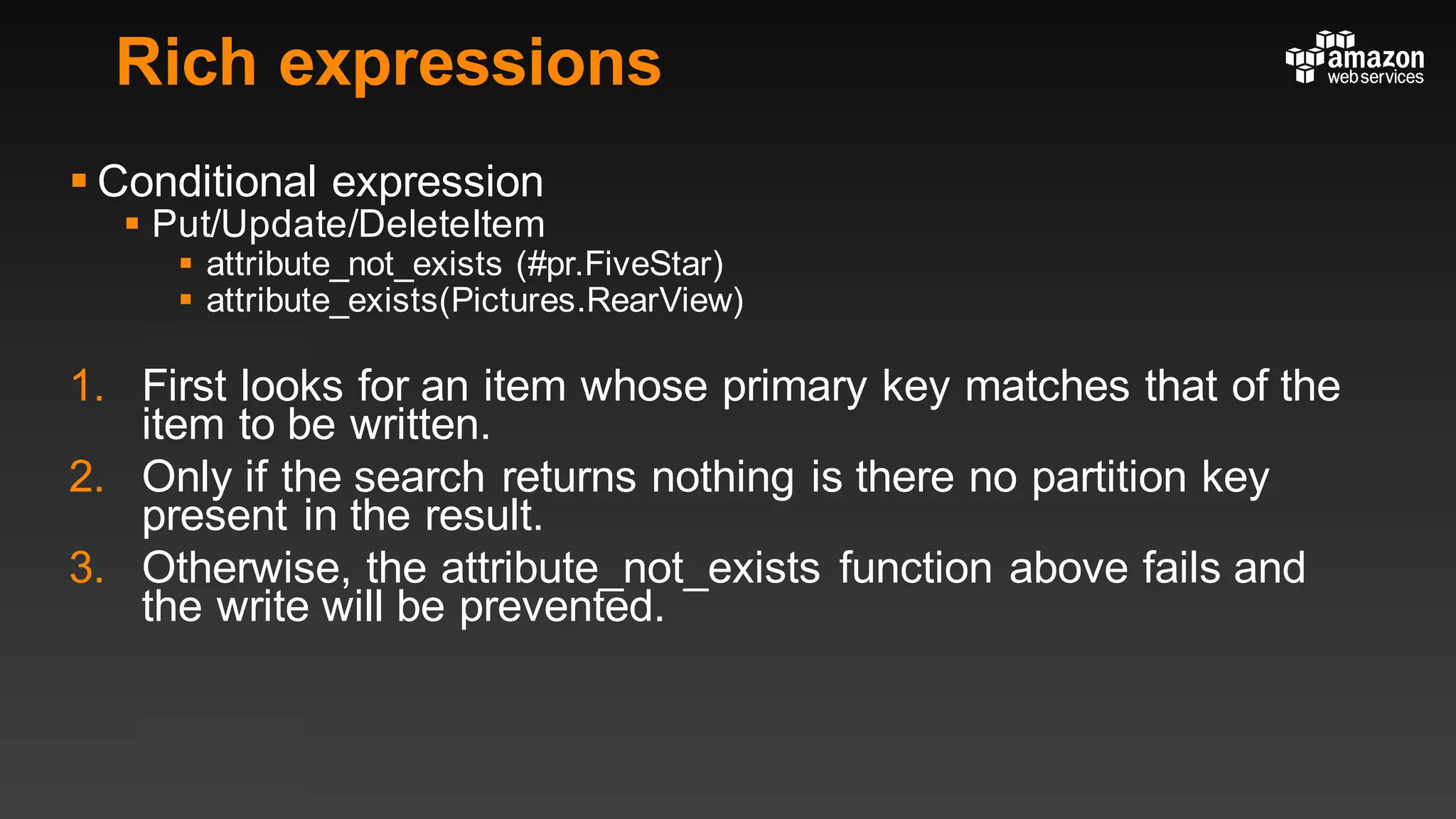

§Projection expression to get just some of the attributes

§ Query/Get/Scan: ProductReviews.FiveStar[0]](https://image.slidesharecdn.com/amazondynamodbfordevelopers-160502081353/75/Amazon-Dynamo-DB-for-Developers-AWS-DB-Day-23-2048.jpg)

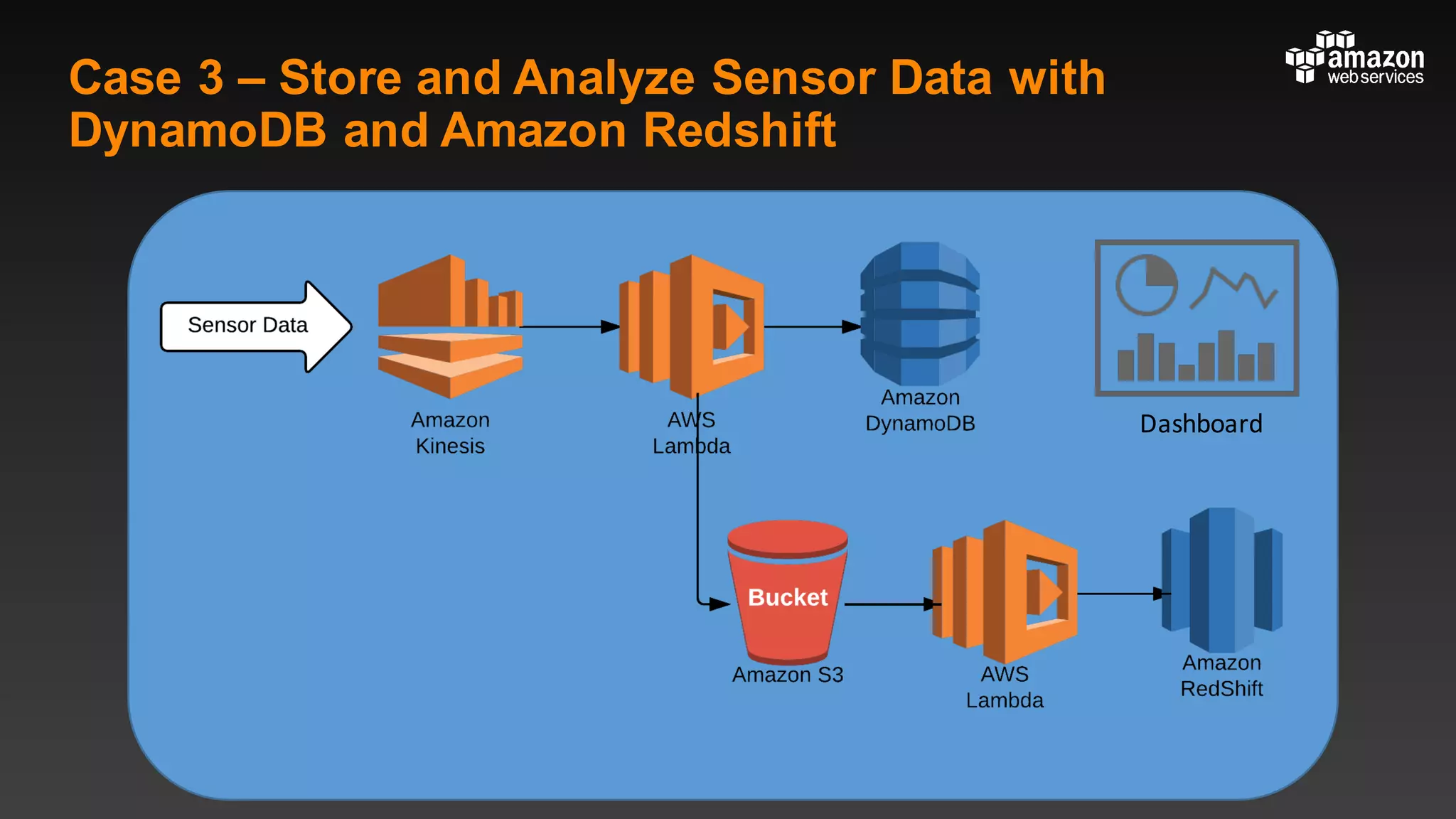

![Rich expressions](https://image.slidesharecdn.com/amazondynamodbfordevelopers-160502081353/75/Amazon-Dynamo-DB-for-Developers-AWS-DB-Day-24-2048.jpg)

Rich expressions

- Projection expression to get just some of the attributes
- Query/Get/Scan: `ProductReviews.FiveStar[0]`

      ProductReviews: {
        FiveStar: [
          "Excellent! Can't recommend it highly enough! Buy it!",
          "Do yourself a favor and buy this." ],
        OneStar: [
          "Terrible product! Do not buy this." ] }
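
The document path on this slide can be illustrated with a small sketch. `resolve_path` below is a local stand-in, not how DynamoDB evaluates projections; it only shows which value `ProductReviews.FiveStar[0]` points at in the slide's sample data. `get_first_five_star` is a hypothetical helper that assumes `table` is a boto3 DynamoDB `Table` resource and that the partition key is named `Id`; neither the key name nor the helper comes from the slides.

```python
import re

# Sample item shaped like the slide's example.
ITEM = {
    "ProductReviews": {
        "FiveStar": [
            "Excellent! Can't recommend it highly enough! Buy it!",
            "Do yourself a favor and buy this.",
        ],
        "OneStar": ["Terrible product! Do not buy this."],
    }
}

# Matches either an attribute name or a list index such as [0].
_TOKEN = re.compile(r"([^.\[\]]+)|\[(\d+)\]")


def resolve_path(item, path):
    """Walk a document path through nested dicts and lists (local illustration only)."""
    current = item
    for name, index in _TOKEN.findall(path):
        current = current[name] if name else current[int(index)]
    return current


def get_first_five_star(table, product_id):
    """Hypothetical helper: ask the service for only the first five-star review.

    Assumes `table` is a boto3 DynamoDB Table resource and 'Id' is the key name.
    """
    response = table.get_item(
        Key={"Id": product_id},
        ProjectionExpression="ProductReviews.FiveStar[0]",
    )
    return response.get("Item")


print(resolve_path(ITEM, "ProductReviews.FiveStar[0]"))
```

Passing the path as a projection means the rest of the item is left out of the response, which is the point of the slide: request just the attributes you need.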