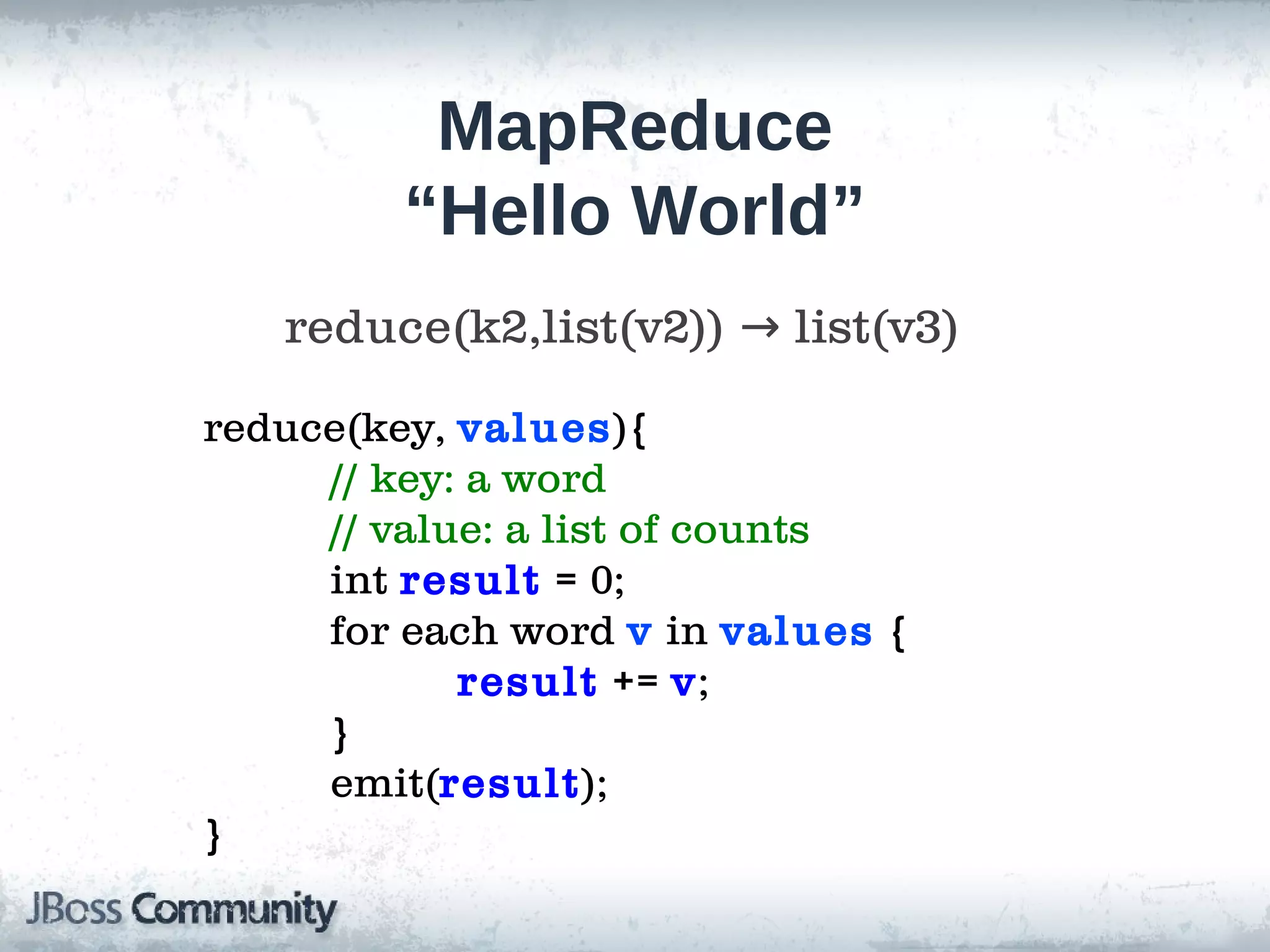

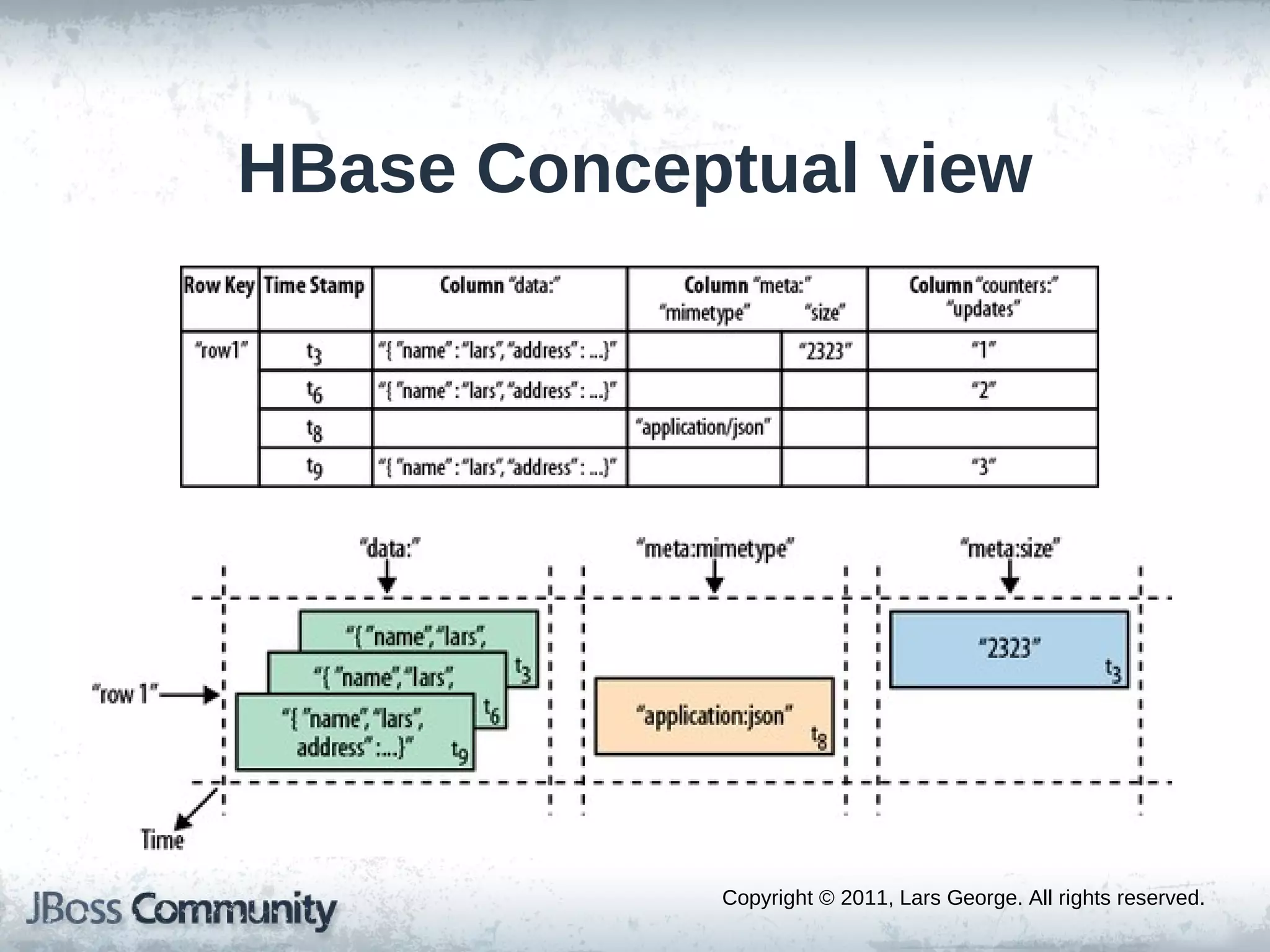

Hadoop is an open source software framework for distributed storage and processing of large datasets across clusters of computers. It implements the MapReduce programming model pioneered by Google and a distributed file system (HDFS). Mahout builds machine learning libraries on top of Hadoop. HBase is a non-relational distributed database modeled after Google's BigTable that provides random access and real-time read/write capabilities. These projects are used by many large companies for large-scale data processing and analytics tasks.

![Hadoop

• Open Source (ASL2) implementation of

Google's MapReduce[1] and Google

DFS (Distributed File System) [2]

(~from 2006 Lucene subproject)

[1] MapReduce: Simplified Data Processing on Large Scale Clusters by

Jeffrey Dean and Sanjay Ghemawat, Google labs, 2004

[2] The Google File System by Sanjay Ghemawat, Howard Gobioff, Shun-Tak

Leung, 2003](https://image.slidesharecdn.com/hadoopmahouthbase-120504080317-phpapp01/75/An-Introduction-to-Apache-Hadoop-Mahout-and-HBase-4-2048.jpg)