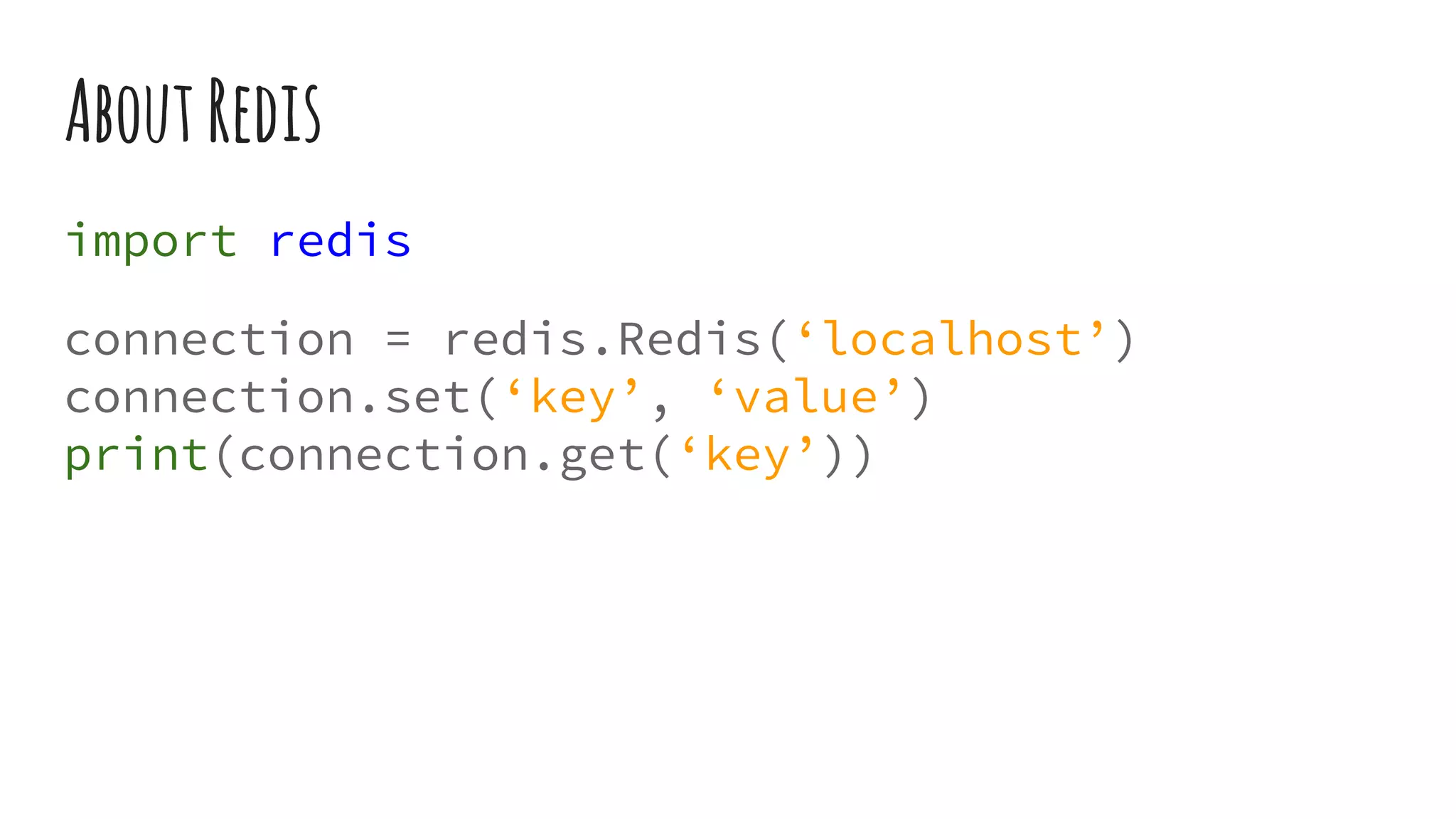

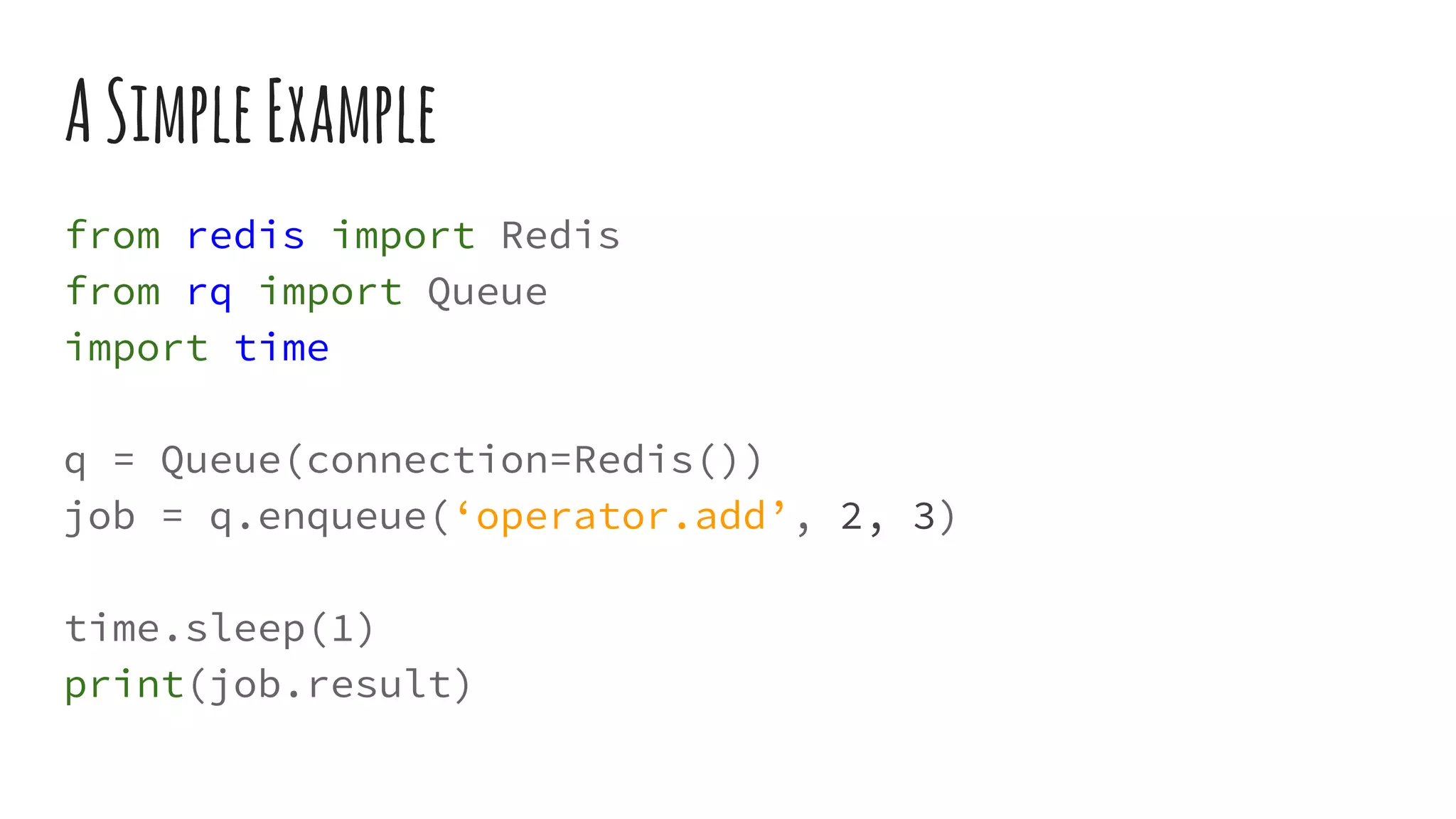

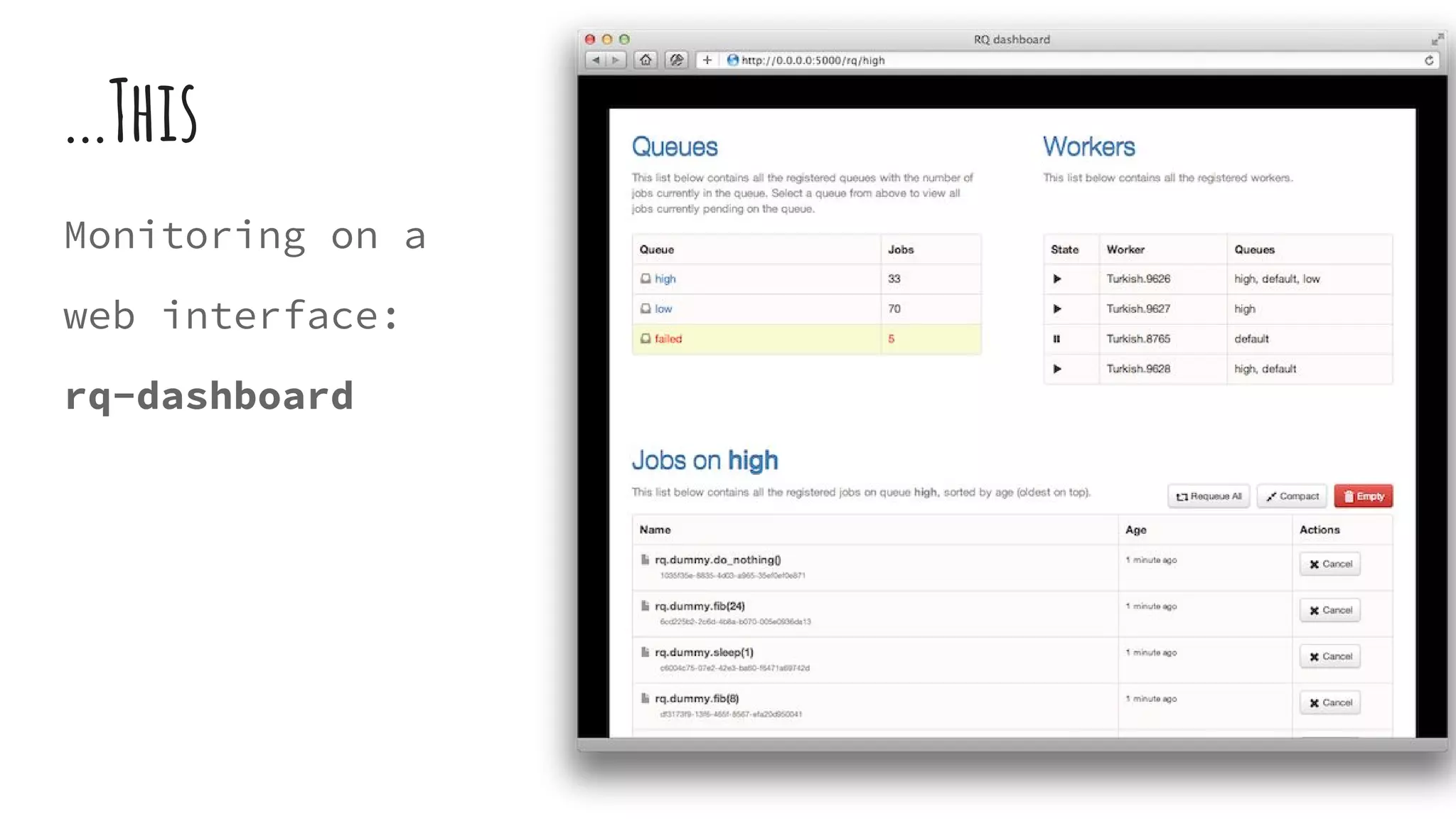

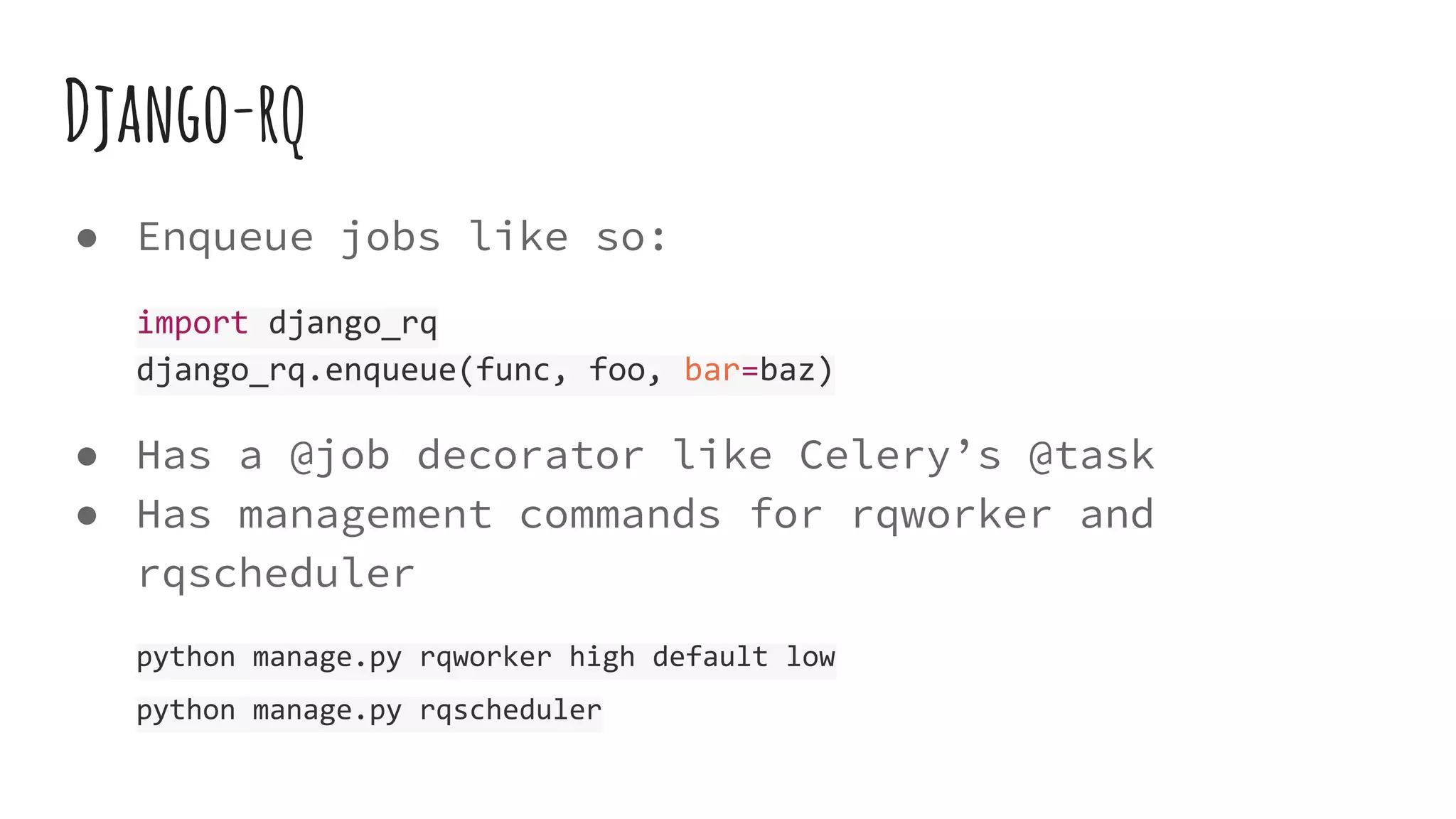

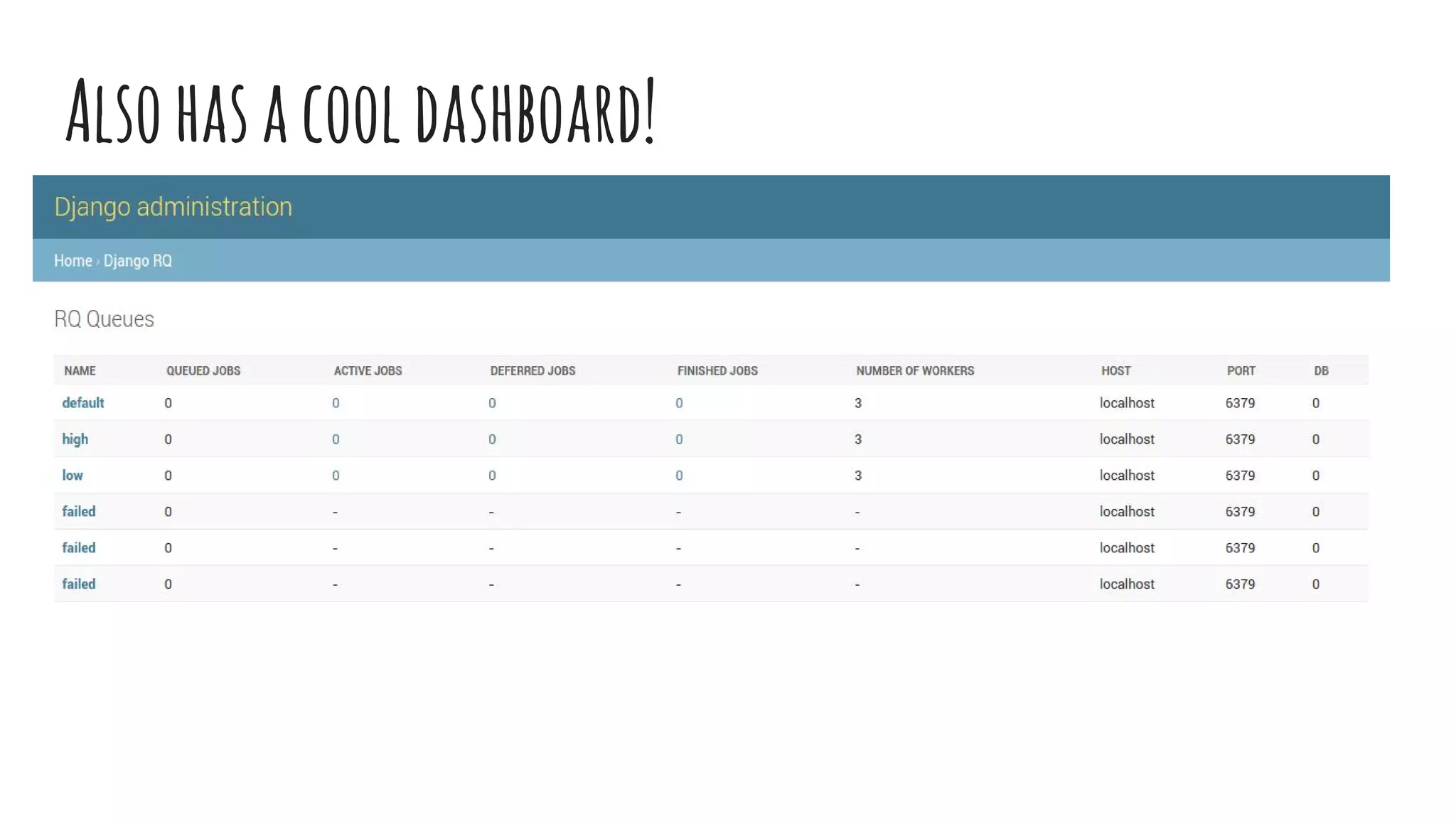

This document covers asynchronous job queuing, focusing on Redis Queue (RQ) as a lightweight alternative to Celery for running background jobs in Python applications. It describes the difficulties of working with Celery, chiefly its complex setup and configuration, and the advantages of RQ, including ease of use and integration with Django. It gives examples and tips for using RQ effectively, and concludes that RQ suits simpler projects while recommending Celery for more complex needs.
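
To make the "ease of use" point concrete, here is a minimal sketch of enqueuing a background job with RQ. It is not taken from the slides: the task `count_words`, the helper `enqueue_count`, the queue name `default`, and the Redis URL are all hypothetical and chosen only for illustration. It assumes the `rq` and `redis` packages are installed and a Redis server is reachable.

```python
def count_words(text: str) -> int:
    """Hypothetical task: an RQ job is just a plain Python function."""
    return len(text.split())


def enqueue_count(text: str, redis_url: str = "redis://localhost:6379/0"):
    """Put count_words on an RQ queue and return the resulting Job.

    The Redis URL and queue name are assumptions for this sketch.
    """
    # Imported lazily so the module loads even where rq/redis are not installed.
    from redis import Redis
    from rq import Queue

    queue = Queue("default", connection=Redis.from_url(redis_url))
    # enqueue() stores a reference to the function plus its arguments in Redis;
    # a separate worker process picks the job up and runs it.
    return queue.enqueue(count_words, text)
```

A worker started with `rq worker default` would then execute the job. The worker has to be able to import the task, so in practice `count_words` should live in an importable module rather than in a script run as `__main__`.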