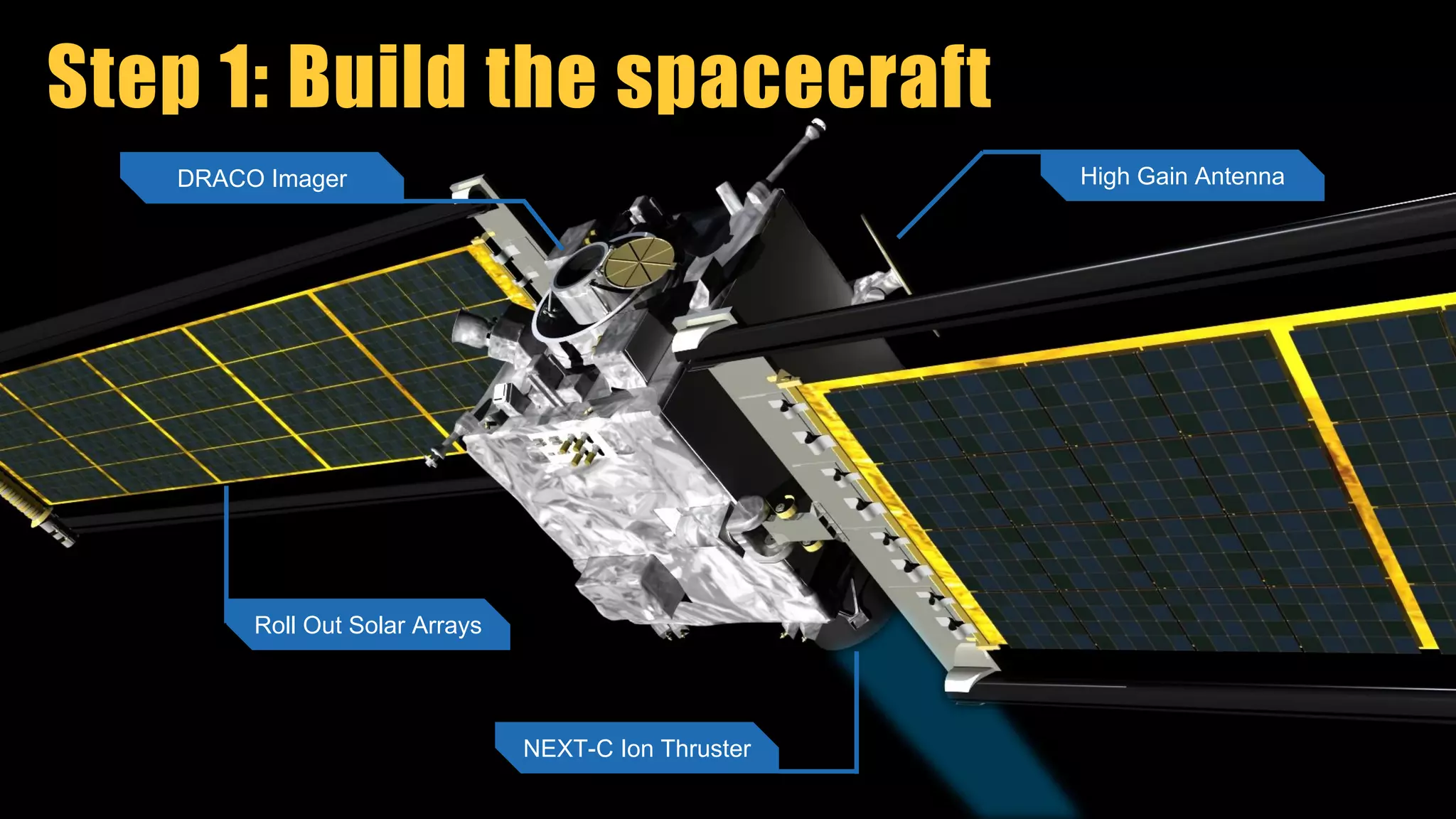

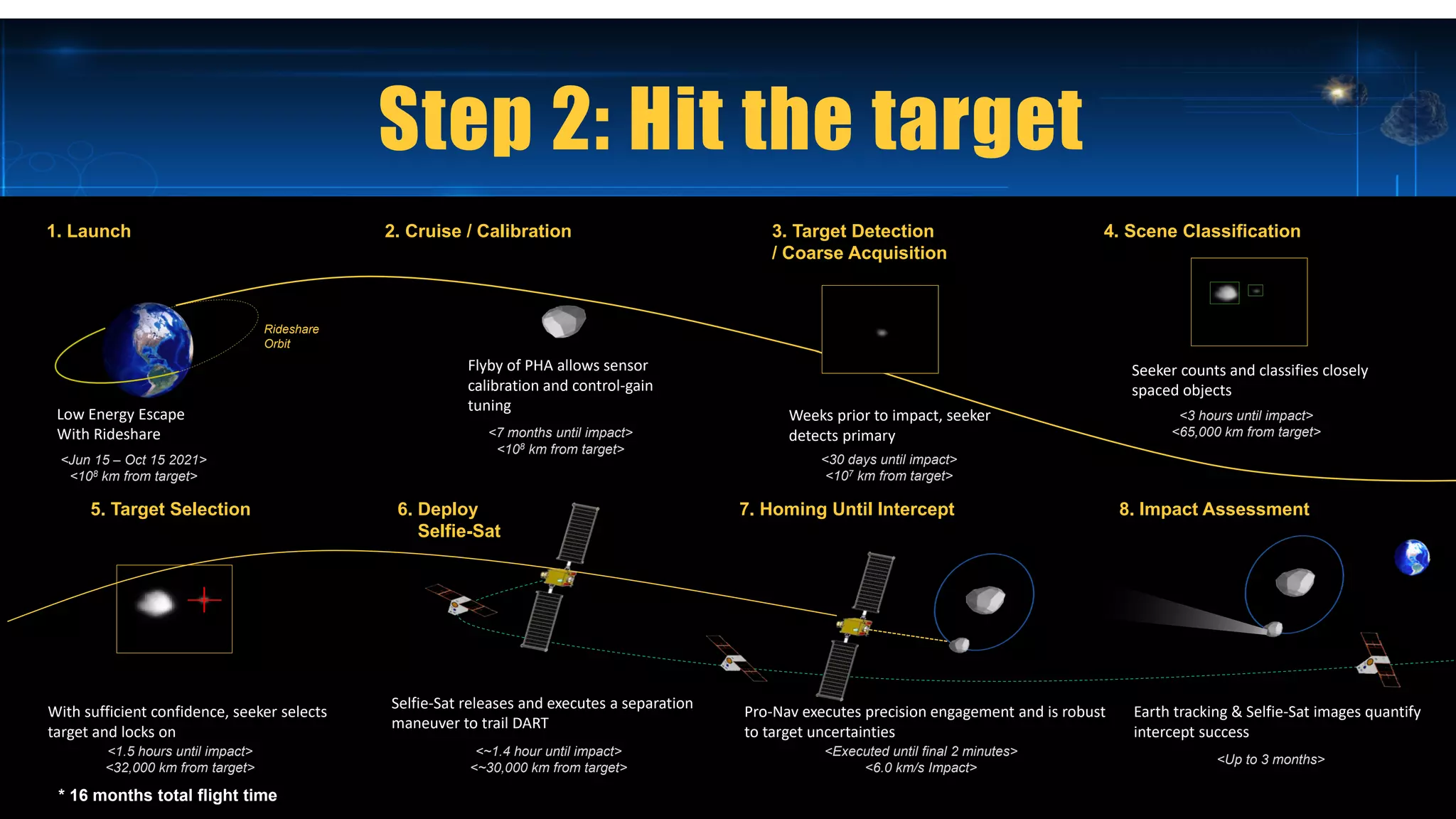

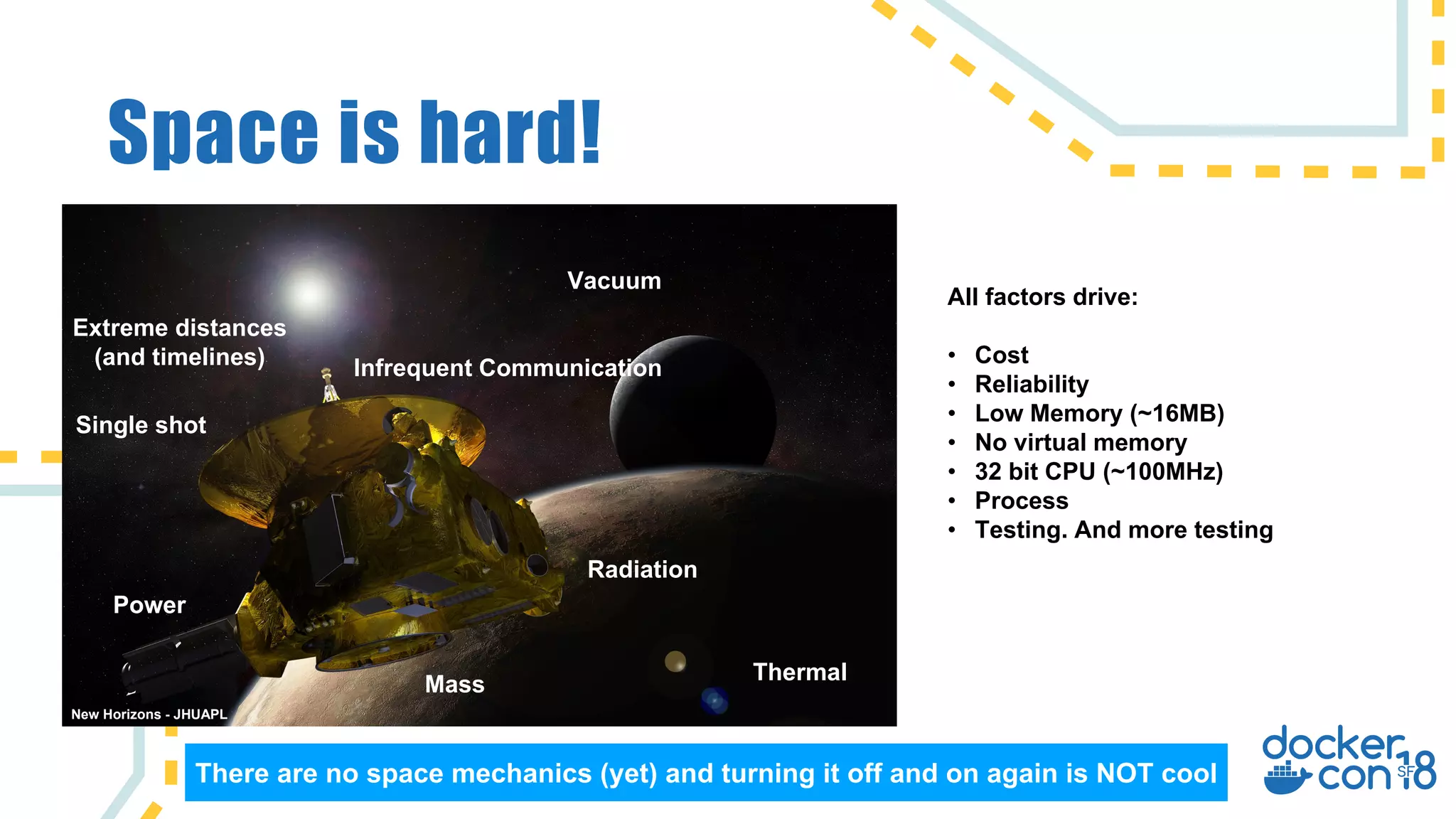

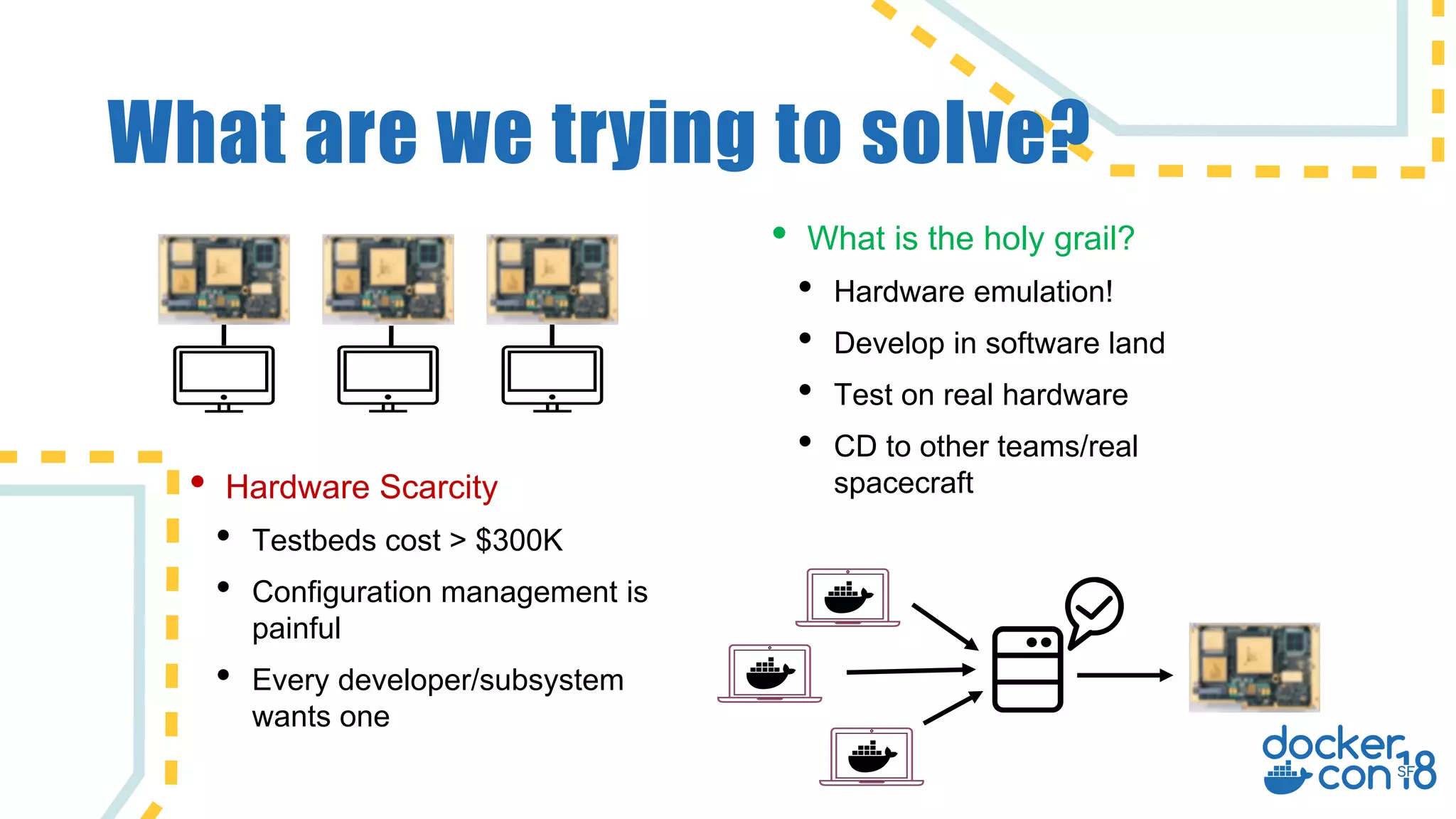

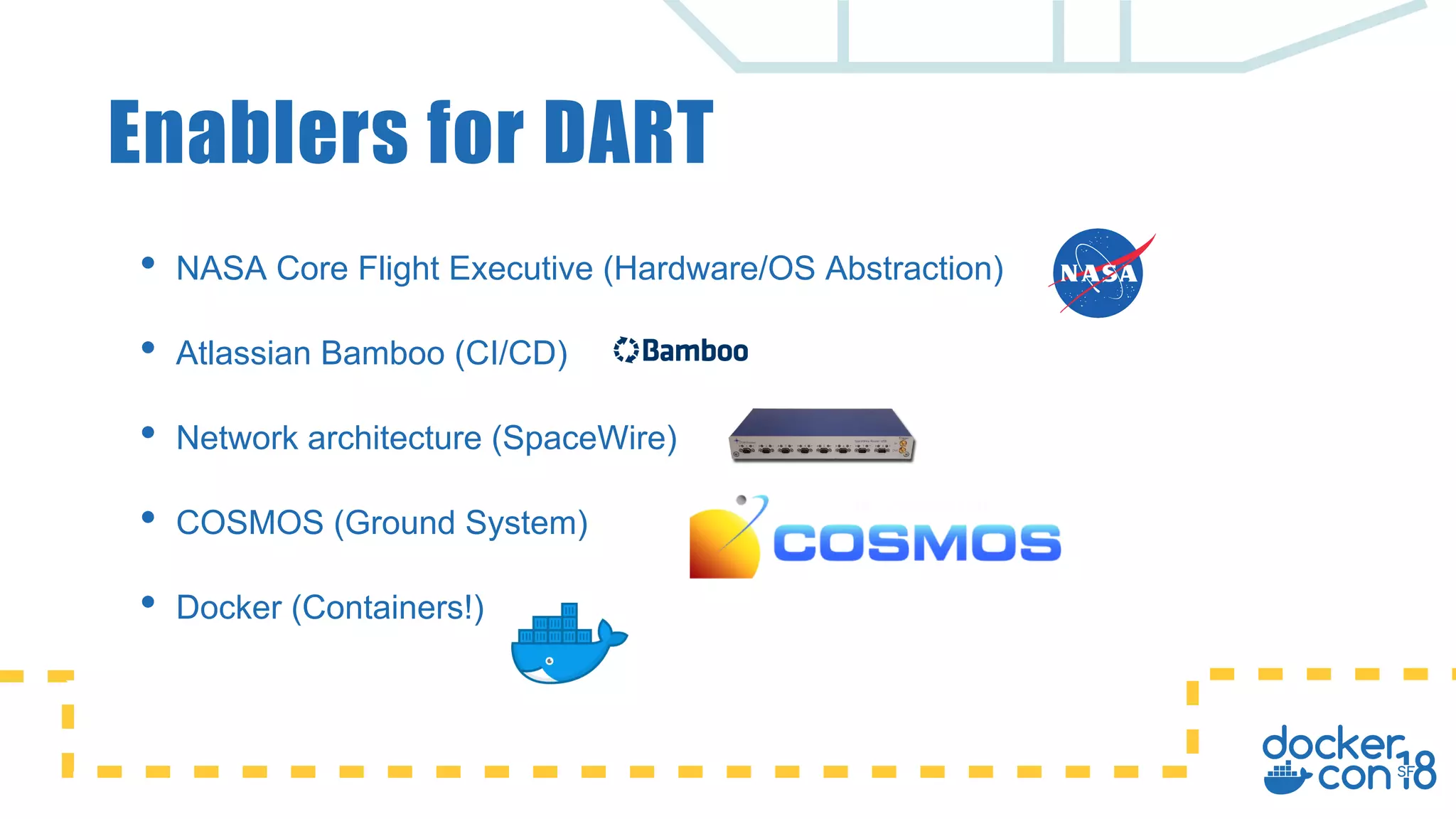

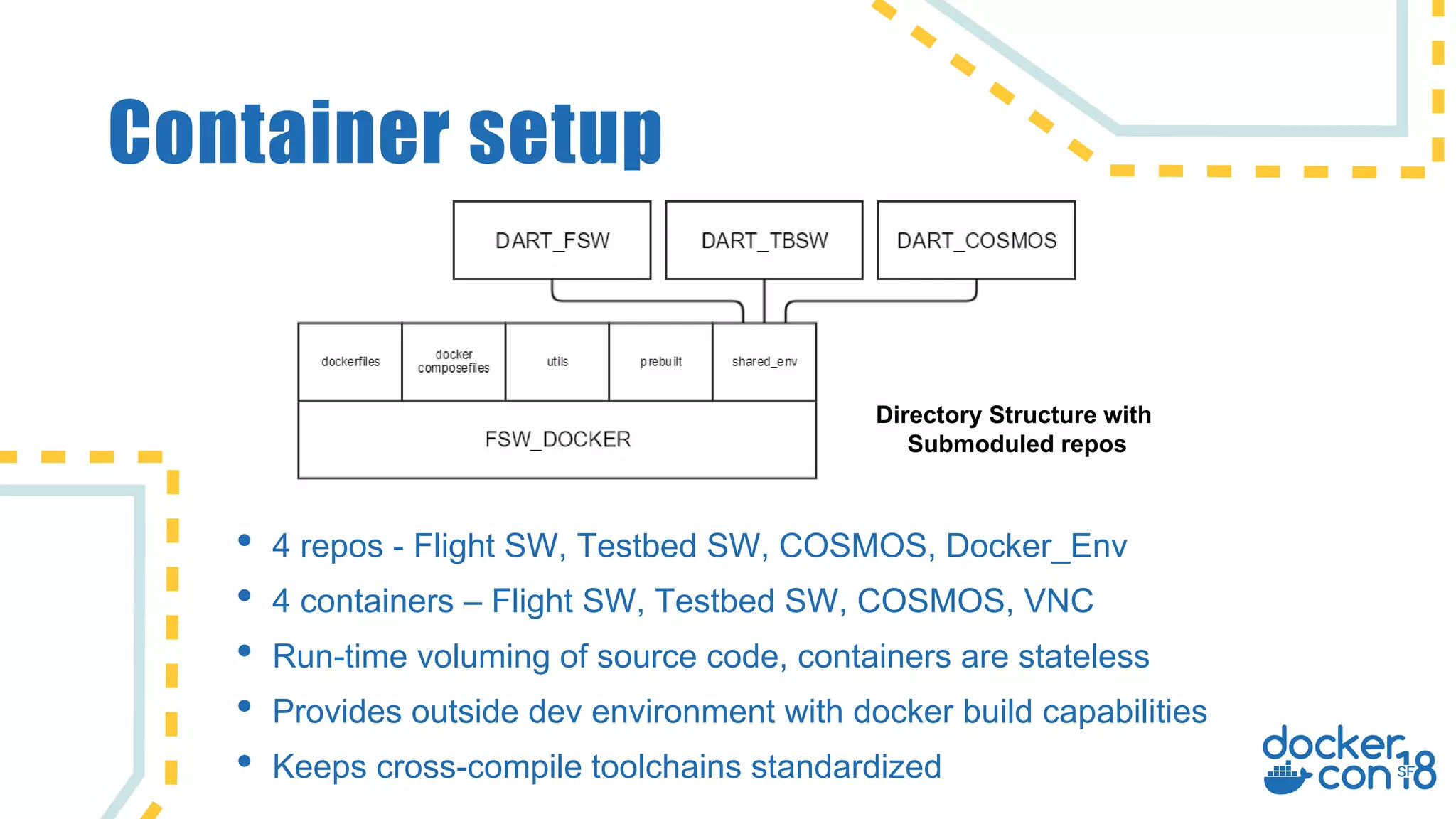

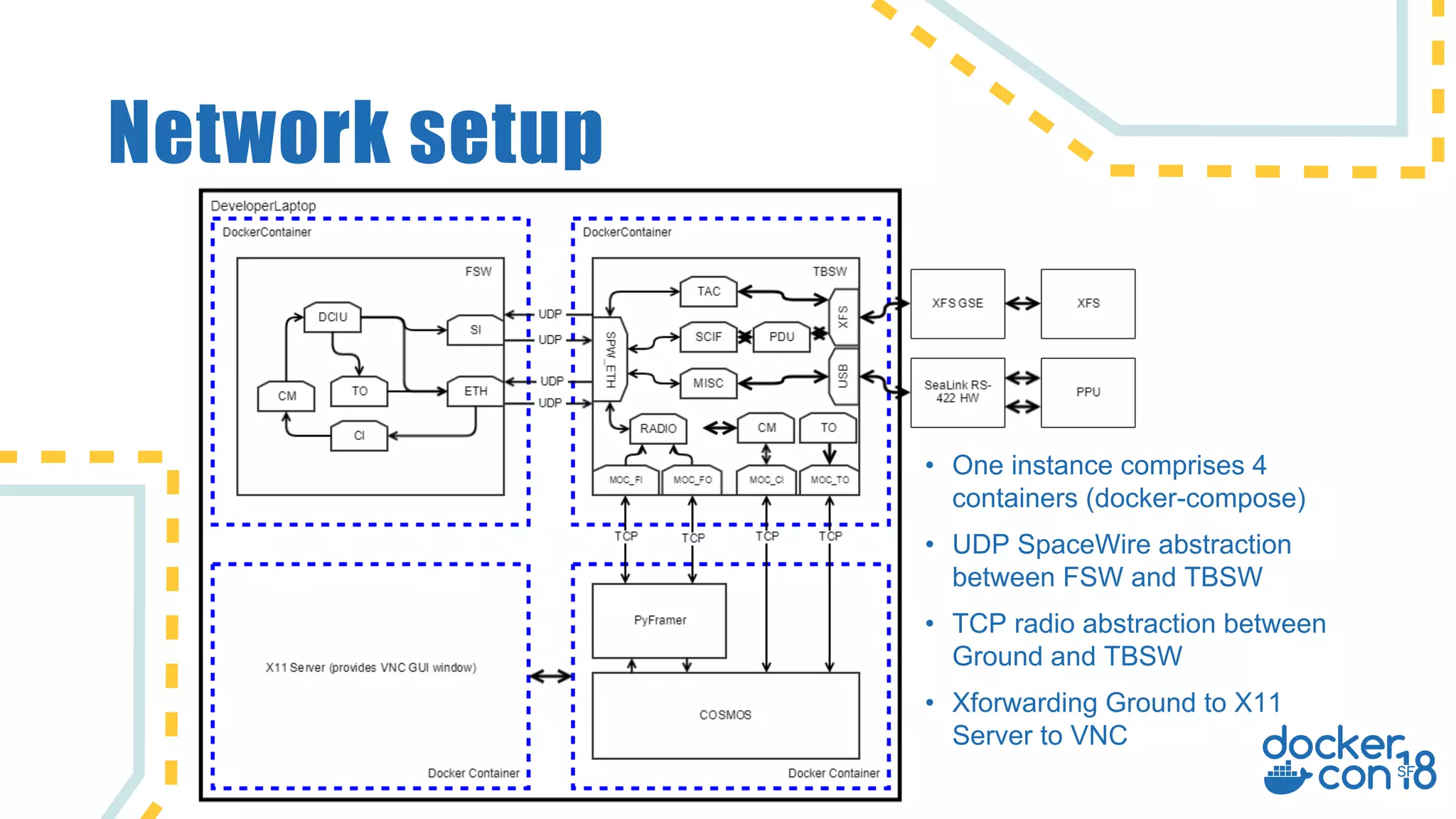

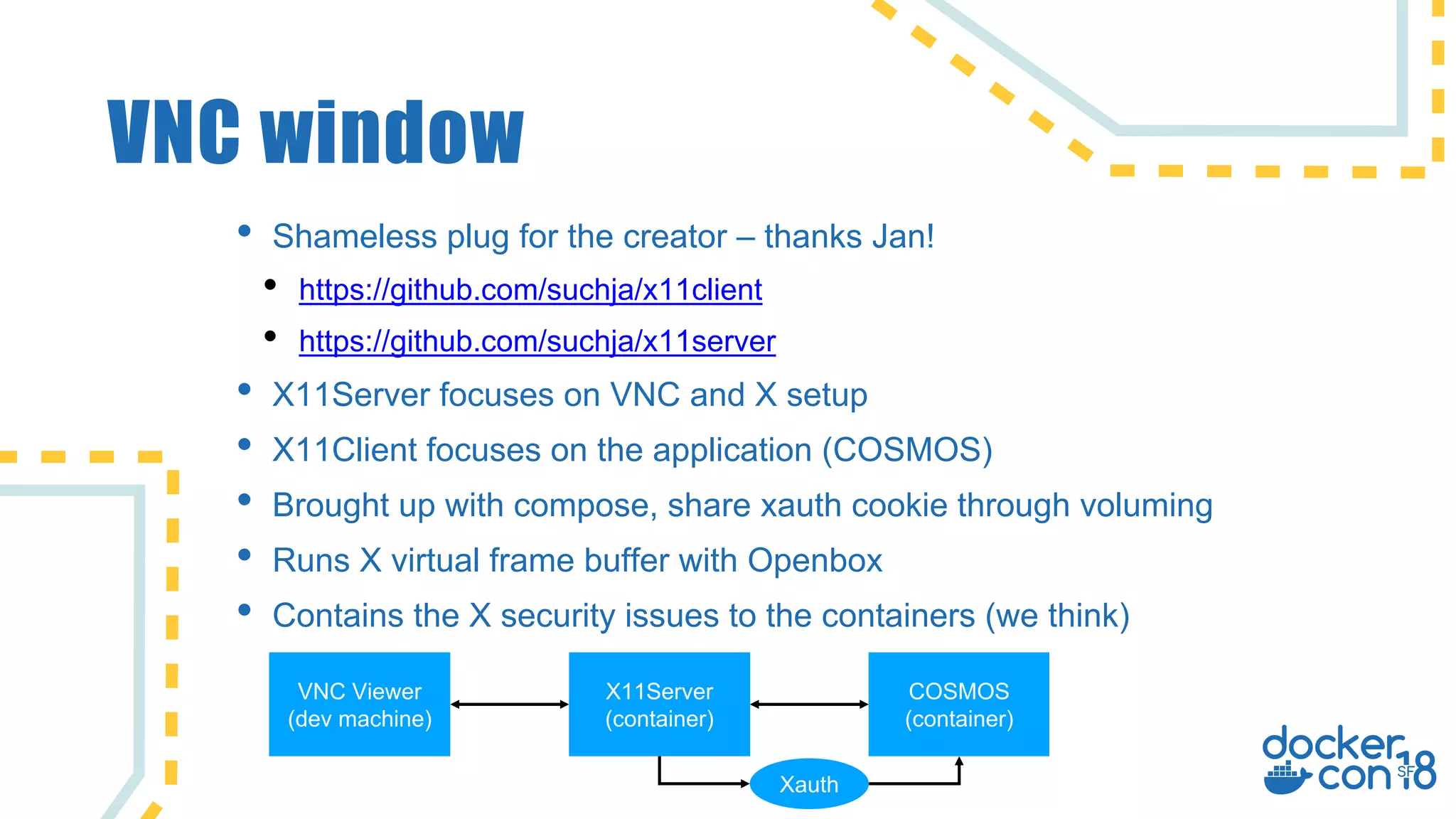

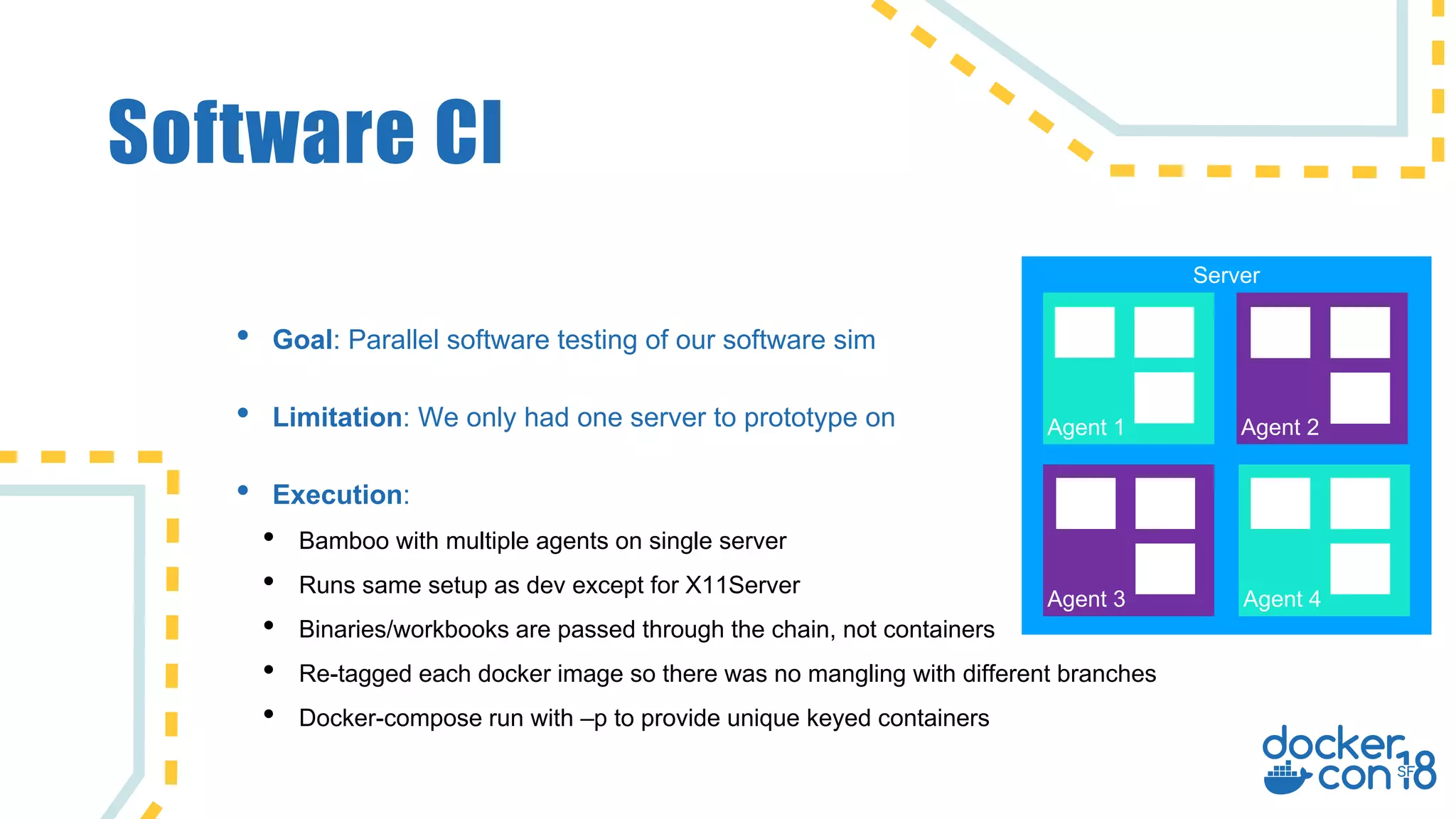

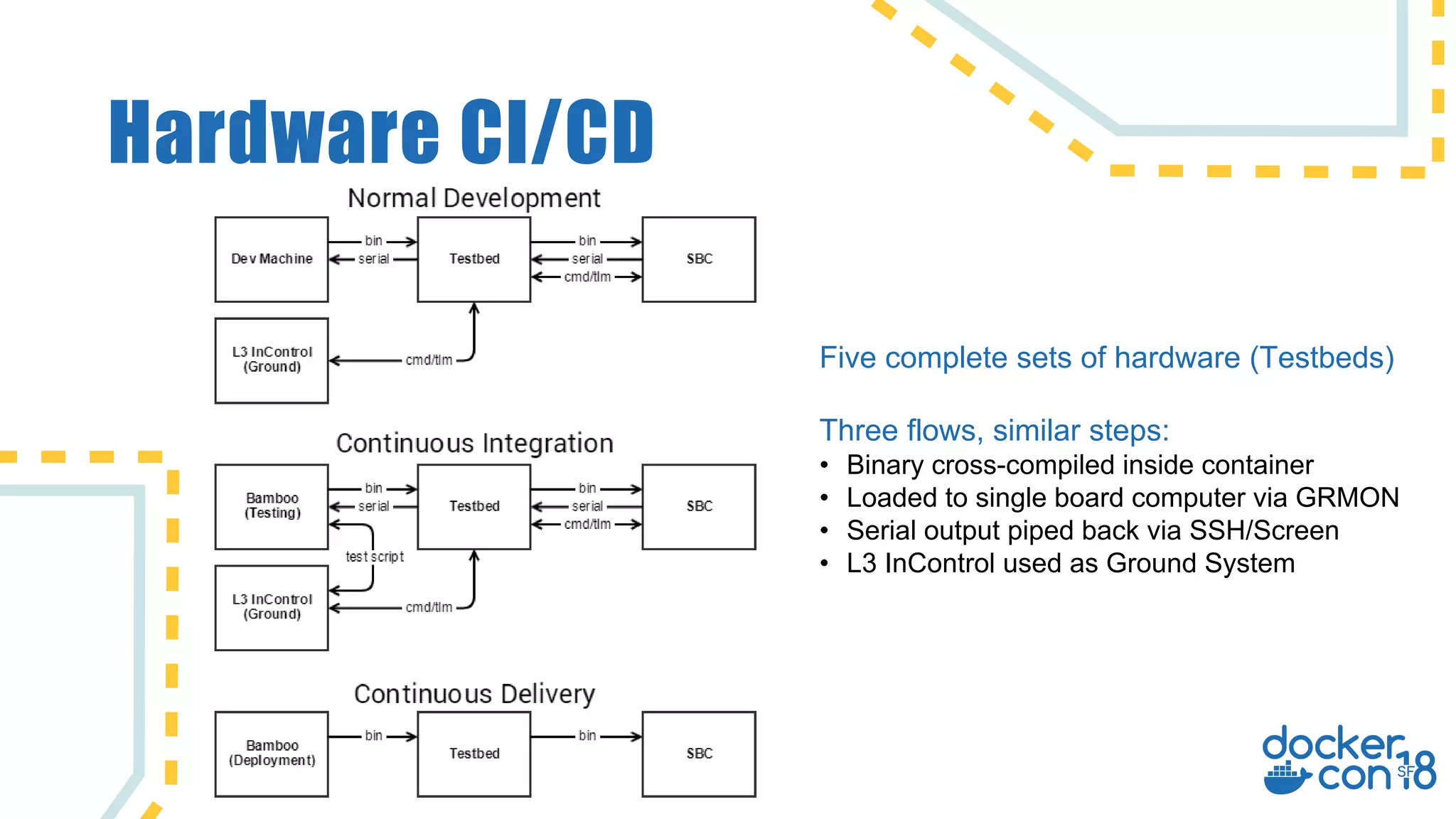

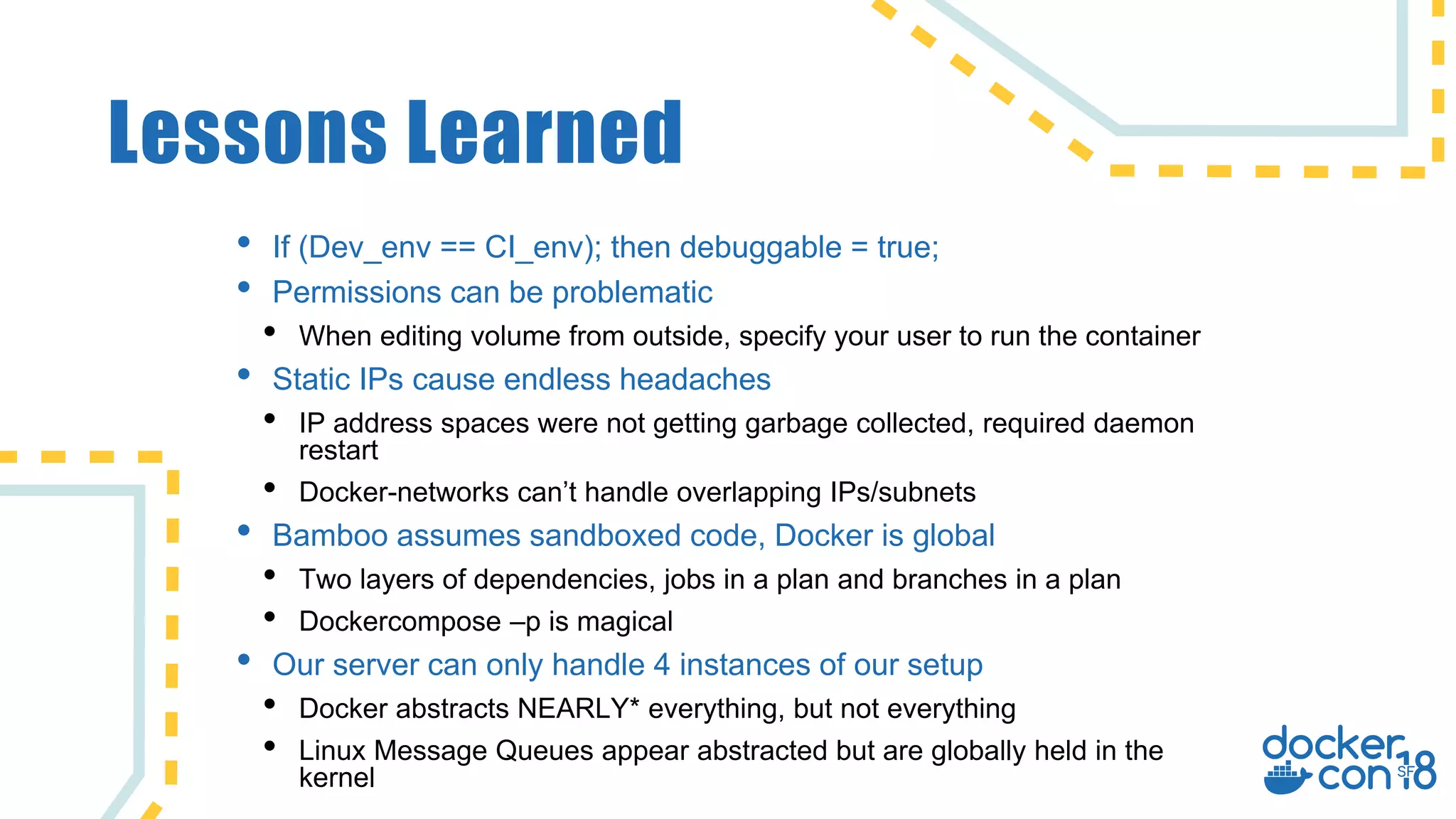

The document describes how Docker is used for automated hardware testing on NASA's DART (Double Asteroid Redirection Test) mission, which was designed to demonstrate the kinetic impactor technique for deflecting an asteroid. It outlines the mission's phases, the challenges of developing software for space, and the adoption of containerization to improve development environments and CI/CD processes. It closes with lessons learned and the next steps planned to enhance the testing infrastructure and streamline operations.