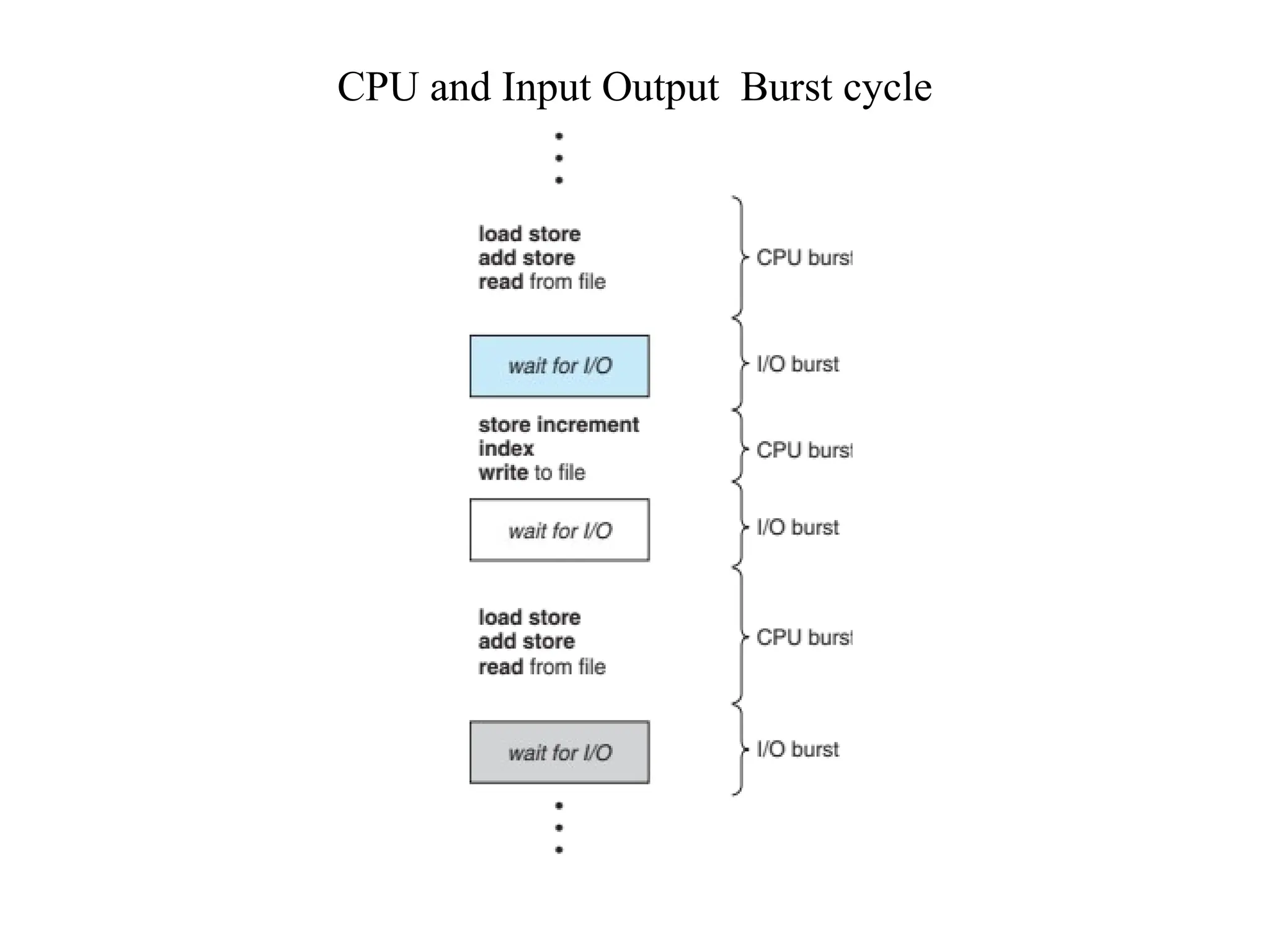

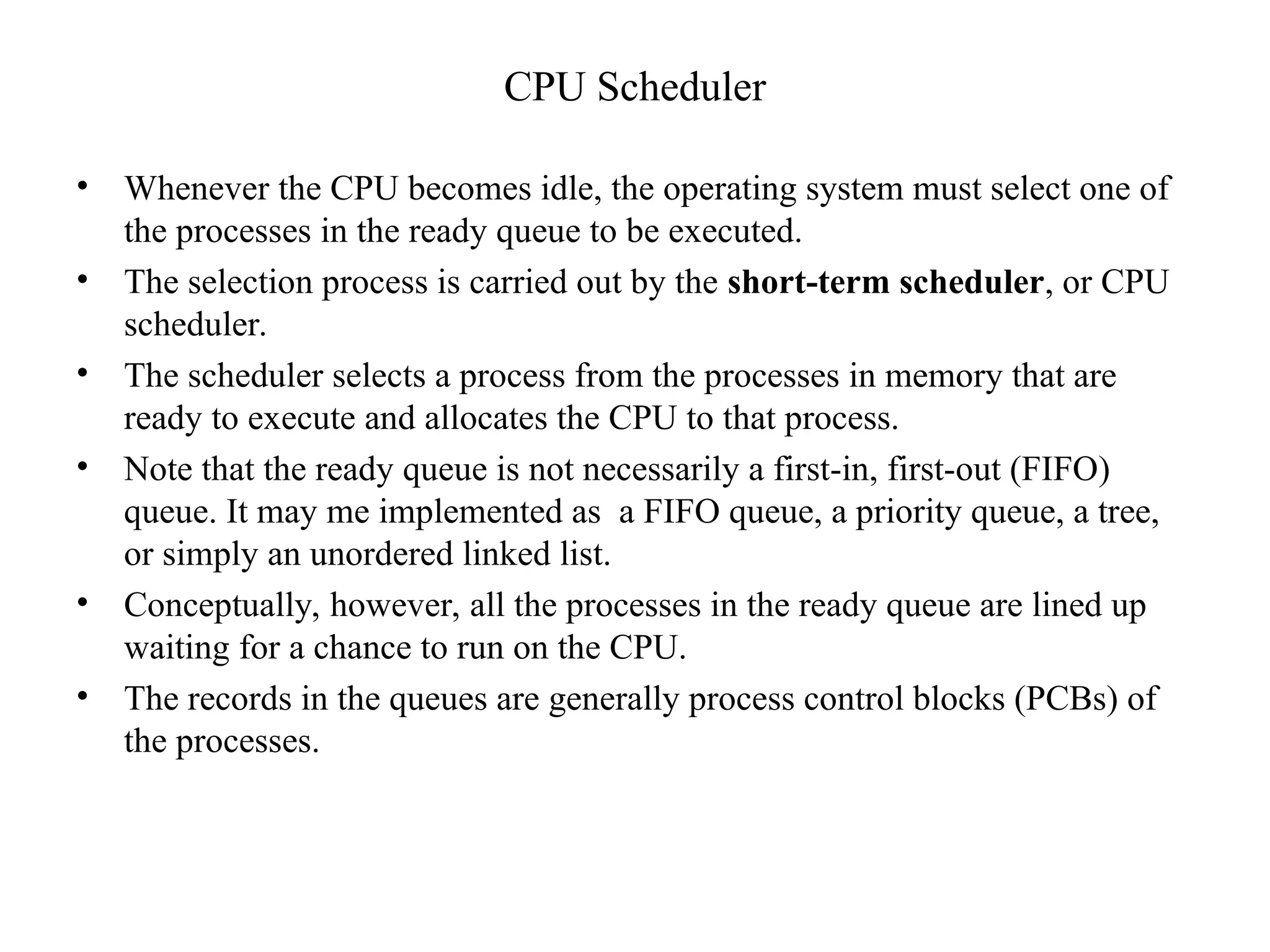

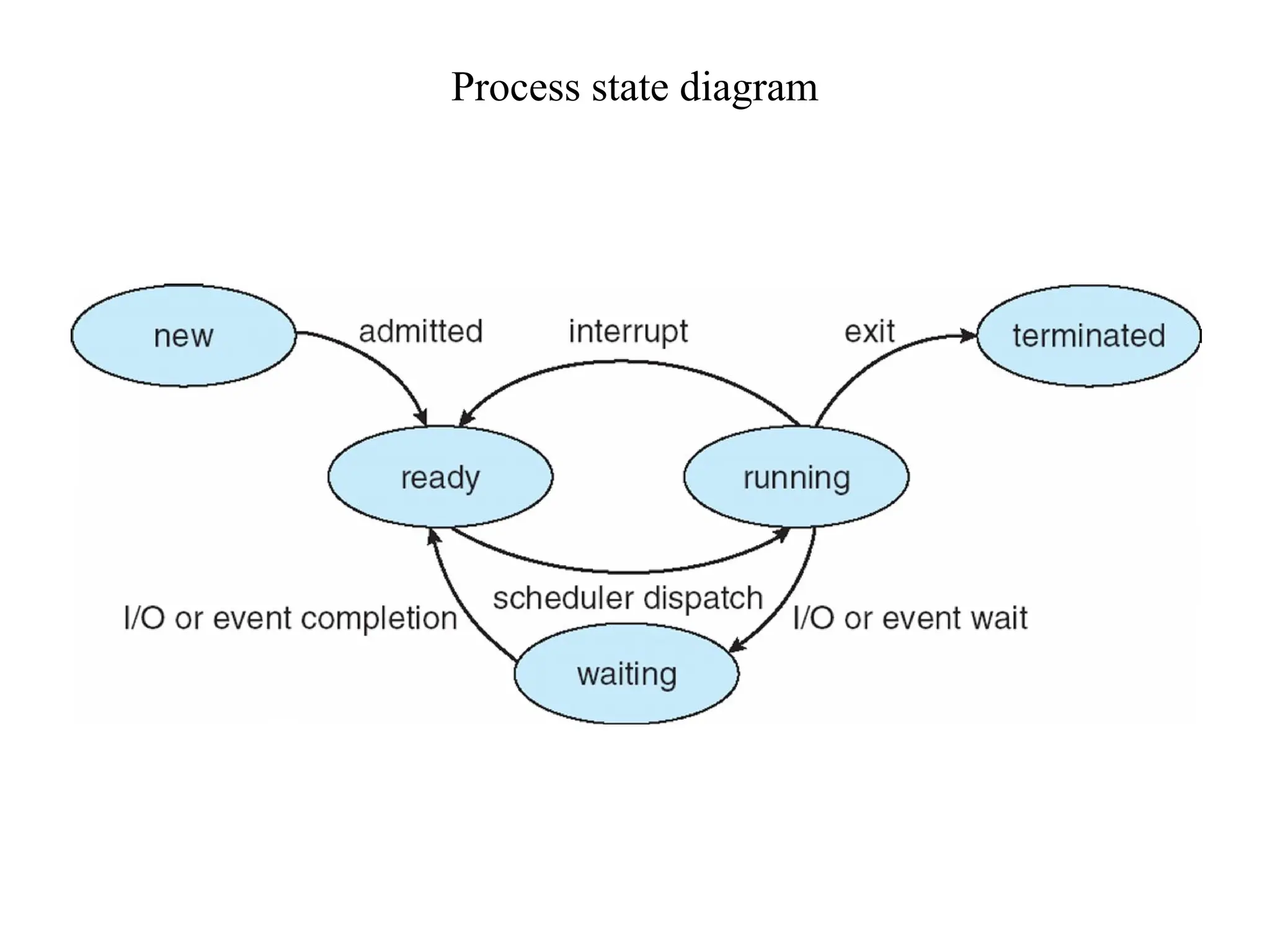

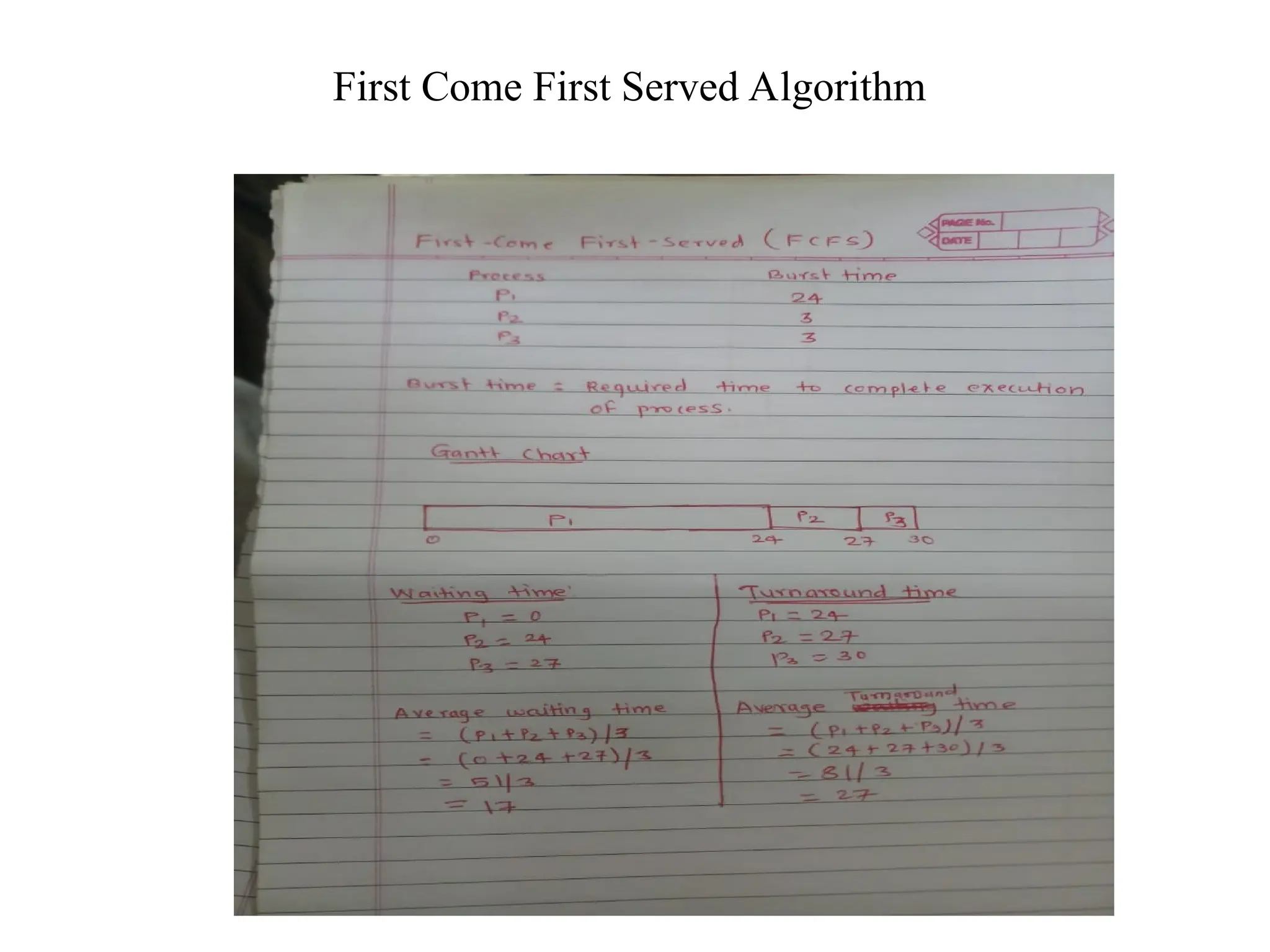

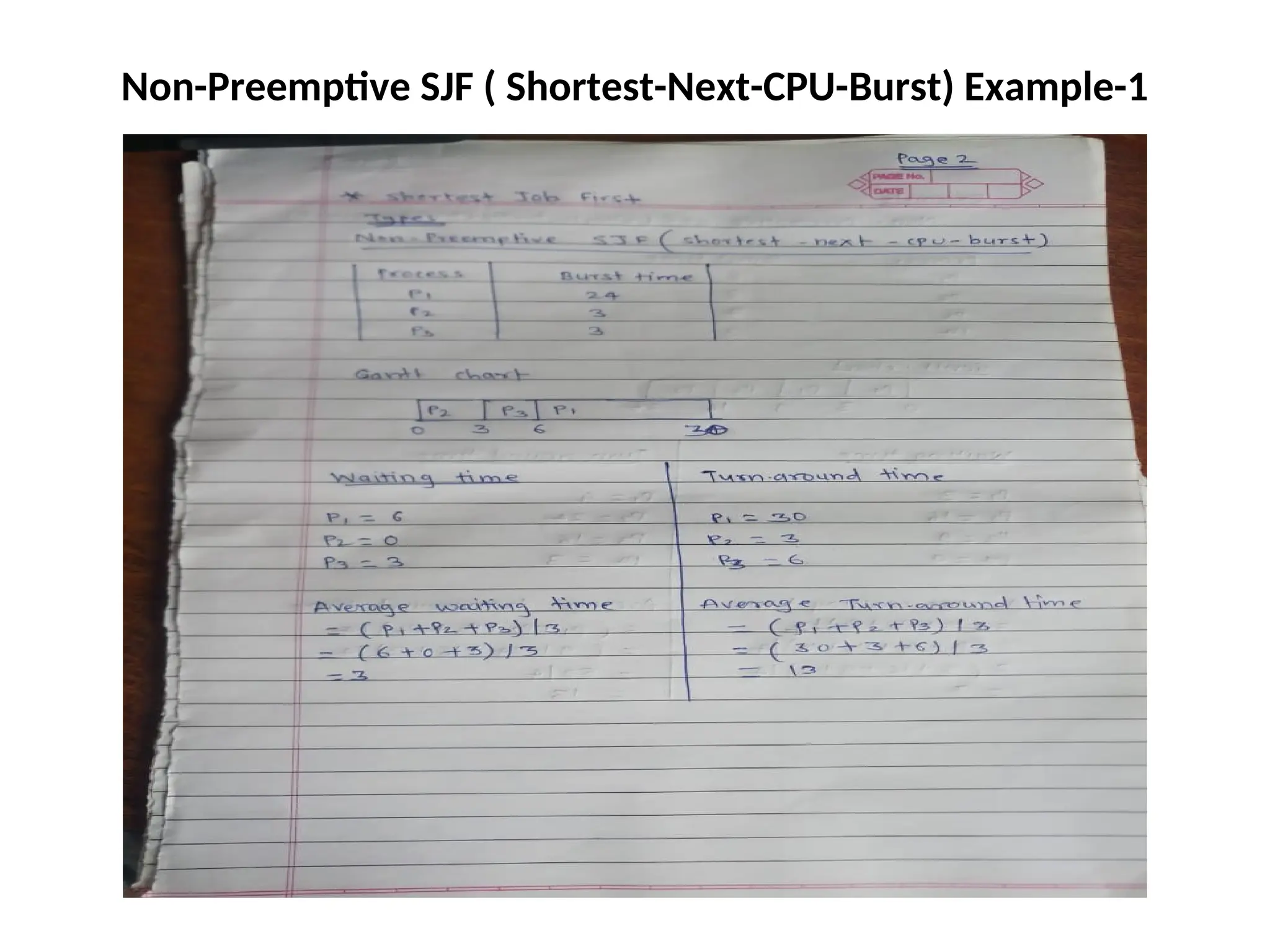

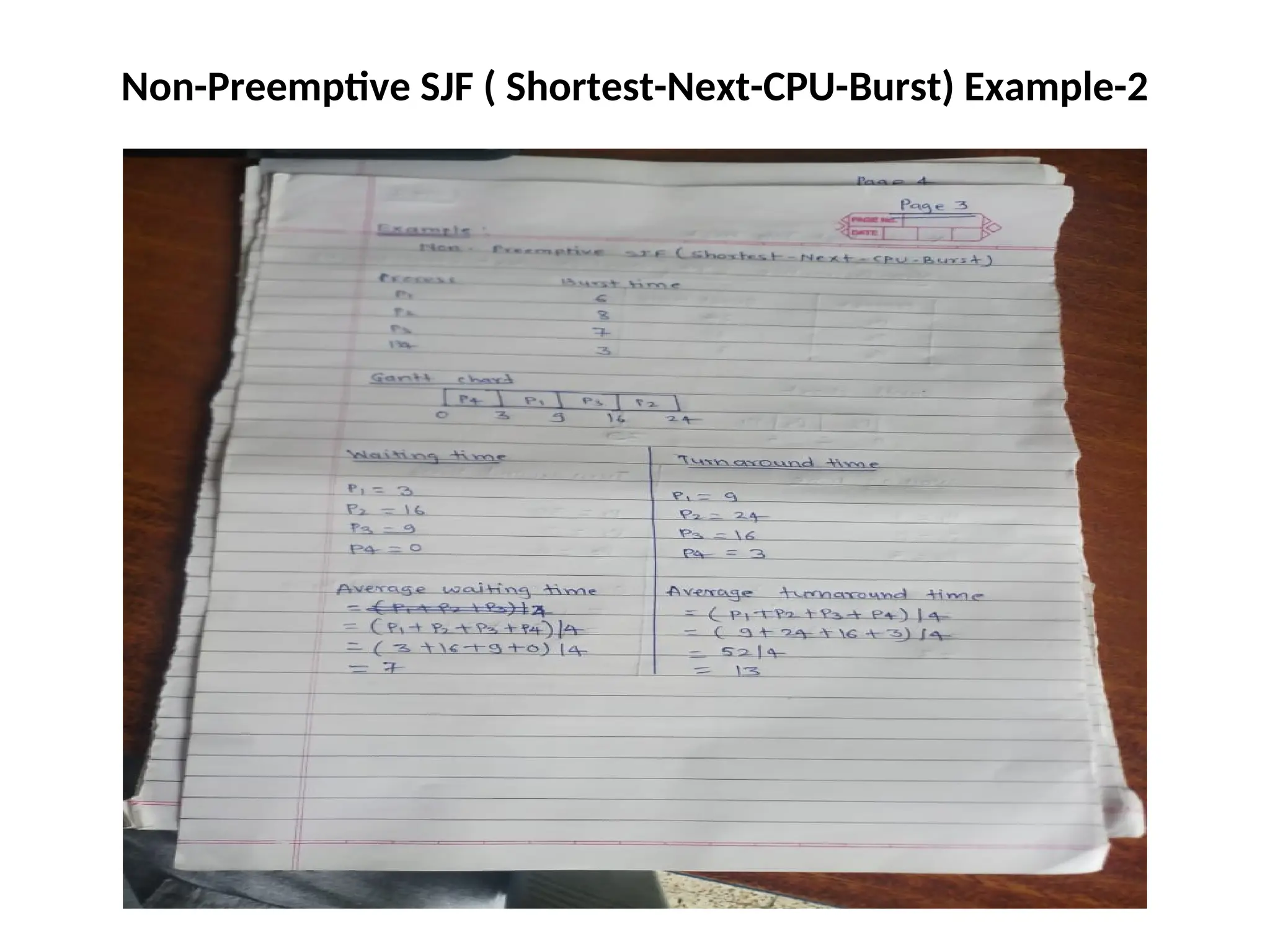

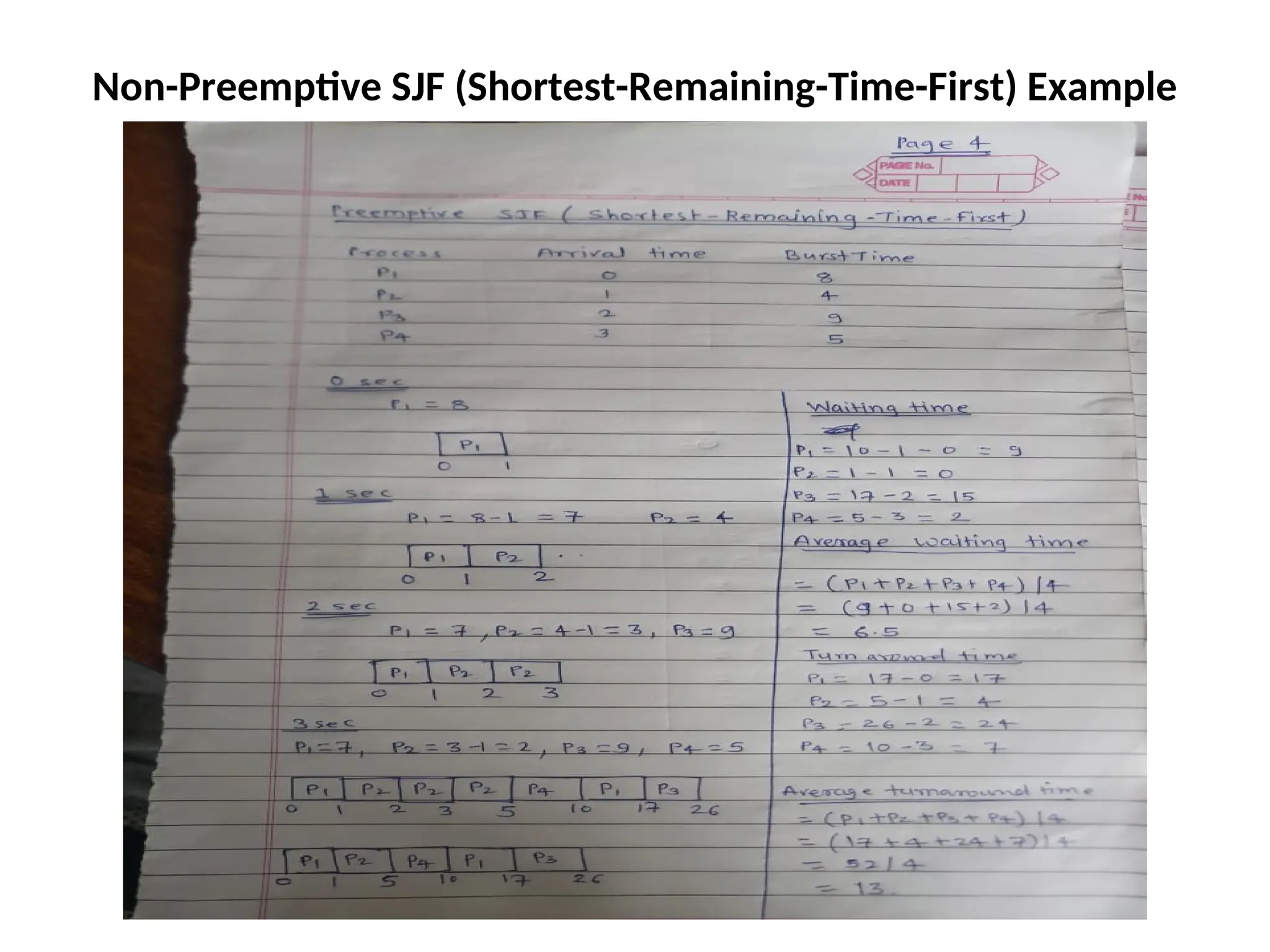

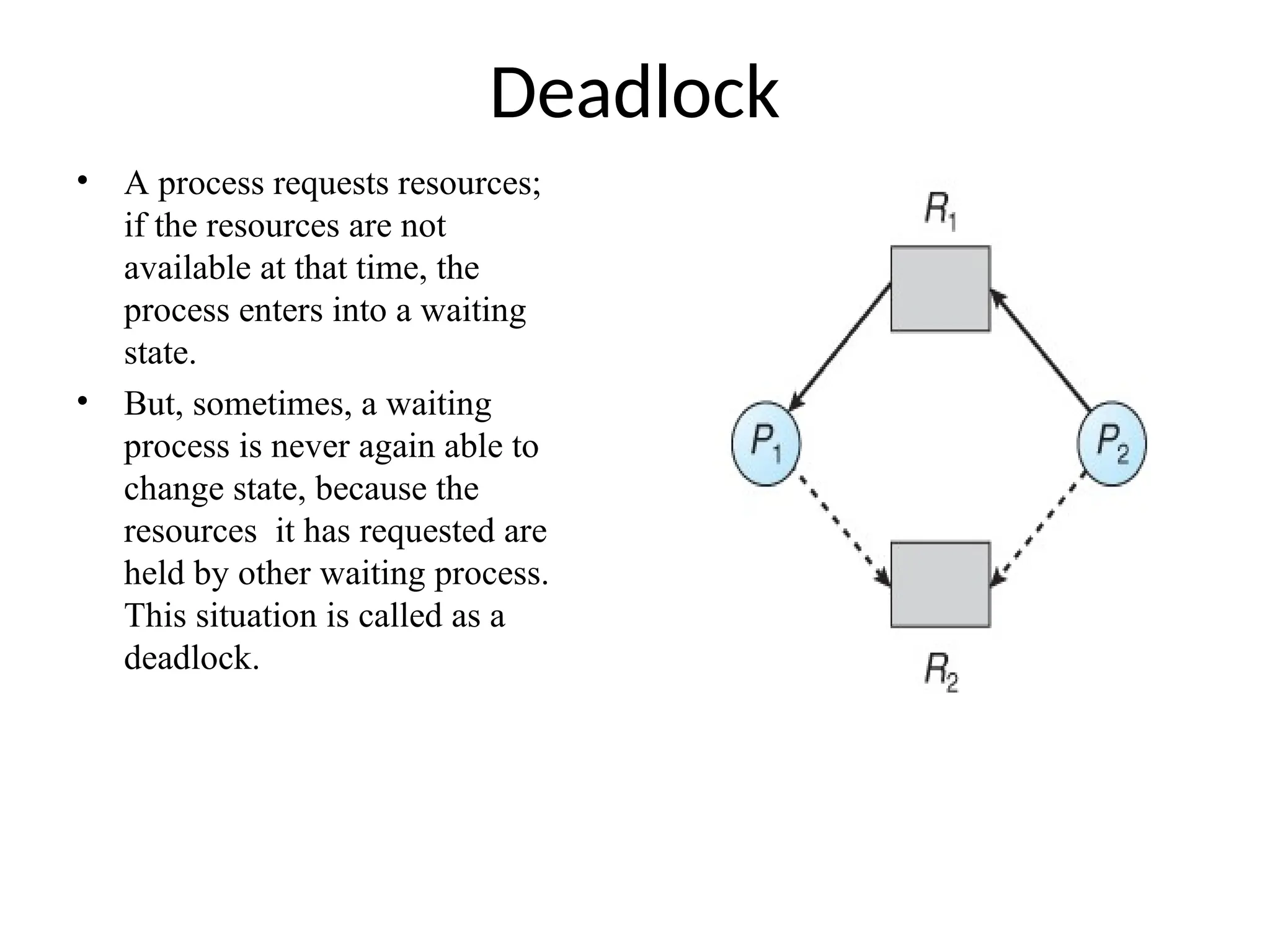

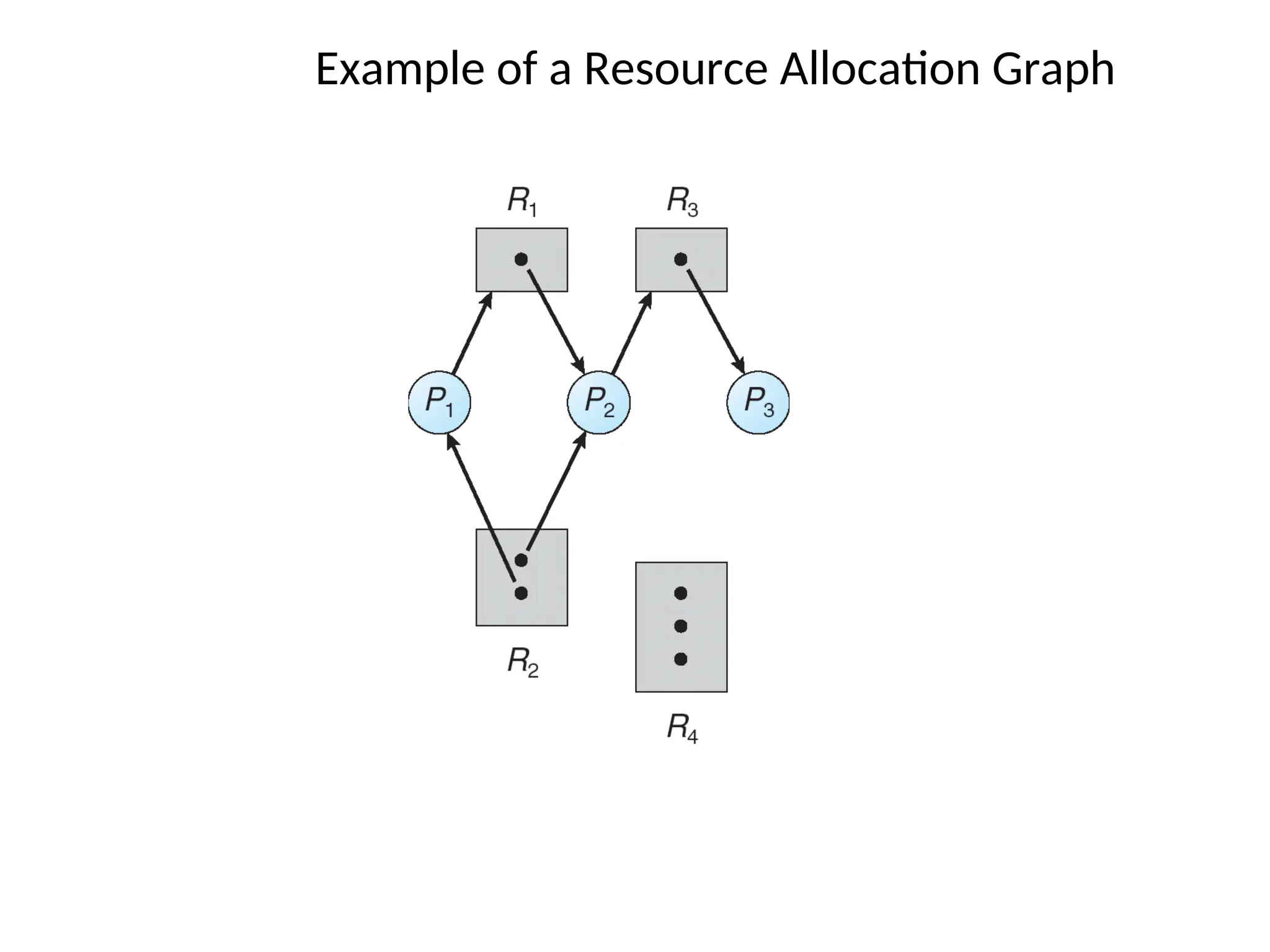

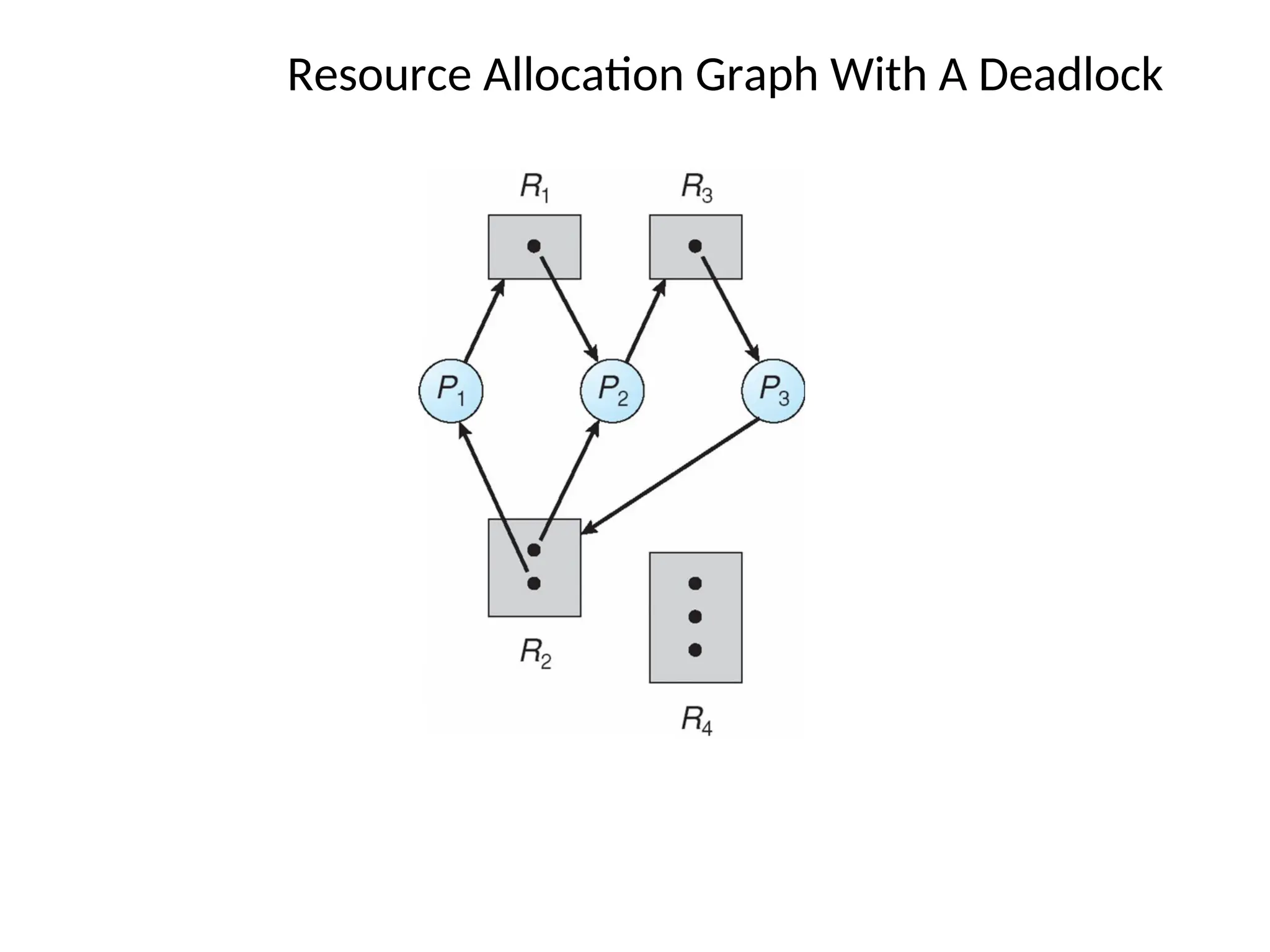

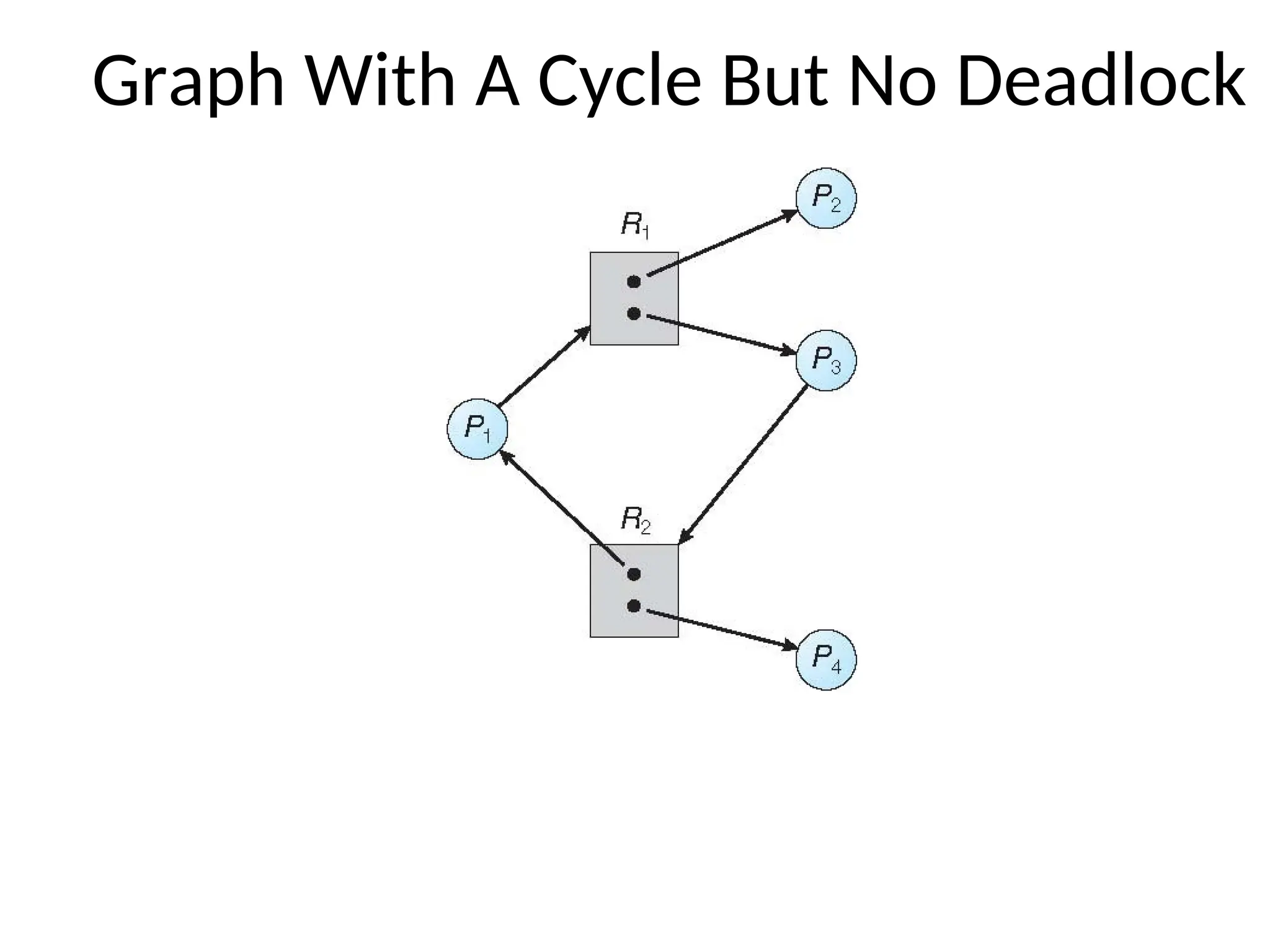

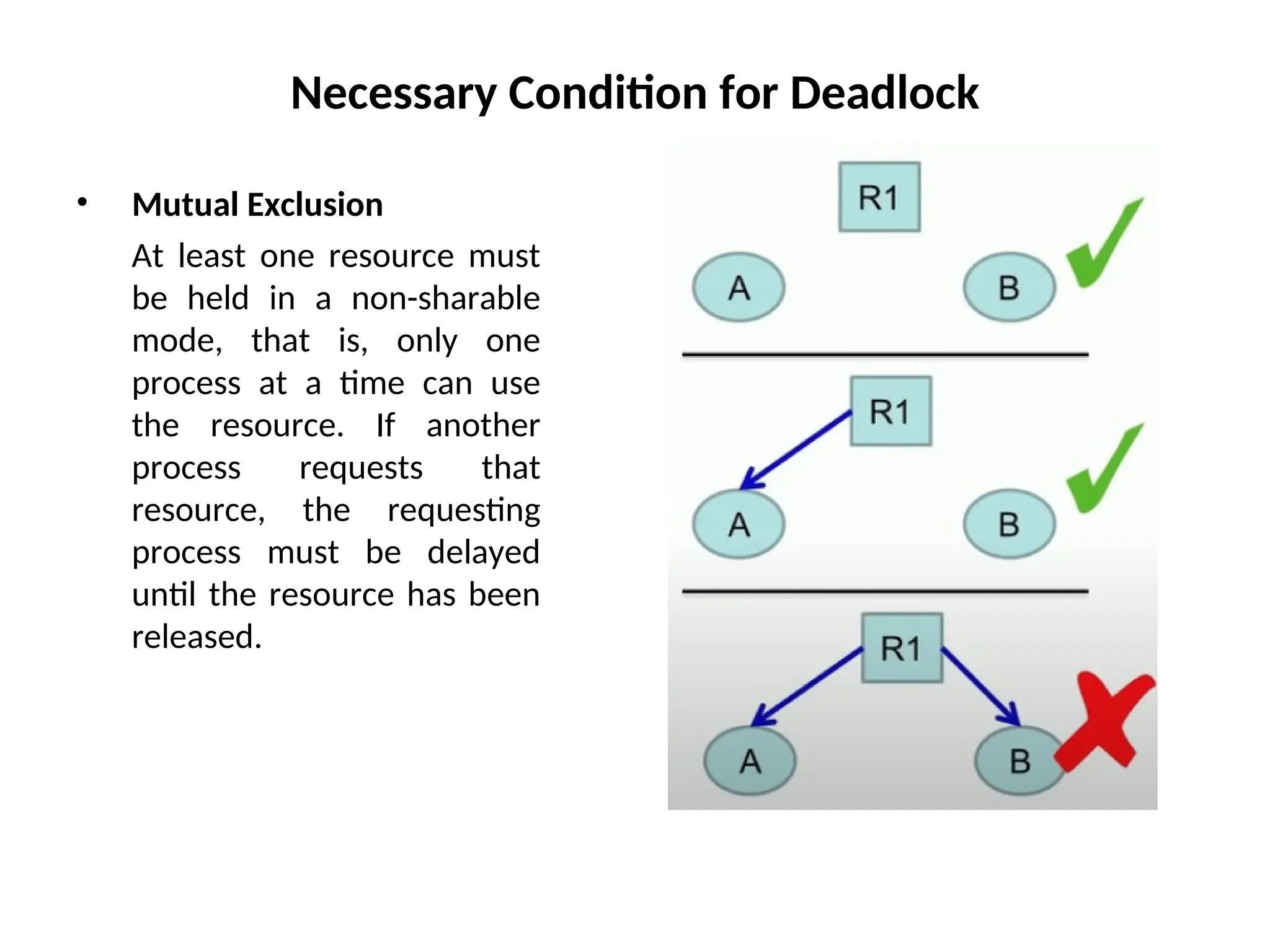

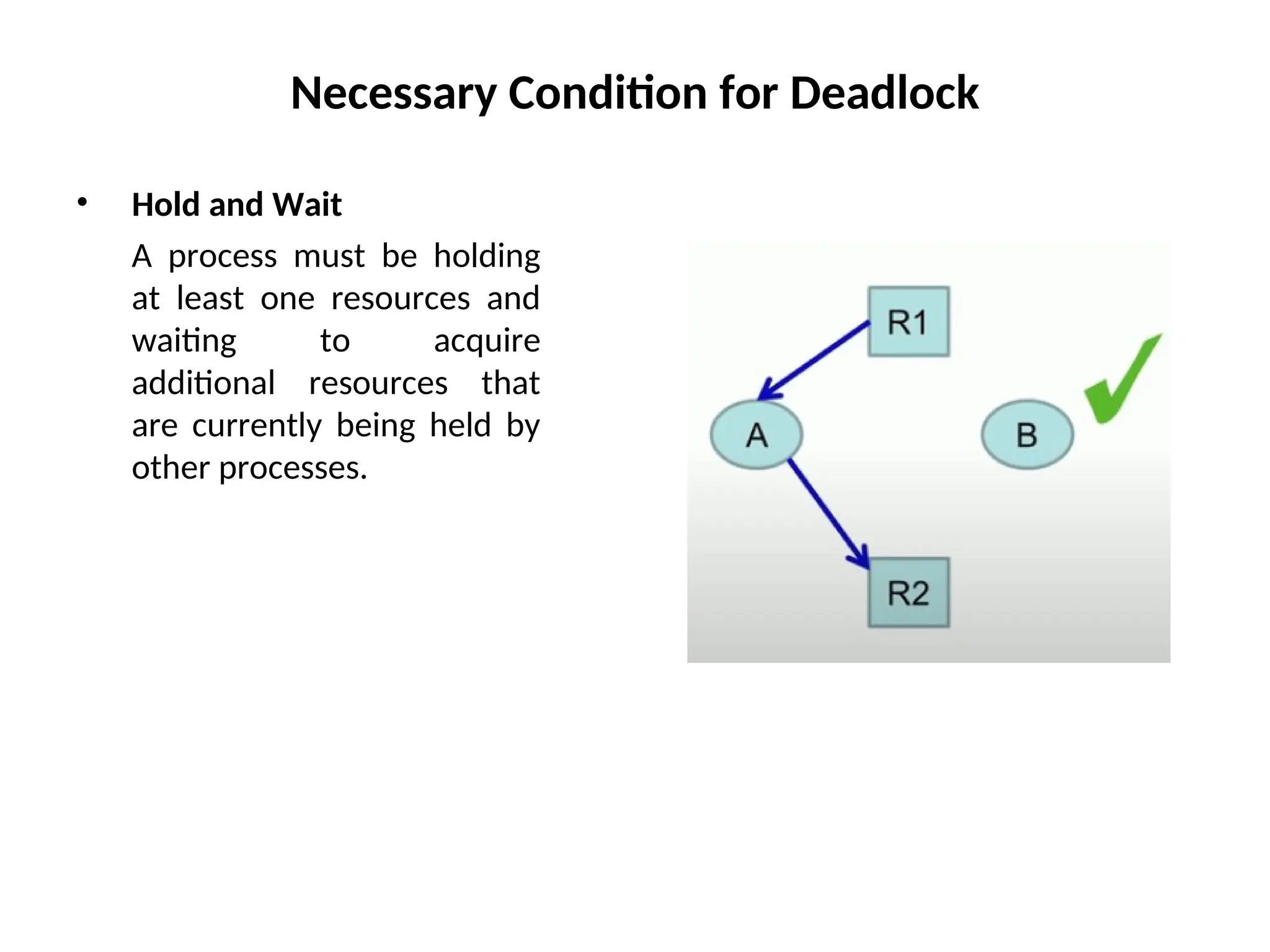

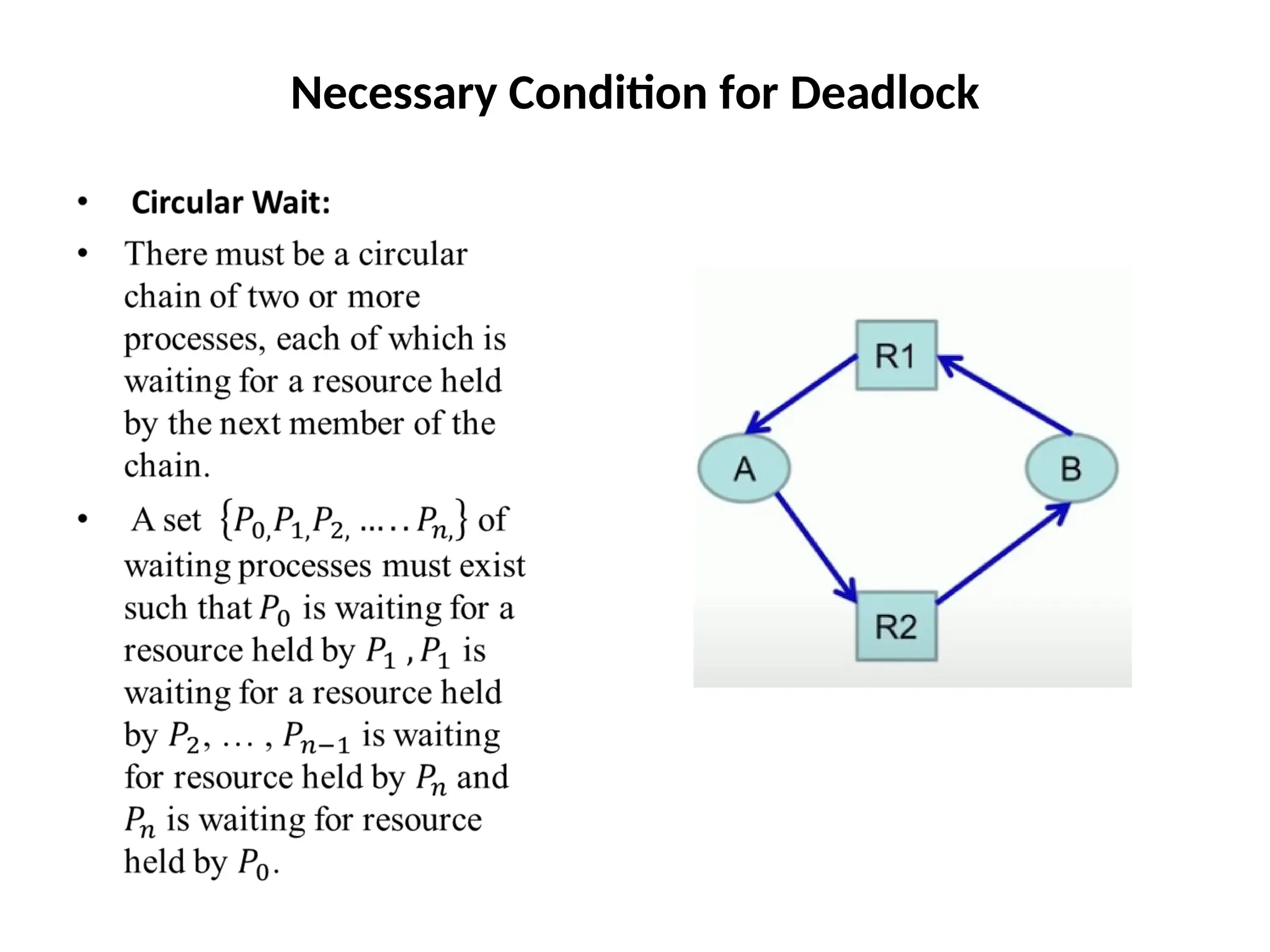

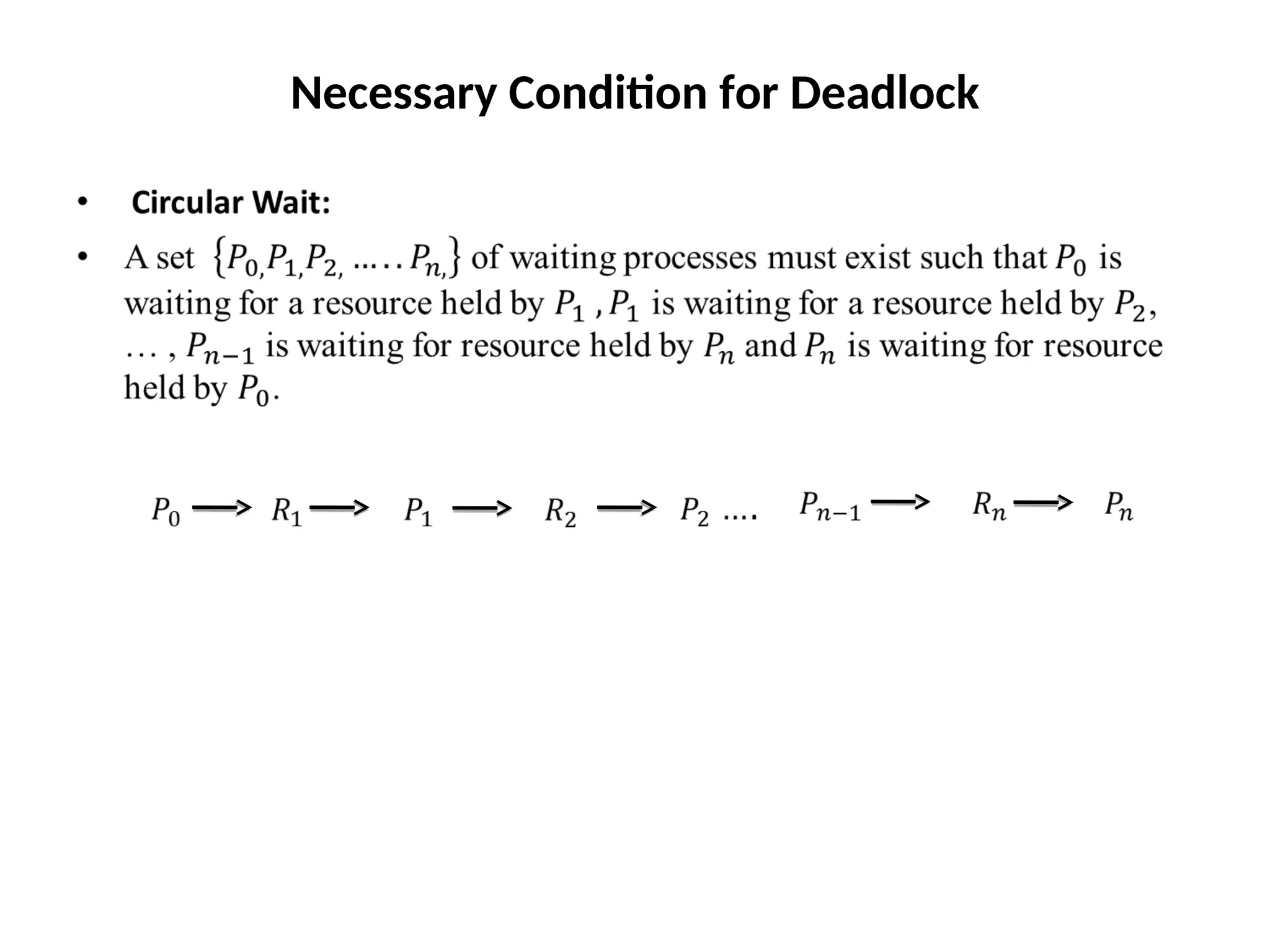

Chapter 4 covers CPU scheduling and its algorithms, explaining why a multiprogramming system must allocate the CPU efficiently among processes to maximize CPU utilization. It outlines several scheduling methods (first-come, first-served; shortest job first; priority scheduling; and round-robin), each of which selects the next process by a different criterion and has its own advantages. The chapter also touches on the conditions under which deadlock arises and on techniques for preventing it.
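To make the difference between these scheduling methods concrete, here is a minimal Python sketch that computes per-process waiting times under first-come, first-served, non-preemptive shortest job first, and round-robin. It is an illustration rather than anything taken from the chapter: the function names are hypothetical, and it assumes all processes arrive at time 0, that burst times are known in advance, and that context switches cost nothing.

```python
from collections import deque


def fcfs_waiting_times(bursts):
    """First-come, first-served: run processes in list order."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)   # a process waits until all earlier ones finish
        clock += burst
    return waits


def sjf_waiting_times(bursts):
    """Non-preemptive shortest job first (assumes all arrive at time 0)."""
    waits = [0] * len(bursts)
    clock = 0
    # Visit process indices in order of increasing burst time.
    for i in sorted(range(len(bursts)), key=lambda i: bursts[i]):
        waits[i] = clock
        clock += bursts[i]
    return waits


def round_robin_waiting_times(bursts, quantum):
    """Round-robin with a fixed time quantum (assumes all arrive at time 0)."""
    remaining = list(bursts)
    finish = [0] * len(bursts)
    queue = deque(range(len(bursts)))
    clock = 0
    while queue:
        i = queue.popleft()
        run = min(quantum, remaining[i])
        clock += run
        remaining[i] -= run
        if remaining[i] > 0:
            queue.append(i)   # not done yet: go to the back of the ready queue
        else:
            finish[i] = clock
    # Waiting time = completion time minus time actually spent on the CPU.
    return [finish[i] - bursts[i] for i in range(len(bursts))]


def average(values):
    return sum(values) / len(values)
```

For example, with burst times `[24, 3, 3]`, `fcfs_waiting_times` gives `[0, 24, 27]`, `sjf_waiting_times` gives `[6, 0, 3]`, and `round_robin_waiting_times` with a quantum of 4 gives `[6, 4, 7]`. Under these assumptions, running the long job first makes FCFS the worst of the three on average waiting time, while shortest job first is the best.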