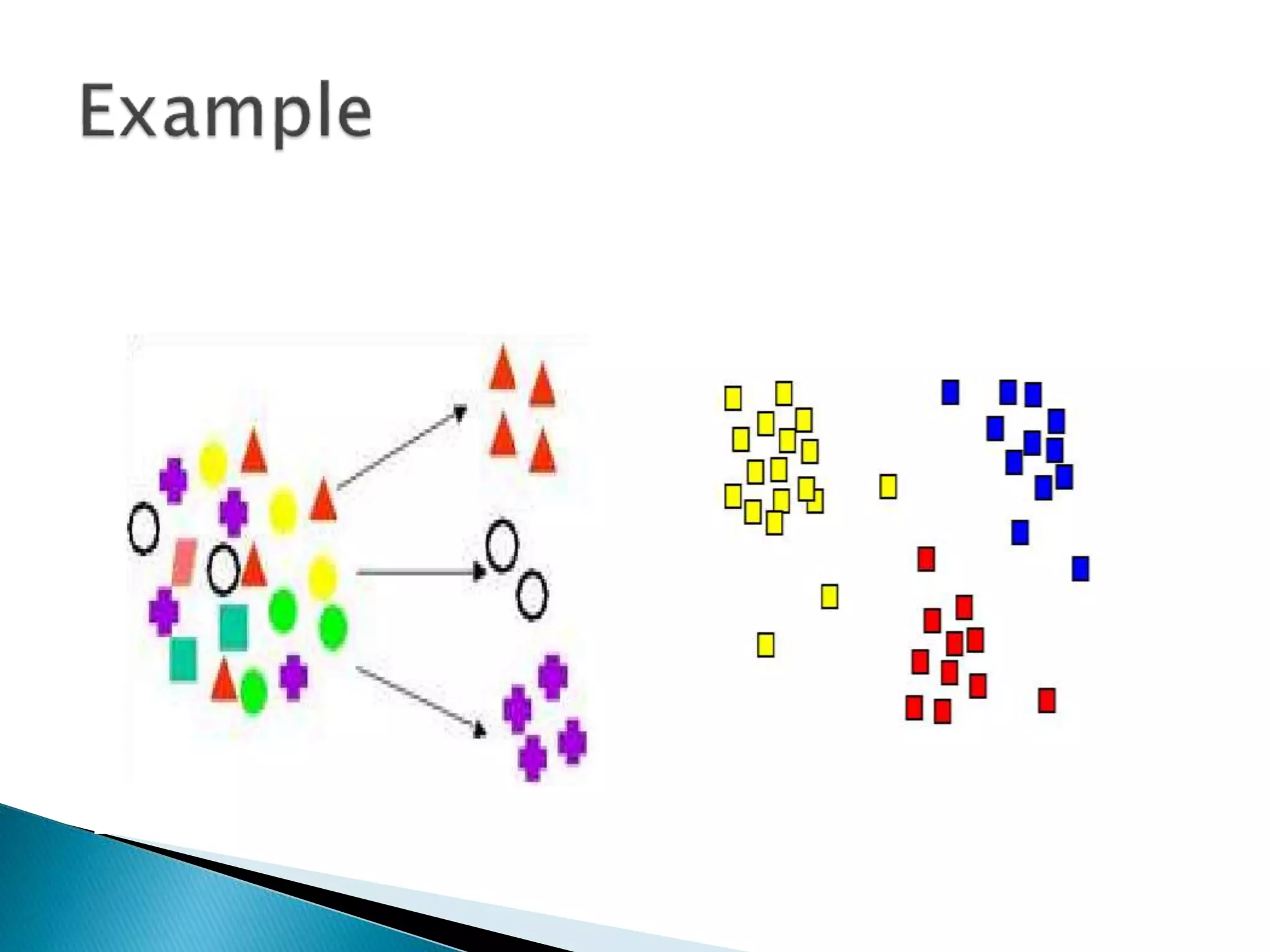

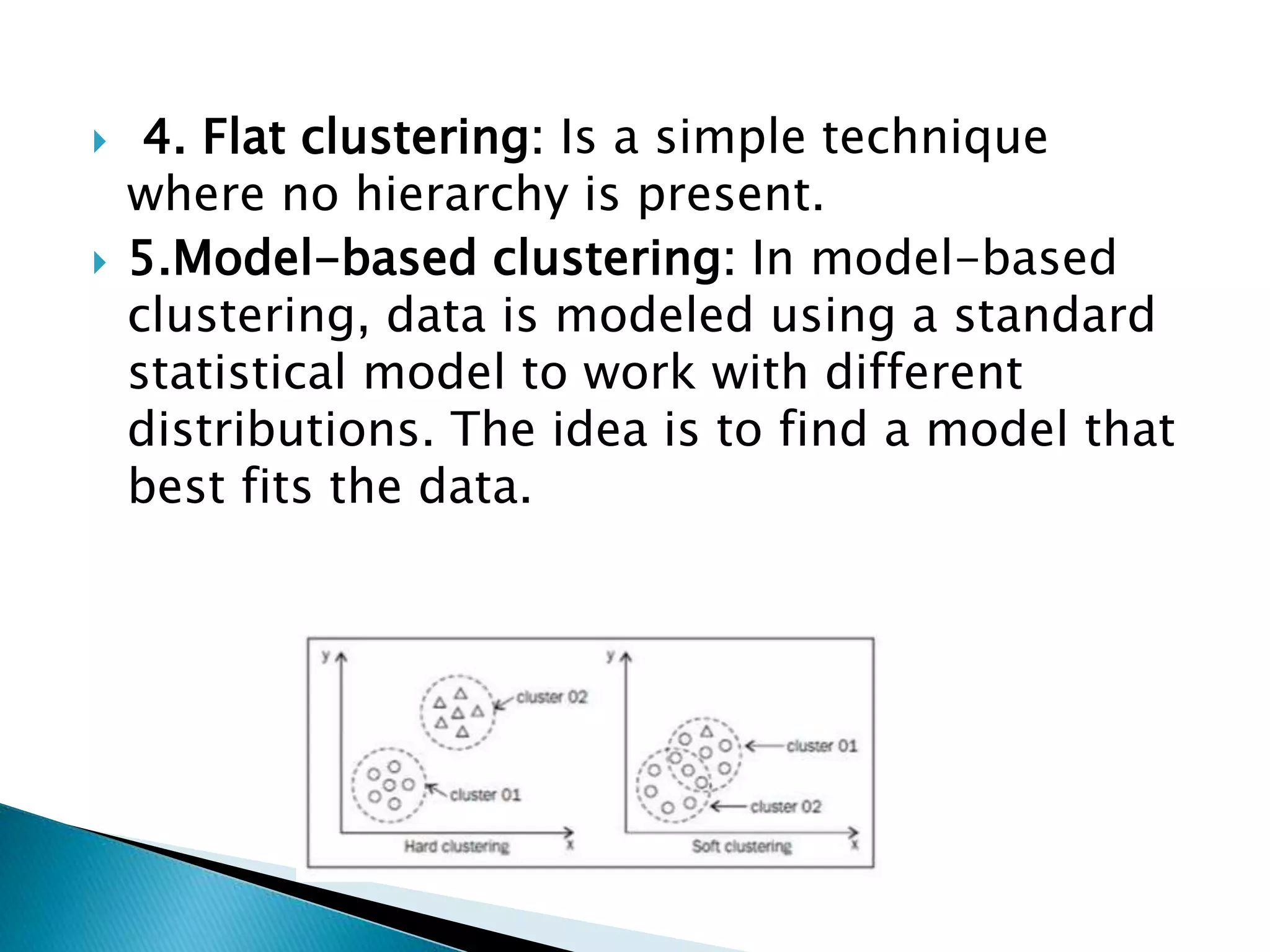

The document discusses the concept of clustering, which groups similar data objects together for analysis. It covers various types of clustering methods, their applications in fields like marketing and biology, and the importance of adaptability, scalability, and interpretability in clustering algorithms. Additionally, it highlights different approaches such as hard, soft, hierarchical, and model-based clustering, along with the challenges posed by noisy data and high dimensionality.