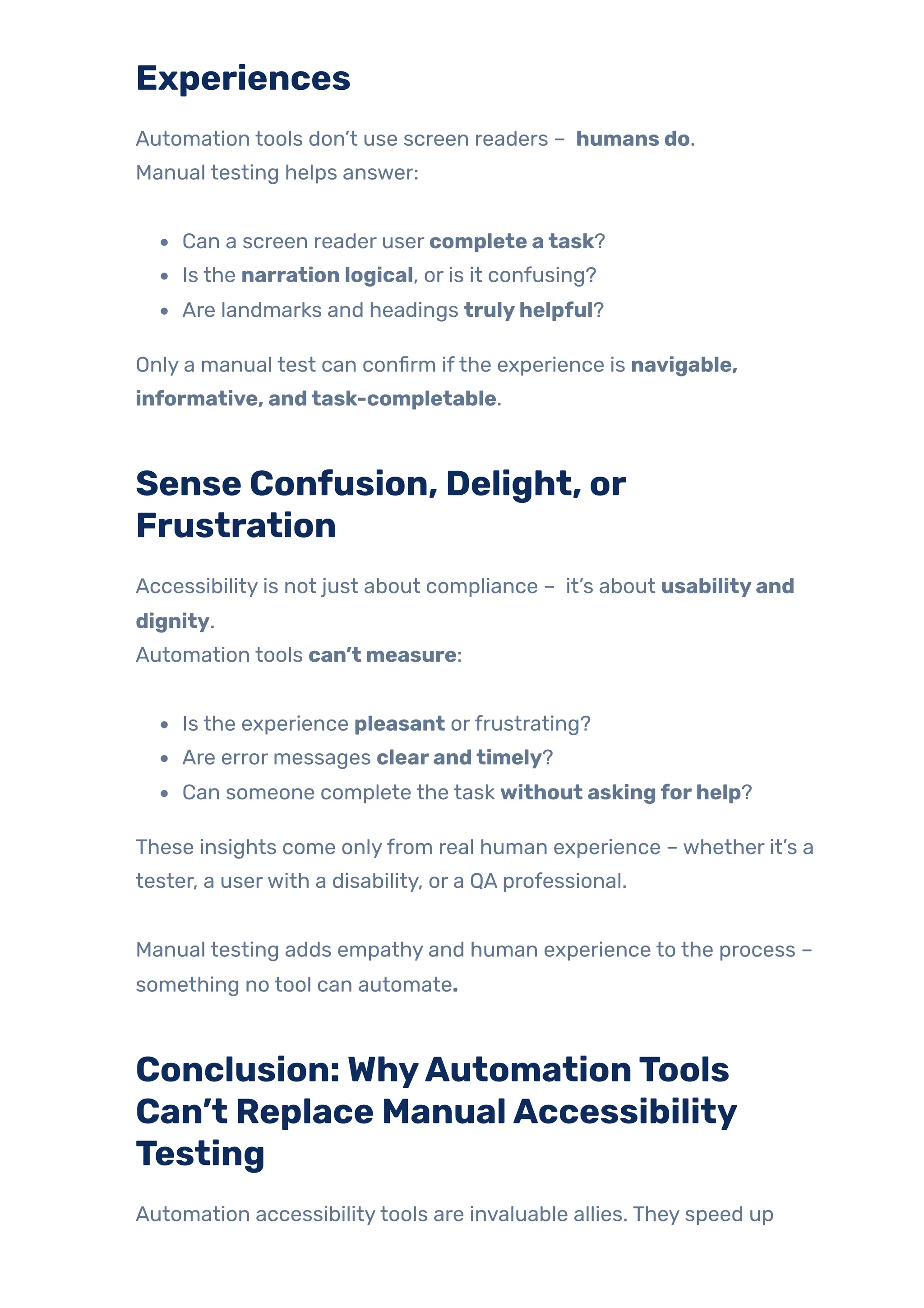

As digital accessibility becomes increasingly important, many teams use automated tools to check whether their website or application is accessible.

To meet this growing need efficiently, many QA teams and developers turn to automated accessibility testing tools. These tools promise to speed up testing, catch compliance issues early, and integrate into CI/CD pipelines. Their strength is the ability to scan large codebases quickly and flag common violations of standards such as WCAG.
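To make "flagging common violations" concrete, here is a minimal sketch of the kind of rule such tools automate: finding `<img>` elements that have no `alt` attribute. It uses only Python's standard `html.parser` module. The names `MissingAltChecker` and `find_images_missing_alt` and the sample markup are illustrative assumptions, not part of any real accessibility tool.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Hypothetical checker: collects <img> tags with no alt attribute."""

    def __init__(self):
        super().__init__()
        # One (line, column, src) tuple per offending <img>
        self.violations = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # alt="" is a valid way to mark a decorative image,
        # so only a completely missing attribute is flagged.
        if "alt" not in attr_map:
            line, col = self.getpos()
            self.violations.append((line, col, attr_map.get("src")))


def find_images_missing_alt(html):
    """Return a list of (line, column, src) for images lacking alt."""
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.violations


# Illustrative sample page (an assumption for this sketch)
sample = """<main>
<img src="logo.png" alt="Company logo">
<img src="divider.png" alt="">
<img src="chart.png">
</main>"""

for line, col, src in find_images_missing_alt(sample):
    print(f"line {line}, col {col}: <img src={src!r}> has no alt attribute")
```

A rule like this only confirms that the attribute exists. It cannot tell whether the text inside it is useful to a person.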

If you haven’t already, check out our previous blog – Everything You Need to Know About Accessibility Testing – where we debunk common misconceptions and explore why accessibility is much broader than screen readers and visual impairments.

In this blog, we will examine what automated accessibility testing tools promise, what they actually deliver in real-world testing, and why human judgment remains a key part of the process. We will also look at the most common tools in use today: what they do well, where they fall short, and how best to use them in your QA process.

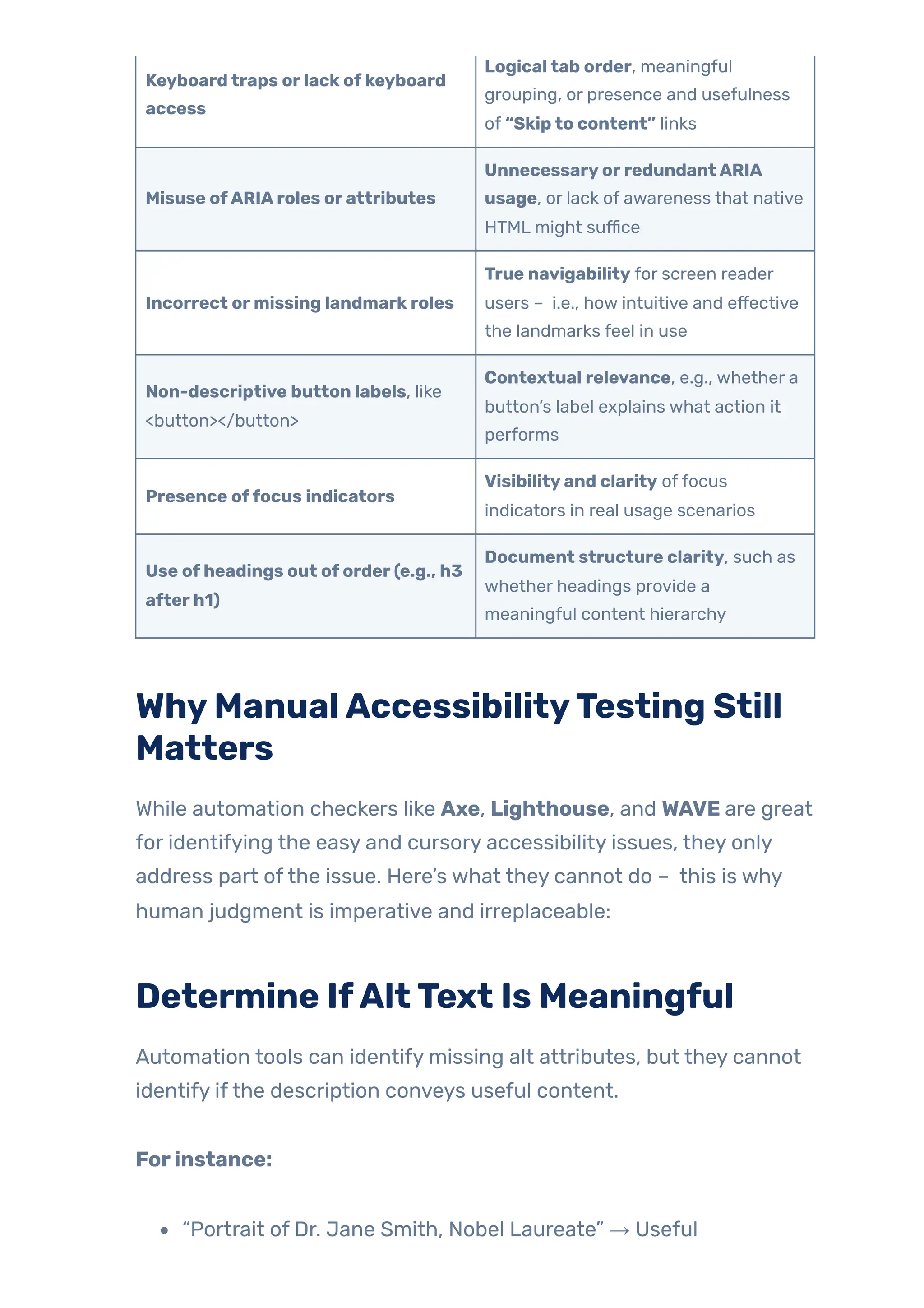

![Complete Guide to Automation Accessibility Testing slide](https://image.slidesharecdn.com/jignect-tech-complete-guide-to-automation-accessibility-testing-250904053337-cbf87712/75/Complete-Guide-to-Automation-Accessibility-Testing-21-2048.jpg)

“image123.jpg” or picture → Useless

A human being is the only one who can identify if the image was meaningful content or decorative, and whether the alt text achieves that purpose.

Assess Logical Focus Order and Navigation Flow

Tools can confirm keyboard access, but they can’t assess whether the tab order makes sense.

Humans test:

Is it possible to navigate from search to results without jumping around the page?

Is there a visible focus indicator that you can see?

Logical flow is critical for users relying on a keyboard or assistive tech, and only manual testing can fully validate it.

Understand Complex Interactions

Components like modals, dynamic dropdowns, sliders, and popovers can behave unpredictably:

Does focus stay inside the modal while it’s open?

Can you close it using only the keyboard?

Is ARIA used appropriately, or is it misapplied?

Automation tools may not fully grasp interaction nuances, especially when JavaScript behaviors are involved.

Simulate Real Screen Reader