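Convolutional neural networks (CNNs) are better suited than traditional fully connected neural networks for processing image data because they exploit the spatial structure of images: neighboring pixels are strongly correlated, and the same pattern can appear anywhere in the image. CNNs slide filters with local receptive fields and shared weights across the input, so a filter that responds to a feature at one position responds to it at every position. A CNN architecture consists of convolutional layers, which apply these filters, and pooling layers, which downsample the resulting feature maps. Local connectivity and weight sharing greatly reduce the number of parameters compared with a fully connected network, and the network learns representations of the input directly from the data with minimal hand-crafted feature engineering.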
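To make the parameter savings concrete, here is a small arithmetic sketch. It assumes a hypothetical 32×32 RGB input, a layer that produces 16 output channels at the same spatial size, and 3×3 filters; these sizes and the helper names `dense_params` and `conv_params` are illustrative choices, not values from the text.

```python
def dense_params(in_h, in_w, in_c, out_h, out_w, out_c):
    """Weights + biases of a fully connected layer that connects every
    input value to every output value (hypothetical sizes)."""
    n_in = in_h * in_w * in_c
    n_out = out_h * out_w * out_c
    return n_in * n_out + n_out


def conv_params(k_h, k_w, in_c, out_c):
    """Weights + biases of a convolutional layer: each of the out_c filters
    has a k_h x k_w x in_c kernel plus one bias, shared across all positions."""
    return k_h * k_w * in_c * out_c + out_c


if __name__ == "__main__":
    # Assumed example: 32x32x3 input -> 32x32x16 output.
    print(f"fully connected: {dense_params(32, 32, 3, 32, 32, 16):,} parameters")
    print(f"3x3 convolution: {conv_params(3, 3, 3, 16):,} parameters")
```

Under these assumptions the fully connected layer needs 50,348,032 parameters, while the convolutional layer needs 448. The convolutional count does not depend on the 32×32 spatial size at all, because the same weights are reused at every position.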

![Convolution

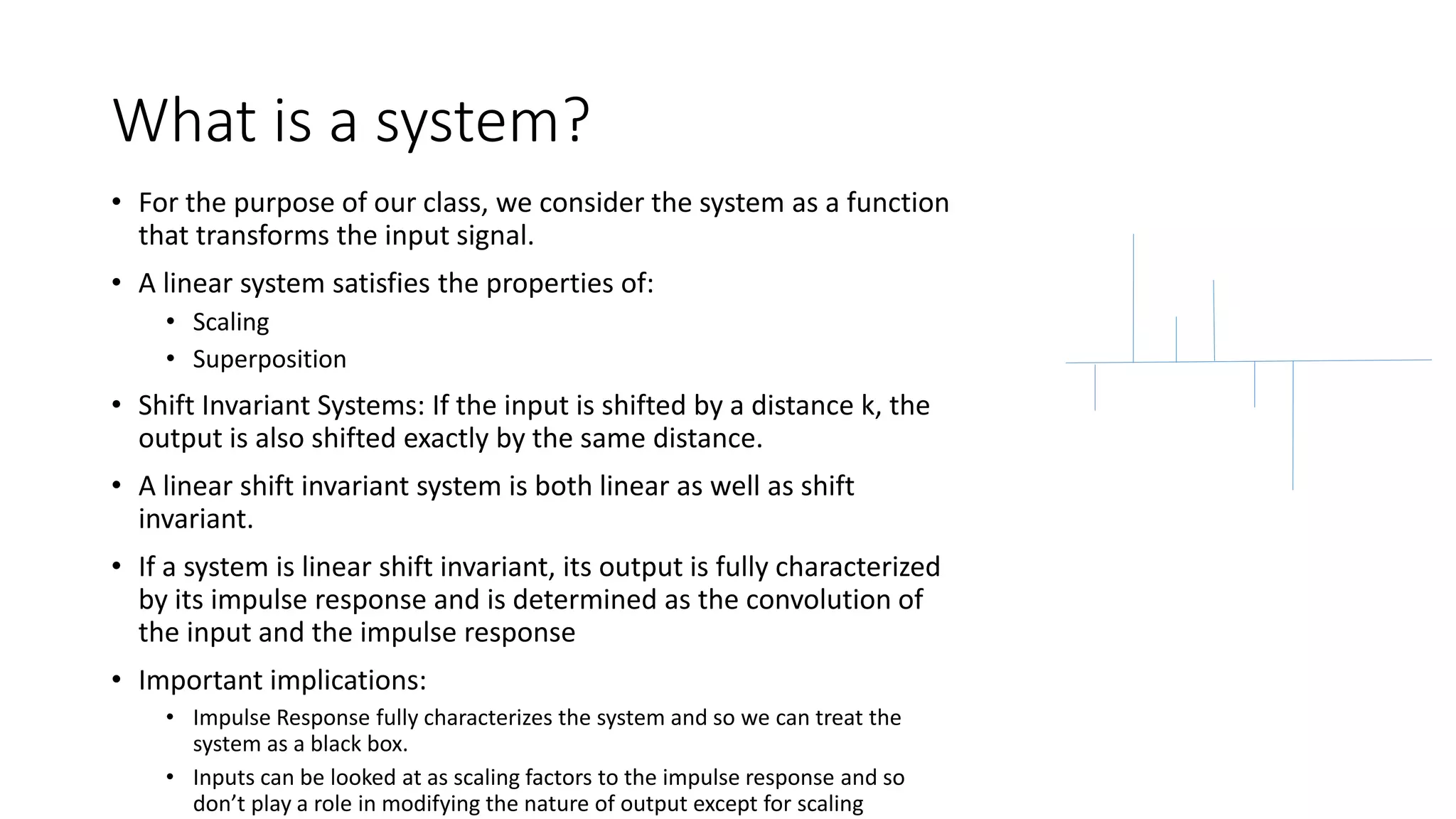

𝐶𝑜𝑛𝑣𝑜𝑙𝑢𝑡𝑖𝑜𝑛 𝑖𝑛 1 𝐷𝑖𝑚𝑒𝑛𝑠𝑖𝑜𝑛:

𝑦 𝑛 =

𝑘=−∞

𝑘=∞

𝑥 𝑘 ℎ[𝑛 − 𝑘]

𝐶𝑜𝑛𝑣𝑜𝑙𝑢𝑡𝑖𝑜𝑛 𝑖𝑛 2 𝐷𝑖𝑚𝑒𝑛𝑠𝑖𝑜𝑛𝑠:

𝑦 𝑛1, 𝑛2 =

𝑘1=−∞

𝑘1=−∞

𝑘2=−∞

𝑘2=∞

𝑥 𝑘1, 𝑘2 ℎ[ 𝑛1 − 𝑘1 , 𝑛2 − 𝑘2 ]](https://image.slidesharecdn.com/cnnoverview-160328045040/75/Convolutional-Neural-Networks-Part-1-7-2048.jpg)
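The convolution sums range over all integers. For finite signals, a common convention is to treat every sample outside the stored array as zero, which turns them into finite sums (often called "full" convolution). The sketch below implements both equations directly in plain Python under that zero-padding assumption, with `x` as the input and `h` as the filter; the function names `conv1d` and `conv2d` are illustrative, not from any particular library.

```python
def conv1d(x, h):
    """Full 1-D convolution: y[n] = sum_k x[k] * h[n - k].

    Samples outside x and h are treated as zero, so the output
    has len(x) + len(h) - 1 entries."""
    y = [0] * (len(x) + len(h) - 1)
    for n in range(len(y)):
        for k in range(len(x)):
            if 0 <= n - k < len(h):  # h[n - k] is zero outside its support
                y[n] += x[k] * h[n - k]
    return y


def conv2d(x, h):
    """Full 2-D convolution:
    y[n1][n2] = sum_k1 sum_k2 x[k1][k2] * h[n1 - k1][n2 - k2].

    x and h are lists of equal-length rows; out-of-range samples are zero."""
    xr, xc = len(x), len(x[0])
    hr, hc = len(h), len(h[0])
    y = [[0] * (xc + hc - 1) for _ in range(xr + hr - 1)]
    for n1 in range(len(y)):
        for n2 in range(len(y[0])):
            for k1 in range(xr):
                for k2 in range(xc):
                    i, j = n1 - k1, n2 - k2  # index into the flipped filter
                    if 0 <= i < hr and 0 <= j < hc:
                        y[n1][n2] += x[k1][k2] * h[i][j]
    return y


if __name__ == "__main__":
    print(conv1d([1, 2, 3], [1, 1]))                  # [1, 3, 5, 3]
    print(conv2d([[1, 2], [3, 4]], [[0, 1], [1, 0]]))  # [[0, 1, 2], [1, 5, 4], [3, 4, 0]]
```

In a CNN the filter `h` is learned rather than fixed. Many deep learning libraries actually compute cross-correlation (the same sliding sum without flipping the kernel) while still calling it convolution; because the kernel is learned, it can simply absorb the flip.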