The document discusses the use of multiple GPUs in CUDA programming, highlighting the advantages of zero-copy memory which allows direct access from GPU to host memory. It explains how to implement this in a vector dot product example and compares performance between standard memory and zero-copy memory allocations. Additionally, it provides important considerations for optimizing performance based on whether the GPU is integrated or discrete.

![emory

215

ero

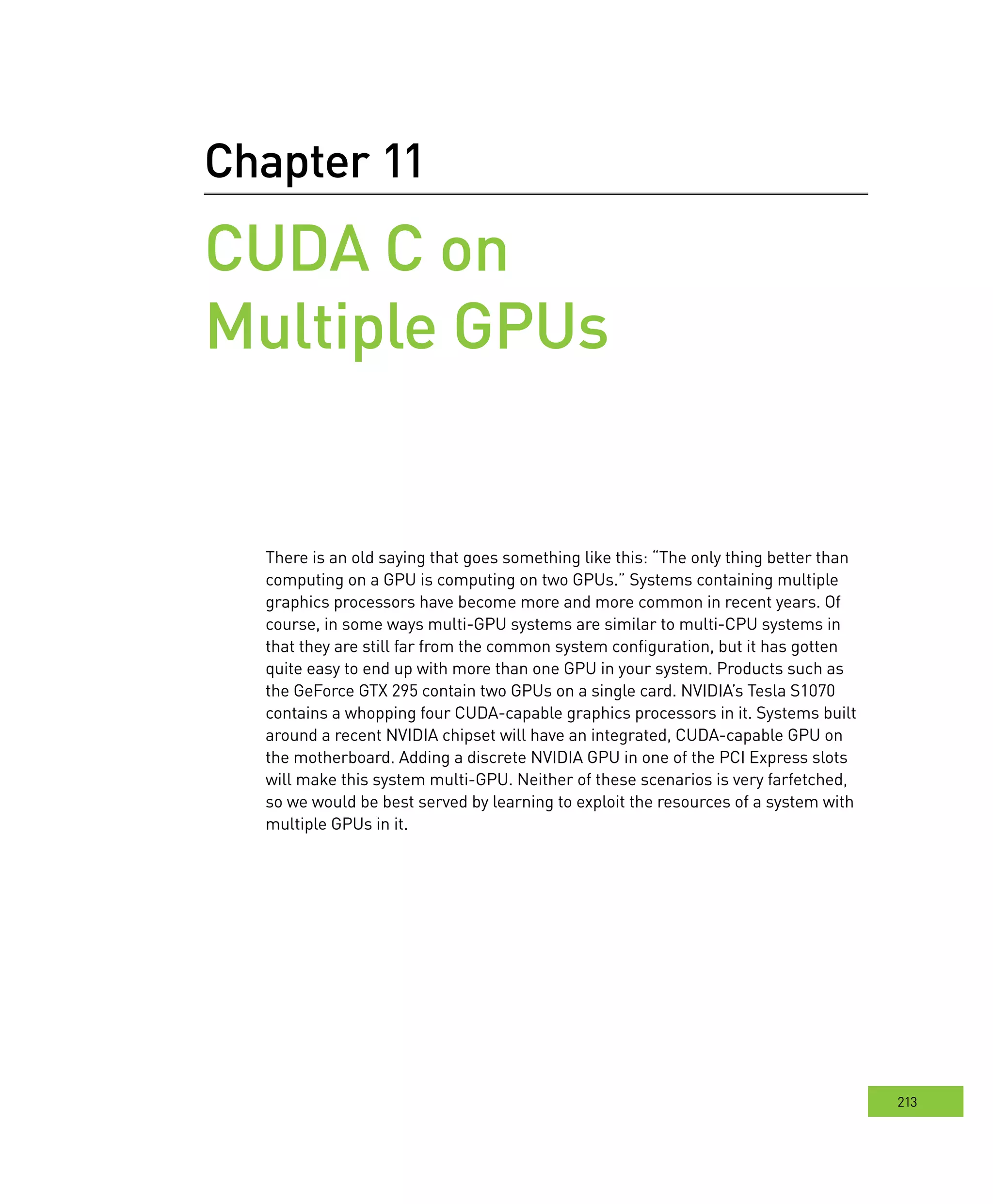

In this version, we’ll skip the explicit copies of our input up to the GPU and instead

use zero-copy memory to access the data directly from the GPU. This version of

dot product will be set up exactly like our pinned memory test. Specifically, we’ll

write two functions; one will perform the test with standard host memory, and

the other will finish the reduction on the GPU using zero-copy memory to hold

the input and output buffers. First let’s take a look at the standard host memory

version of the dot product. We start in the usual fashion by creating timing events,

allocating input and output buffers, and filling our input buffers with data.

float malloc_test( int size ) {

cudaEvent_t start, stop;

float *a, *b, c, *partial_c;

float *dev_a, *dev_b, *dev_partial_c;

float elapsedTime;

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

// allocate memory on the CPU side

a = (float*)malloc( size*sizeof(float) );

b = (float*)malloc( size*sizeof(float) );

partial_c = (float*)malloc( blocksPerGrid*sizeof(float) );

// allocate the memory on the GPU

HANDLE_ERROR( cudaMalloc( (void**)&dev_a,

size*sizeof(float) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b,

size*sizeof(float) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_partial_c,

blocksPerGrid*sizeof(float) ) );

// fill in the host memory with data

for (int i=0; i<size; i++) {

a[i] = i;

b[i] = i*2;

}](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-3-2048.jpg)

![cudA c on multIPle GPus

216

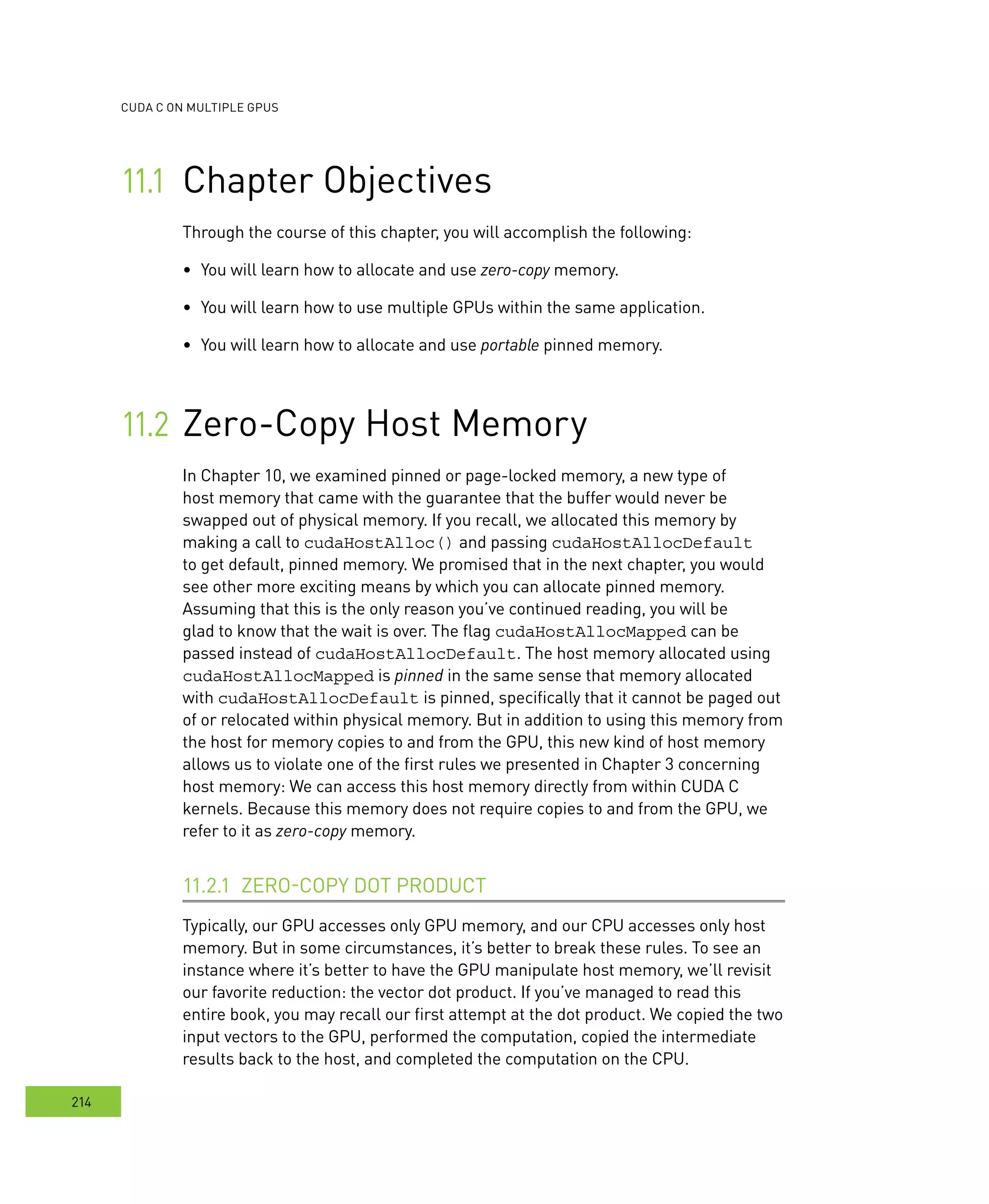

After the allocations and data creation, we can begin the computations. We start

our timer, copy our inputs to the GPU, execute the dot product kernel, and copy

the partial results back to the host.

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

// copy the arrays 'a' and 'b' to the GPU

HANDLE_ERROR( cudaMemcpy( dev_a, a, size*sizeof(float),

cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMemcpy( dev_b, b, size*sizeof(float),

cudaMemcpyHostToDevice ) );

dot<<<blocksPerGrid,threadsPerBlock>>>( size, dev_a, dev_b,

dev_partial_c );

// copy the array 'c' back from the GPU to the CPU

HANDLE_ERROR( cudaMemcpy( partial_c, dev_partial_c,

blocksPerGrid*sizeof(float),

cudaMemcpyDeviceToHost ) );

Now we need to finish up our computations on the CPU as we did in Chapter 5.

Before doing this, we’ll stop our event timer because it only measures work that’s

being performed on the GPU:

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

Finally, we sum our partial results and free our input and output buffers.

// finish up on the CPU side

c = 0;

for (int i=0; i<blocksPerGrid; i++) {

c += partial_c[i];

}](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-4-2048.jpg)

![cudA c on multIPle GPus

218

HANDLE_ERROR( cudaHostAlloc( (void**)&b,

size*sizeof(float),

cudaHostAllocWriteCombined |

cudaHostAllocMapped ) );

HANDLE_ERROR( cudaHostAlloc( (void**)&partial_c,

blocksPerGrid*sizeof(float),

cudaHostAllocMapped ) );

// fill in the host memory with data

for (int i=0; i<size; i++) {

a[i] = i;

b[i] = i*2;

}

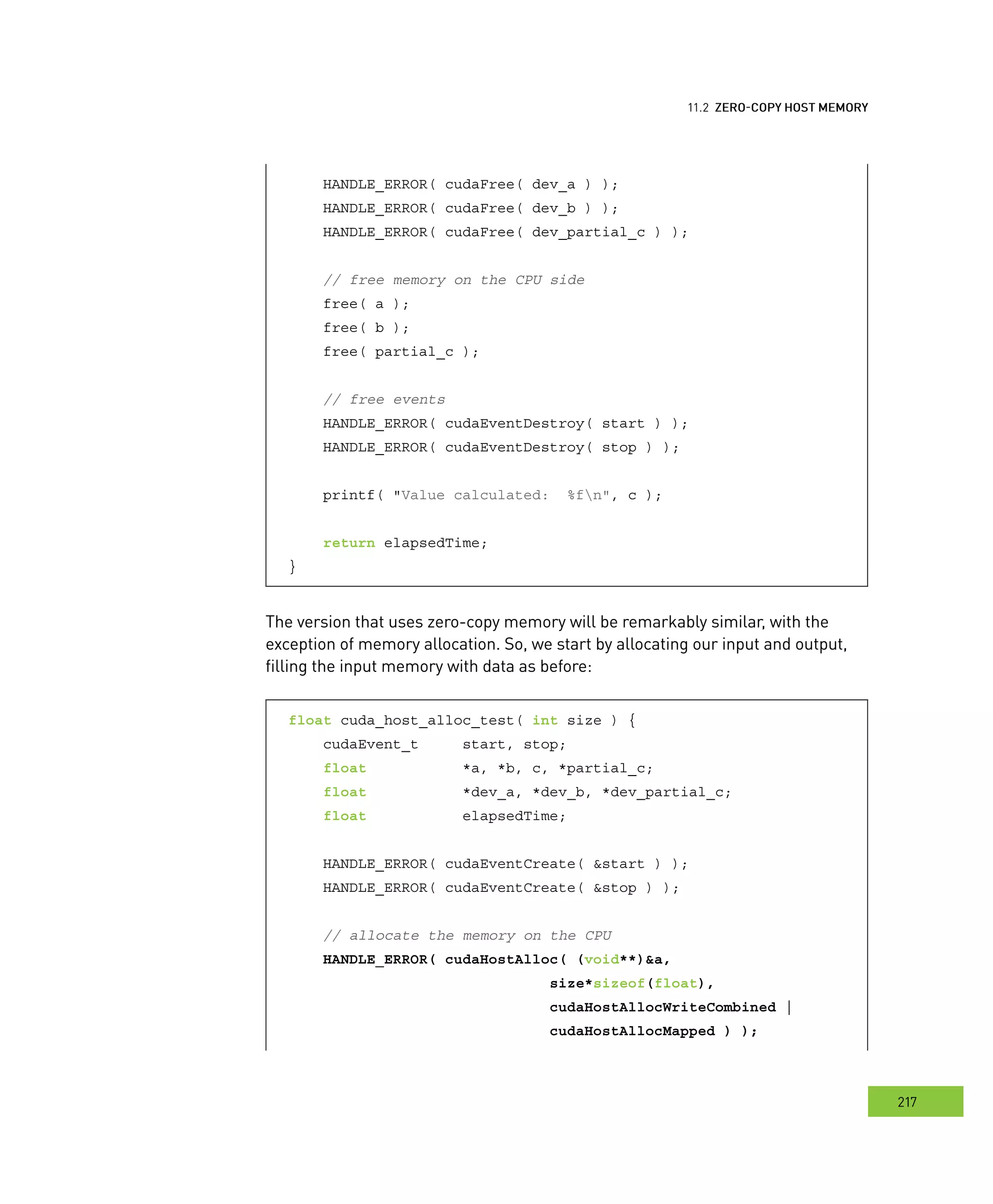

As with Chapter 10, we see cudaHostAlloc() in action again, although we’re

now using the flags argument to specify more than just default behavior. The

flag cudaHostAllocMapped tells the runtime that we intend to access this

buffer from the GPU. In other words, this flag is what makes our buffer zero-copy.

For the two input buffers, we specify the flag cudaHostAllocWriteCombined.

This flag indicates that the runtime should allocate the buffer as write-combined

with respect to the CPU cache. This flag will not change functionality in our appli-

cation but represents an important performance enhancement for buffers that

will be read only by the GPU. However, write-combined memory can be extremely

inefficient in scenarios where the CPU also needs to perform reads from the

buffer, so you will have to consider your application’s likely access patterns when

making this decision.

Since we’ve allocated our host memory with the flag cudaHostAllocMapped,

the buffers can be accessed from the GPU. However, the GPU has a different

virtual memory space than the CPU, so the buffers will have different addresses

when they’re accessed on the GPU as compared to the CPU. The call to

cudaHostAlloc() returns the CPU pointer for the memory, so we need to call

cudaHostGetDevicePointer() in order to get a valid GPU pointer for the

memory. These pointers will be passed to the kernel and then used by the GPU to

read from and write to our host allocations:](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-6-2048.jpg)

![emory

219

ero

HANDLE_ERROR( cudaHostGetDevicePointer( &dev_a, a, 0 ) );

HANDLE_ERROR( cudaHostGetDevicePointer( &dev_b, b, 0 ) );

HANDLE_ERROR( cudaHostGetDevicePointer( &dev_partial_c,

partial_c, 0 ) );

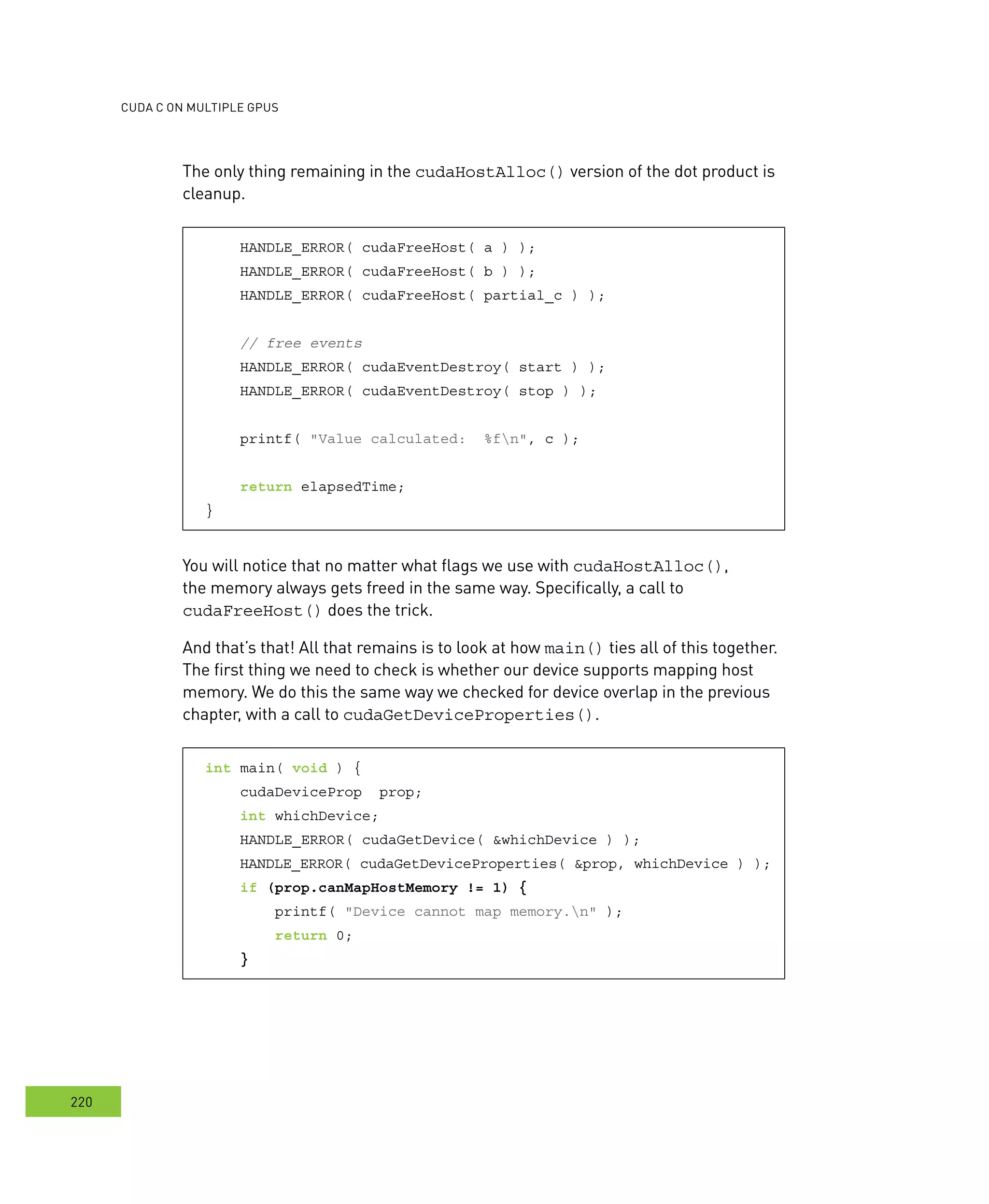

With valid device pointers in hand, we’re ready to start our timer and launch our

kernel.

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

dot<<<blocksPerGrid,threadsPerBlock>>>( size, dev_a, dev_b,

dev_partial_c );

HANDLE_ERROR( cudaThreadSynchronize() );

Even though the pointers dev_a, dev_b, and dev_partial_c all reside on

the host, they will look to our kernel as if they are GPU memory, thanks to our

calls to cudaHostGetDevicePointer(). Since our partial results are already

on the host, we don’t need to bother with a cudaMemcpy() from the device.

However, you will notice that we’re synchronizing the CPU with the GPU by calling

cudaThreadSynchronize(). The contents of zero-copy memory are undefined

during the execution of a kernel that potentially makes changes to its contents.

After synchronizing, we’re sure that the kernel has completed and that our zero-

copy buffer contains the results so we can stop our timer and finish the computa-

tion on the CPU as we did before.

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

// finish up on the CPU side

c = 0;

for (int i=0; i<blocksPerGrid; i++) {

c += partial_c[i];

}](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-7-2048.jpg)

![emory

221

ero

Assuming that our device supports zero-copy memory, we place the runtime

into a state where it will be able to allocate zero-copy buffers for us. We accom-

plish this by a call to cudaSetDeviceFlags() and by passing the flag

cudaDeviceMapHost to indicate that we want the device to be allowed to map

host memory:

HANDLE_ERROR( cudaSetDeviceFlags( cudaDeviceMapHost ) );

That’s really all there is to main(). We run our two tests, display the elapsed

time, and exit the application:

float elapsedTime = malloc_test( N );

printf( "Time using cudaMalloc: %3.1f msn",

elapsedTime );

elapsedTime = cuda_host_alloc_test( N );

printf( "Time using cudaHostAlloc: %3.1f msn",

elapsedTime );

}

The kernel itself is unchanged from Chapter 5, but for the sake of completeness,

here it is in its entirety:

#define imin(a,b) (a<b?a:b)

const int N = 33 * 1024 * 1024;

const int threadsPerBlock = 256;

const int blocksPerGrid =

imin( 32, (N+threadsPerBlock-1) / threadsPerBlock );

__global__ void dot( int size, float *a, float *b, float *c ) {

__shared__ float cache[threadsPerBlock];

int tid = threadIdx.x + blockIdx.x * blockDim.x;

int cacheIndex = threadIdx.x;](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-9-2048.jpg)

![cudA c on multIPle GPus

222

float temp = 0;

while (tid < size) {

temp += a[tid] * b[tid];

tid += blockDim.x * gridDim.x;

}

// set the cache values

cache[cacheIndex] = temp;

// synchronize threads in this block

__syncthreads();

// for reductions, threadsPerBlock must be a power of 2

// because of the following code

int i = blockDim.x/2;

while (i != 0) {

if (cacheIndex < i)

cache[cacheIndex] += cache[cacheIndex + i];

__syncthreads();

i /= 2;

}

if (cacheIndex == 0)

c[blockIdx.x] = cache[0];

}

erformance11.2.2

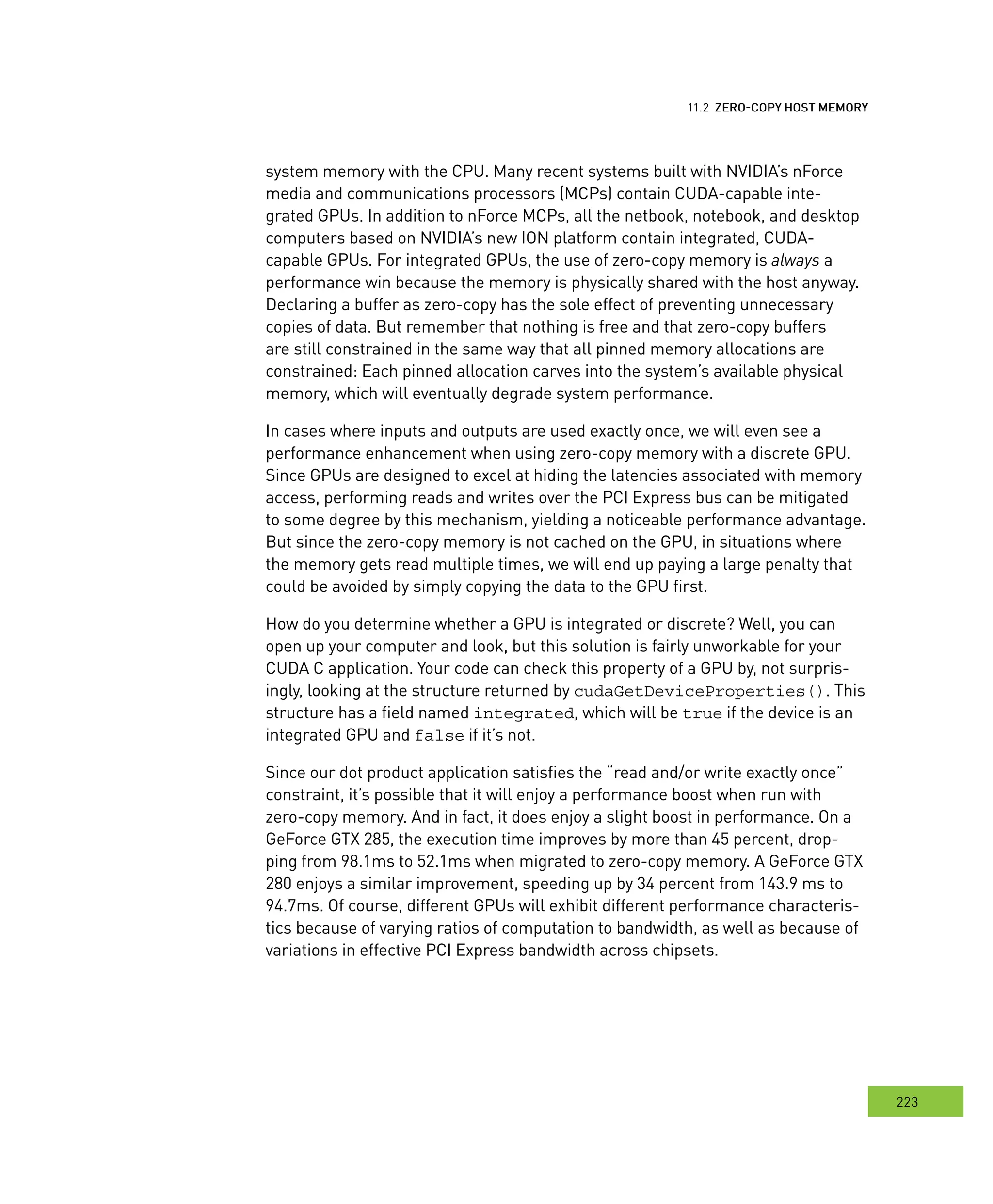

What should we expect to gain from using zero-copy memory? The answer to

this question is different for discrete GPUs and integrated GPUs. Discrete GPUs

are graphics processors that have their own dedicated DRAMs and typically sit

on separate circuit boards from the CPU. For example, if you have ever installed

a graphics card into your desktop, this GPU is a discrete GPU. Integrated GPUs

are graphics processors built into a system’s chipset and usually share regular](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-10-2048.jpg)

![usInG multIPle GPus

225

s

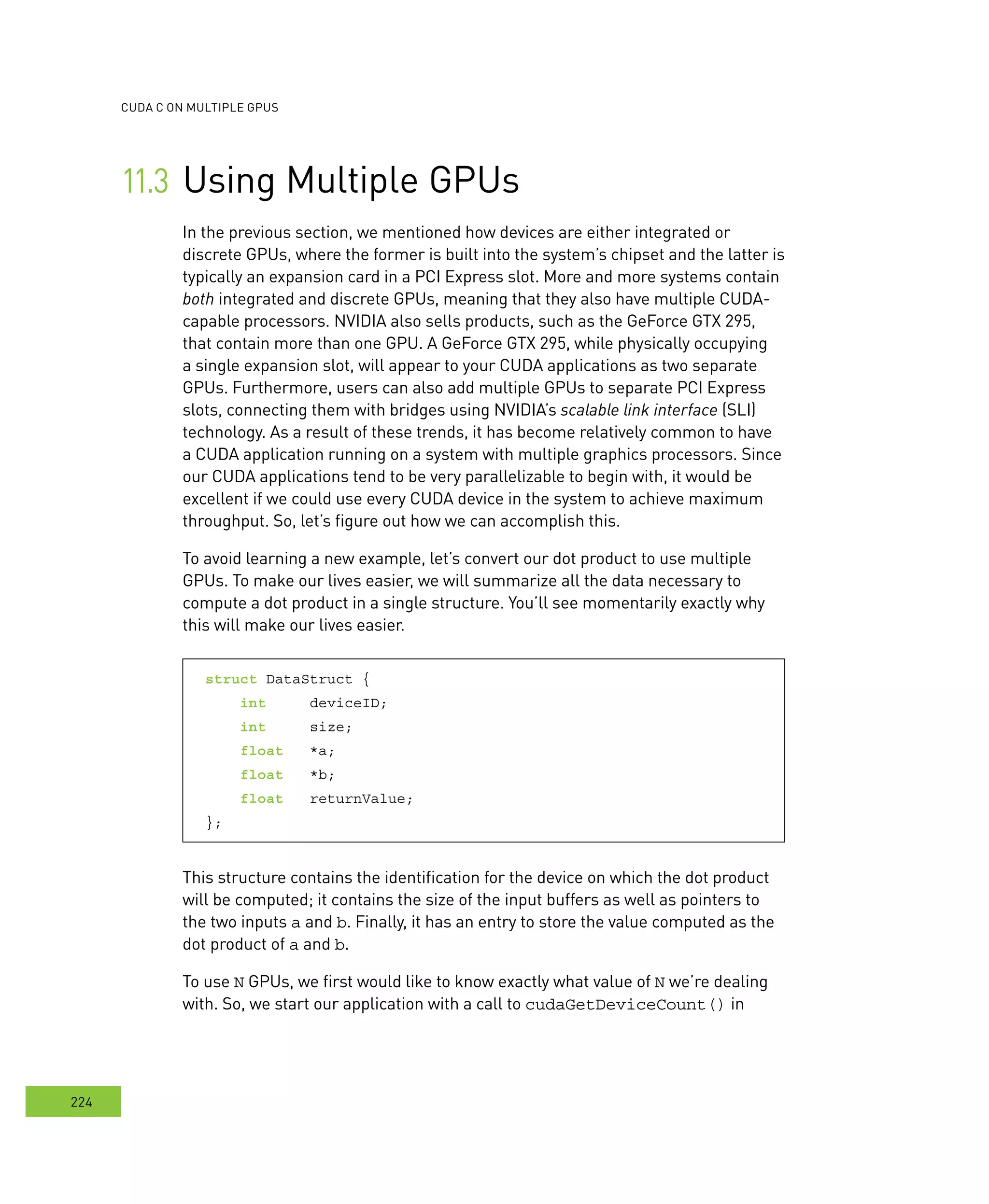

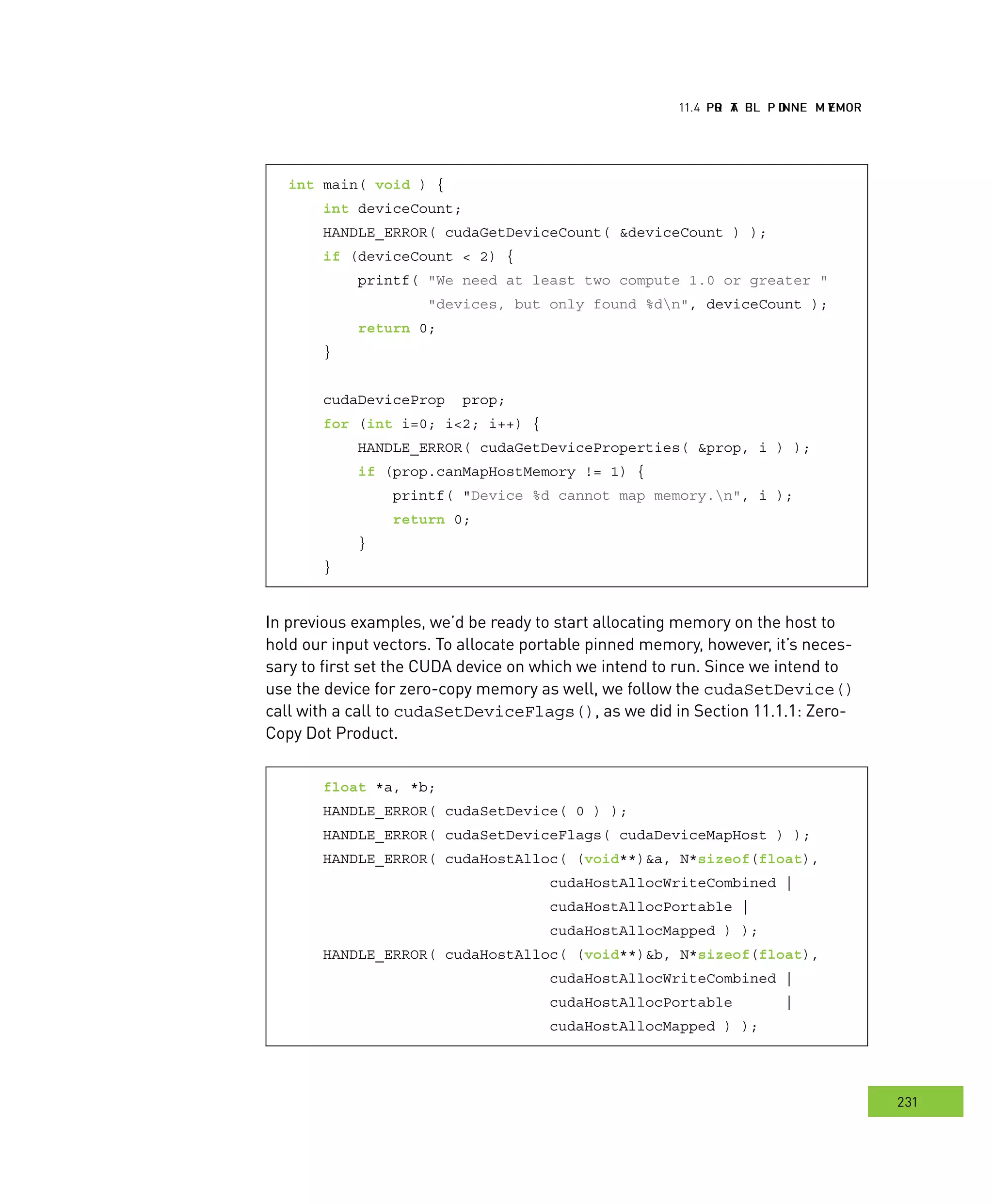

order to determine how many CUDA-capable processors have been installed in

our system.

int main( void ) {

int deviceCount;

HANDLE_ERROR( cudaGetDeviceCount( &deviceCount ) );

if (deviceCount < 2) {

printf( "We need at least two compute 1.0 or greater "

"devices, but only found %dn", deviceCount );

return 0;

}

This example is designed to show multi-GPU usage, so you’ll notice that we

simply exit if the system has only one CUDA device (not that there’s anything

wrong with that). This is not encouraged as a best practice for obvious reasons.

To keep things as simple as possible, we’ll allocate standard host memory for our

inputs and fill them with data exactly how we’ve done in the past.

float *a = (float*)malloc( sizeof(float) * N );

HANDLE_NULL( a );

float *b = (float*)malloc( sizeof(float) * N );

HANDLE_NULL( b );

// fill in the host memory with data

for (int i=0; i<N; i++) {

a[i] = i;

b[i] = i*2;

}

We’re now ready to dive into the multi-GPU code. The trick to using multiple GPUs

with the CUDA runtime API is realizing that each GPU needs to be controlled

by a different CPU thread. Since we have used only a single GPU before, we

haven’t needed to worry about this. We have moved a lot of the annoyance of

multithreaded code to our file of auxiliary code, book.h. With this code tucked

away, all we need to do is fill a structure with data necessary to perform the](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-13-2048.jpg)

![cudA c on multIPle GPus

226

computations. Although the system could have any number of GPUs greater than

one, we will use only two of them for clarity:

DataStruct data[2];

data[0].deviceID = 0;

data[0].size = N/2;

data[0].a = a;

data[0].b = b;

data[1].deviceID = 1;

data[1].size = N/2;

data[1].a = a + N/2;

data[1].b = b + N/2;

To proceed, we pass one of the DataStruct variables to a utility function we’ve

named start_thread(). We also pass start_thread() a pointer to a func-

tion to be called by the newly created thread; this example’s thread function is

called routine(). The function start_thread() will create a new thread that

then calls the specified function, passing the DataStruct to this function. The

other call to routine() gets made from the default application thread (so we’ve

created only one additional thread).

CUTThread thread = start_thread( routine, &(data[0]) );

routine( &(data[1]) );

Before we proceed, we have the main application thread wait for the other thread

to finish by calling end_thread().

end_thread( thread );

Since both threads have completed at this point in main(), it’s safe to clean up

and display the result.](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-14-2048.jpg)

![usInG multIPle GPus

227

s

free( a );

free( b );

printf( "Value calculated: %fn",

data[0].returnValue + data[1].returnValue );

return 0;

}

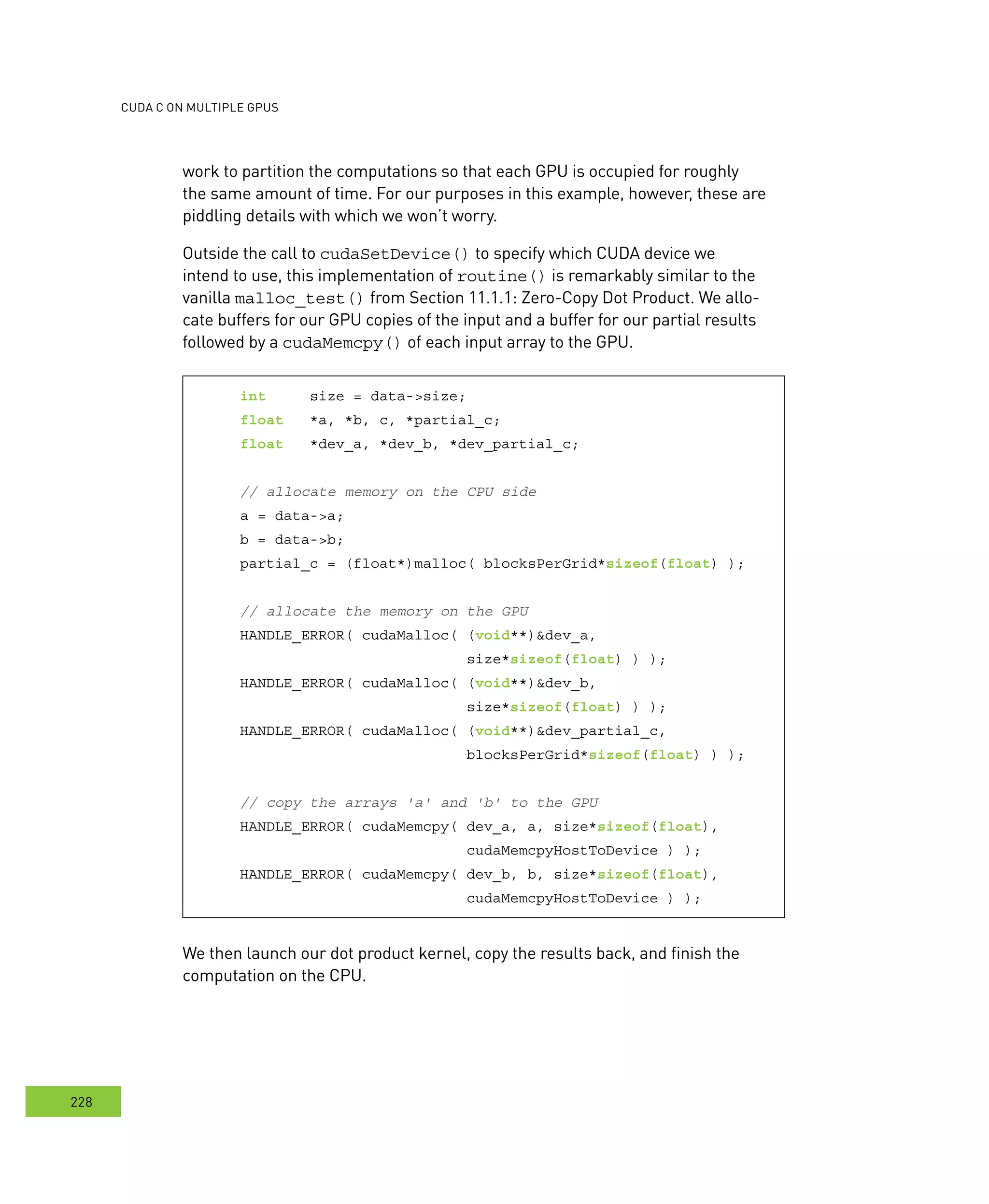

Notice that we sum the results computed by each thread. This is the last step

in our dot product reduction. In another algorithm, this combination of multiple

results may involve other steps. In fact, in some applications, the two GPUs may

be executing completely different code on completely different data sets. For

simplicity’s sake, this is not the case in our dot product example.

Since the dot product routine is identical to the other versions you’ve seen, we’ll

omit it from this section. However, the contents of routine() may be of interest.

We declare routine() as taking and returning a void* so that you can reuse

the start_thread() code with arbitrary implementations of a thread function.

Although we’d love to take credit for this idea, it’s fairly standard procedure for

callback functions in C:

void* routine( void *pvoidData ) {

DataStruct *data = (DataStruct*)pvoidData;

HANDLE_ERROR( cudaSetDevice( data->deviceID ) );

Each thread calls cudaSetDevice(), and each passes a different ID to this

function. As a result, we know each thread will be manipulating a different GPU.

These GPUs may have identical performance, as with the dual-GPU GeForce

GTX 295, or they may be different GPUs as would be the case in a system that

has both an integrated GPU and a discrete GPU. These details are not important

to our application, though they might be of interest to you. Particularly, these

details prove useful if you depend on a certain minimum compute capability to

launch your kernels or if you have a serious desire to load balance your applica-

tion across the system’s GPUs. If the GPUs are different, you will need to do some](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-15-2048.jpg)

![usInG multIPle GPus

229

s

dot<<<blocksPerGrid,threadsPerBlock>>>( size, dev_a, dev_b,

dev_partial_c );

// copy the array 'c' back from the GPU to the CPU

HANDLE_ERROR( cudaMemcpy( partial_c, dev_partial_c,

blocksPerGrid*sizeof(float),

cudaMemcpyDeviceToHost ) );

// finish up on the CPU side

c = 0;

for (int i=0; i<blocksPerGrid; i++) {

c += partial_c[i];

}

As usual, we clean up our GPU buffers and return the dot product we’ve

computed in the returnValue field of our DataStruct.

HANDLE_ERROR( cudaFree( dev_a ) );

HANDLE_ERROR( cudaFree( dev_b ) );

HANDLE_ERROR( cudaFree( dev_partial_c ) );

// free memory on the CPU side

free( partial_c );

data->returnValue = c;

return 0;

}

So when we get down to it, outside of the host thread management issue, using

multiple GPUs is not too much tougher than using a single GPU. Using our helper

code to create a thread and execute a function on that thread, this becomes

significantly more manageable. If you have your own thread libraries, you should

feel free to use them in your own applications. You just need to remember that

each GPU gets its own thread, and everything else is cream cheese.](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-17-2048.jpg)

![cudA c on multIPle GPus

232

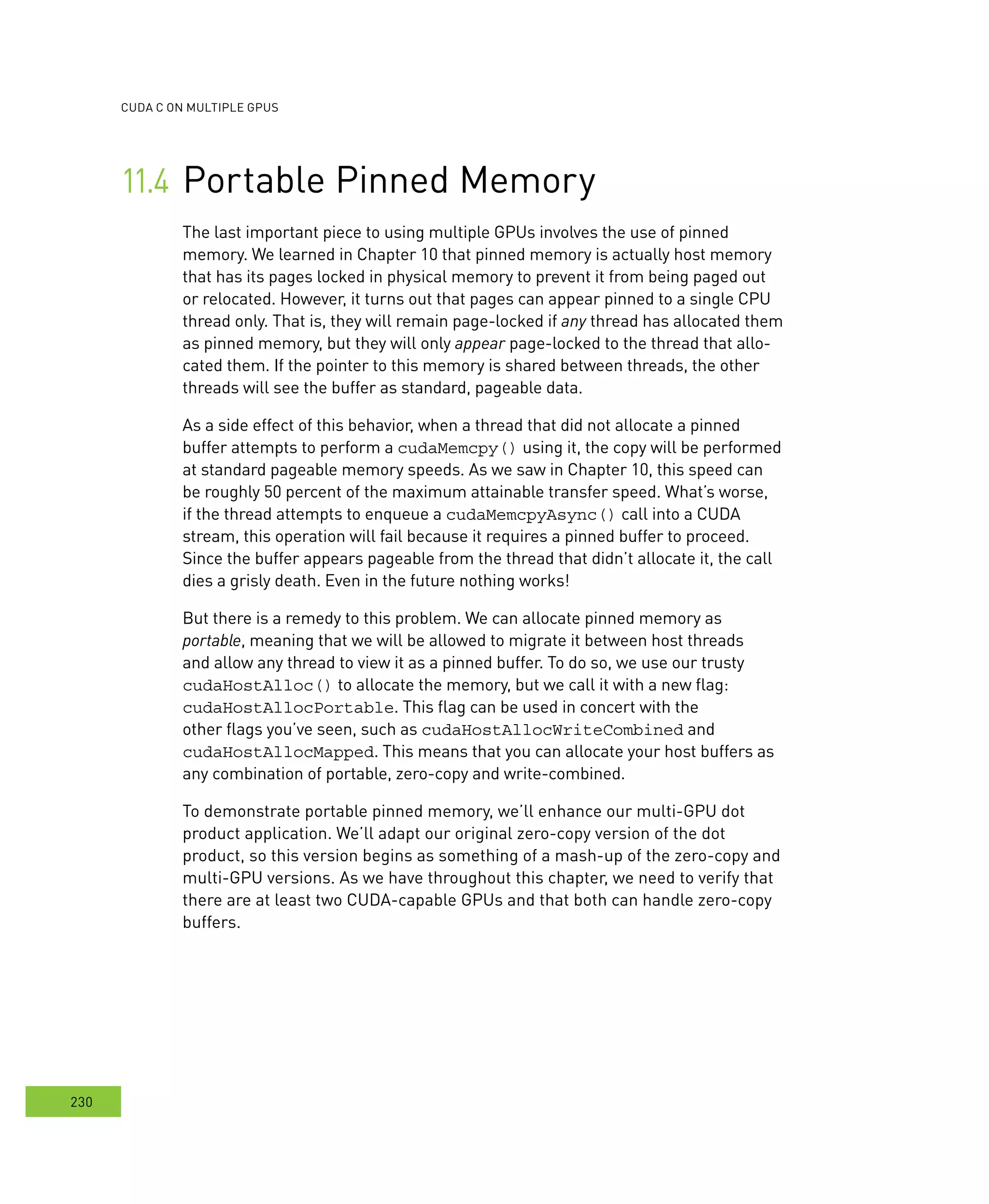

Earlier in this chapter, we called cudaSetDevice() but not until we had already

allocated our memory and created our threads. One of the requirements of allo-

cating page-locked memory with cudaHostAlloc(), though, is that we have

initialized the device first by calling cudaSetDevice(). You will also notice that

we pass our newly learned flag, cudaHostAllocPortable, to both allocations.

Since these were allocated after calling cudaSetDevice(0), only CUDA device

zero would see these buffers as pinned memory if we had not specified that they

were to be portable allocations.

We continue the application as we have in the past, generating data for our input

vectors and preparing our DataStruct structures as we did in the multi-GPU

example in Section 11.2: Zero-Copy Performance.

// fill in the host memory with data

for (int i=0; i<N; i++) {

a[i] = i;

b[i] = i*2;

}

// prepare for multithread

DataStruct data[2];

data[0].deviceID = 0;

data[0].offset = 0;

data[0].size = N/2;

data[0].a = a;

data[0].b = b;

data[1].deviceID = 1;

data[1].offset = N/2;

data[1].size = N/2;

data[1].a = a;

data[1].b = b;

We can then create our secondary thread and call routine() to begin

computing on each device.](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-20-2048.jpg)

![PPPRRR AAA EEE PPP DDD MMM emory

233

emory

CUTThread thread = start_thread( routine, &(data[1]) );

routine( &(data[0]) );

end_thread( thread );

Because our host memory was allocated by the CUDA runtime, we use

cudaFreeHost() to free it. Other than no longer calling free(), we have seen

all there is to see in main().

// free memory on the CPU side

HANDLE_ERROR( cudaFreeHost( a ) );

HANDLE_ERROR( cudaFreeHost( b ) );

printf( "Value calculated: %fn",

data[0].returnValue + data[1].returnValue );

return 0;

}

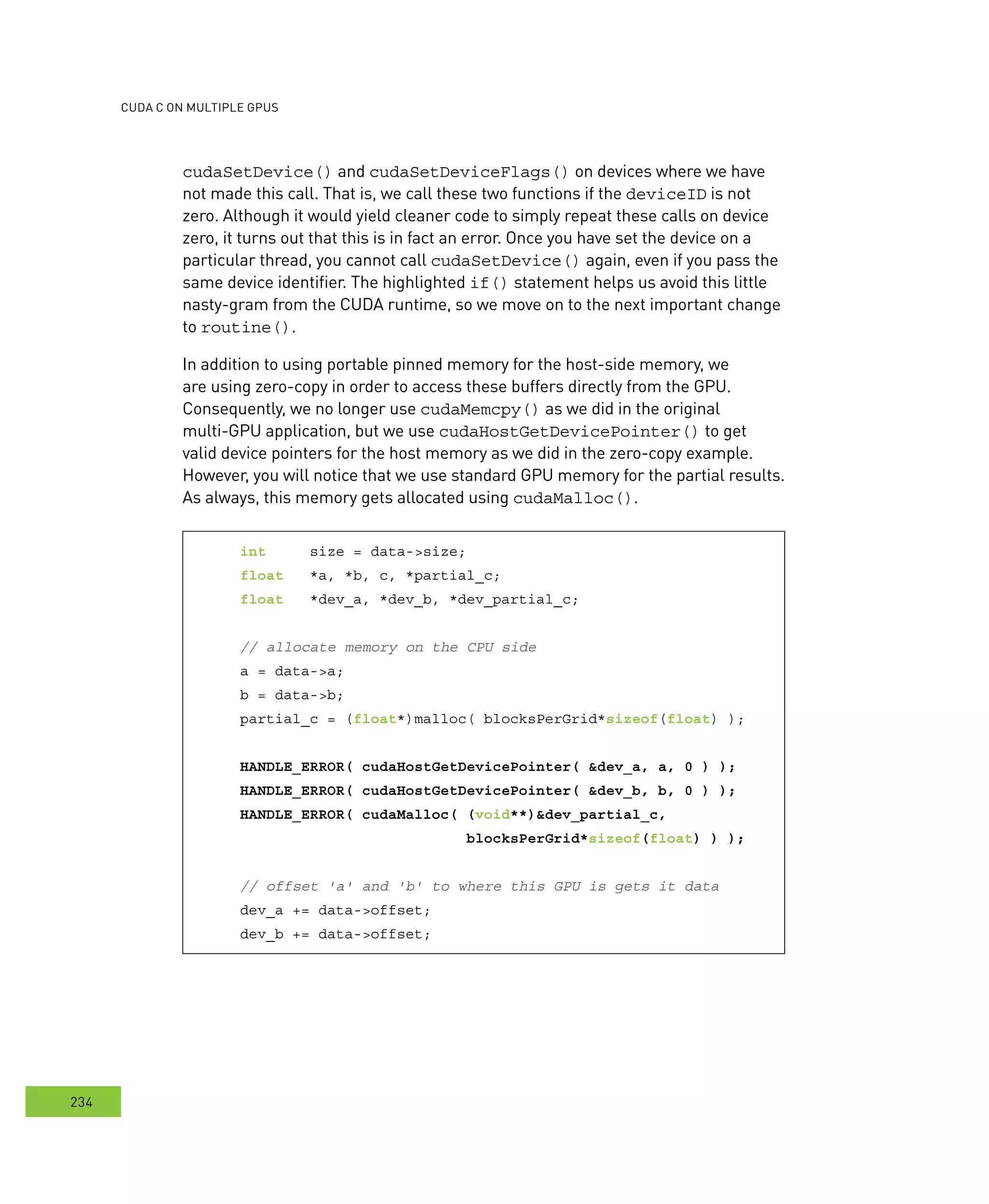

To support portable pinned memory and zero-copy memory in our multi-GPU

application, we need to make two notable changes in the code for routine().

The first is a bit subtle, and in no way should this have been obvious.

void* routine( void *pvoidData ) {

DataStruct *data = (DataStruct*)pvoidData;

if (data->deviceID != 0) {

HANDLE_ERROR( cudaSetDevice( data->deviceID ) );

HANDLE_ERROR( cudaSetDeviceFlags( cudaDeviceMapHost ) );

}

You may recall in our multi-GPU version of this code, we need a call to

cudaSetDevice() in routine() in order to ensure that each participating

thread controls a different GPU. On the other hand, in this example we have

already made a call to cudaSetDevice() from the main thread. We did so in

order to allocate pinned memory in main(). As a result, we only want to call](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-21-2048.jpg)

![eview

235

11.5 CCC PPPRRR RRR eview

At this point, we’re pretty much ready to go, so we launch our kernel and copy our

results back from the GPU.

dot<<<blocksPerGrid,threadsPerBlock>>>( size, dev_a, dev_b,

dev_partial_c );

// copy the array 'c' back from the GPU to the CPU

HANDLE_ERROR( cudaMemcpy( partial_c, dev_partial_c,

blocksPerGrid*sizeof(float),

cudaMemcpyDeviceToHost ) );

We conclude as we always have in our dot product example by summing

our partial results on the CPU, freeing our temporary storage, and returning

to main().

// finish up on the CPU side

c = 0;

for (int i=0; i<blocksPerGrid; i++) {

c += partial_c[i];

}

HANDLE_ERROR( cudaFree( dev_partial_c ) );

// free memory on the CPU side

free( partial_c );

data->returnValue = c;

return 0;

}

Chapter Review

We have seen some new types of host memory allocations, all of which get

allocated with a single call, cudaHostAlloc(). Using a combination of this

one entry point and a set of argument flags, we can allocate memory as any

combination of zero-copy, portable, and/or write-combined. We used zero-copy](https://image.slidesharecdn.com/cudabyexample-pages-234-257-200910165814/75/CUDA-by-Example-CUDA-C-on-Multiple-GPUs-Notes-23-2048.jpg)