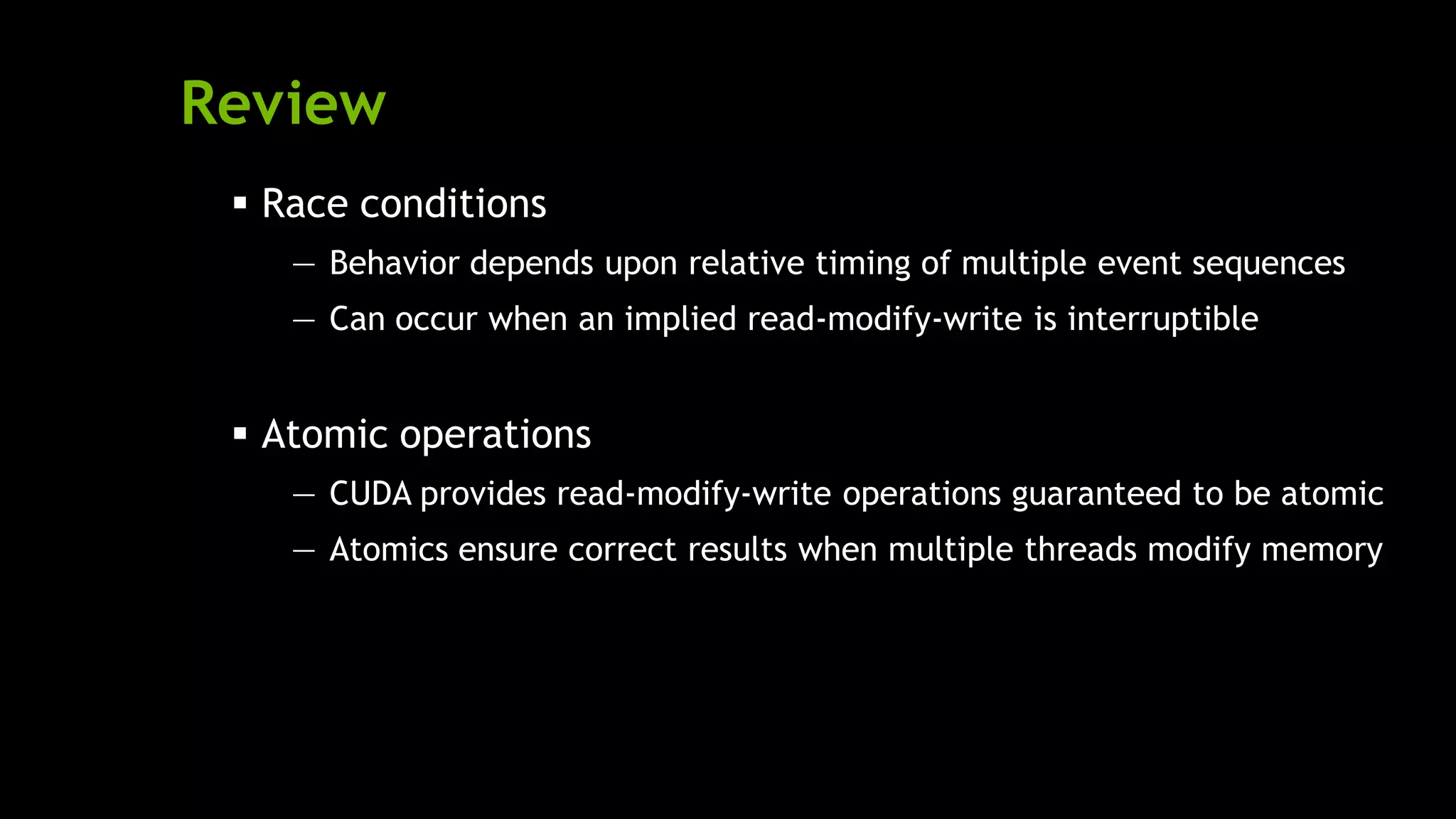

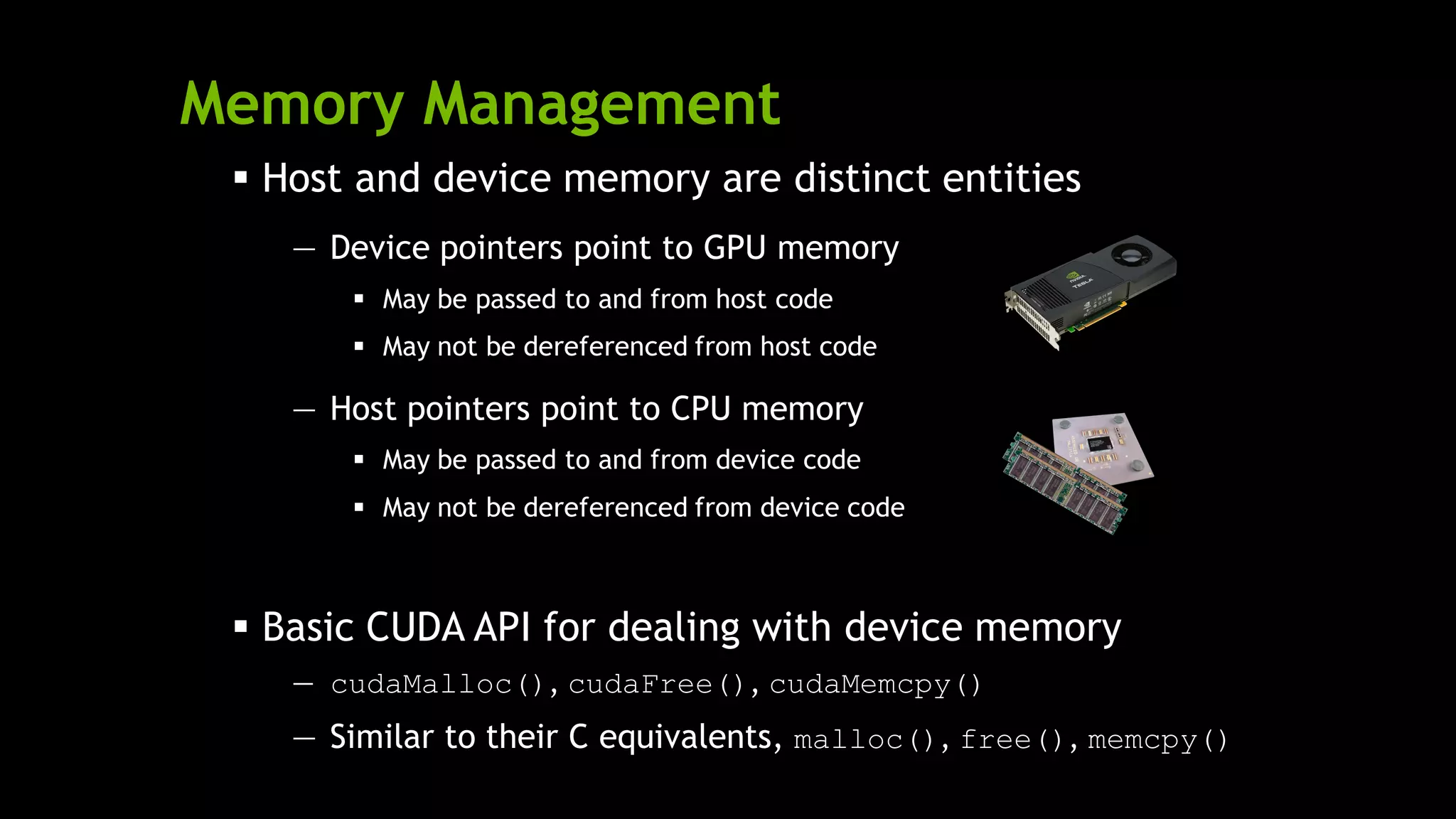

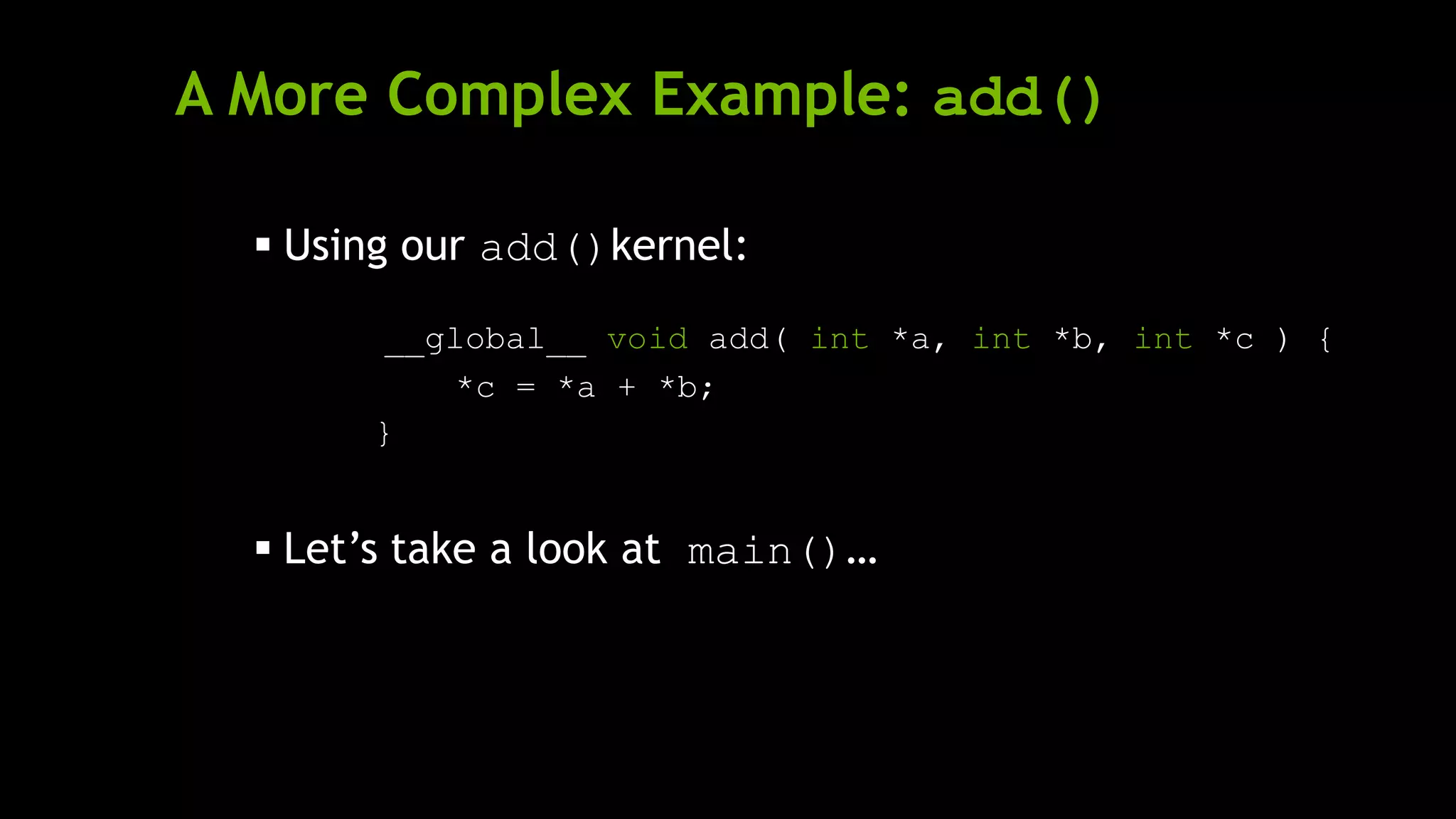

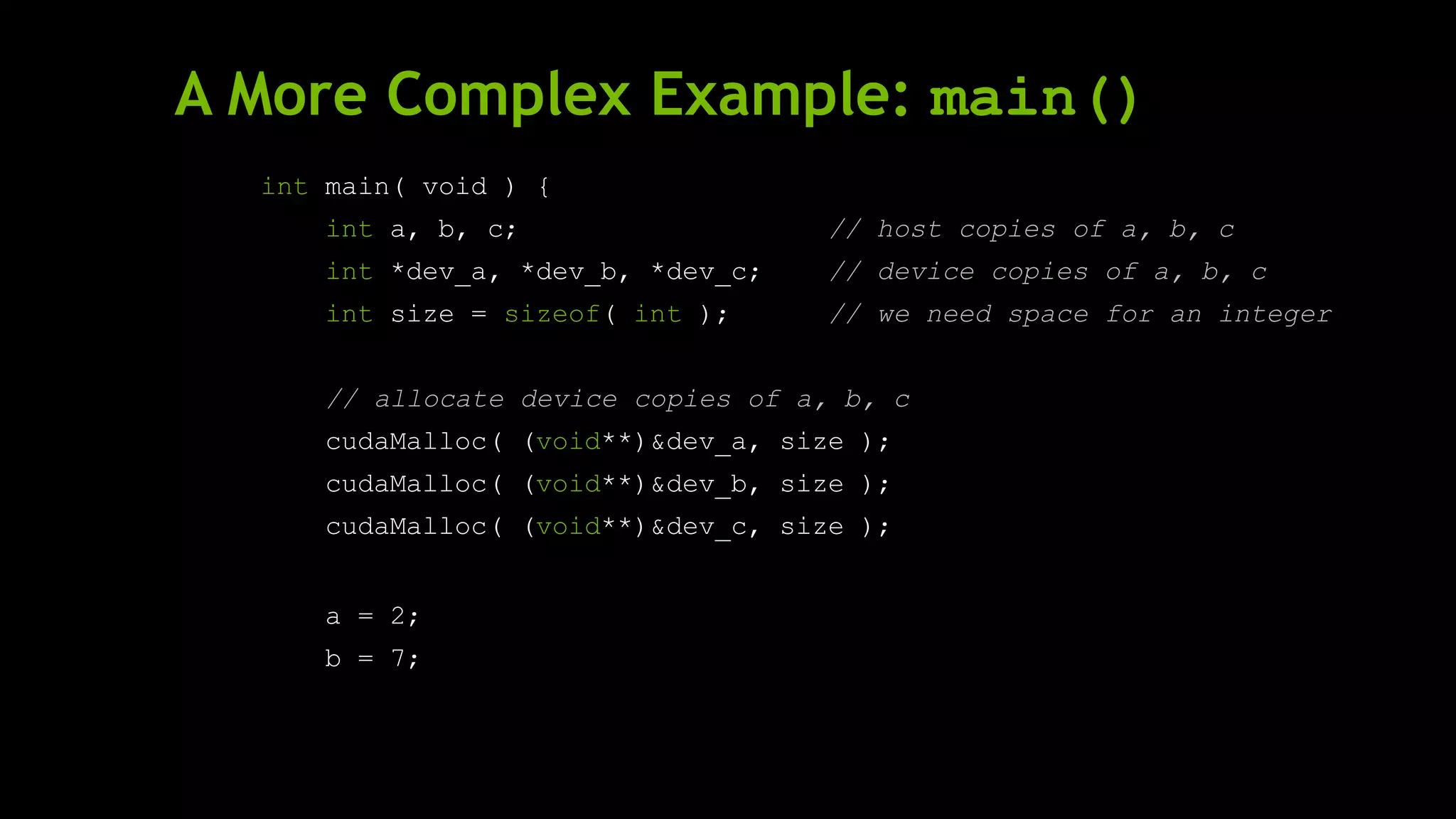

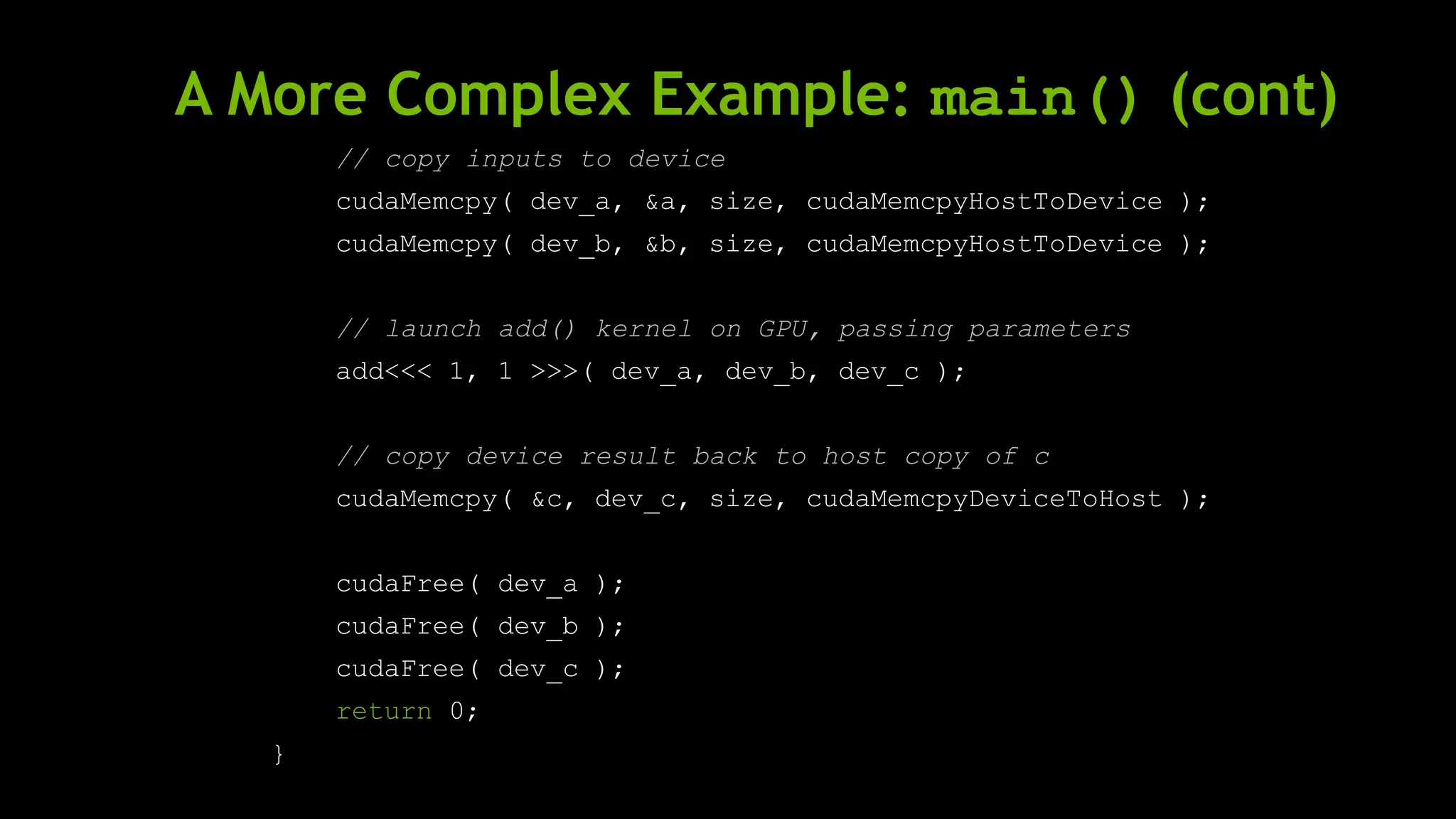

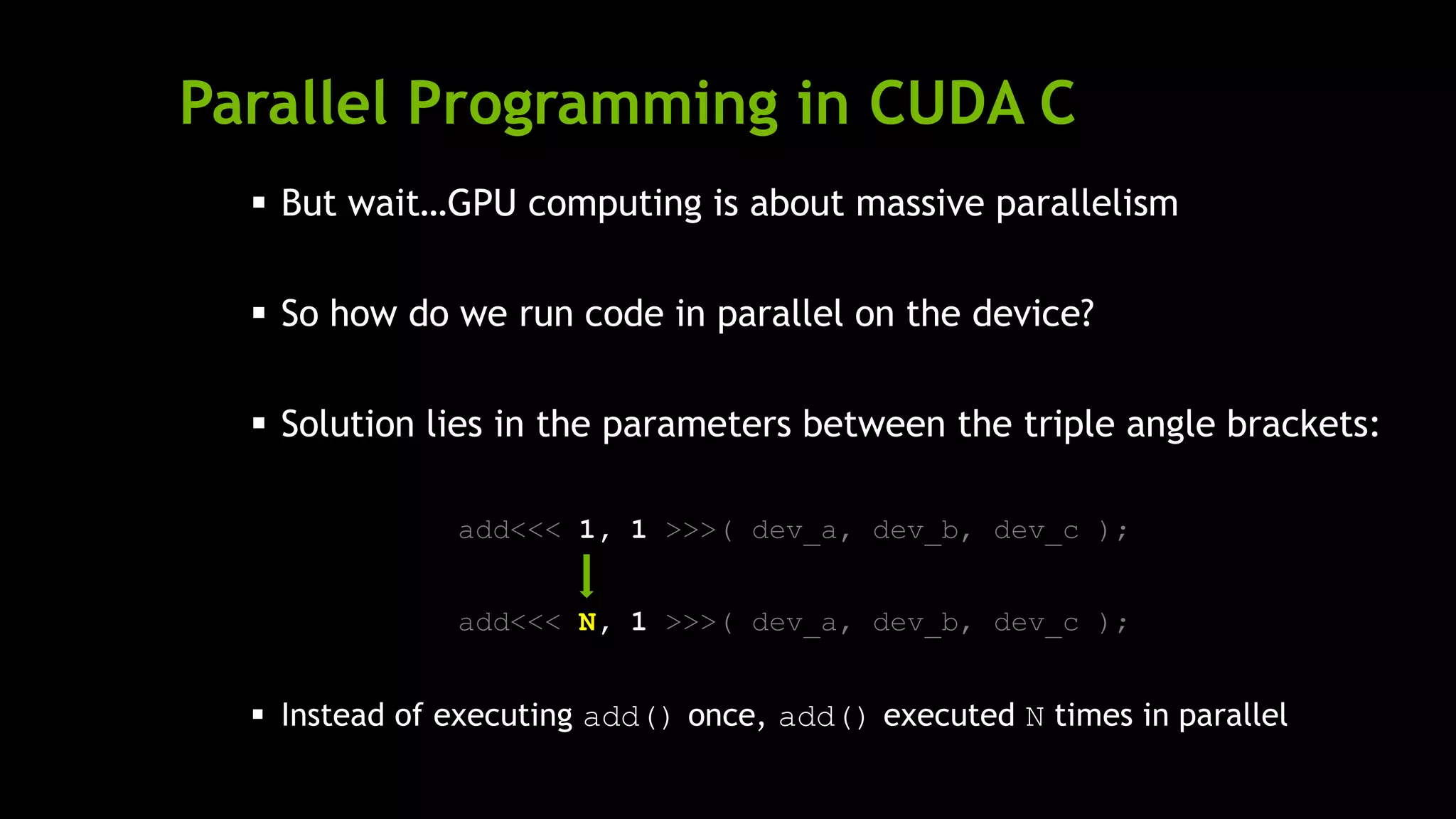

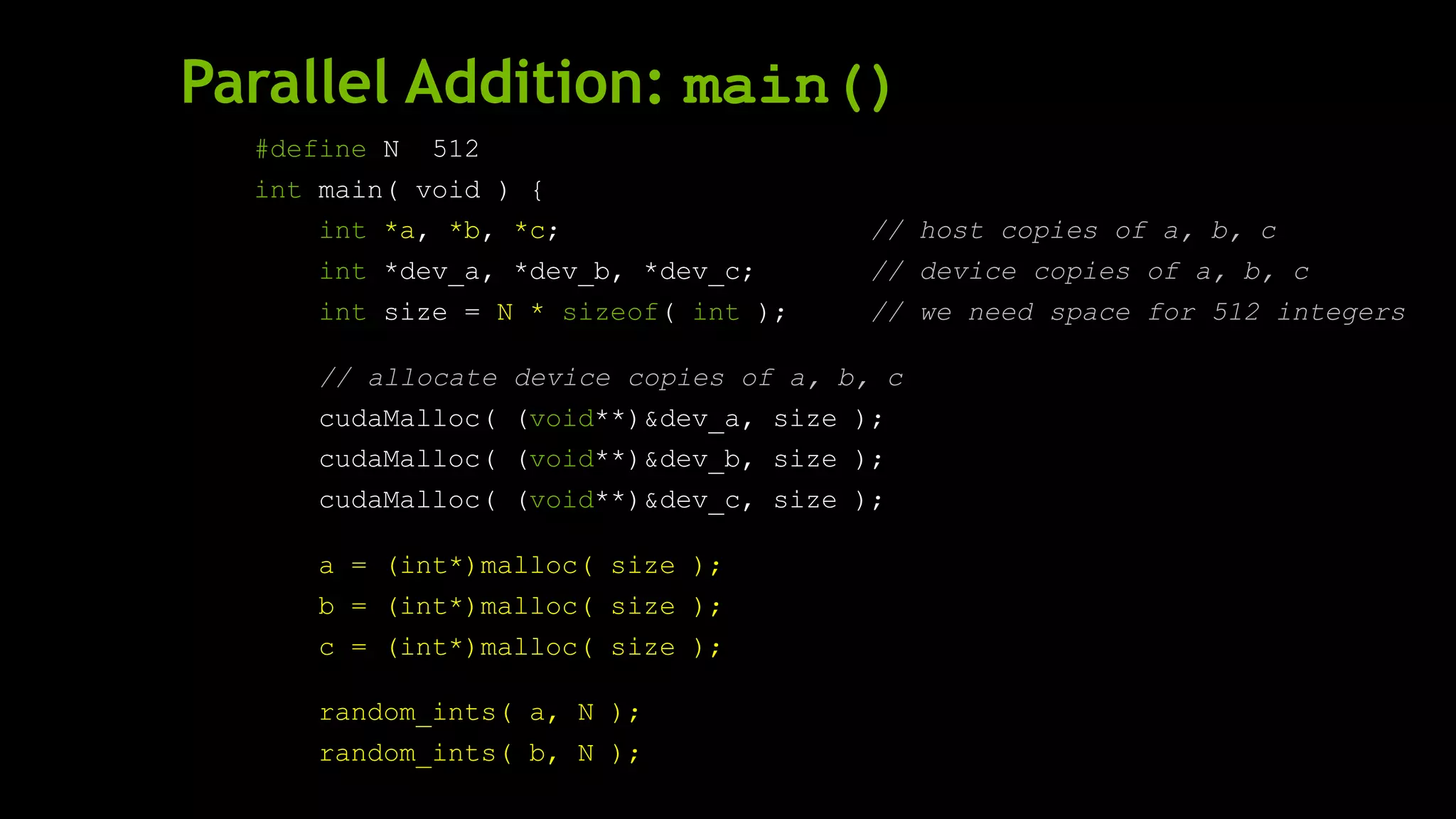

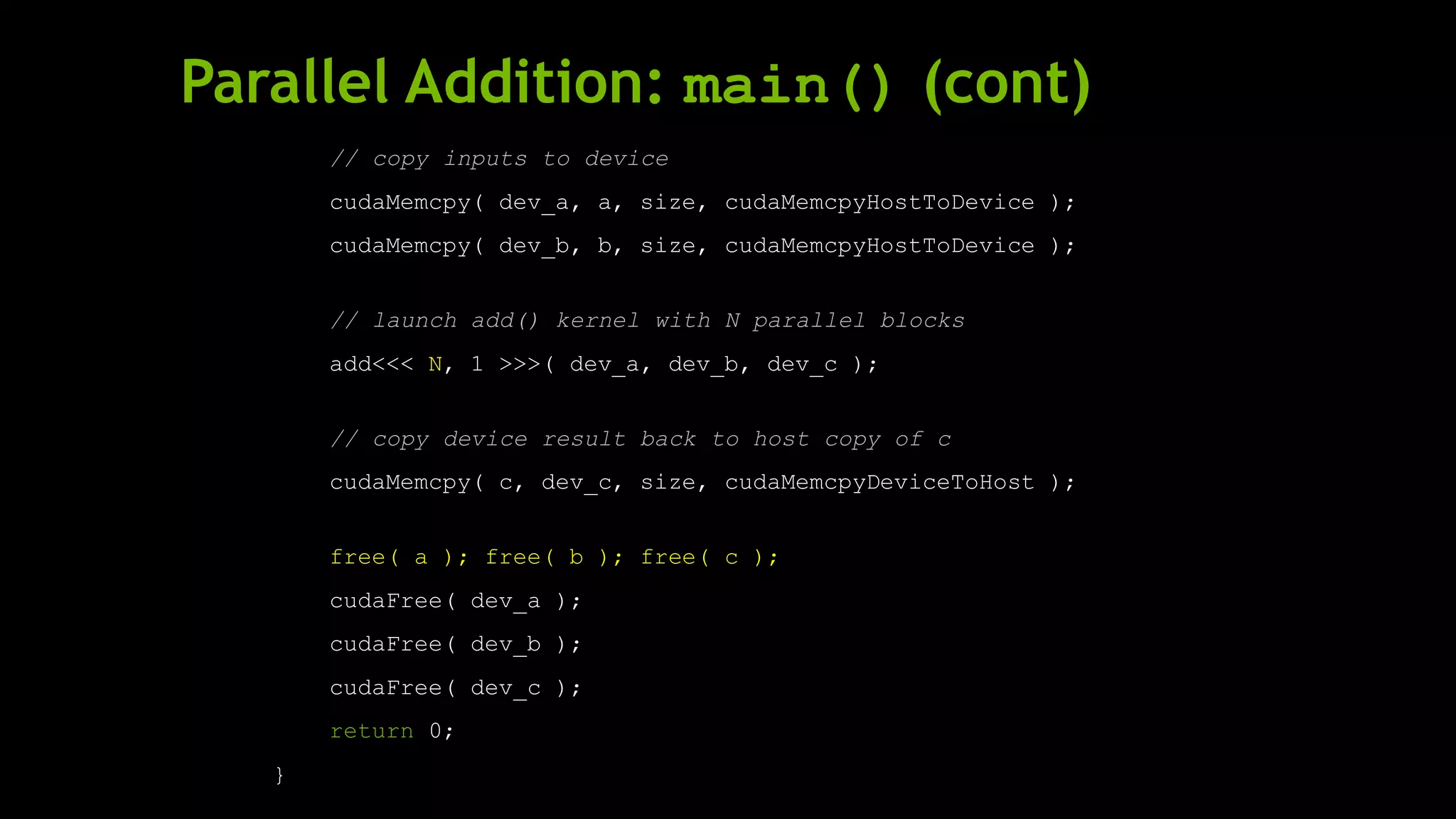

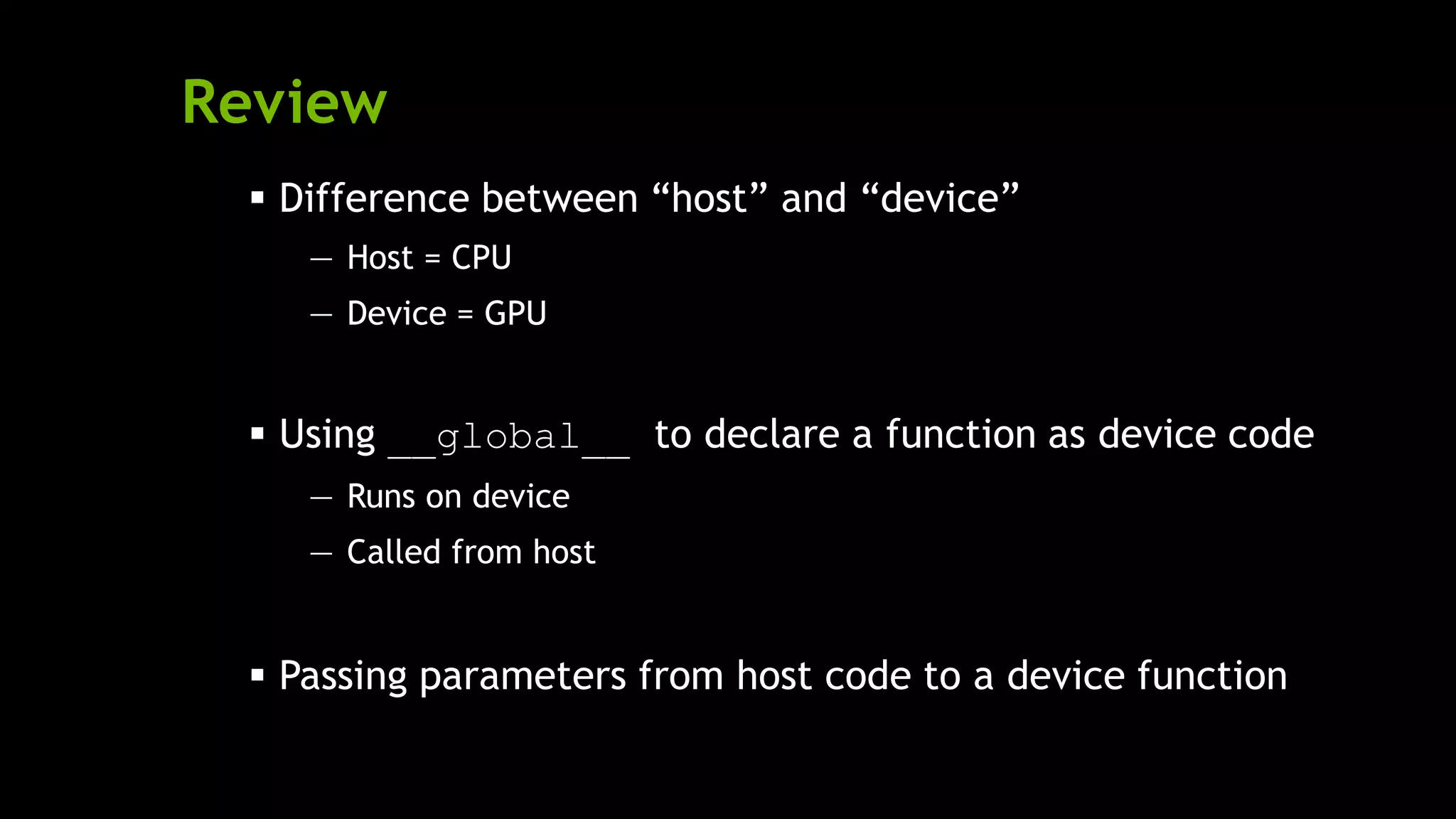

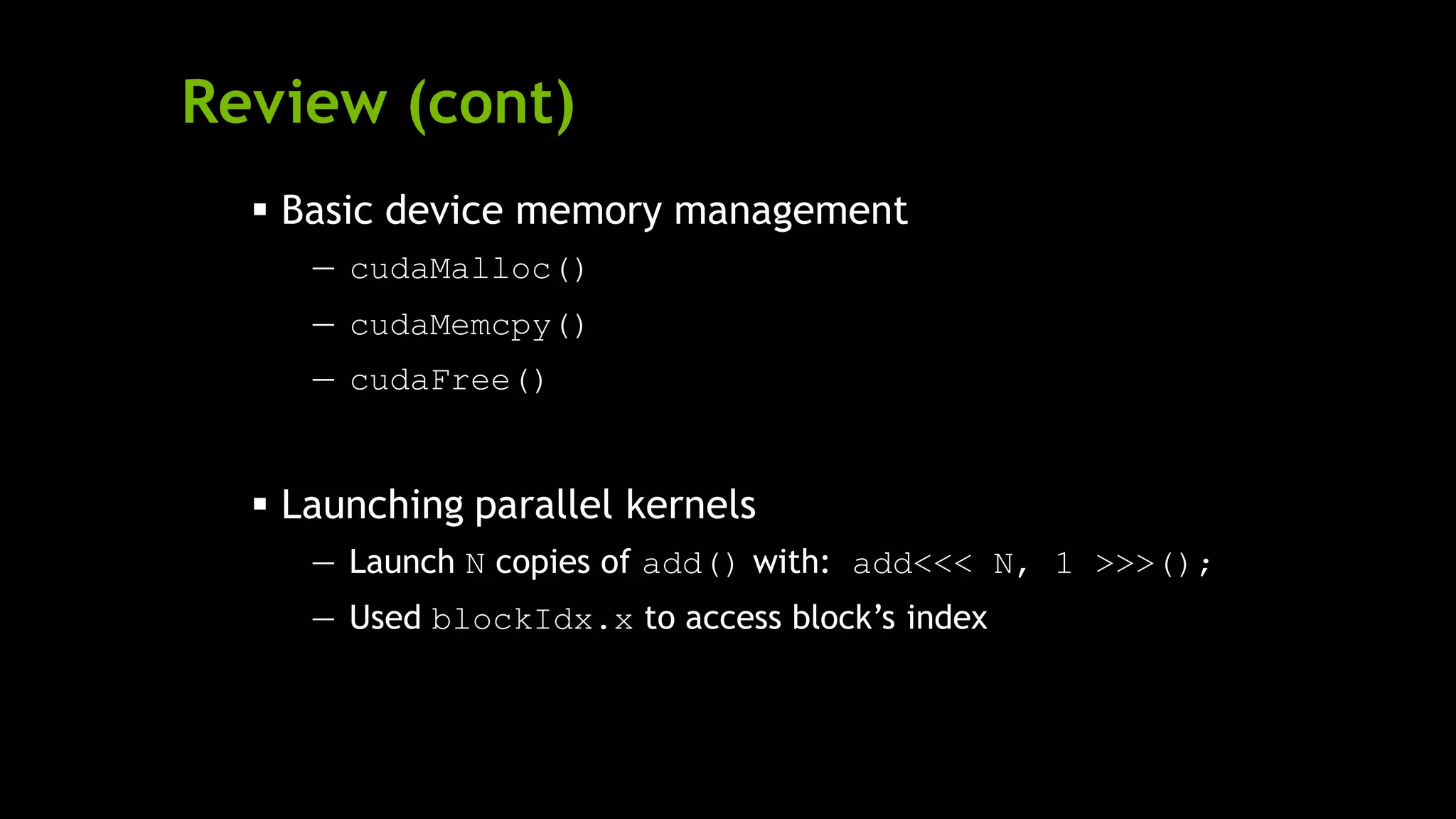

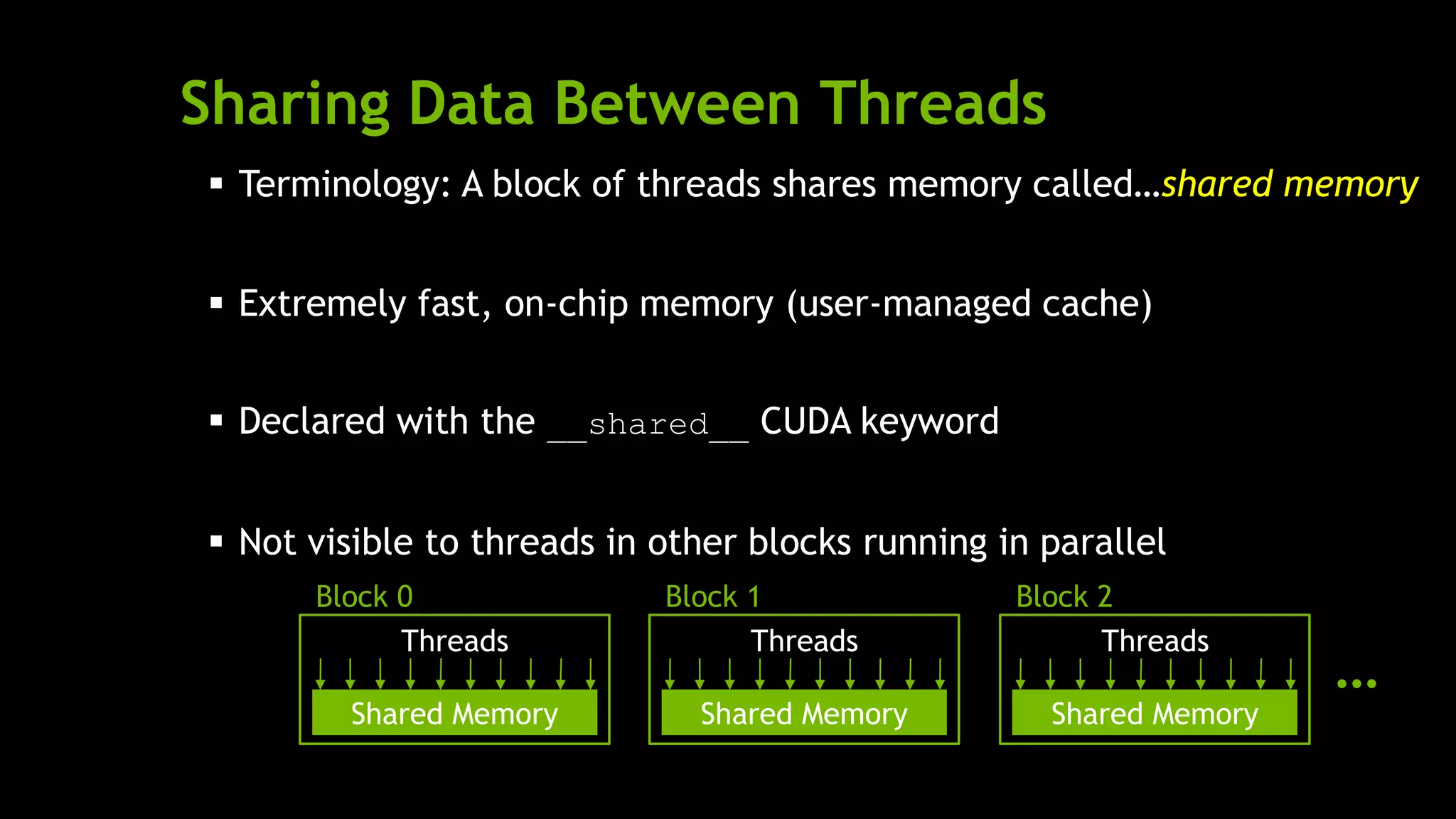

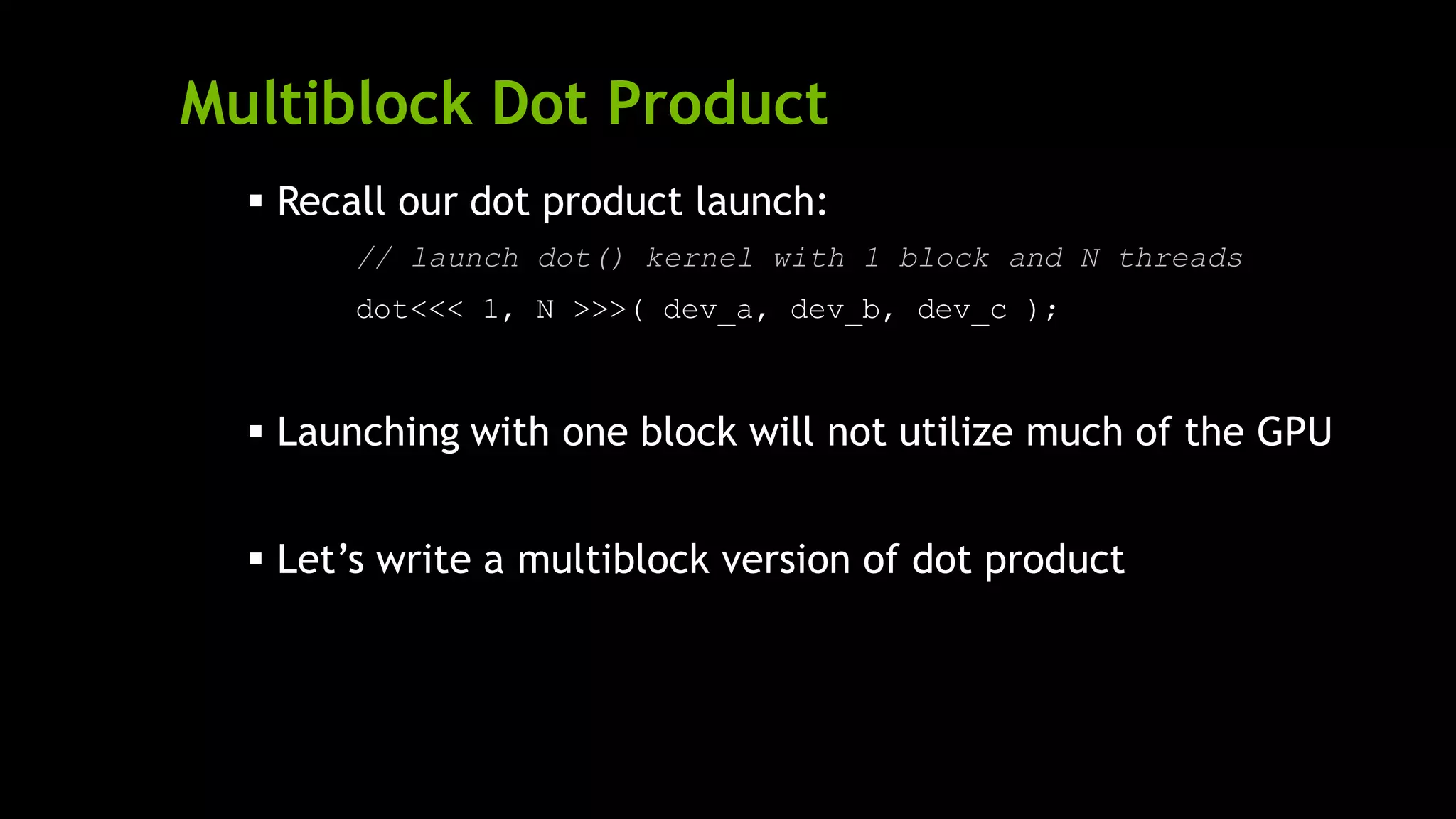

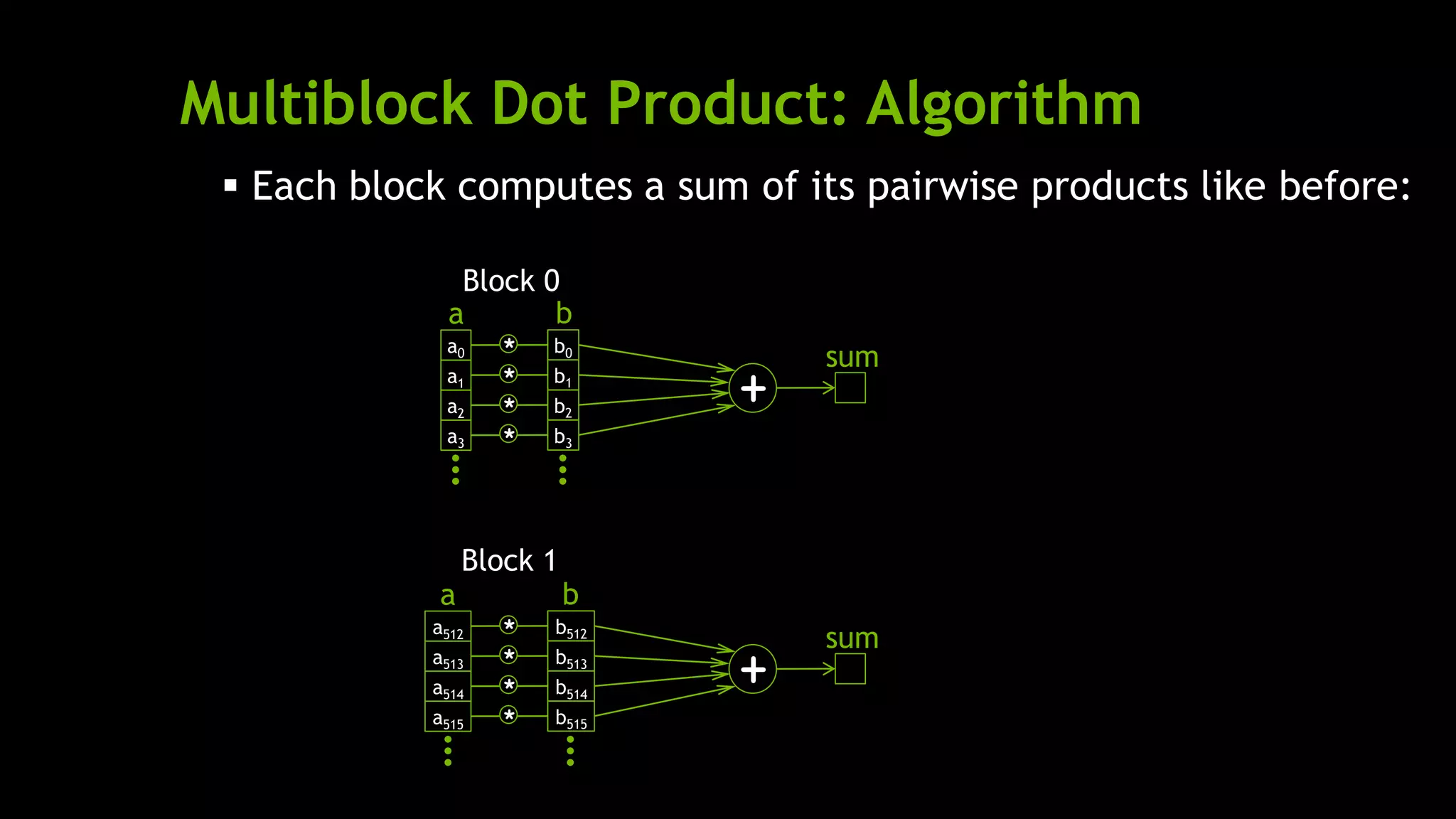

This document introduces CUDA C, the C-based programming interface to CUDA, NVIDIA's parallel computing platform and programming model for GPUs. It outlines the basics of CUDA C, including memory management, kernel launches, and parallel programming with blocks and threads. Vector addition and dot product examples illustrate key CUDA concepts: device vs. host memory, the __global__ keyword, shared memory for sharing data between the threads of a block, __syncthreads() for synchronizing those threads, and atomic operations for avoiding race conditions between blocks.

![Parallel Programming in CUDA C

With add() running in parallel…let’s do vector addition

Terminology: Each parallel invocation of add() is referred to as a block

Kernel can refer to its block’s index with the variable blockIdx.x

Each block adds a value from a[] and b[], storing the result in c[]:

__global__ void add( int *a, int *b, int *c ) {

c[blockIdx.x] = a[blockIdx.x] + b[blockIdx.x];

}

By using blockIdx.x to index arrays, each block handles different indices](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-17-2048.jpg)

![Parallel Programming in CUDA C

Block 1

c[1] = a[1] + b[1];

We write this code:

__global__ void add( int *a, int *b, int *c ) {

c[blockIdx.x] = a[blockIdx.x] + b[blockIdx.x];

}

This is what runs in parallel on the device:

Block 0

c[0] = a[0] + b[0];

Block 2

c[2] = a[2] + b[2];

Block 3

c[3] = a[3] + b[3];](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-18-2048.jpg)

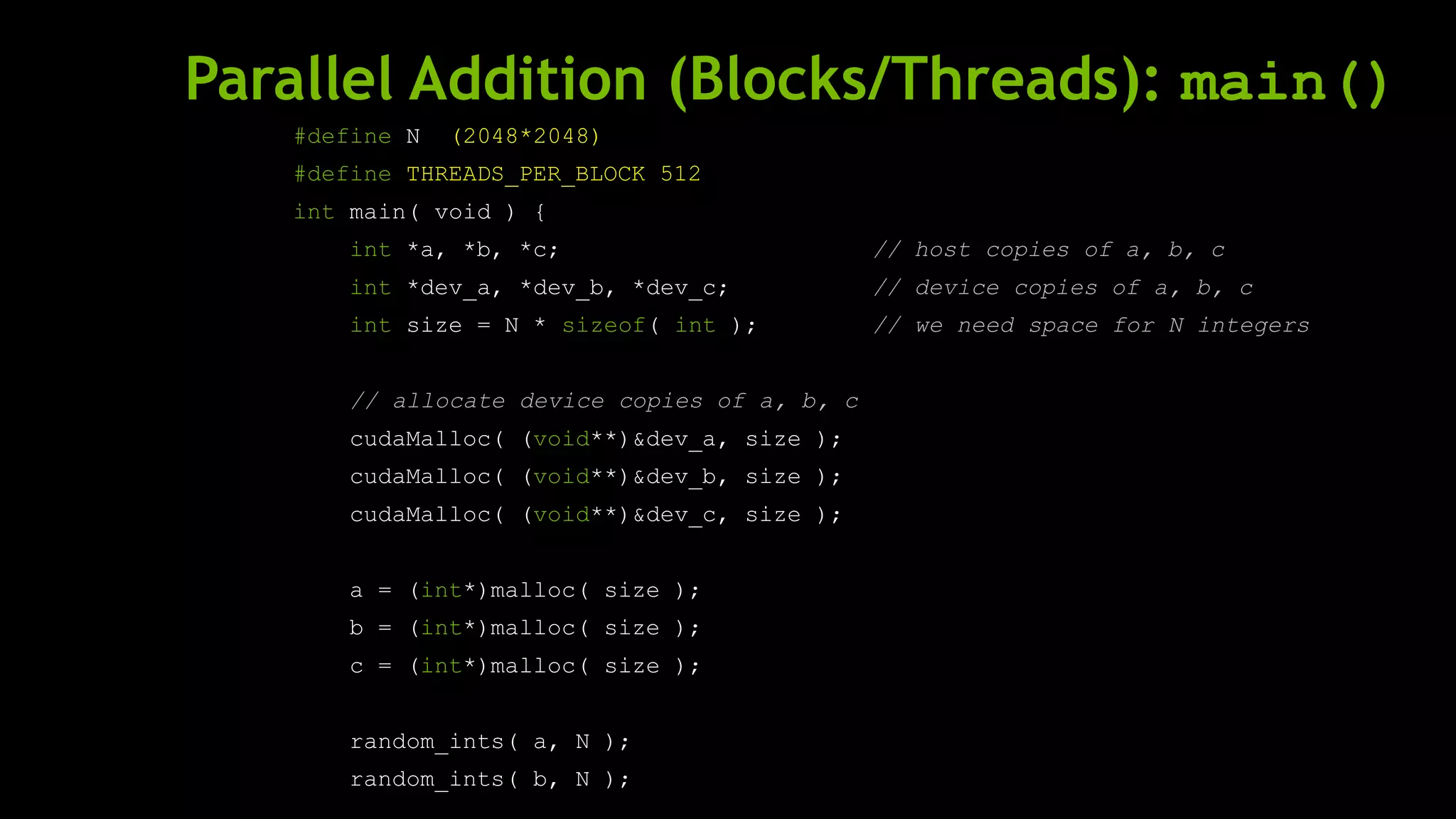

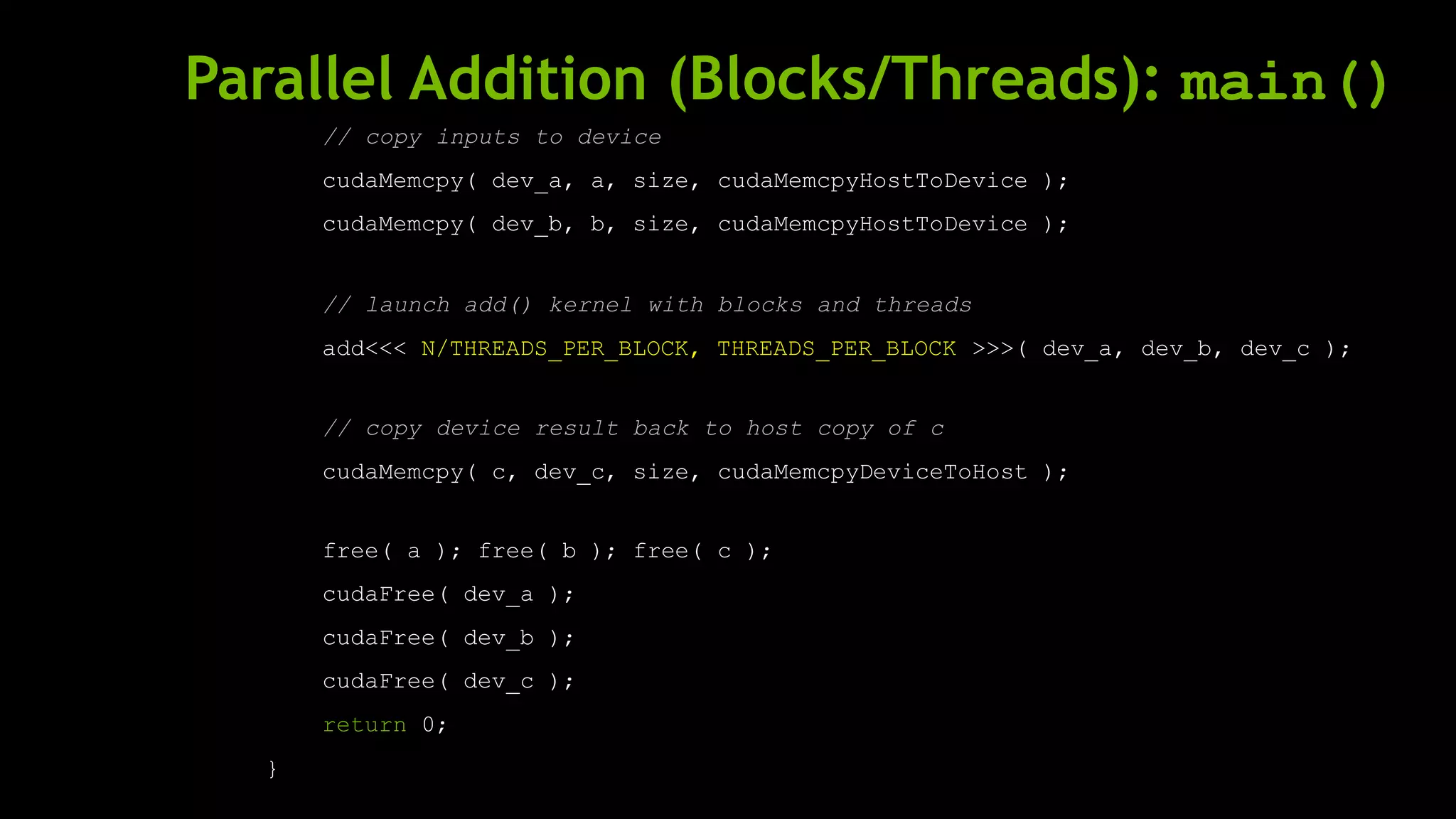

![Parallel Addition: add()

Using our newly parallelized add() kernel:

__global__ void add( int *a, int *b, int *c ) {

c[blockIdx.x] = a[blockIdx.x] + b[blockIdx.x];

}

Let’s take a look at main()…](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-19-2048.jpg)
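
A minimal sketch of the host side for this kernel, assuming the usual allocate, copy, launch, copy-back sequence. The helper name `add_with_blocks` is hypothetical and error checking is omitted for brevity; the point is the launch configuration `<<< n, 1 >>>`, which starts n blocks of one thread each.

```cuda
#include <cuda_runtime.h>

// Kernel from the slide: each block handles the element at its own index.
__global__ void add( int *a, int *b, int *c ) {
    c[blockIdx.x] = a[blockIdx.x] + b[blockIdx.x];
}

// Hypothetical host helper (not from the slides): adds two n-element host
// arrays on the device. Assumes n fits in the grid's x-dimension limit.
void add_with_blocks( const int *a, const int *b, int *c, int n ) {
    int *dev_a, *dev_b, *dev_c;
    size_t size = n * sizeof( int );

    // Allocate device copies of a, b, c
    cudaMalloc( (void**)&dev_a, size );
    cudaMalloc( (void**)&dev_b, size );
    cudaMalloc( (void**)&dev_c, size );

    // Copy inputs from host memory to device memory
    cudaMemcpy( dev_a, a, size, cudaMemcpyHostToDevice );
    cudaMemcpy( dev_b, b, size, cudaMemcpyHostToDevice );

    // Launch n blocks with 1 thread per block
    add<<< n, 1 >>>( dev_a, dev_b, dev_c );

    // Copy the result back; this copy waits for the kernel to finish
    cudaMemcpy( c, dev_c, size, cudaMemcpyDeviceToHost );

    cudaFree( dev_a );
    cudaFree( dev_b );
    cudaFree( dev_c );
}
```

Calling `add_with_blocks( a, b, c, 4 )` with host arrays of four ints would run the four blocks shown on the previous slide.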

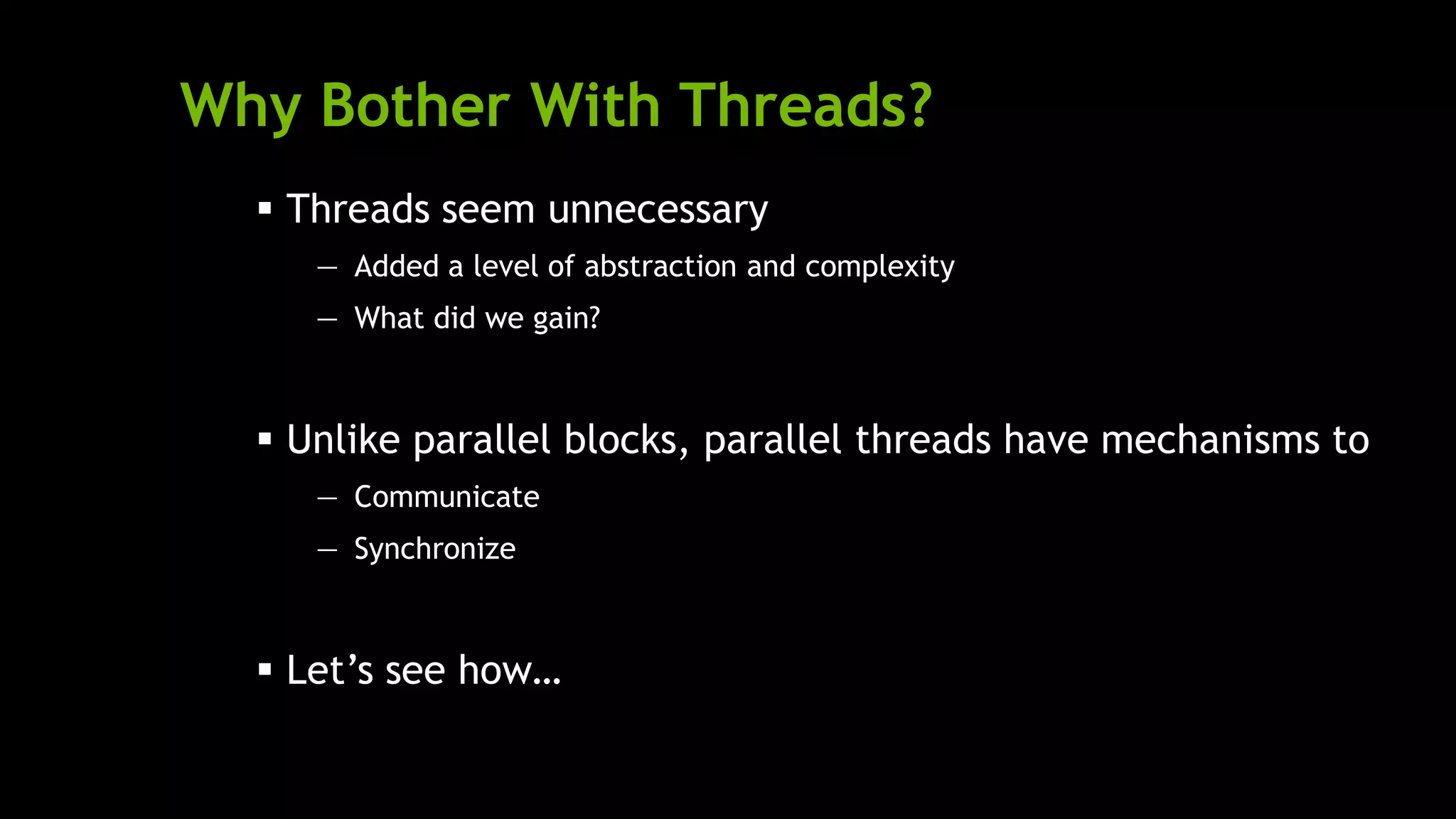

![Threads

Terminology: A block can be split into parallel threads

Let’s change vector addition to use parallel threads instead of parallel blocks:

__global__ void add( int *a, int *b, int *c ) {

c[threadIdx.x] = a[threadIdx.x] + b[threadIdx.x];

}

We use threadIdx.x instead of blockIdx.x in add()

main() will require one change as well…](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-24-2048.jpg)
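
As a sketch of the host-side change for the thread-based kernel: the launch becomes `<<< 1, n >>>`, one block of n threads, instead of n blocks of one thread. The helper name `add_with_threads` is hypothetical, error checking is omitted, and n is assumed not to exceed the device's threads-per-block limit (1024 on current GPUs).

```cuda
#include <cuda_runtime.h>

// Thread-based kernel: each thread of the single block handles one element.
__global__ void add( int *a, int *b, int *c ) {
    c[threadIdx.x] = a[threadIdx.x] + b[threadIdx.x];
}

// Hypothetical host helper (not from the slides). Assumes n is no larger
// than the maximum number of threads per block on the device.
void add_with_threads( const int *a, const int *b, int *c, int n ) {
    int *dev_a, *dev_b, *dev_c;
    size_t size = n * sizeof( int );

    cudaMalloc( (void**)&dev_a, size );
    cudaMalloc( (void**)&dev_b, size );
    cudaMalloc( (void**)&dev_c, size );

    cudaMemcpy( dev_a, a, size, cudaMemcpyHostToDevice );
    cudaMemcpy( dev_b, b, size, cudaMemcpyHostToDevice );

    // The one change: 1 block with n threads instead of n blocks
    add<<< 1, n >>>( dev_a, dev_b, dev_c );

    cudaMemcpy( c, dev_c, size, cudaMemcpyDeviceToHost );

    cudaFree( dev_a );
    cudaFree( dev_b );
    cudaFree( dev_c );
}
```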

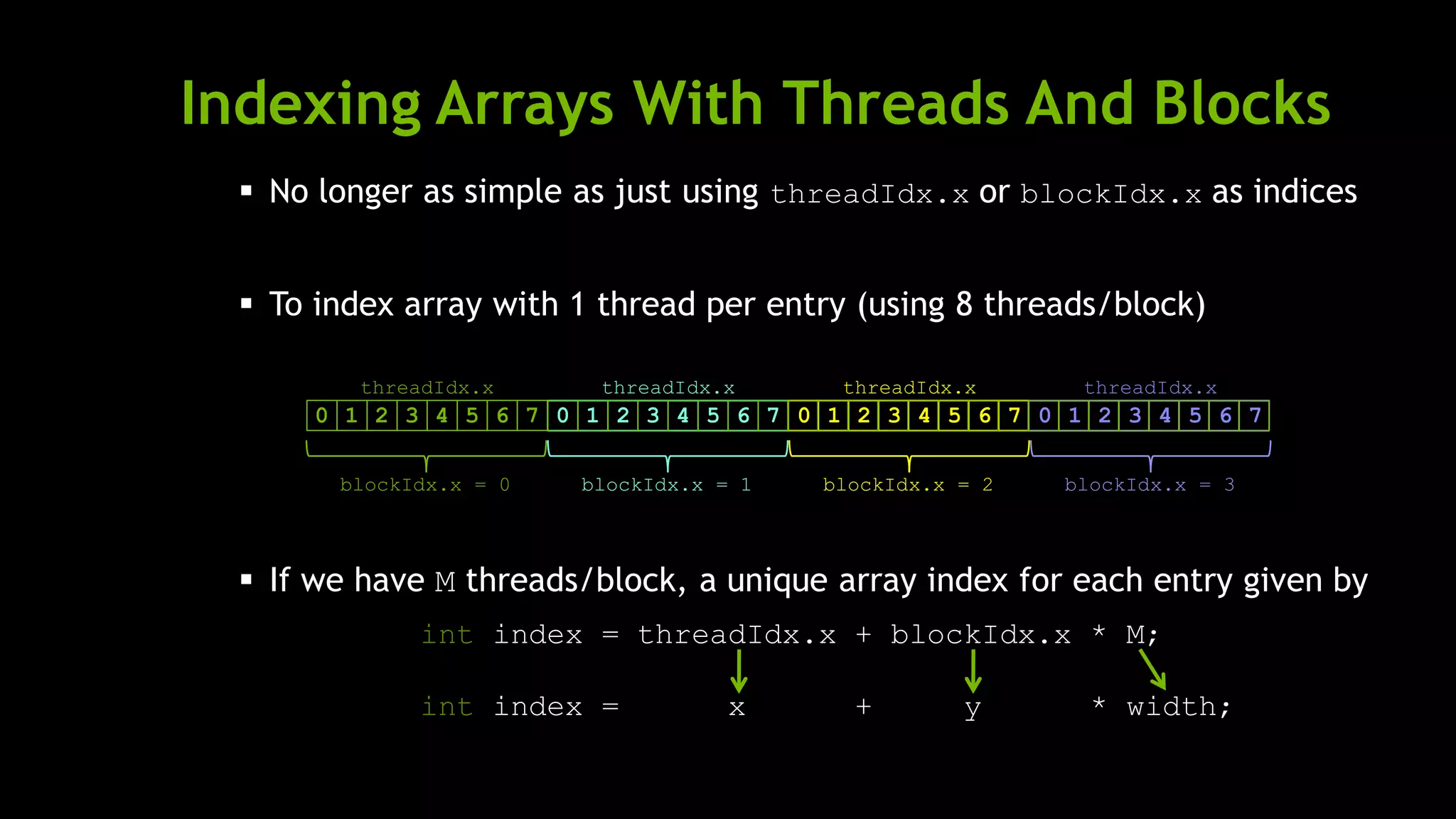

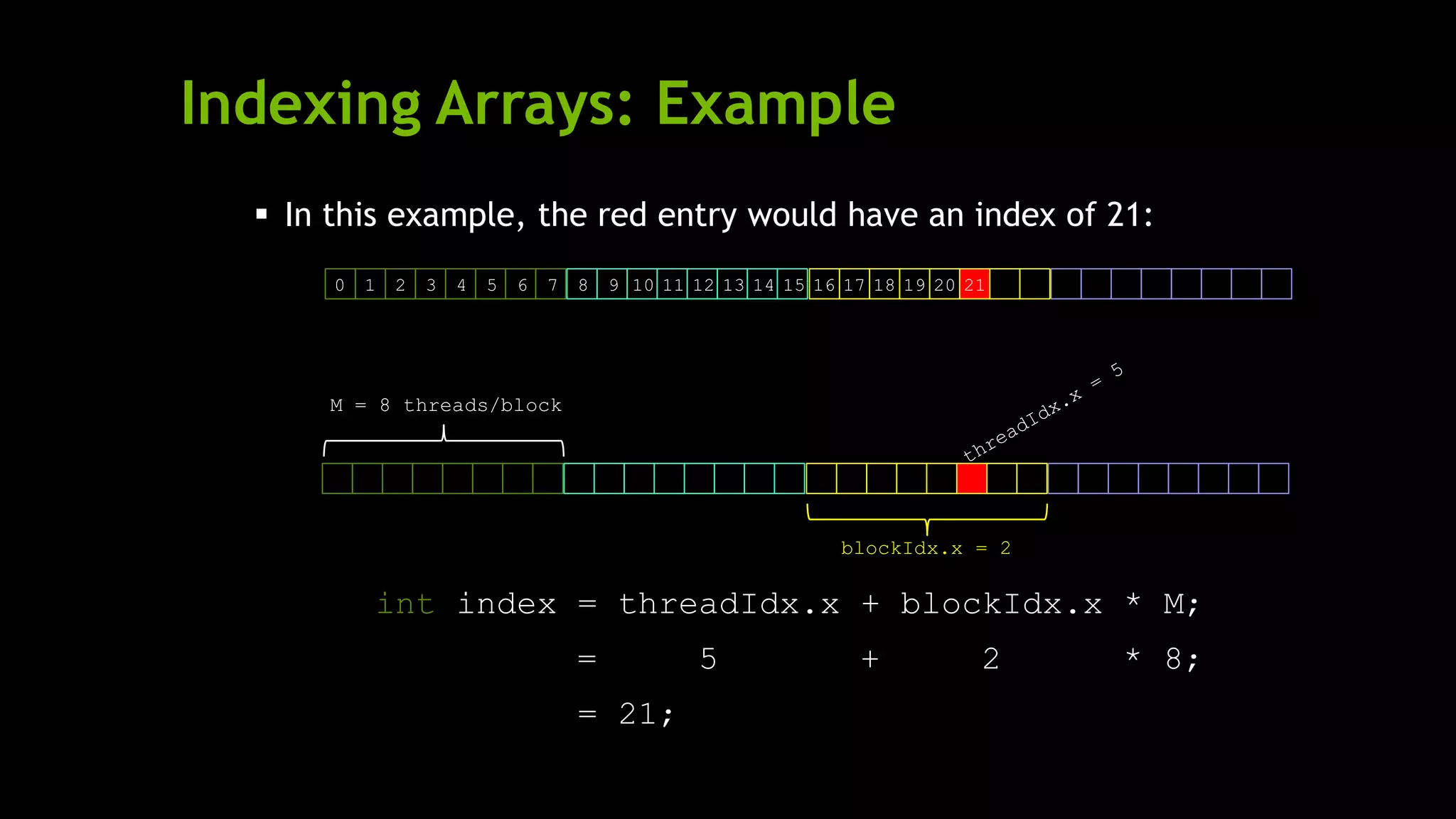

![Addition with Threads and Blocks

blockDim.x is a built-in variable holding the number of threads per block:

int index = threadIdx.x + blockIdx.x * blockDim.x;

A combined version of our vector addition kernel to use blocks and threads:

__global__ void add( int *a, int *b, int *c ) {

int index = threadIdx.x + blockIdx.x * blockDim.x;

c[index] = a[index] + b[index];

}

So what changes in main() when we use both blocks and threads?](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-30-2048.jpg)
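
One way the host side could look when both blocks and threads are used, as a sketch rather than the slides' own main(): the launch uses n / THREADS_PER_BLOCK blocks of THREADS_PER_BLOCK threads. The helper name `add_with_blocks_and_threads` and the value 512 are assumptions. Because the kernel has no bounds check, the helper refuses sizes that are not a multiple of THREADS_PER_BLOCK.

```cuda
#include <cuda_runtime.h>

#define THREADS_PER_BLOCK 512   // assumed value for this sketch

// Combined kernel: each thread computes a unique global index.
__global__ void add( int *a, int *b, int *c ) {
    int index = threadIdx.x + blockIdx.x * blockDim.x;
    c[index] = a[index] + b[index];
}

// Hypothetical host helper (not from the slides). Returns false without
// launching when n is not a positive multiple of THREADS_PER_BLOCK,
// since the kernel above would otherwise skip the trailing elements.
bool add_with_blocks_and_threads( const int *a, const int *b, int *c, int n ) {
    if( n <= 0 || n % THREADS_PER_BLOCK != 0 )
        return false;

    int *dev_a, *dev_b, *dev_c;
    size_t size = n * sizeof( int );

    cudaMalloc( (void**)&dev_a, size );
    cudaMalloc( (void**)&dev_b, size );
    cudaMalloc( (void**)&dev_c, size );

    cudaMemcpy( dev_a, a, size, cudaMemcpyHostToDevice );
    cudaMemcpy( dev_b, b, size, cudaMemcpyHostToDevice );

    // n / THREADS_PER_BLOCK blocks, THREADS_PER_BLOCK threads in each
    add<<< n / THREADS_PER_BLOCK, THREADS_PER_BLOCK >>>( dev_a, dev_b, dev_c );

    cudaMemcpy( c, dev_c, size, cudaMemcpyDeviceToHost );

    cudaFree( dev_a );
    cudaFree( dev_b );
    cudaFree( dev_c );
    return true;
}
```

For example, with n = 1024 this launches 2 blocks; thread 3 of block 1 computes index 3 + 1 * 512 = 515.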

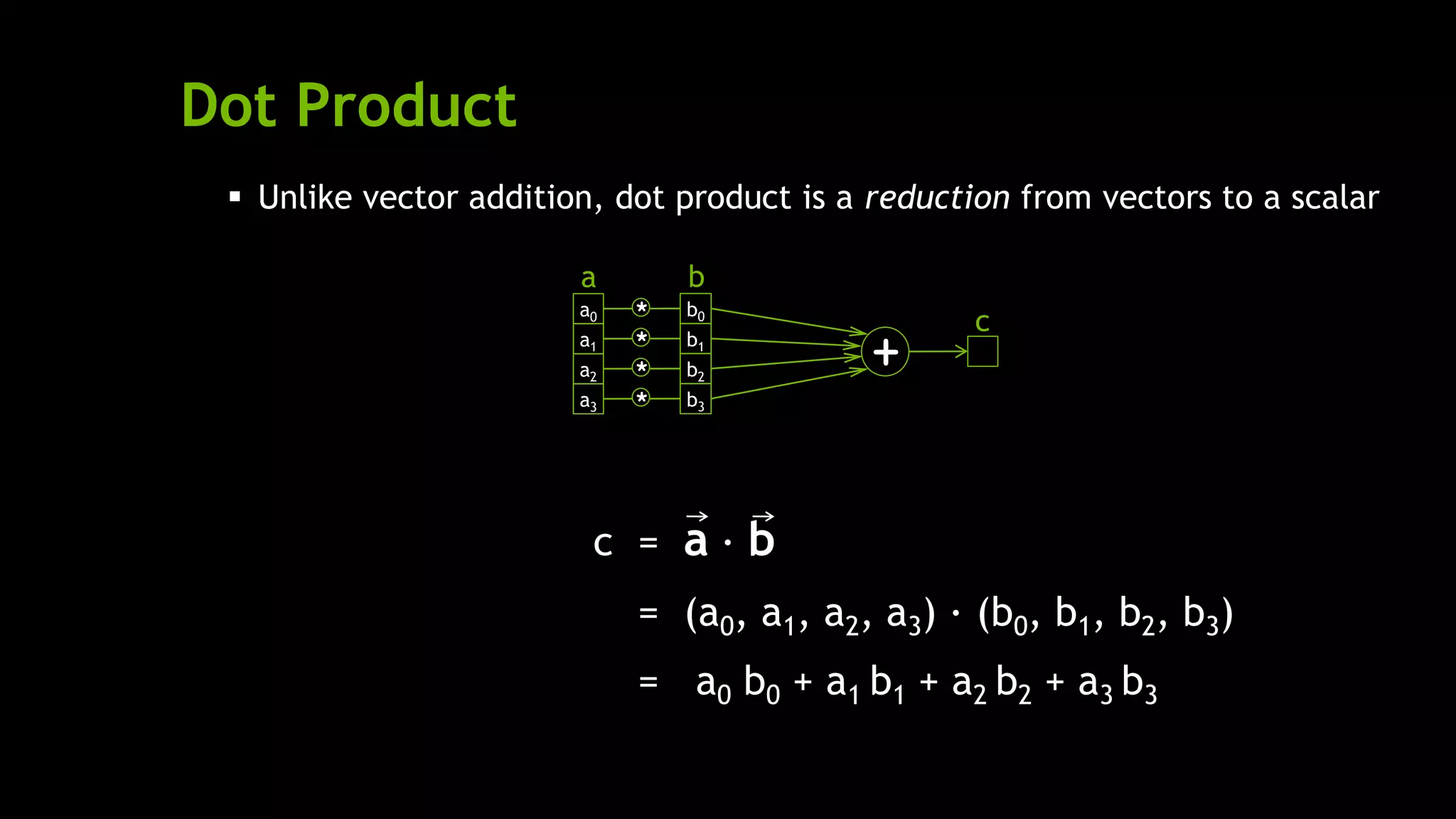

![Dot Product

Parallel threads have no problem computing the pairwise products:

So we can start a dot product CUDA kernel by doing just that:

__global__ void dot( int *a, int *b, int *c ) {

// Each thread computes a pairwise product

int temp = a[threadIdx.x] * b[threadIdx.x];

Diagram: a0…a3 and b0…b3 are multiplied pairwise, and the four products are summed to give a · b](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-35-2048.jpg)

![Dot Product

But we need to share data between threads to compute the final sum:

__global__ void dot( int *a, int *b, int *c ) {

// Each thread computes a pairwise product

int temp = a[threadIdx.x] * b[threadIdx.x];

// Can’t compute the final sum

// Each thread’s copy of ‘temp’ is private

}

Diagram: a0…a3 and b0…b3 are multiplied pairwise, and the four products are summed to give a · b](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-36-2048.jpg)

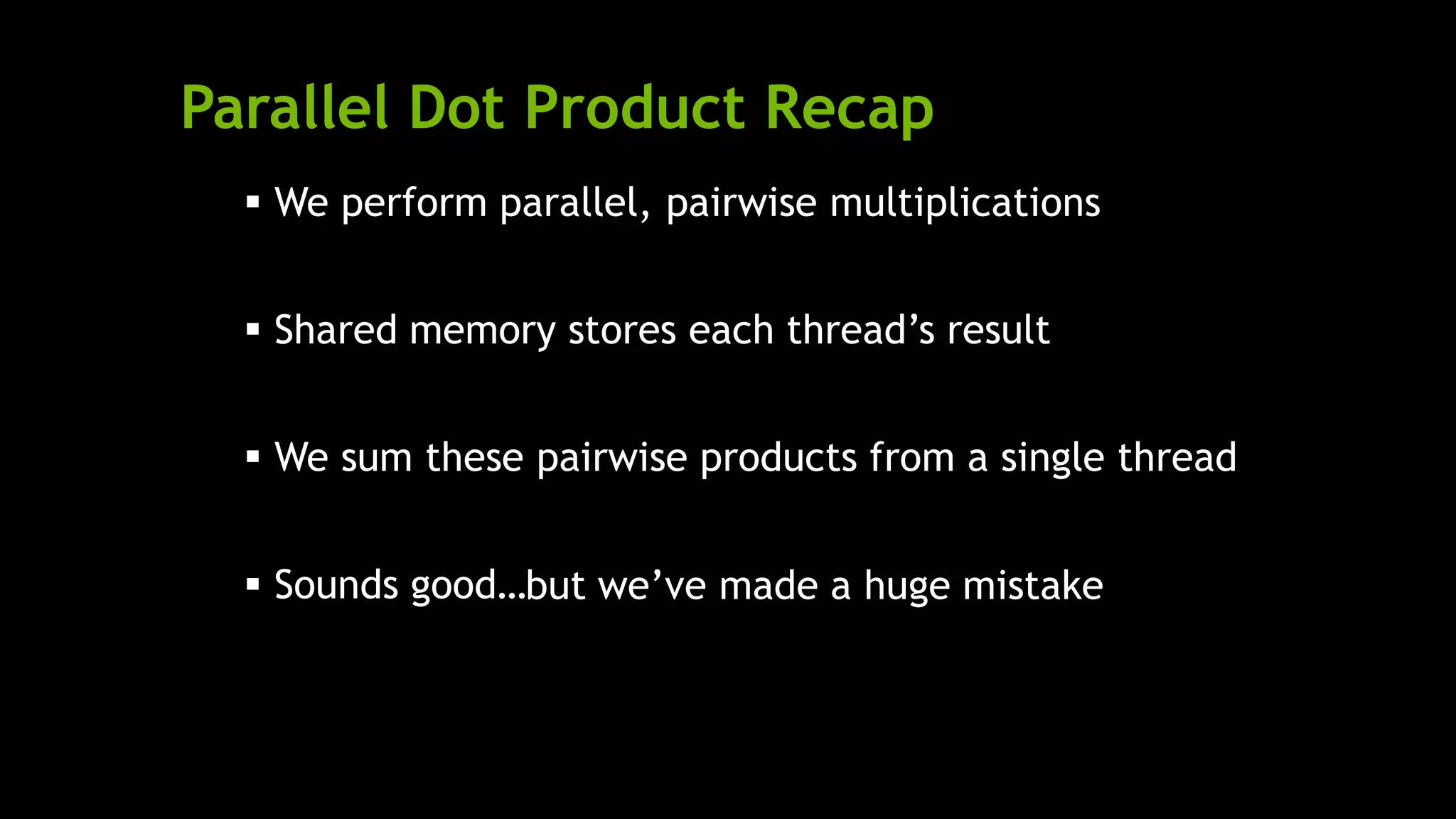

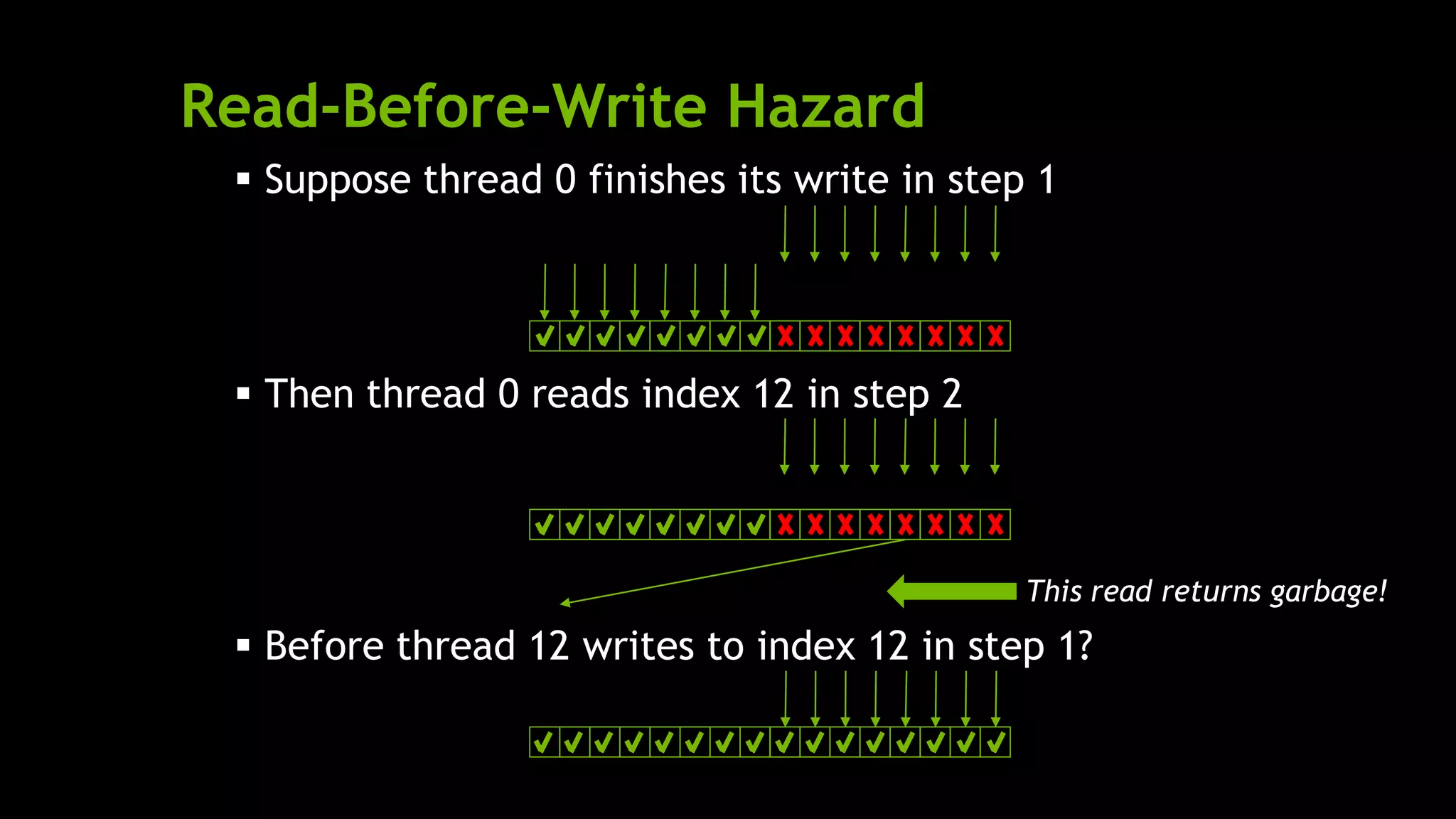

![Parallel Dot Product: dot()

We perform parallel multiplication, serial addition:

#define N 512

__global__ void dot( int *a, int *b, int *c ) {

// Shared memory for results of multiplication

__shared__ int temp[N];

temp[threadIdx.x] = a[threadIdx.x] * b[threadIdx.x];

// Thread 0 sums the pairwise products

if( 0 == threadIdx.x ) {

int sum = 0;

for( int i = 0; i < N; i++ )

sum += temp[i];

*c = sum;

}

}](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-38-2048.jpg)

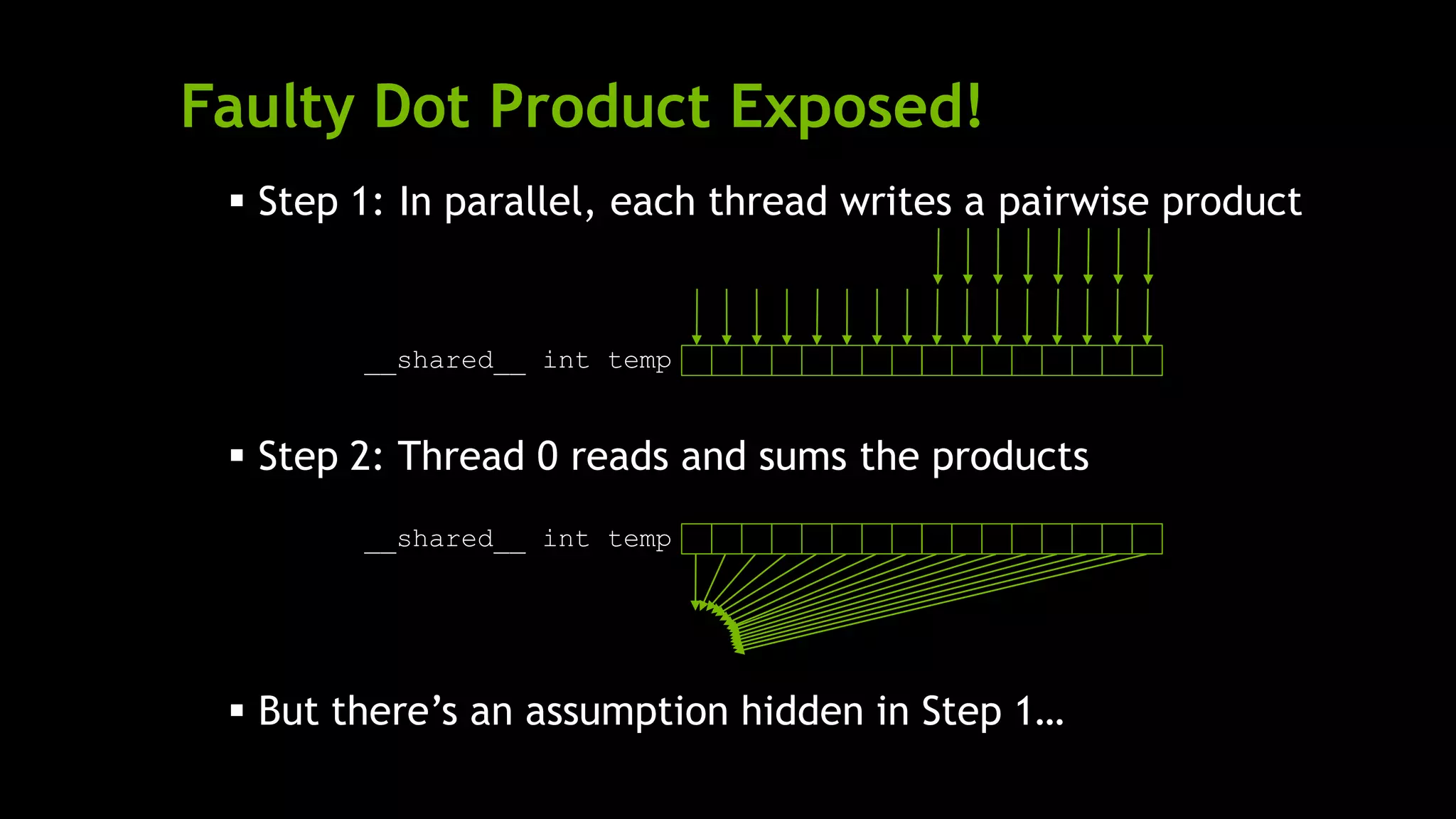

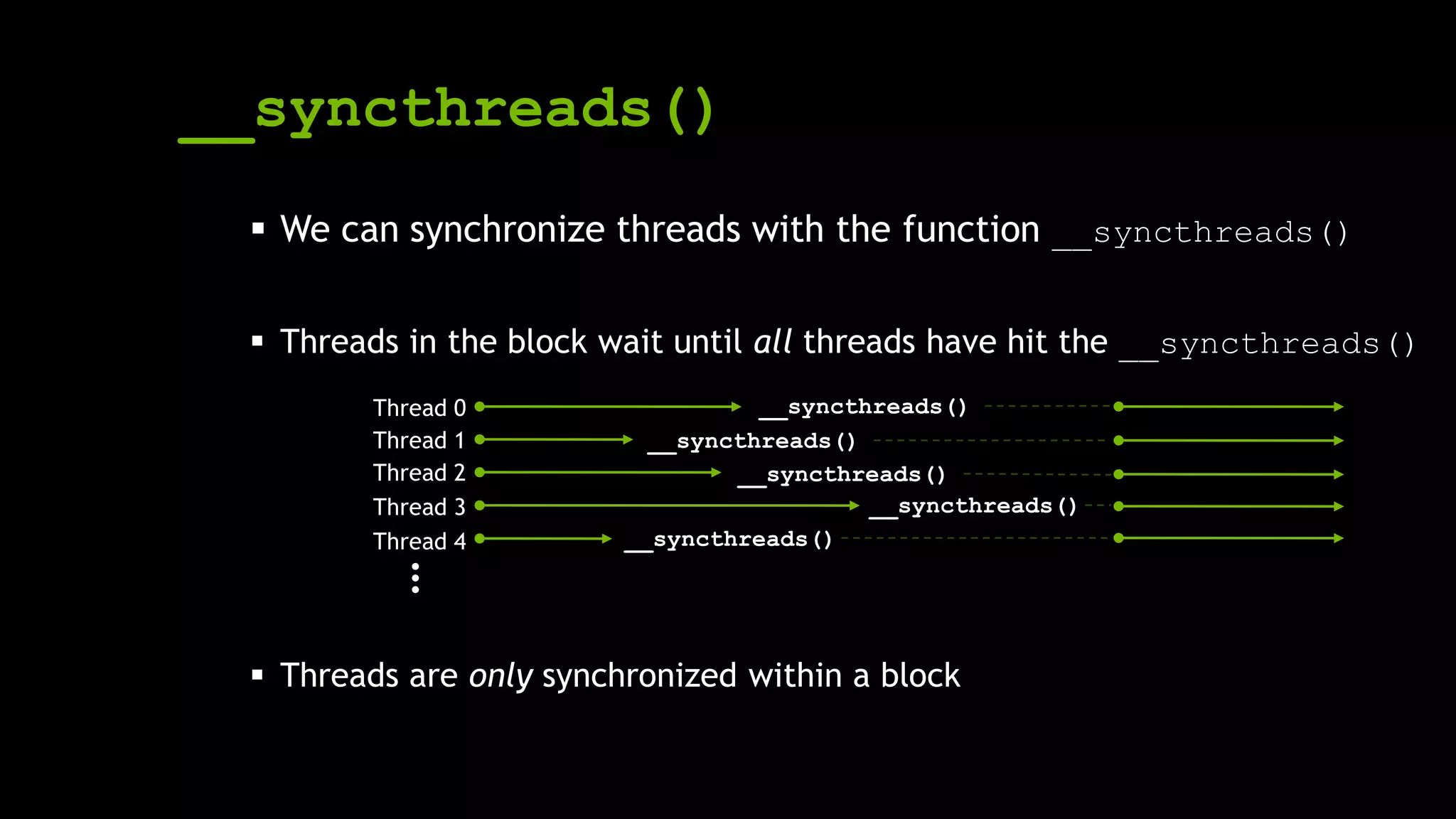

![Synchronization

We need threads to wait between the sections of dot():

__global__ void dot( int *a, int *b, int *c ) {

__shared__ int temp[N];

temp[threadIdx.x] = a[threadIdx.x] * b[threadIdx.x];

// * NEED THREADS TO SYNCHRONIZE HERE *

// No thread can advance until all threads

// have reached this point in the code

// Thread 0 sums the pairwise products

if( 0 == threadIdx.x ) {

int sum = 0;

for( int i = 0; i < N; i++ )

sum += temp[i];

*c = sum;

}

}](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-42-2048.jpg)

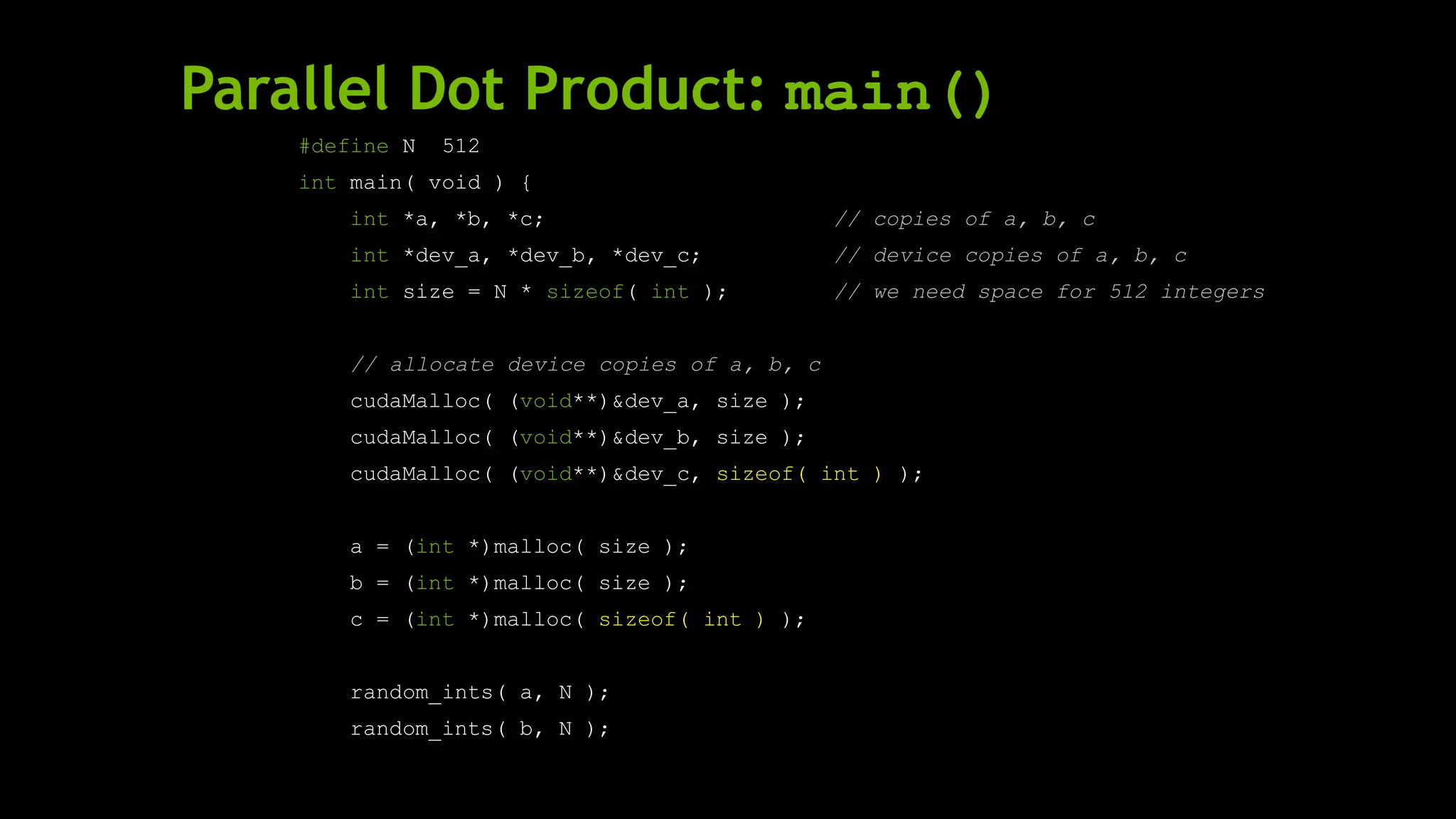

![Parallel Dot Product: dot()

__global__ void dot( int *a, int *b, int *c ) {

__shared__ int temp[N];

temp[threadIdx.x] = a[threadIdx.x] * b[threadIdx.x];

__syncthreads();

if( 0 == threadIdx.x ) {

int sum = 0;

for( int i = 0; i < N; i++ )

sum += temp[i];

*c = sum;

}

}

With a properly synchronized dot() routine, let’s look at main()](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-44-2048.jpg)
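
A sketch of how the host could drive the synchronized single-block dot(), assuming N = 512 as defined earlier. The helper name `dot_single_block` is hypothetical and error checking is omitted. The launch is `<<< 1, N >>>` because shared memory and `__syncthreads()` only work across the threads of one block.

```cuda
#include <cuda_runtime.h>

#define N 512

// Synchronized kernel from the slide.
__global__ void dot( int *a, int *b, int *c ) {
    __shared__ int temp[N];
    temp[threadIdx.x] = a[threadIdx.x] * b[threadIdx.x];

    // Wait until every thread in the block has written its product
    __syncthreads();

    // Thread 0 sums the pairwise products
    if( 0 == threadIdx.x ) {
        int sum = 0;
        for( int i = 0; i < N; i++ )
            sum += temp[i];
        *c = sum;
    }
}

// Hypothetical host helper (not from the slides): returns the dot product
// of two host arrays that each hold exactly N ints.
int dot_single_block( const int *a, const int *b ) {
    int *dev_a, *dev_b, *dev_c;
    int result = 0;
    size_t size = N * sizeof( int );

    cudaMalloc( (void**)&dev_a, size );
    cudaMalloc( (void**)&dev_b, size );
    cudaMalloc( (void**)&dev_c, sizeof( int ) );

    cudaMemcpy( dev_a, a, size, cudaMemcpyHostToDevice );
    cudaMemcpy( dev_b, b, size, cudaMemcpyHostToDevice );

    // One block of N threads
    dot<<< 1, N >>>( dev_a, dev_b, dev_c );

    // Copy the single int result back to the host
    cudaMemcpy( &result, dev_c, sizeof( int ), cudaMemcpyDeviceToHost );

    cudaFree( dev_a );
    cudaFree( dev_b );
    cudaFree( dev_c );
    return result;
}
```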

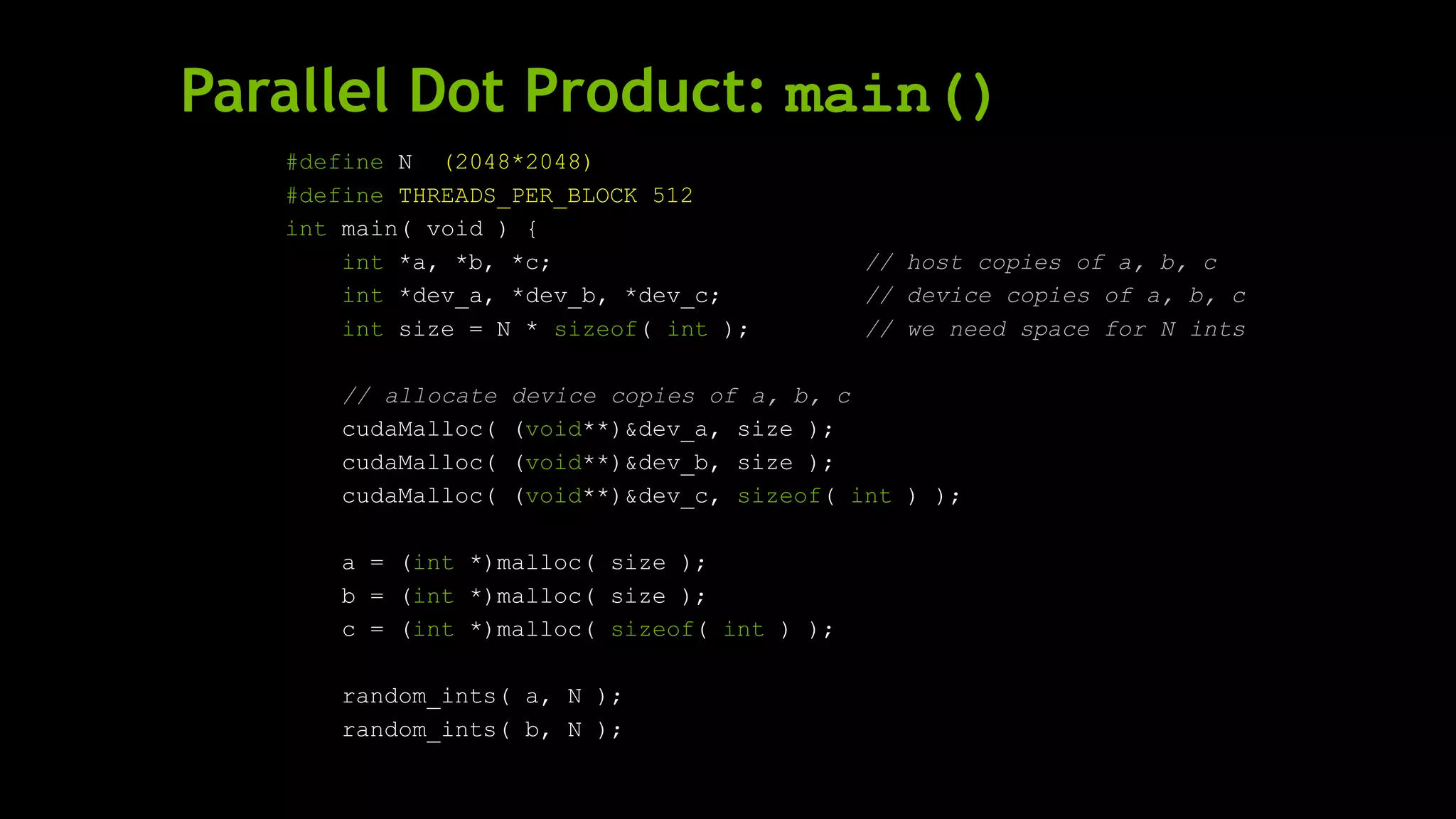

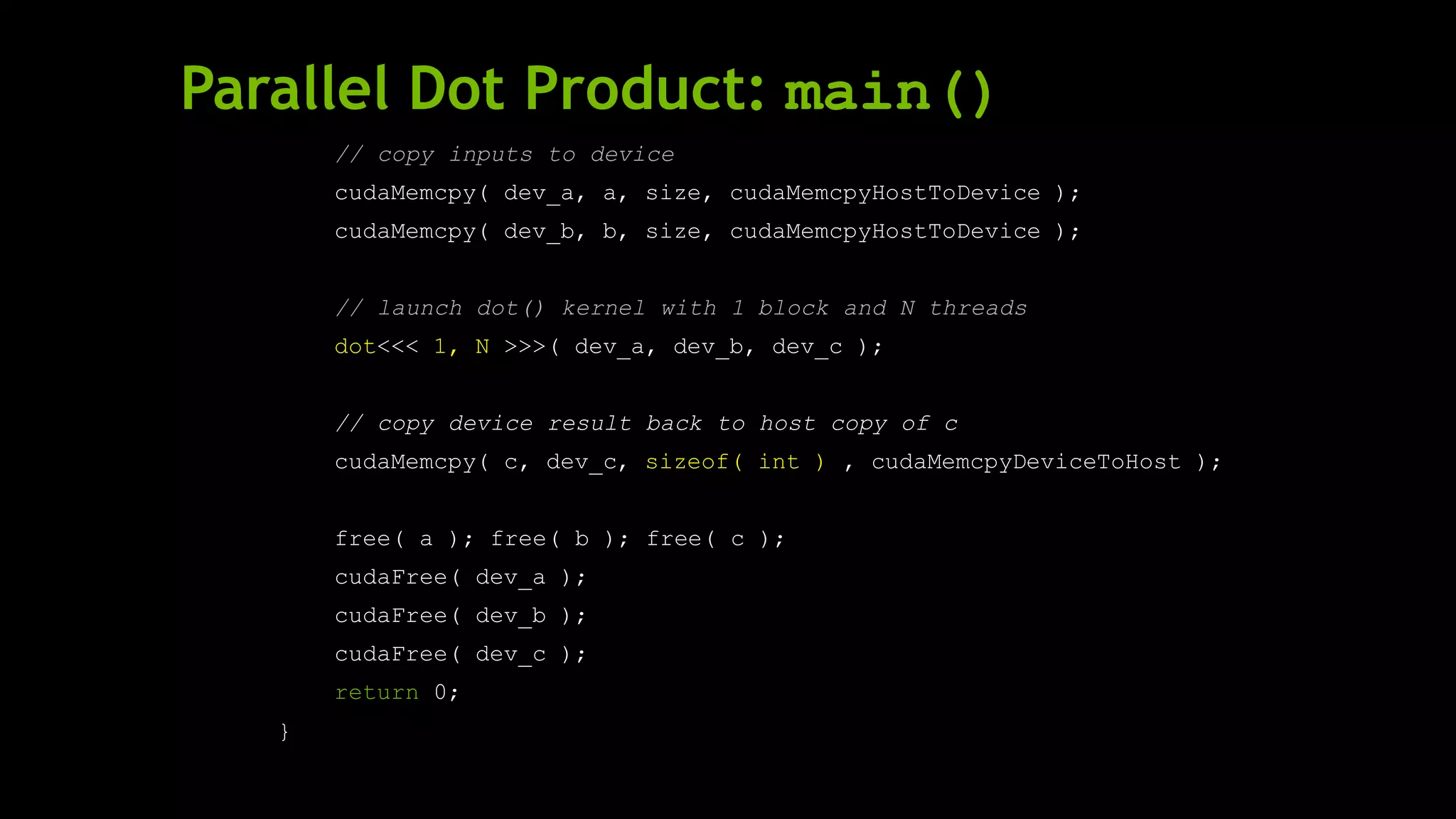

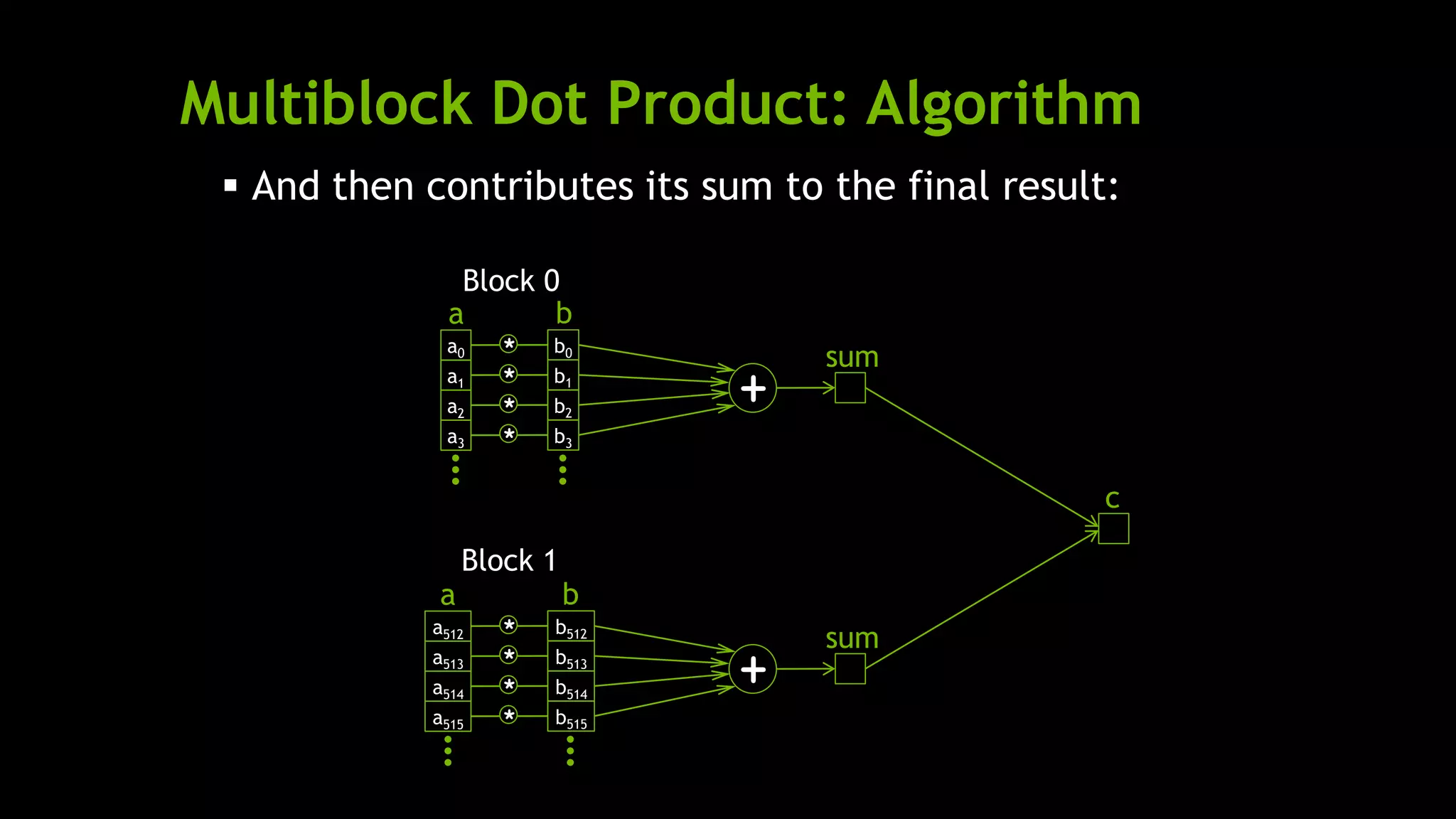

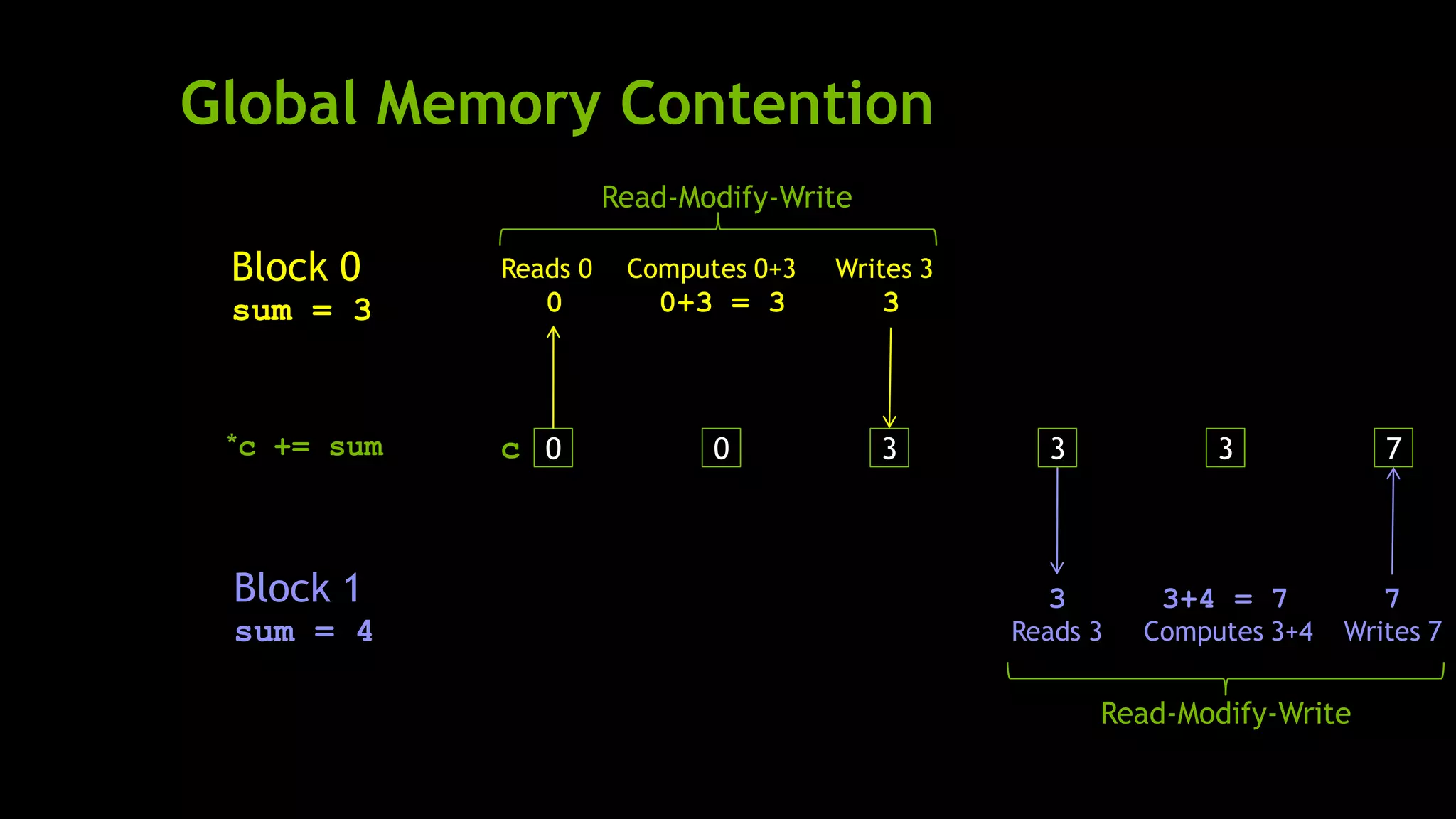

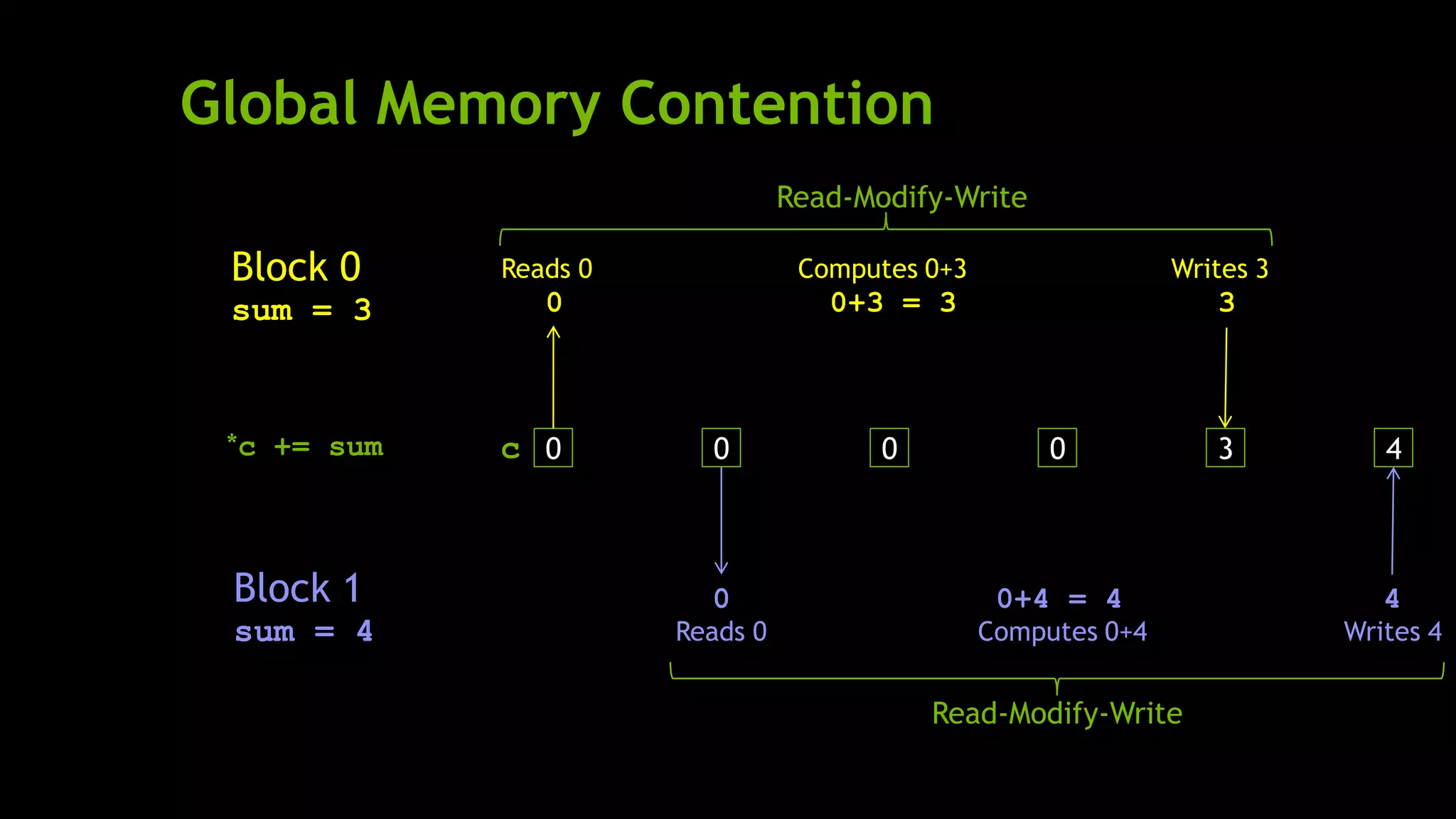

![Multiblock Dot Product: dot()

#define N (2048*2048)

#define THREADS_PER_BLOCK 512

__global__ void dot( int *a, int *b, int *c ) {

__shared__ int temp[THREADS_PER_BLOCK];

int index = threadIdx.x + blockIdx.x * blockDim.x;

temp[threadIdx.x] = a[index] * b[index];

__syncthreads();

if( 0 == threadIdx.x ) {

int sum = 0;

for( int i = 0; i < THREADS_PER_BLOCK; i++ )

sum += temp[i];

}

}

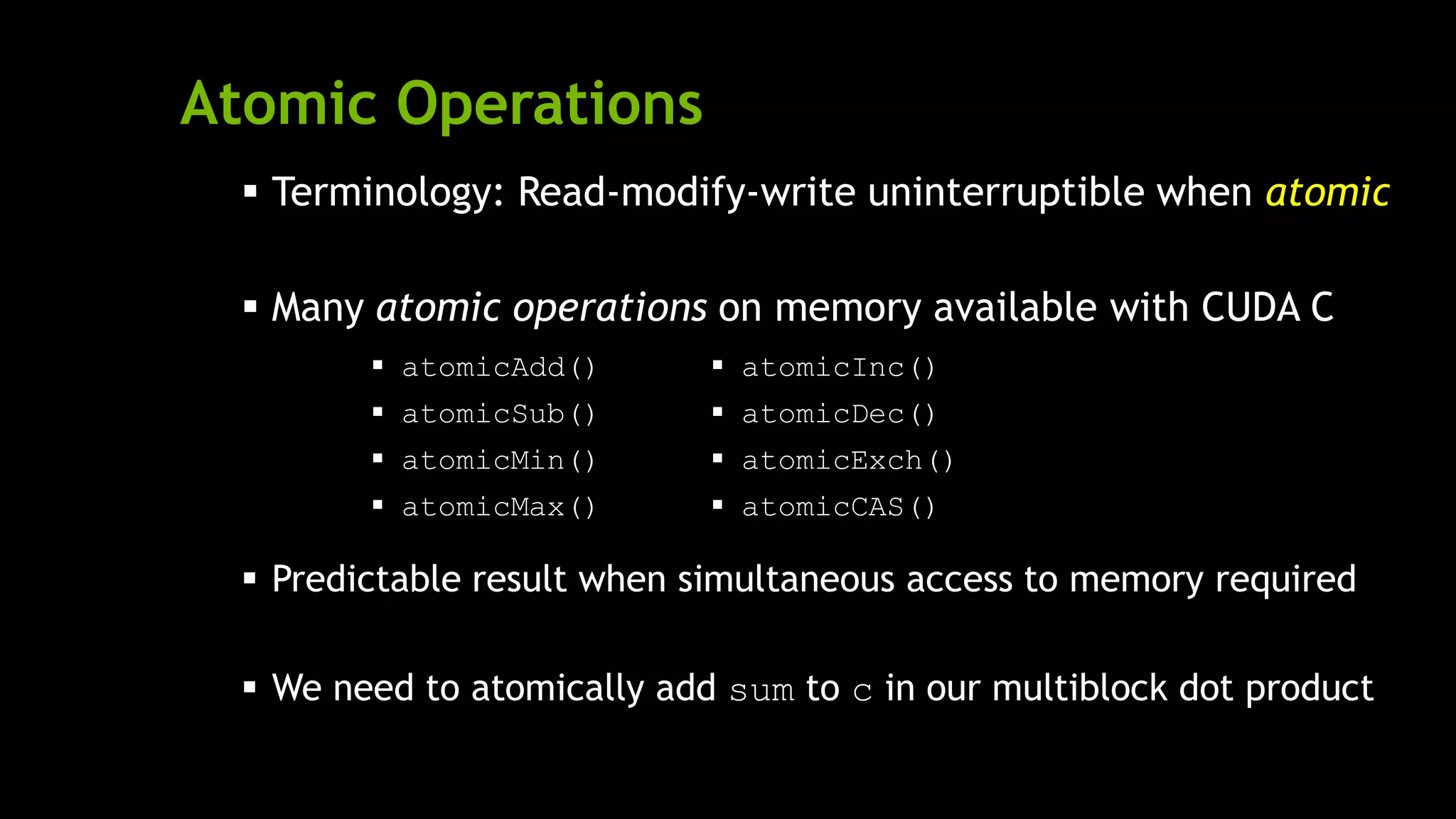

But we have a race condition…

We can fix it with one of CUDA’s atomic operations

*c += sum;atomicAdd( c , sum );](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-52-2048.jpg)

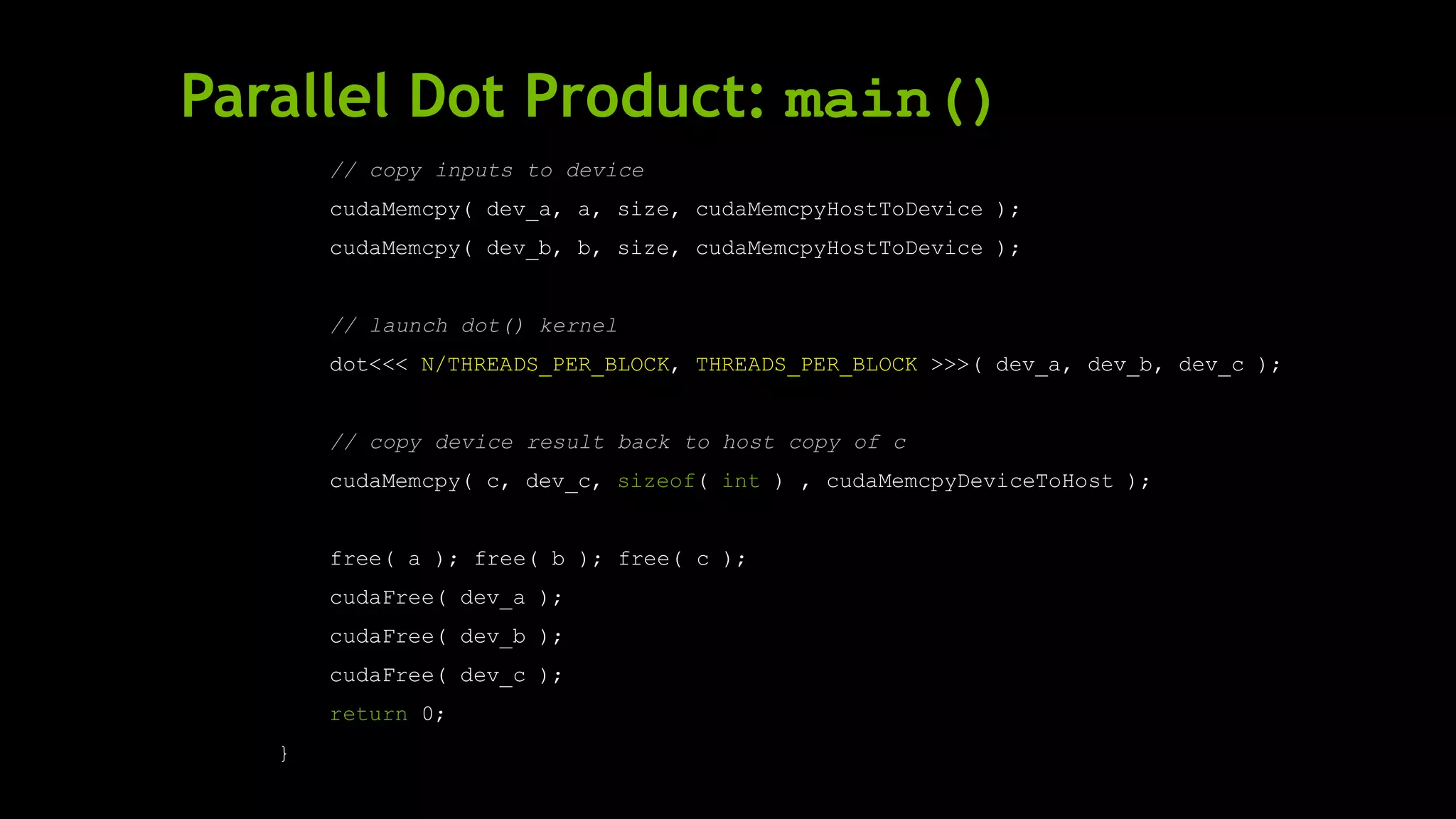

![Multiblock Dot Product: dot()

__global__ void dot( int *a, int *b, int *c ) {

__shared__ int temp[THREADS_PER_BLOCK];

int index = threadIdx.x + blockIdx.x * blockDim.x;

temp[threadIdx.x] = a[index] * b[index];

__syncthreads();

if( 0 == threadIdx.x ) {

int sum = 0;

for( int i = 0; i < THREADS_PER_BLOCK; i++ )

sum += temp[i];

atomicAdd( c , sum );

}

}

Now let’s fix up main() to handle a multiblock dot product](https://image.slidesharecdn.com/nvidia-200907043931/75/Introduction-to-CUDA-C-NVIDIA-Notes-57-2048.jpg)
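
A sketch of the host-side changes a multiblock dot product needs, under two assumptions that are not taken from the slides: the helper name `dot_multiblock`, and the restriction that n is a positive multiple of THREADS_PER_BLOCK. Since every block adds its partial sum into `*c` with `atomicAdd`, the device result has to be zeroed before the launch; `cudaMemset` does that here. Error checking is omitted.

```cuda
#include <cuda_runtime.h>

#define THREADS_PER_BLOCK 512

// Multiblock kernel from the slide: each block accumulates its partial sum.
__global__ void dot( int *a, int *b, int *c ) {
    __shared__ int temp[THREADS_PER_BLOCK];
    int index = threadIdx.x + blockIdx.x * blockDim.x;
    temp[threadIdx.x] = a[index] * b[index];

    __syncthreads();

    if( 0 == threadIdx.x ) {
        int sum = 0;
        for( int i = 0; i < THREADS_PER_BLOCK; i++ )
            sum += temp[i];
        atomicAdd( c , sum );   // safe concurrent update across blocks
    }
}

// Hypothetical host helper (not from the slides). Writes the dot product
// to *result and returns true, or returns false without launching when n
// is not a positive multiple of THREADS_PER_BLOCK.
bool dot_multiblock( const int *a, const int *b, int n, int *result ) {
    if( n <= 0 || n % THREADS_PER_BLOCK != 0 )
        return false;

    int *dev_a, *dev_b, *dev_c;
    size_t size = n * sizeof( int );

    cudaMalloc( (void**)&dev_a, size );
    cudaMalloc( (void**)&dev_b, size );
    cudaMalloc( (void**)&dev_c, sizeof( int ) );

    cudaMemcpy( dev_a, a, size, cudaMemcpyHostToDevice );
    cudaMemcpy( dev_b, b, size, cudaMemcpyHostToDevice );

    // The accumulator must start at zero because blocks add into it
    cudaMemset( dev_c, 0, sizeof( int ) );

    dot<<< n / THREADS_PER_BLOCK, THREADS_PER_BLOCK >>>( dev_a, dev_b, dev_c );

    cudaMemcpy( result, dev_c, sizeof( int ), cudaMemcpyDeviceToHost );

    cudaFree( dev_a );
    cudaFree( dev_b );
    cudaFree( dev_c );
    return true;
}
```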