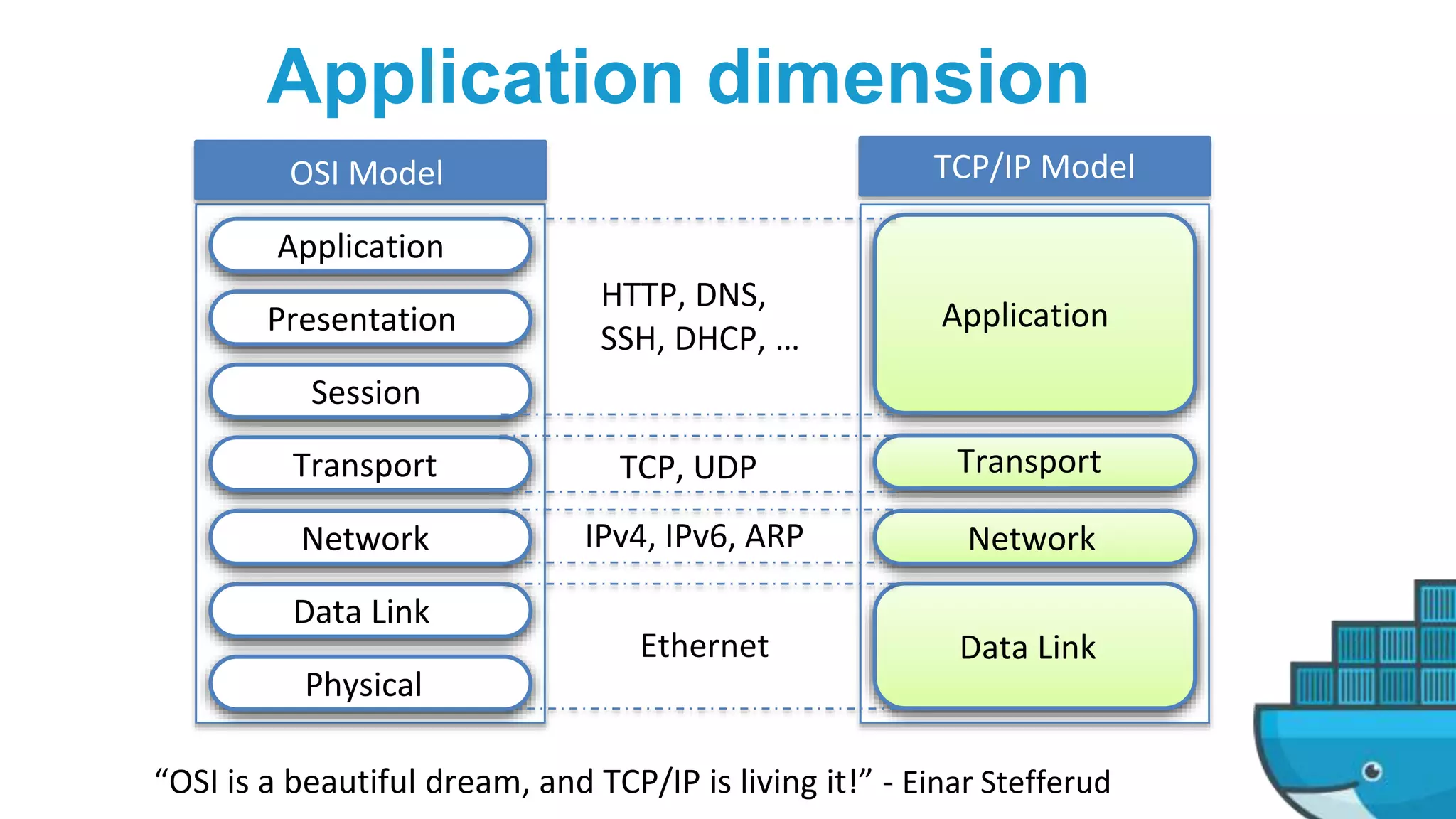

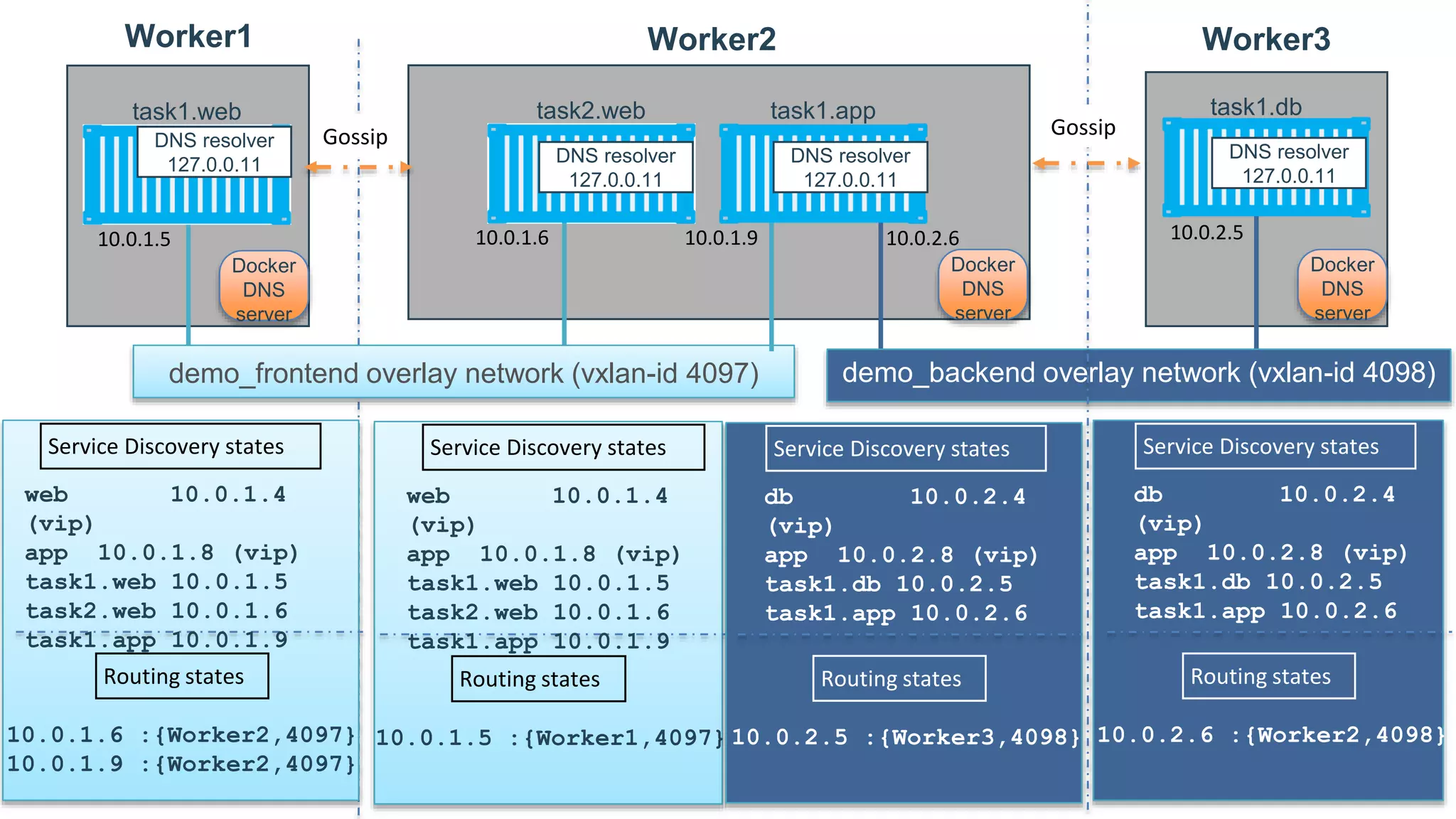

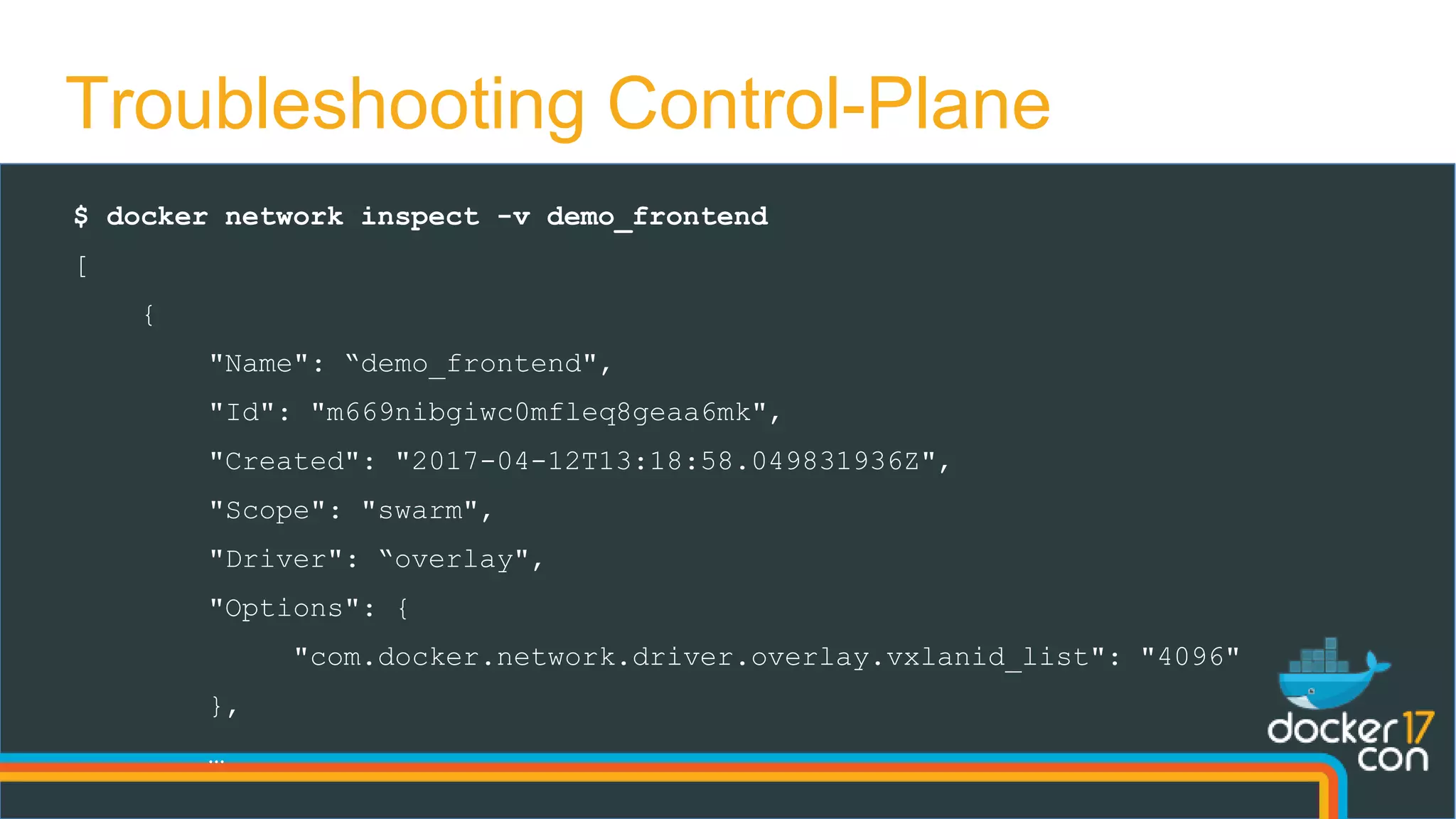

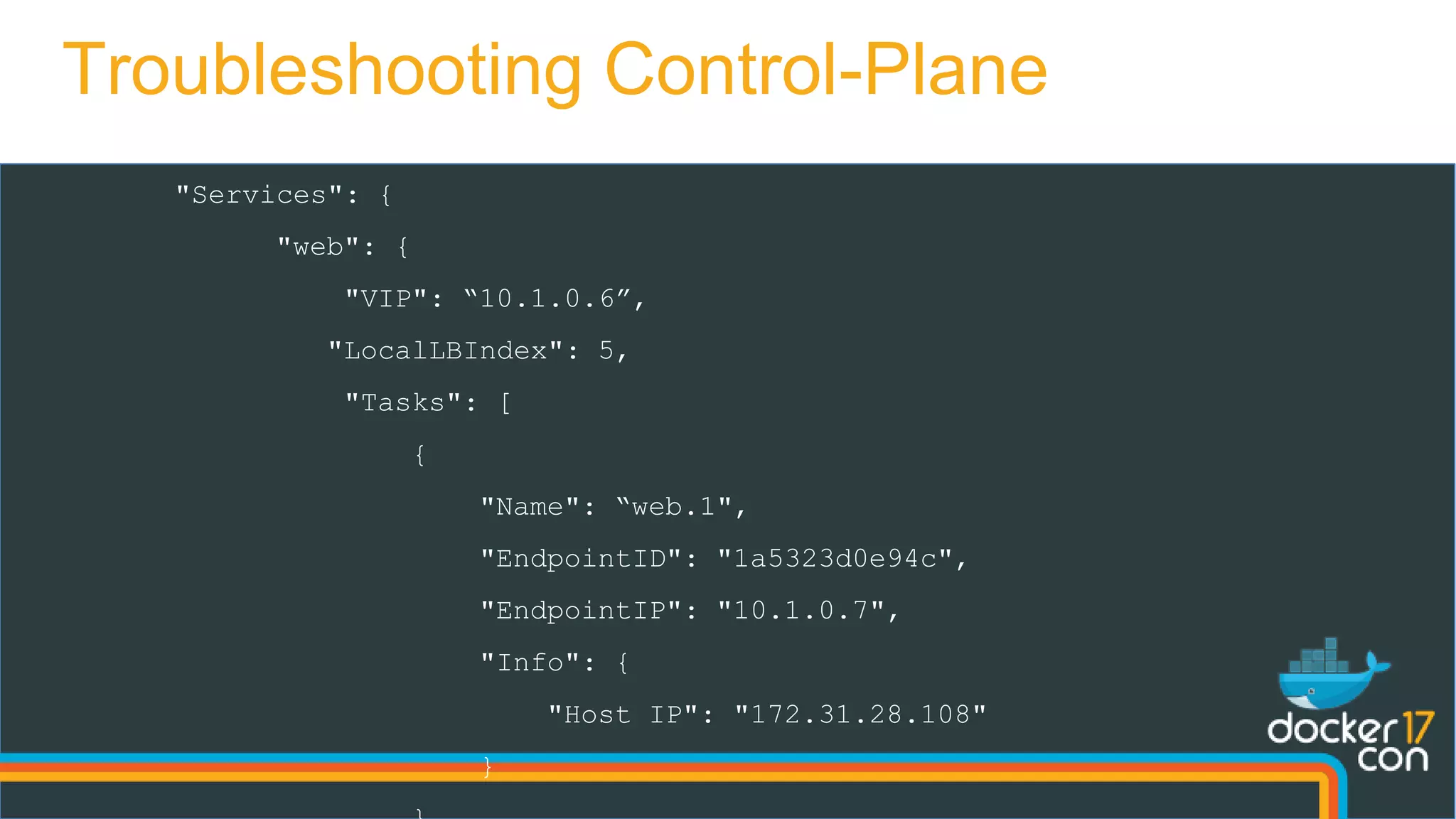

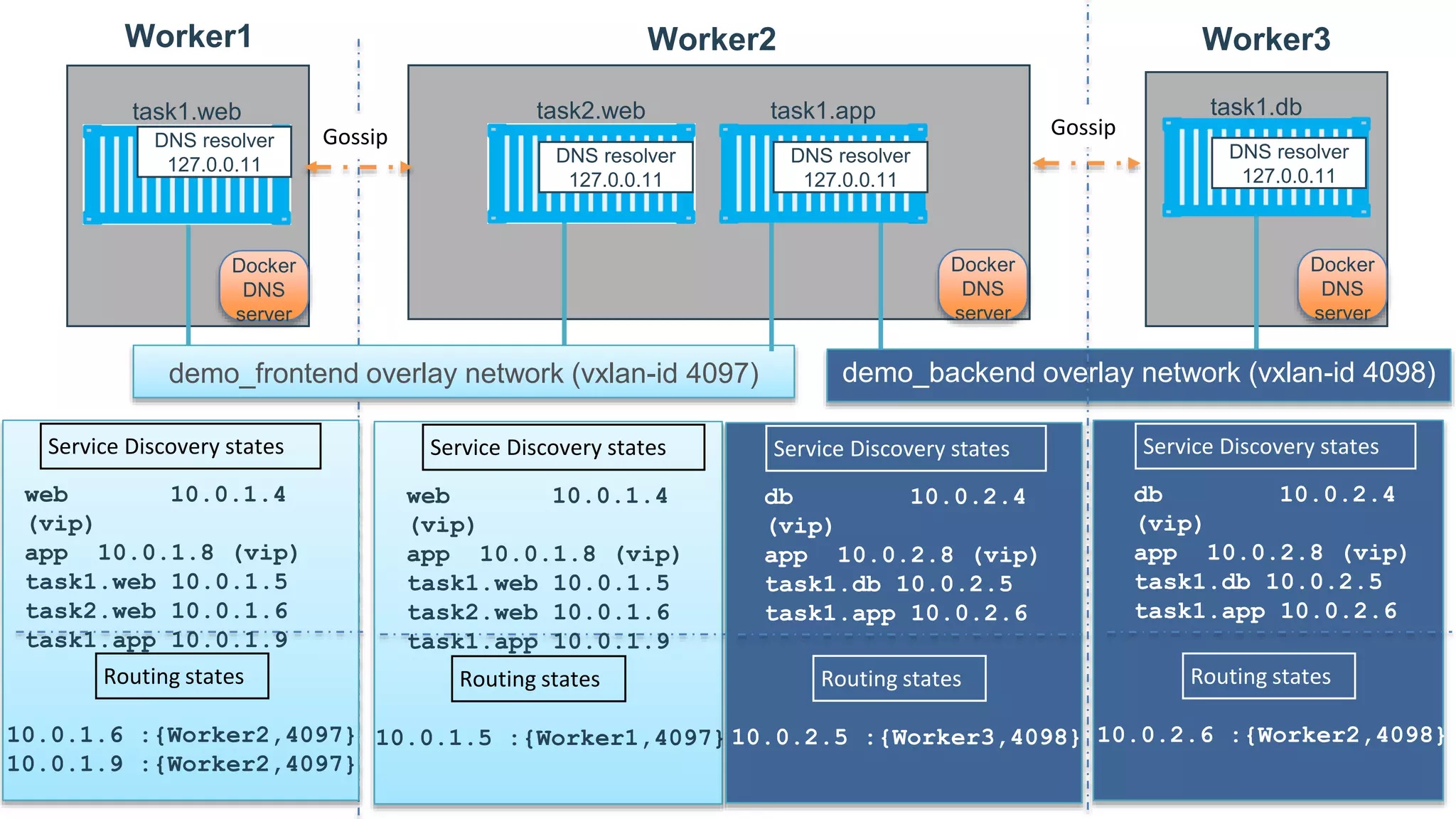

This document explores Docker networking in depth, covering the application and data planes and the layers involved. It describes Docker's networking features, including service discovery and load balancing, and how they are managed through the control and data planes using overlay networks and VXLAN. It also covers troubleshooting techniques and how network resources are allocated in a Docker swarm environment.

![Troubleshooting Control-Plane](https://image.slidesharecdn.com/dockernetworkingdeepdive-dcus17-170418041516/75/DCUS17-Docker-networking-deep-dive-14-2048.jpg)

    …
    "Peers": [
        { "Name": "ip-172-31-28-108", "IP": "172.31.28.108" },
        { "Name": "ip-172-31-46-47", "IP": "172.31.46.47" }
    ]
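When troubleshooting the control plane, one useful check is whether every expected node appears in the `Peers` list shown on the slide. The following is a minimal Python sketch of that check. It assumes the caller already has the network's inspect output as a parsed JSON object with a `Peers` array shaped like the slide's; the helper names `peer_ips` and `missing_peers` and the third IP address are hypothetical, for illustration only.

```python
import json


def peer_ips(network_inspect):
    """Return the set of IP addresses listed under the "Peers" key."""
    return {peer["IP"] for peer in network_inspect.get("Peers", [])}


def missing_peers(network_inspect, expected_ips):
    """Return the expected node IPs that are absent from the peer list."""
    return set(expected_ips) - peer_ips(network_inspect)


# Sample data: the two peers come from the slide.
sample = json.loads("""
{
  "Peers": [
    {"Name": "ip-172-31-28-108", "IP": "172.31.28.108"},
    {"Name": "ip-172-31-46-47", "IP": "172.31.46.47"}
  ]
}
""")

# 172.31.0.99 is a made-up node that is NOT in the peer list.
expected = {"172.31.28.108", "172.31.46.47", "172.31.0.99"}

print(sorted(missing_peers(sample, expected)))  # ['172.31.0.99']
```

A non-empty result would point at a node that has not joined the overlay's peer set, which is a reasonable place to start looking.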

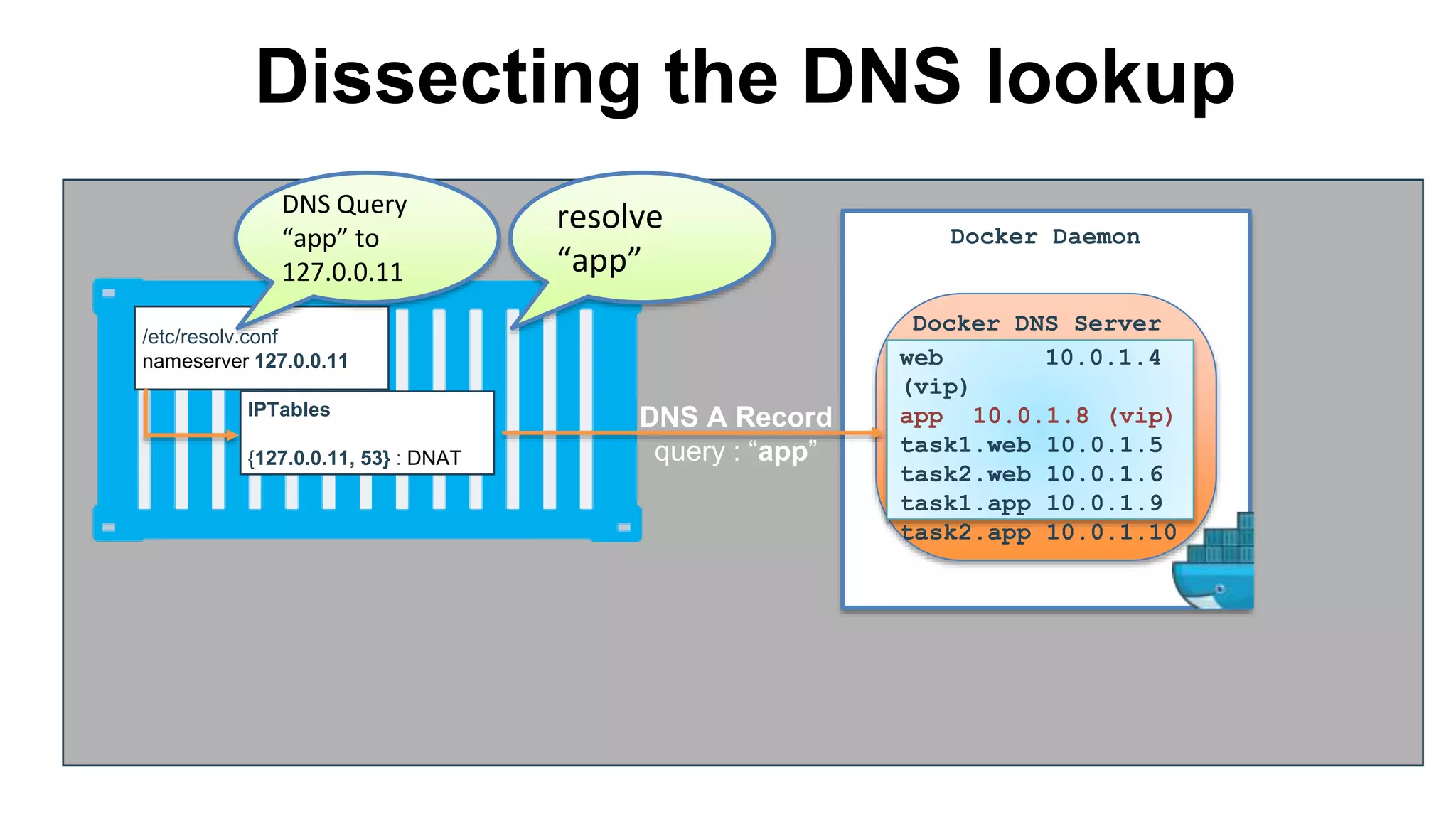

![Dissecting the DNS-rr lookup](https://image.slidesharecdn.com/dockernetworkingdeepdive-dcus17-170418041516/75/DCUS17-Docker-networking-deep-dive-20-2048.jpg)

`docker service create --name=app --endpoint-mode=dnsrr demo/my-app`

- `/etc/resolv.conf` (task1.web): `nameserver 127.0.0.11`
- IPTables: `{127.0.0.11, 53}` : DNAT
- DNS A Record response: "app" : [10.0.1.9, 10.0.1.10]
- Docker DNS Server (Docker Daemon) records:
    - web: 10.0.1.4 (vip)
    - app: 10.0.1.9, 10.0.1.10
    - task1.app: 10.0.1.9
    - task2.app: 10.0.1.10
    - task1.web: 10.0.1.5
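The record table on this slide can be modeled as a small lookup structure. The sketch below is a toy Python model, not Docker's DNS server: the class name `ToyServiceDNS` is hypothetical, and rotating the answer order between queries is an assumption made to illustrate the round-robin idea. The names and addresses are taken from the slide.

```python
from collections import deque


class ToyServiceDNS:
    """Toy name table: each name maps to one or more A-record addresses."""

    def __init__(self):
        self._records = {}

    def add(self, name, addresses):
        self._records[name] = deque(addresses)

    def resolve(self, name):
        """Return all addresses for a name, then rotate for the next query."""
        answers = self._records[name]
        result = list(answers)
        answers.rotate(-1)  # assumed rotation: the next query starts one later
        return result


dns = ToyServiceDNS()
dns.add("web", ["10.0.1.4"])                # VIP mode: one virtual IP
dns.add("app", ["10.0.1.9", "10.0.1.10"])   # DNS-rr mode: the task IPs
dns.add("task1.app", ["10.0.1.9"])
dns.add("task2.app", ["10.0.1.10"])
dns.add("task1.web", ["10.0.1.5"])

print(dns.resolve("app"))  # ['10.0.1.9', '10.0.1.10']
print(dns.resolve("app"))  # ['10.0.1.10', '10.0.1.9']
print(dns.resolve("web"))  # ['10.0.1.4']
```

The contrast worth noticing is that `web` resolves to a single VIP while `app` resolves directly to its task addresses.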

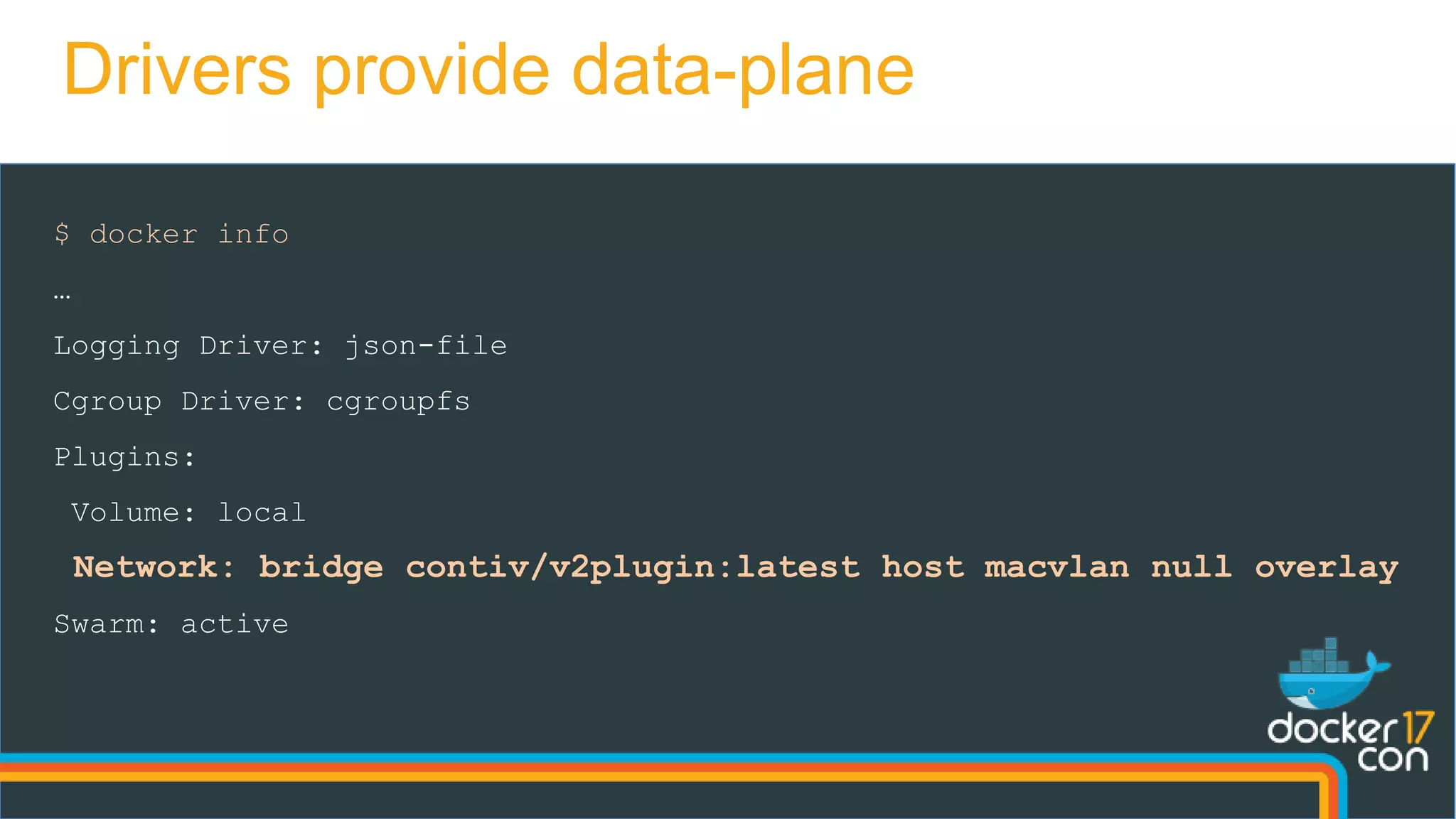

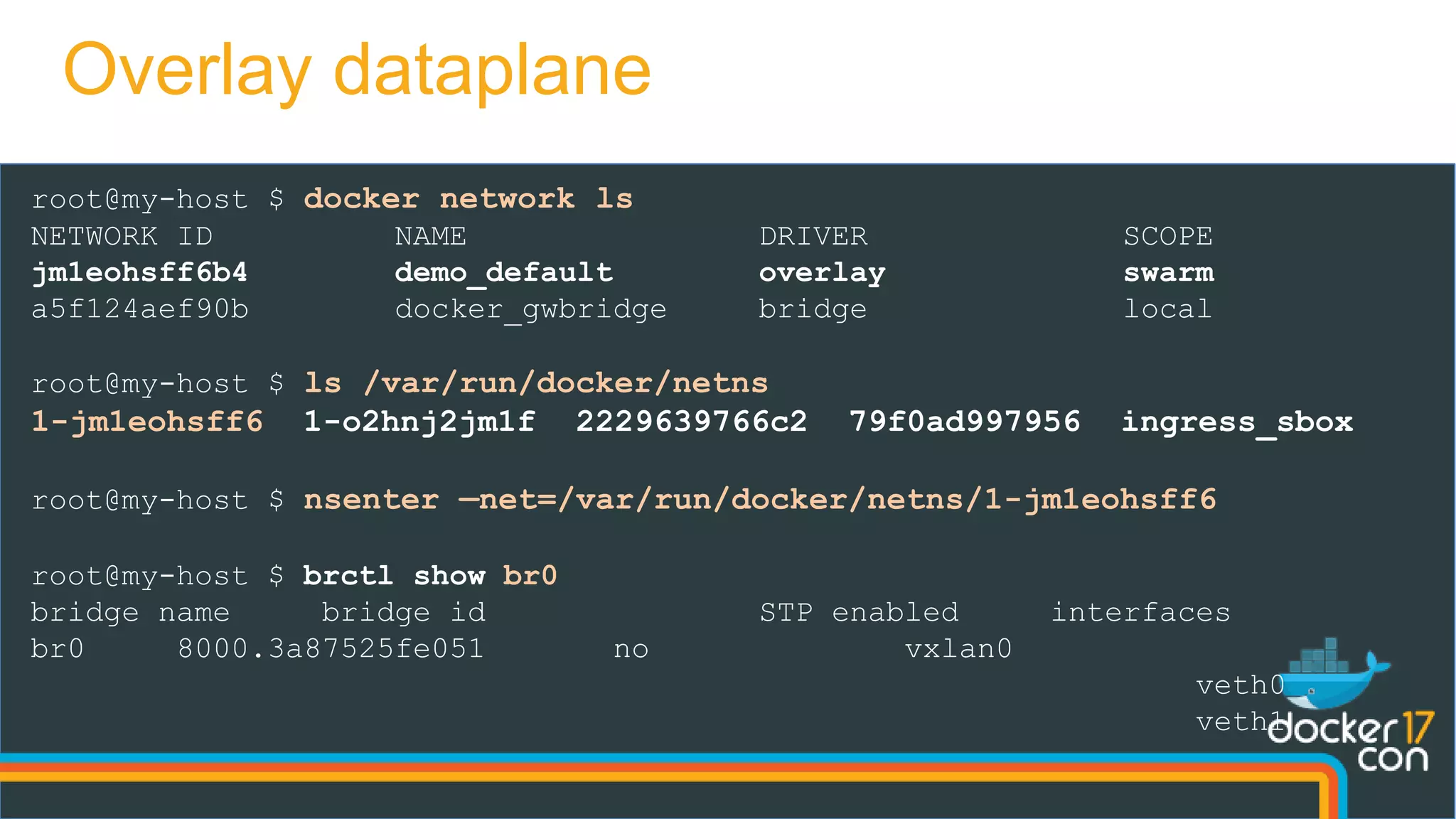

![Client-side Load Balancing](https://image.slidesharecdn.com/dockernetworkingdeepdive-dcus17-170418041516/75/DCUS17-Docker-networking-deep-dive-36-2048.jpg)

    root@my-host $ iptables -nvL -t mangle
    Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)
     pkts bytes target  prot opt in  out  source      destination
        0     0 MARK    all  --  *   *    0.0.0.0/0   10.0.0.7     MARK set 0x101
        0     0 MARK    all  --  *   *    0.0.0.0/0   10.0.0.4     MARK set 0x100

    root@my-host $ ipvsadm -L
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port   Forward Weight ActiveConn InActConn
    FWM  256 rr
      -> 10.0.0.5:0           Masq    1      0          0
      -> 10.0.0.6:0           Masq    1      0          0
    FWM  257 rr
      -> 10.0.0.3:0           Masq    1      0          0

    root@my-host $ conntrack -L
    tcp 6 431997 ESTABLISHED src=10.0.0.8 dst=10.0.0.4 sport=33635 dport=80 src=10.0.0.5 dst=10.0.0.8 sport=80 dport=33635 [ASSURED] mark=0 use=1
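Read together, the three outputs on this slide suggest a two-step path: the mangle rules mark traffic addressed to a VIP (10.0.0.4 gets `0x100`, which is 256 in decimal), and IPVS then maps that firewall mark to a backend using the `rr` scheduler. The following Python sketch is a toy model of that flow under those assumptions; `ToyIPVS` is a hypothetical name, and real IPVS schedules per connection inside the kernel rather than per call.

```python
from itertools import cycle

# VIP -> firewall mark, as in the mangle OUTPUT chain on the slide.
FWMARK_BY_VIP = {"10.0.0.4": 0x100, "10.0.0.7": 0x101}

# Firewall mark -> backends, as in the ipvsadm output (FWM 256 and FWM 257).
BACKENDS_BY_FWMARK = {256: ["10.0.0.5", "10.0.0.6"], 257: ["10.0.0.3"]}


class ToyIPVS:
    """Toy model: mark traffic by destination VIP, then round-robin by mark."""

    def __init__(self, fwmark_by_vip, backends_by_fwmark):
        self._fwmark_by_vip = dict(fwmark_by_vip)
        # One independent round-robin iterator per firewall mark.
        self._schedulers = {
            mark: cycle(backends) for mark, backends in backends_by_fwmark.items()
        }

    def route(self, dst_ip):
        """Return the backend chosen for a new connection to dst_ip."""
        mark = self._fwmark_by_vip.get(dst_ip)
        if mark is None:
            return dst_ip  # not a VIP: deliver to the address unchanged
        return next(self._schedulers[mark])


lb = ToyIPVS(FWMARK_BY_VIP, BACKENDS_BY_FWMARK)
print([lb.route("10.0.0.4") for _ in range(3)])
# ['10.0.0.5', '10.0.0.6', '10.0.0.5']
print(lb.route("10.0.0.7"))  # 10.0.0.3
```

This matches the conntrack entry on the slide, where a connection from 10.0.0.8 to the VIP 10.0.0.4 receives its replies from the backend 10.0.0.5.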

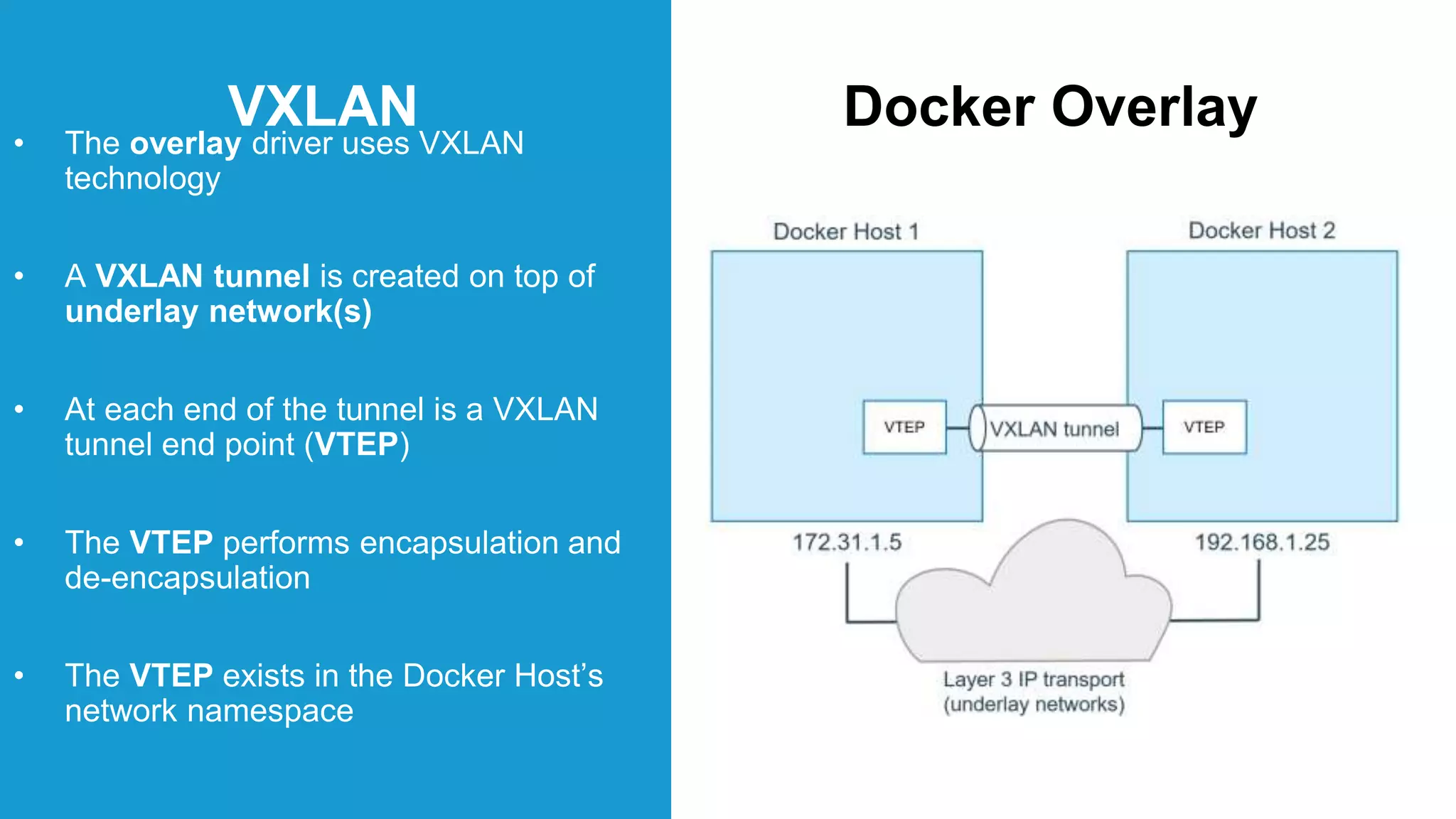

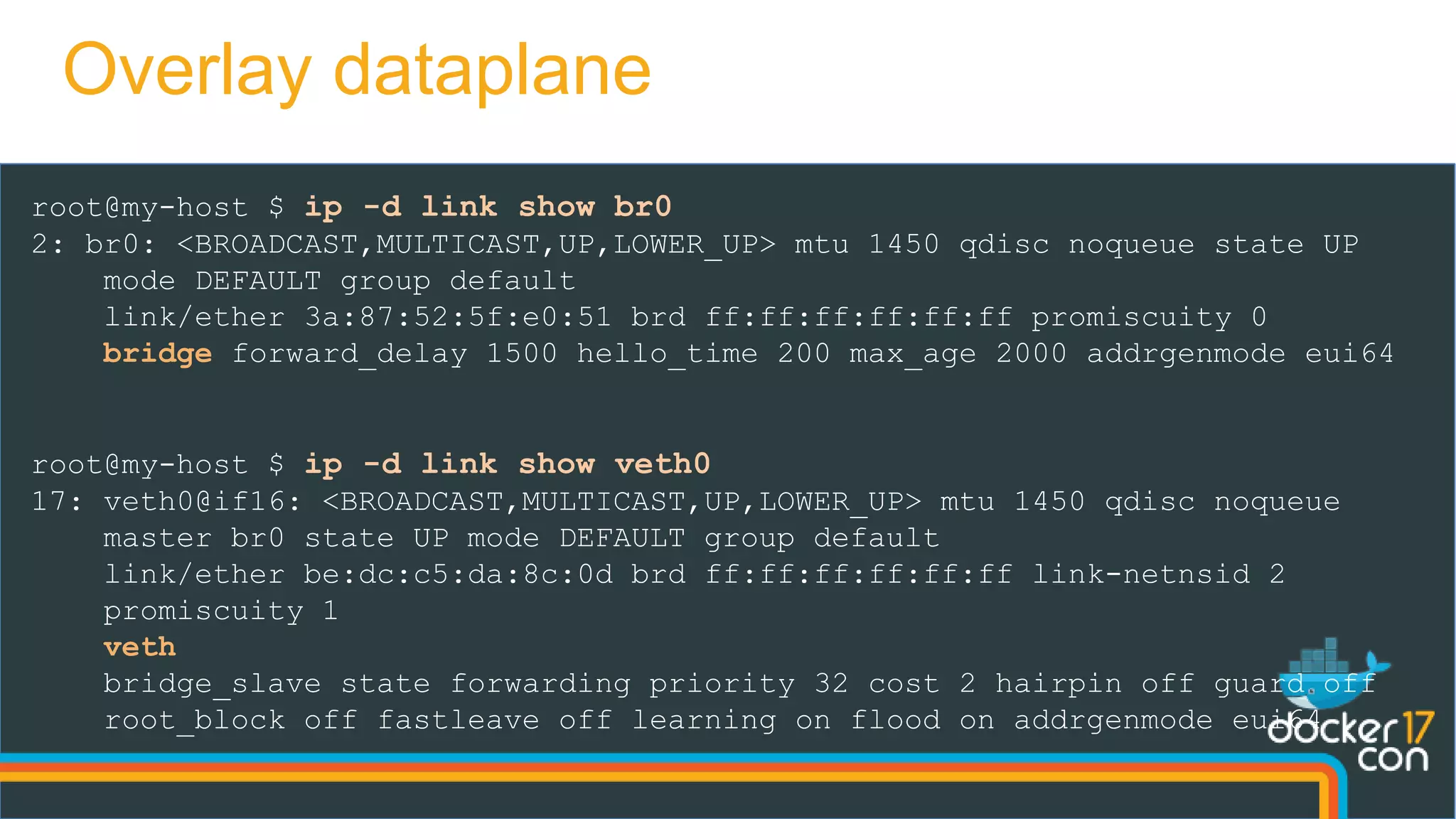

![Client-side DNS-rr Load Balancing](https://image.slidesharecdn.com/dockernetworkingdeepdive-dcus17-170418041516/75/DCUS17-Docker-networking-deep-dive-37-2048.jpg)

`docker service create --name=app --endpoint-mode=dnsrr demo/my-app`

- `/etc/resolv.conf` (task1.web): `nameserver 127.0.0.11`
- DNS A Record response: "app" : [10.0.1.9, 10.0.1.10]
- Docker DNS Server (Docker Daemon) records:
    - web: 10.0.1.4 (vip)
    - app: 10.0.1.9, 10.0.1.10
    - task1.app: 10.0.1.9
    - task2.app: 10.0.1.10
    - task1.web: 10.0.1.5
- app : [10.0.1.9, 10.0.1.10]
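With DNS-rr, the client receives every task address and has to choose one itself. Below is a minimal Python sketch of that client-side choice. The function names, the injected `resolver` parameter, and the random selection policy are all assumptions for illustration; `fake_resolver` is a hypothetical stand-in that returns the addresses from the slide so the example runs outside a swarm.

```python
import random
import socket


def resolve_all(name):
    """Return all IPv4 addresses the system resolver reports for a name."""
    _, _, addresses = socket.gethostbyname_ex(name)
    return addresses


def pick_backend(name, resolver=resolve_all, rng=random):
    """Resolve a service name and pick one of the returned addresses."""
    addresses = resolver(name)
    if not addresses:
        raise LookupError(f"no addresses returned for {name!r}")
    return rng.choice(addresses)


def fake_resolver(name):
    """Hypothetical stand-in for the DNS answer shown on the slide."""
    return {"app": ["10.0.1.9", "10.0.1.10"]}.get(name, [])


print(pick_backend("app", resolver=fake_resolver))
```

Inside a container attached to the service's network, calling `pick_backend("app")` with the default resolver would query the nameserver from `/etc/resolv.conf` instead of the stand-in.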