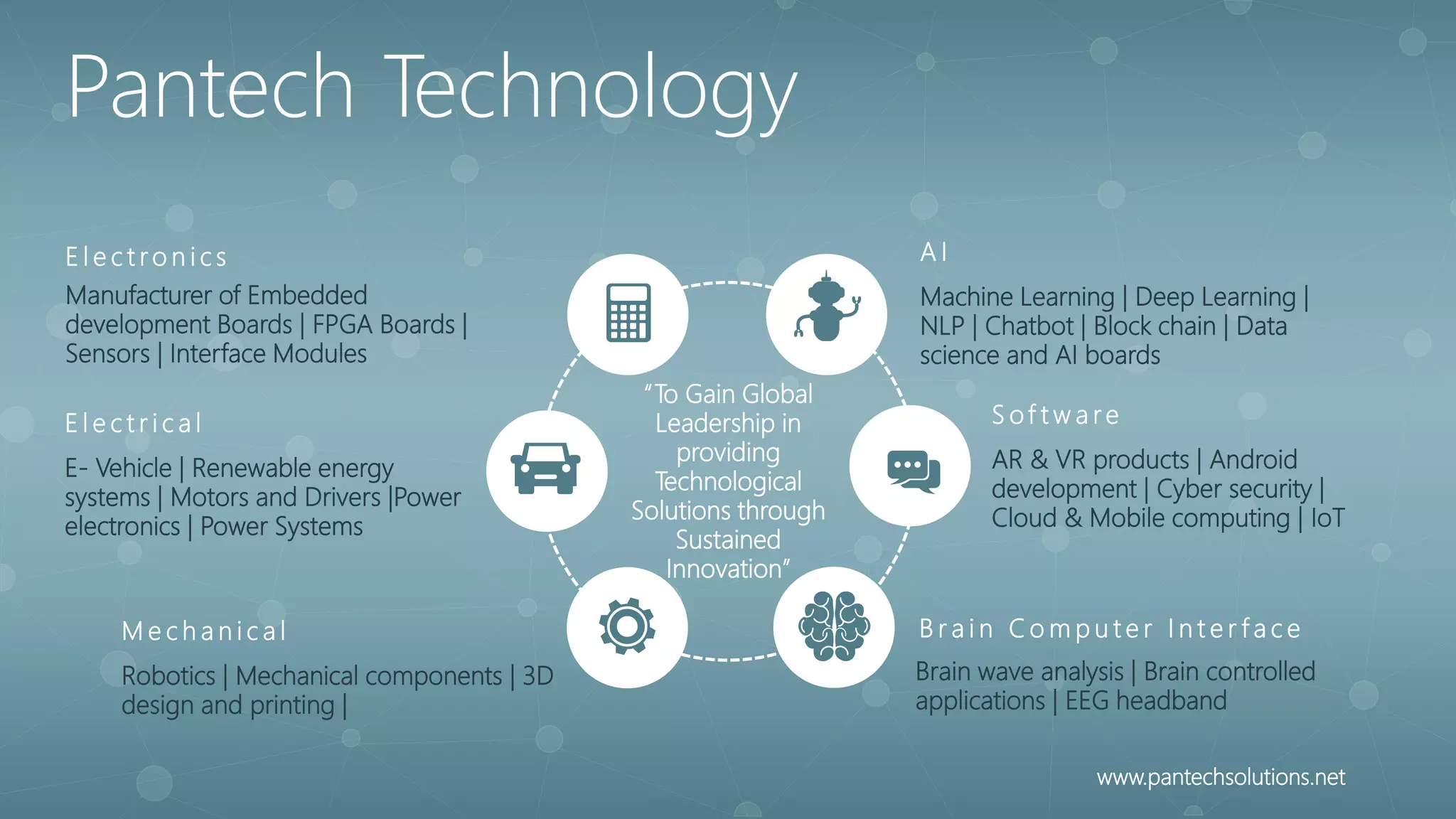

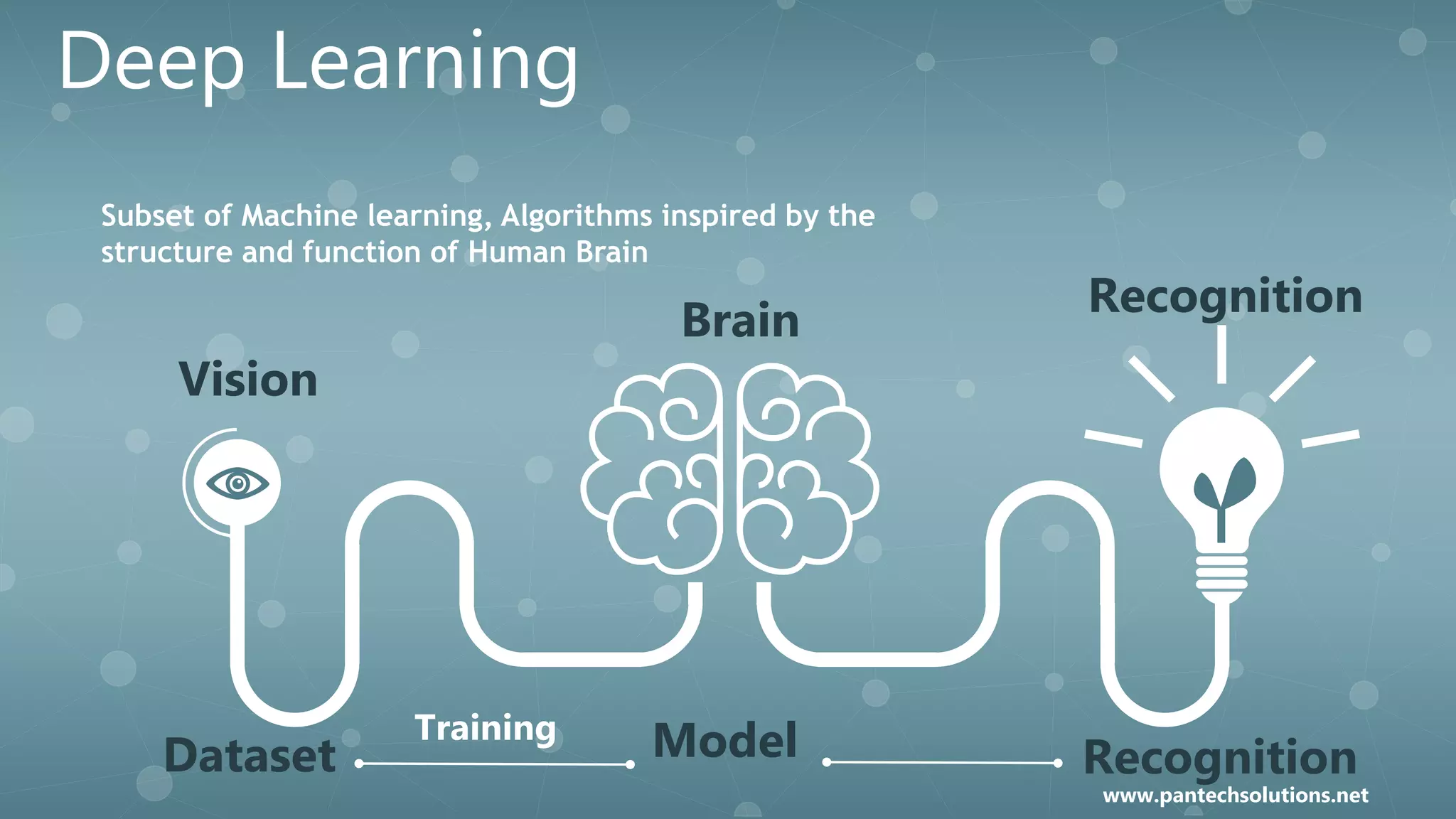

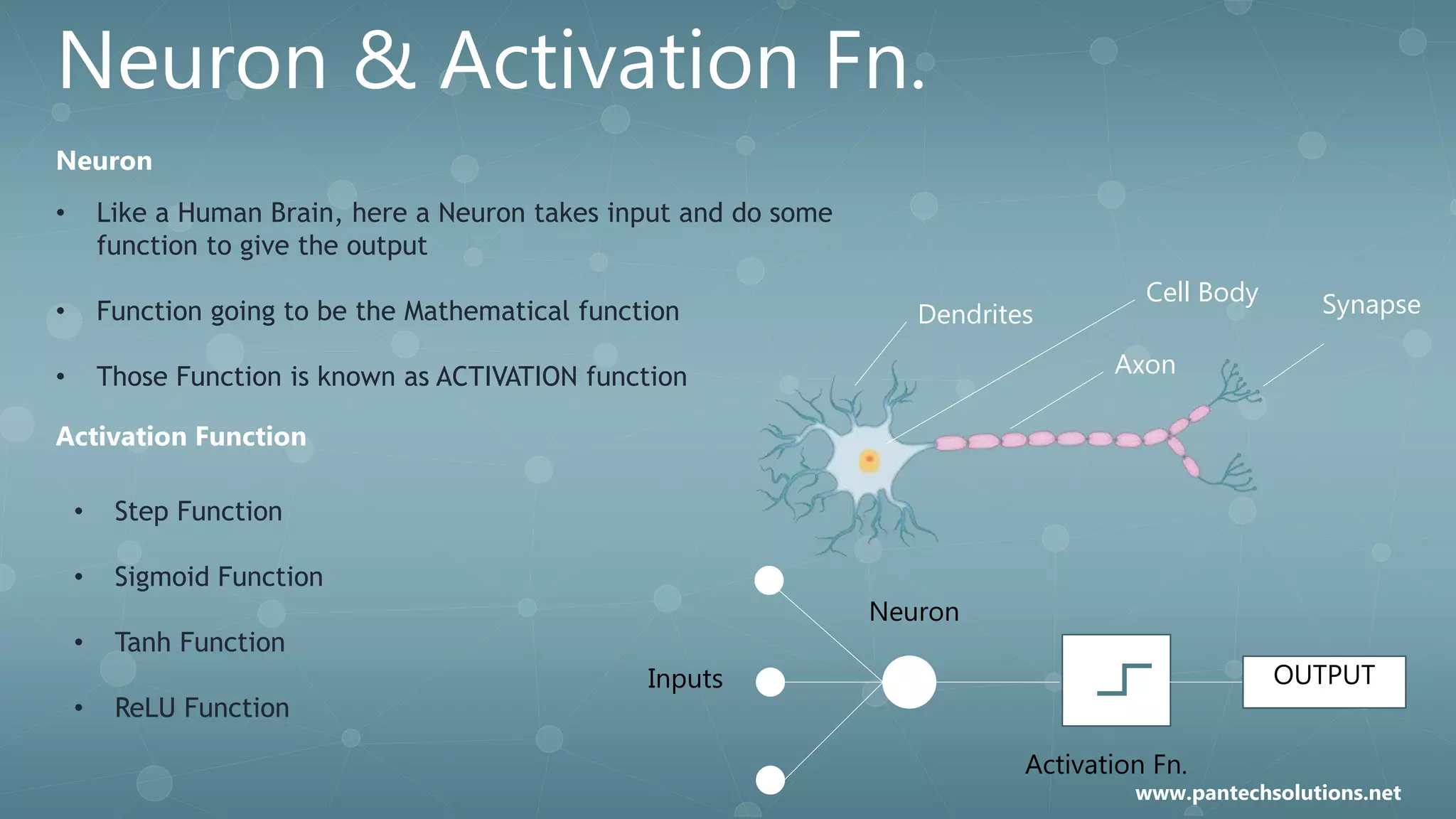

This document describes "Development of Deep Learning Architecture", an event organized by Pantech Solutions and The Institution of Electronics and Telecommunication Engineers (IETE). The agenda includes general talks on AI, deep learning libraries, deep learning algorithms such as ANN, RNN, and CNN, and demonstrations of character recognition and emotion recognition. The slides introduce the two organizers and then cover neural networks, activation functions, common deep learning libraries, algorithms, and applications.

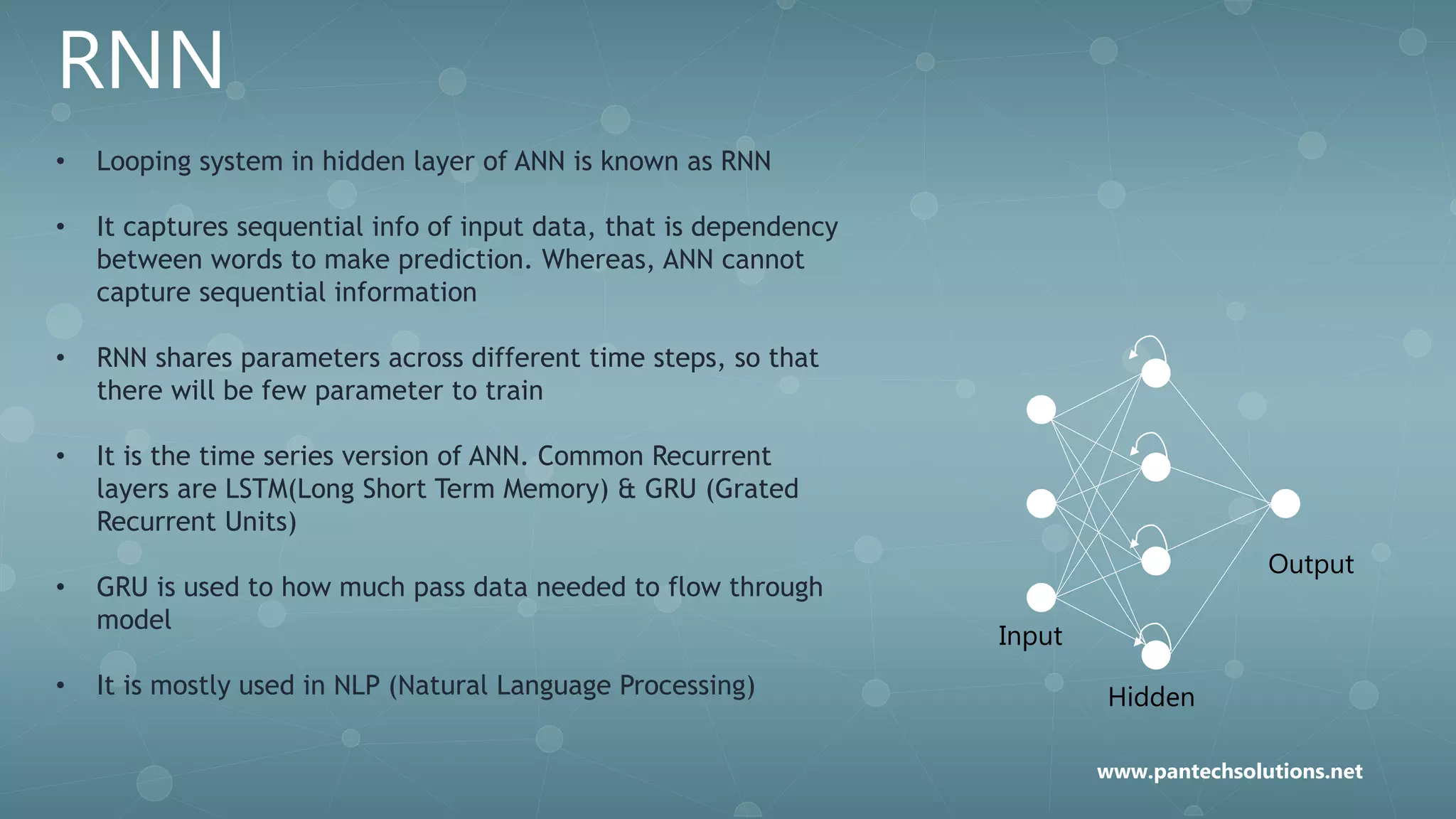

![Activation Function

STEP Function

• If the value of X is greater than or equal to 0, the output is 1; if the value of X is less than 0, the output is 0.

• A neural network uses backpropagation and gradient descent to calculate the weights of its layers.

• The step function is not differentiable at zero and its derivative is zero everywhere else, so gradient descent cannot use it to update the weights.

SIGMOID Function

• As X approaches infinity, the output approaches 1; as X approaches negative infinity, the output approaches 0.

• It captures non-linearity in the data.

• It is differentiable, so gradient descent and backpropagation can be used to calculate the weights.

• Output range: (0, 1)

Image source: Towards Data Science

www.pantechsolutions.net](https://image.slidesharecdn.com/pantechdeeplearning-200525045728/75/Development-of-Deep-Learning-Architecture-14-2048.jpg)
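The contrast between the step and sigmoid functions can be checked numerically. The following is a minimal sketch in plain Python, not code from the slides; the helper names `step`, `sigmoid`, `sigmoid_grad`, and `numeric_grad` are chosen here for illustration.

```python
import math


def step(x: float) -> float:
    """Step activation: 1 if x >= 0, otherwise 0."""
    return 1.0 if x >= 0 else 0.0


def sigmoid(x: float) -> float:
    """Sigmoid activation, written in a form that avoids overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """Analytic derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def numeric_grad(f, x: float, eps: float = 1e-6) -> float:
    """Central finite-difference estimate of df/dx (illustrative helper)."""
    return (f(x + eps) - f(x - eps)) / (2.0 * eps)


# Away from x = 0 the step function is flat, so its gradient carries no signal.
step_slope = numeric_grad(step, 2.0)

# The sigmoid has a non-zero gradient, which gradient descent can follow.
sigmoid_slope = sigmoid_grad(0.0)
```

Because `step_slope` is zero for any input away from the jump, a weight update of the form `w -= lr * gradient` would leave the weights unchanged, which is the limitation the slide describes.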

![Activation Function

Tanh Function

• A rescaled version of the sigmoid function

• Output range: (-1, 1)

• Faster learning requires larger gradients. When the data is centred around 0, the derivatives of tanh are larger than those of the sigmoid.

ReLU Function

• Rectified Linear Unit. For any negative input it returns 0; otherwise it returns the input unchanged.

Leaky ReLU Function

• Same as ReLU for positive inputs, which are returned unchanged. For negative inputs, instead of returning zero, it applies a small constant slope.

](https://image.slidesharecdn.com/pantechdeeplearning-200525045728/75/Development-of-Deep-Learning-Architecture-15-2048.jpg)
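The three functions on this slide are short enough to write out directly. This is an illustrative sketch in plain Python rather than code from the deck: the function names are chosen here, and the leaky slope `alpha=0.01` is an assumed value, since the slide only says the slope is constant.

```python
import math


def sigmoid(x: float) -> float:
    """Sigmoid activation (fine for moderate x; large negative x would need a guarded form)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh_via_sigmoid(x: float) -> float:
    """Tanh expressed as a rescaled sigmoid: tanh(x) = 2 * sigmoid(2x) - 1."""
    return 2.0 * sigmoid(2.0 * x) - 1.0


def relu(x: float) -> float:
    """ReLU: 0 for negative input, the input itself otherwise."""
    return x if x > 0 else 0.0


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: identity for positive input, slope alpha for negative input.

    alpha=0.01 is an assumption for this sketch, not a value from the slides.
    """
    return x if x > 0 else alpha * x


# Derivatives at 0, where centred data tends to sit:
tanh_grad_at_zero = 1.0 - math.tanh(0.0) ** 2           # derivative of tanh is 1 - tanh(x)^2
sigmoid_grad_at_zero = sigmoid(0.0) * (1.0 - sigmoid(0.0))  # derivative of sigmoid is s(1 - s)
```

Comparing `tanh_grad_at_zero` with `sigmoid_grad_at_zero` gives one concrete reading of the slide's remark that derivatives are higher for data centred around 0.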

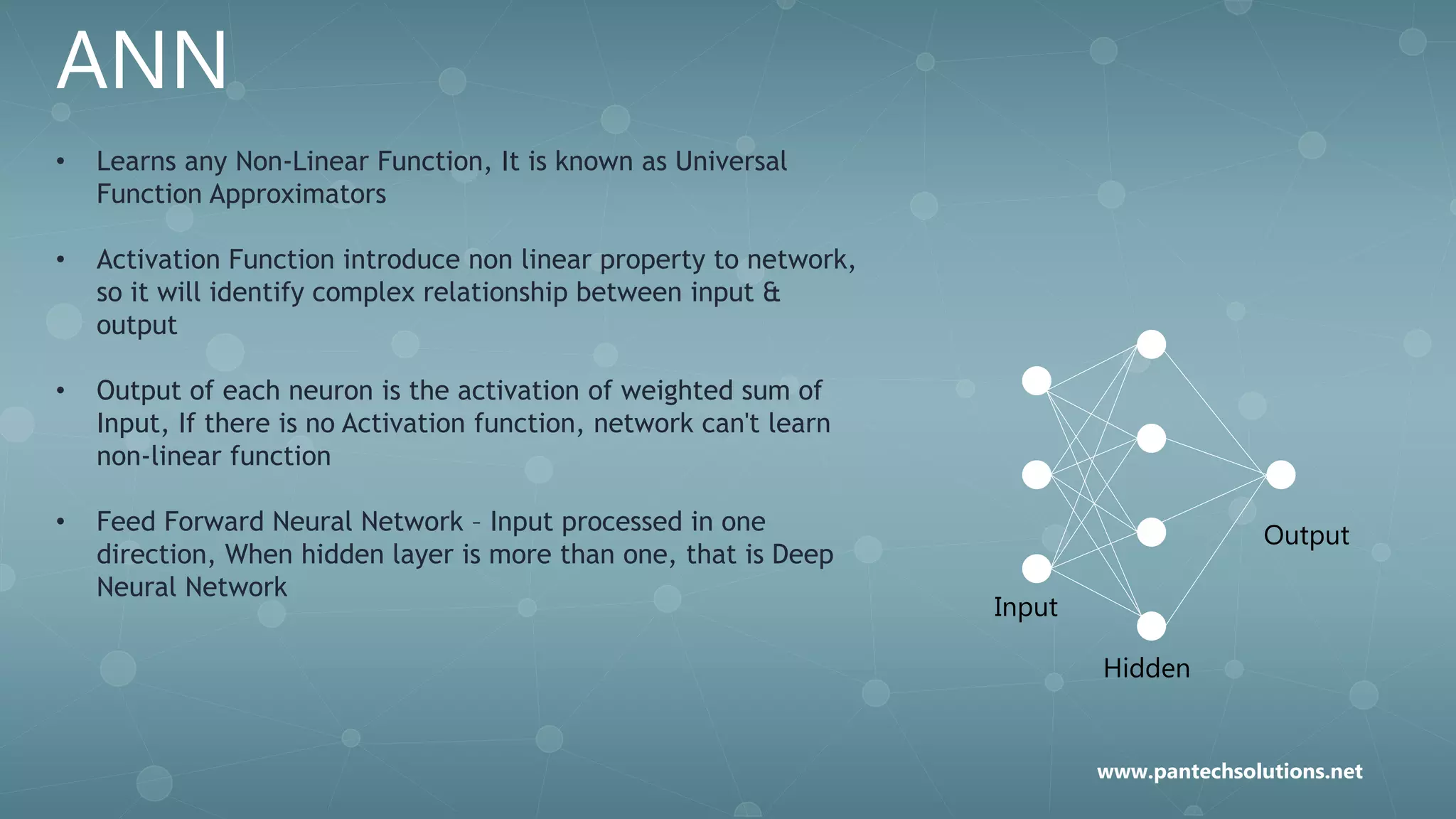

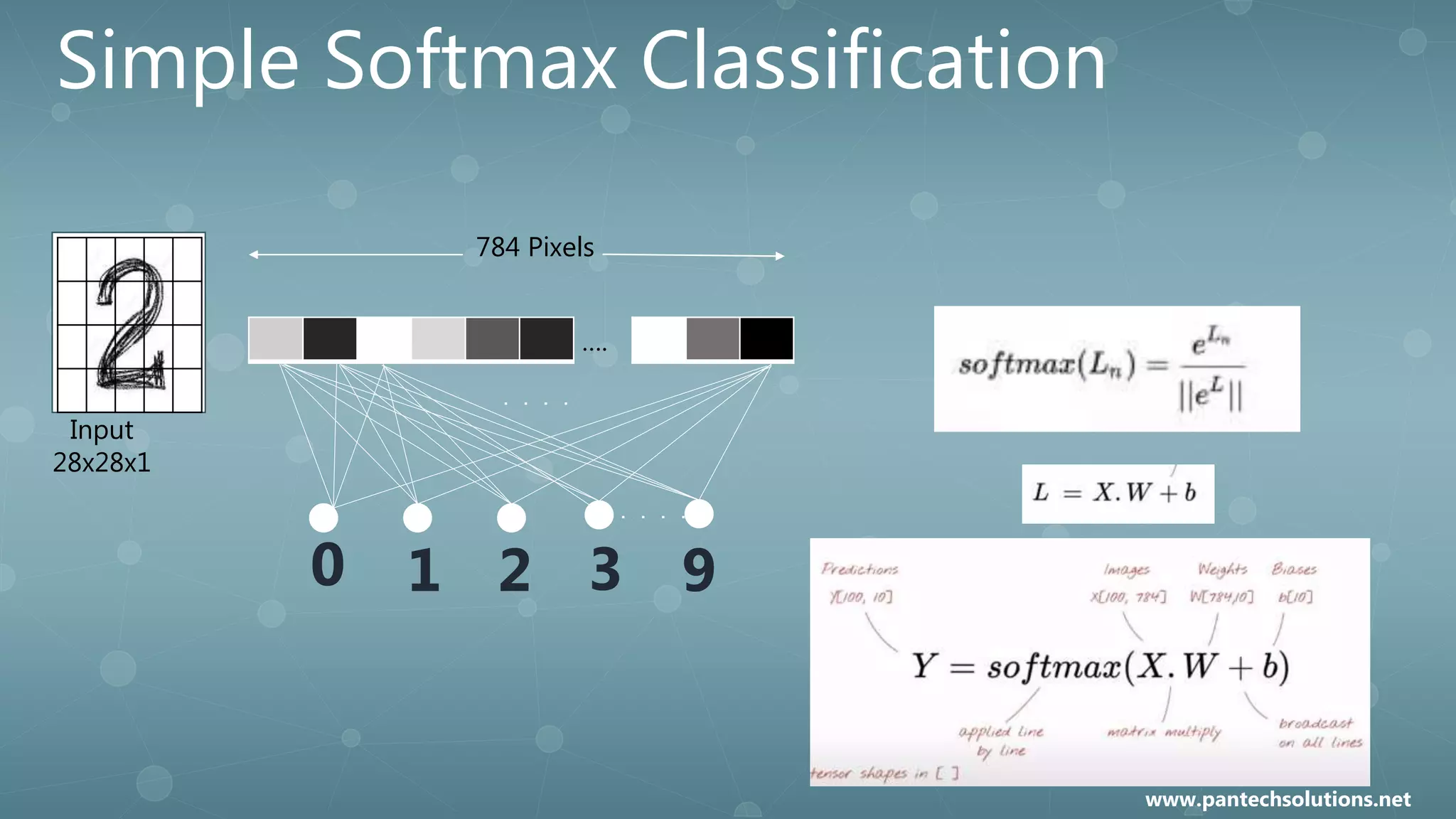

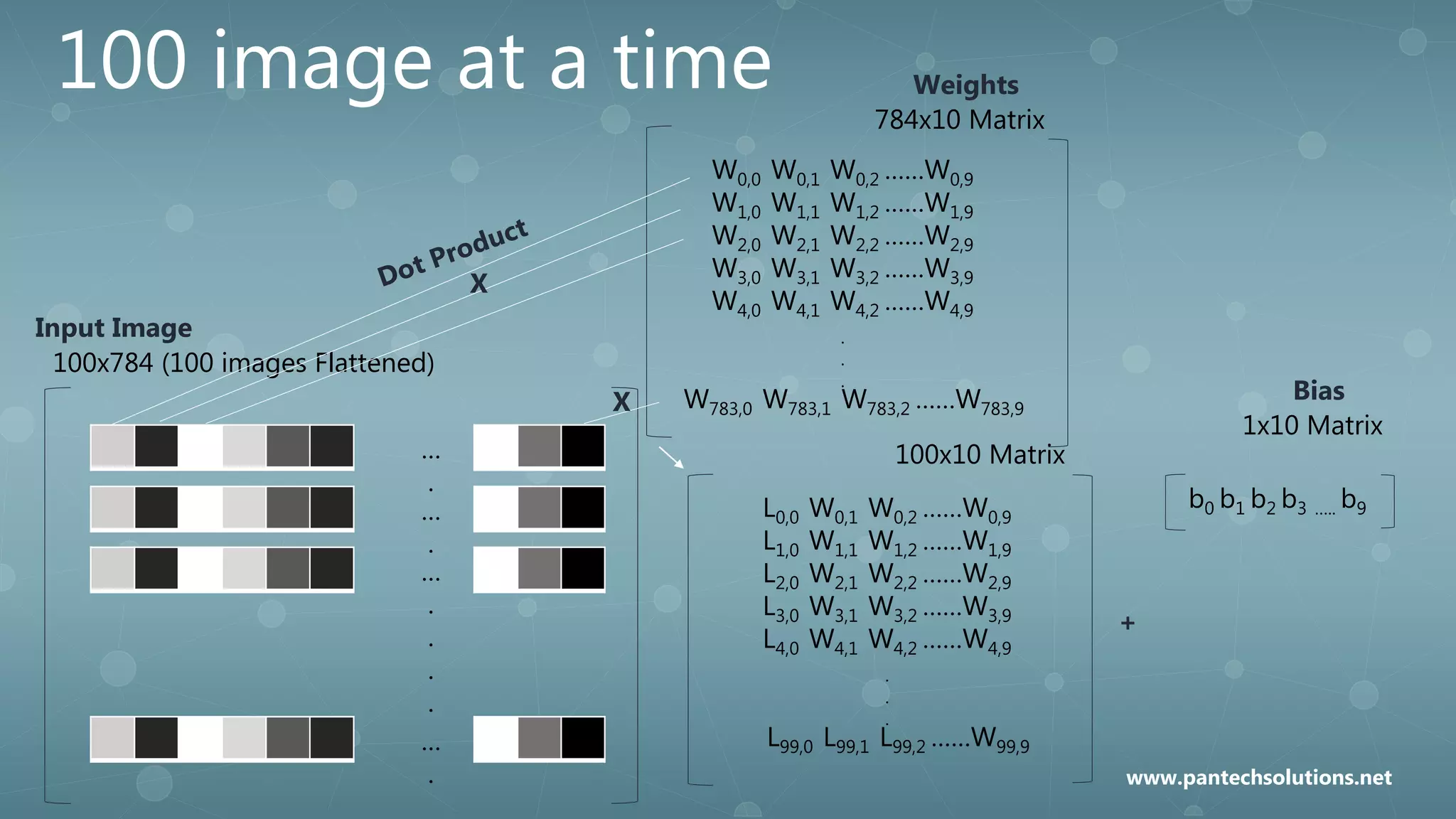

![In TensorFlow

Y = tf.nn.softmax(tf.matmul(X, W) + b)

X: [100 x 784]

W: [784 x 10]

b: [10]

Y: [100 x 10]

Cross Entropy

Class: 0 1 2 3 4 5 6 7 8 9

Actual Probability: 0 0 1 0 0 0 0 0 0 0

Computed Probability: 0.2 0.9 0.5 0.3 0.1 0.2 0.1 0.3 0.1 0.1

](https://image.slidesharecdn.com/pantechdeeplearning-200525045728/75/Development-of-Deep-Learning-Architecture-25-2048.jpg)
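To make the softmax and cross-entropy step concrete without requiring TensorFlow, the same computation can be sketched in plain Python for a single input row. Everything below is illustrative: the helper names, the 3-feature input, and the weight values are hypothetical stand-ins for the slide's 784-feature `X` and 784 x 10 `W`. Only the one-hot label with class 2 set to 1 comes from the slide.

```python
import math


def linear(x, W, b):
    """Compute x @ W + b for one input row x (length n), W (n x k), b (length k)."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (max subtracted for stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(actual, computed):
    """Cross entropy between a one-hot label and predicted probabilities."""
    return -sum(a * math.log(c) for a, c in zip(actual, computed) if a > 0)


# Hypothetical small problem: 3 input features instead of 784, 10 classes.
x = [1.0, 0.5, -1.0]
W = [[0.1 * (i + j) for j in range(10)] for i in range(3)]
b = [0.0] * 10

y = softmax(linear(x, W, b))             # plays the role of one row of Y
actual = [0, 0, 1, 0, 0, 0, 0, 0, 0, 0]  # one-hot label from the slide (class 2)
loss = cross_entropy(actual, y)
```

With a one-hot label, the loss reduces to the negative log of the probability assigned to the true class, so it shrinks toward 0 as `y[2]` approaches 1.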