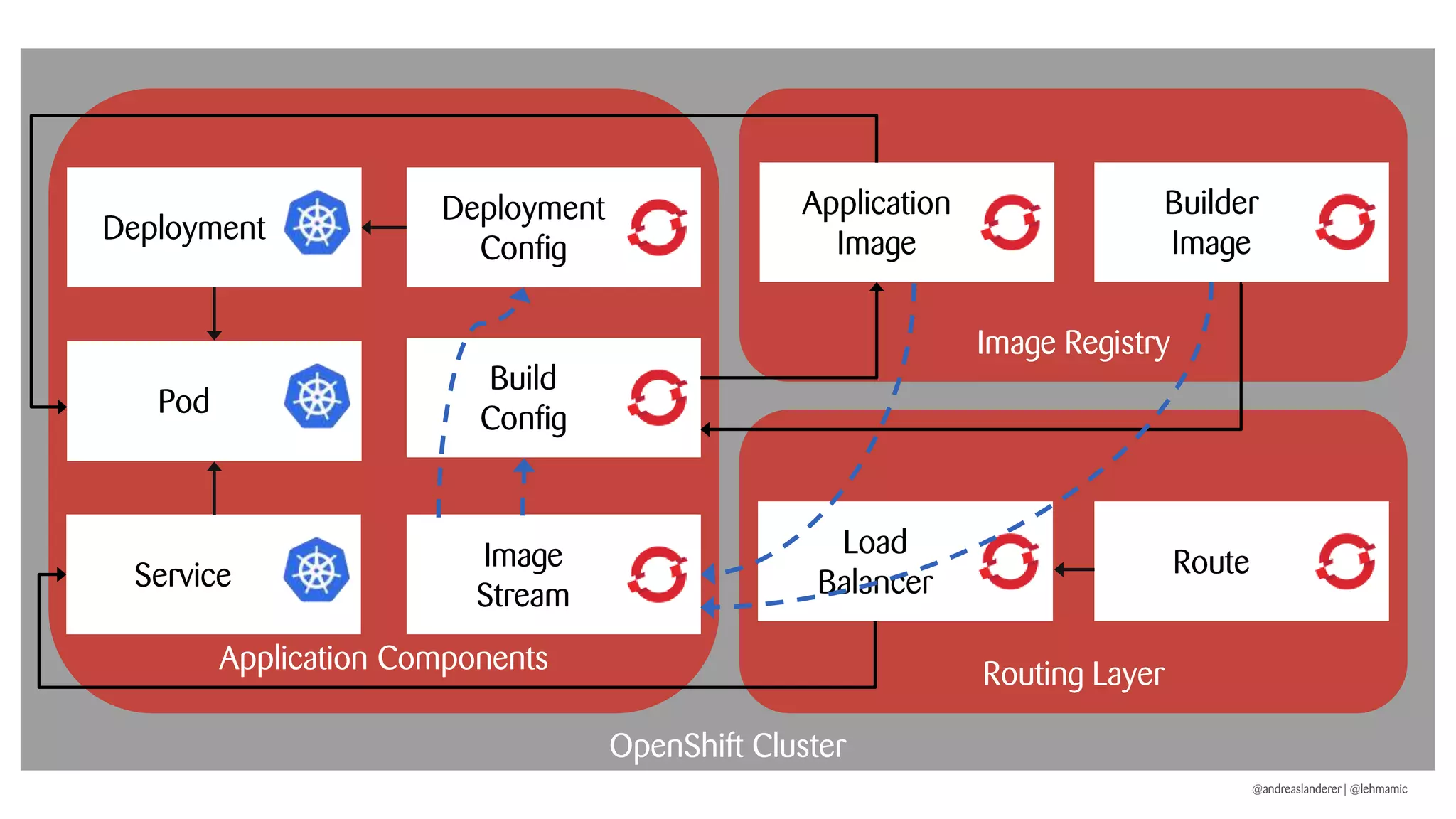

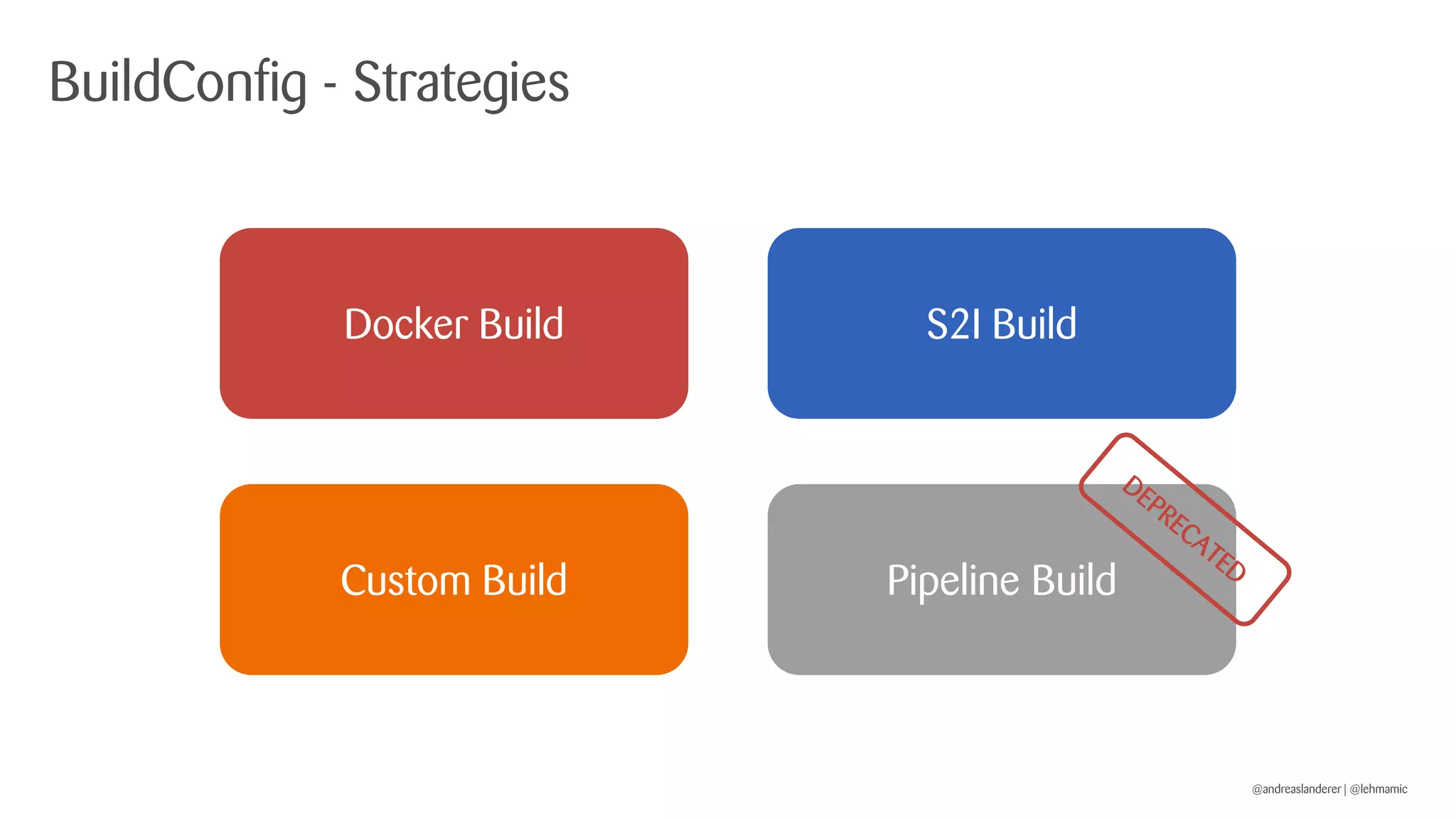

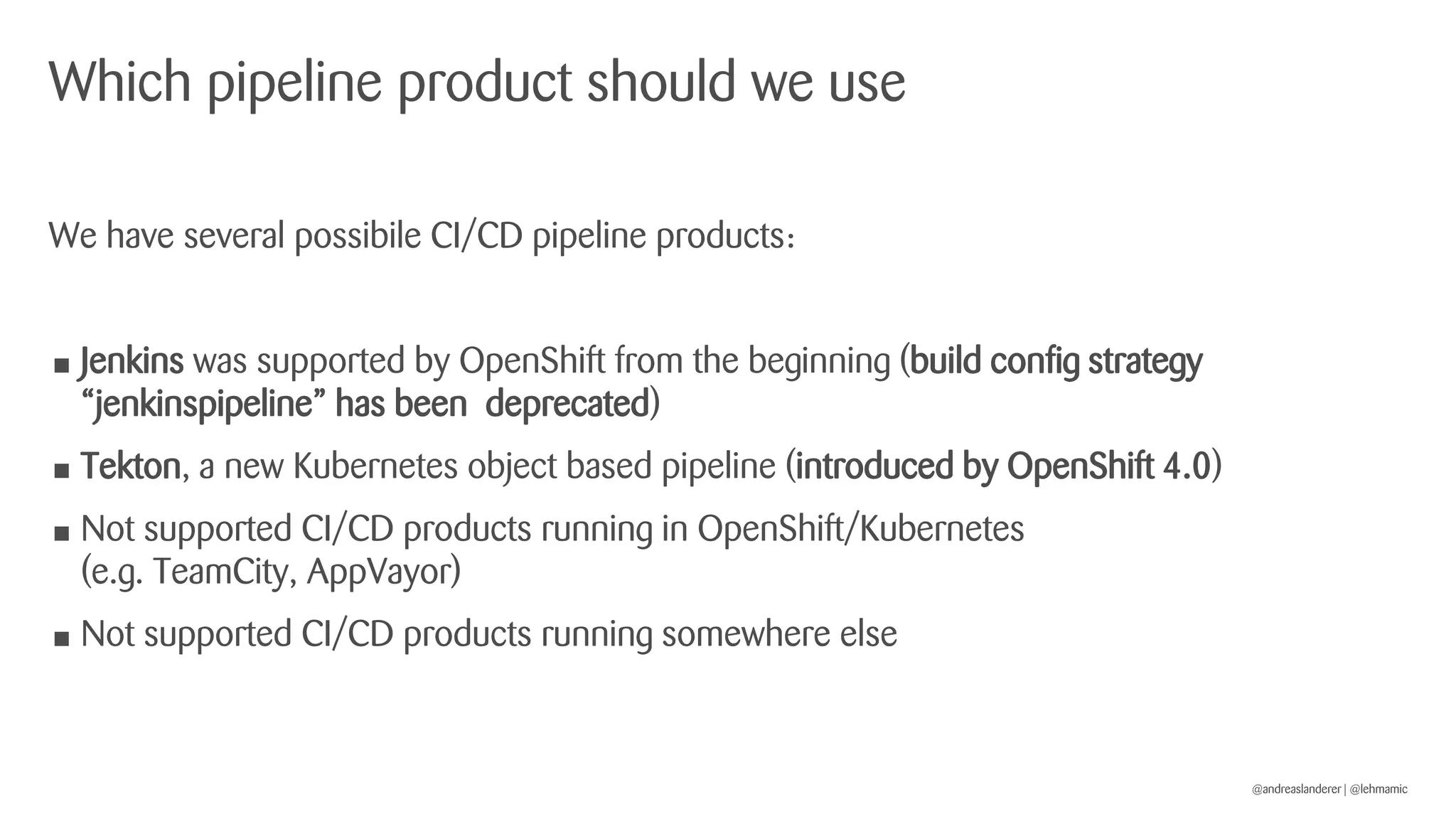

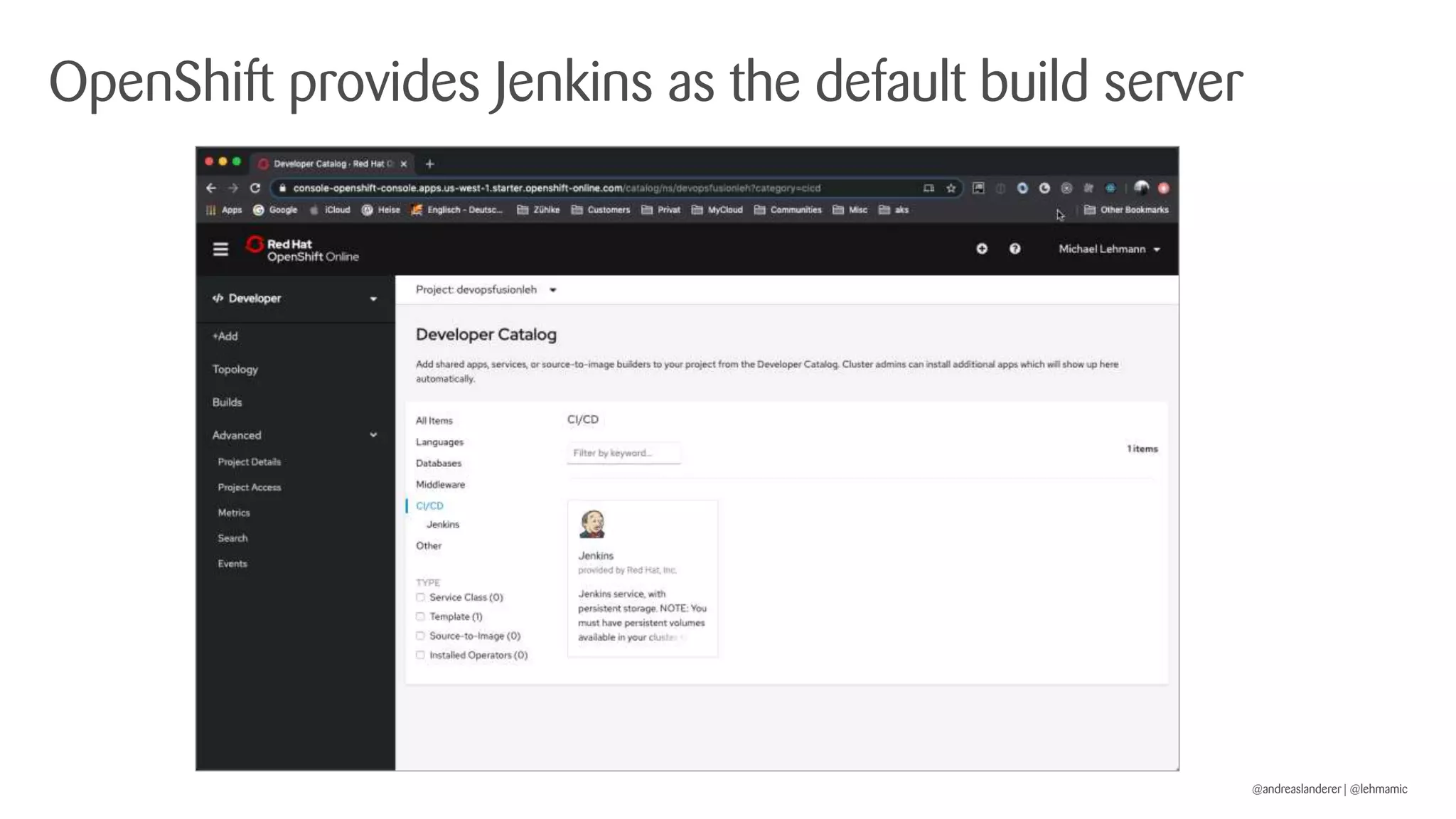

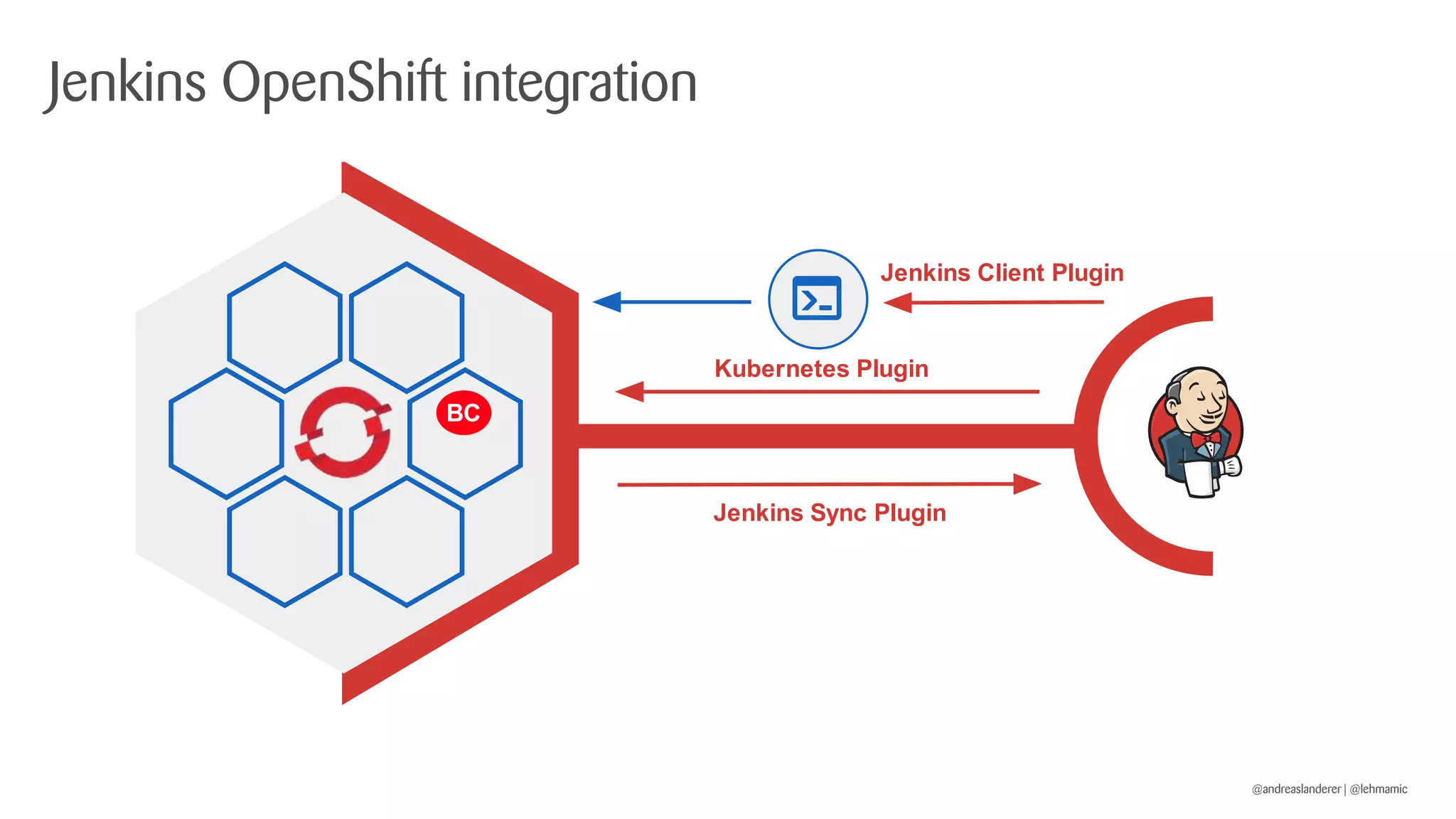

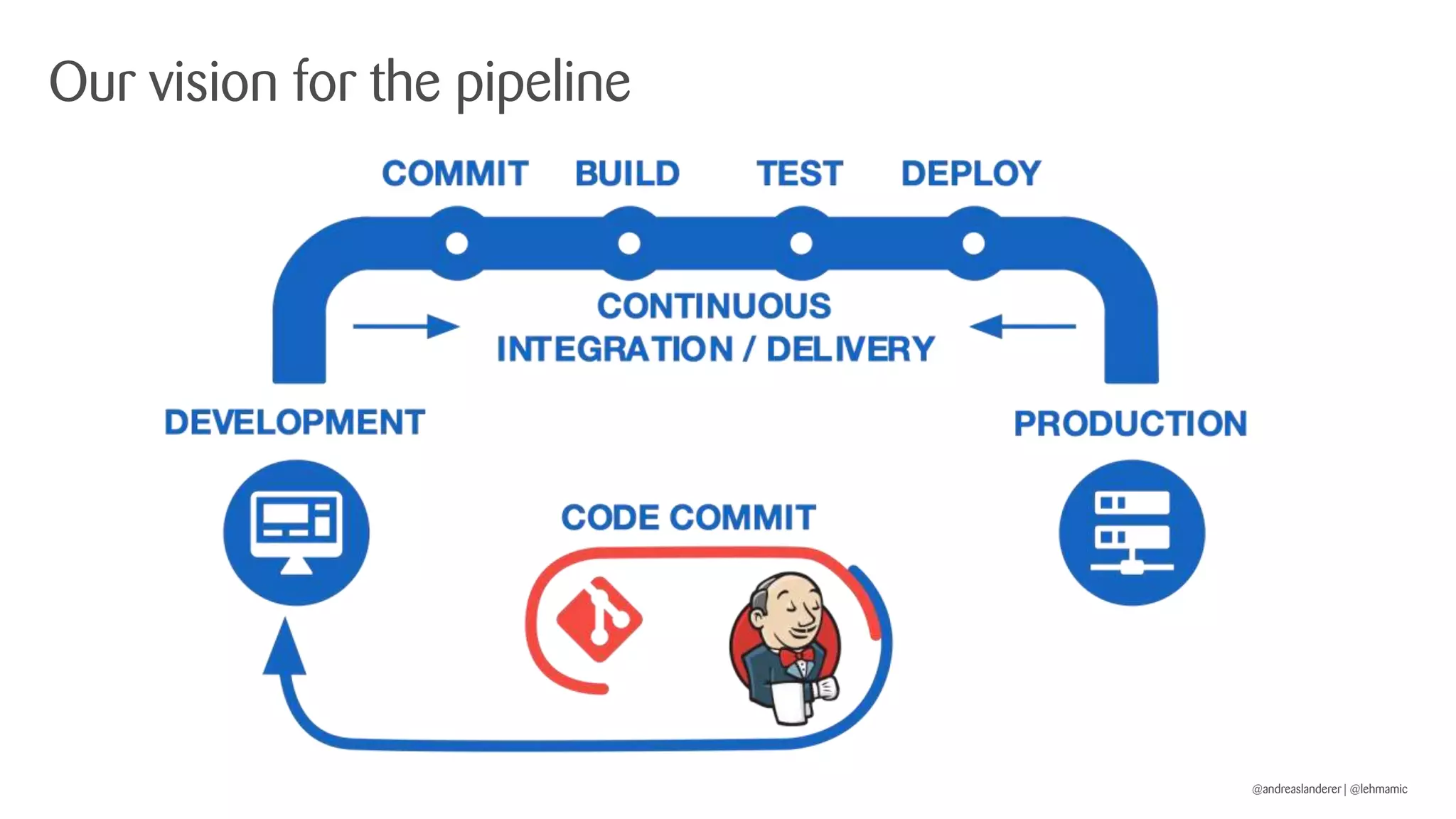

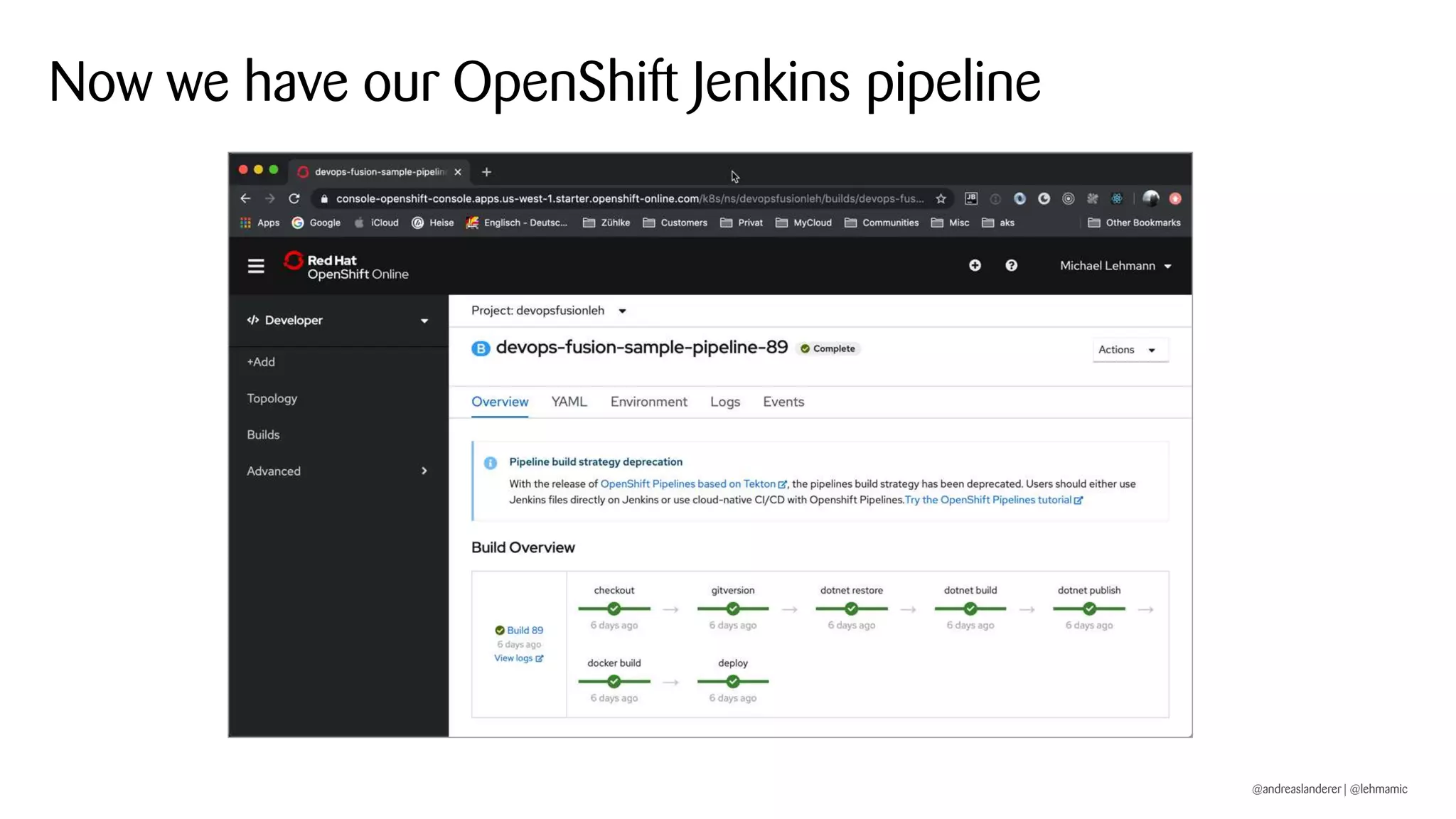

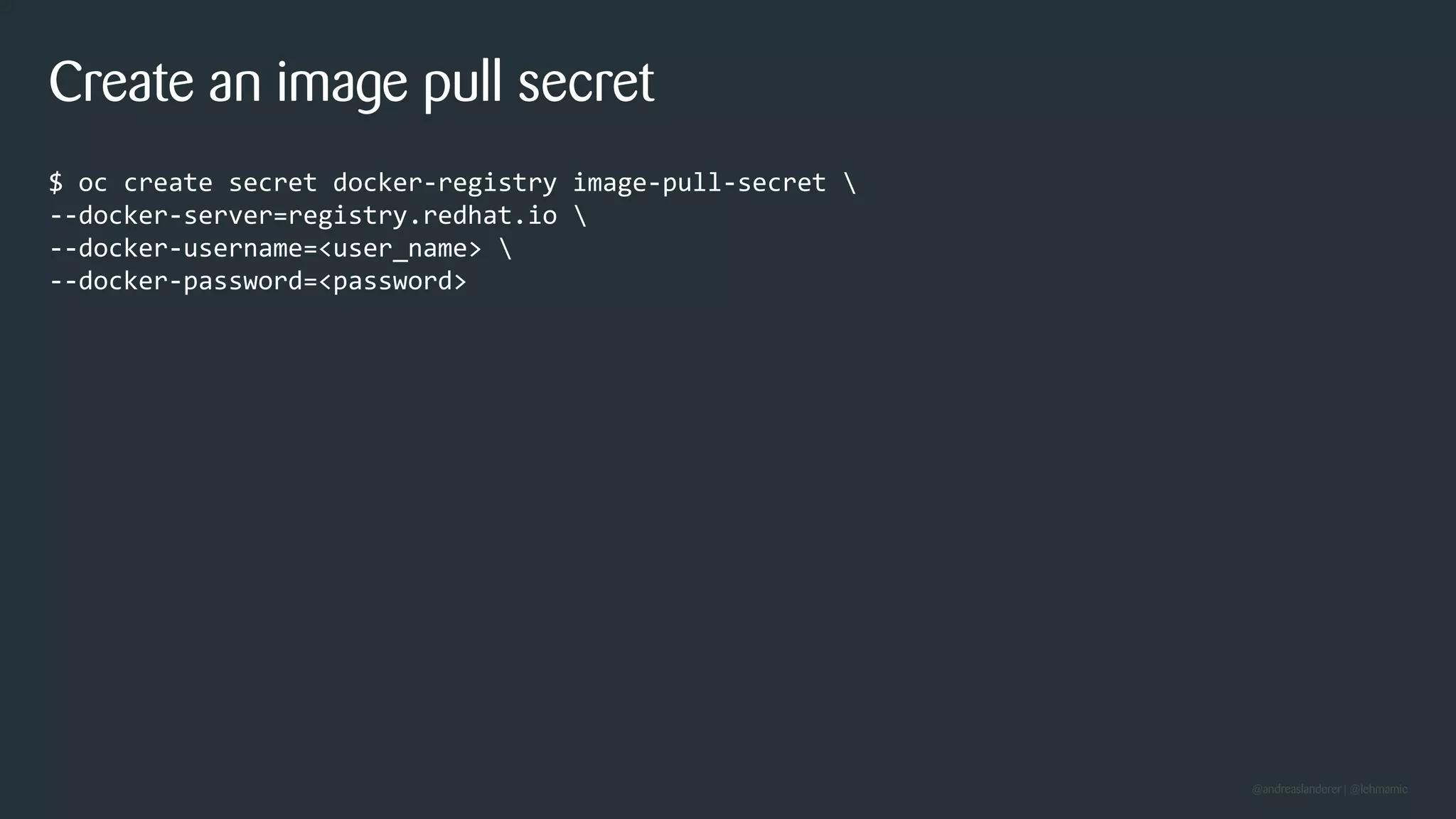

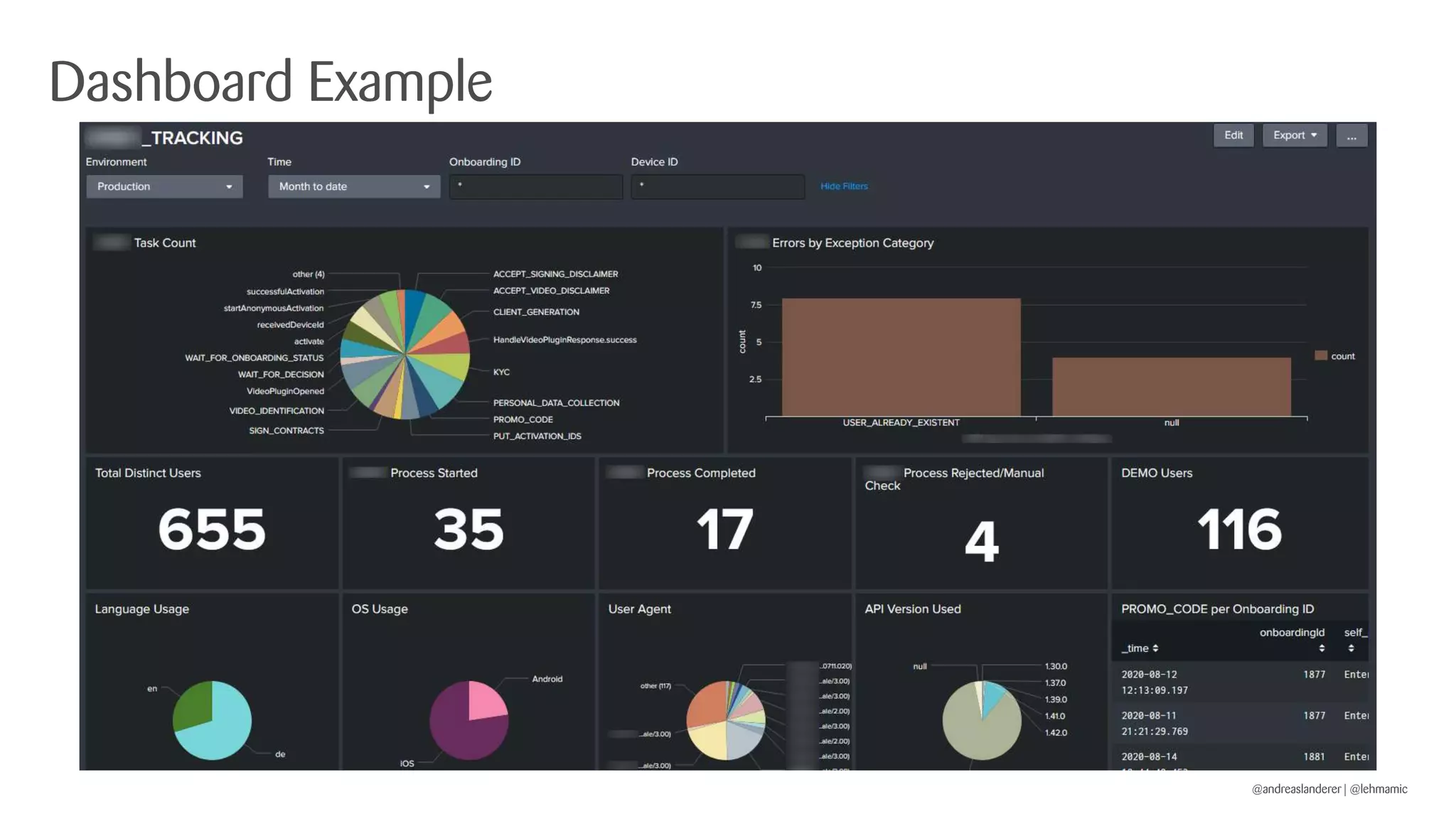

The document outlines best practices for implementing CI/CD pipelines on OpenShift, highlighting the use of Jenkins and Tekton for builds. It provides a step-by-step guide to setting up a Jenkins pipeline, building a sample application, and deploying it within OpenShift. It also emphasizes monitoring, logging, and customizing pod templates to suit specific needs.