The document discusses the dynamic programming approach to solving the matrix chain multiplication problem. It explains that dynamic programming breaks problems down into overlapping subproblems, solves each subproblem once, and stores the solutions in a table to avoid recomputing them. It then presents the algorithm MATRIX-CHAIN-ORDER that uses dynamic programming to solve the matrix chain multiplication problem in O(n^3) time, as opposed to a brute force approach that would take exponential time.

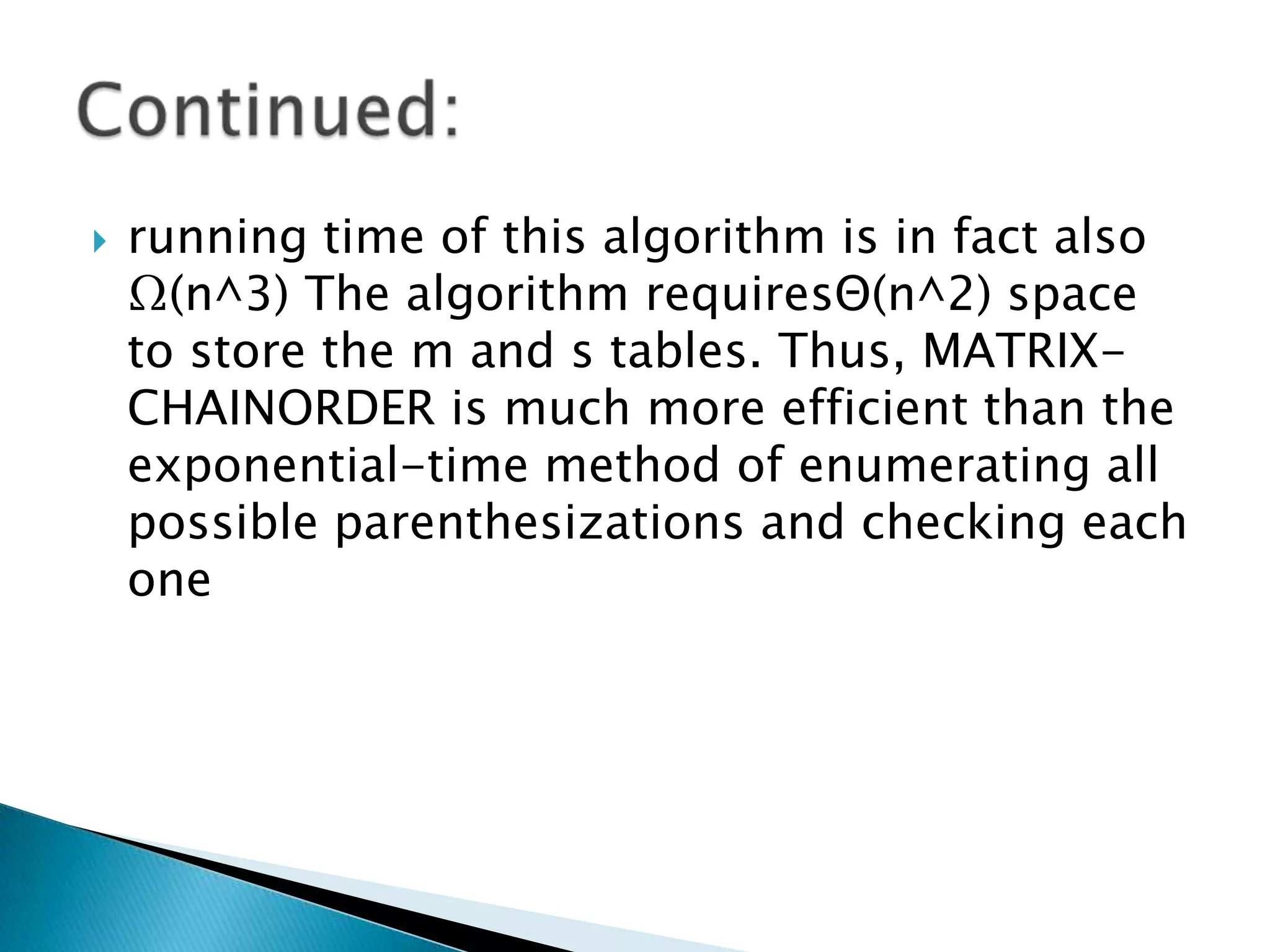

![MATRIX-MULTIPLY(A, B)

if A.columns ≠ B.rows
    error “incompatible dimensions”
else let C be a new A.rows × B.columns matrix
    for i = 1 to A.rows
        for j = 1 to B.columns
            c_ij = 0
            for k = 1 to A.columns
                c_ij = c_ij + a_ik · b_kj
    return C](https://image.slidesharecdn.com/dynamicprogramming1-140422091147-phpapp02/75/Dynamic-programming1-7-2048.jpg)
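As a rough illustration, the MATRIX-MULTIPLY pseudocode above can be sketched in Python. The list-of-rows representation and the extra `mults` counter are assumptions made for this example; the counter is there only to show that multiplying a p × q matrix by a q × r matrix takes p · q · r scalar multiplications.

```python
def matrix_multiply(a, b):
    """Multiply matrices stored as lists of rows.

    Returns the product and the number of scalar multiplications used
    (the counter is an illustrative addition, not part of the pseudocode).
    """
    a_rows, a_cols = len(a), len(a[0])
    b_rows, b_cols = len(b), len(b[0])
    if a_cols != b_rows:
        raise ValueError("incompatible dimensions")
    c = [[0] * b_cols for _ in range(a_rows)]
    mults = 0
    for i in range(a_rows):
        for j in range(b_cols):
            for k in range(a_cols):
                c[i][j] += a[i][k] * b[k][j]
                mults += 1
    return c, mults


product, mults = matrix_multiply([[1, 2, 3], [4, 5, 6]],
                                 [[7, 8], [9, 10], [11, 12]])
print(product)  # [[58, 64], [139, 154]]
print(mults)    # 12, i.e. 2 * 3 * 2
```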

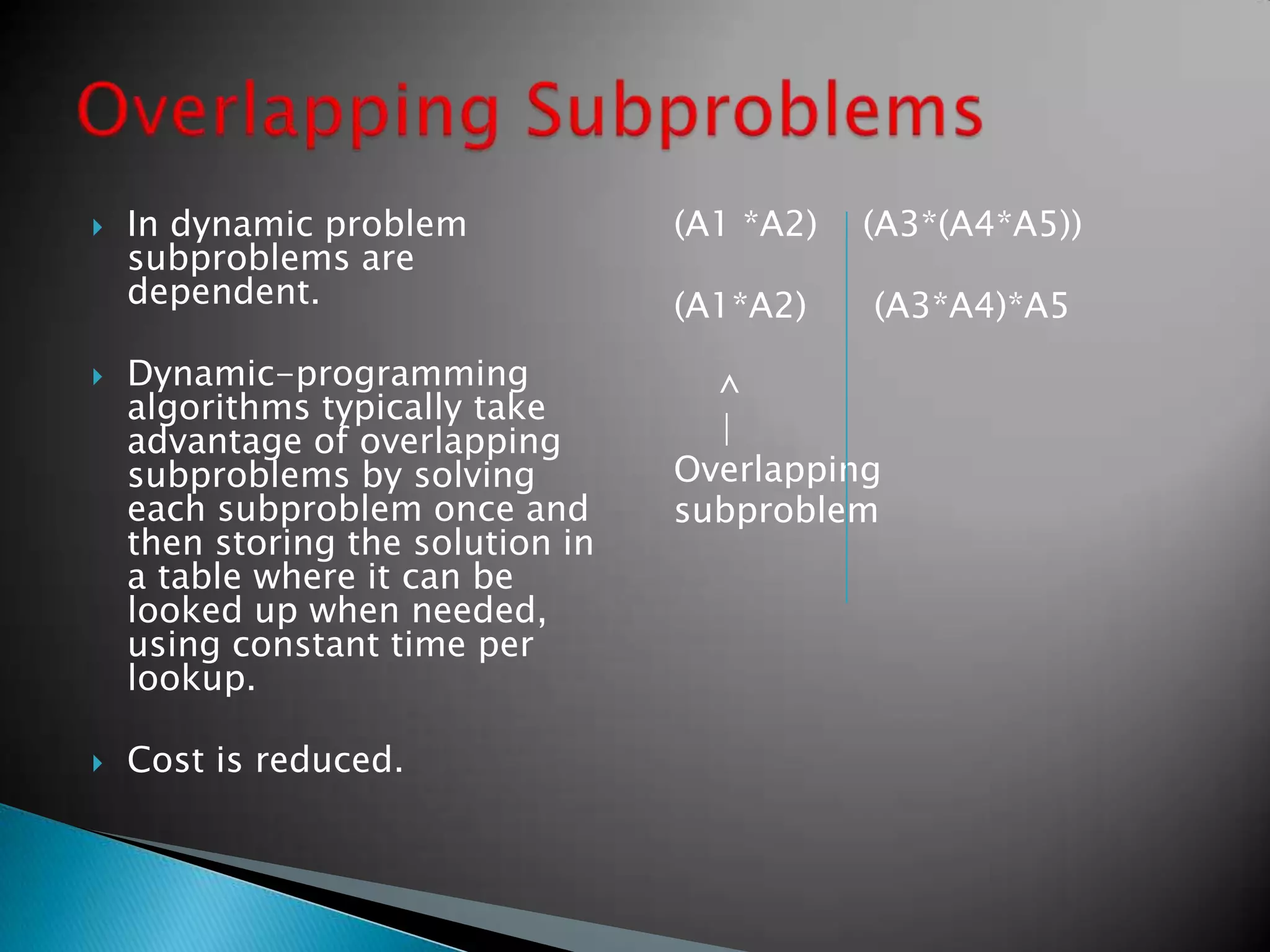

![At this point, we could easily write a recursive algorithm based on the recurrence to compute the minimum cost m[1, n] for multiplying A_1, A_2, …, A_n. As we saw for the rod-cutting problem, this recursive algorithm takes exponential time, which is no better than the brute-force method of checking each way of parenthesizing the product.

Observe that we have relatively few distinct subproblems: one subproblem for each choice of i and j satisfying 1 ≤ i ≤ j ≤ n, or C(n, 2) + n = Θ(n^2) in all. A recursive algorithm may encounter each subproblem many times in different branches of its recursion tree. This property of overlapping subproblems is the second hallmark of when dynamic programming applies.](https://image.slidesharecdn.com/dynamicprogramming1-140422091147-phpapp02/75/Dynamic-programming1-14-2048.jpg)
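To make the overlap concrete, here is a minimal sketch of the plain recursive algorithm with a call counter attached. The function name, the `calls` dictionary, and the six-matrix dimension sequence are assumptions chosen for illustration; the recurrence is the one used in MATRIX-CHAIN-ORDER, m[i, j] = min over k of m[i, k] + m[k+1, j] + p_(i-1) · p_k · p_j.

```python
import math


def recursive_matrix_chain(p, i, j, calls):
    """Minimum scalar multiplications for A_i..A_j, by plain recursion.

    `calls` maps each subproblem (i, j) to how often it was requested.
    """
    calls[(i, j)] = calls.get((i, j), 0) + 1
    if i == j:
        return 0
    best = math.inf
    for k in range(i, j):
        cost = (recursive_matrix_chain(p, i, k, calls)
                + recursive_matrix_chain(p, k + 1, j, calls)
                + p[i - 1] * p[k] * p[j])
        best = min(best, cost)
    return best


p = [30, 35, 15, 5, 10, 20, 25]  # six matrices: 30x35, 35x15, ..., 20x25
n = len(p) - 1
calls = {}
print(recursive_matrix_chain(p, 1, n, calls))  # 15125
print(len(calls))                              # 21 distinct subproblems
print(sum(calls.values()))                     # 243 recursive calls
```

For n = 6 there are only 6 · 7 / 2 = 21 distinct subproblems, yet the recursion makes 243 calls, so most of the work is repeated.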

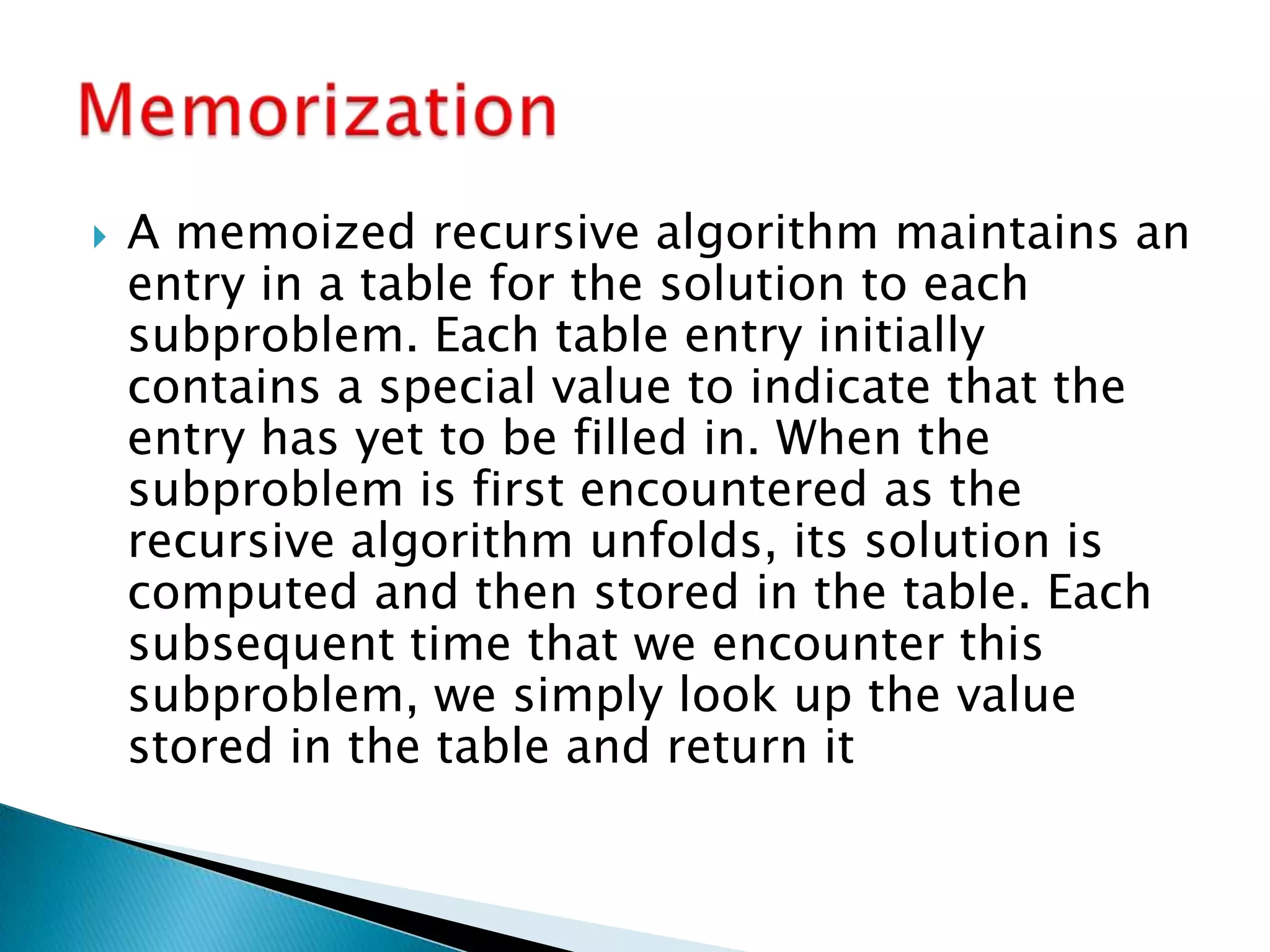

![Instead of computing the solution to the recurrence recursively, we compute the optimal cost by using a tabular, bottom-up approach.

We shall implement the tabular, bottom-up method in the procedure MATRIX-CHAIN-ORDER, which appears below. This procedure assumes that matrix A_i has dimensions p_(i-1) × p_i for i = 1, 2, …, n. Its input is a sequence p = ⟨p_0, p_1, …, p_n⟩, where p.length = n + 1. The procedure uses an auxiliary table m[1..n, 1..n] for storing the m[i, j] costs and another auxiliary table s[1..n-1, 2..n] that records which index k achieved the optimal cost in computing m[i, j]. We shall use the table s to construct an optimal solution.](https://image.slidesharecdn.com/dynamicprogramming1-140422091147-phpapp02/75/Dynamic-programming1-15-2048.jpg)
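The input convention can be checked with a short sketch. The helper name `chain_dimensions` and the sample sequence are hypothetical, introduced only to show how a sequence p of length n + 1 encodes the dimensions of n matrices.

```python
def chain_dimensions(p):
    """Return the (rows, columns) pair of each matrix A_1..A_n encoded by p."""
    return [(p[i - 1], p[i]) for i in range(1, len(p))]


p = [30, 35, 15, 5, 10, 20, 25]
print(len(p) - 1)           # 6 matrices
print(chain_dimensions(p))
# [(30, 35), (35, 15), (15, 5), (5, 10), (10, 20), (20, 25)]
```

Adjacent matrices share a dimension by construction, so every product A_i · A_(i+1) in the chain is compatible.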

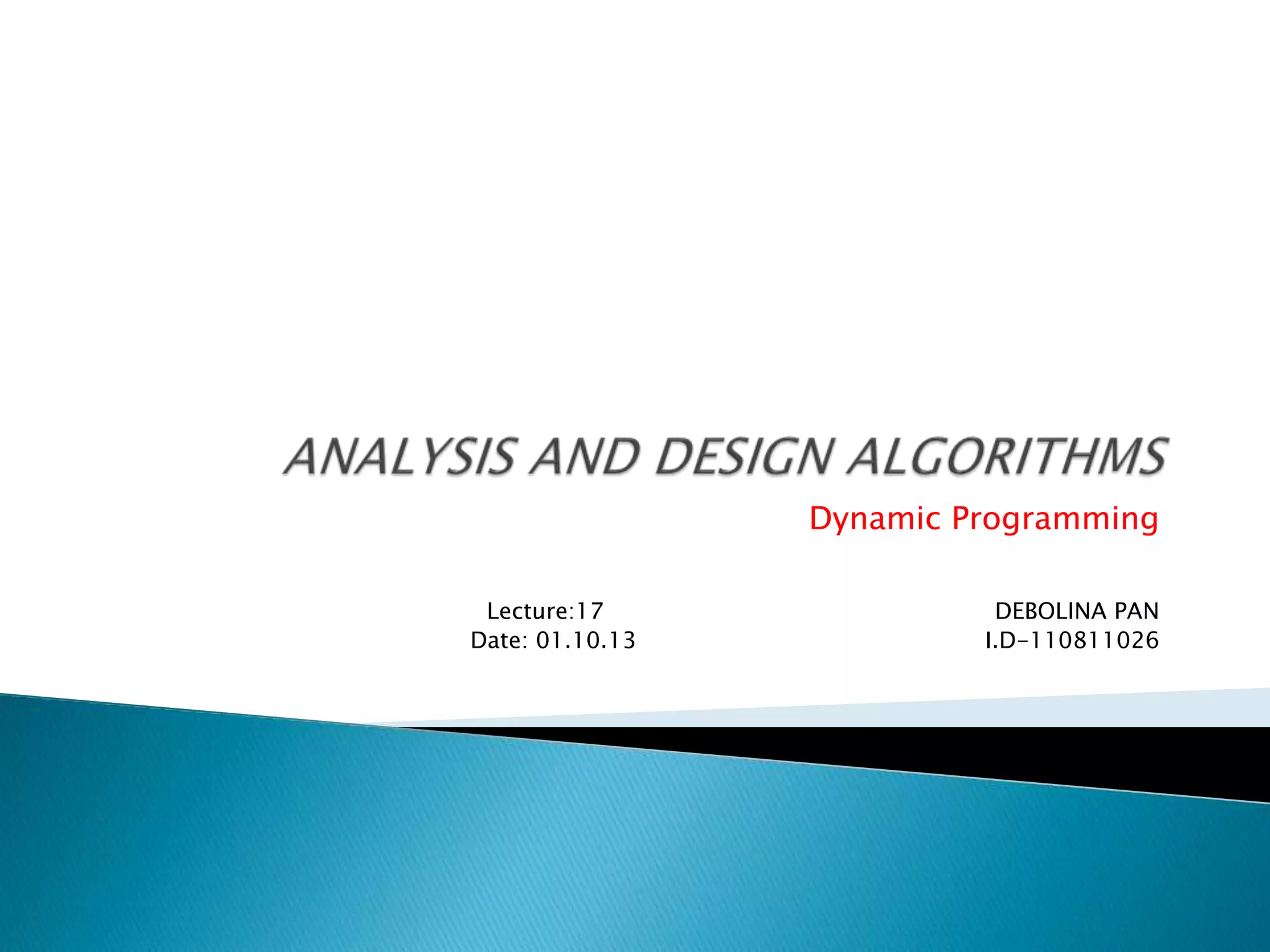

![In order to implement the bottom-up approach, we must determine which entries of the table we refer to when computing m[i, j]. The cost m[i, j] of computing a matrix-chain product of j - i + 1 matrices depends only on the costs of computing matrix-chain products of fewer than j - i + 1 matrices. That is, for k = i, i + 1, …, j - 1, the matrix A_(i..k) is a product of k - i + 1 < j - i + 1 matrices and the matrix A_(k+1..j) is a product of j - k < j - i + 1 matrices. Thus, the algorithm should fill in the table m in a manner that corresponds to solving the parenthesization problem on matrix chains of increasing length. For the subproblem of optimally parenthesizing the chain A_i, A_(i+1), …, A_j, we consider the subproblem size to be the length j - i + 1 of the chain.](https://image.slidesharecdn.com/dynamicprogramming1-140422091147-phpapp02/75/Dynamic-programming1-16-2048.jpg)
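The fill order described above can be sketched as a generator that visits the table entries by increasing chain length. The name `fill_order` is an assumption for this example. The loop at the end checks that, when an entry (i, j) is reached, both entries it depends on for every split point k have already been visited.

```python
def fill_order(n):
    """Yield table indices (i, j) in order of increasing chain length."""
    for length in range(1, n + 1):
        for i in range(1, n - length + 2):
            yield i, i + length - 1


order = list(fill_order(4))
print(order)
# [(1, 1), (2, 2), (3, 3), (4, 4), (1, 2), (2, 3), (3, 4), (1, 3), (2, 4), (1, 4)]

# Every dependency (i, k) and (k + 1, j) is filled before (i, j) is computed.
seen = set()
for i, j in order:
    for k in range(i, j):
        assert (i, k) in seen and (k + 1, j) in seen
    seen.add((i, j))
```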

![MATRIX-CHAIN-ORDER(p)

n = p.length - 1
let m[1..n, 1..n] and s[1..n-1, 2..n] be new tables
for i = 1 to n
    m[i, i] = 0
for l = 2 to n    // l is the chain length
    for i = 1 to n - l + 1
        j = i + l - 1
        m[i, j] = ∞
        for k = i to j - 1
            q = m[i, k] + m[k+1, j] + p_(i-1) · p_k · p_j
            if q < m[i, j]
                m[i, j] = q
                s[i, j] = k
return m and s](https://image.slidesharecdn.com/dynamicprogramming1-140422091147-phpapp02/75/Dynamic-programming1-17-2048.jpg)
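A minimal Python sketch of MATRIX-CHAIN-ORDER follows, assuming the tables are stored as (n + 1) × (n + 1) lists with row and column 0 left unused so that the indices match the 1-based pseudocode. The helper `optimal_parens` is not part of the procedure above; it is a hypothetical addition showing one way the table s can be used to construct an optimal solution.

```python
import math


def matrix_chain_order(p):
    """Bottom-up matrix-chain ordering; returns the tables m and s."""
    n = len(p) - 1
    # Row and column 0 are unused padding; m[i][i] starts at 0.
    m = [[0] * (n + 1) for _ in range(n + 1)]
    s = [[0] * (n + 1) for _ in range(n + 1)]
    for length in range(2, n + 1):          # chain length
        for i in range(1, n - length + 2):
            j = i + length - 1
            m[i][j] = math.inf
            for k in range(i, j):
                q = m[i][k] + m[k + 1][j] + p[i - 1] * p[k] * p[j]
                if q < m[i][j]:
                    m[i][j] = q
                    s[i][j] = k             # best split point so far
    return m, s


def optimal_parens(s, i, j):
    """Rebuild an optimal parenthesization of A_i..A_j from the table s."""
    if i == j:
        return f"A{i}"
    k = s[i][j]
    return f"({optimal_parens(s, i, k)}{optimal_parens(s, k + 1, j)})"


p = [30, 35, 15, 5, 10, 20, 25]
m, s = matrix_chain_order(p)
print(m[1][6])                  # 15125
print(optimal_parens(s, 1, 6))  # ((A1(A2A3))((A4A5)A6))
```

The three nested loops each run at most n times, which gives the O(n^3) running time, and the two tables take Θ(n^2) space.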