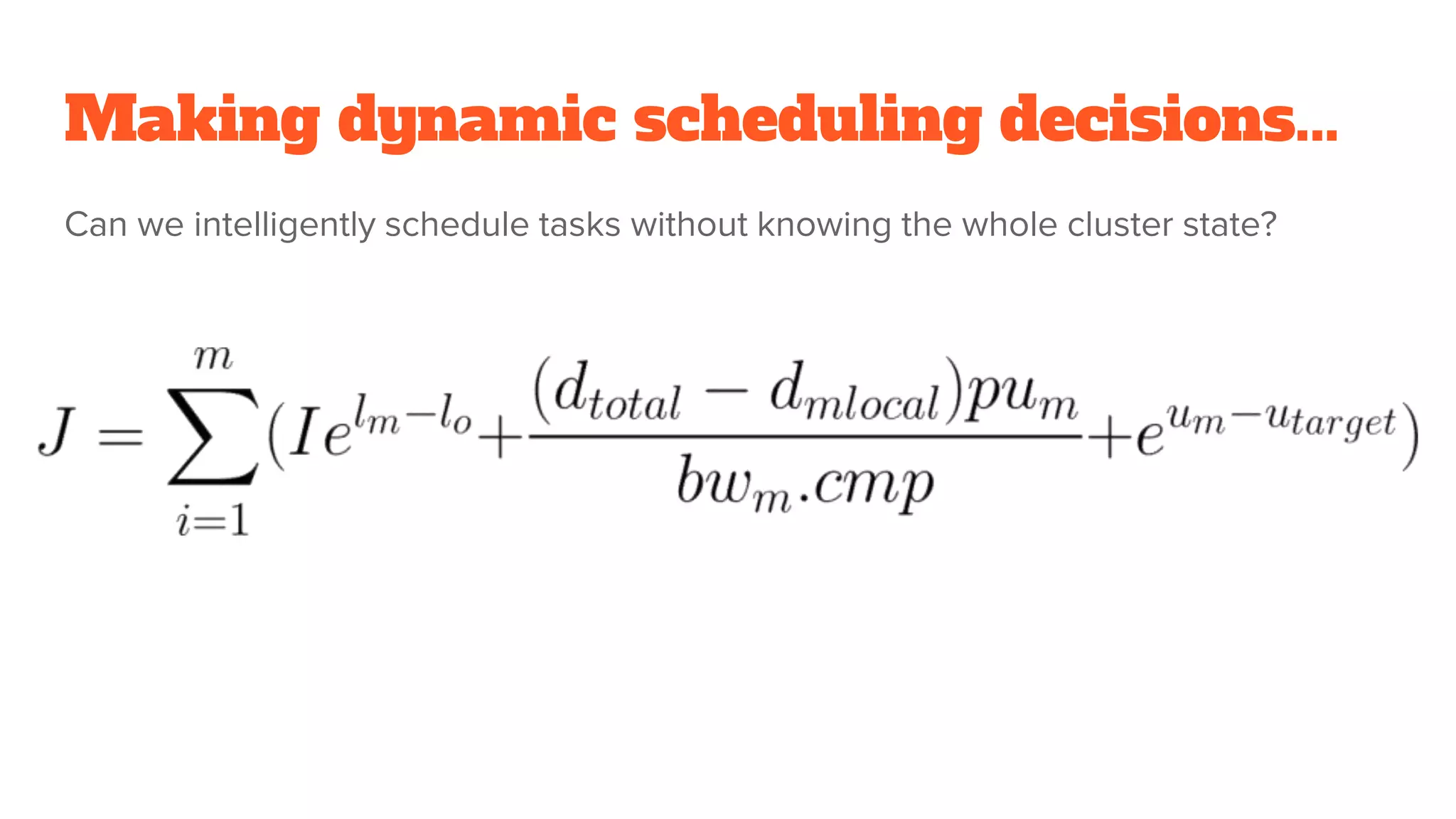

Aaron Carey's document discusses the need for federated clusters in Mesos so that rendering resources can be shared across sites in different time zones, with a focus on cloud burst rendering. It outlines a proposed design built on a master and a gossip protocol, emphasizing a simple framework that minimizes the complexity of master interactions. The document also touches on scheduling strategies and on penalties related to latency and data, and it invites collaboration on further proposals.