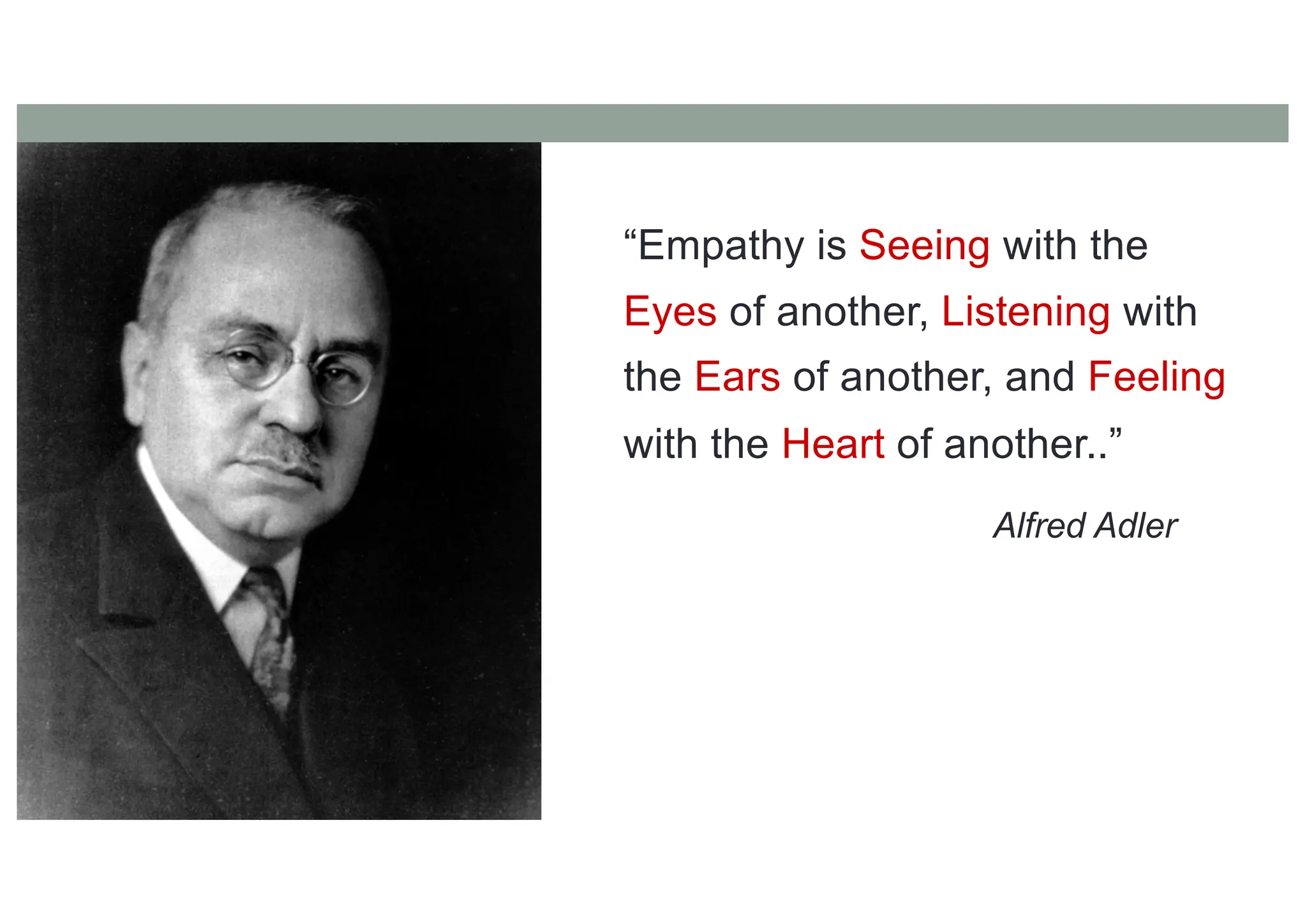

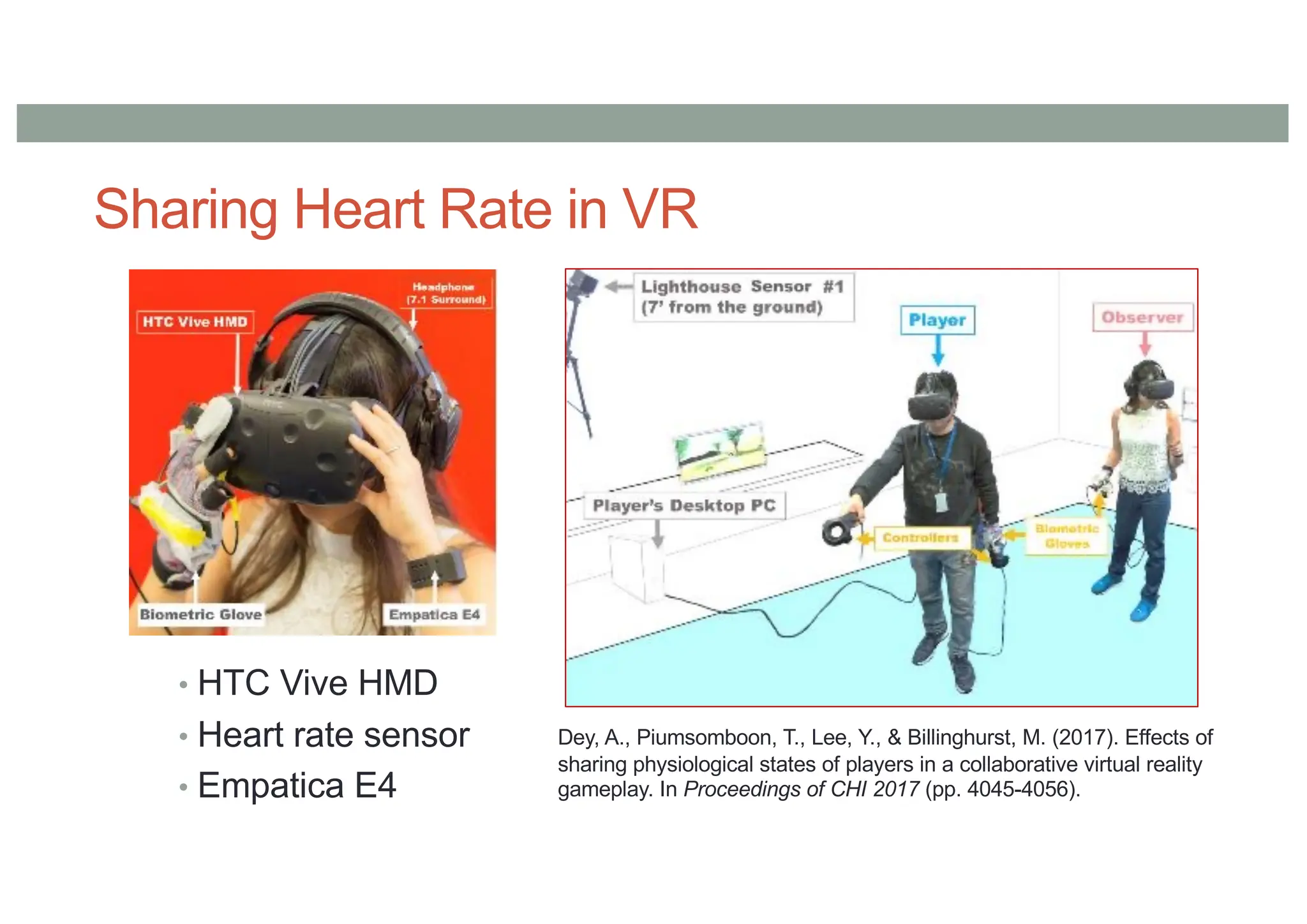

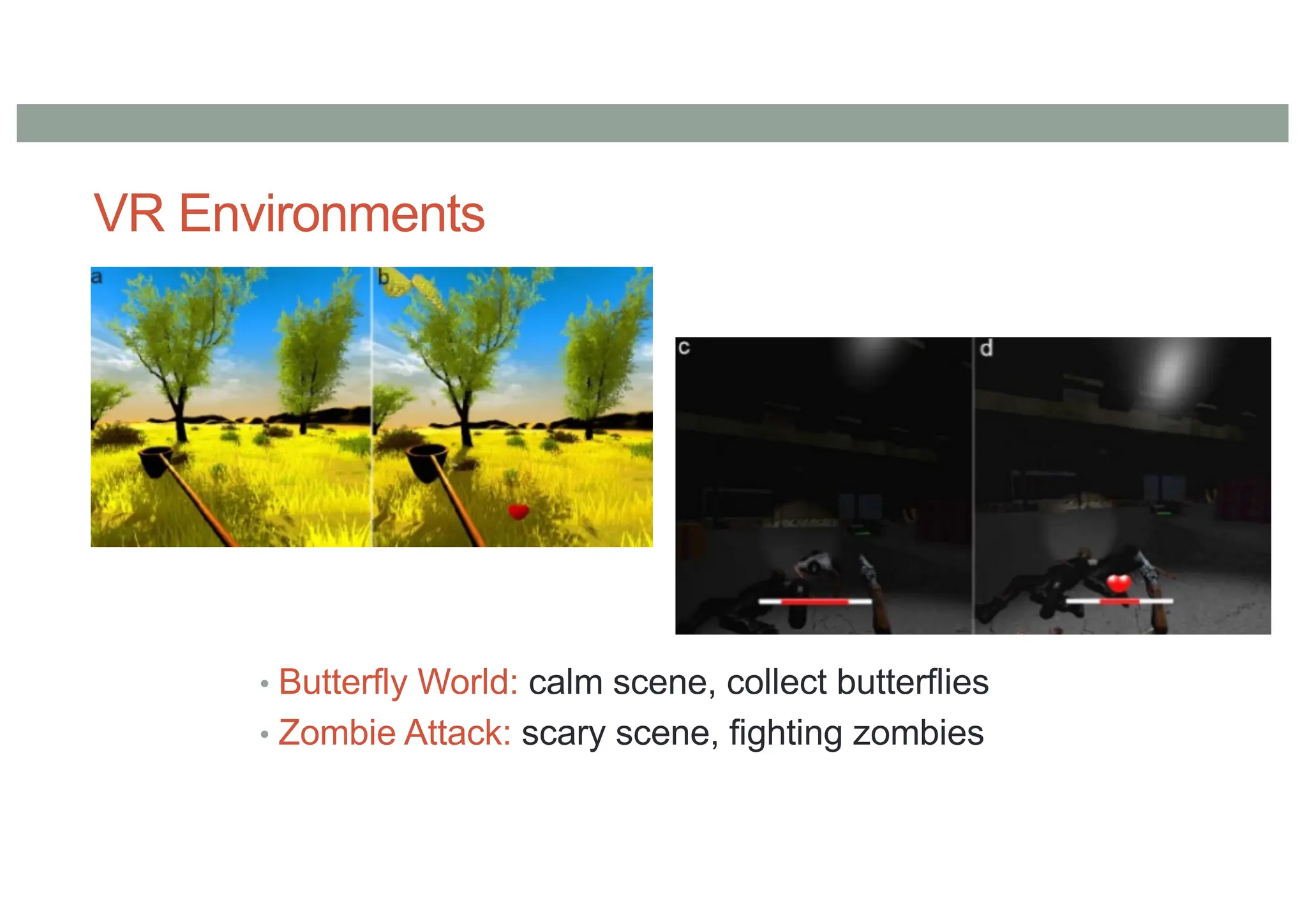

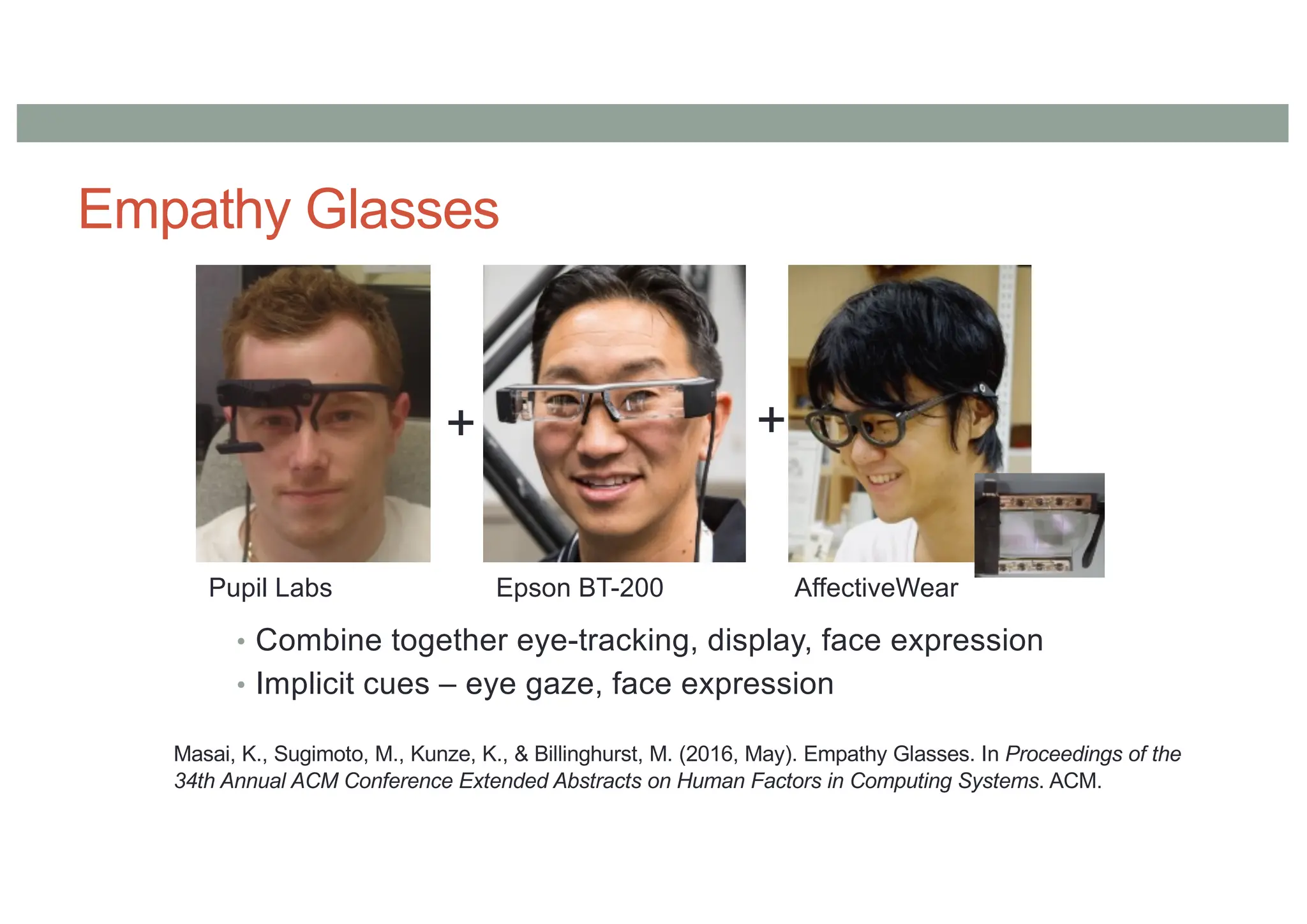

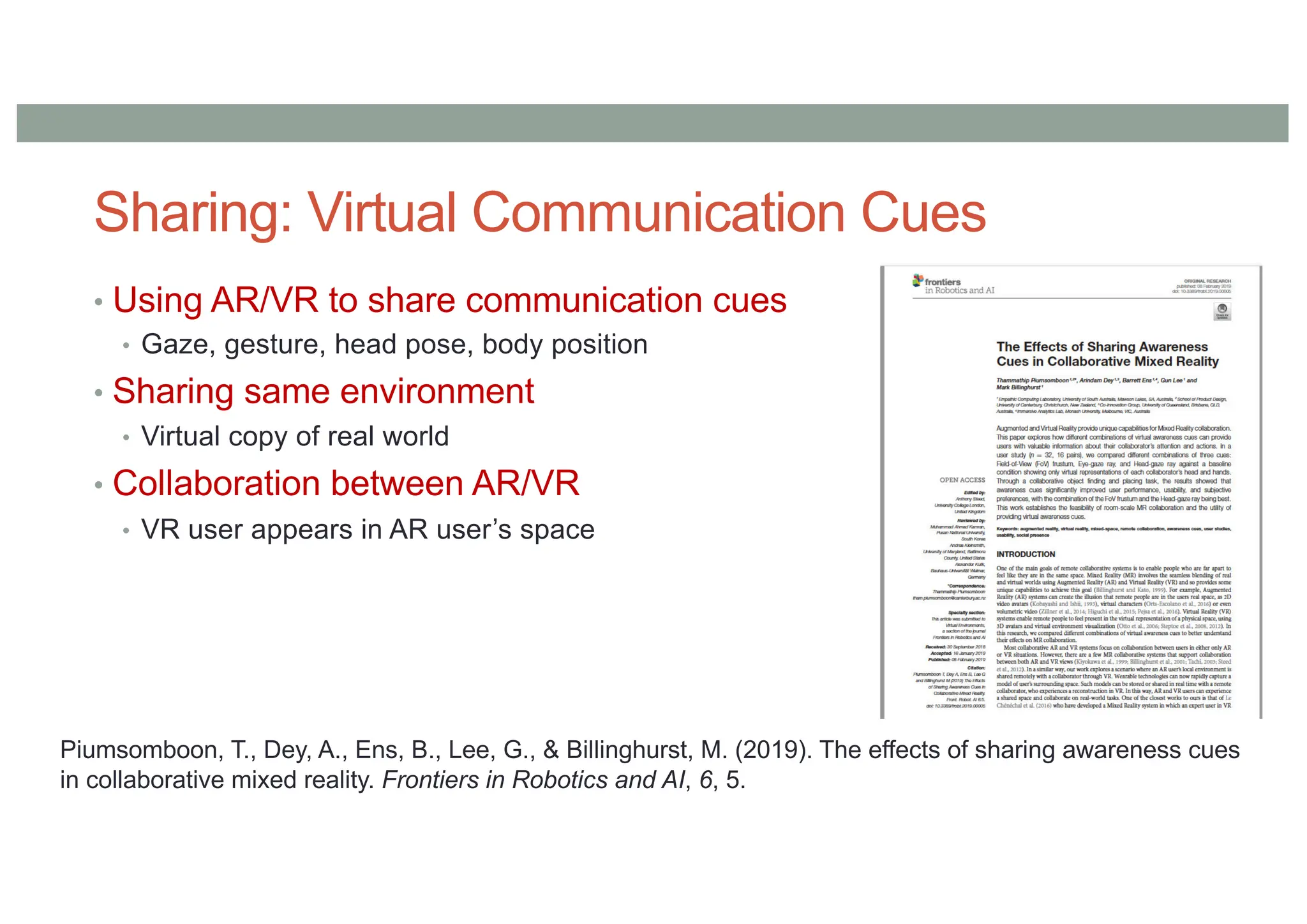

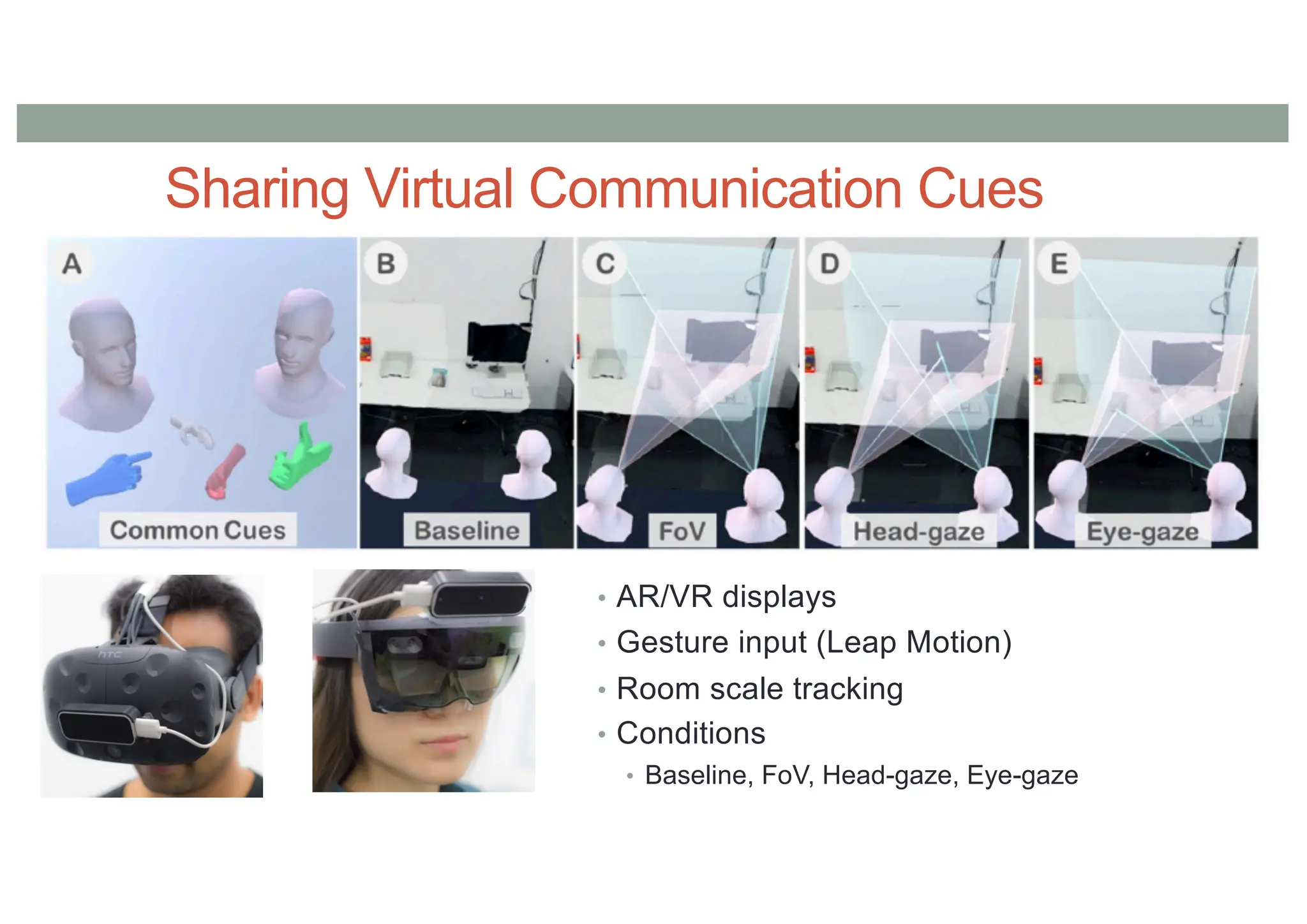

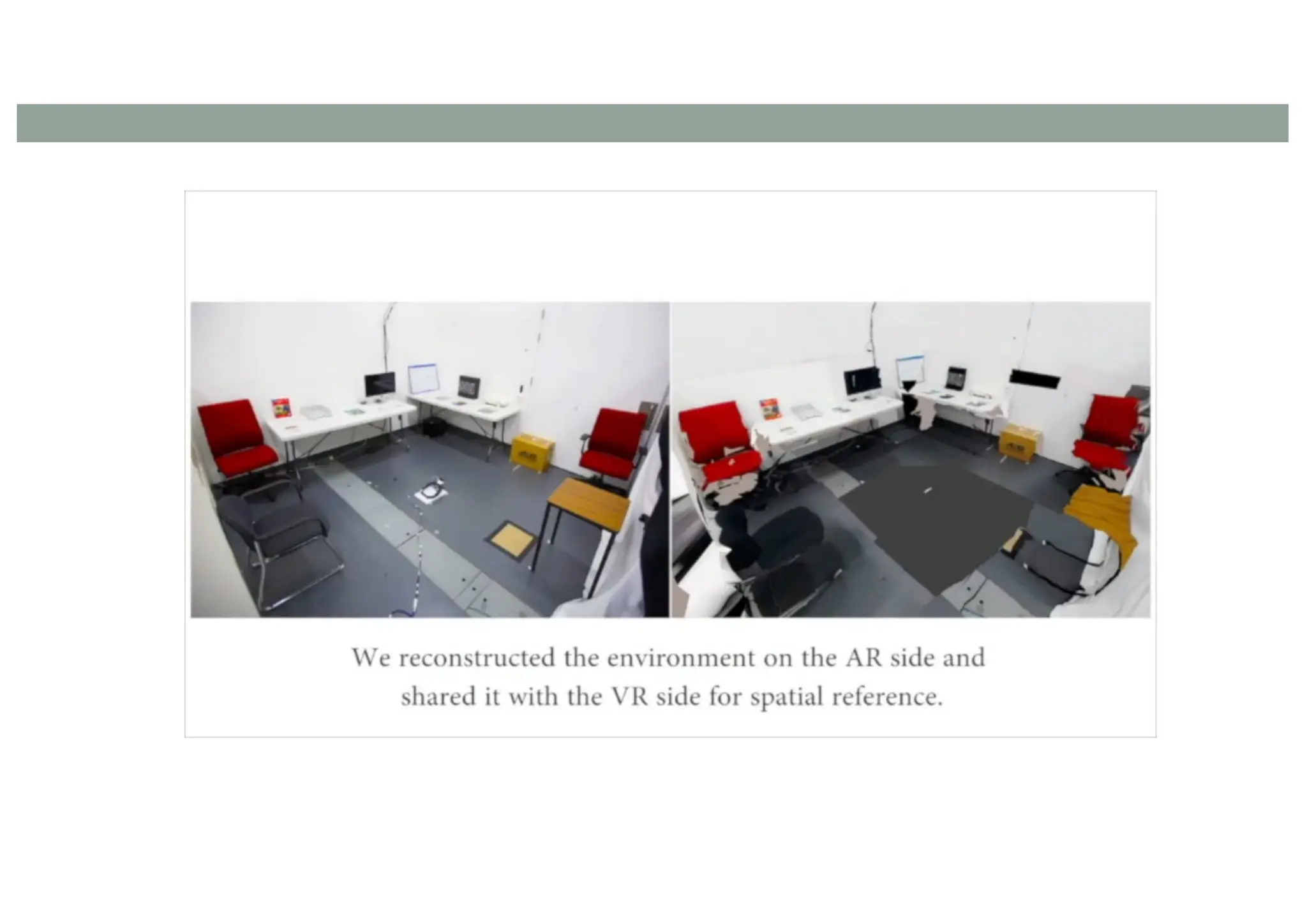

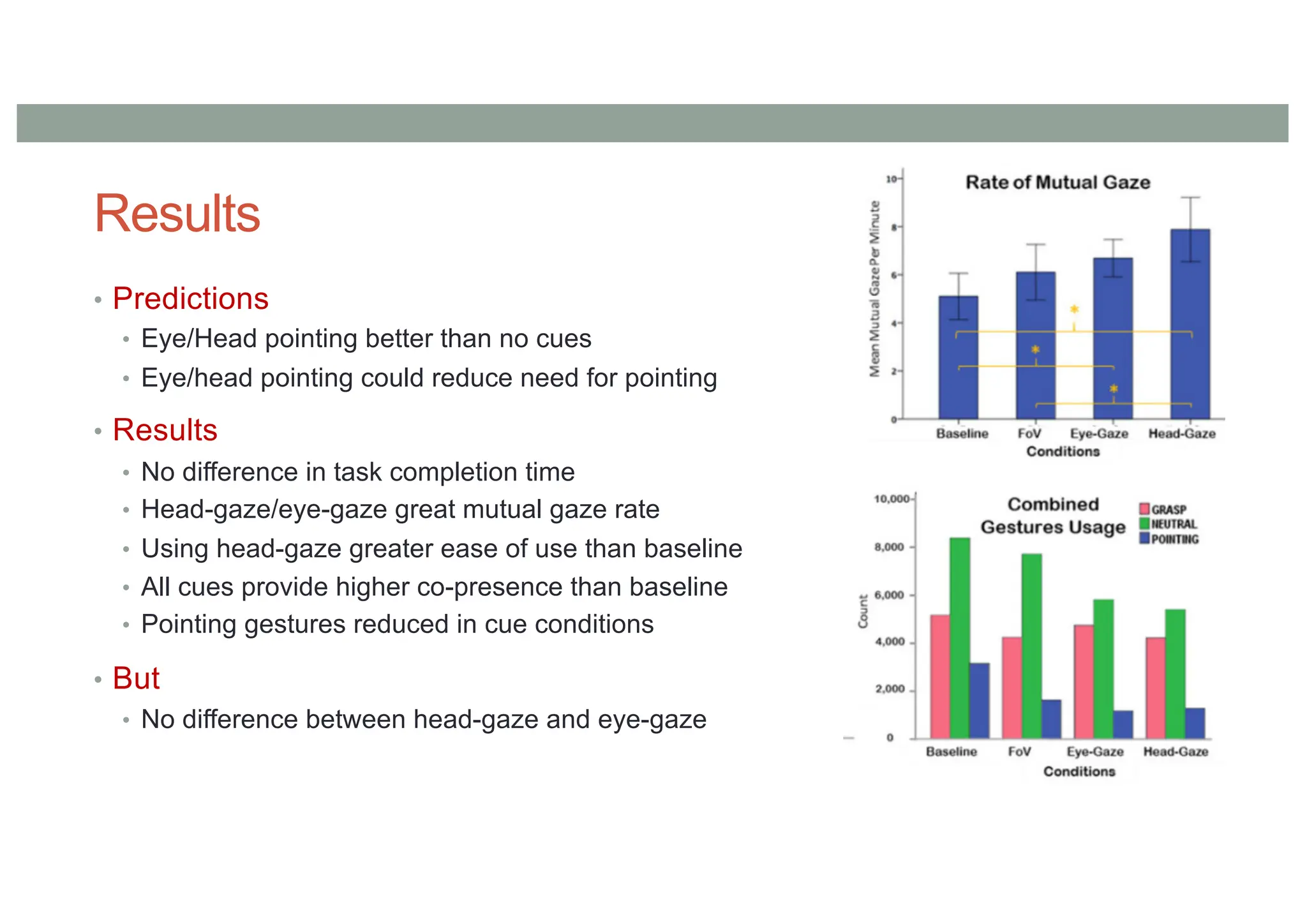

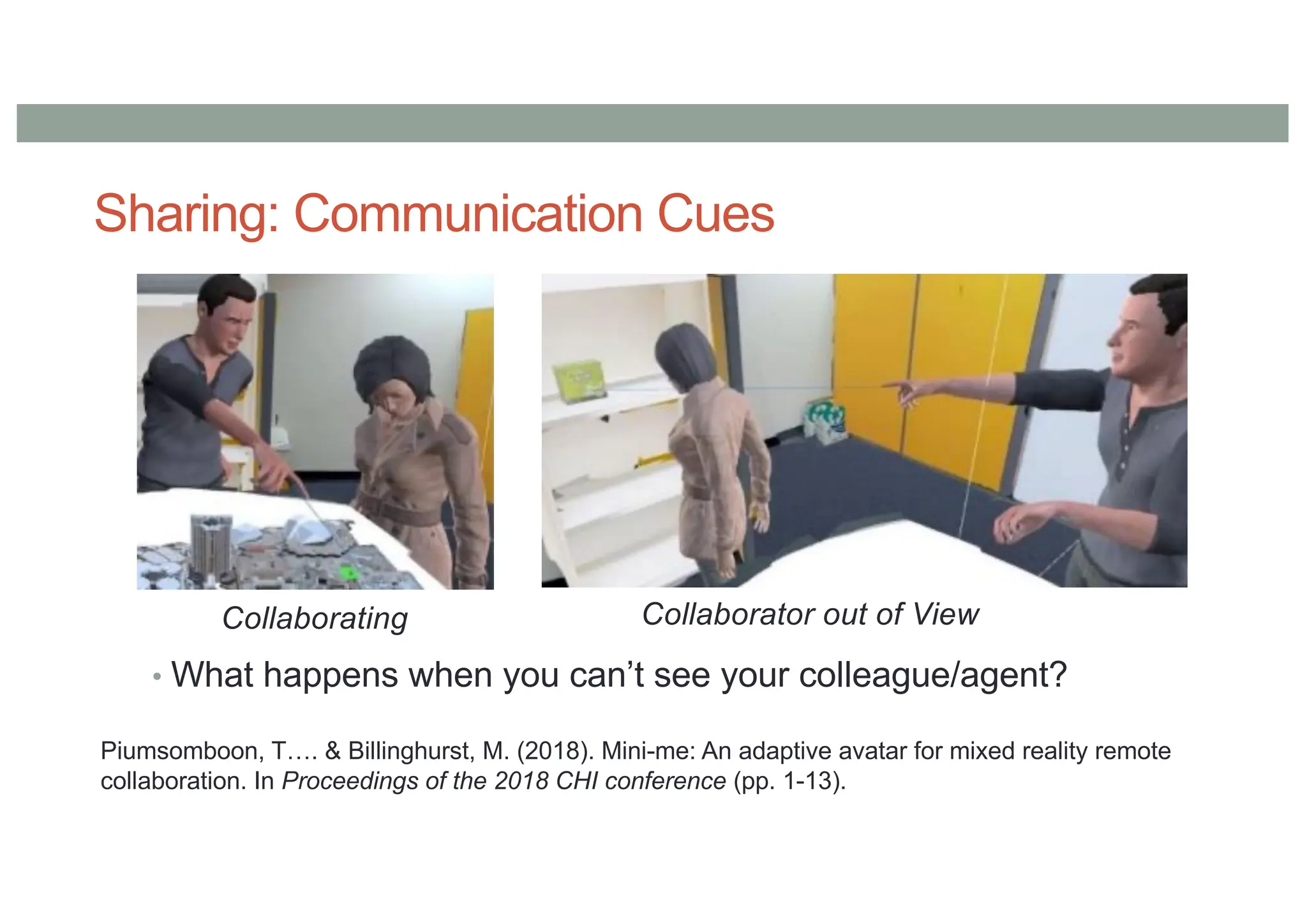

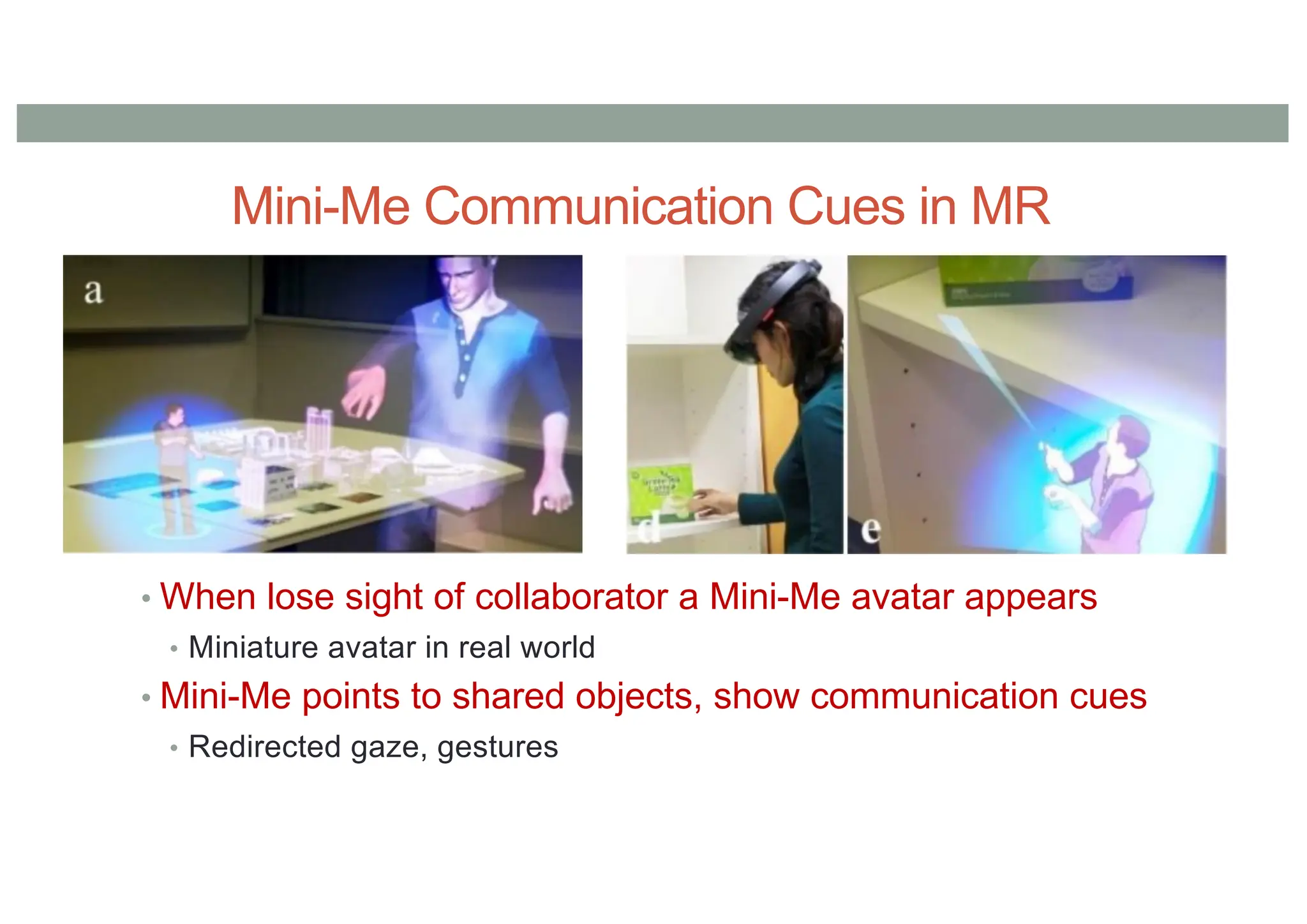

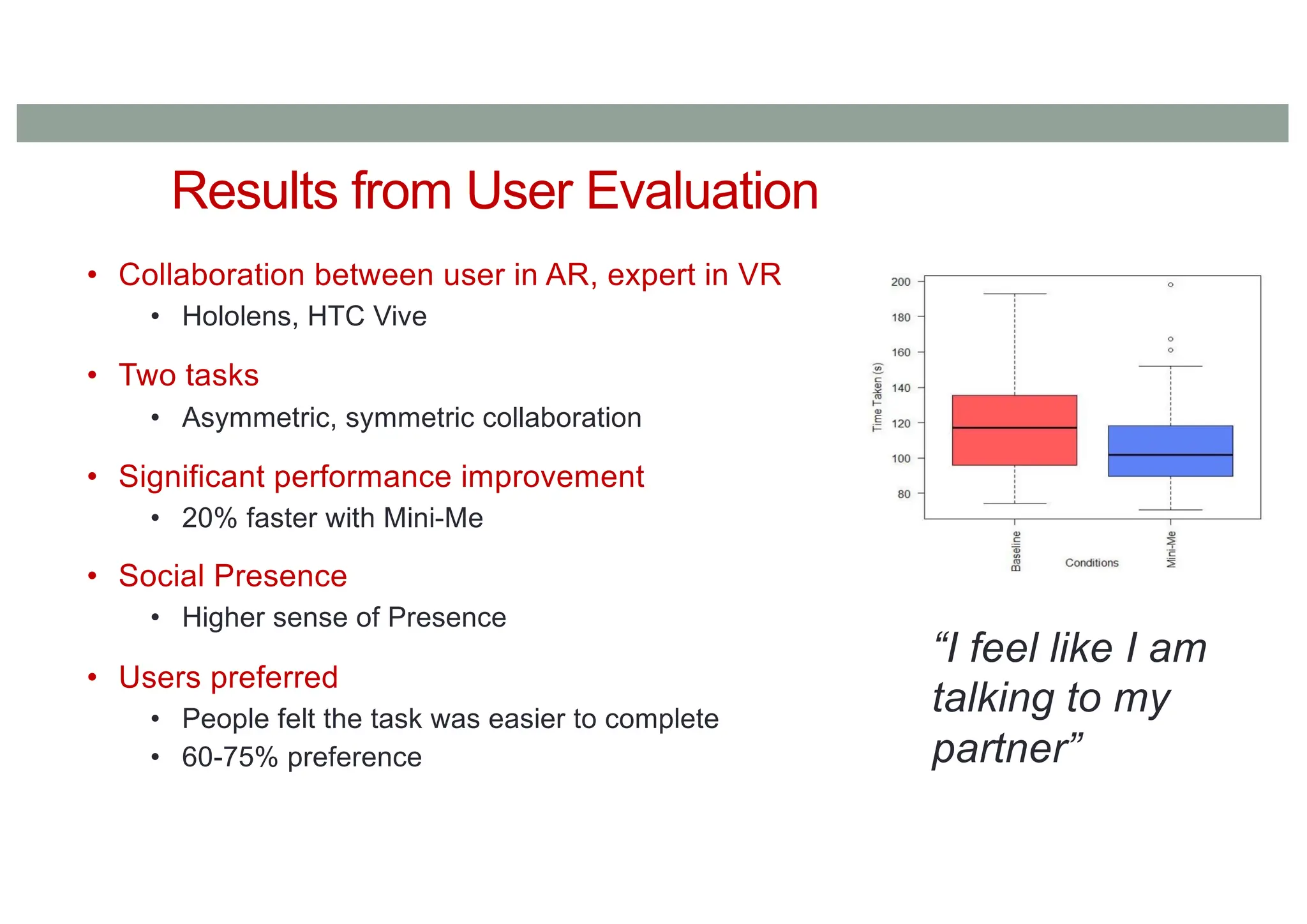

Keynote speech given by Mark Billinghurst at the 11th International Conference on Innovative Production and Construction (IPC2025) on August 8th, 2025. This talk gives an overview of Empathic Computing and its application to supporting remote collaboration in construction engineering.

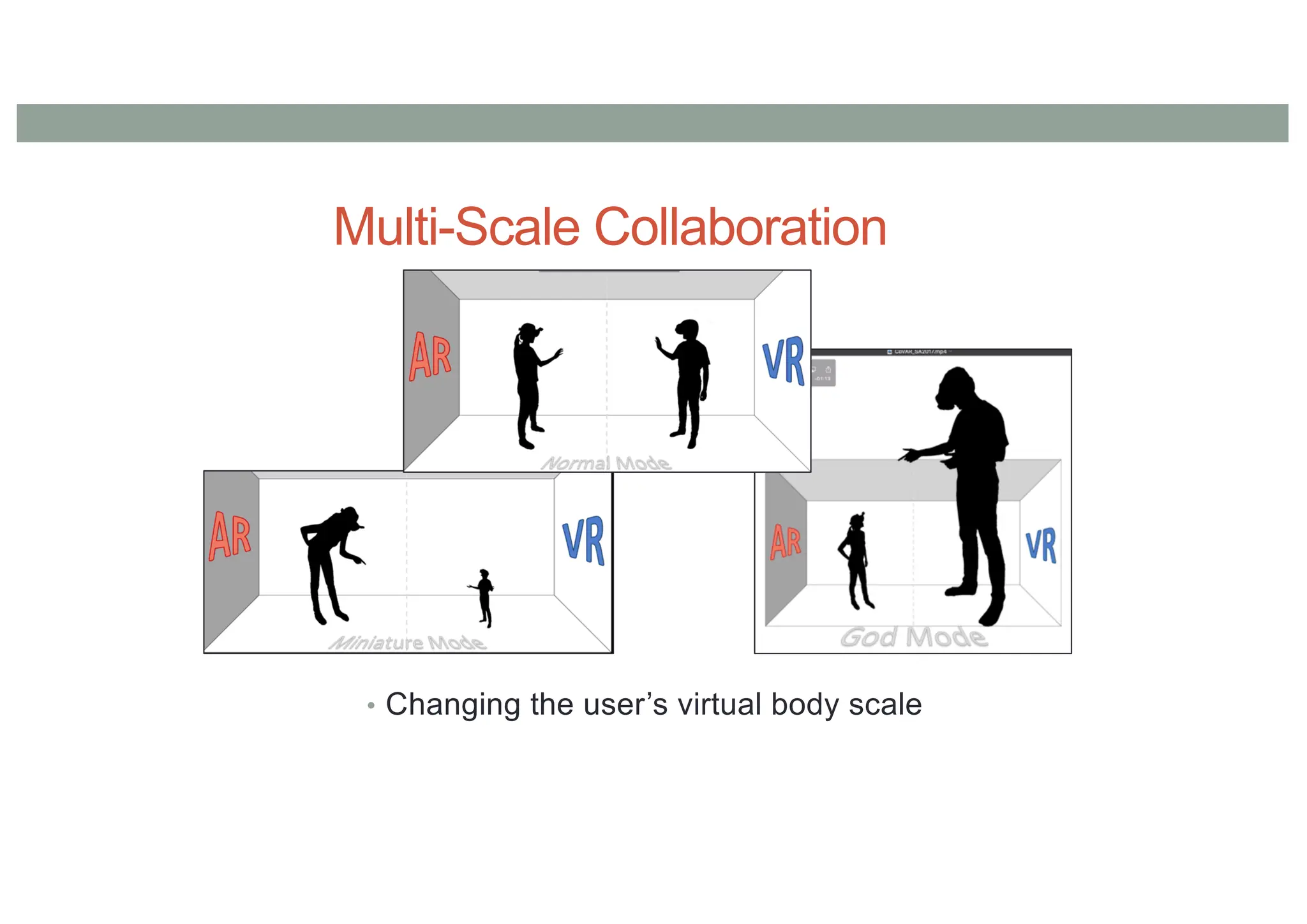

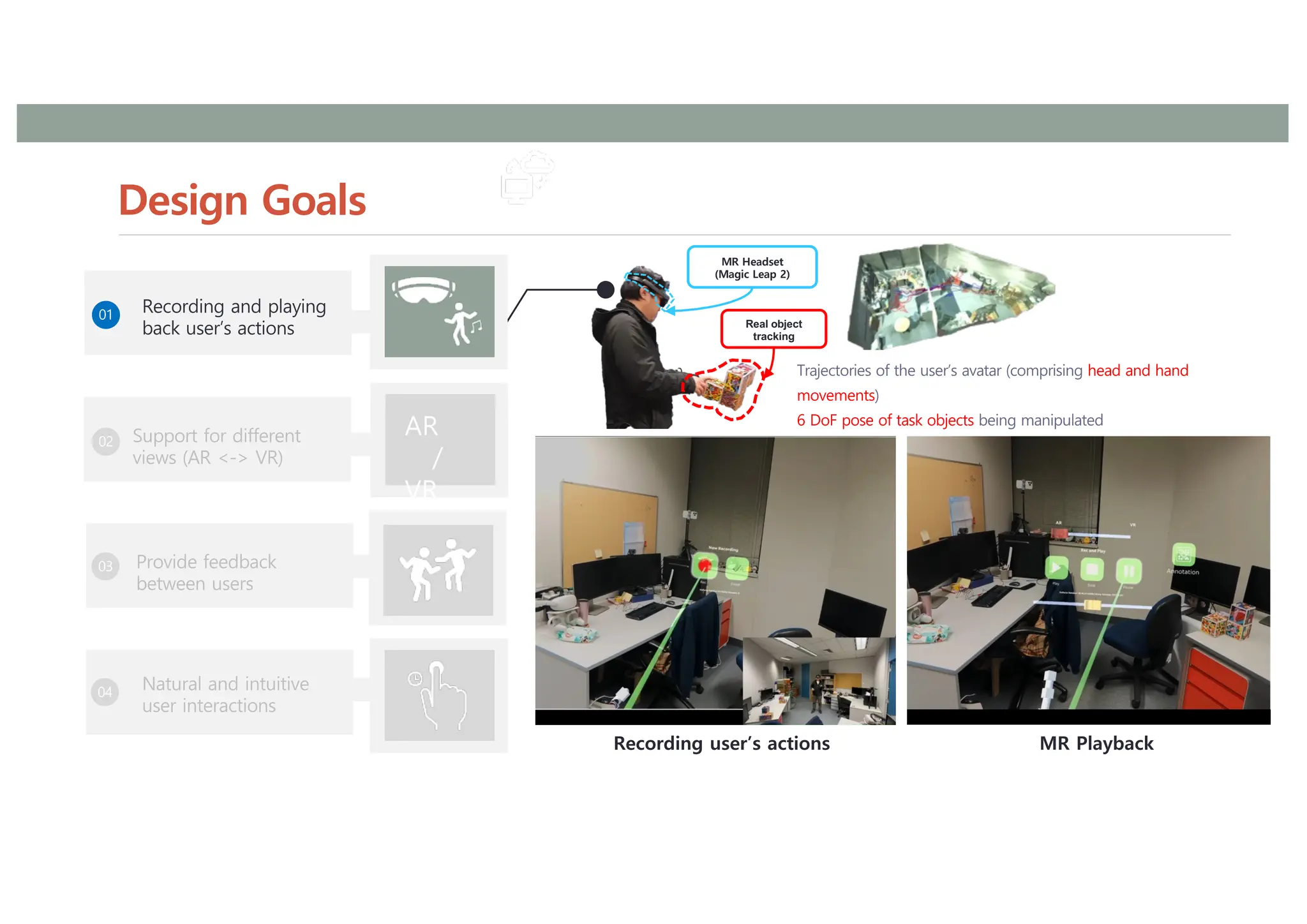

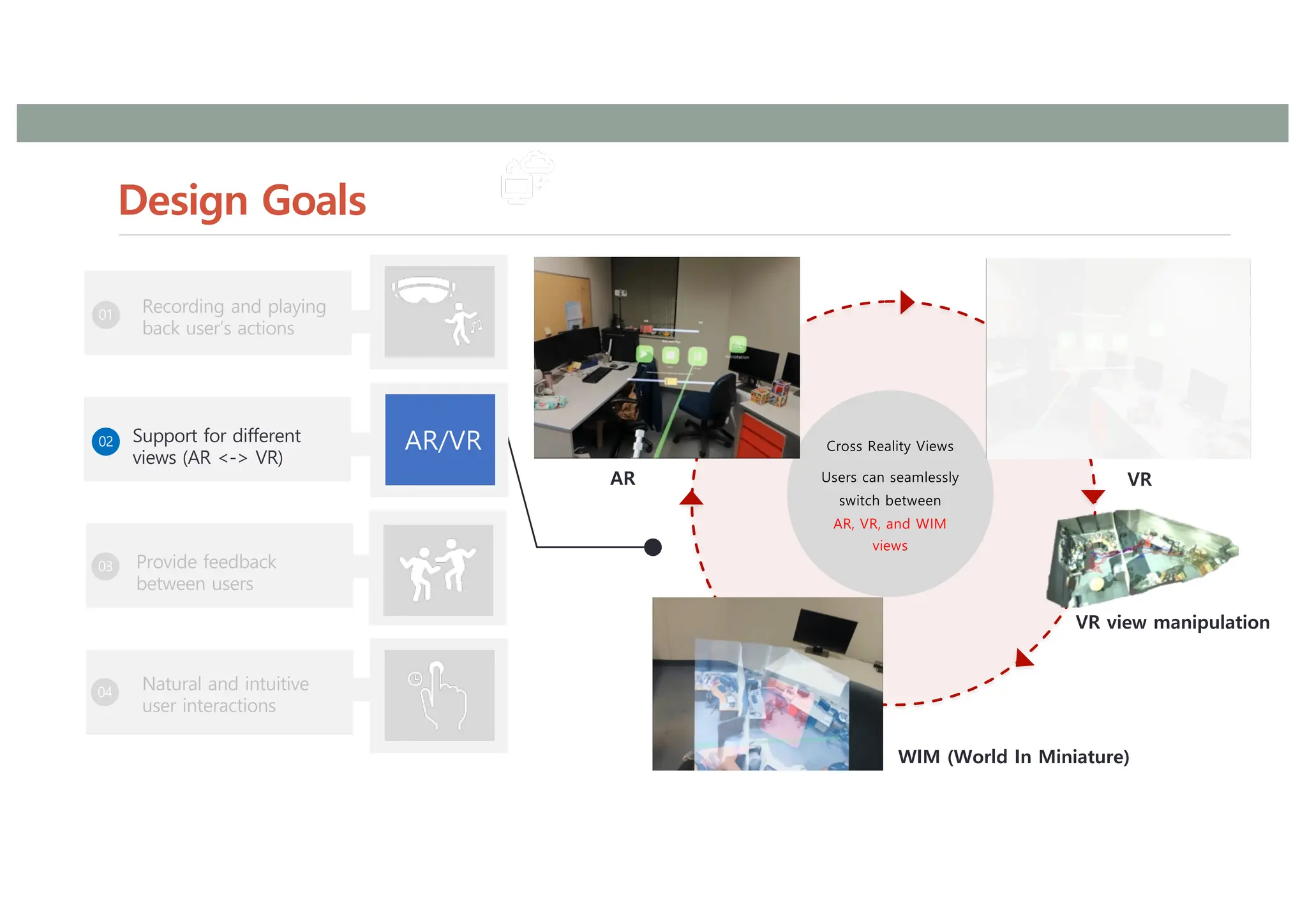

![Slide 37: Design Goals. AR/VR with seamless transition (AR -> VR, VR -> AR); avatar and virtual replica.](https://image.slidesharecdn.com/empathiccomputingoverview-250808061115-cf36a9e4/75/Empathic-Computing-Creating-Shared-Understanding-37-2048.jpg)

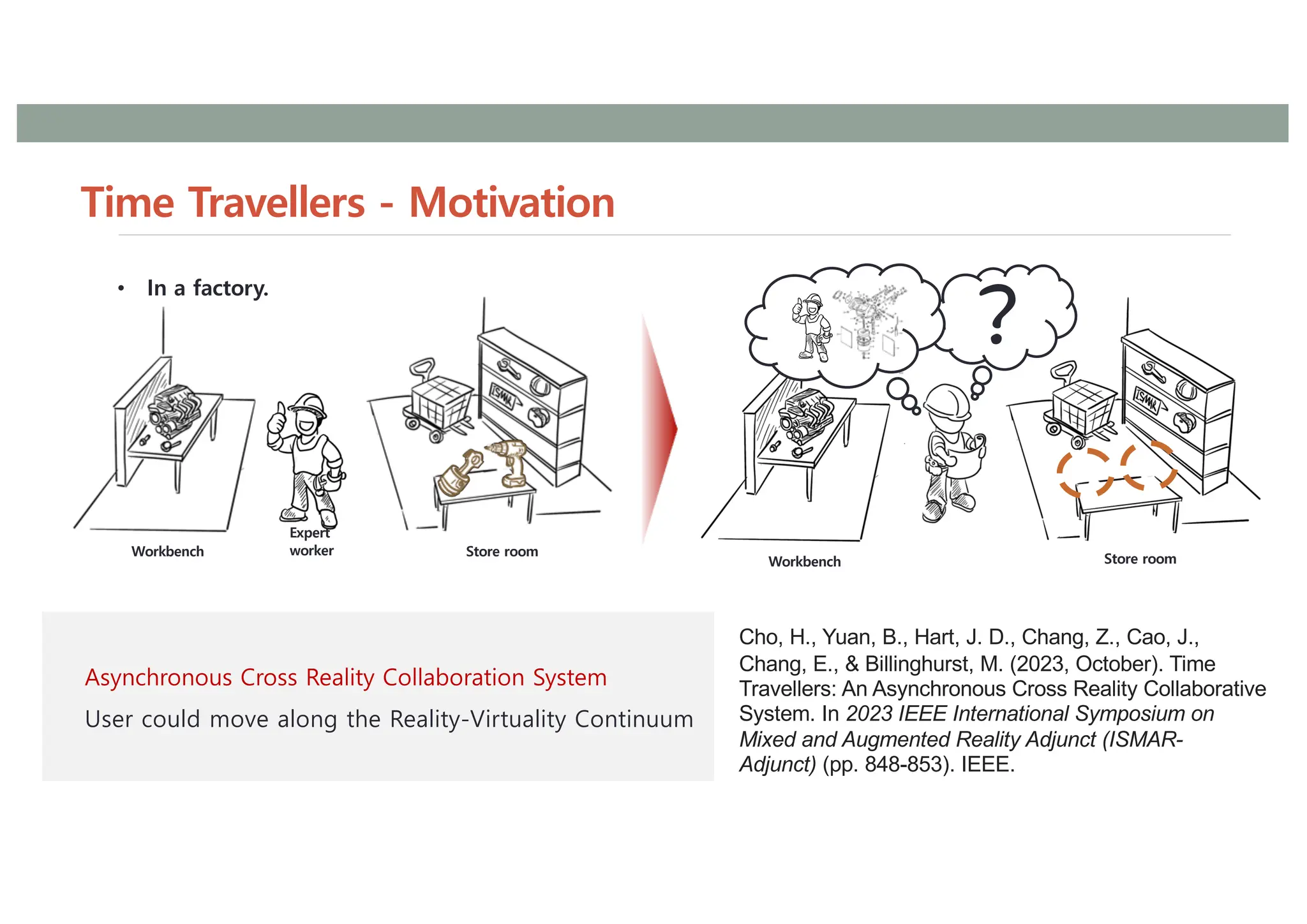

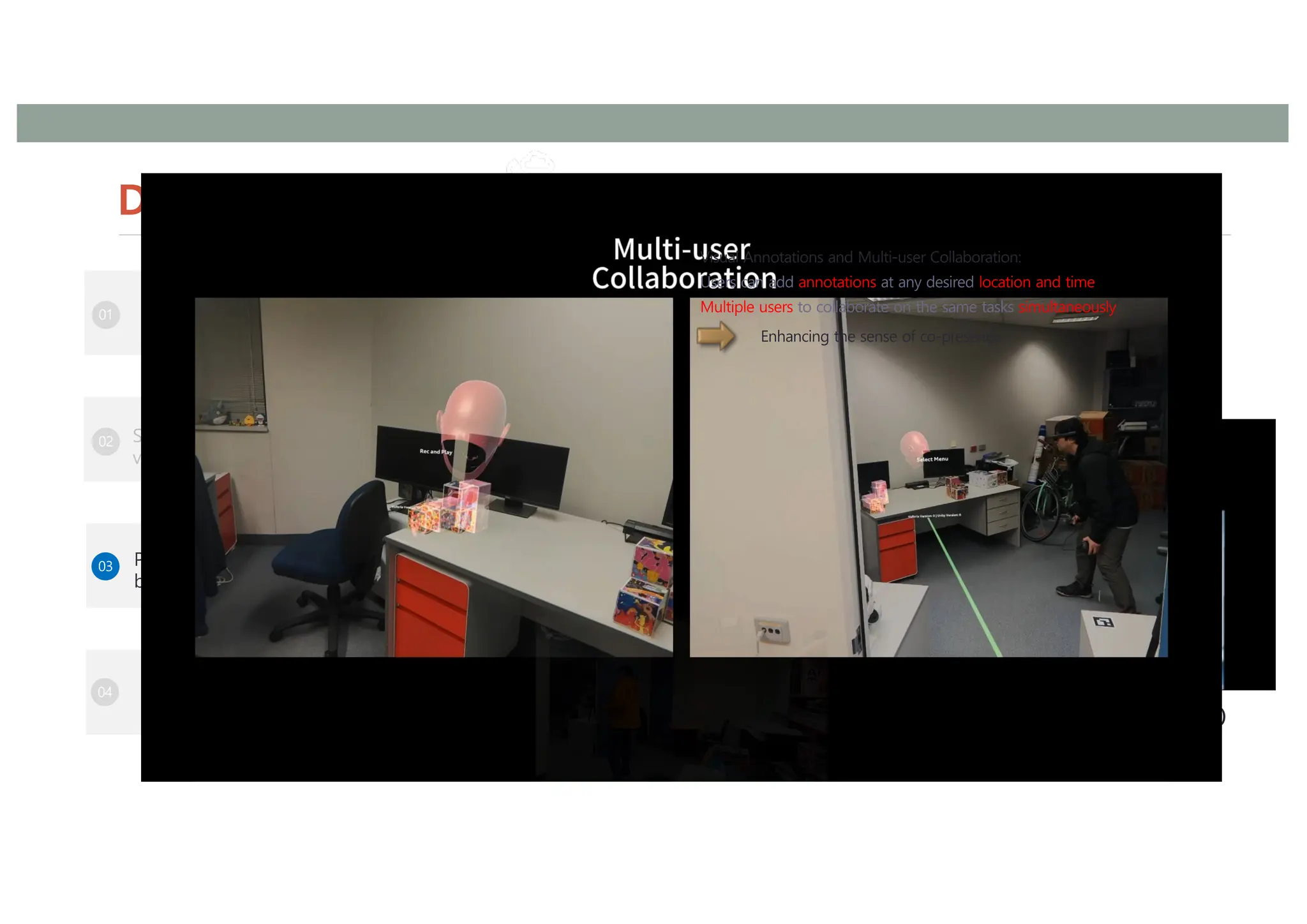

![Slide 38: Time Travellers Overview, a cross reality asynchronous collaborative system. Step 1: Recording an expert’s standard process. Step 2: Reviewing the recorded process through the hybrid cross-reality playback system. Diagram labels: 1st User, 2nd User, MR Headset (Magic Leap 2), real object tracking, 1st User’s view, 2nd User’s view, visual annotation, avatar interaction, timeline manipulation, recording data (3D workspace; avatar and object spatial data), AR mode, VR mode.](https://image.slidesharecdn.com/empathiccomputingoverview-250808061115-cf36a9e4/75/Empathic-Computing-Creating-Shared-Understanding-38-2048.jpg)