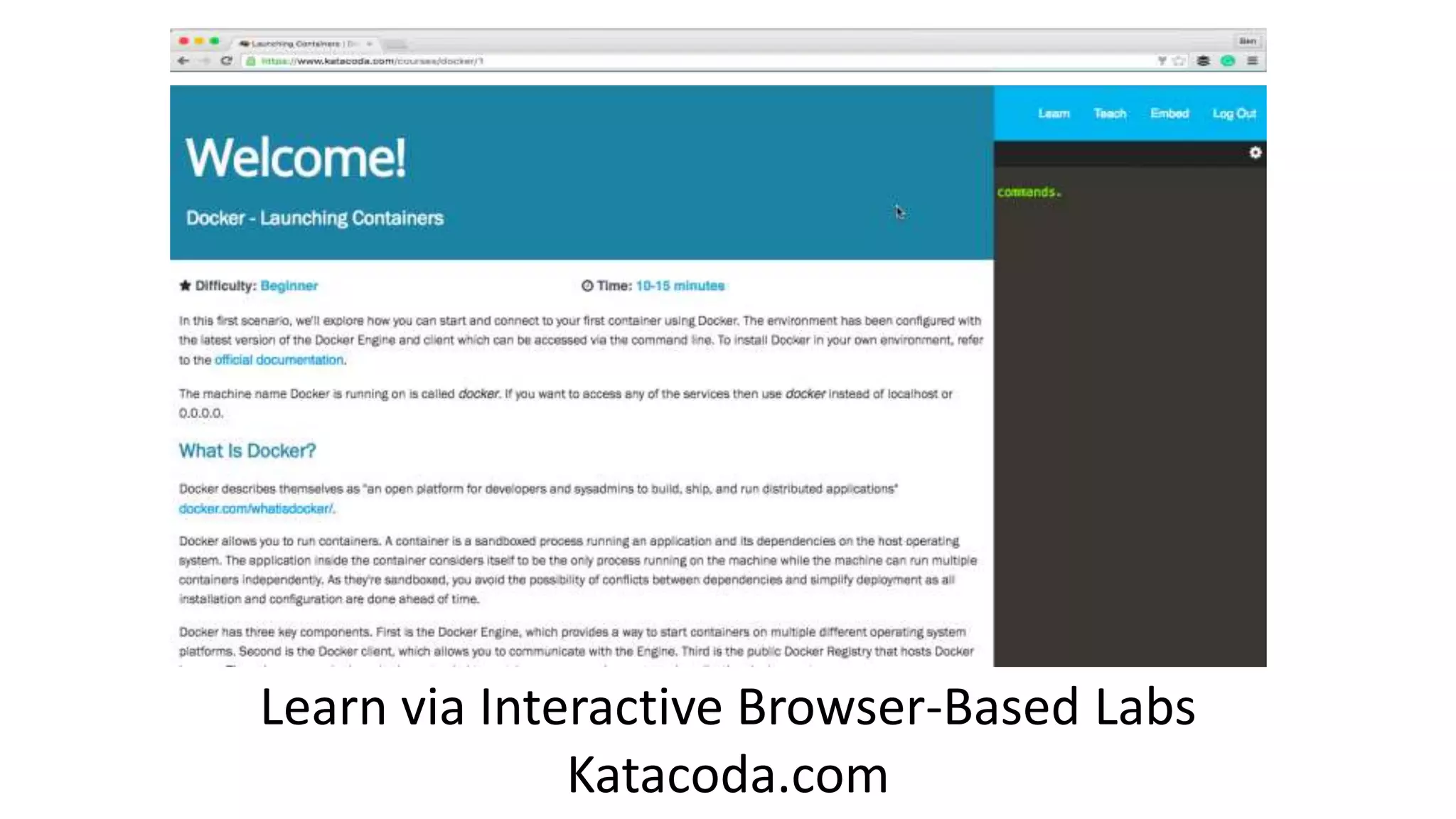

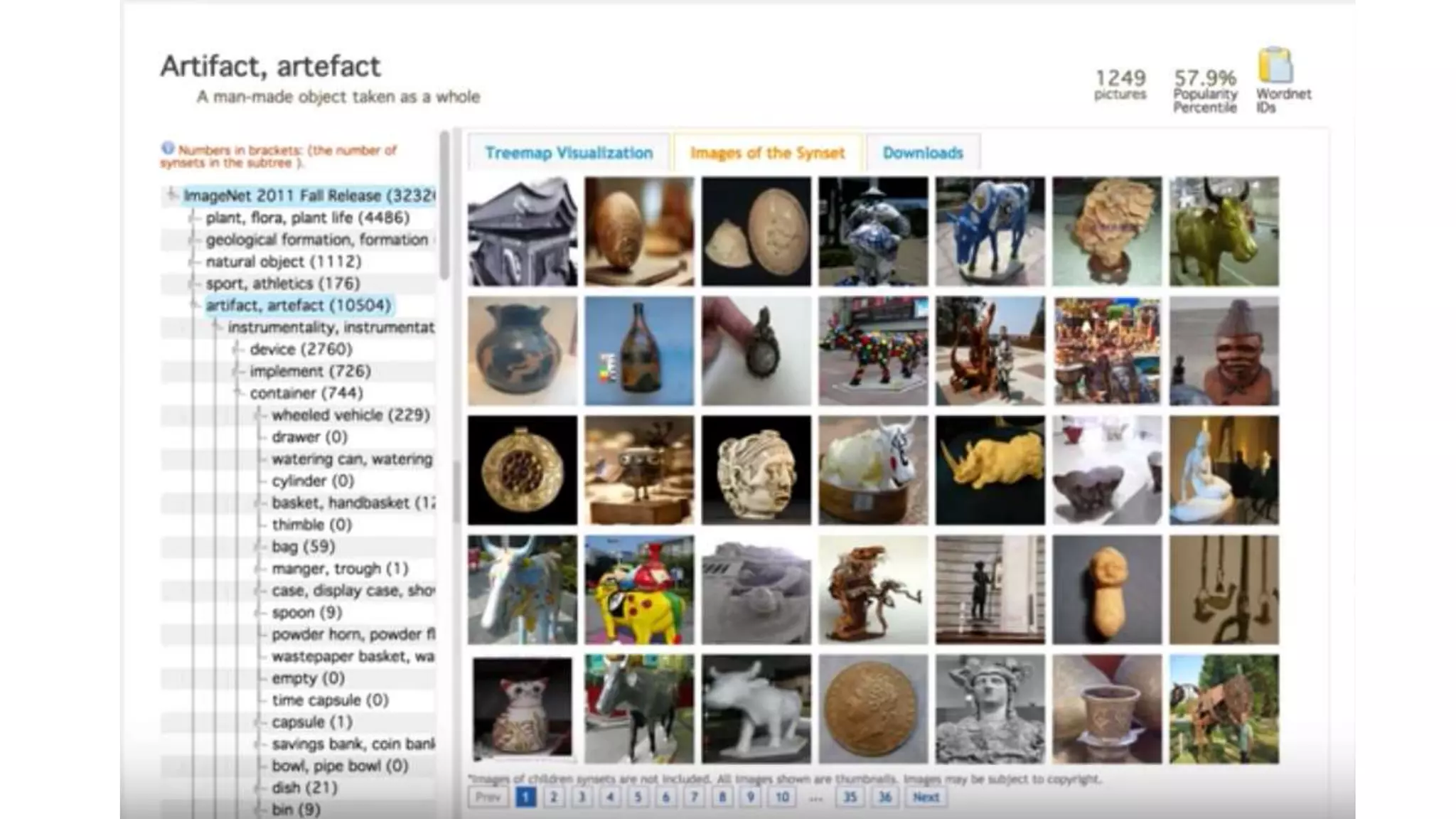

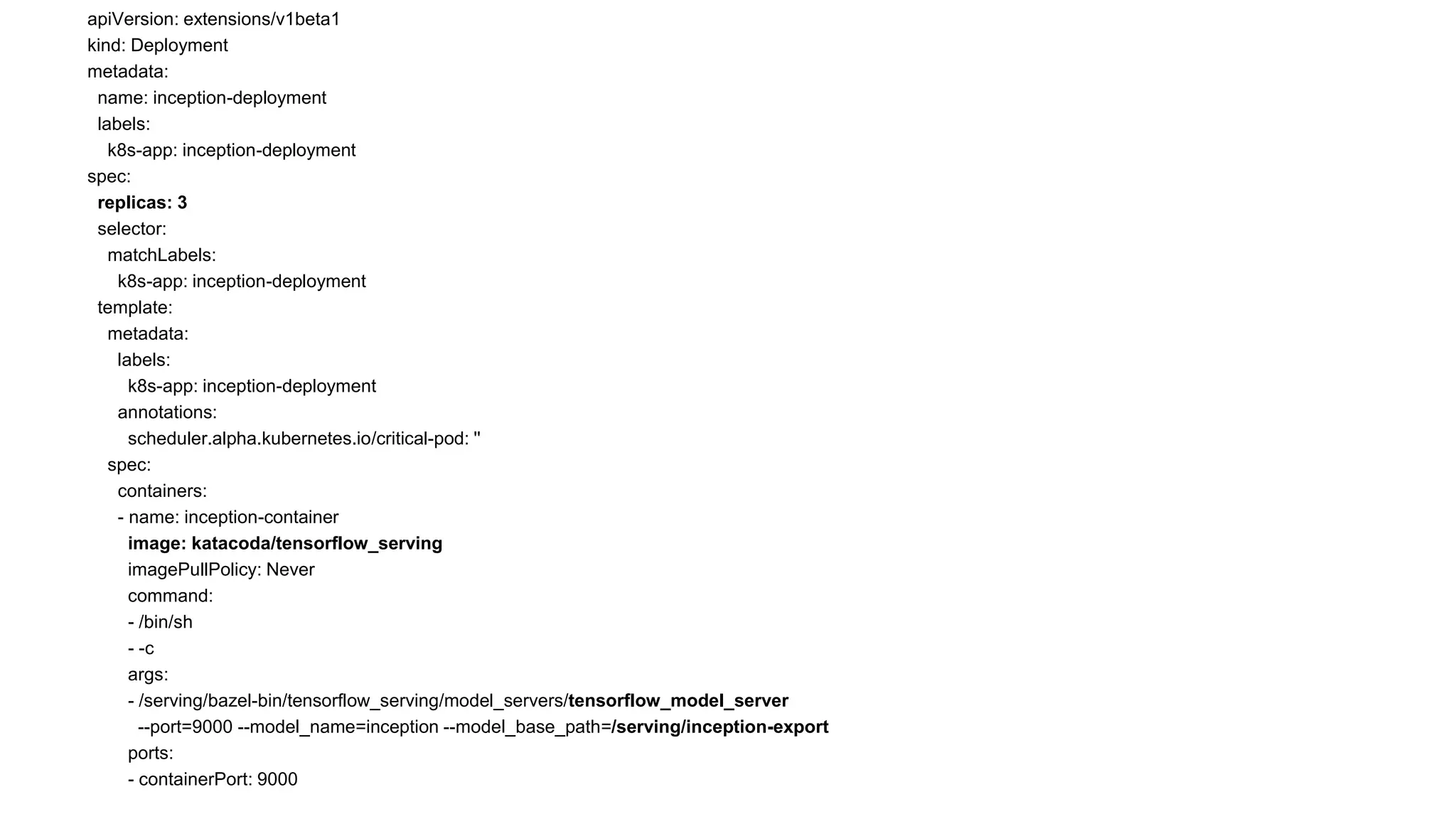

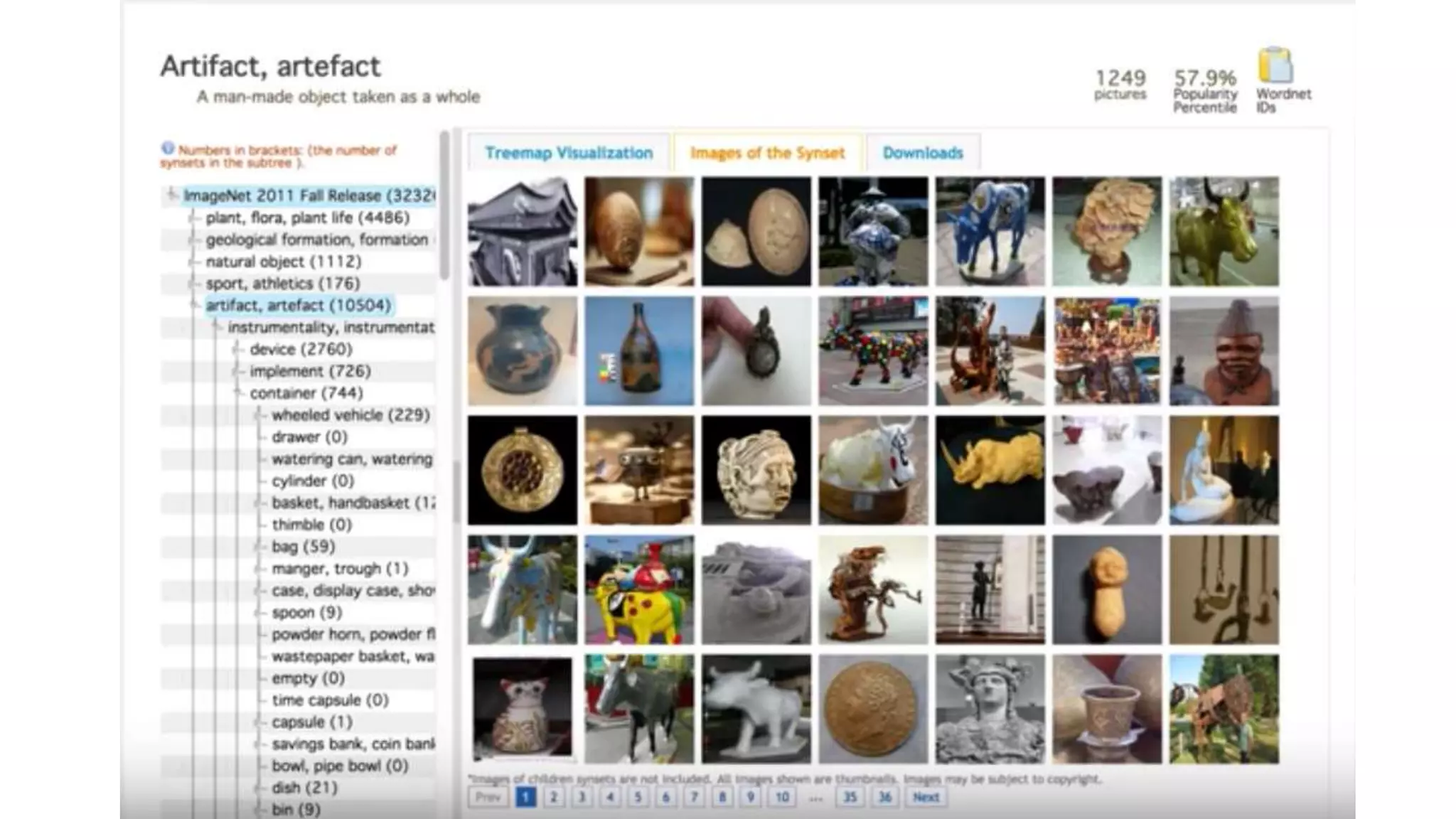

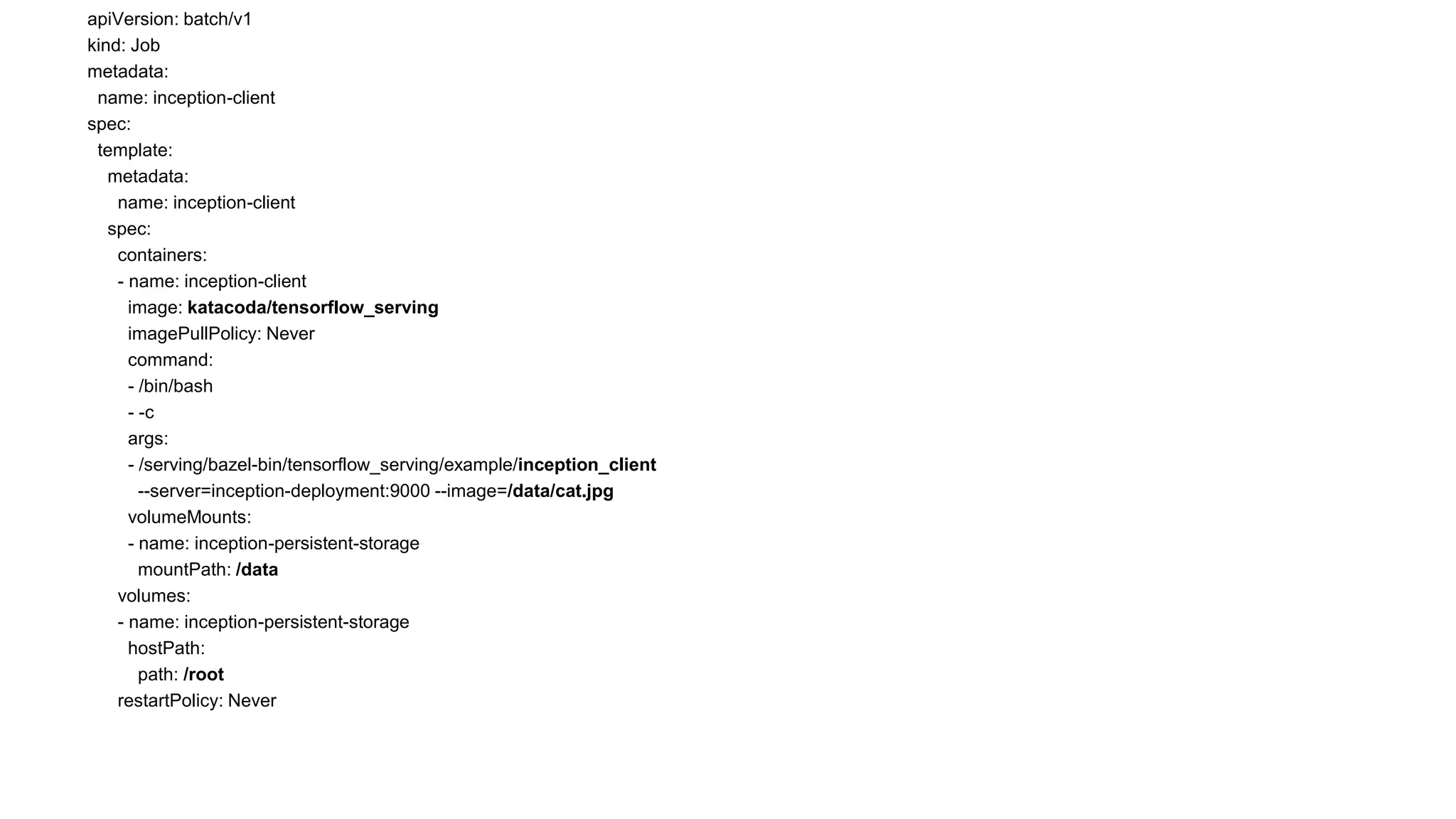

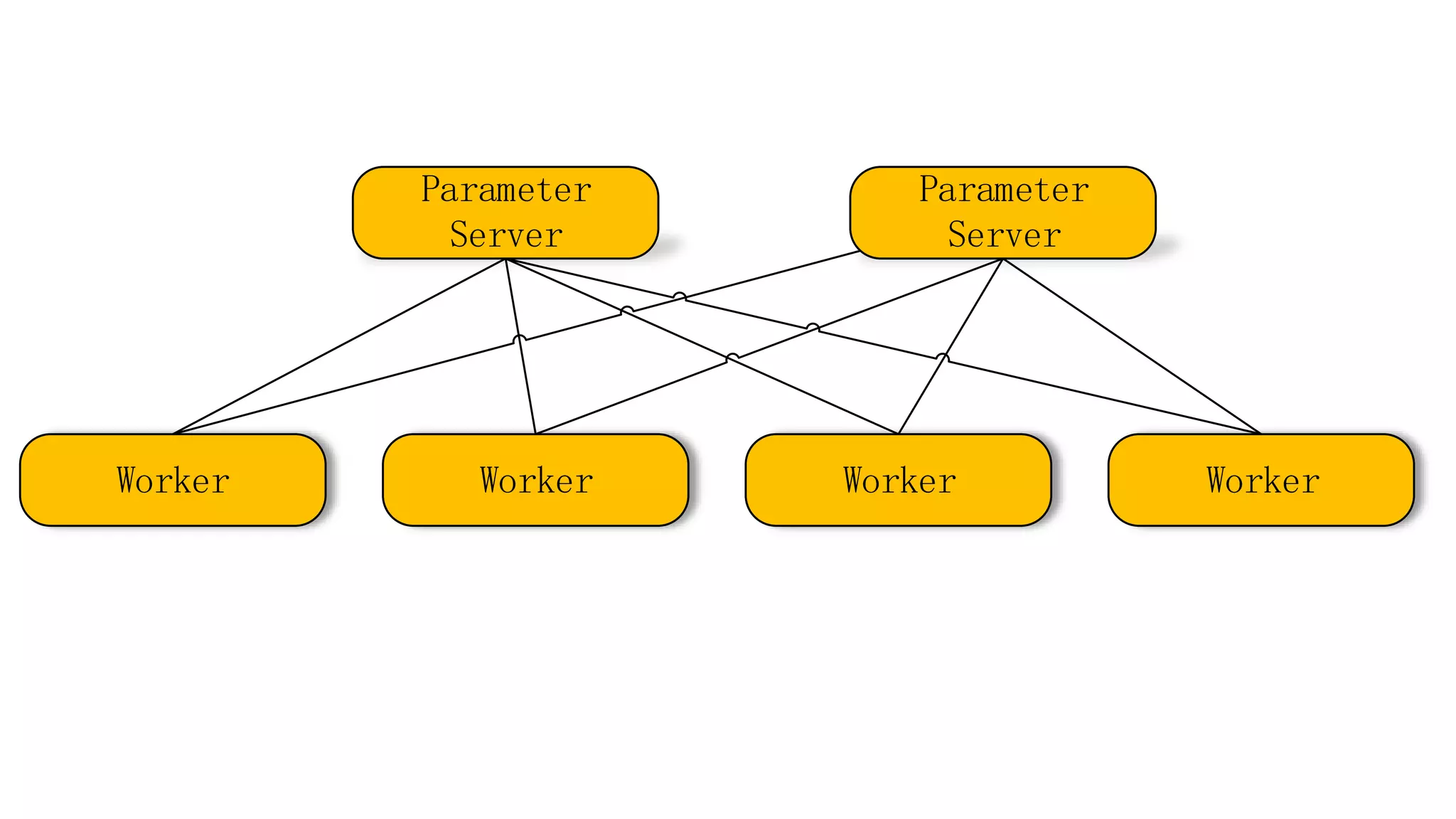

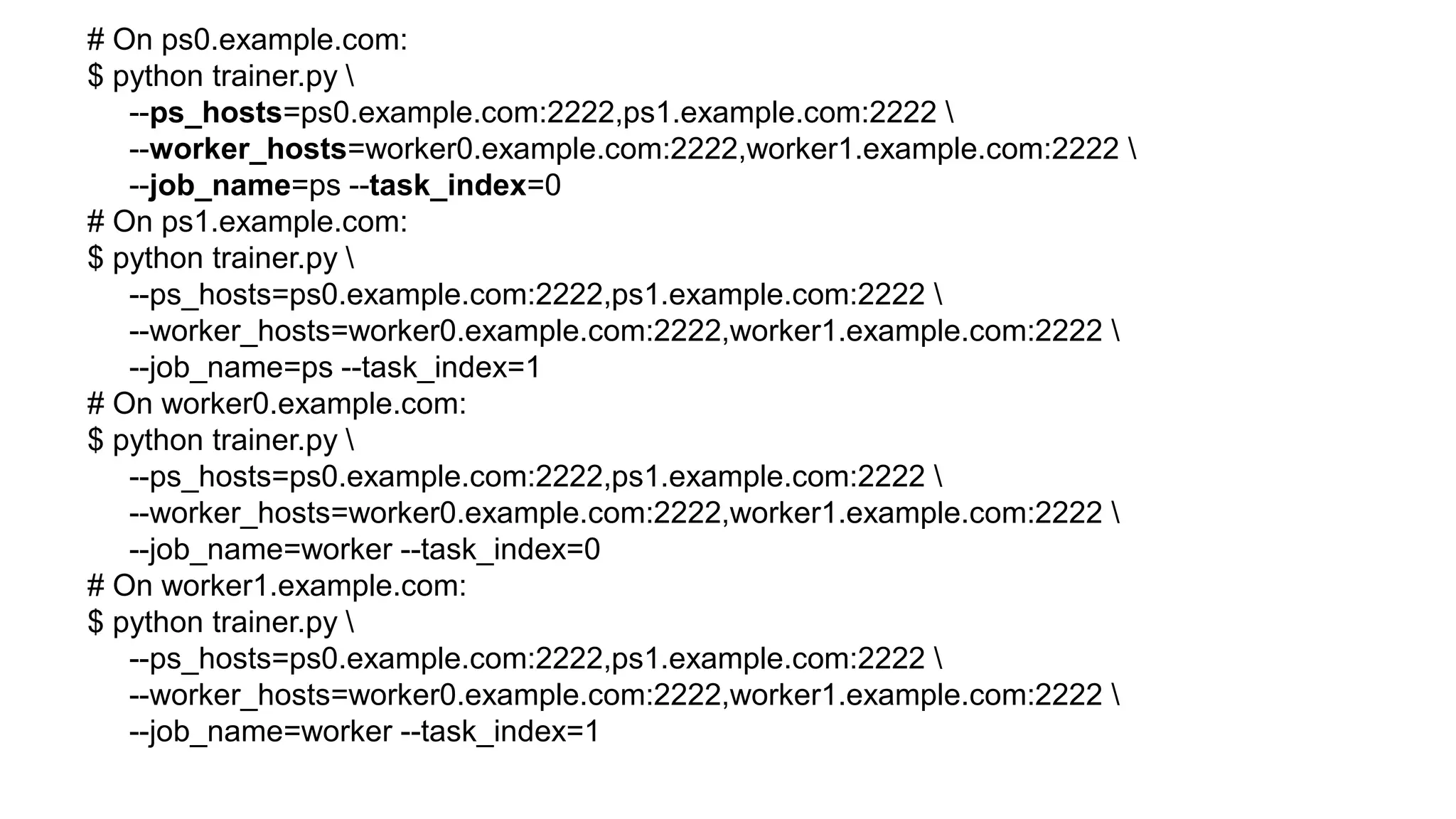

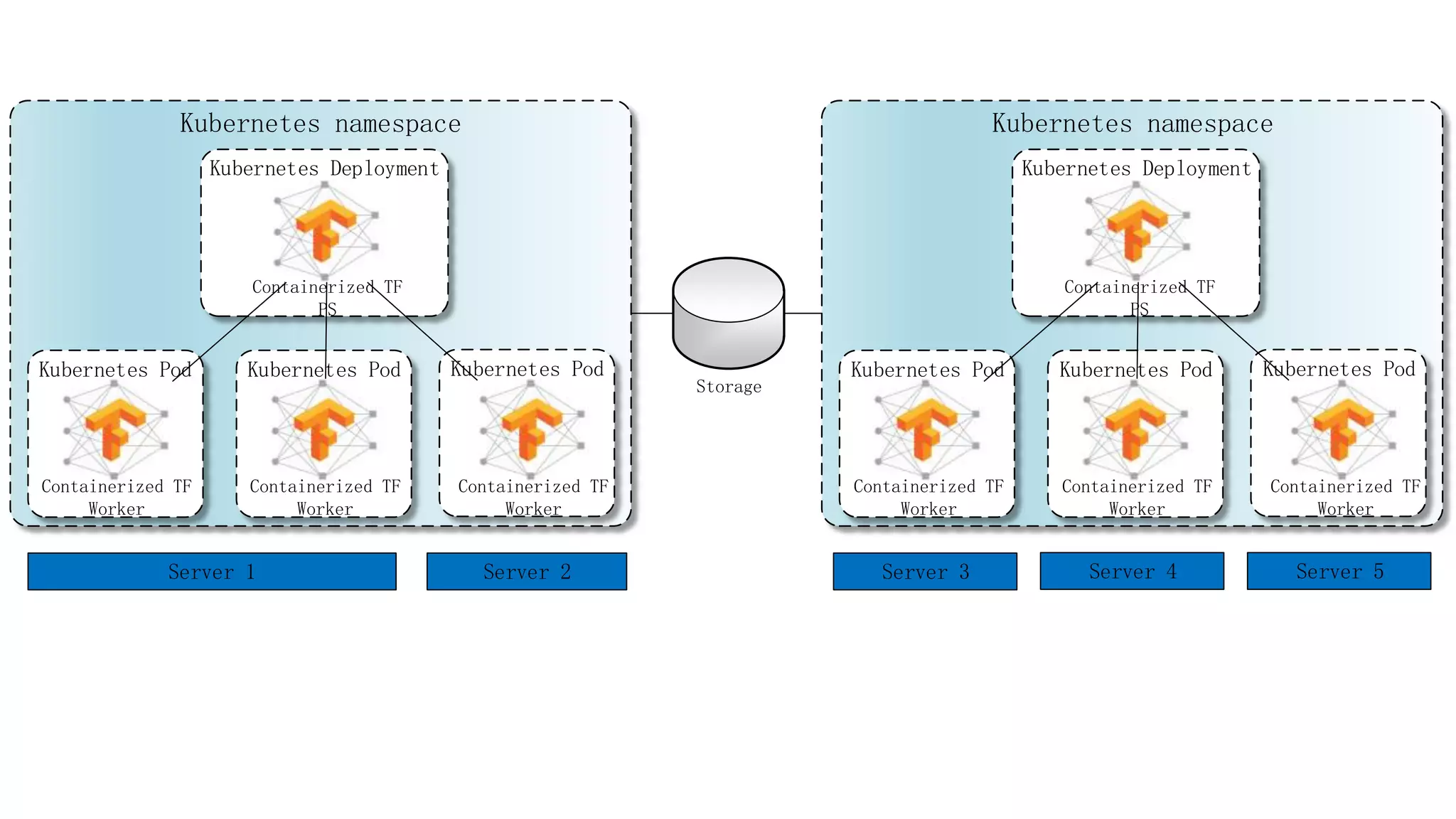

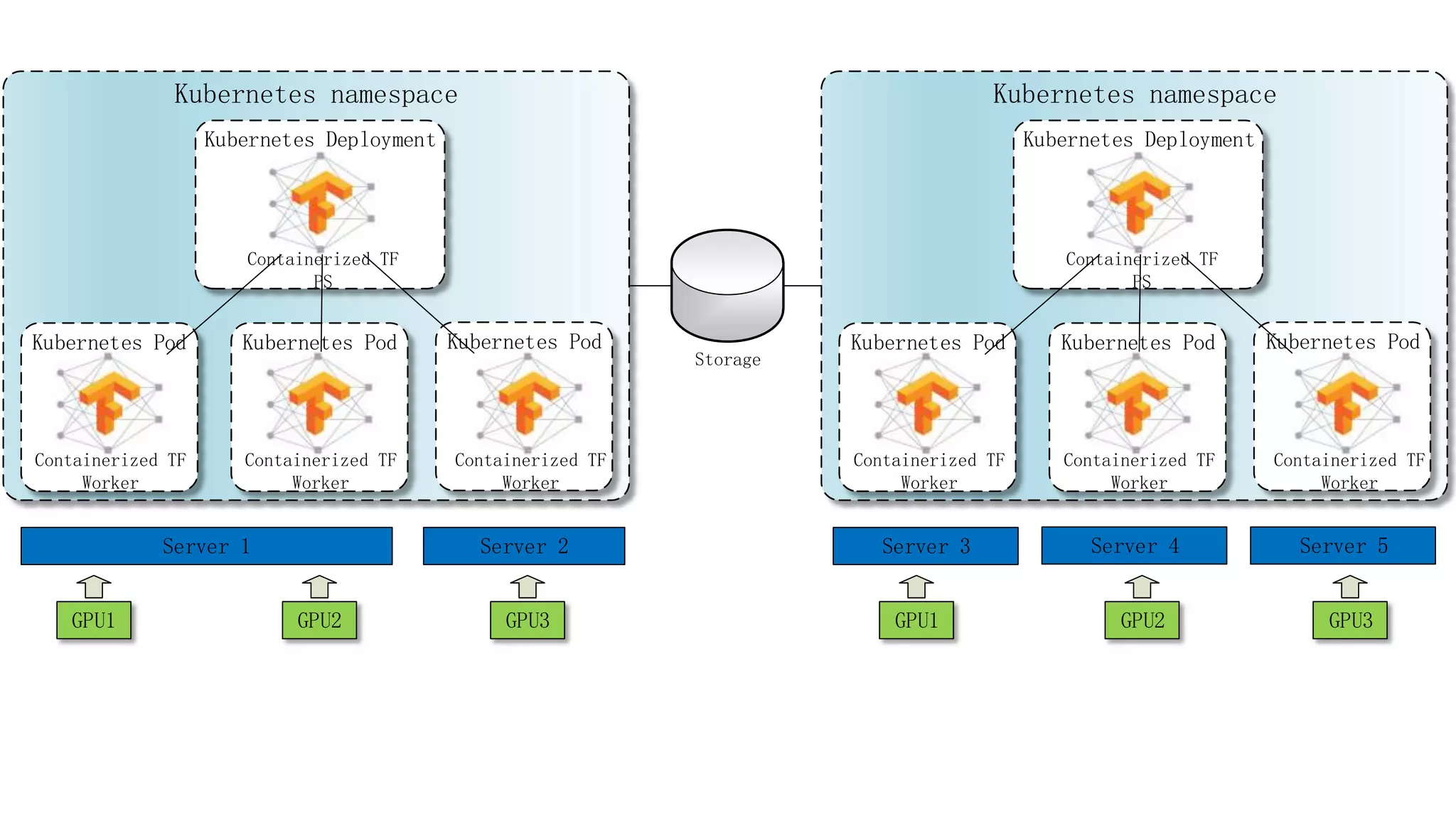

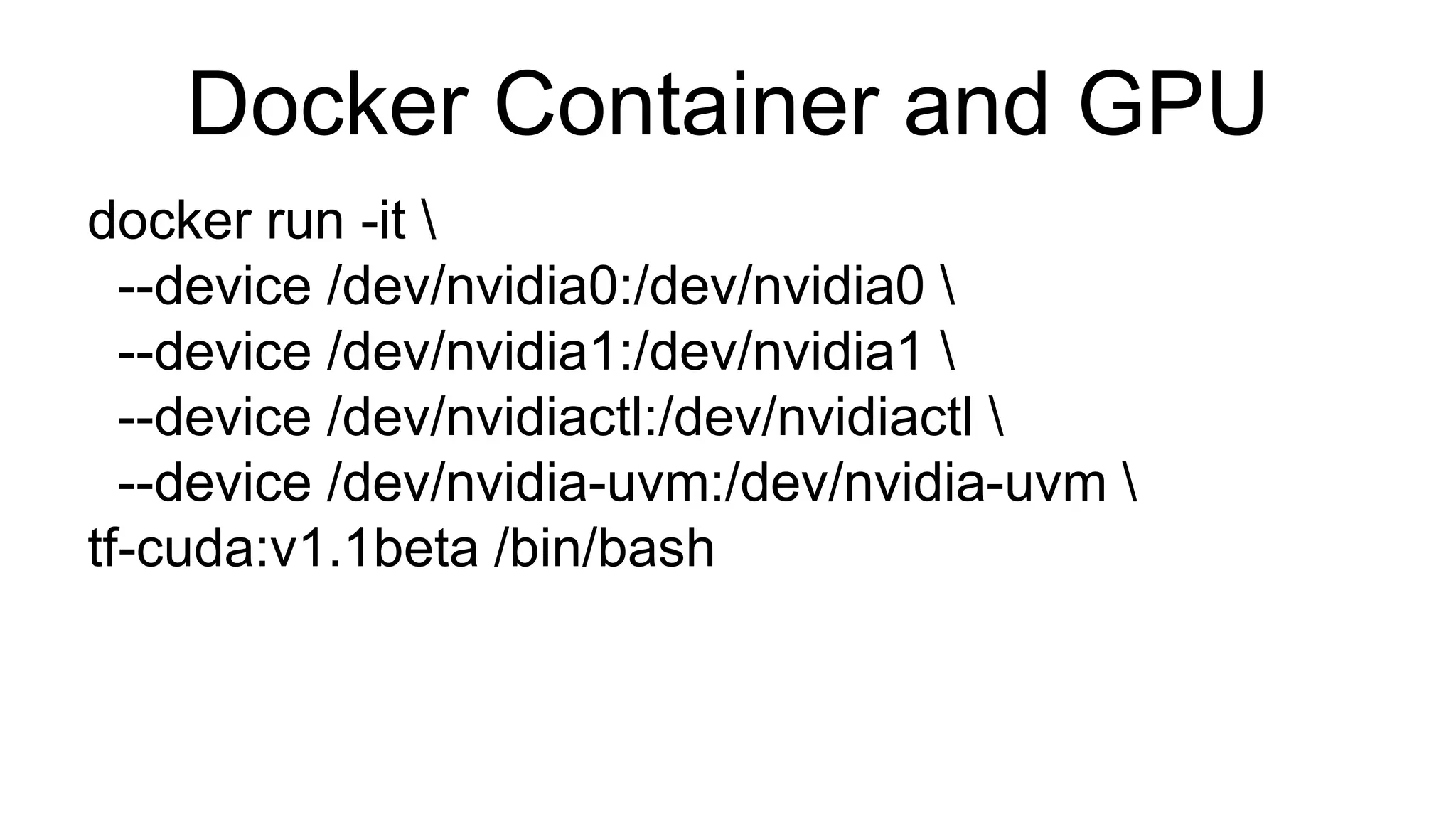

This document discusses experimenting with Kubernetes and TensorFlow. It begins with an introduction to the author and an overview of learning through interactive, browser-based labs on Katacoda.com. It then demonstrates setting up Minikube and kubeadm to create Kubernetes clusters and deploying TensorFlow models and services on Kubernetes using Deployments and Jobs, and it covers considerations for scaling Kubernetes and TensorFlow workloads. It concludes with a call to action for others to share their experiences by writing scenarios on Katacoda.