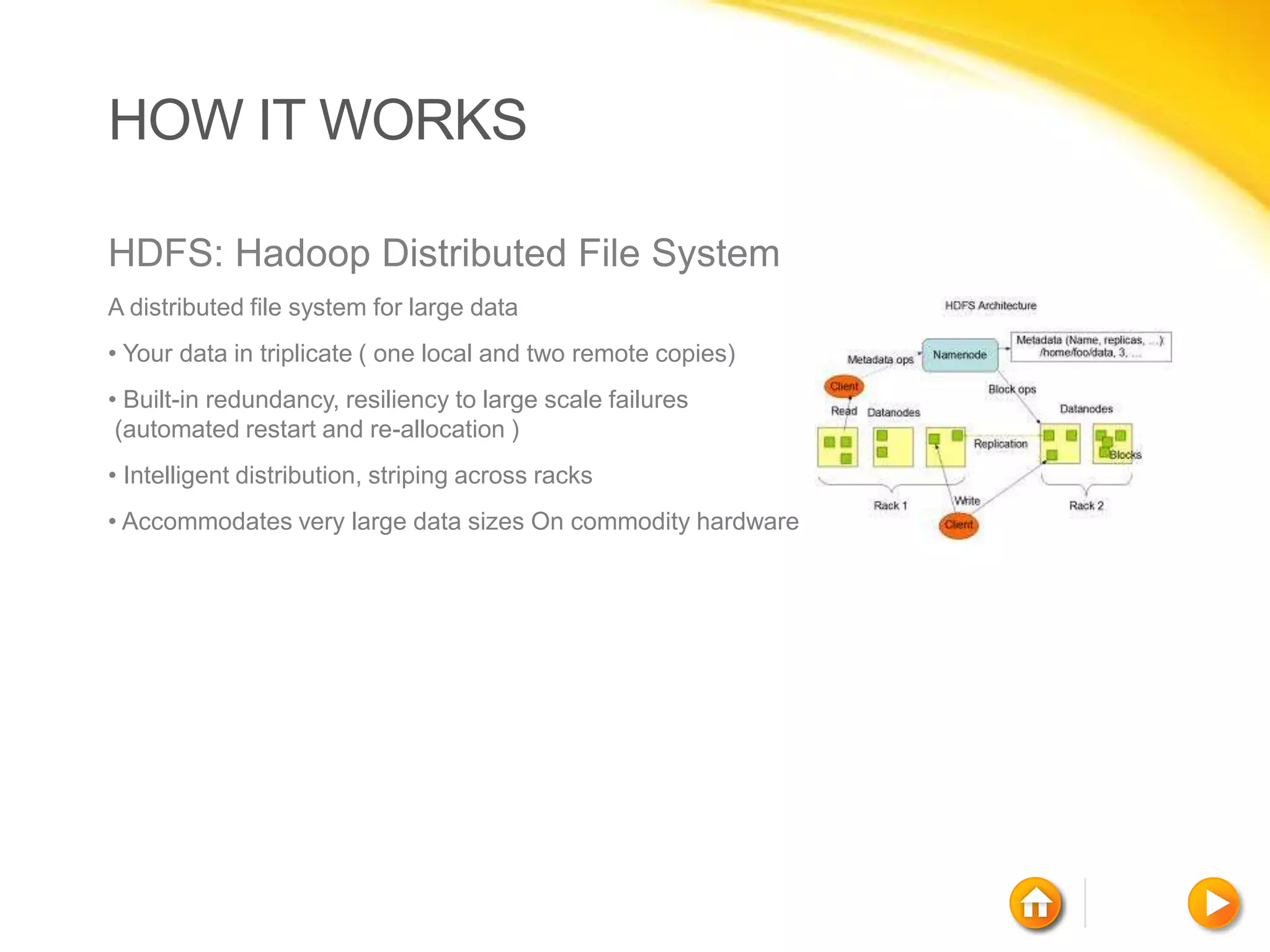

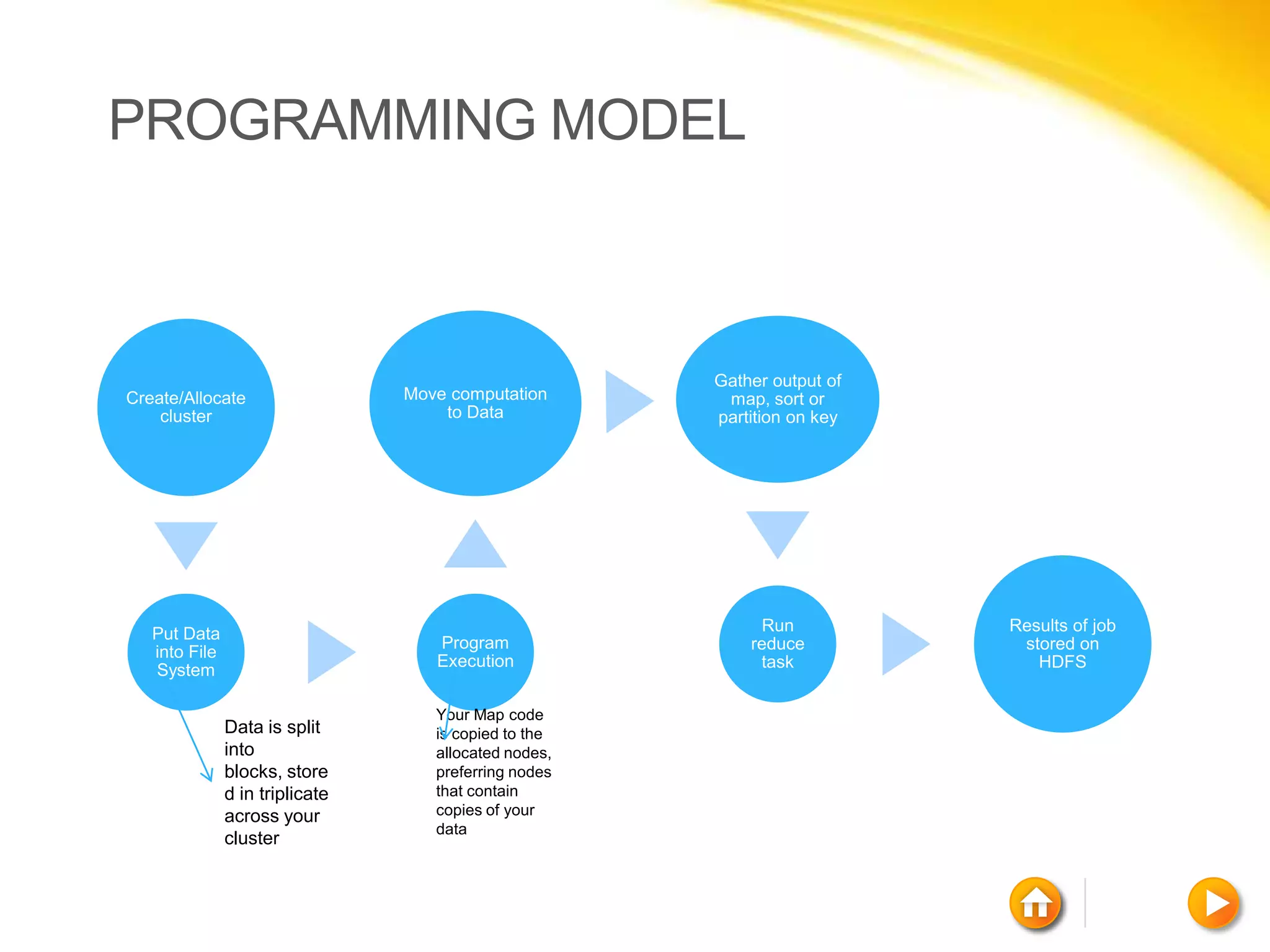

This document provides an overview of Hadoop and distributed cloud computing. It defines cloud computing as the delivery of computing resources, such as software, hardware, and utilities, over a network. Distributed cloud computing partitions a large problem across many networked computers so that the pieces can be solved in parallel; Hadoop is one example of this approach. Hadoop is an open-source software platform that can scale across clusters of thousands of computers to process very large amounts of data efficiently. It uses the Hadoop Distributed File System (HDFS) for distributed storage and the MapReduce programming model to distribute computations across nodes.
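The MapReduce model can be illustrated with the classic word-count problem. What follows is a minimal, hypothetical sketch: a single-process Python simulation of the map, shuffle, and reduce phases. It does not use Hadoop's own API, and the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are illustrative assumptions, not part of any library.

```python
from collections import defaultdict
from itertools import chain


def map_phase(split):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle(mapped_pairs):
    """Shuffle/sort: group all intermediate values by key, ordered by key."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(splits):
    # In Hadoop each split would be handled by a separate mapper, possibly on
    # a different node; in this simulation they simply run one after another.
    mapped = chain.from_iterable(map_phase(s) for s in splits)
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    splits = ["Hadoop stores data in HDFS", "MapReduce processes data in parallel"]
    print(word_count(splits))
    # → {'data': 2, 'hadoop': 1, 'hdfs': 1, 'in': 2, 'mapreduce': 1, 'parallel': 1, 'processes': 1, 'stores': 1}
```

In a real Hadoop job, each input split typically corresponds to an HDFS block processed by a mapper running near the data, and the framework performs the shuffle across the network before the reducers run. Because mappers and reducers only see their own slice of the data, the same logic can be spread over many nodes without changing the functions themselves.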