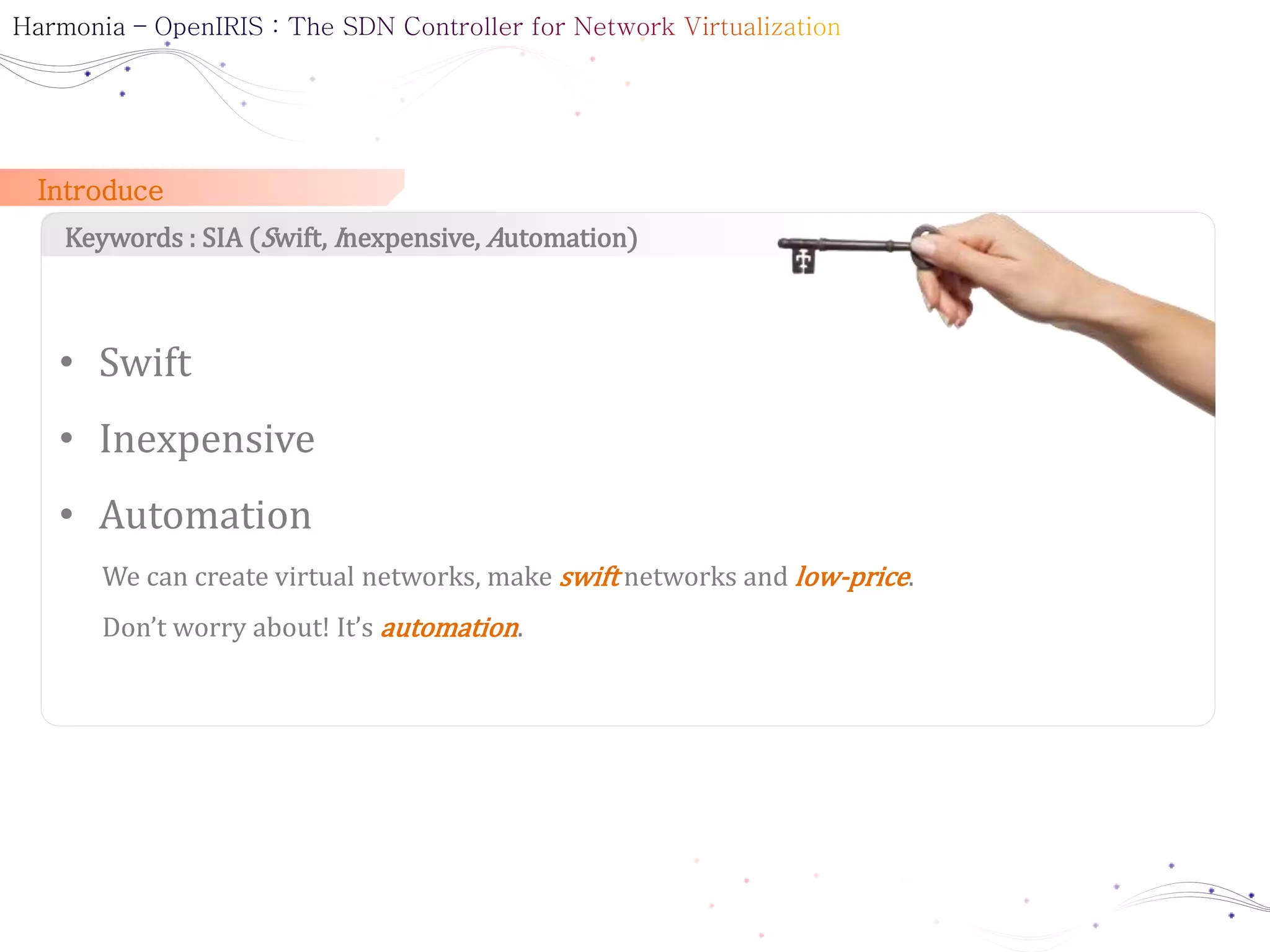

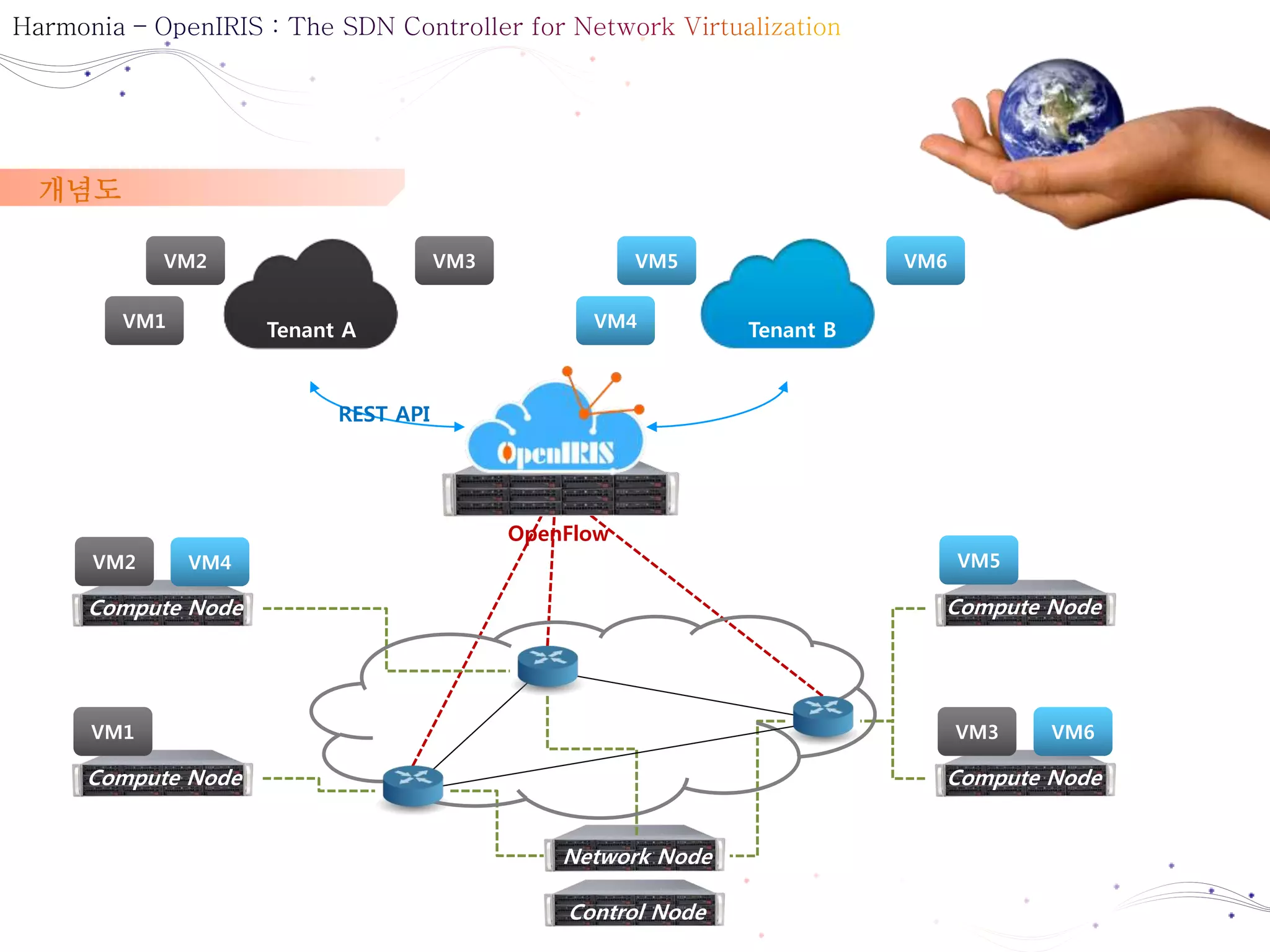

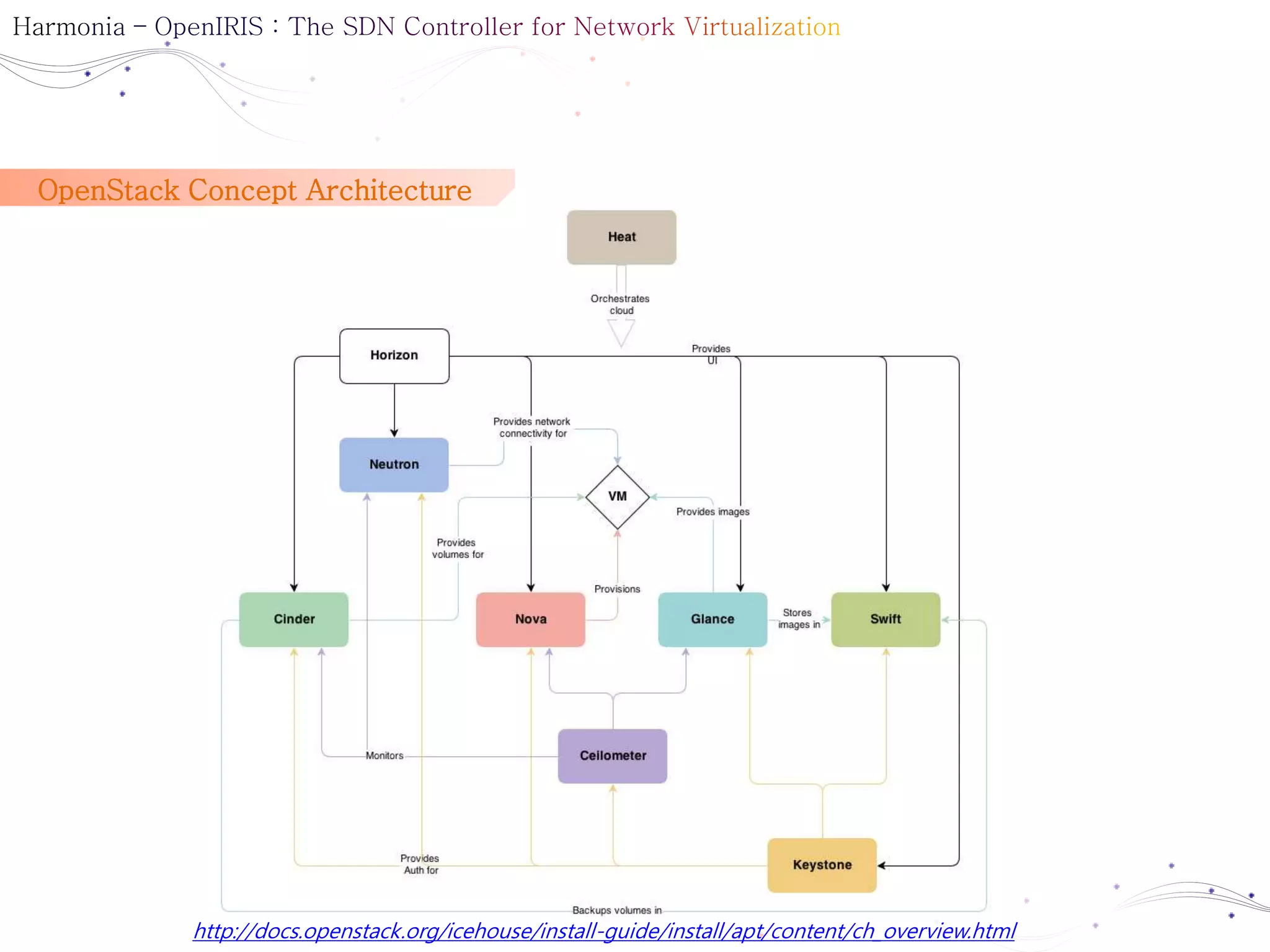

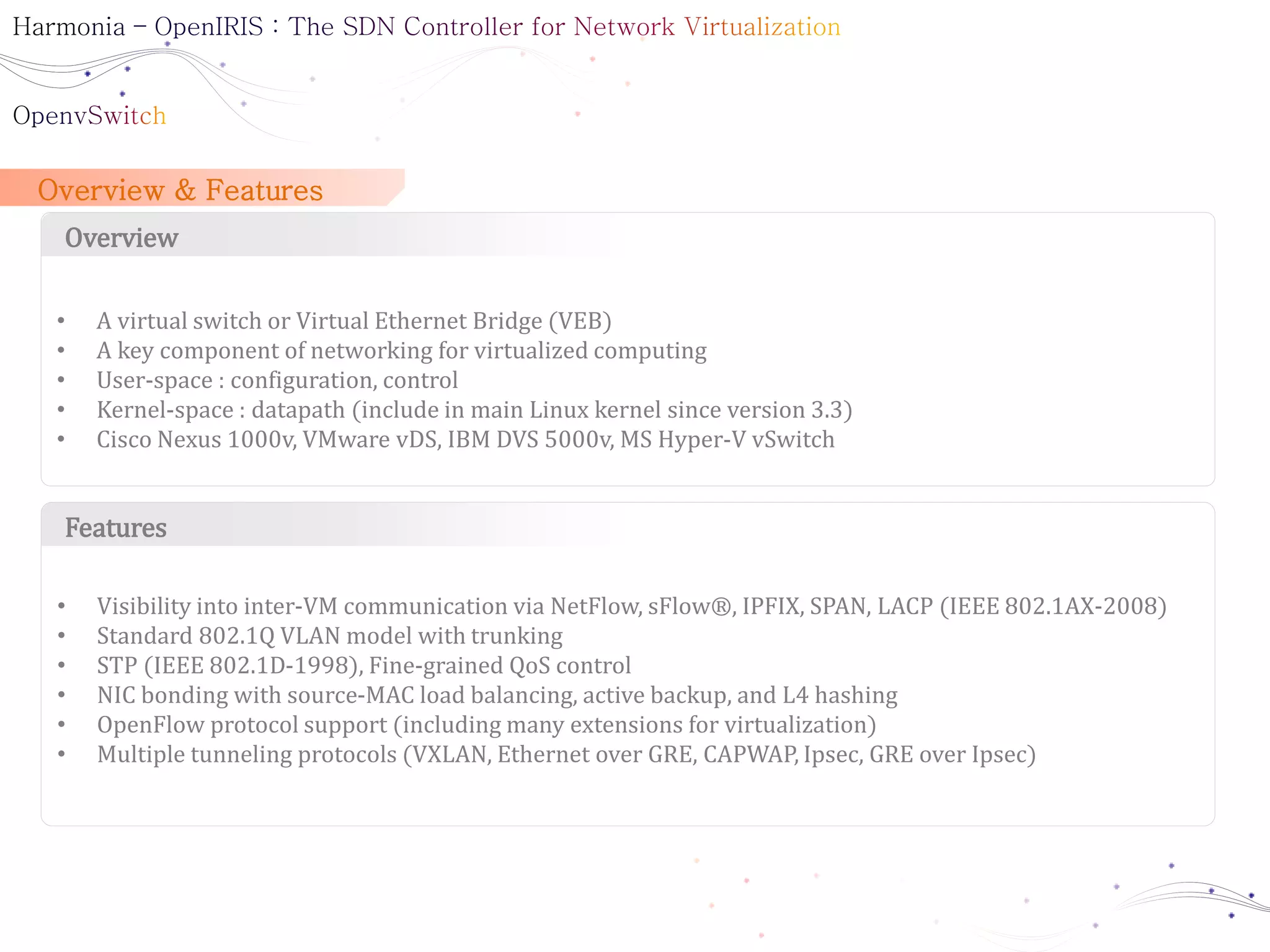

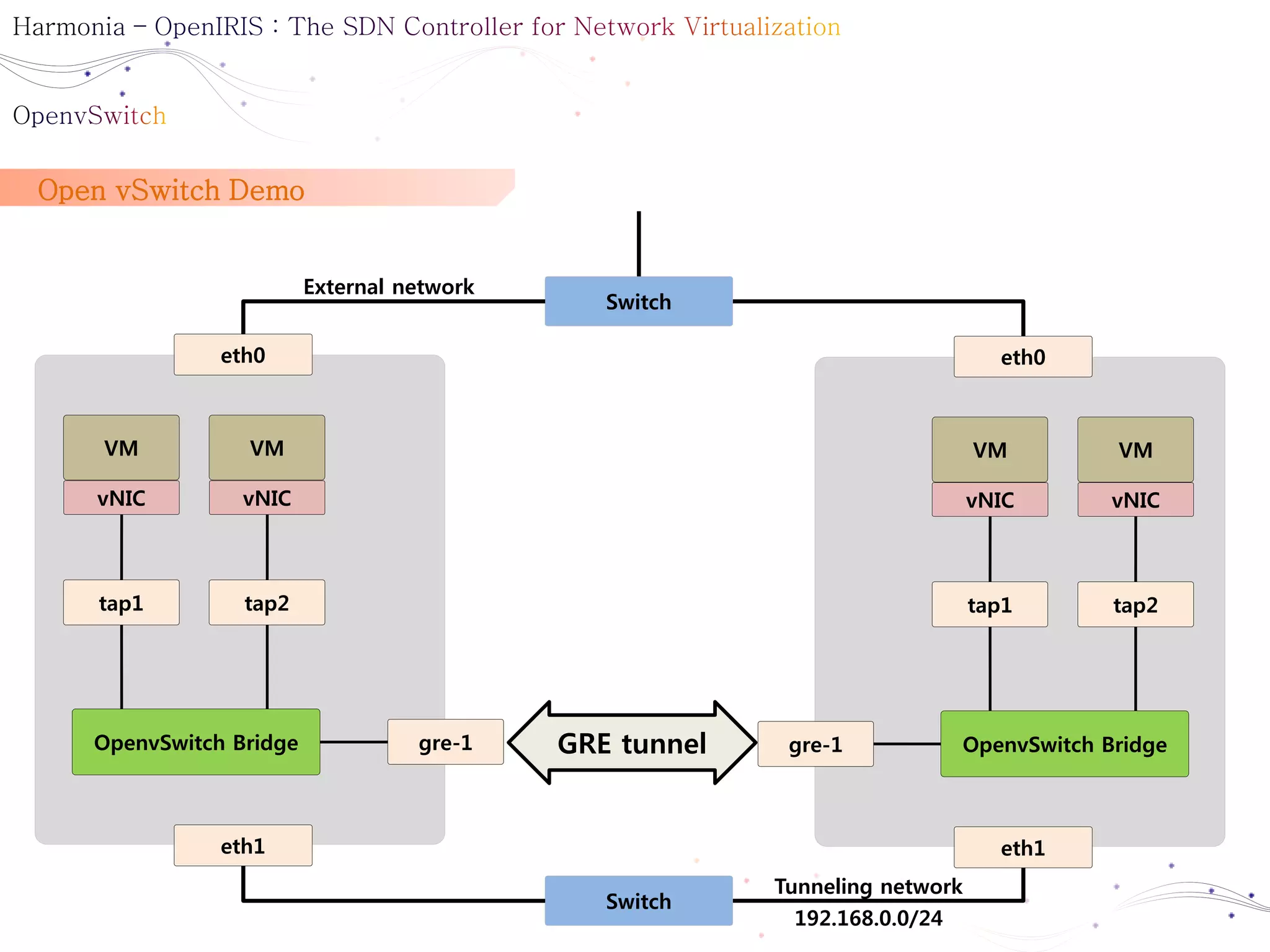

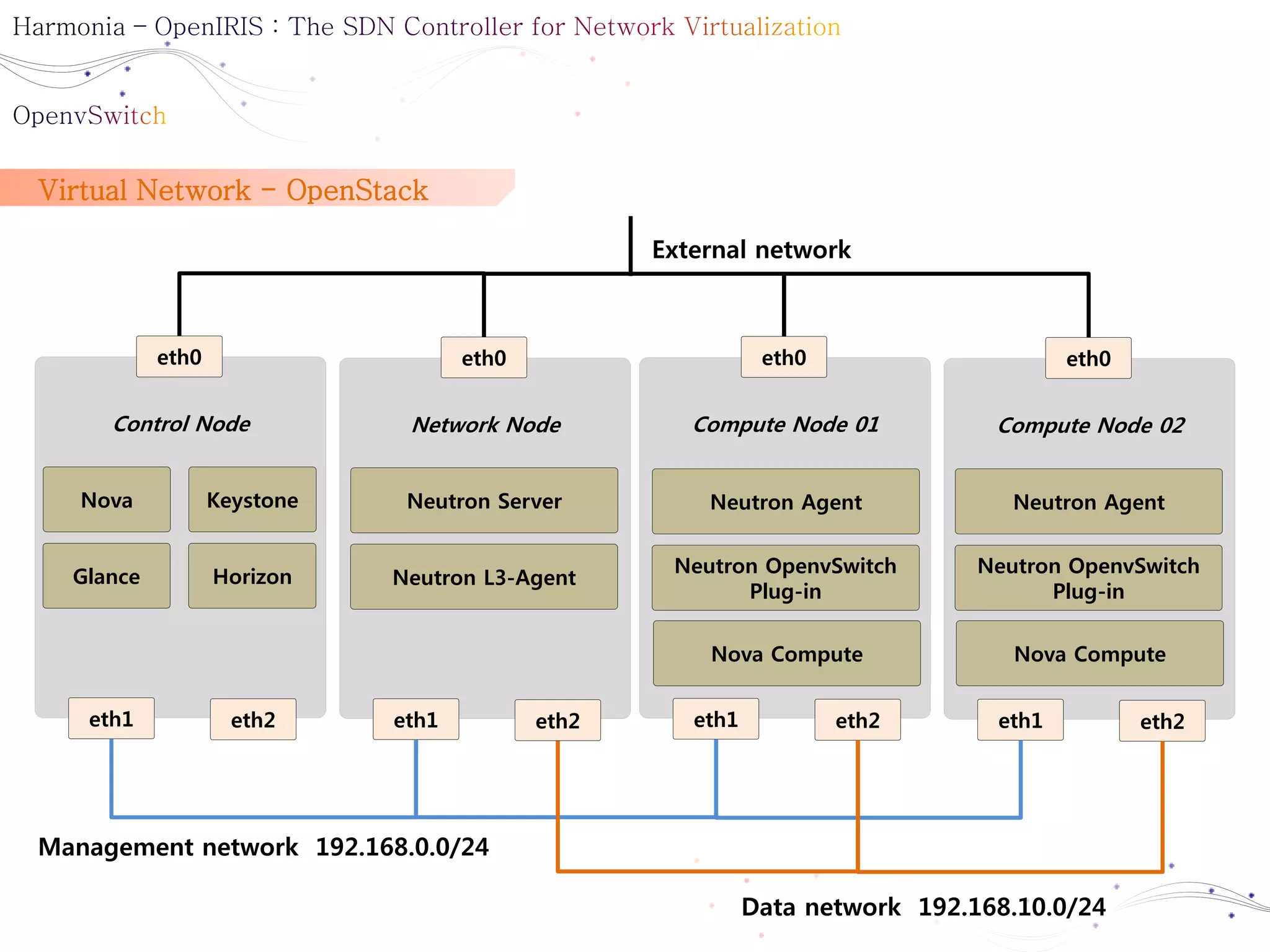

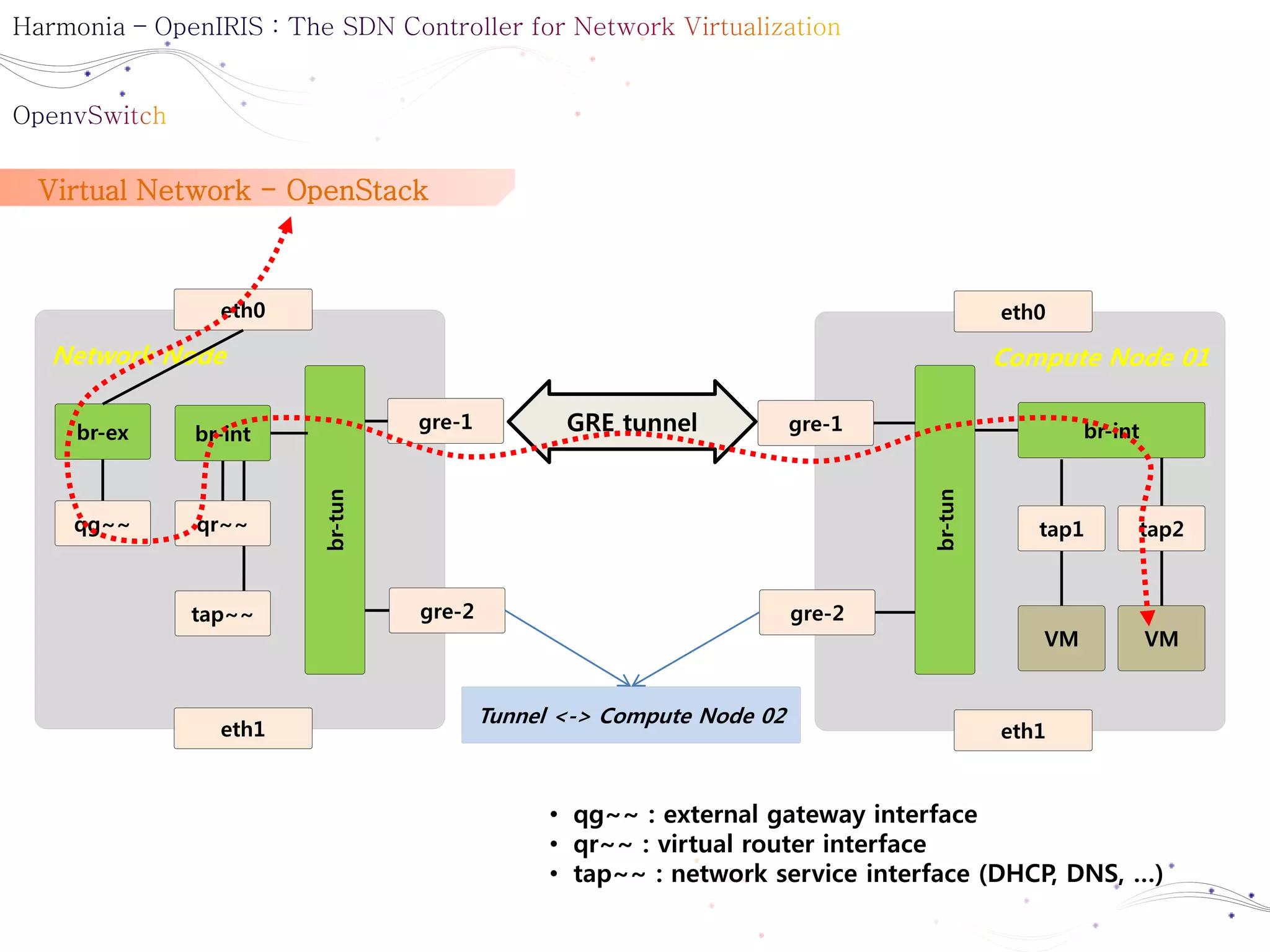

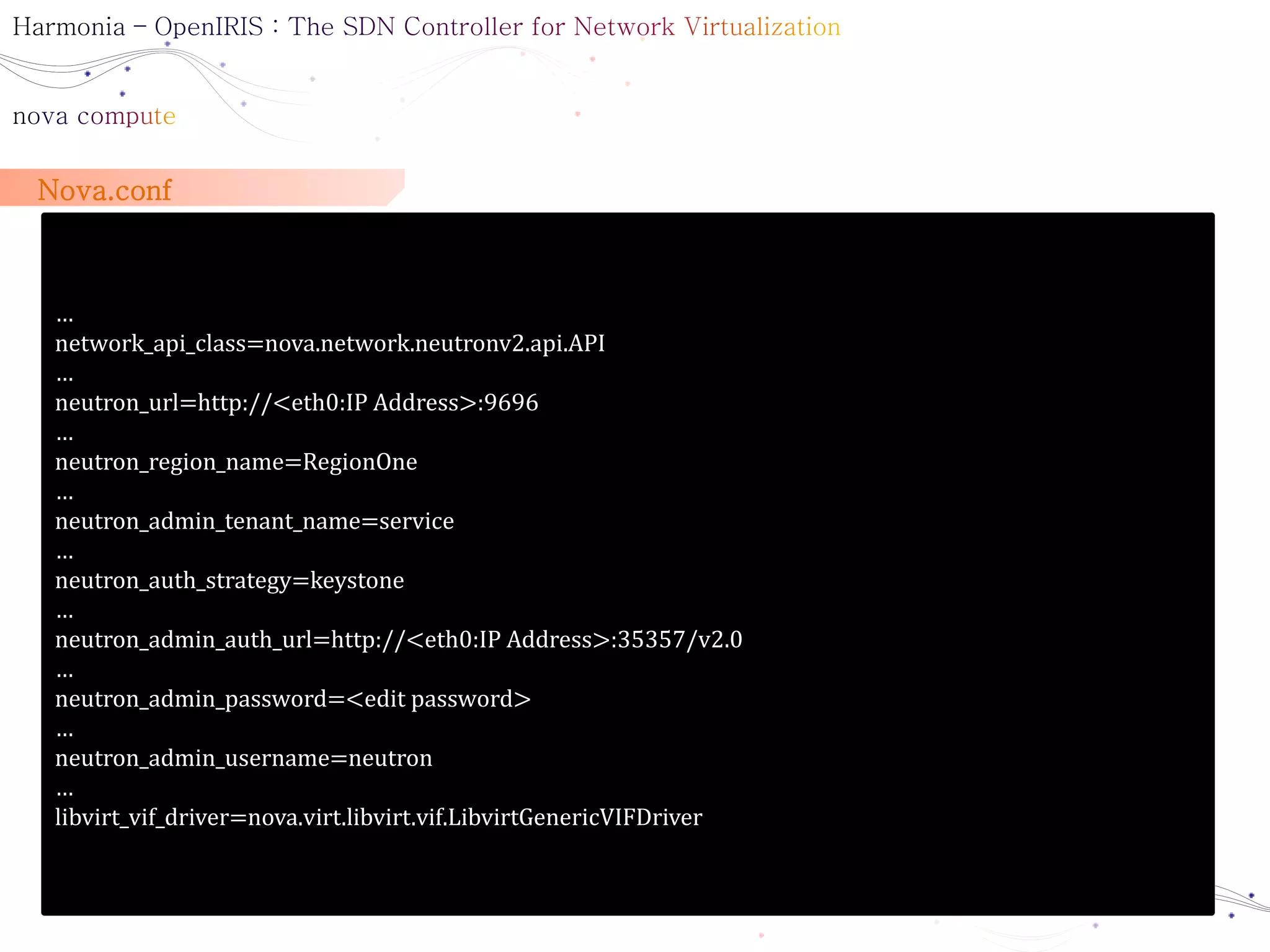

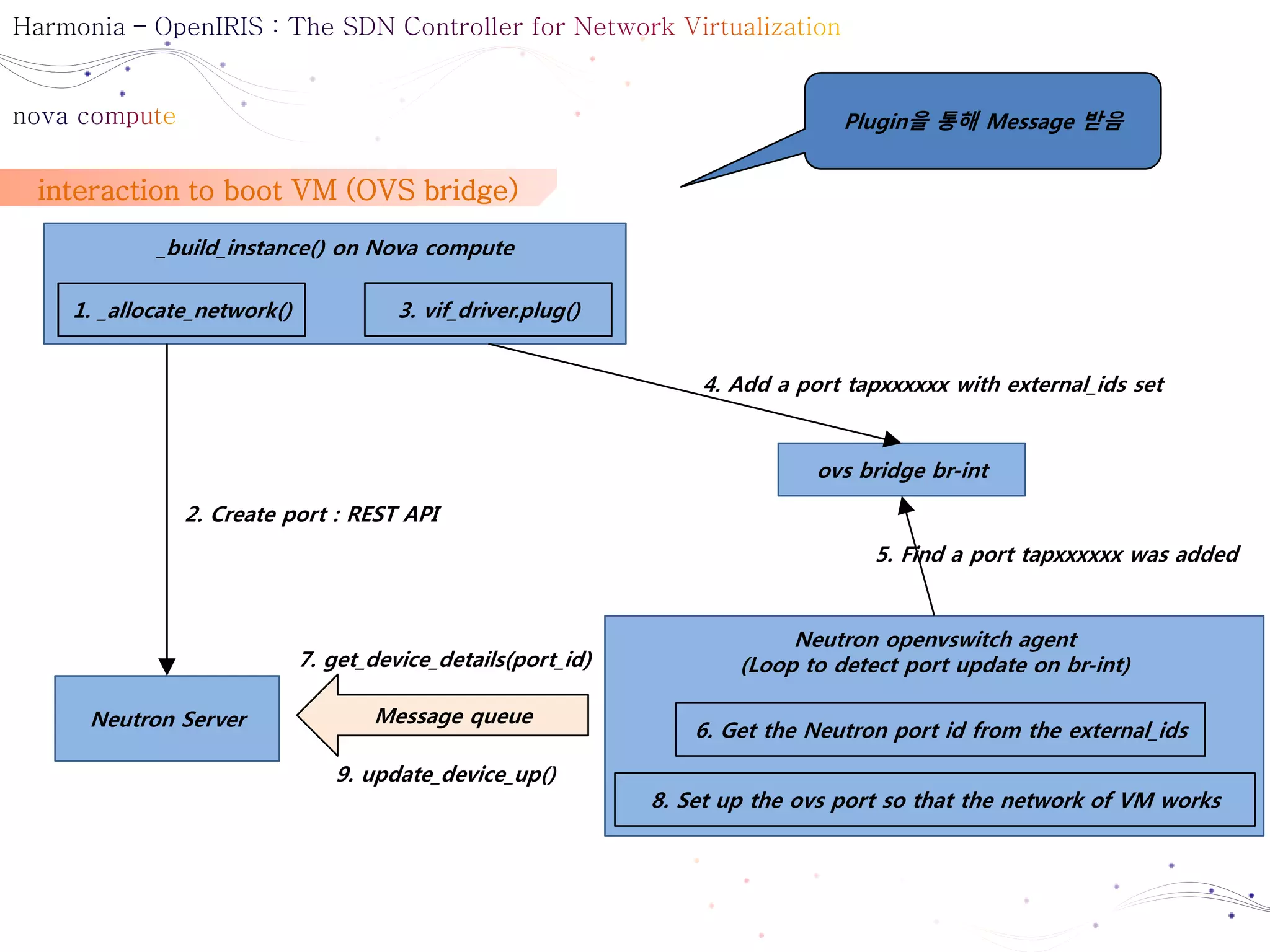

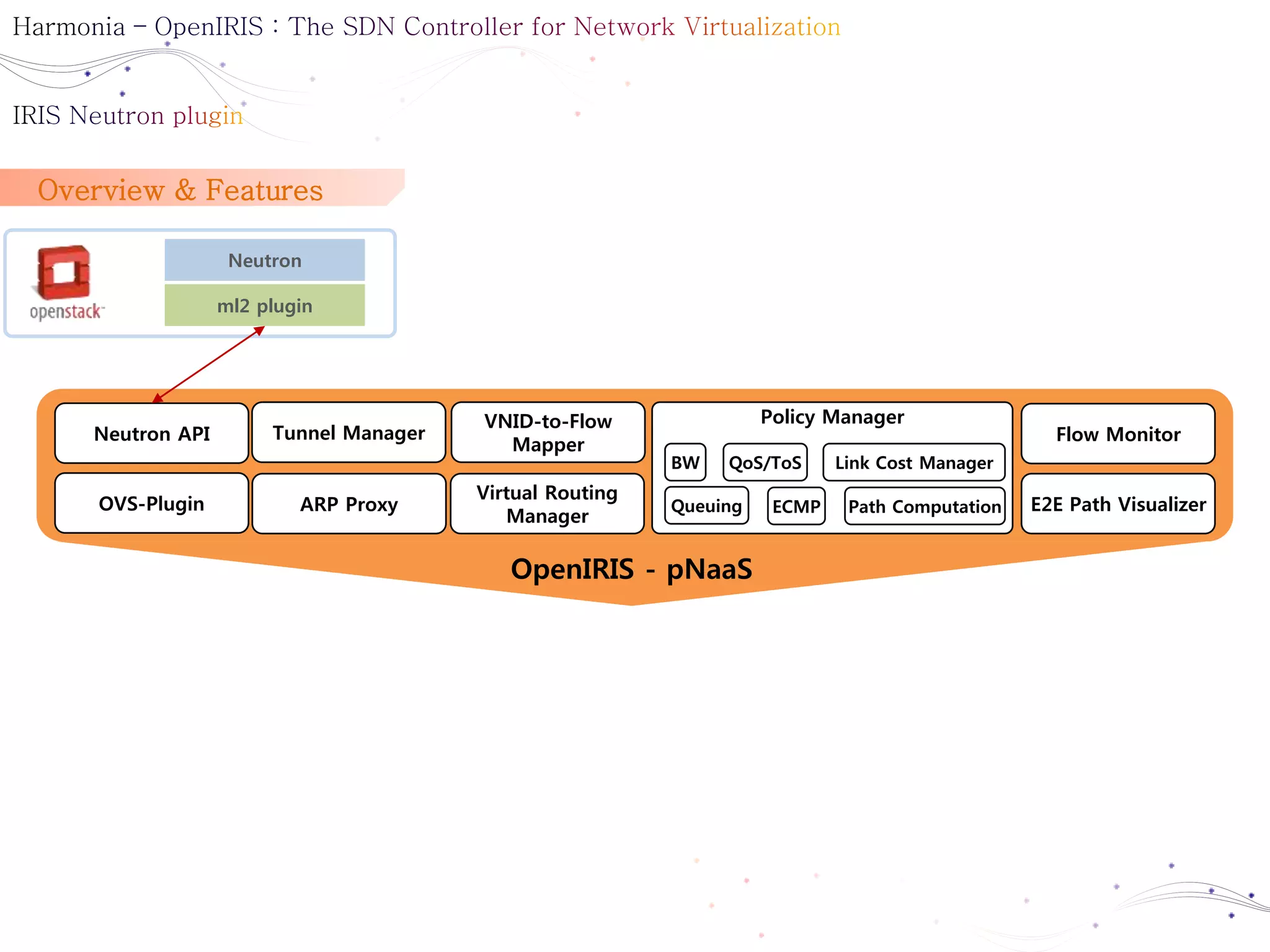

1. The document discusses OpenStack Neutron and Open vSwitch (OVS), describing their architecture and configuration. It explains that Neutron uses OVS to provide virtual networking and switching capabilities between virtual machines.

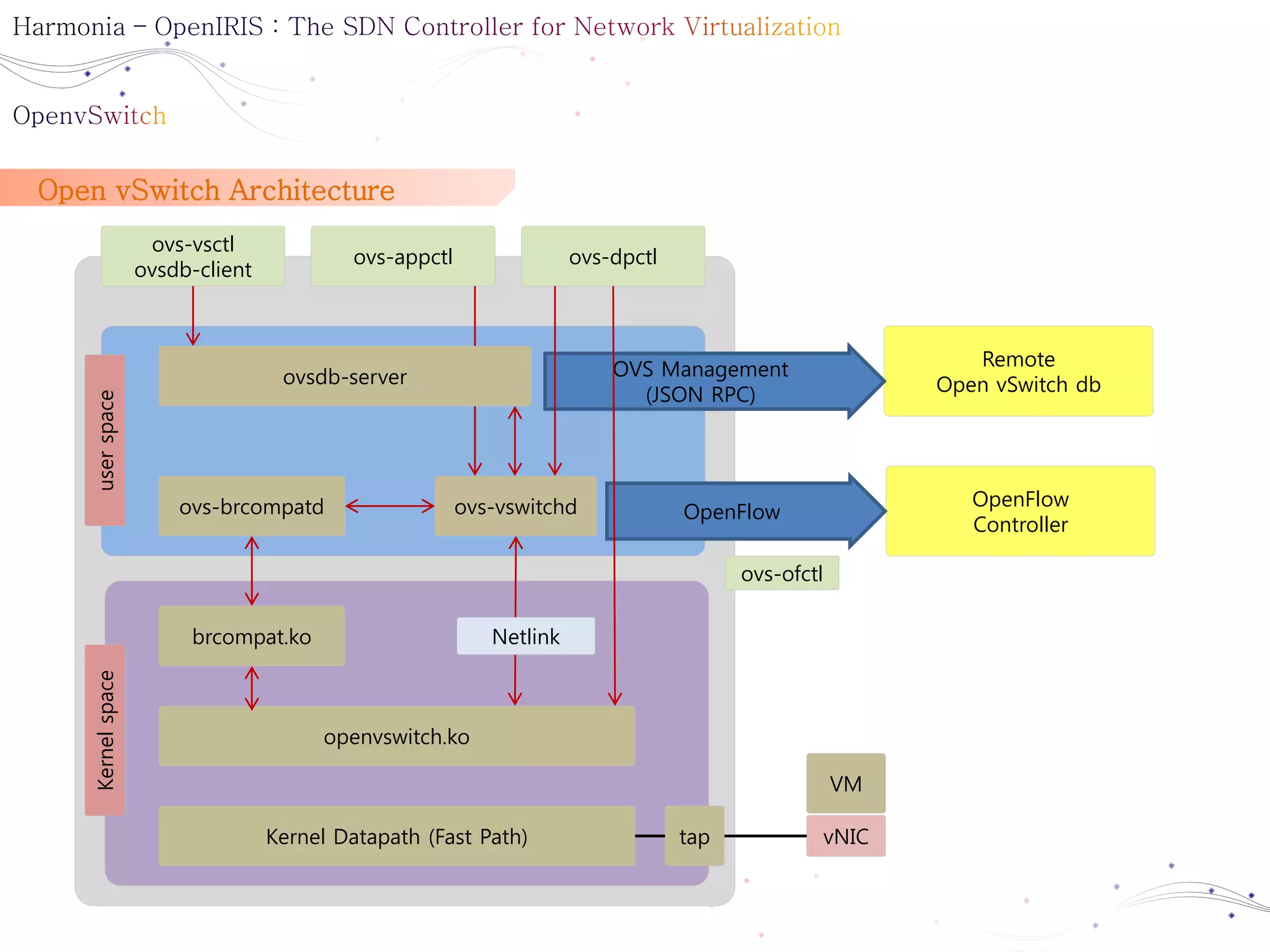

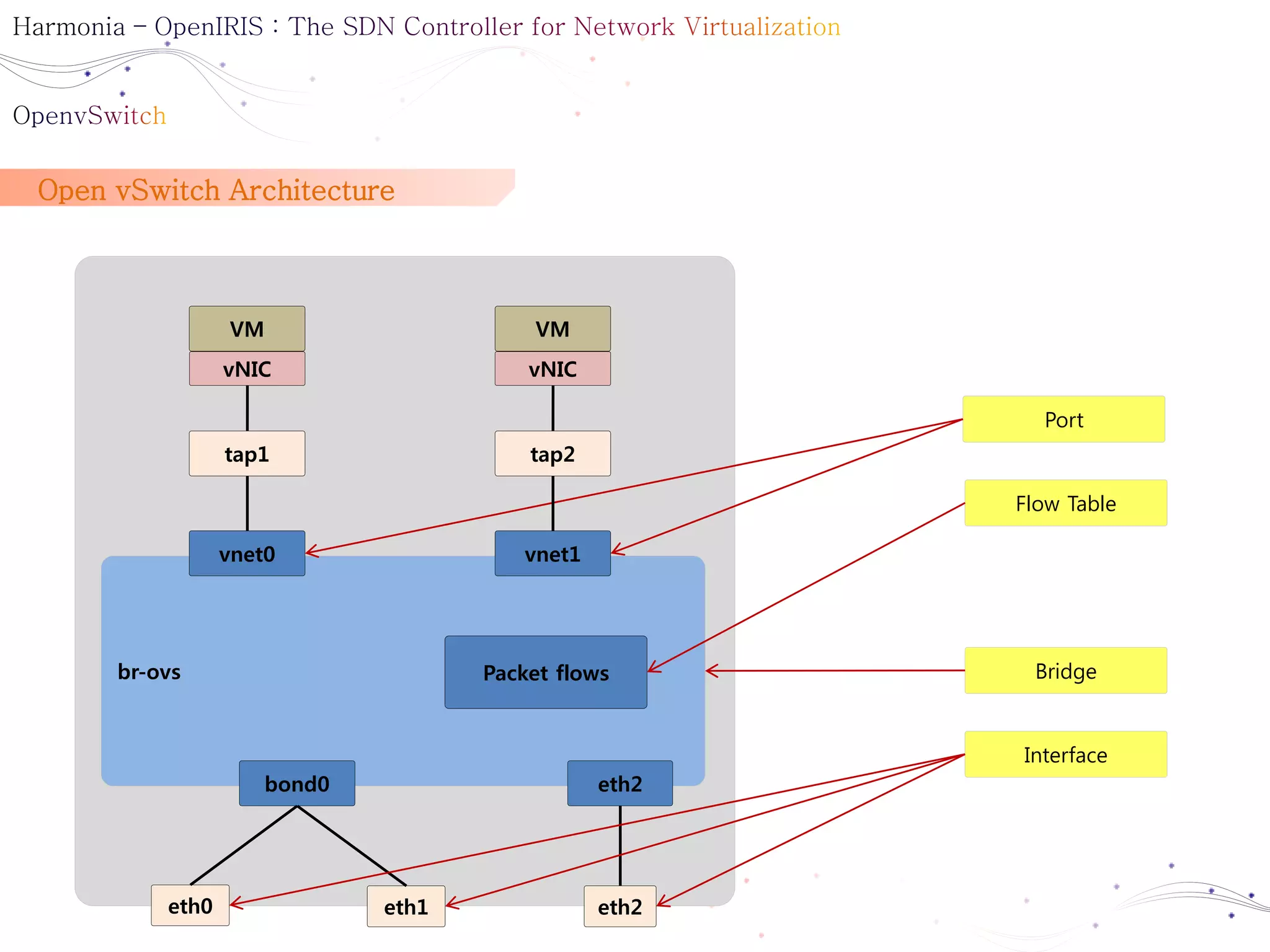

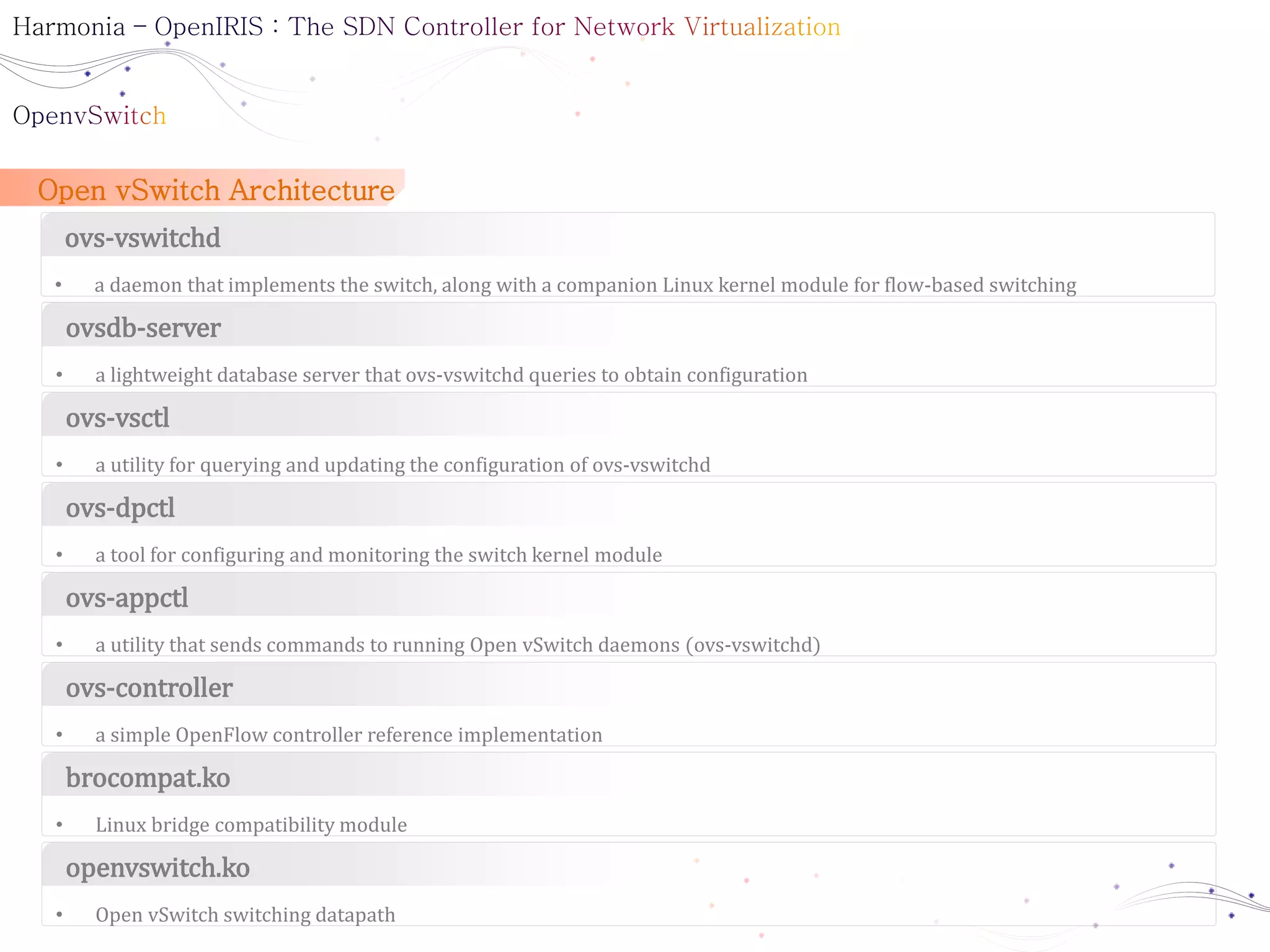

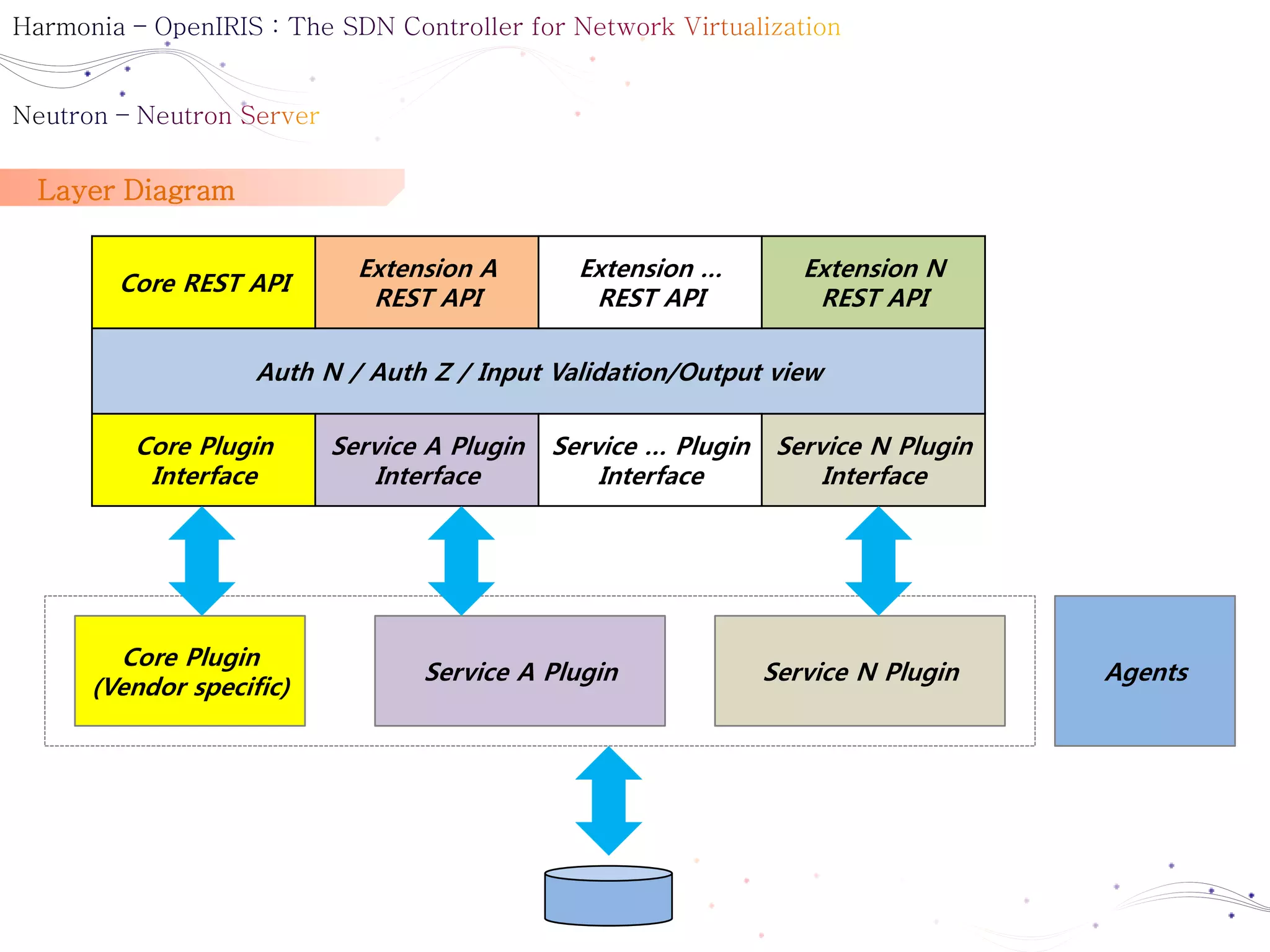

2. Key components of the Neutron-OVS architecture include the Neutron server, the OVS agent running on each compute node, and Open vSwitch itself. Open vSwitch implements the switch with a userspace daemon (ovs-vswitchd) and a kernel datapath module.

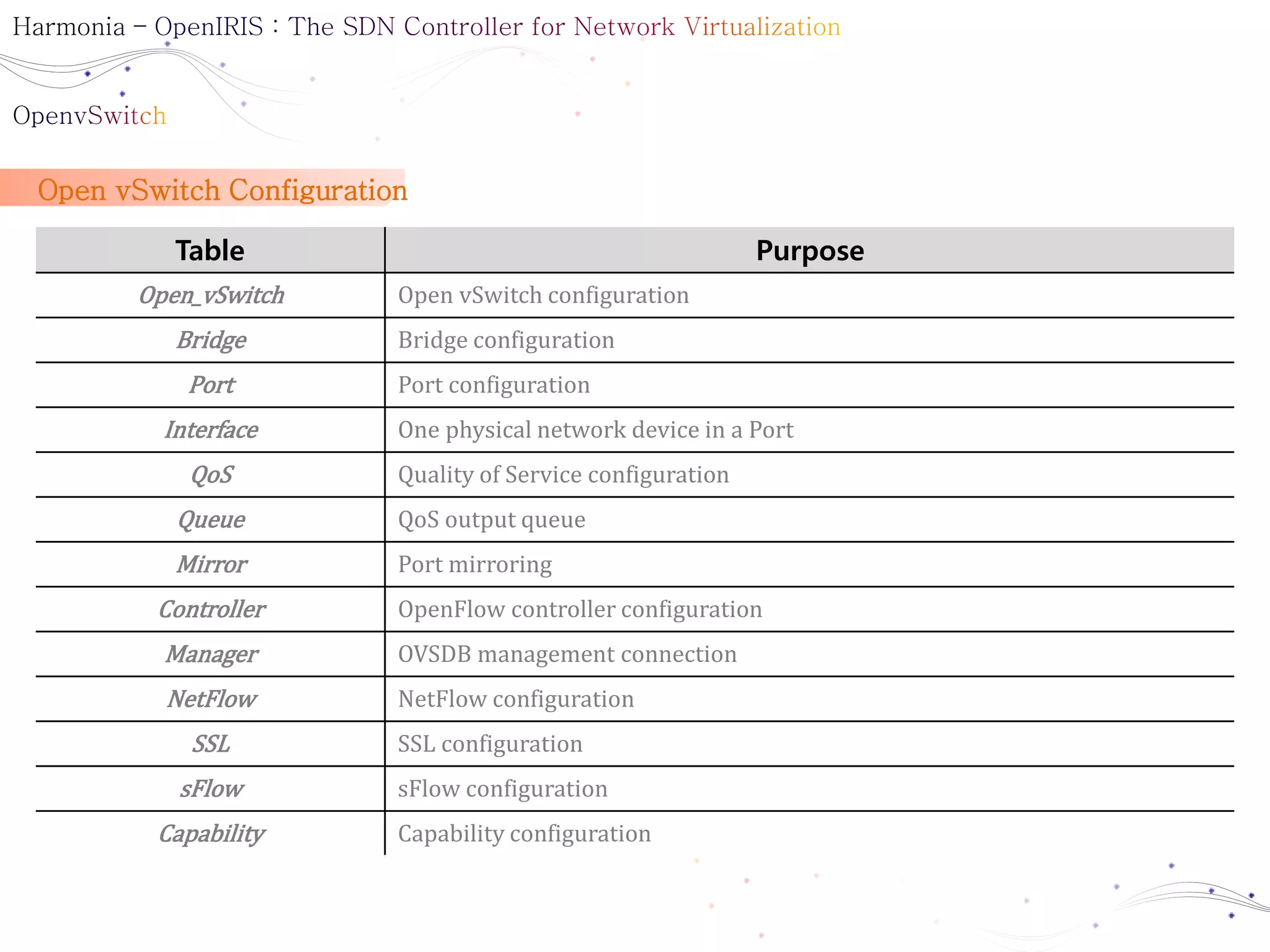

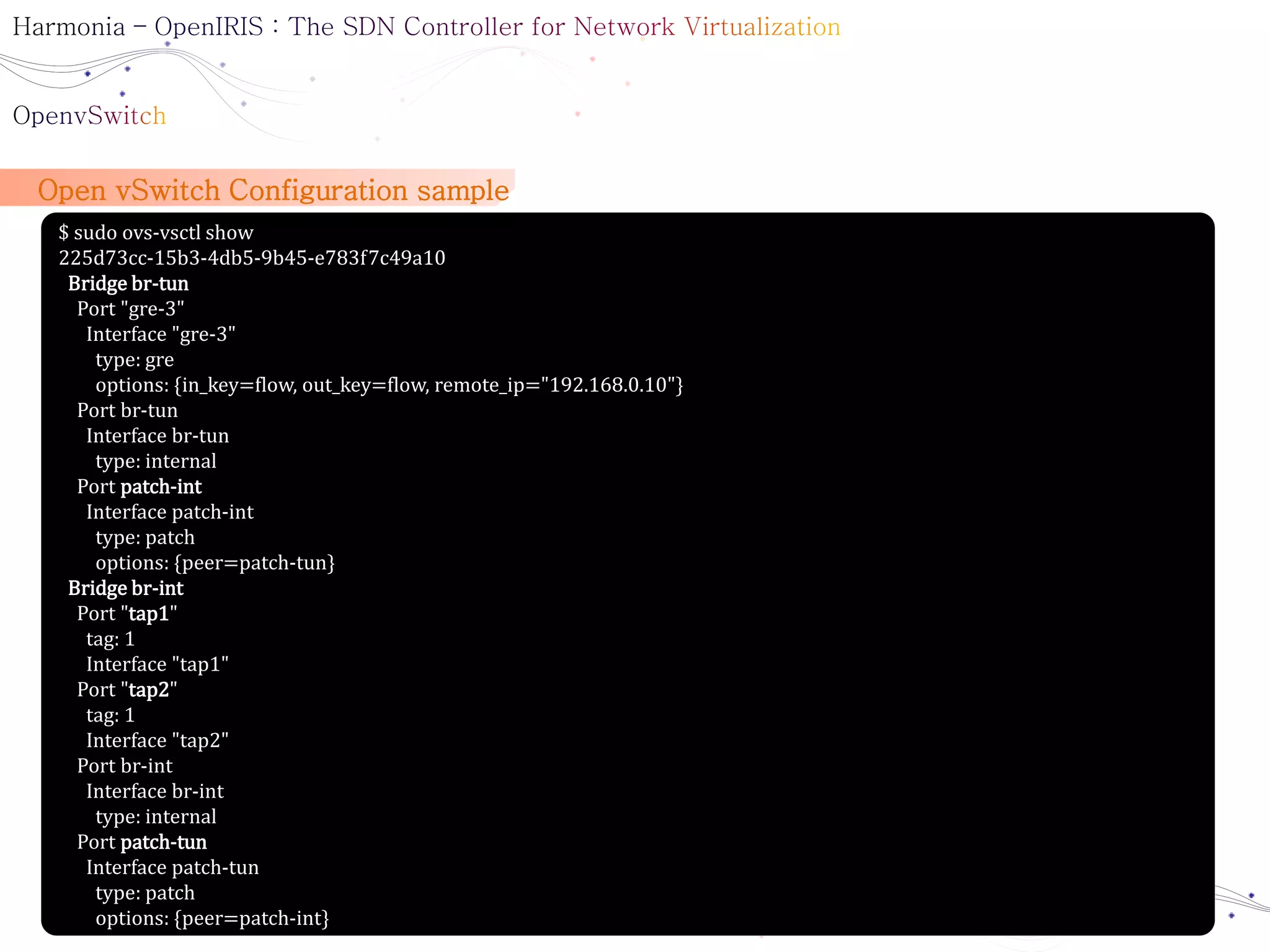

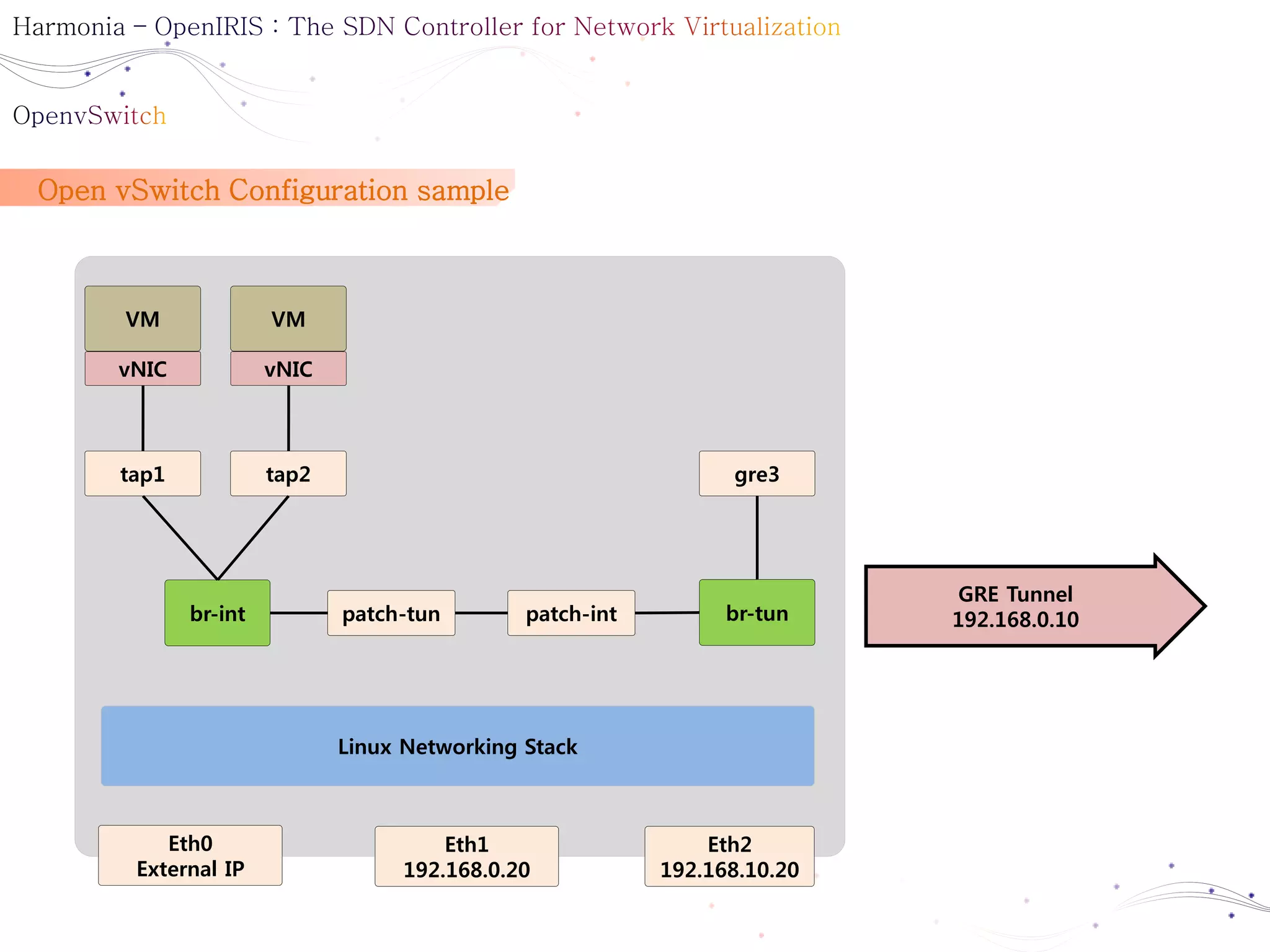

3. The document also provides examples of configuring an OVS bridge and ports for virtual networking in OpenStack.
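To illustrate bridge and port configuration, here is a minimal sketch that only *builds* `ovs-vsctl` command lines and does not execute them. The bridge name `br-int` (Neutron's usual integration bridge) and the port names `tap0`/`tap1` are illustrative assumptions. In a real deployment the OVS agent manages these objects itself.

```python
import shlex
from typing import List


def ovs_bridge_commands(bridge: str, ports: List[str]) -> List[List[str]]:
    """Build (but do not run) ovs-vsctl commands that create a bridge and attach ports.

    --may-exist keeps each command idempotent: re-running it does not fail
    when the bridge or port already exists.
    """
    commands = [["ovs-vsctl", "--may-exist", "add-br", bridge]]
    for port in ports:
        commands.append(["ovs-vsctl", "--may-exist", "add-port", bridge, port])
    return commands


if __name__ == "__main__":
    # Hypothetical names: br-int is the usual integration bridge, tap0/tap1 are made up.
    for cmd in ovs_bridge_commands("br-int", ["tap0", "tap1"]):
        print(shlex.join(cmd))
    # Applying them needs root and a running OVS, e.g. subprocess.run(cmd, check=True).
```

Returning argument lists rather than shell strings means the commands can be passed directly to `subprocess.run` without shell quoting issues.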

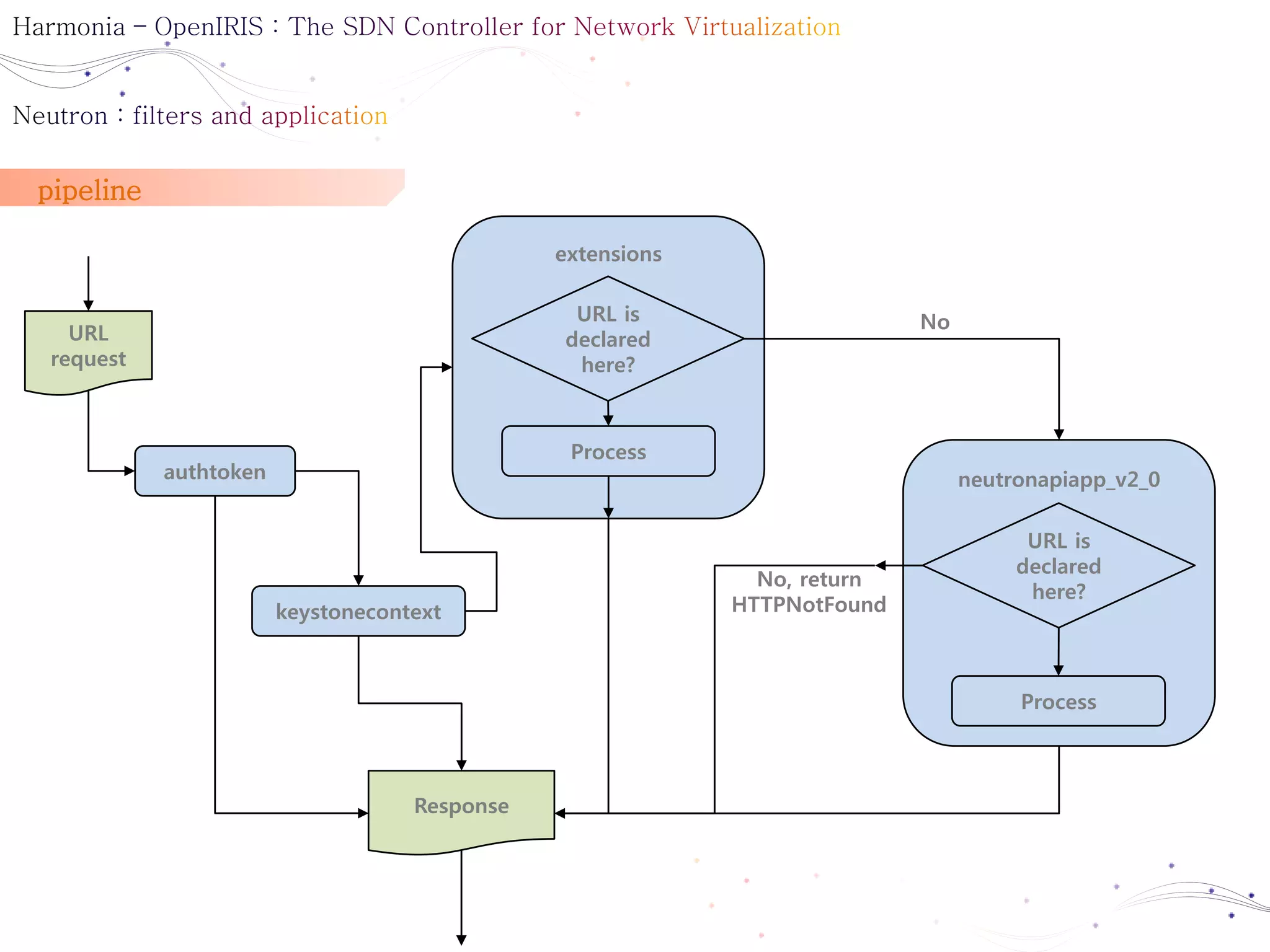

![Application and filters

[composite:neutron]

use = egg:Paste#urlmap

/: neutronversions

/v2.0: neutronapi_v2_0

[composite:neutronapi_v2_0]

use = call:neutron.auth:pipeline_factory

keystone = authtoken keystonecontext extensions neutronapiapp_v2_0

[filter:keystonecontext]

paste.filter_factory = neutron.auth:NeutronKeystoneContext.factory

[filter:authtoken]

paste.filter_factory = keystoneclient.middleware.auth_token:filter_factory

[filter:extensions]

paste.filter_factory = neutron.api.extensions:plugin_aware_extension_middleware_factory

[app:neutronversions]

paste.app_factory = neutron.api.versions:Versions.factory

[app:neutronapiapp_v2_0]

paste.app_factory = neutron.api.v2.router:APIRouter.factory](https://image.slidesharecdn.com/harmoniaopenirisbasicv0-140721001540-phpapp02/75/Harmonia-open-iris_basic_v0-1-26-2048.jpg)
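The `[composite:neutron]` section uses `egg:Paste#urlmap` to send `/` to `neutronversions` and `/v2.0` to `neutronapi_v2_0`. The sketch below is a simplified model of that prefix dispatch. It has no WSGI environ and no host matching, and each "app" is just a function of the remaining path. It is not Paste's actual implementation.

```python
from typing import Callable, Dict

# Simplified model: an "app" is a function of the path left after the prefix.
App = Callable[[str], str]


def make_urlmap(mapping: Dict[str, App]) -> App:
    """Route a path to the app registered under the longest matching prefix."""
    # Longest prefix first, so '/v2.0' wins over '/'.
    ordered = sorted(mapping.items(), key=lambda item: len(item[0]), reverse=True)

    def dispatch(path: str) -> str:
        for prefix, app in ordered:
            base = prefix.rstrip("/")
            # '/' (base == '') matches everything; other prefixes must end at a '/'.
            if base == "" or path == base or path.startswith(base + "/"):
                return app(path[len(base):] or "/")
        raise LookupError(f"no application mounted for {path}")

    return dispatch


if __name__ == "__main__":
    urlmap = make_urlmap({
        "/": lambda rest: f"neutronversions <- {rest}",
        "/v2.0": lambda rest: f"neutronapi_v2_0 <- {rest}",
    })
    print(urlmap("/v2.0/networks"))  # neutronapi_v2_0 <- /networks
    print(urlmap("/"))               # neutronversions <- /
```

The prefix is stripped before the inner app is called, so `neutronapi_v2_0` sees `/networks` rather than `/v2.0/networks`.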

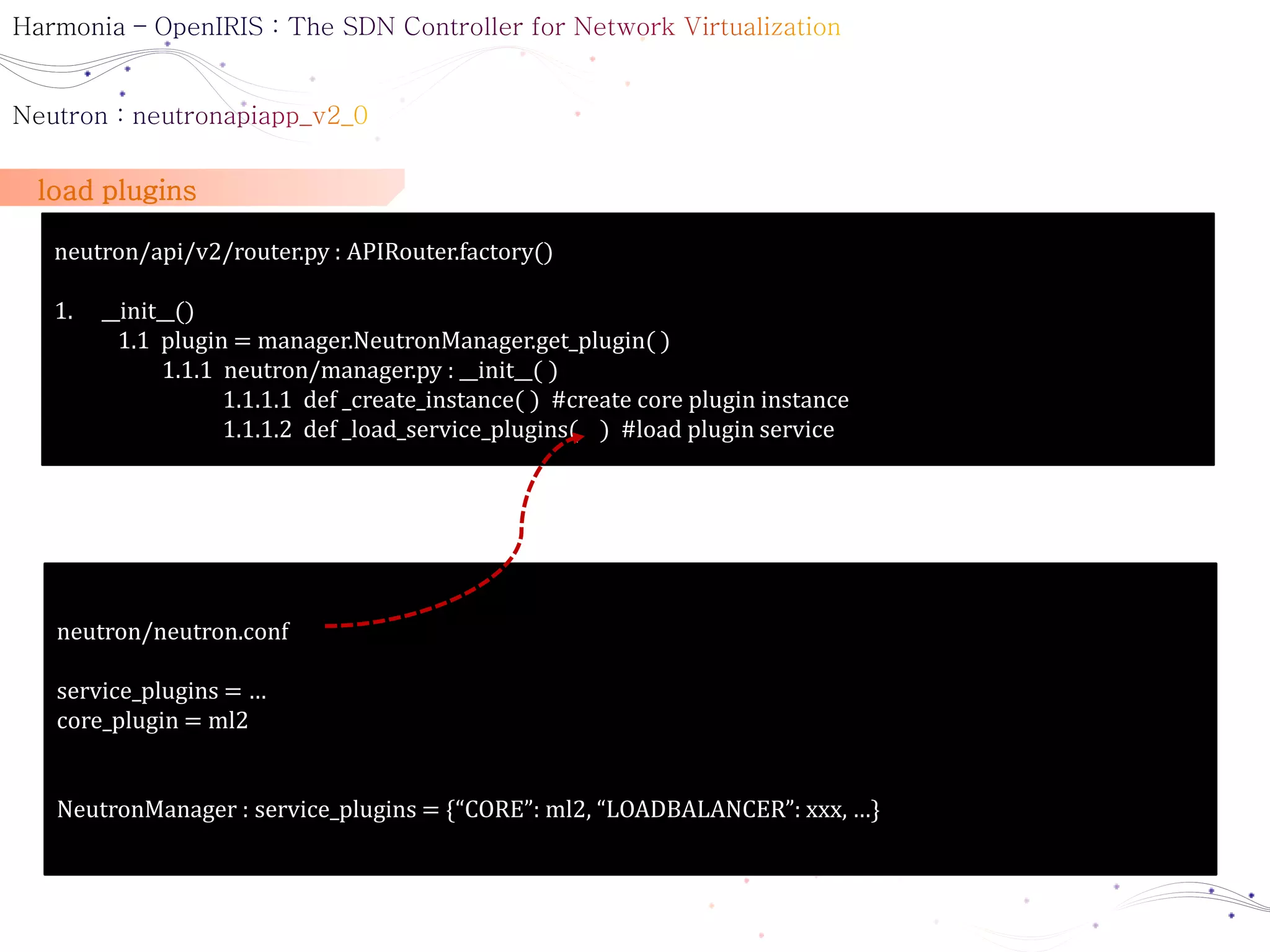

![neutron/server/__init__.py: main()

config.parse(sys.argv[1:]) # --config-file neutron.conf --config-file XXXXX.ini

neutron/common/config.py

def load_paste_app(app_name)  # name of the application to load, e.g. load_paste_app("neutron")

• neutron/auth.py

def pipeline_factory(loader, global_conf, **local_conf):

• neutron/api/v2/router.py

class APIRouter(wsgi.Router):

def factory(cls, global_config, **local_config):

• neutron/api/extensions.py

def plugin_aware_extension_middleware_factory(global_config, **local_config):

neutron/auth.py

class NeutronKeystoneContext(wsgi.Middleware):](https://image.slidesharecdn.com/harmoniaopenirisbasicv0-140721001540-phpapp02/75/Harmonia-open-iris_basic_v0-1-27-2048.jpg)
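`pipeline_factory` in neutron/auth.py composes a pipeline line such as `keystone = authtoken keystonecontext extensions neutronapiapp_v2_0`. It appears to select that line by the configured `auth_strategy` and to obtain the filters and the app from the Paste loader. The sketch below models only the composition step: the last name is the app, earlier names are filters, and the first filter ends up outermost. The filter and app objects are hypothetical stand-ins.

```python
from typing import Callable, Dict, List

# Simplified model: an "app" takes the list of layers seen so far and returns it extended.
App = Callable[[List[str]], List[str]]
Filter = Callable[[App], App]


def make_filter(name: str) -> Filter:
    """Return a middleware factory that records `name` before calling the wrapped app."""
    def factory(app: App) -> App:
        def wrapped(trace: List[str]) -> List[str]:
            return app(trace + [name])
        return wrapped
    return factory


def pipeline_factory(filters: Dict[str, Filter], apps: Dict[str, App], pipeline: str) -> App:
    """Compose a Paste-style pipeline string into a single app.

    The last name is the application and earlier names are filters. Wrapping
    in reverse order leaves the first filter outermost, so it sees requests first.
    """
    names = pipeline.split()
    app = apps[names[-1]]
    for name in reversed(names[:-1]):
        app = filters[name](app)
    return app


if __name__ == "__main__":
    filters = {n: make_filter(n) for n in ("authtoken", "keystonecontext", "extensions")}
    apps = {"neutronapiapp_v2_0": lambda trace: trace + ["neutronapiapp_v2_0"]}
    app = pipeline_factory(filters, apps,
                           "authtoken keystonecontext extensions neutronapiapp_v2_0")
    print(app([]))
    # ['authtoken', 'keystonecontext', 'extensions', 'neutronapiapp_v2_0']
```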

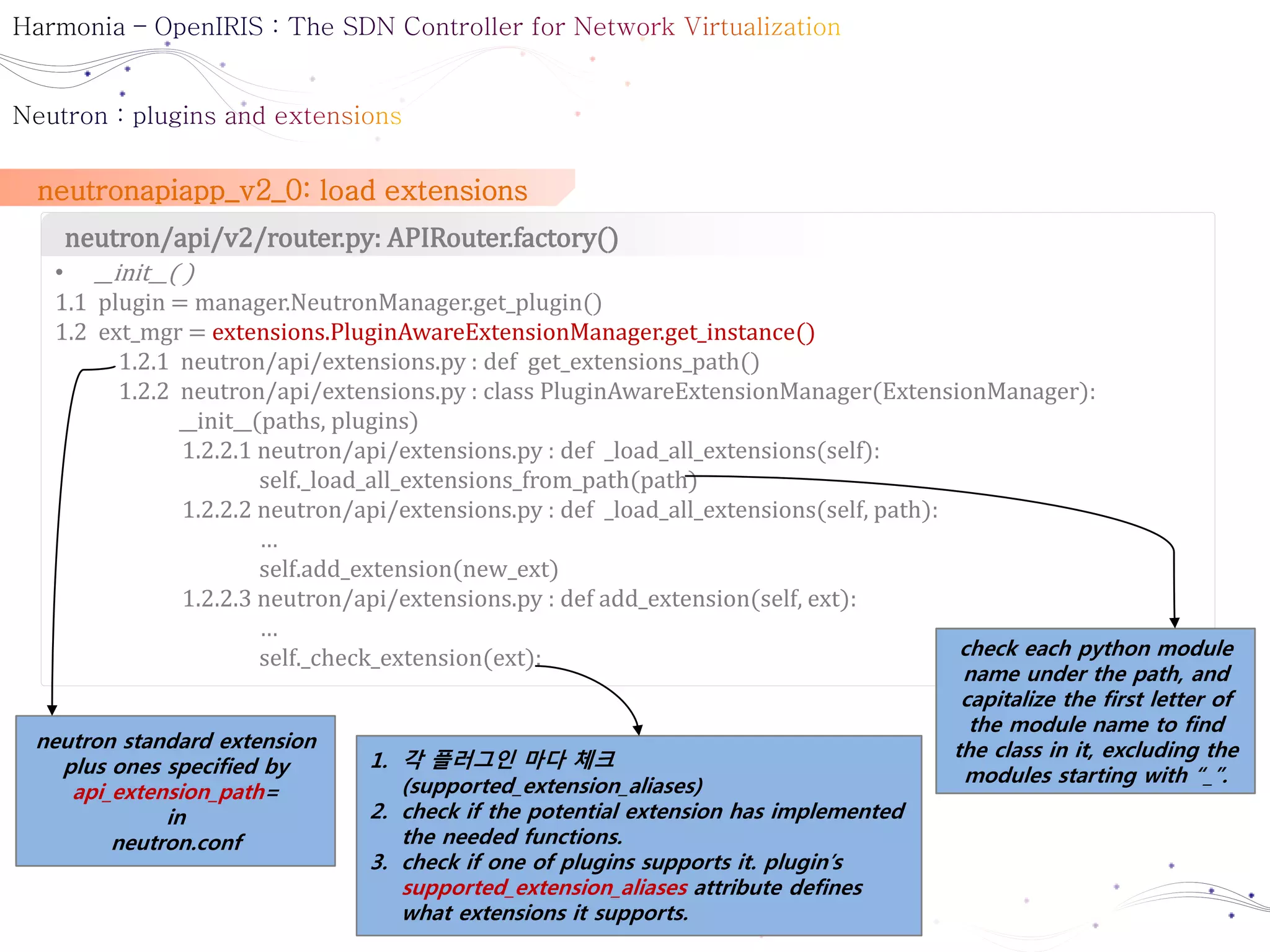

![What are plugins & extensions

Extensions define resources and the actions on them

• neutron/plugins/cisco|vmware|nuage/extensions/xxx.py

@classmethod

def get_resources(cls):

for resource_name in ['router', 'floatingip']:

…

controller = base.create_resource(collection_name, resource_name, plugin…)

ex = ResourceExtension(collection_name, controller, member_actions…)

Plugins implement the resources that extensions define

• neutron/services/l3_router/l3_router_plugin.py

• neutron/plugins/bigswitch/plugin.py

supported_extension_aliases = ["router", "ext-gw-mode", "extraroute", "l3_agent_scheduler"]

• neutron/extensions/l3.py

• neutron/plugins/bigswitch/plugin.py

def update_router(self, context, id, router):

• neutron/extensions/l3.py

• neutron/plugins/bigswitch/routerrule_db.py

def get_router(self, context, id, fields=None):](https://image.slidesharecdn.com/harmoniaopenirisbasicv0-140721001540-phpapp02/75/Harmonia-open-iris_basic_v0-1-30-2048.jpg)
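`supported_extension_aliases` is how a plugin declares which extensions it backs. Roughly speaking, the extension manager only activates an extension whose alias appears in that list. A minimal sketch of that gating follows. `ExtensionDescriptor` and `Plugin` here are hypothetical dataclasses, not Neutron's classes, and the sketch ignores Neutron's further interface checks.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExtensionDescriptor:
    """Hypothetical stand-in for an extension module such as neutron/extensions/l3.py."""
    alias: str


@dataclass
class Plugin:
    """Hypothetical stand-in for a core or service plugin."""
    supported_extension_aliases: List[str] = field(default_factory=list)


def extensions_for(plugin: Plugin,
                   extensions: List[ExtensionDescriptor]) -> List[ExtensionDescriptor]:
    """Keep only the extensions whose alias the plugin claims to support."""
    supported = set(plugin.supported_extension_aliases)
    return [ext for ext in extensions if ext.alias in supported]


if __name__ == "__main__":
    bigswitch_like = Plugin(["router", "ext-gw-mode", "extraroute", "l3_agent_scheduler"])
    available = [ExtensionDescriptor(a) for a in ("router", "extraroute", "security-group")]
    print([ext.alias for ext in extensions_for(bigswitch_like, available)])
    # ['router', 'extraroute']
```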

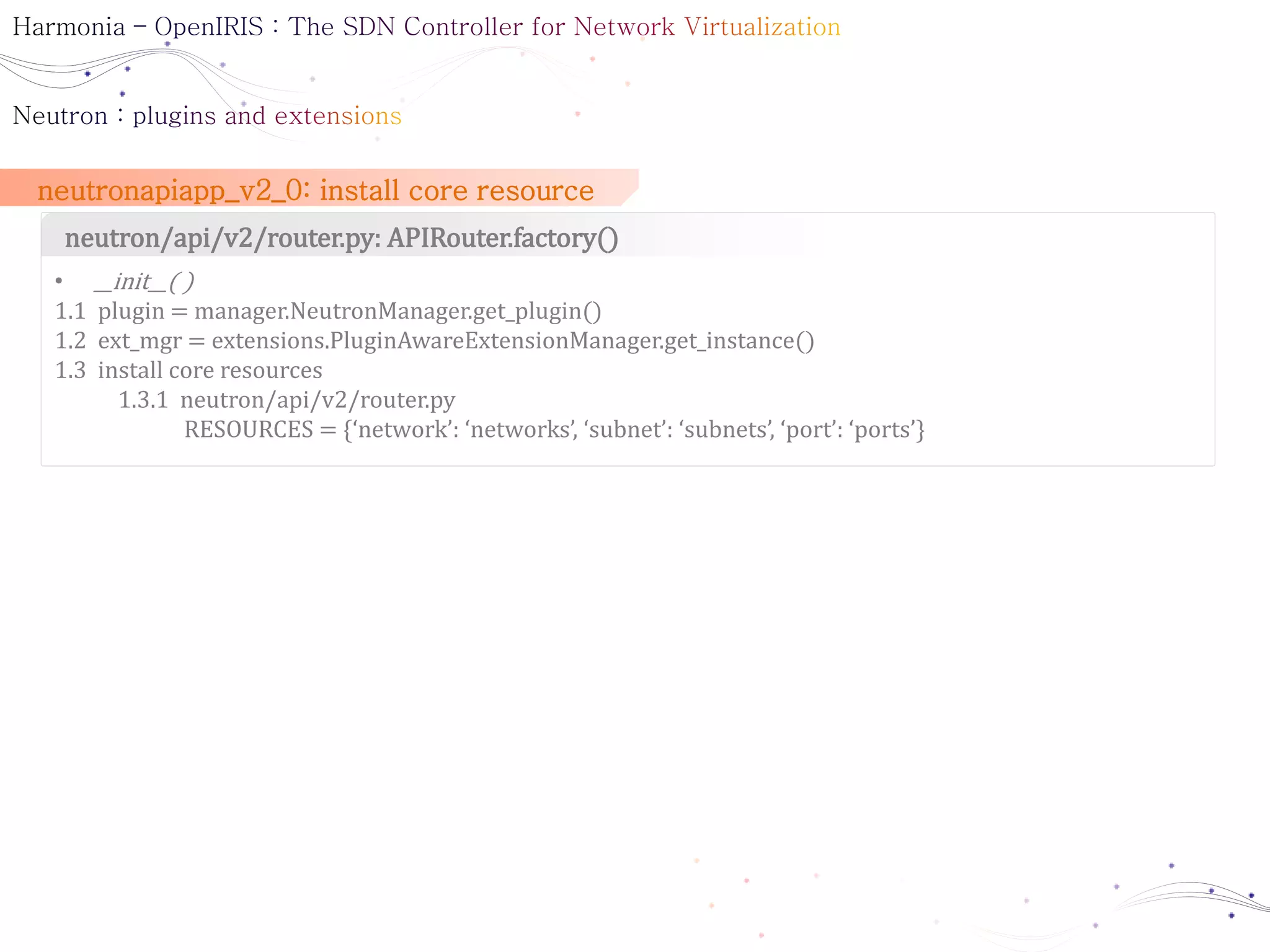

![extension filter: assemble extensions

neutron/api/extensions.py

• def plugin_aware_extension_middleware_factory(global_config, **local_config)

1.1 def _factory(app):

ext_mgr = PluginAwareExtensionManager.get_instance()

return ExtensionMiddleware(app, ext_mgr=ext_mgr)

:ExtensionMiddleware :PluginAwareExtensionManager :ExtensionDescriptor

1. __init__(application, ext_mgr)

1.1 get_resources()

[for each extension]

1.1.1 get_resources()

Loop

1.2 install route objects](https://image.slidesharecdn.com/harmoniaopenirisbasicv0-140721001540-phpapp02/75/Harmonia-open-iris_basic_v0-1-33-2048.jpg)
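The sequence above has three steps: ExtensionMiddleware asks the manager for resources (1.1), the manager calls `get_resources()` on each extension (1.1.1), and the middleware installs a route per resource (1.2). Neutron builds those routes with a `routes` mapper. This sketch substitutes a plain dict from `/<collection>` to a controller, and every class in it is hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Controller = Callable[[str], str]


@dataclass
class ResourceExtension:
    """Simplified stand-in: a collection name and the controller that serves it."""
    collection: str
    controller: Controller


class L3Descriptor:
    """Hypothetical extension descriptor exposing 'routers' and 'floatingips'."""

    def get_resources(self) -> List[ResourceExtension]:
        # Bind `name` as a default argument so each lambda keeps its own value.
        return [ResourceExtension(name, lambda method, name=name: f"{method} {name}")
                for name in ("routers", "floatingips")]


class ExtensionManagerSketch:
    """Step 1.1: gather resources by calling get_resources() on every extension."""

    def __init__(self, extensions: List[L3Descriptor]) -> None:
        self.extensions = extensions

    def get_resources(self) -> List[ResourceExtension]:
        resources: List[ResourceExtension] = []
        for ext in self.extensions:  # the "[for each extension]" loop
            resources.extend(ext.get_resources())
        return resources


def install_routes(ext_mgr: ExtensionManagerSketch) -> Dict[str, Controller]:
    """Step 1.2: map '/<collection>' to the controller of each collected resource."""
    return {f"/{res.collection}": res.controller for res in ext_mgr.get_resources()}


if __name__ == "__main__":
    routes = install_routes(ExtensionManagerSketch([L3Descriptor()]))
    print(sorted(routes))             # ['/floatingips', '/routers']
    print(routes["/routers"]("GET"))  # GET routers
```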

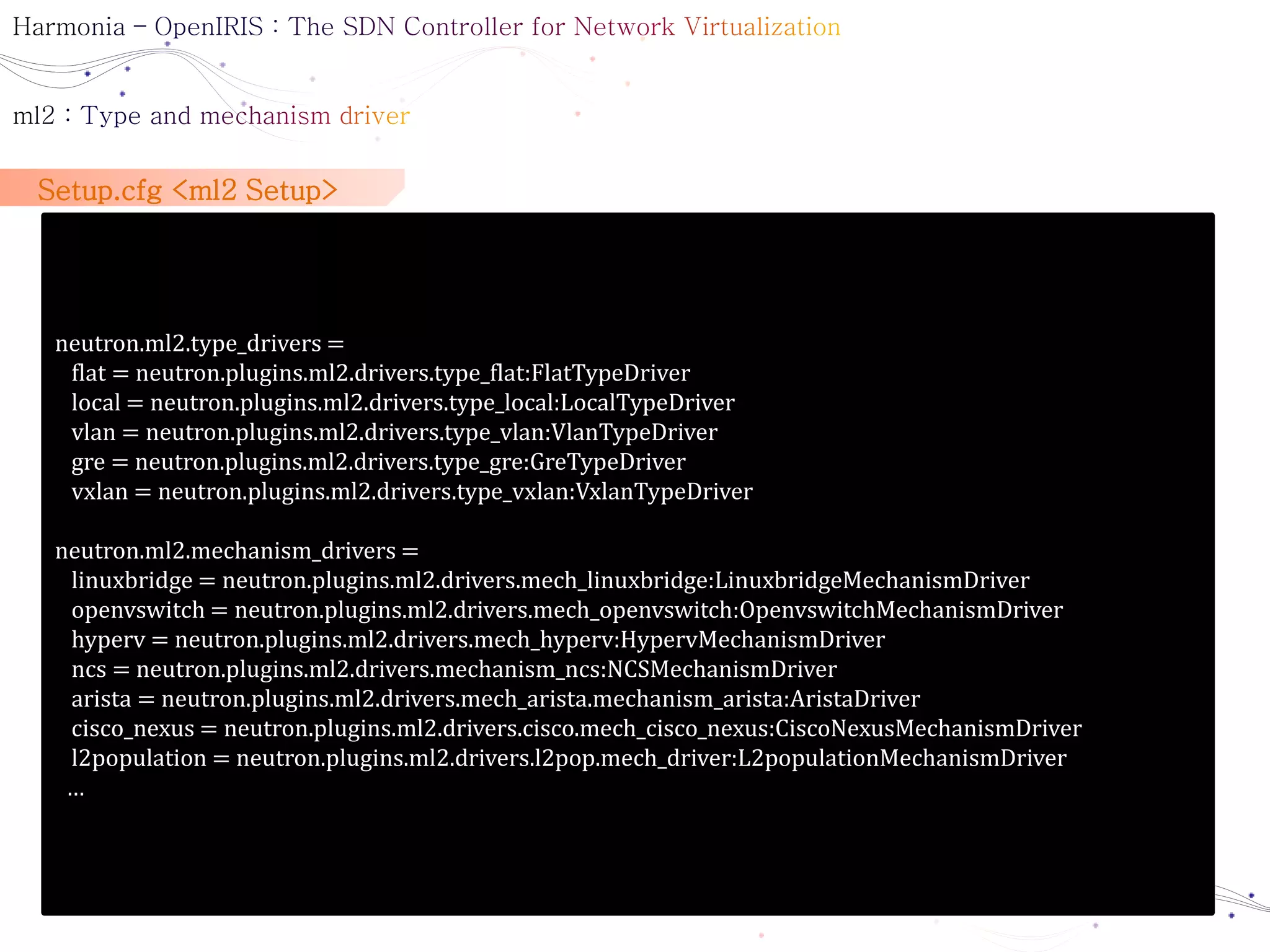

![ml2.ini (ML2 configuration file)

neutron-server --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/ml2.ini

[ml2]

type_drivers = local,flat,vlan,gre,vxlan

mechanism_drivers = openvswitch,linuxbridge

tenant_network_types = vlan,gre,vxlan

[ml2_type_flat]

flat_networks = physnet1,physnet2

[ml2_type_vlan]

network_vlan_ranges = physnet1:1000:2999,physnet2

[ml2_type_gre]

tunnel_id_ranges = 1:1000

[ml2_type_vxlan]

vni_ranges = 1001:2000](https://image.slidesharecdn.com/harmoniaopenirisbasicv0-140721001540-phpapp02/75/Harmonia-open-iris_basic_v0-1-38-2048.jpg)
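In `network_vlan_ranges`, each entry is either `physnet:min:max`, which gives a VLAN range for tenant networks, or a bare `physnet`, which is usable for provider networks only. Neutron reads these options through oslo.config. The sketch below uses plain `configparser` only to show how the values break down, and it covers just an excerpt of the file.

```python
import configparser
from typing import Dict, List, Optional, Tuple

# Excerpt of the ml2.ini shown above.
ML2_INI = """\
[ml2]
type_drivers = local,flat,vlan,gre,vxlan
mechanism_drivers = openvswitch,linuxbridge
tenant_network_types = vlan,gre,vxlan

[ml2_type_vlan]
network_vlan_ranges = physnet1:1000:2999,physnet2
"""


def split_list(value: str) -> List[str]:
    """Split a comma-separated option, ignoring surrounding whitespace and blanks."""
    return [item.strip() for item in value.split(",") if item.strip()]


def parse_vlan_ranges(value: str) -> Dict[str, Optional[Tuple[int, int]]]:
    """Parse 'physnet:min:max' entries; a bare 'physnet' maps to None (no tenant range)."""
    ranges: Dict[str, Optional[Tuple[int, int]]] = {}
    for entry in split_list(value):
        parts = entry.split(":")
        if len(parts) == 1:
            ranges[parts[0]] = None
        elif len(parts) == 3:
            low, high = int(parts[1]), int(parts[2])
            if low > high:
                raise ValueError(f"invalid VLAN range: {entry}")
            ranges[parts[0]] = (low, high)
        else:
            raise ValueError(f"malformed entry: {entry}")
    return ranges


if __name__ == "__main__":
    conf = configparser.ConfigParser()
    conf.read_string(ML2_INI)
    print(split_list(conf["ml2"]["mechanism_drivers"]))  # ['openvswitch', 'linuxbridge']
    print(parse_vlan_ranges(conf["ml2_type_vlan"]["network_vlan_ranges"]))
    # {'physnet1': (1000, 2999), 'physnet2': None}
```

With this file, physnet1 offers 2999 - 1000 + 1 = 2000 VLAN IDs for tenant networks, while physnet2 has no tenant range.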

![__init__ : neutron manager (server)

neutron/manager.py: __init__()

• Create core plugin instance [core_plugin=]

Ml2 plugin :TypeManager :TypeDriver :MechanismManager :MechanismDriver

1: __init__()

1.1: initialize()

loop

[loop on drivers]

1.1.1: initialize()

[loop on ordered_mech_drivers]

1.2.1: initialize()

loop

1.2: initialize()

1.3: _setup_rpc()

Reads ml2.ini to determine which drivers to use and sets up the environment accordingly](https://image.slidesharecdn.com/harmoniaopenirisbasicv0-140721001540-phpapp02/75/Harmonia-open-iris_basic_v0-1-39-2048.jpg)
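The initialization sequence can be traced with a small model. The plugin initializes the type manager, which loops over its drivers (1.1, 1.1.1). It then initializes the mechanism manager, which loops over the ordered mechanism drivers (1.2, 1.2.1). Finally it sets up RPC (1.3). In Neutron the drivers are loaded by name from Python entry points. Here they are hypothetical objects that only record their `initialize()` calls, so this is a sketch of the ordering, not of ML2 itself.

```python
from typing import Dict, List


class Driver:
    """Hypothetical type or mechanism driver that only logs its initialize() call."""

    def __init__(self, name: str, calls: List[str]) -> None:
        self.name = name
        self.calls = calls

    def initialize(self) -> None:
        self.calls.append(f"{self.name}.initialize")


class DriverManager:
    """Holds drivers in the order listed in ml2.ini and initializes each in turn."""

    def __init__(self, kind: str, names: List[str], calls: List[str]) -> None:
        self.kind = kind
        self.calls = calls
        self.ordered_drivers = [Driver(n, calls) for n in names]

    def initialize(self) -> None:
        self.calls.append(f"{self.kind}.initialize")
        for driver in self.ordered_drivers:  # the "loop on drivers" box
            driver.initialize()


class Ml2PluginSketch:
    """Follows the slide: 1.1 type manager, 1.2 mechanism manager, 1.3 _setup_rpc."""

    def __init__(self, conf: Dict[str, List[str]]) -> None:
        self.calls: List[str] = []
        self.type_manager = DriverManager("TypeManager", conf["type_drivers"], self.calls)
        self.mechanism_manager = DriverManager(
            "MechanismManager", conf["mechanism_drivers"], self.calls)
        self.type_manager.initialize()       # 1.1
        self.mechanism_manager.initialize()  # 1.2
        self._setup_rpc()                    # 1.3

    def _setup_rpc(self) -> None:
        self.calls.append("_setup_rpc")


if __name__ == "__main__":
    plugin = Ml2PluginSketch({"type_drivers": ["vlan", "gre"],
                              "mechanism_drivers": ["openvswitch", "linuxbridge"]})
    print("\n".join(plugin.calls))
```

Mechanism drivers are initialized in the order they are listed, which is why `mechanism_drivers` in ml2.ini is an ordered list.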

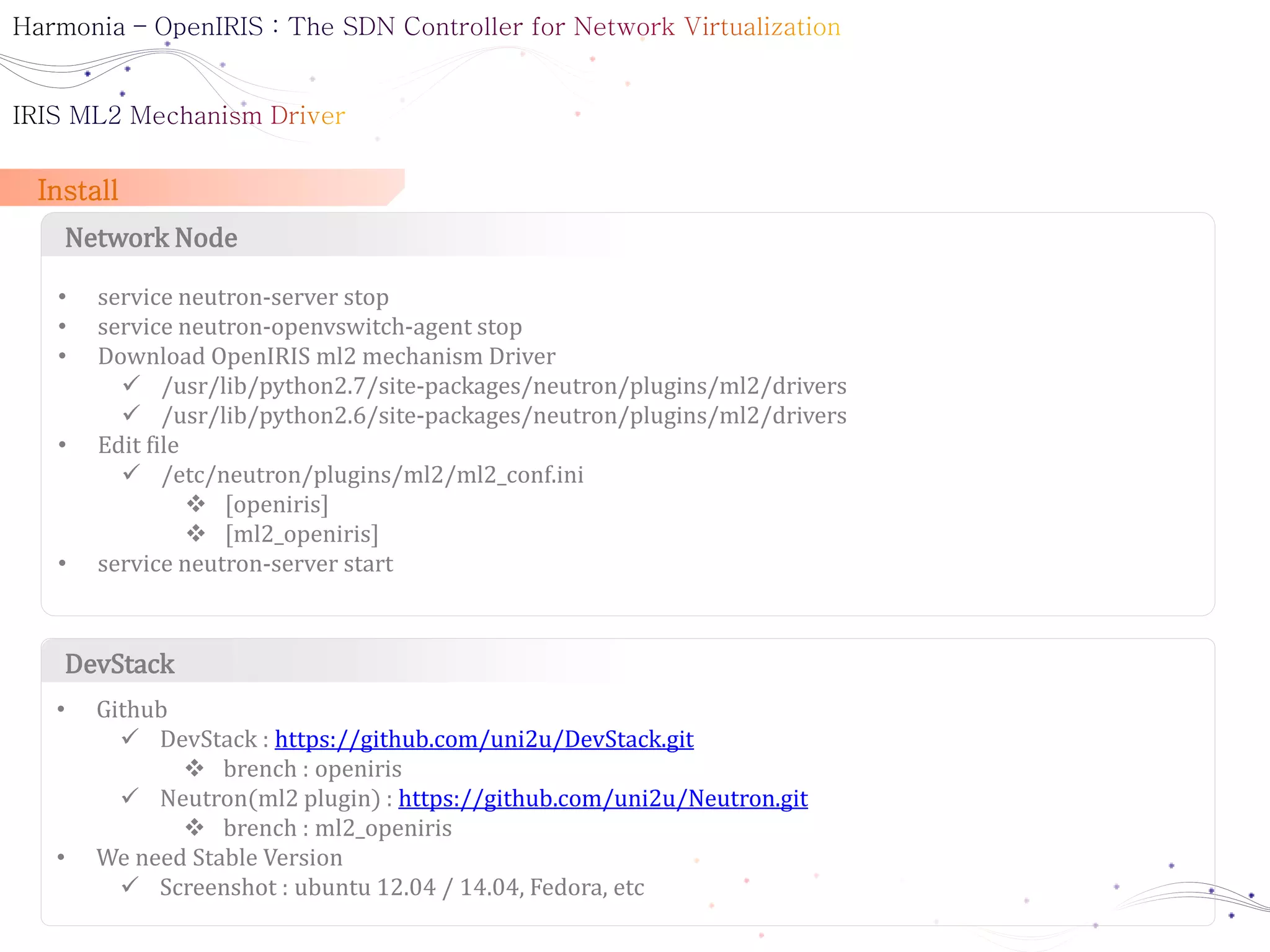

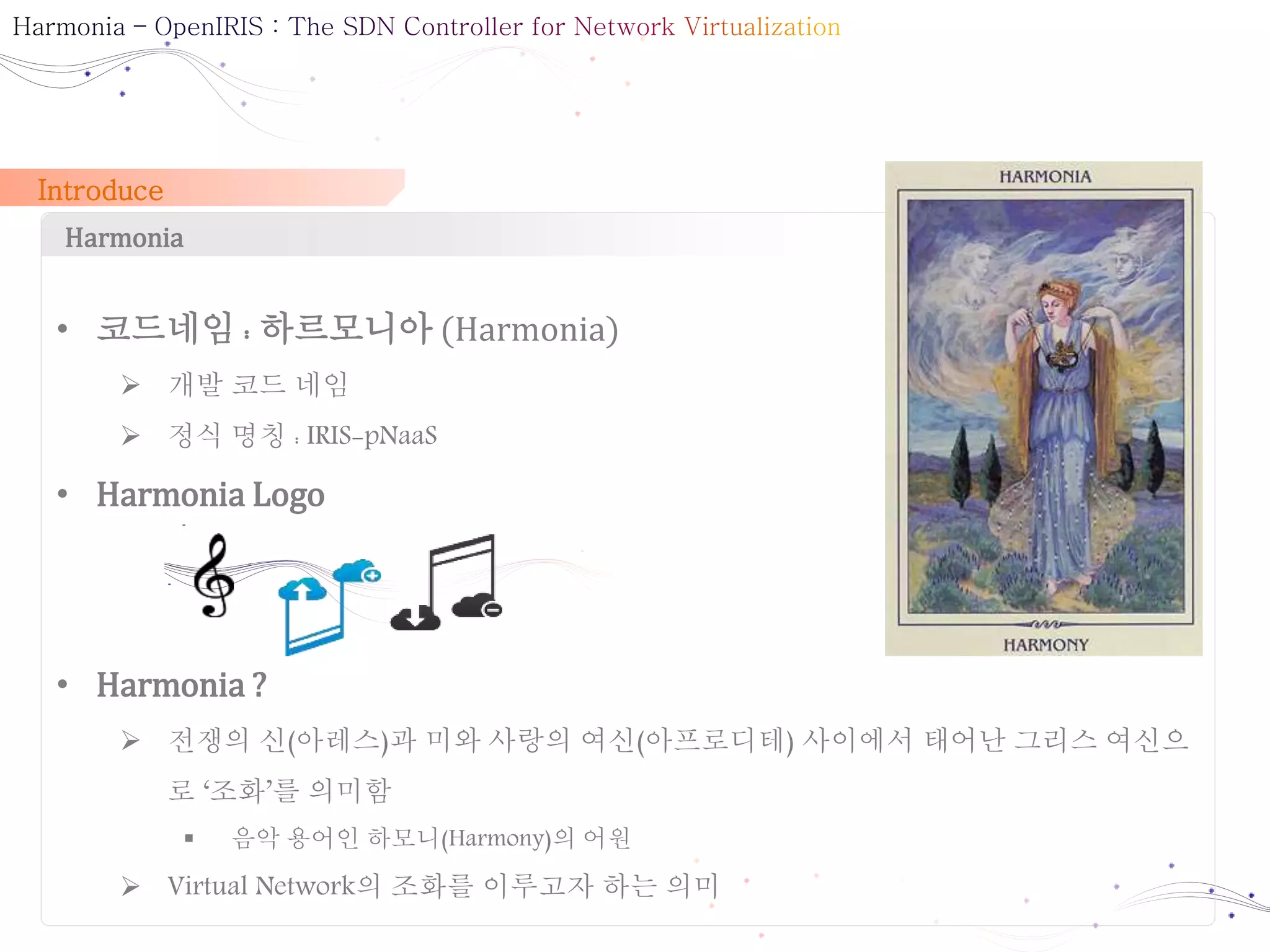

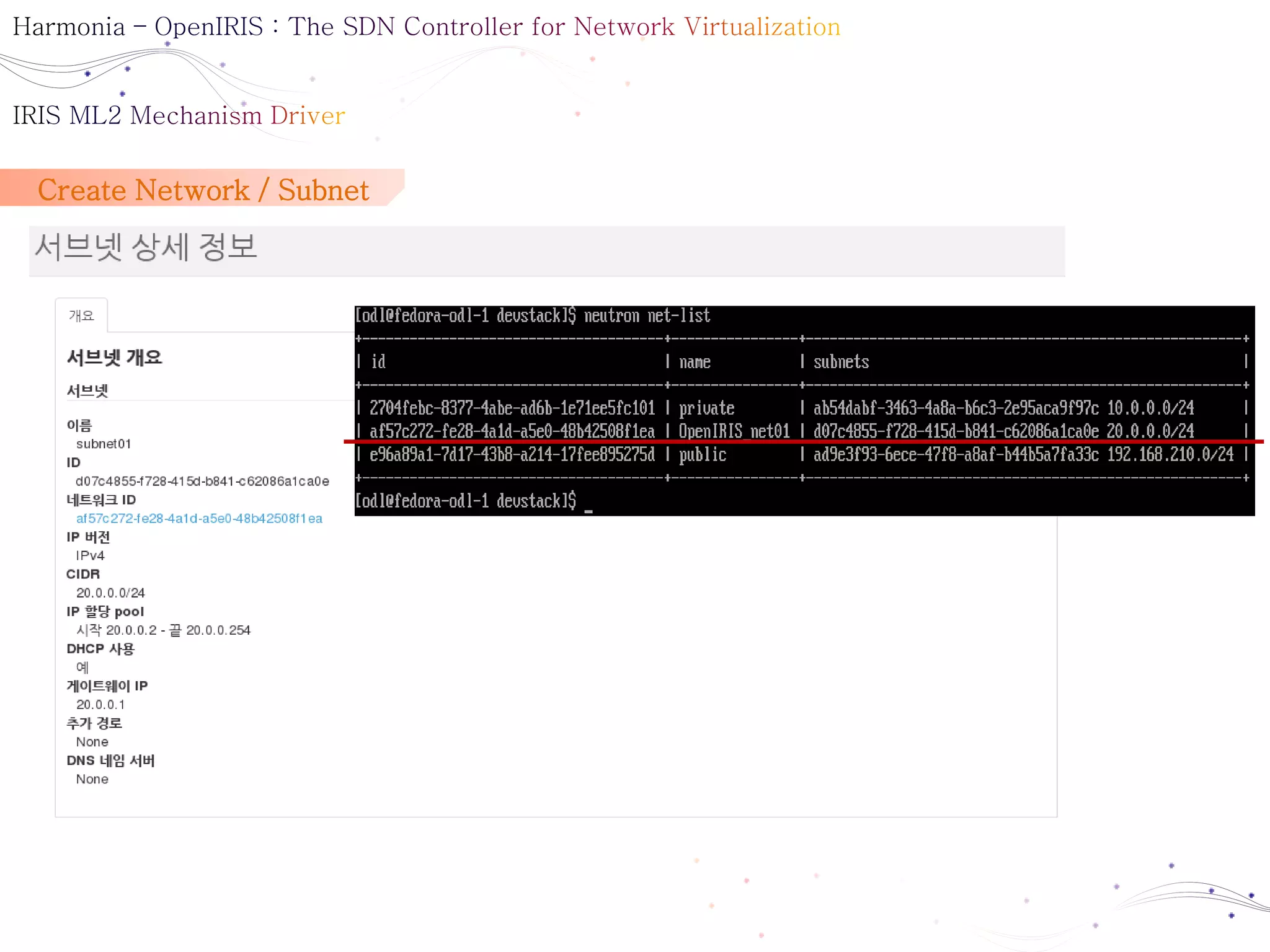

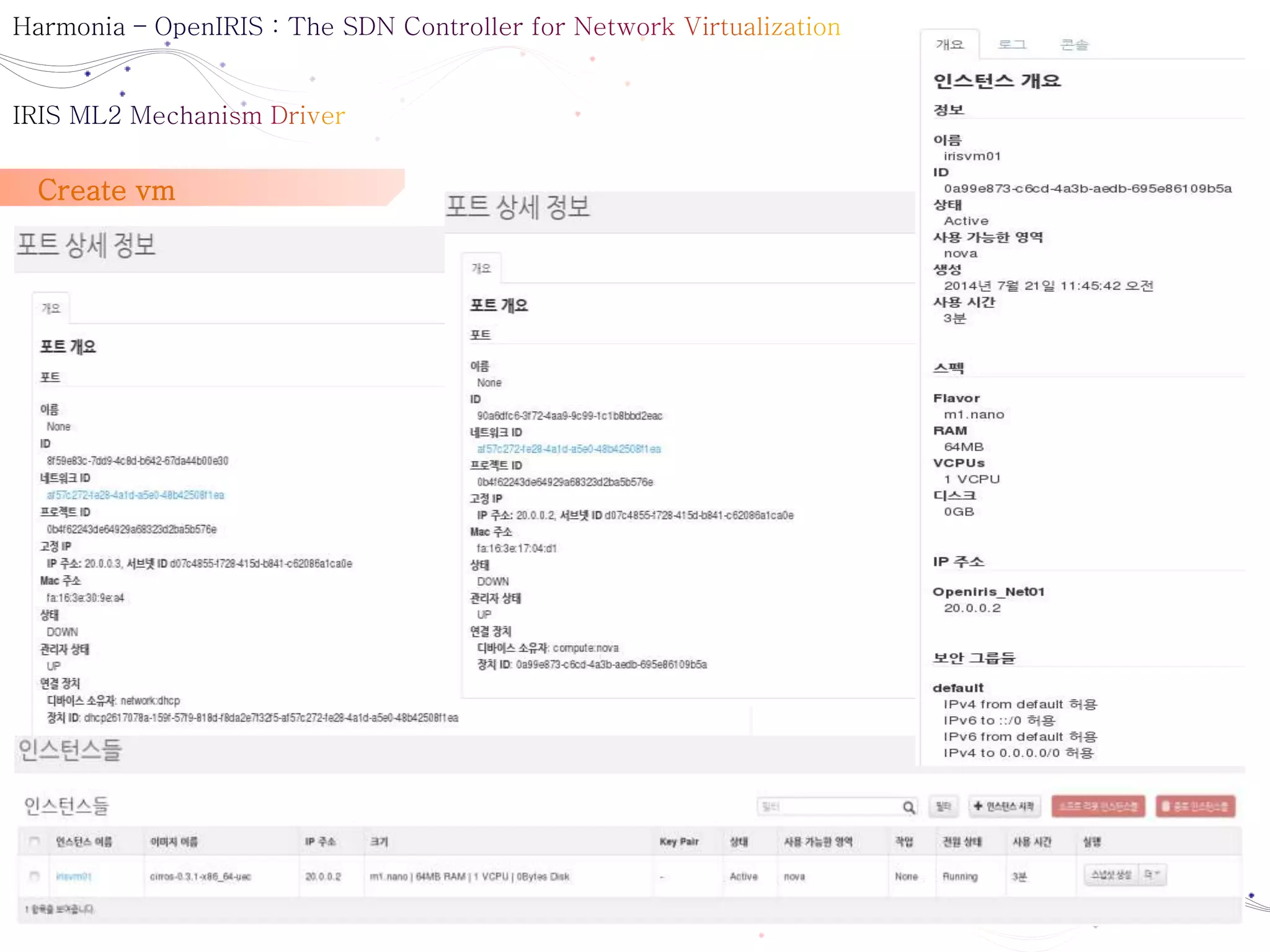

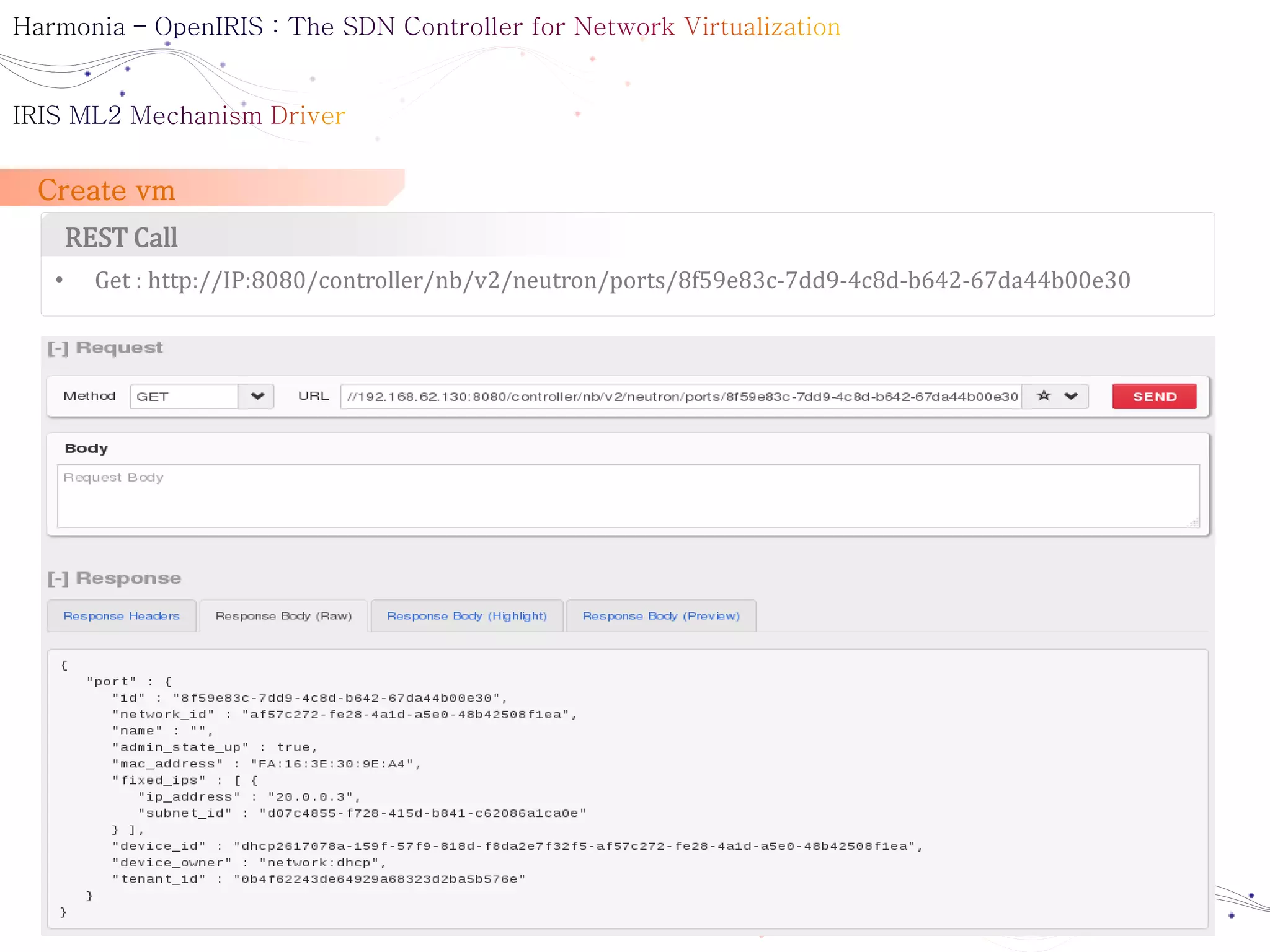

![Install

Network Node

• service neutron-server stop

• service neutron-openvswitch-agent stop

• Download the OpenIRIS ML2 mechanism driver into the ML2 drivers directory of the installed Python version:

/usr/lib/python2.7/site-packages/neutron/plugins/ml2/drivers

/usr/lib/python2.6/site-packages/neutron/plugins/ml2/drivers

• Edit the file

/etc/neutron/plugins/ml2/ml2_conf.ini

[openiris]

[ml2_openiris]

• service neutron-server start

DevStack

• Github

DevStack: https://github.com/uni2u/DevStack.git (Find bugs...)

TBD

Neutron (ML2 plugin): https://github.com/uni2u/Neutron.git (Find bugs...)

TBD

• A stable version is still needed

Screenshots: Ubuntu 12.04 / 14.04, Fedora, etc.](https://image.slidesharecdn.com/harmoniaopenirisbasicv0-140721001540-phpapp02/75/Harmonia-open-iris_basic_v0-1-62-2048.jpg)
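The install step edits ml2_conf.ini. The slide names only the `[openiris]` and `[ml2_openiris]` sections, not their contents. Enabling any ML2 mechanism driver usually also means listing it in `mechanism_drivers` under `[ml2]`. A minimal sketch of that edit follows, assuming the driver's alias is `openiris`. The slide does not state this, so check the driver's own setup for the real name.

```python
import configparser
import io


def enable_mechanism_driver(ini_text: str, driver: str) -> str:
    """Return ini_text with `driver` appended to [ml2] mechanism_drivers.

    Idempotent: a driver that is already listed is not added twice.
    Note that ConfigParser.write() does not preserve comments.
    """
    conf = configparser.ConfigParser()
    conf.read_string(ini_text)
    if not conf.has_section("ml2"):
        conf.add_section("ml2")
    raw = conf.get("ml2", "mechanism_drivers", fallback="")
    drivers = [d.strip() for d in raw.split(",") if d.strip()]
    if driver not in drivers:
        drivers.append(driver)
    conf.set("ml2", "mechanism_drivers", ",".join(drivers))
    out = io.StringIO()
    conf.write(out)
    return out.getvalue()


if __name__ == "__main__":
    # "openiris" is an assumed driver alias, not confirmed by the slides.
    before = "[ml2]\nmechanism_drivers = openvswitch\n"
    print(enable_mechanism_driver(before, "openiris"))
```

Because `write()` drops comments, a careful operator might prefer to edit the file by hand and use a helper like this only to check the resulting value.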