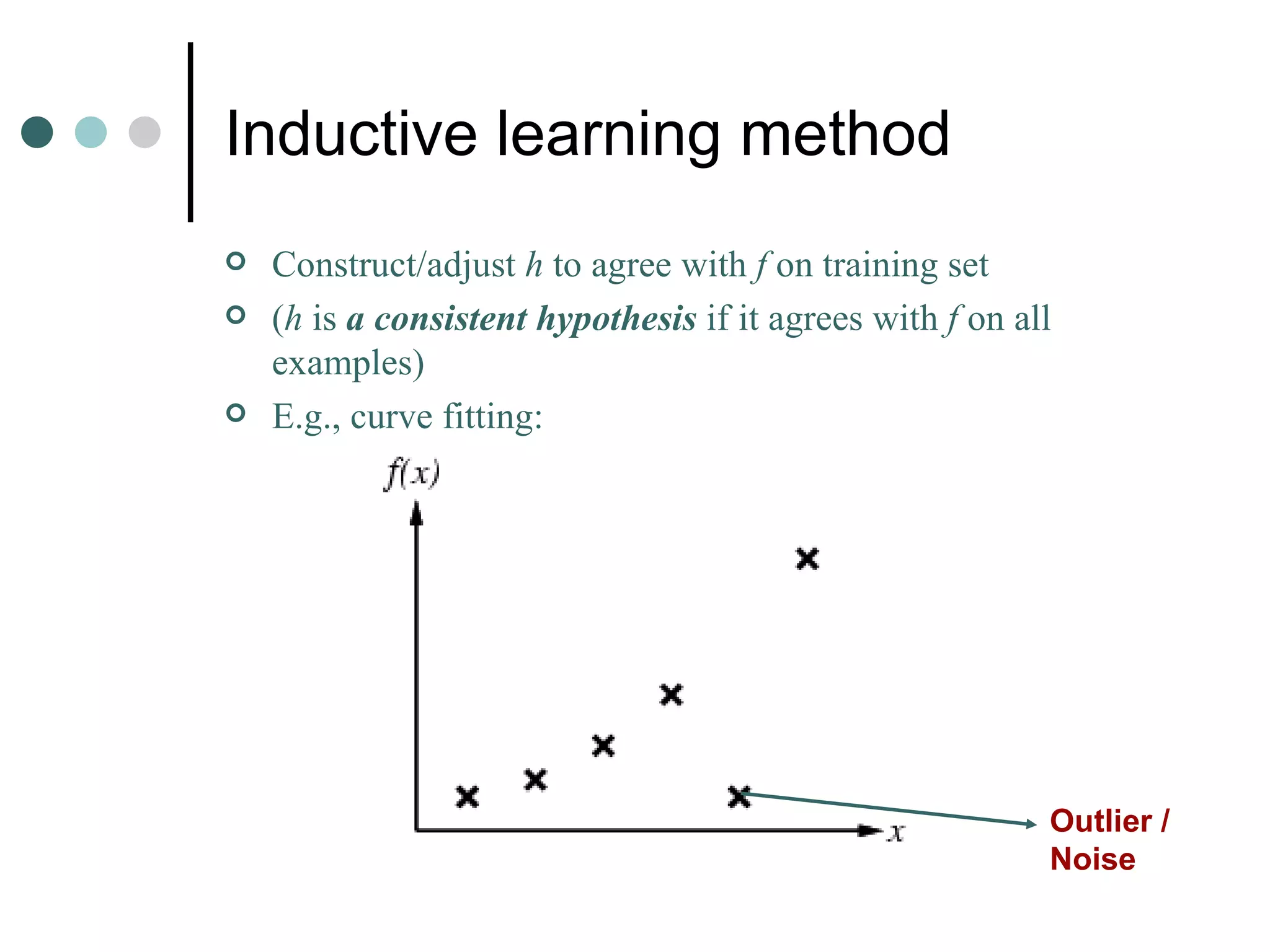

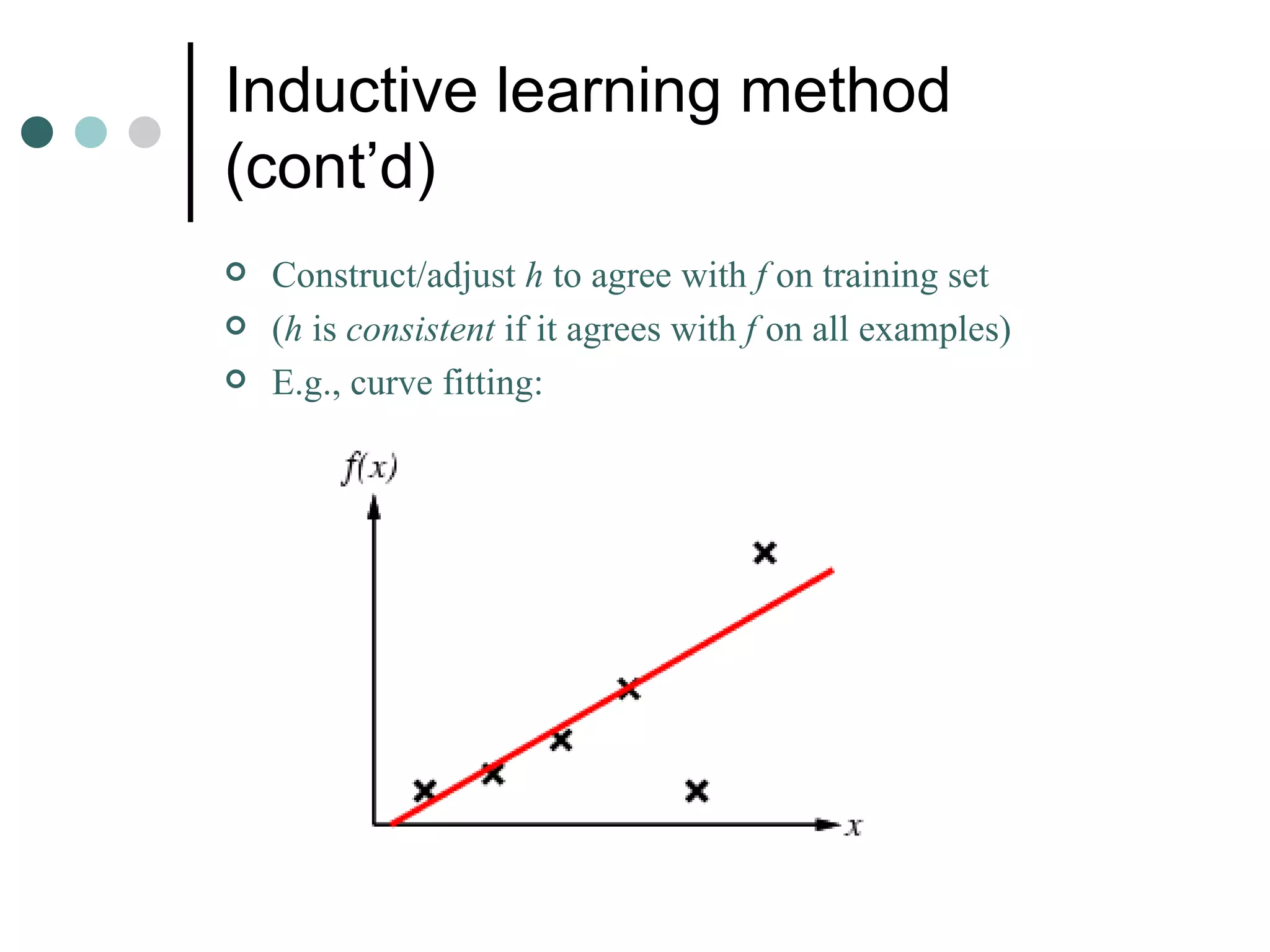

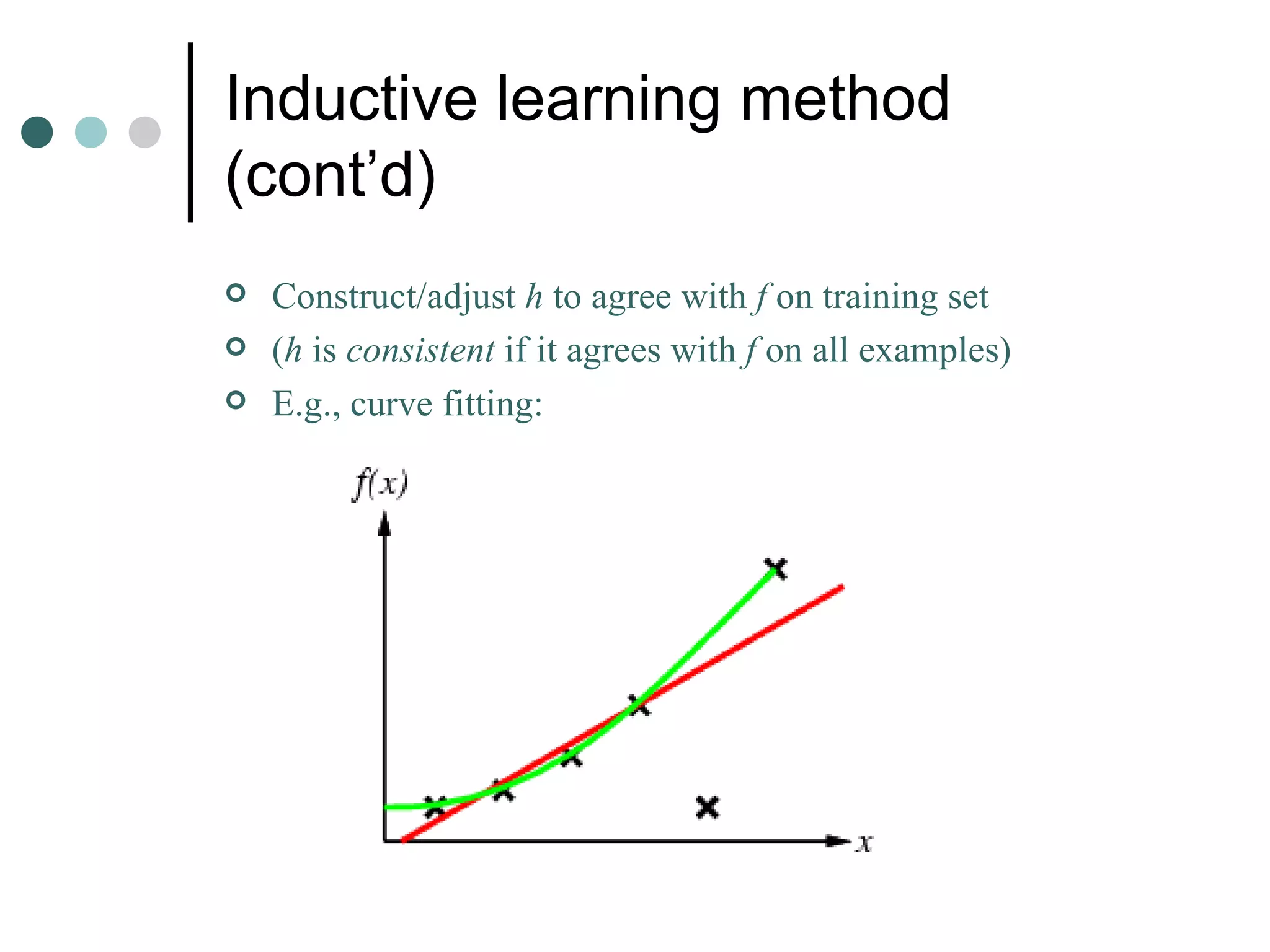

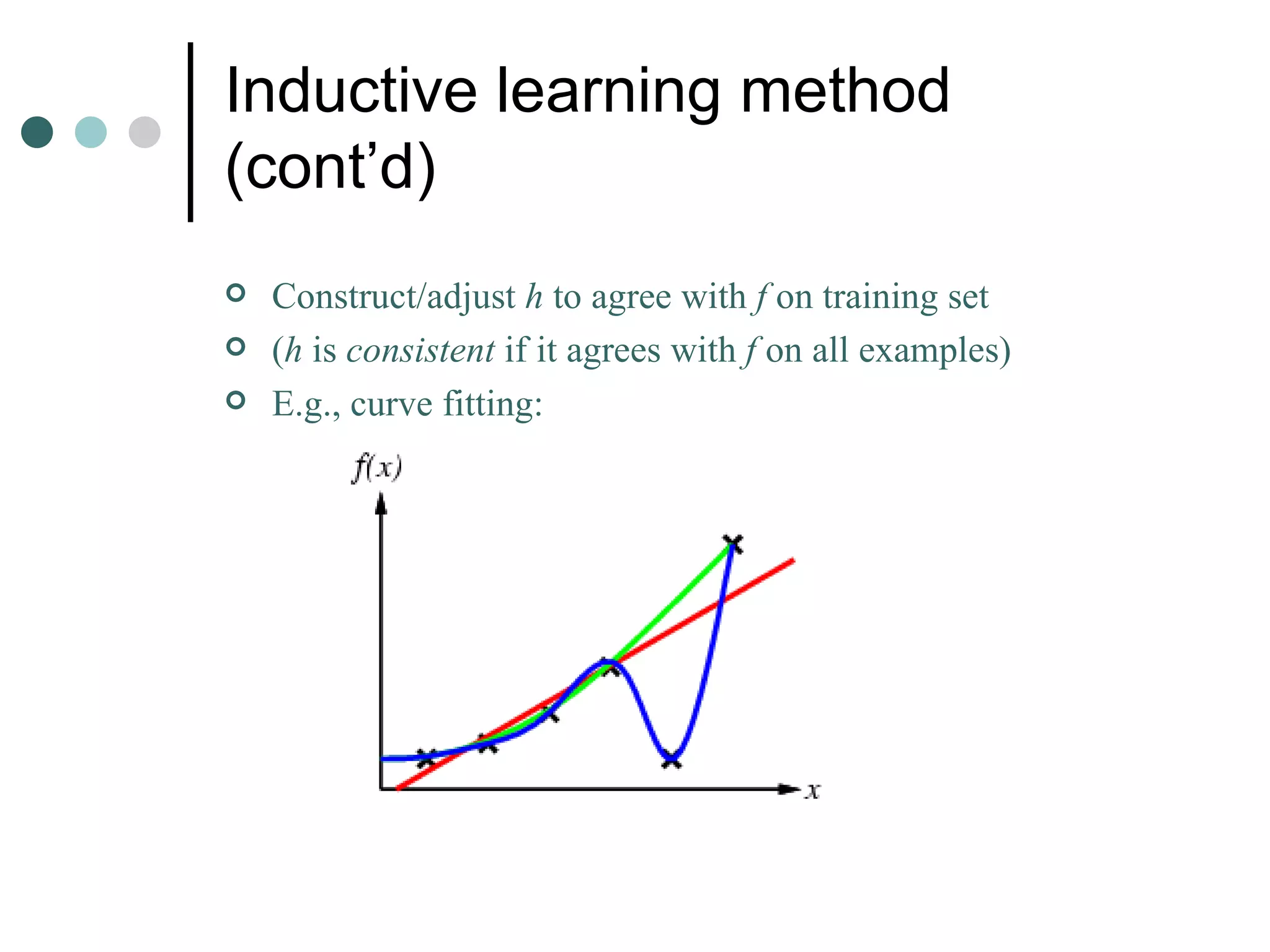

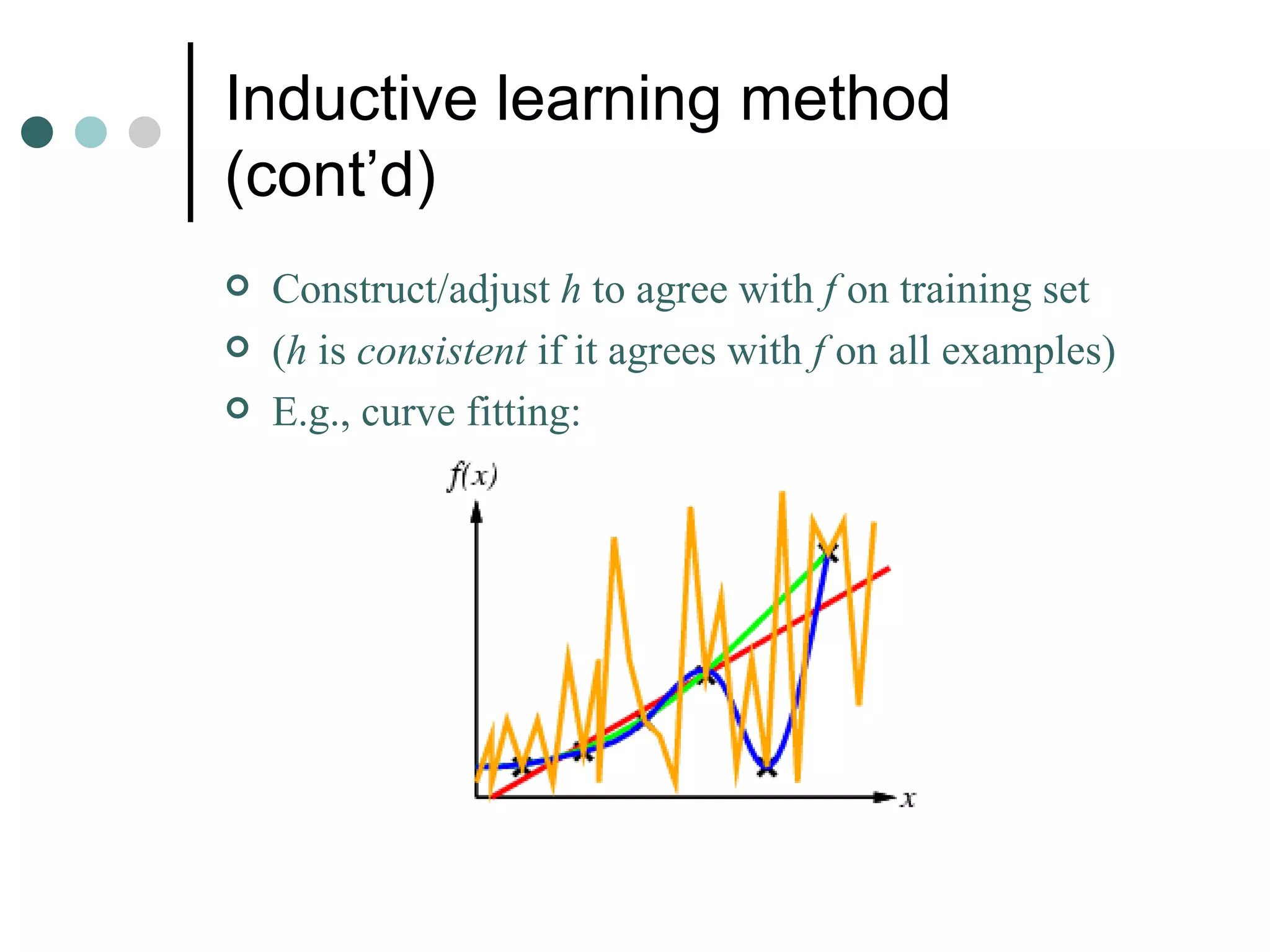

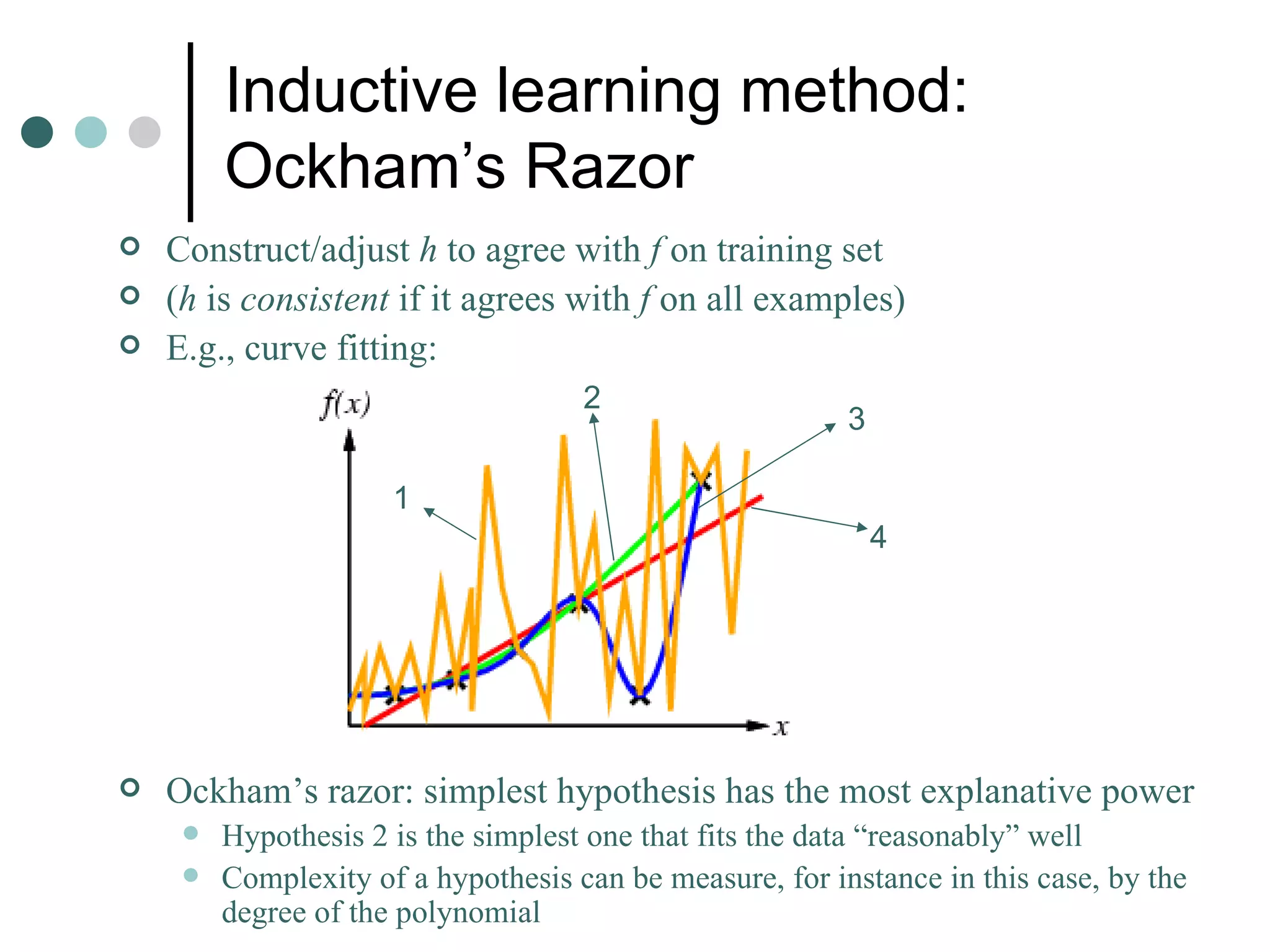

The document covers machine learning, with a focus on inductive learning. It surveys the main types of machine learning (supervised, unsupervised, and reinforcement learning) and outlines the history of the field. It then explains how inductive learning works: given training examples of an unknown target function f, the learner constructs a hypothesis h that approximates f. Decision tree learning is introduced as one method for inductive learning.
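To make the idea of building a hypothesis h from examples of f more concrete, here is a minimal sketch of decision tree learning in Python. It is not taken from the document: the attribute names (`raining`, `weekend`), the toy target function, and the helper names (`entropy`, `best_attribute`, `learn_tree`, `predict`) are all assumptions chosen for illustration, and the split criterion used here (lowest weighted entropy, as in ID3-style learners) is one common choice rather than necessarily the one the slides present.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a non-empty list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total)
                for n in Counter(labels).values())


def best_attribute(examples, attributes):
    """Pick the attribute whose split leaves the lowest weighted entropy."""
    def remainder(attr):
        groups = {}
        for x, y in examples:
            groups.setdefault(x[attr], []).append(y)
        return sum(len(g) / len(examples) * entropy(g)
                   for g in groups.values())
    return min(attributes, key=remainder)


def learn_tree(examples, attributes, default=None):
    """Build a hypothesis h as a nested (attribute, branches, majority) tree."""
    if not examples:
        return default
    labels = [y for _, y in examples]
    majority = Counter(labels).most_common(1)[0][0]
    # Stop when the examples agree or there is nothing left to split on.
    if len(set(labels)) == 1 or not attributes:
        return majority
    attr = best_attribute(examples, attributes)
    remaining = [a for a in attributes if a != attr]
    branches = {}
    for value in {x[attr] for x, _ in examples}:
        subset = [(x, y) for x, y in examples if x[attr] == value]
        branches[value] = learn_tree(subset, remaining, majority)
    return (attr, branches, majority)


def predict(tree, x):
    """Evaluate h(x) by walking the tree down to a leaf label."""
    while isinstance(tree, tuple):
        attr, branches, majority = tree
        # Fall back to the majority label for attribute values never seen.
        tree = branches.get(x[attr], majority)
    return tree


# Hypothetical training data: examples (x, f(x)) of an unknown target
# function f, here "go outside only on a dry weekend".
examples = [
    ({"raining": True,  "weekend": True},  False),
    ({"raining": True,  "weekend": False}, False),
    ({"raining": False, "weekend": True},  True),
    ({"raining": False, "weekend": False}, False),
]

tree = learn_tree(examples, ["raining", "weekend"])
agrees = all(predict(tree, x) == y for x, y in examples)
print("h agrees with f on the training data:", agrees)
```

On this toy data the learned tree reproduces every training label, which only shows that h is consistent with the examples it has seen. Whether h approximates f well on unseen inputs is a separate question that this sketch does not address.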