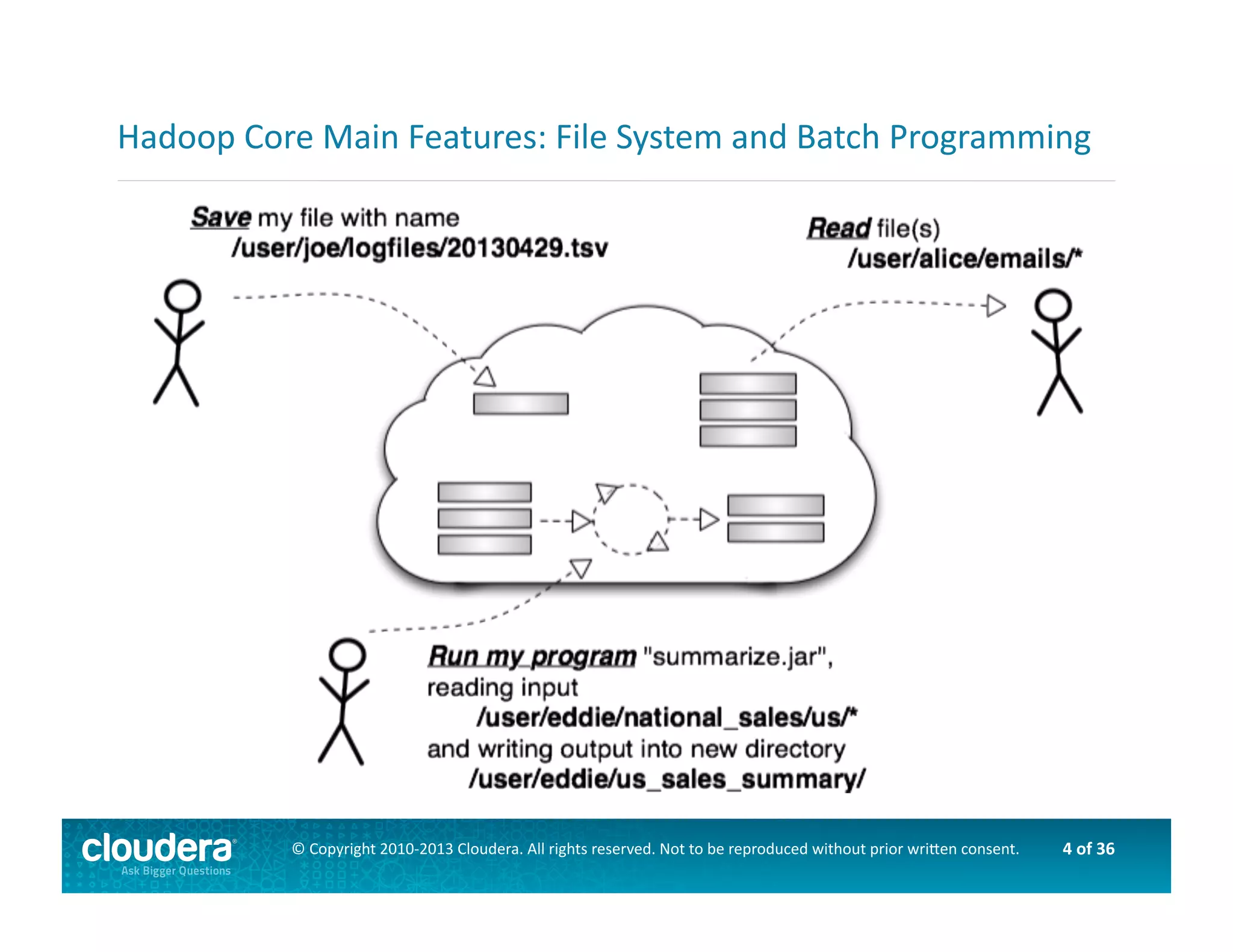

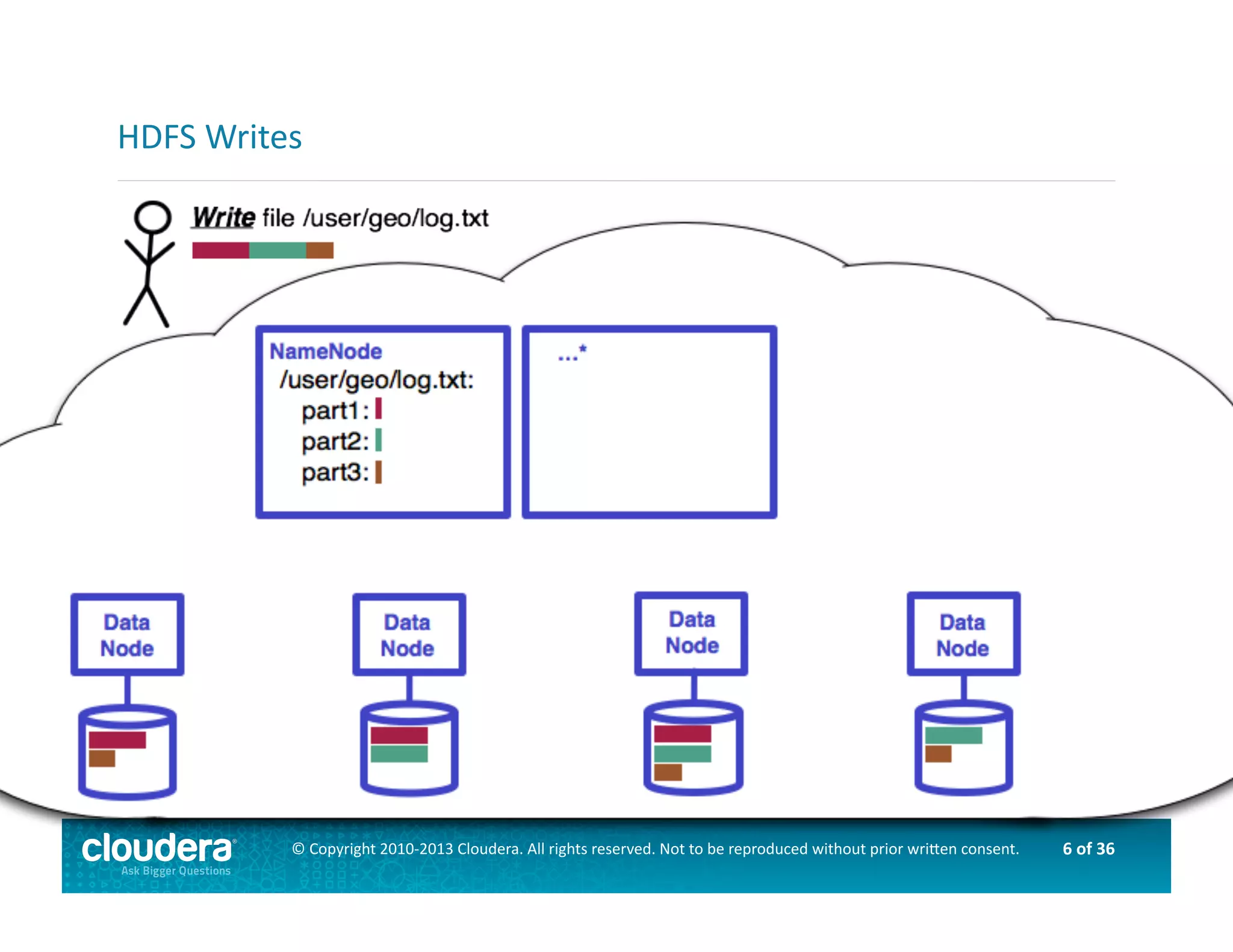

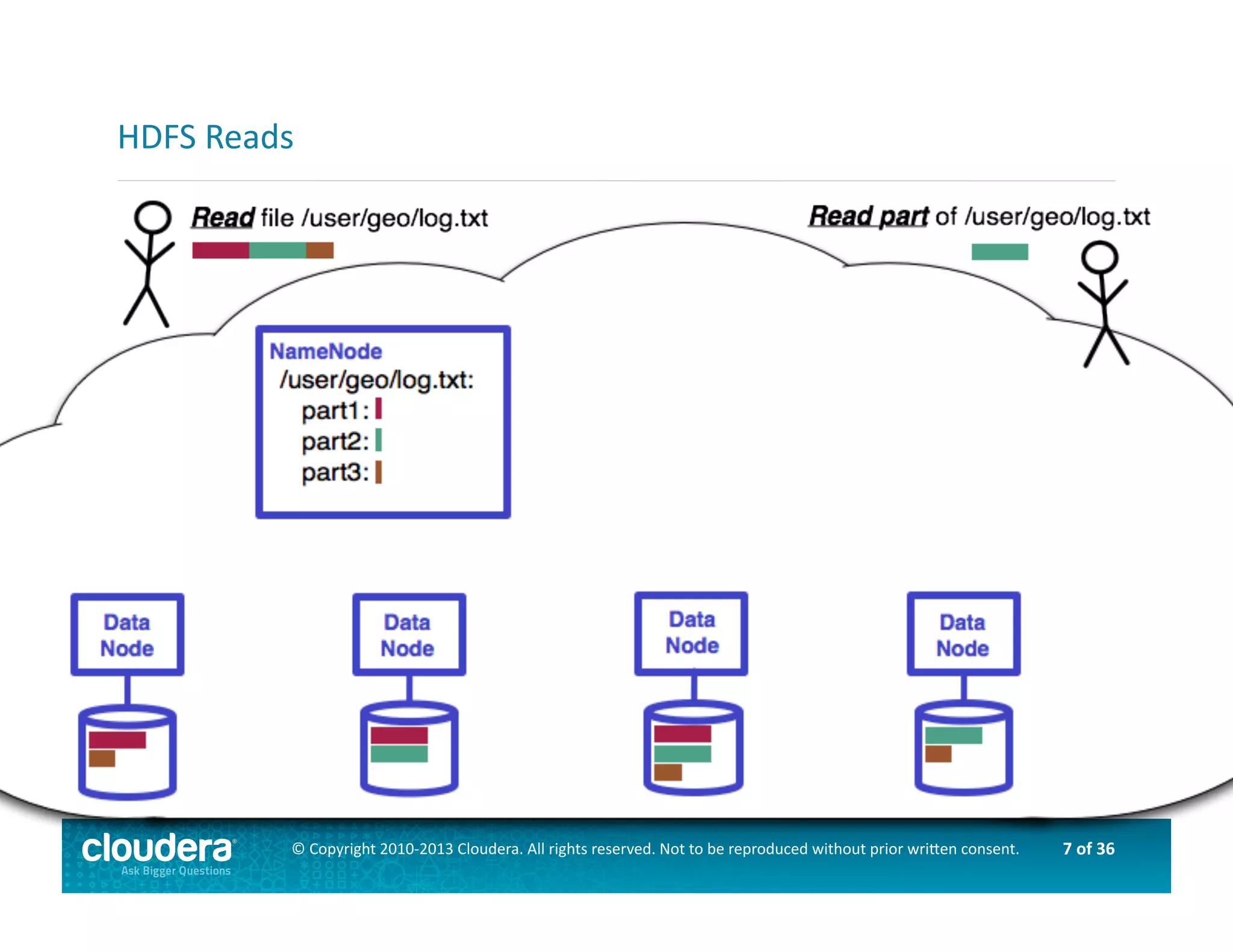

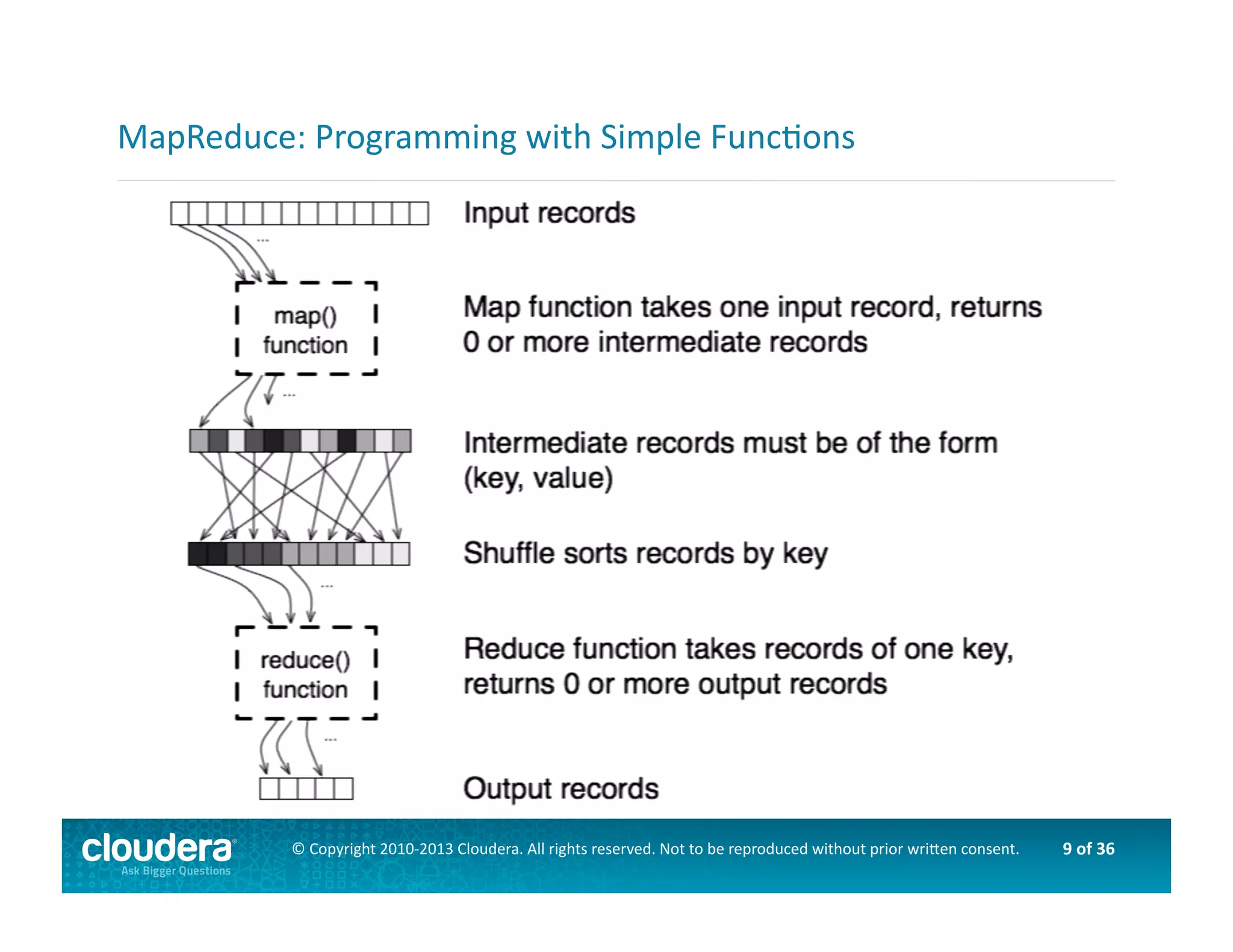

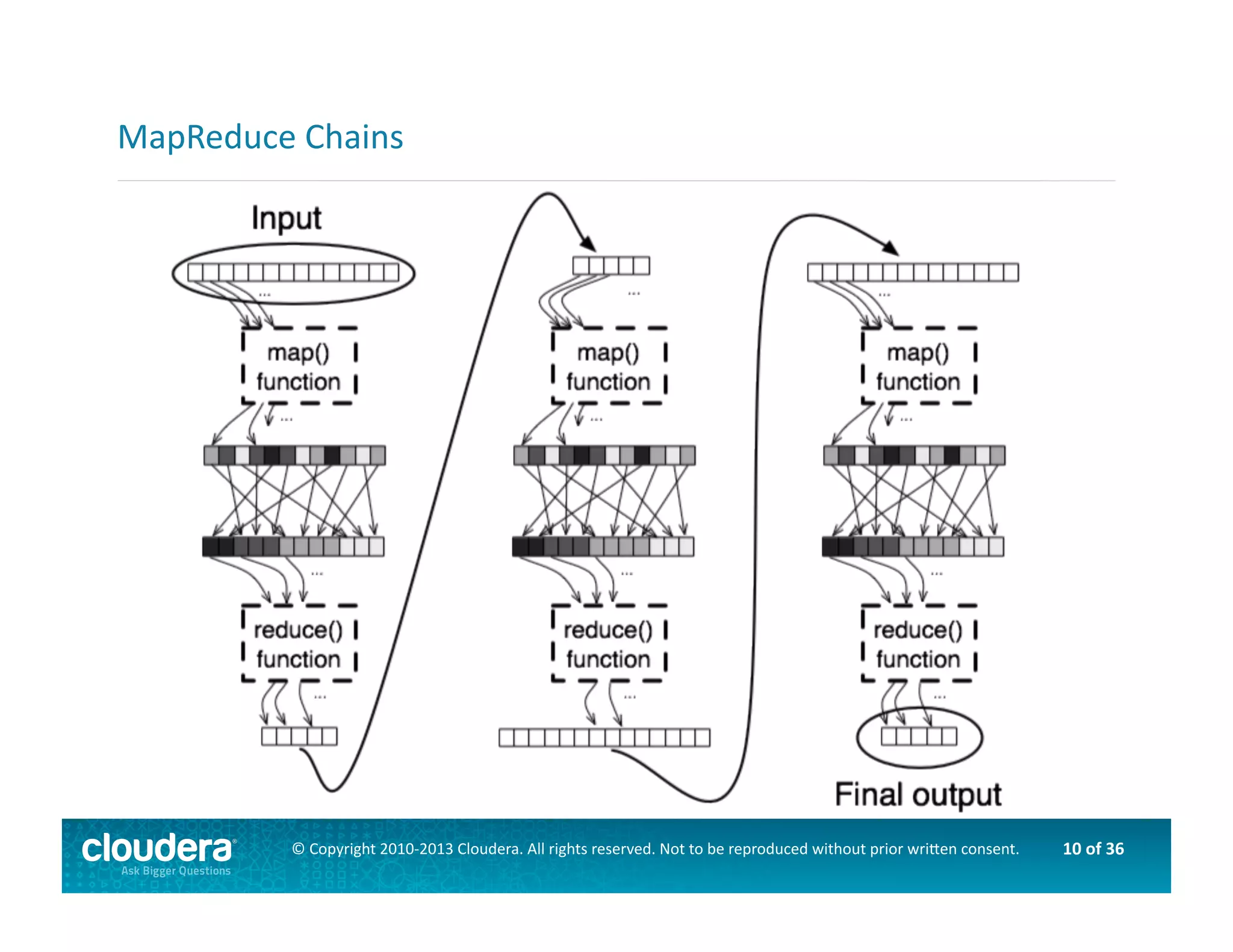

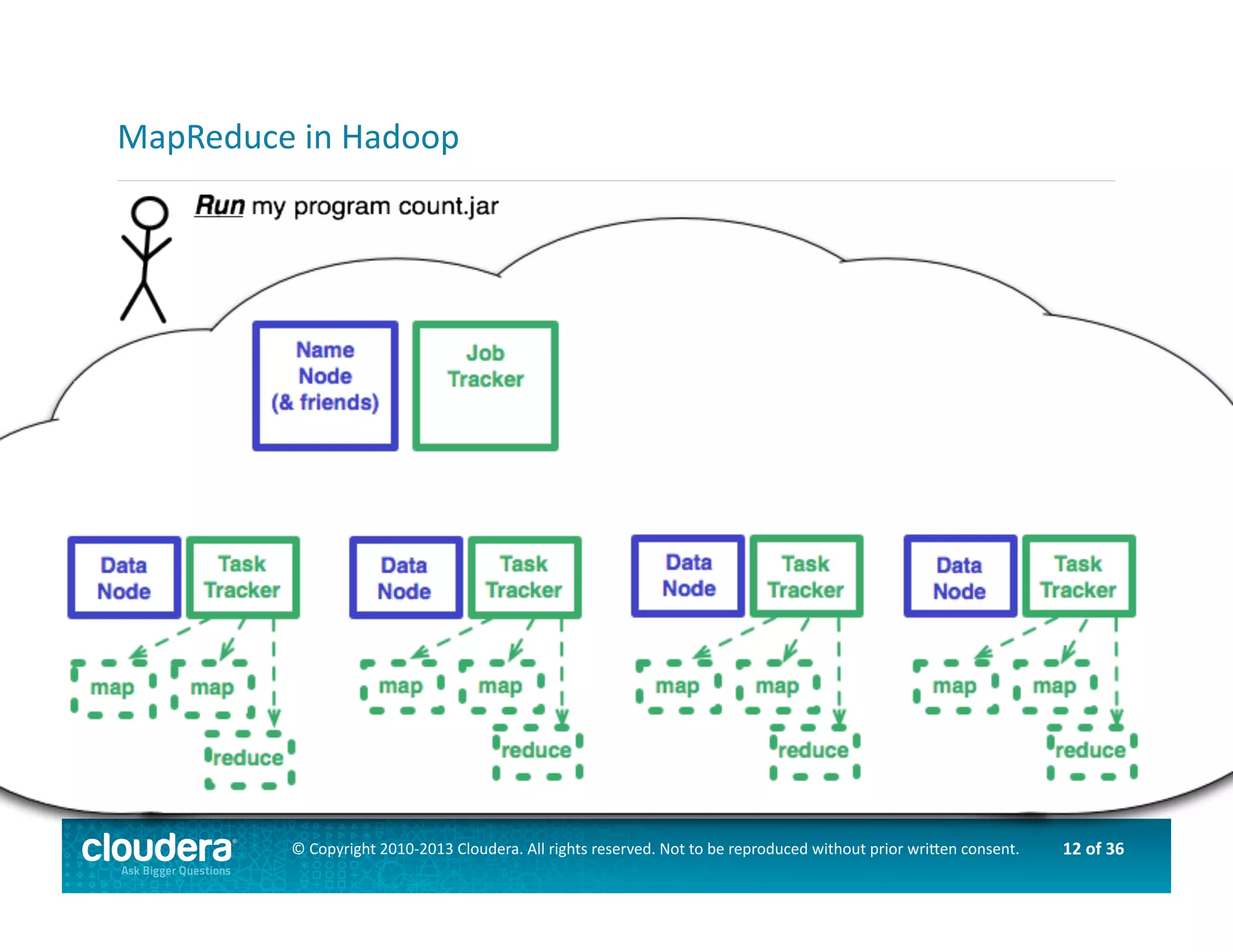

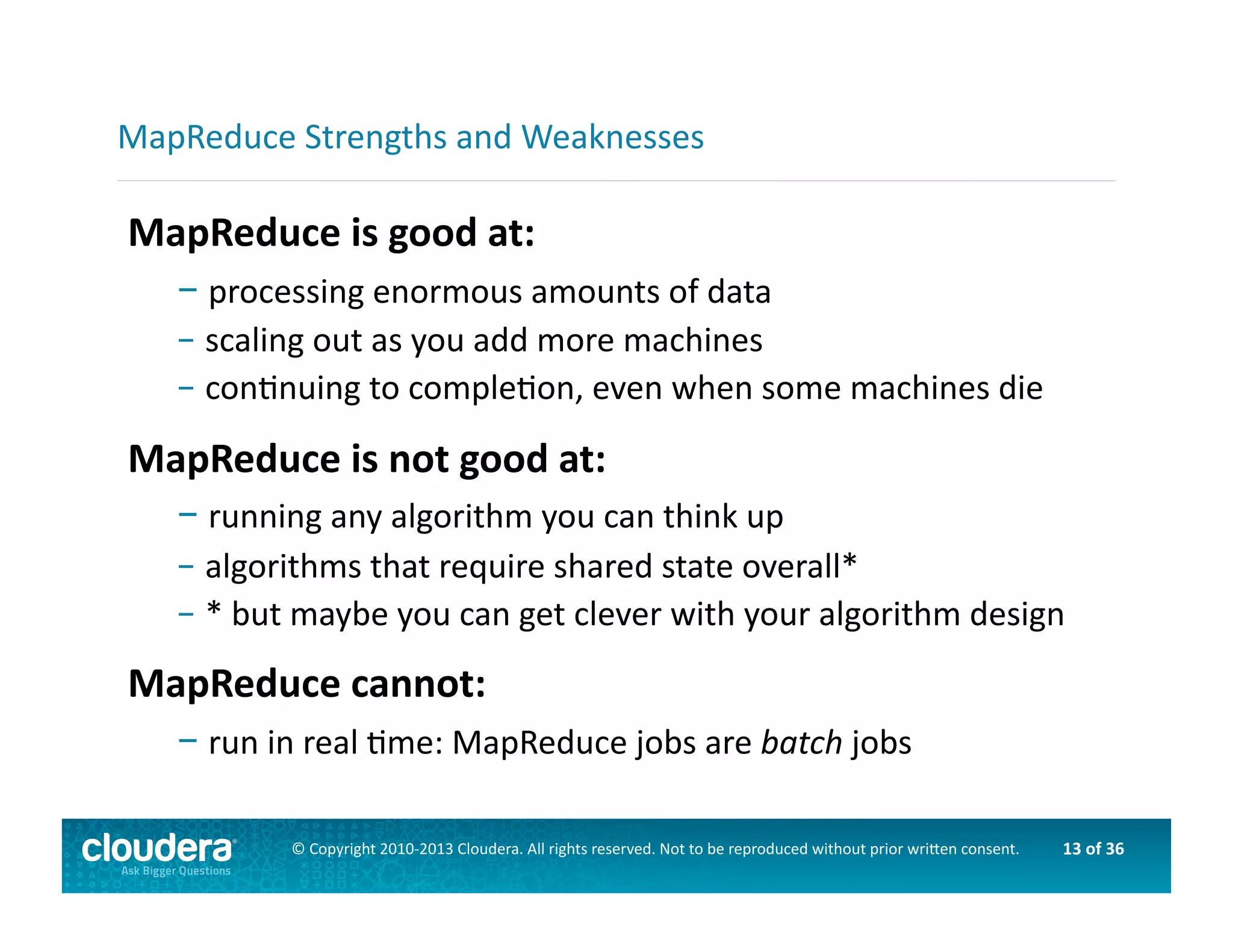

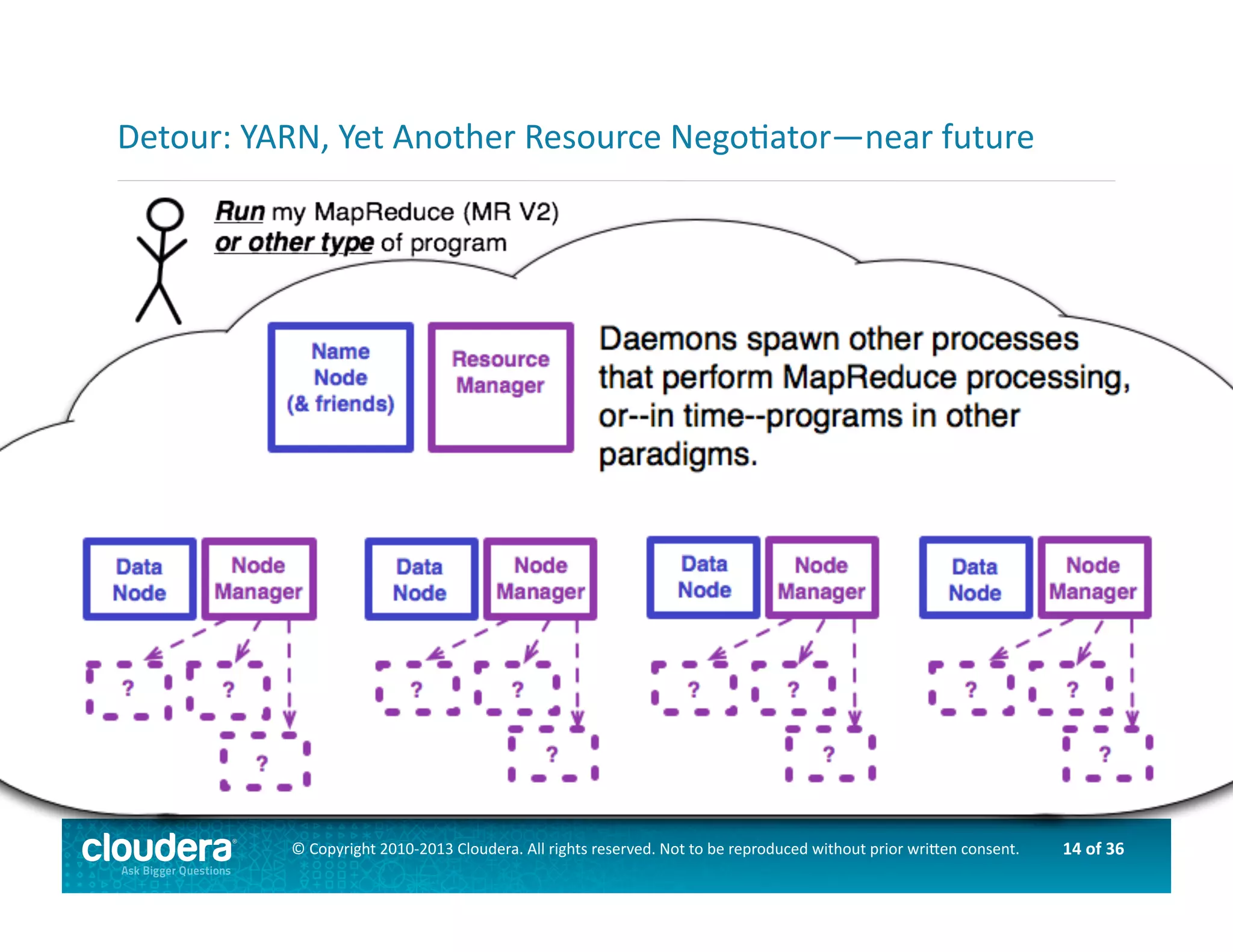

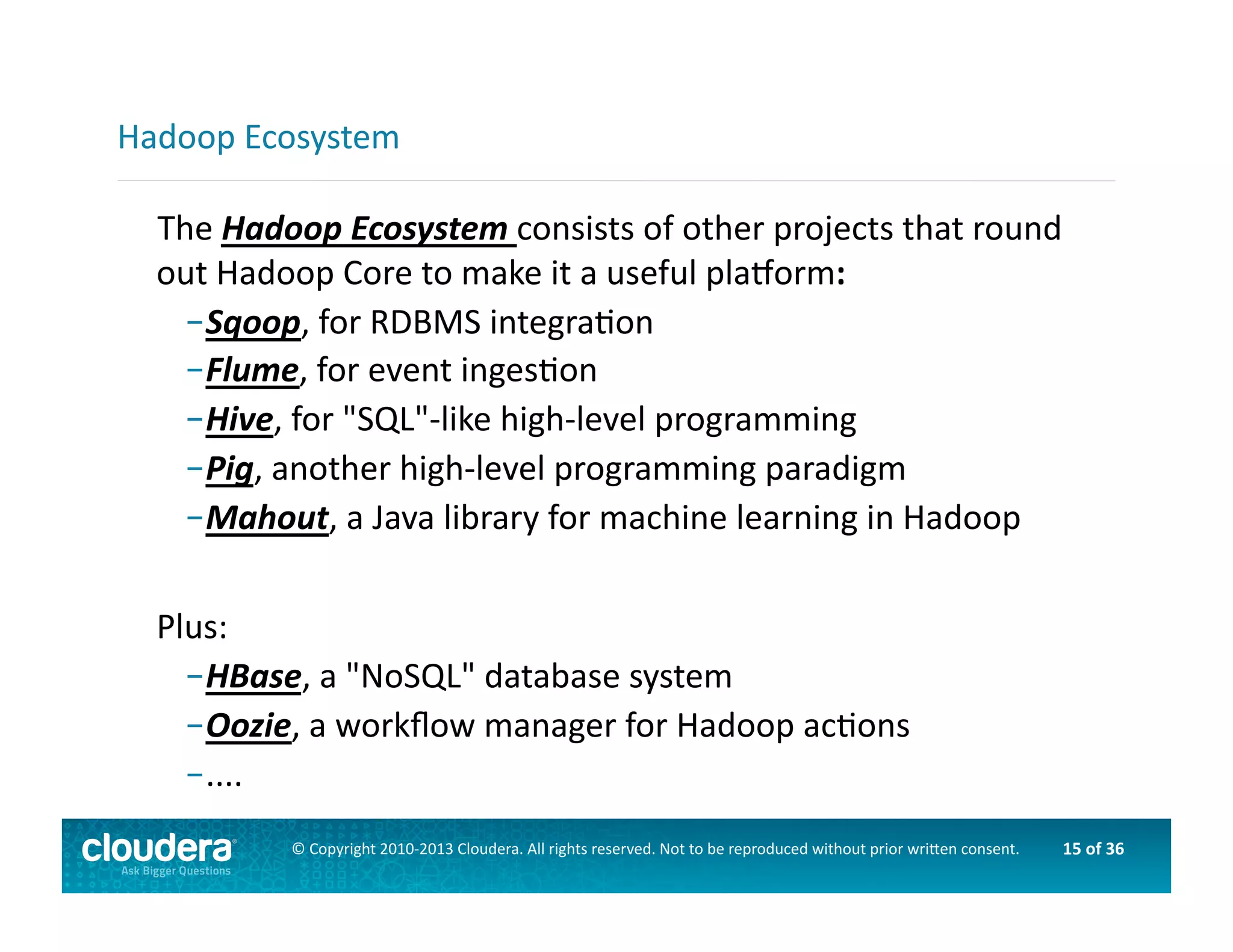

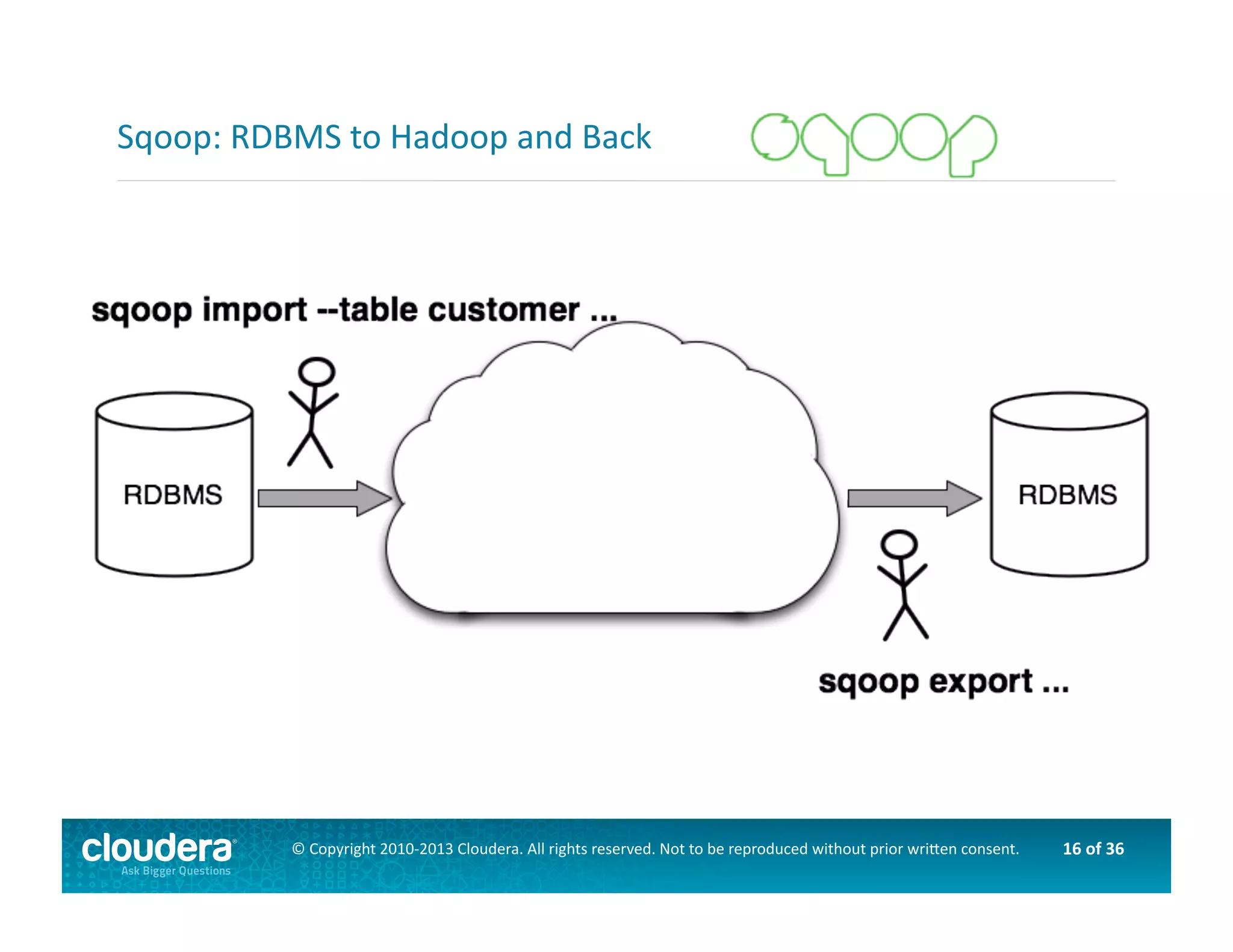

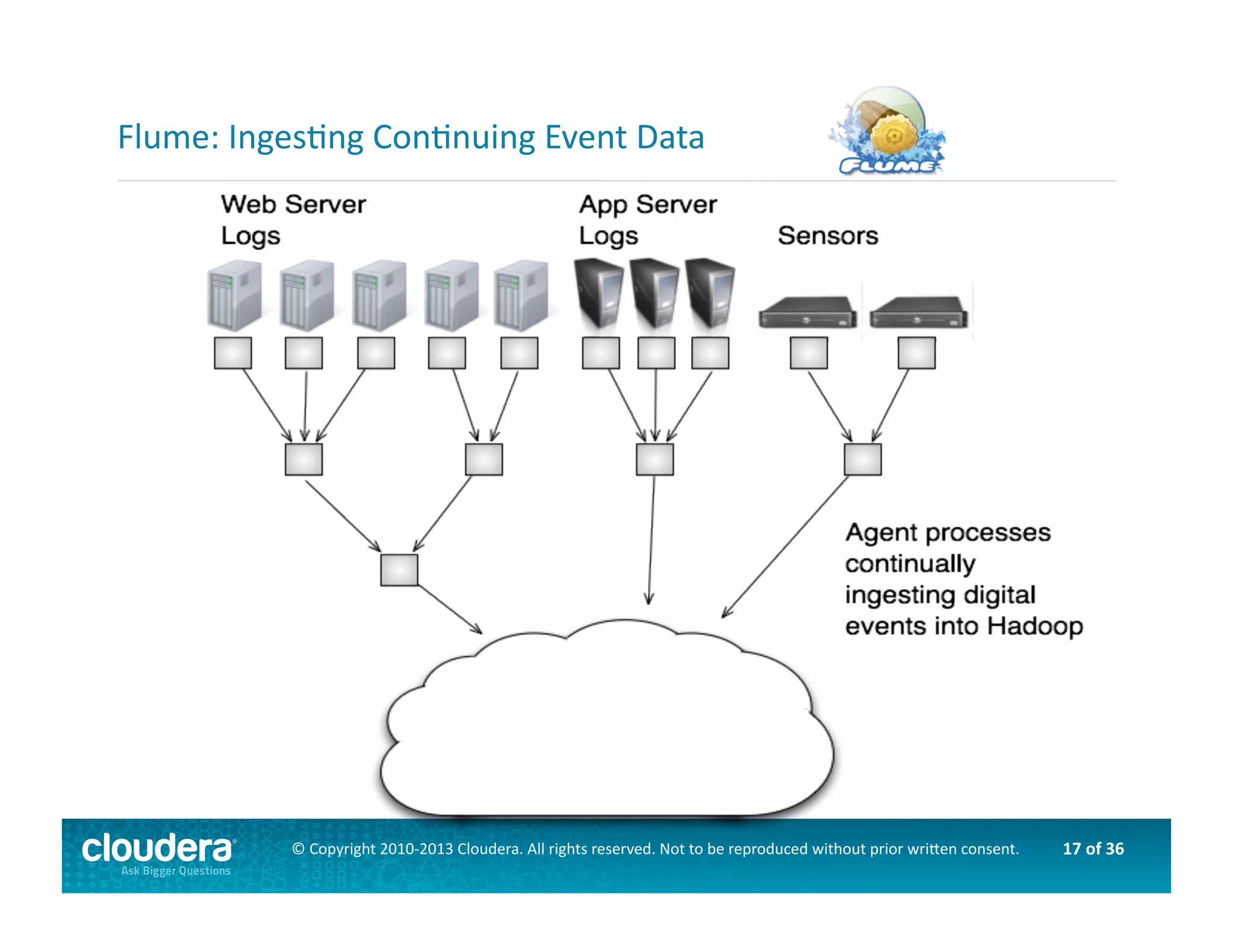

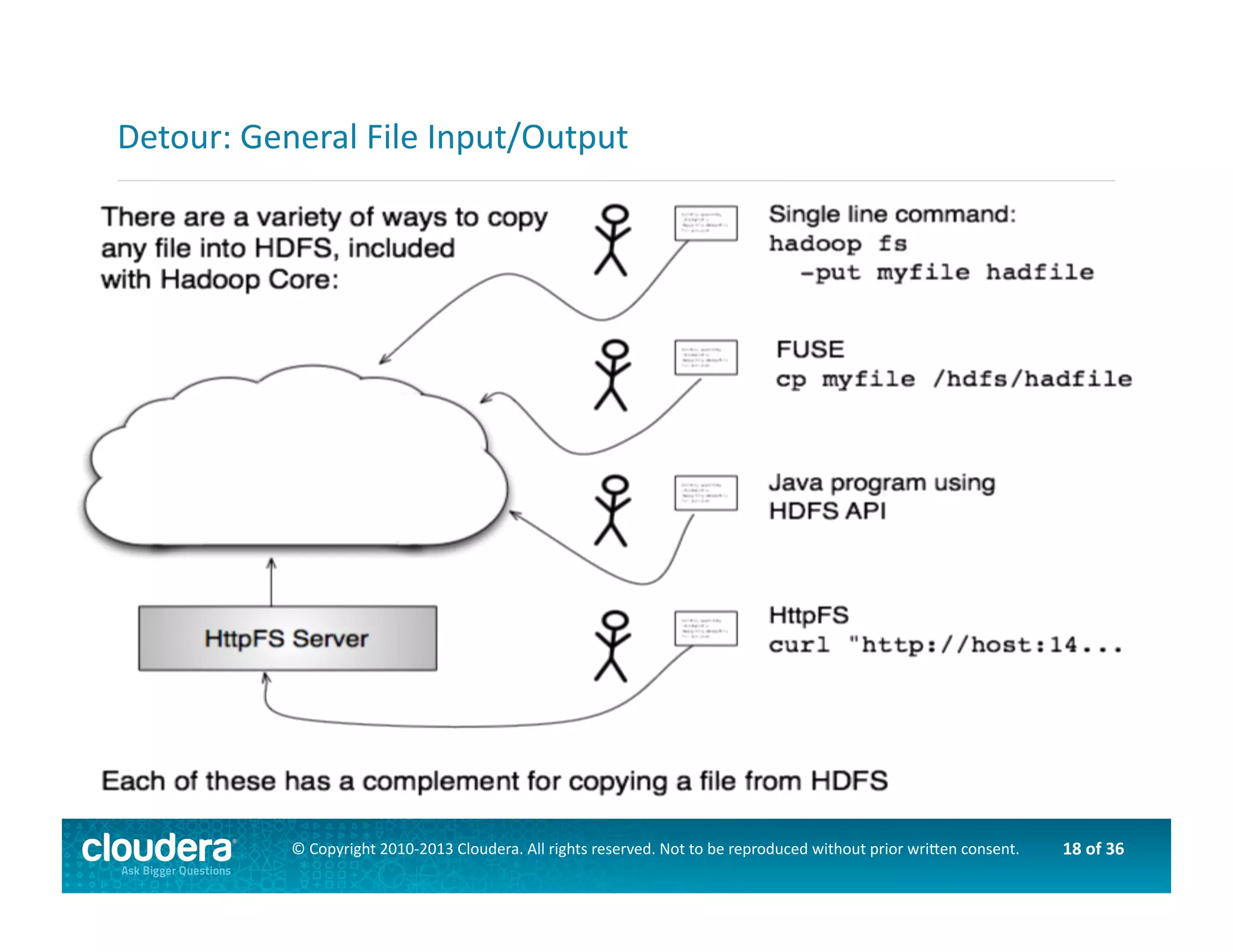

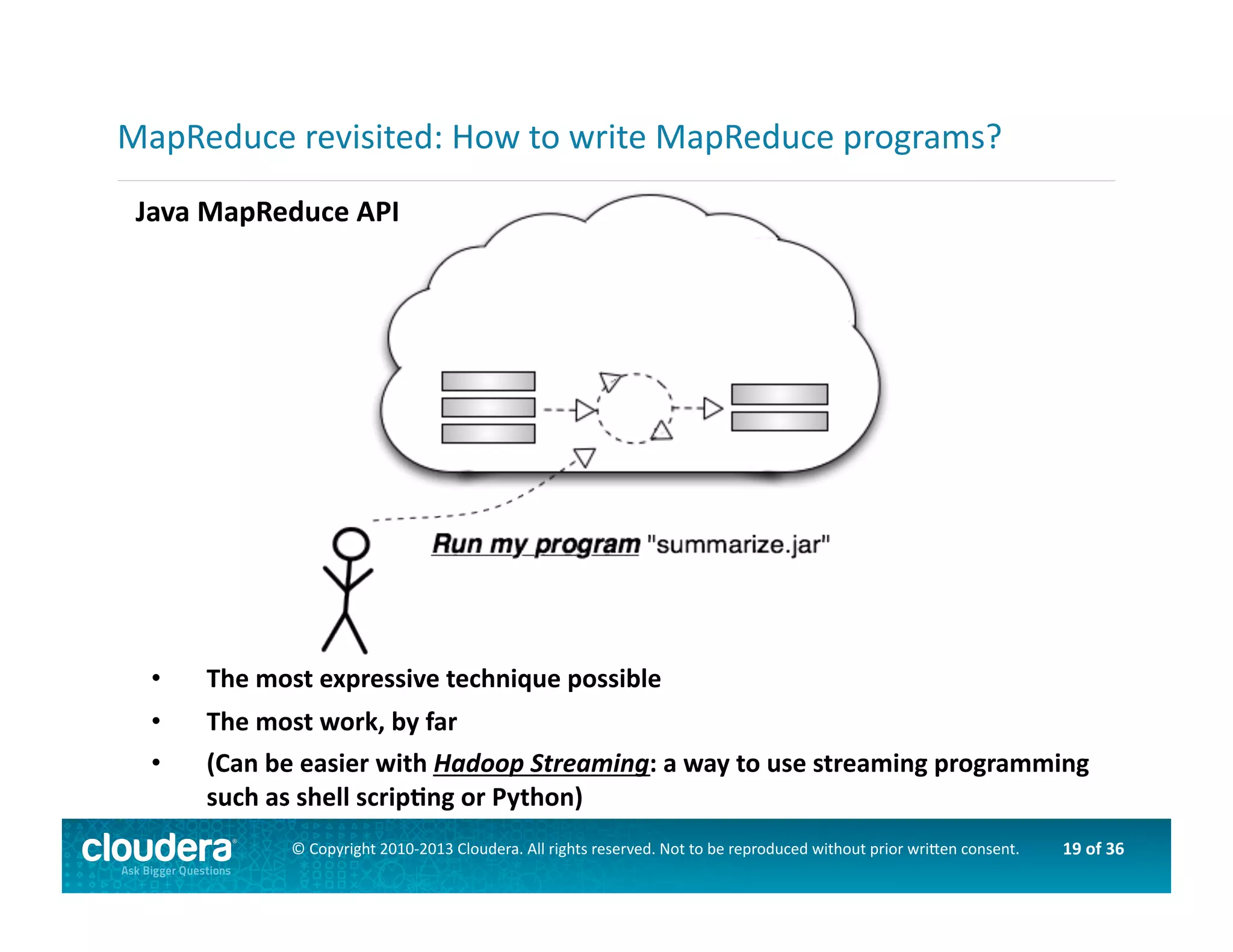

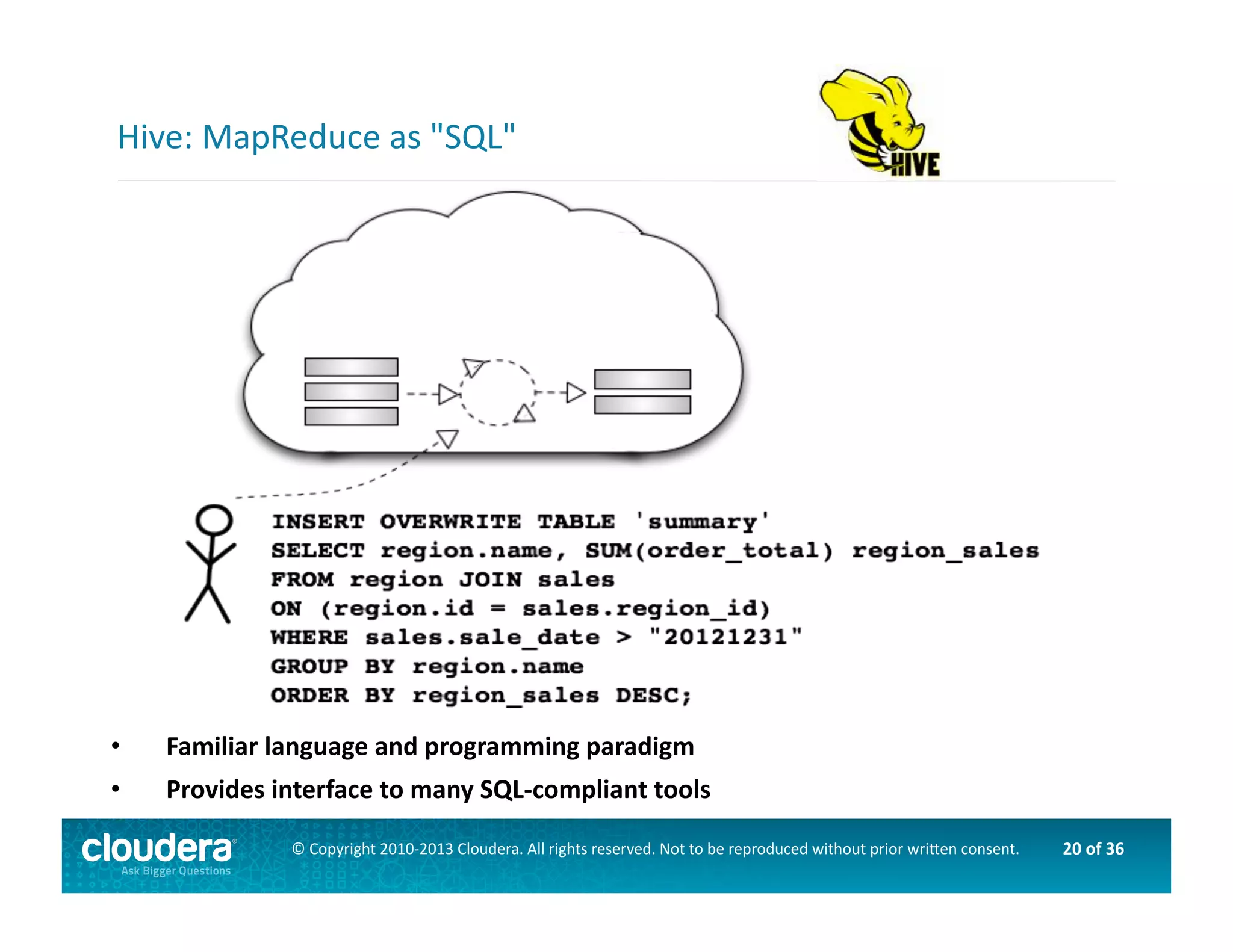

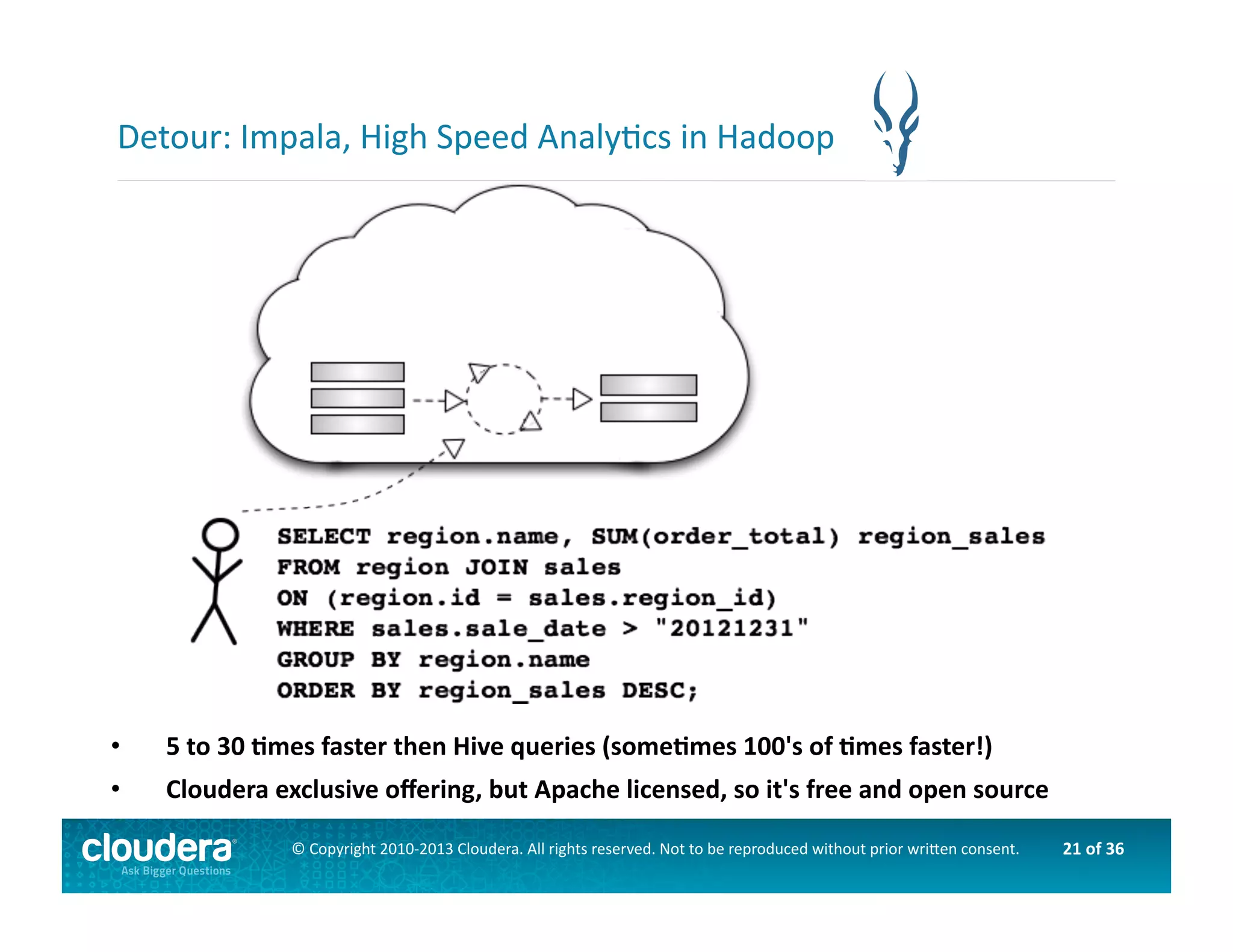

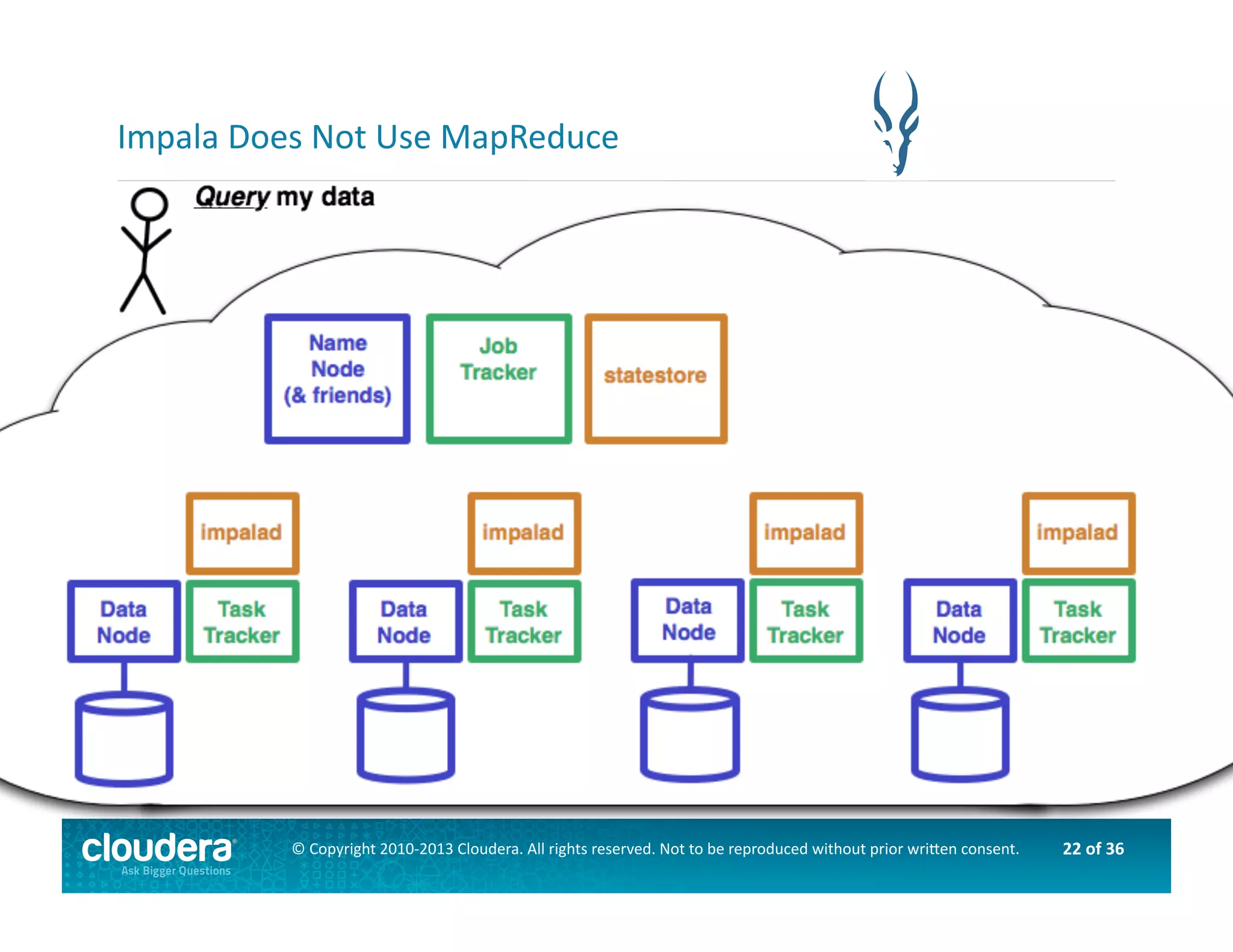

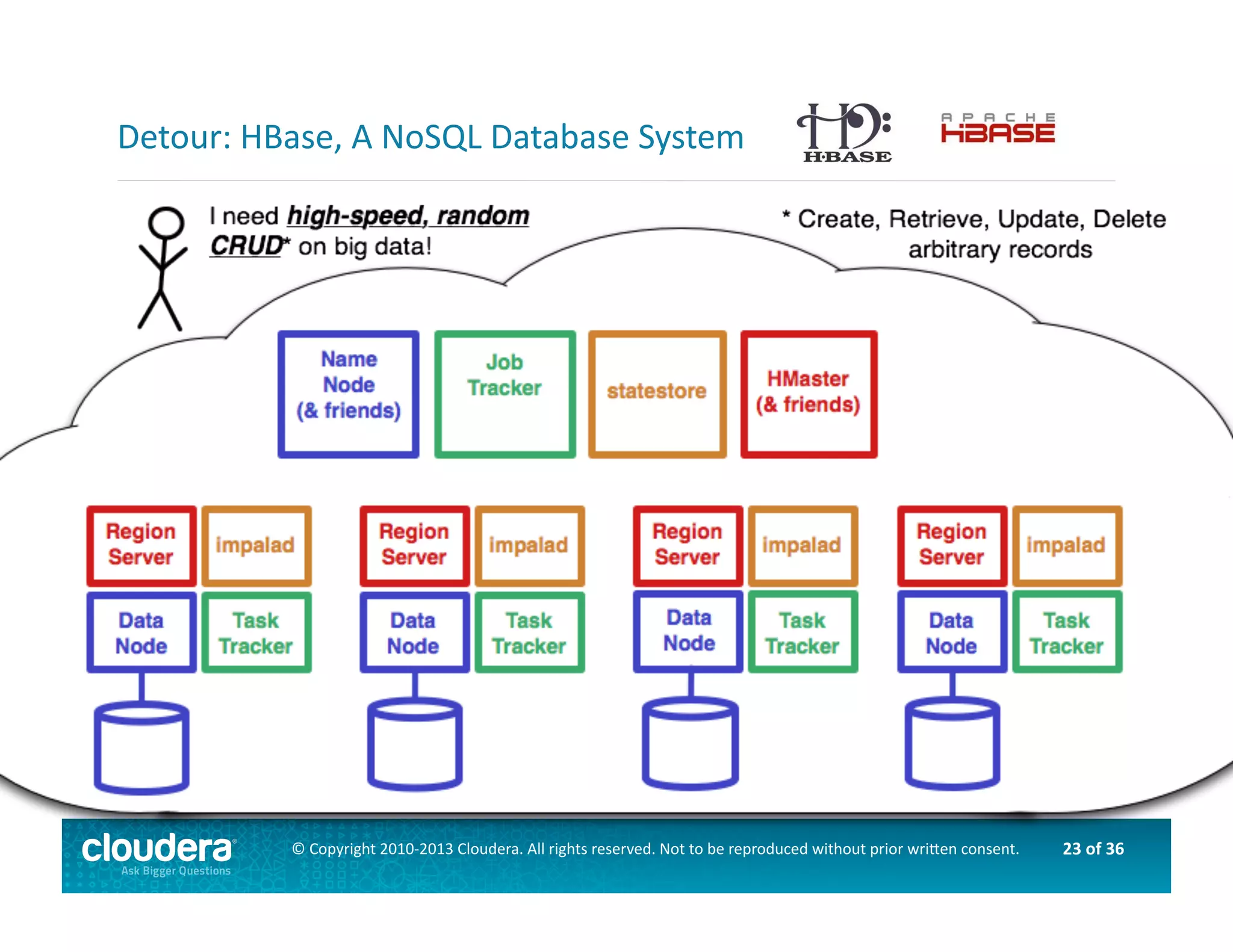

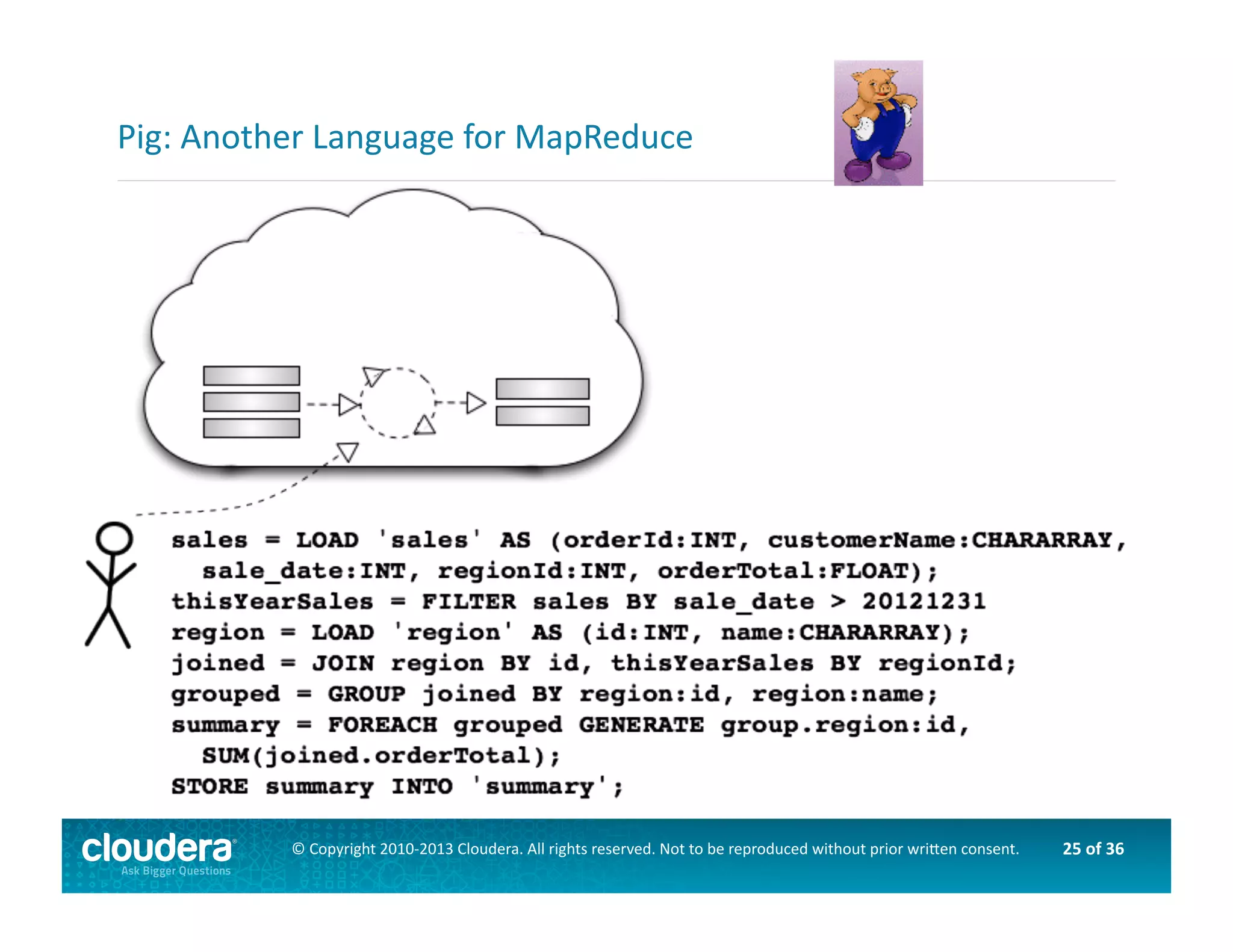

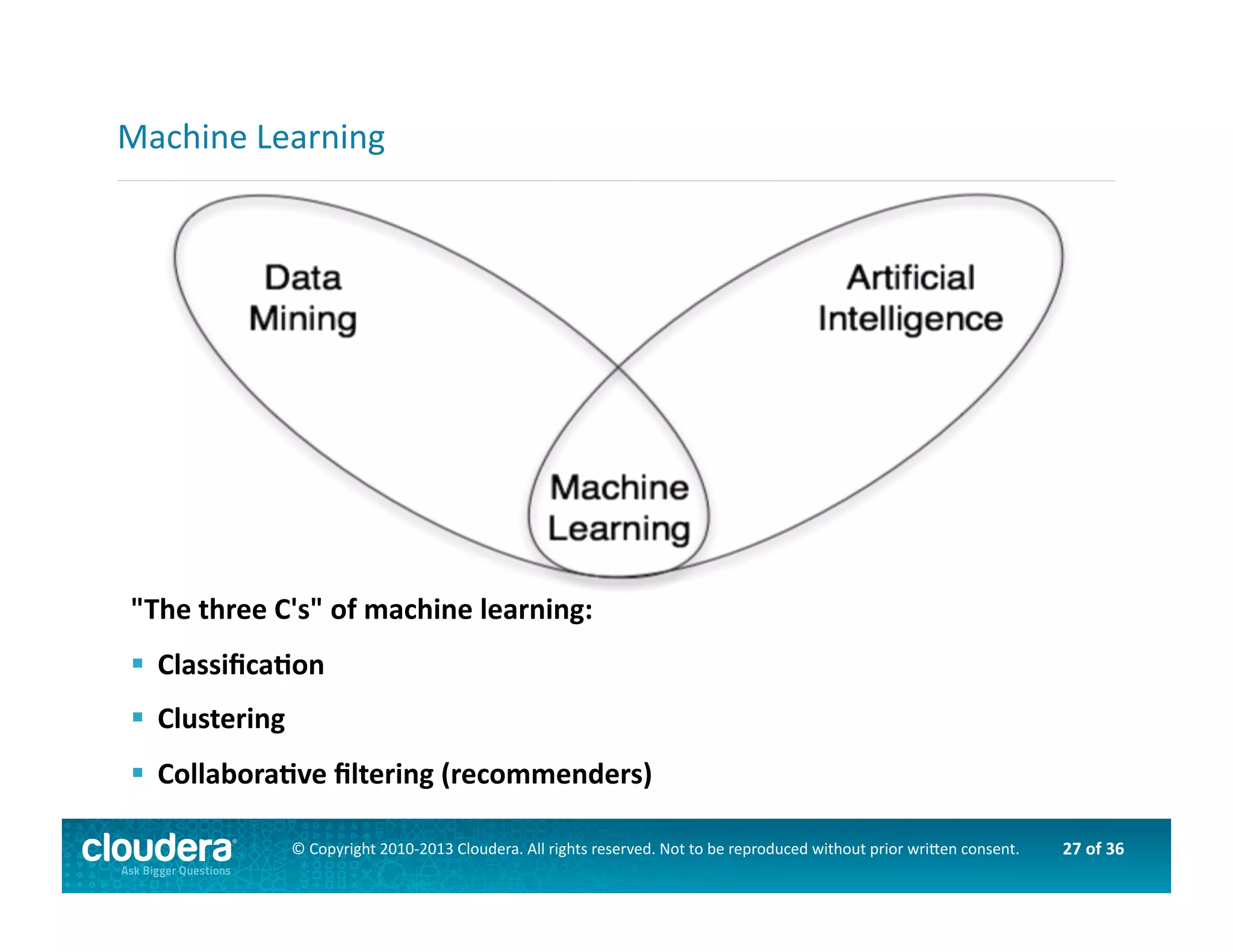

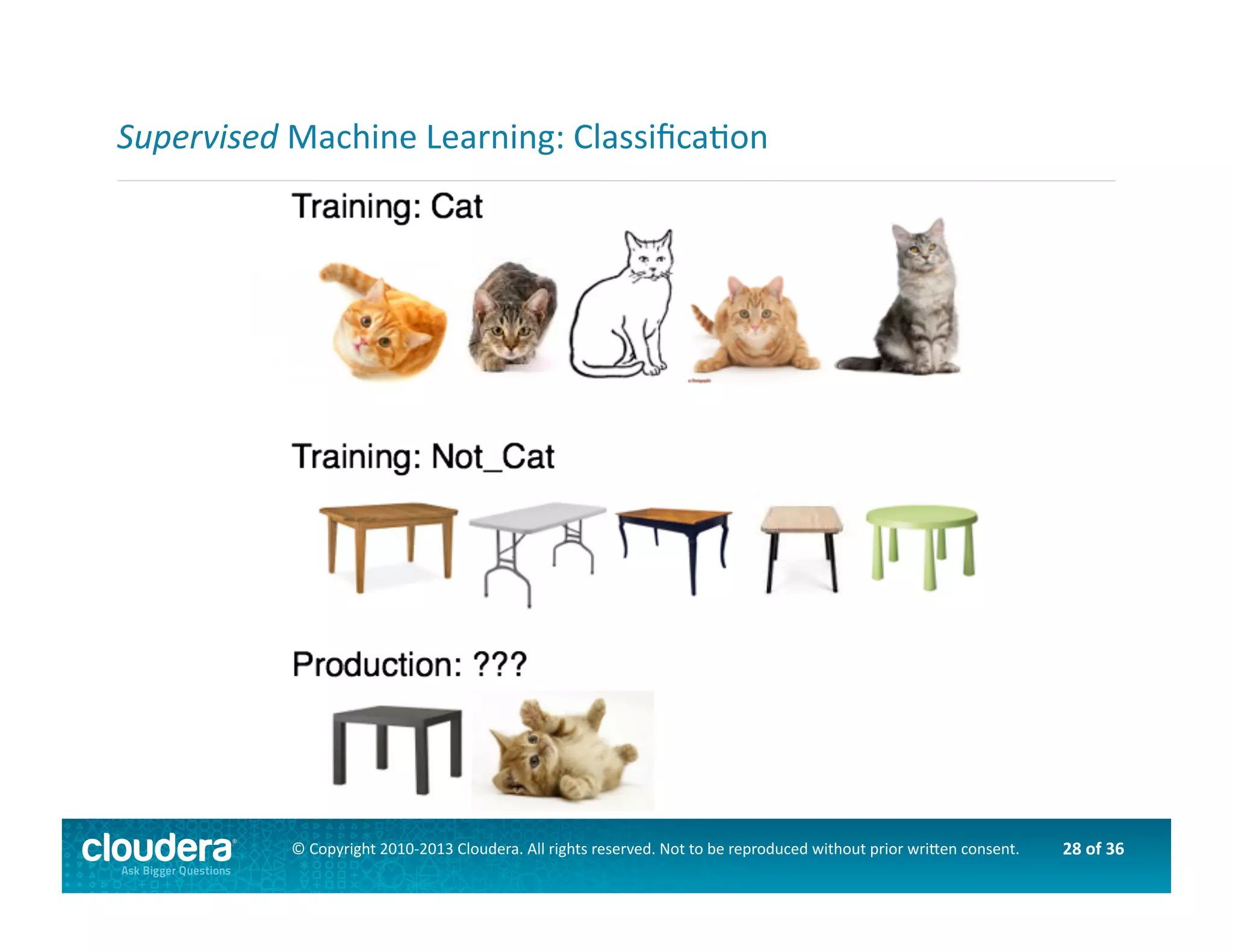

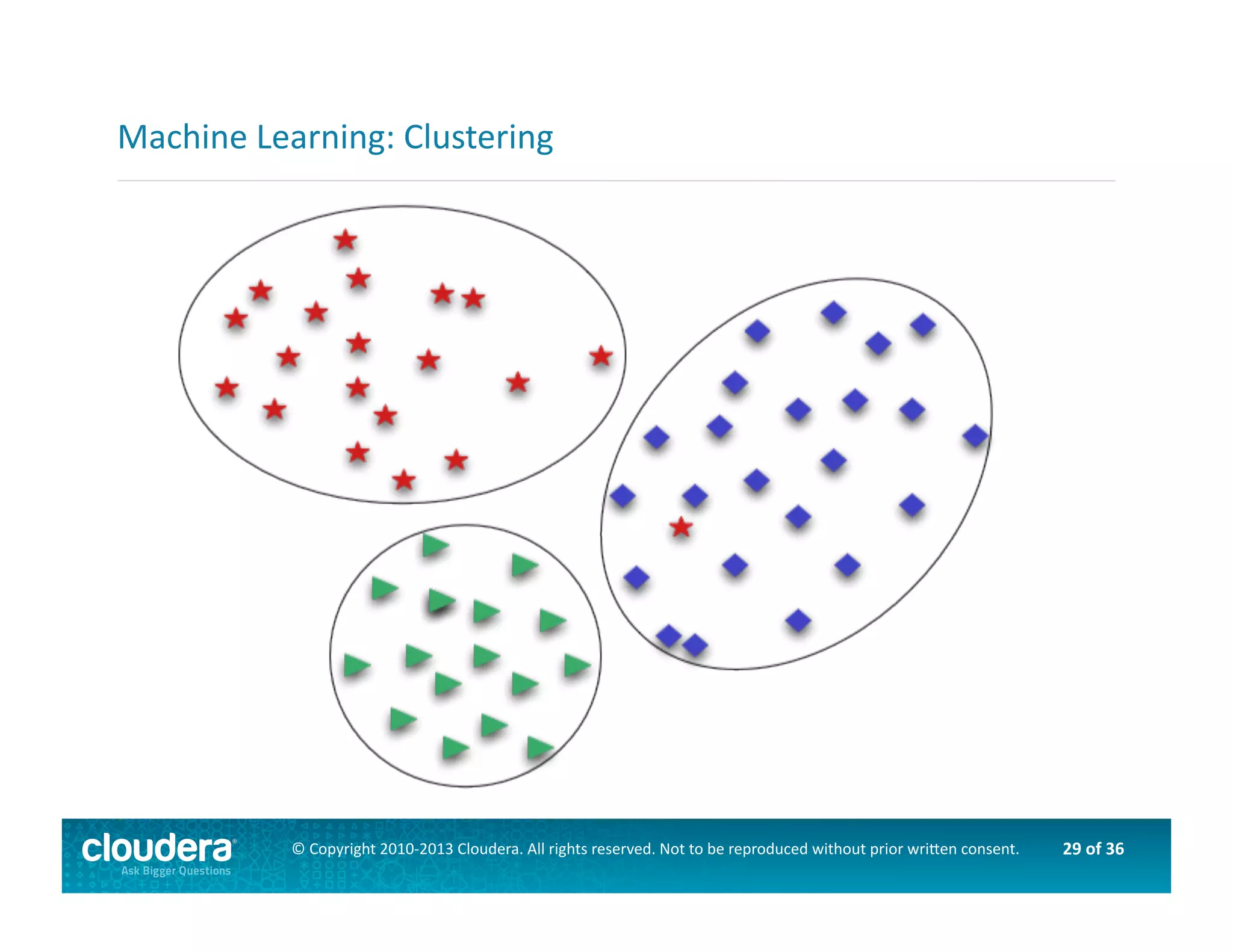

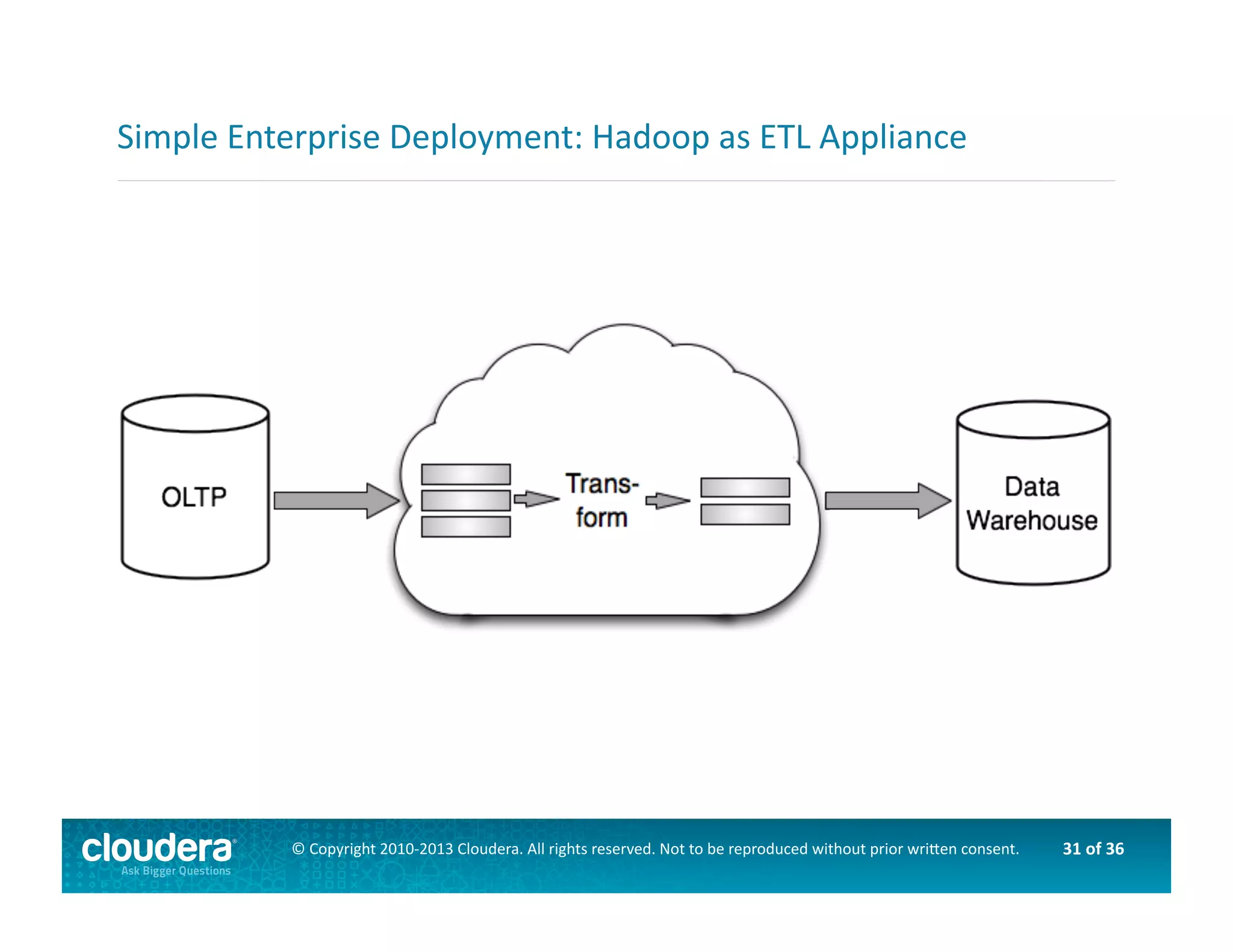

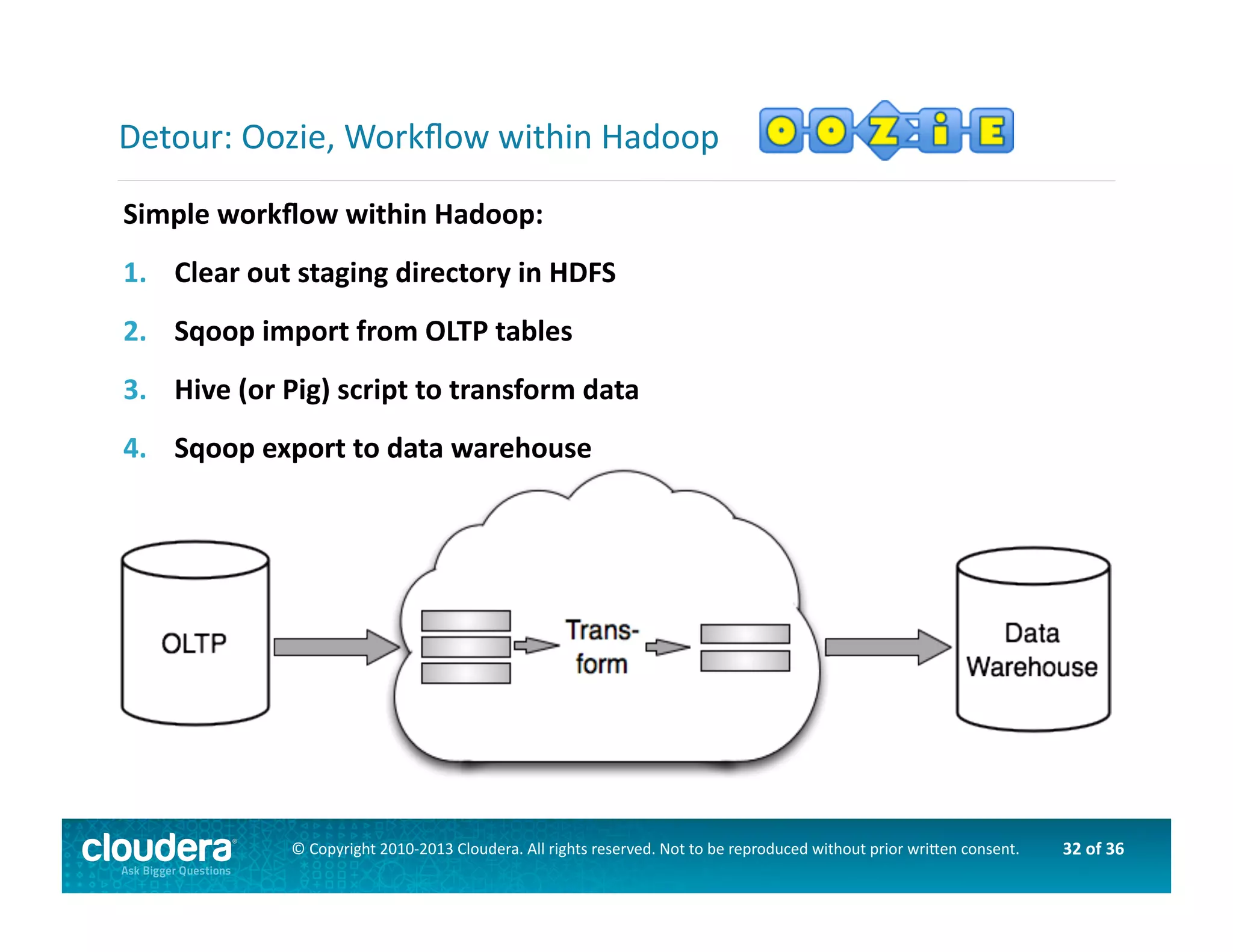

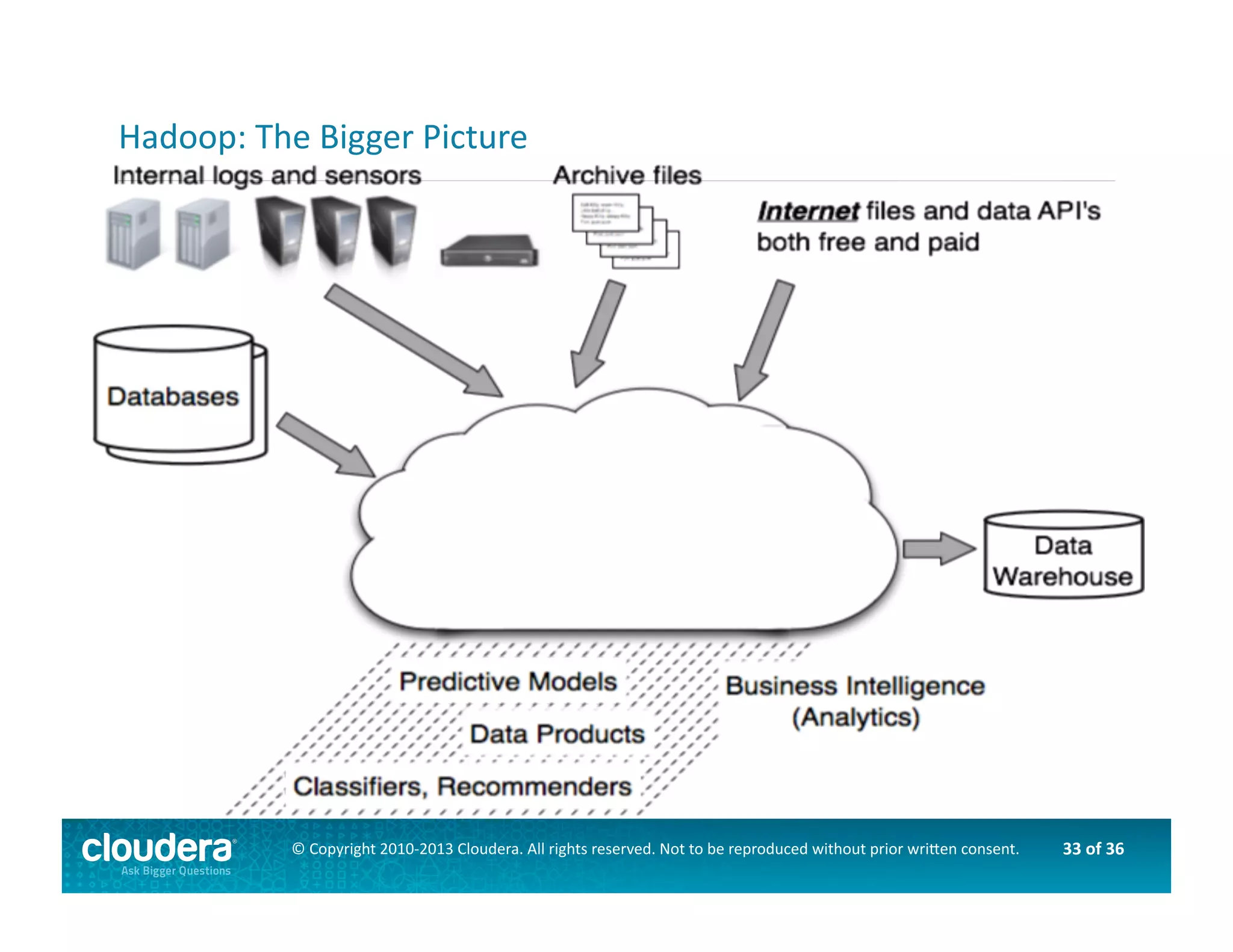

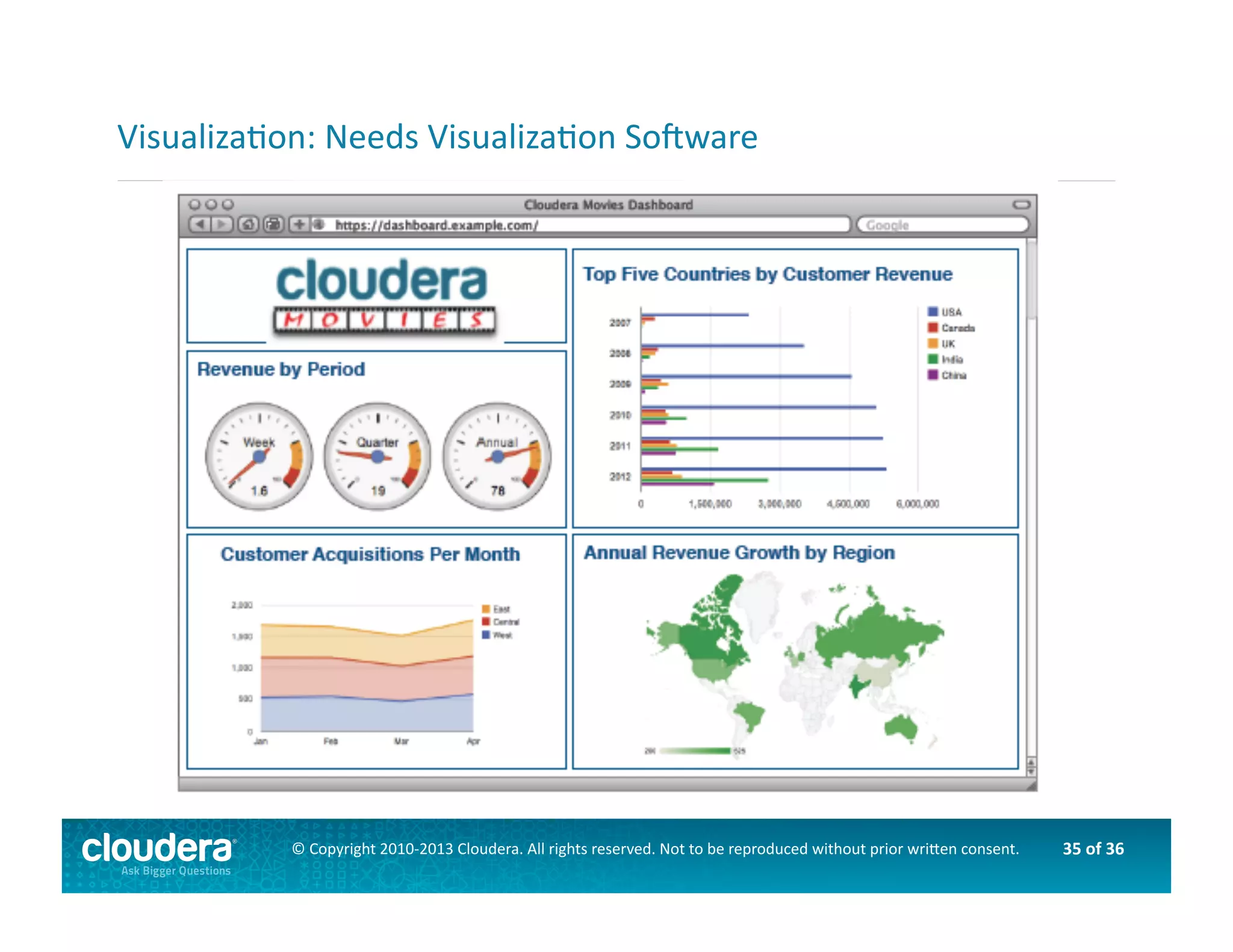

This document introduces data science with Hadoop. It covers core components such as HDFS and MapReduce, along with ecosystem tools such as Sqoop, Flume, Hive, and Mahout. It explains the strengths and weaknesses of Hadoop's core features and describes the role of data scientists in data wrangling, exploration, modeling, and product development. It also touches on the high-level programming languages and frameworks that extend Hadoop for data analysis.