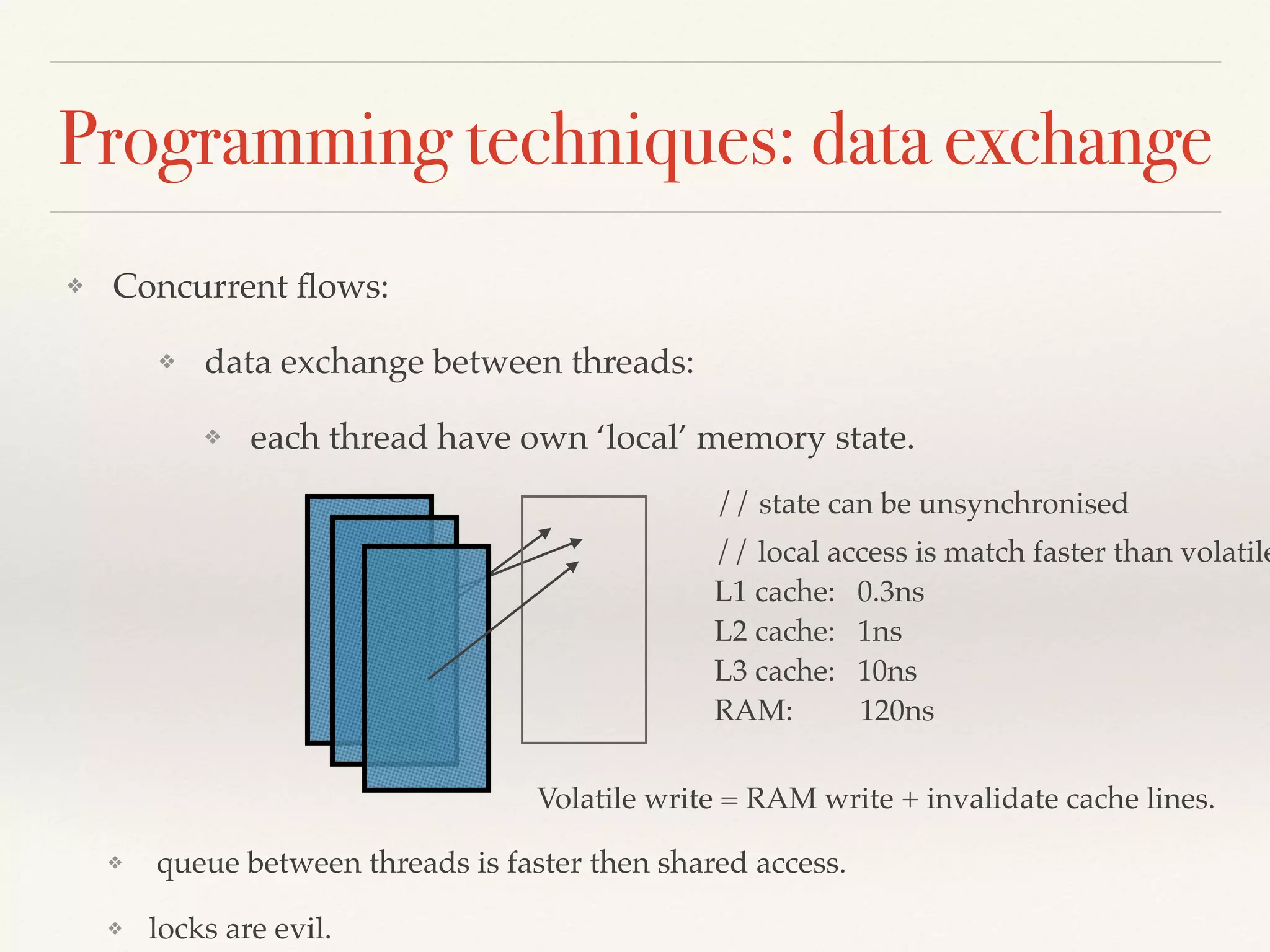

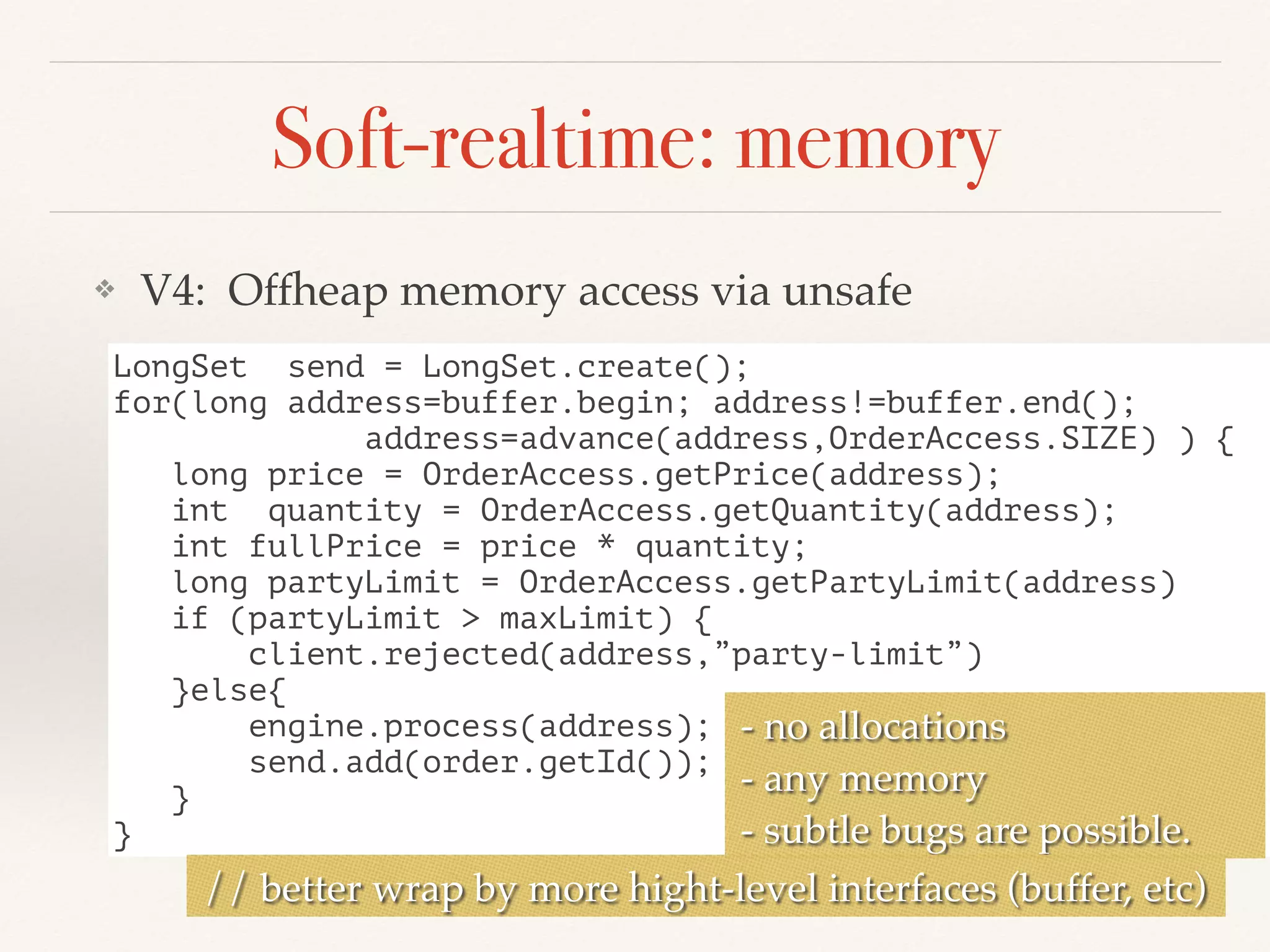

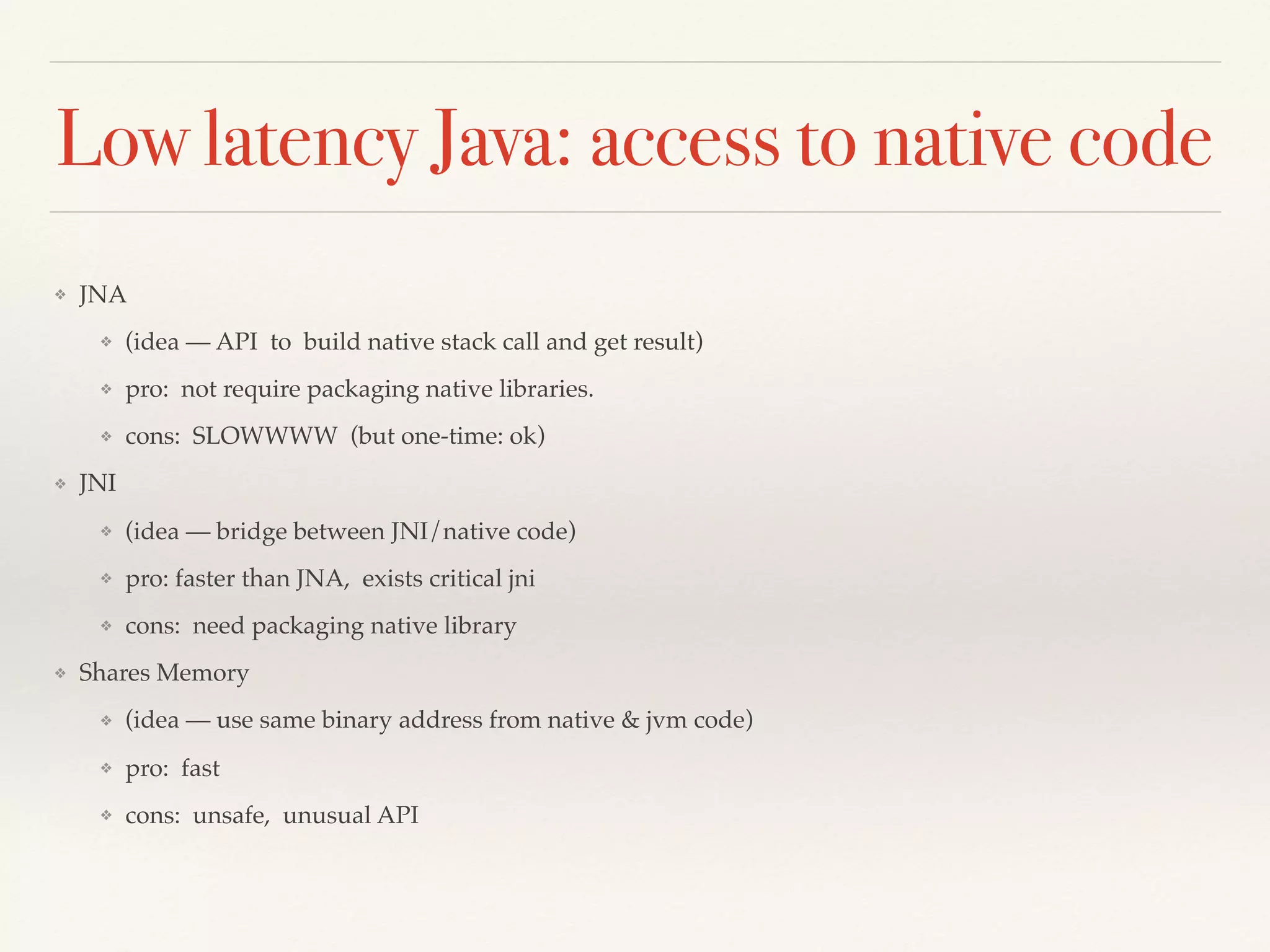

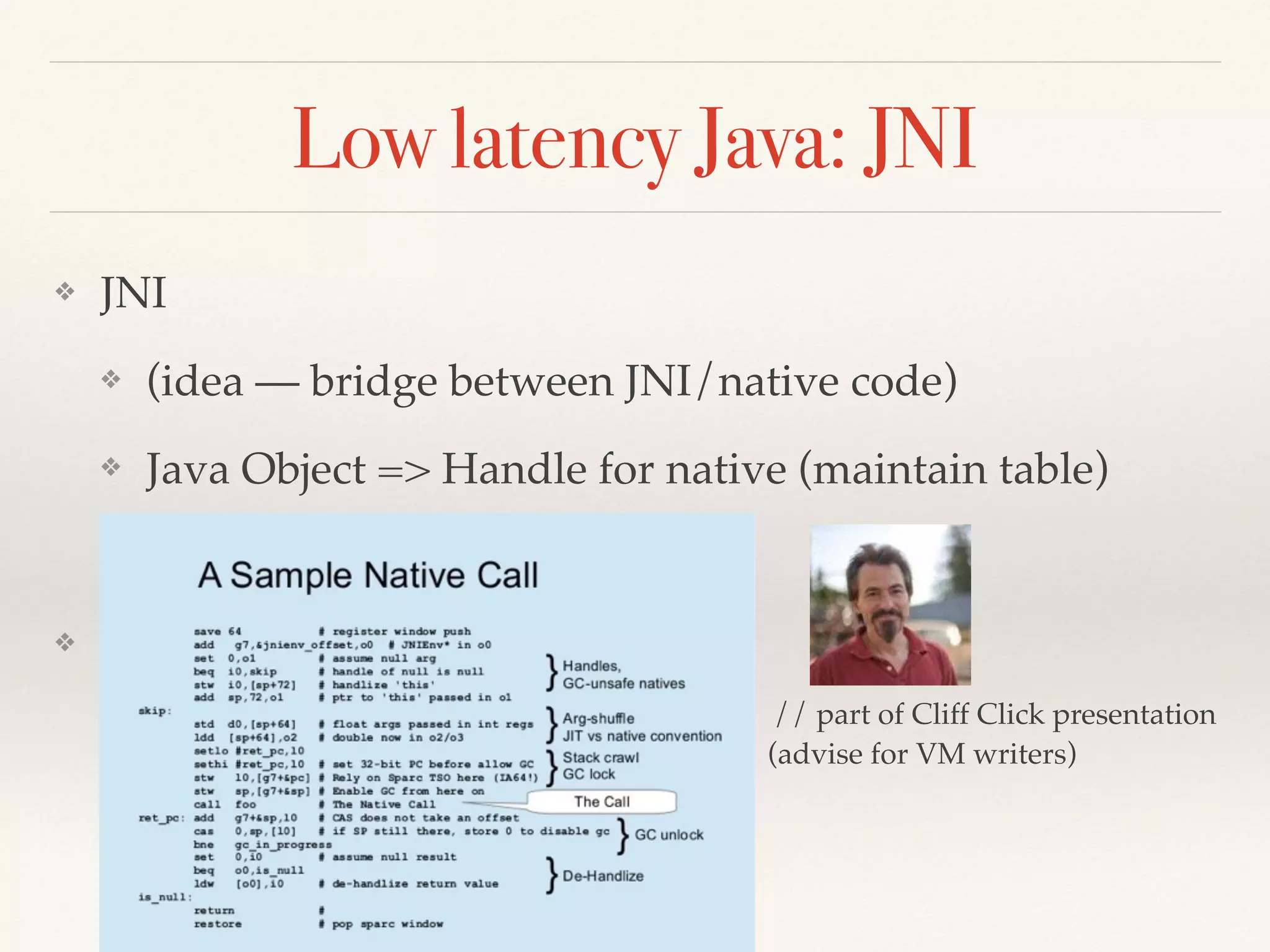

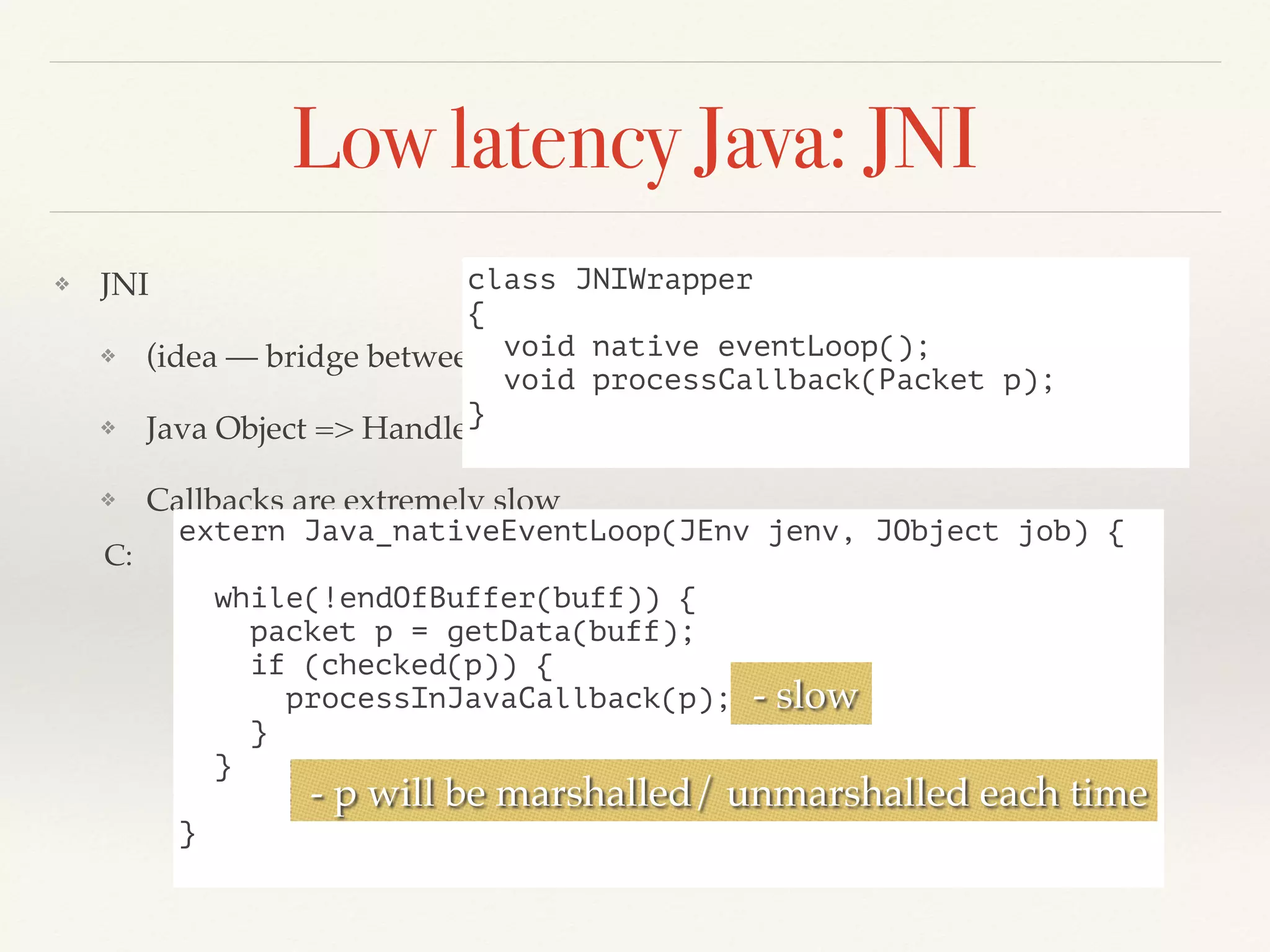

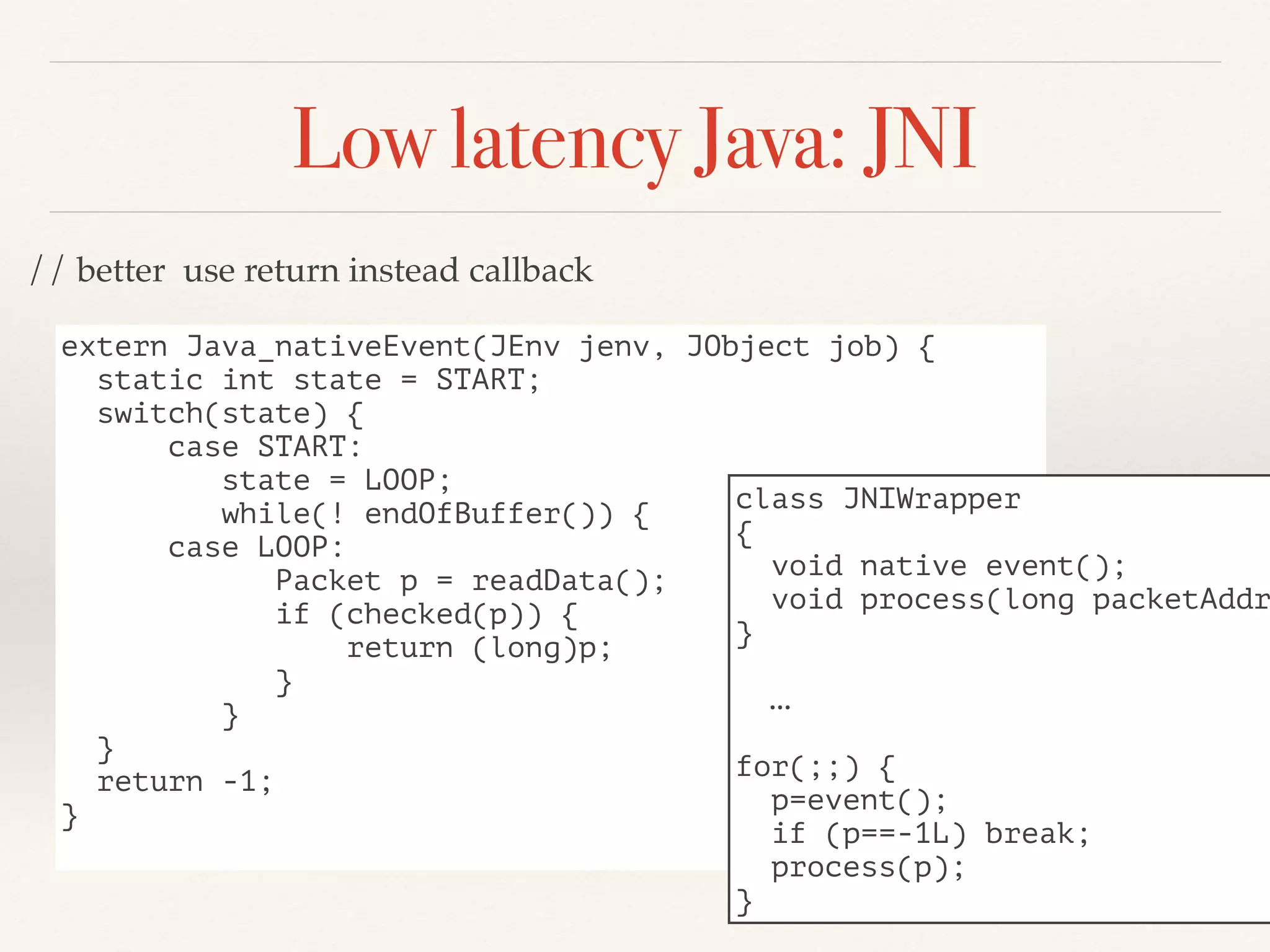

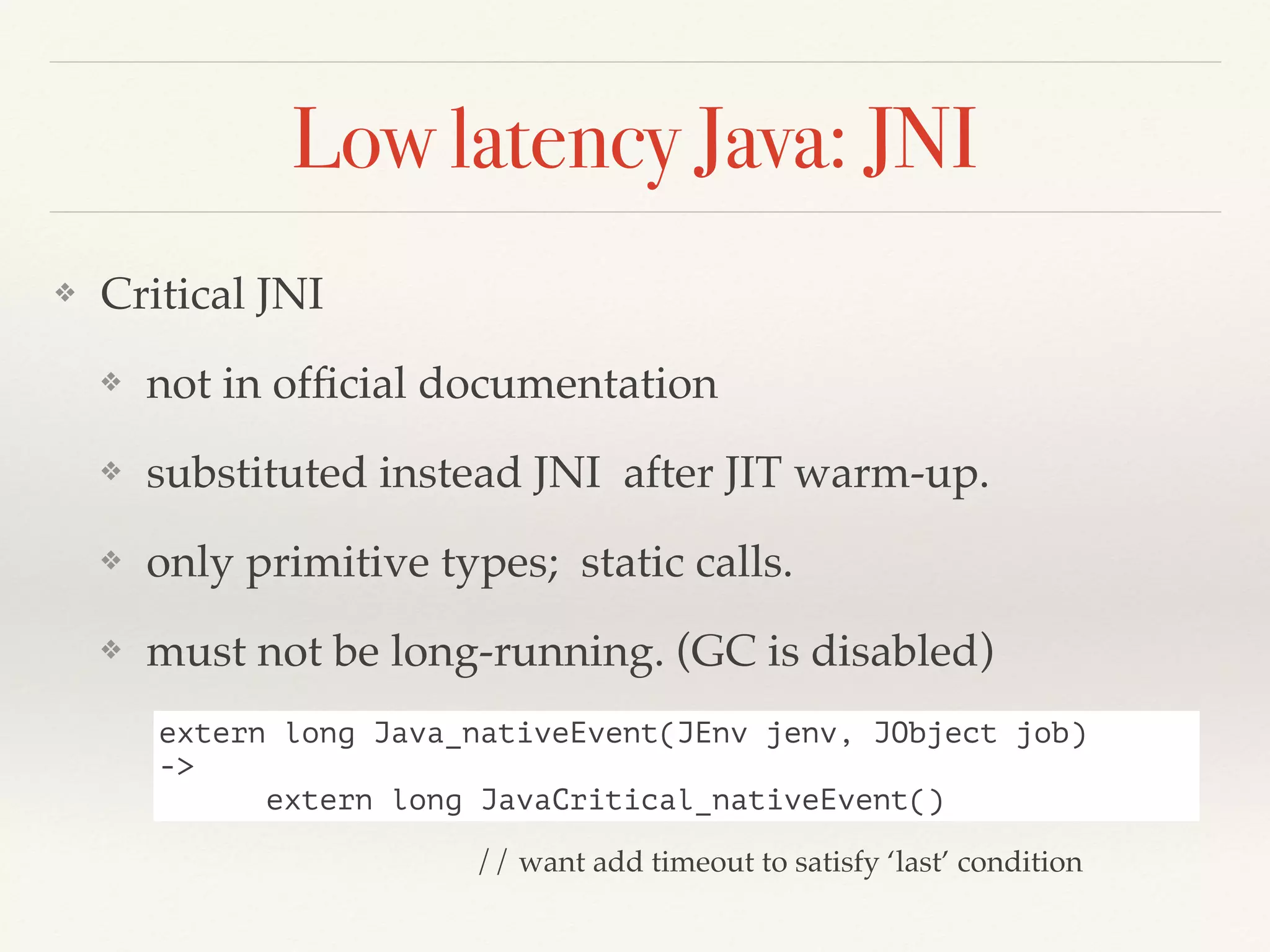

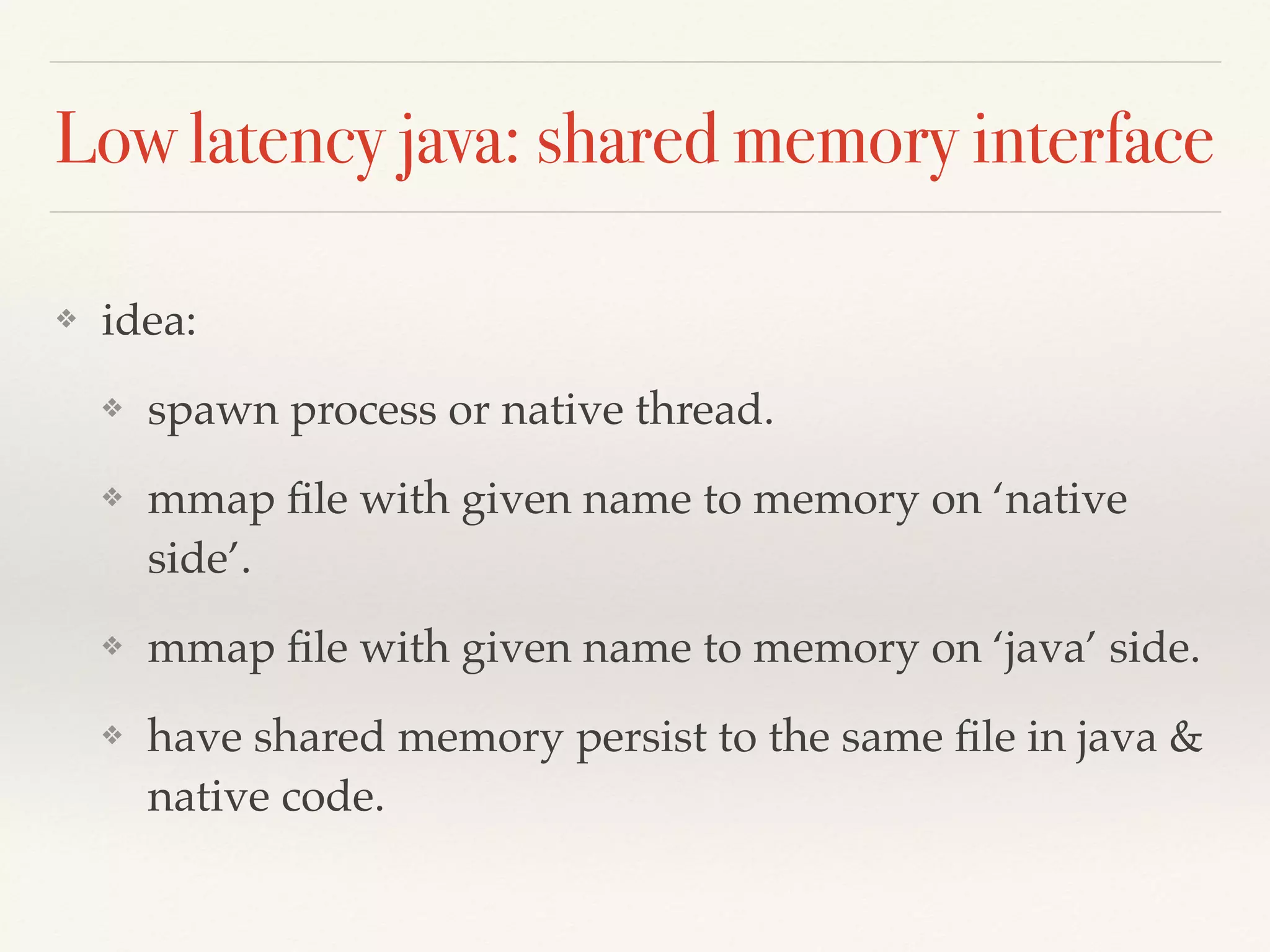

This document discusses programming techniques for low-latency Java applications. It begins by explaining what low-latency means and when it is needed. It then covers various techniques including: using concurrent flows and minimizing context switches; exchanging data between threads via queues instead of shared memory; preallocating objects to avoid allocations; and directly accessing serialized data instead of object instances. The document also discusses memory issues like garbage collection pauses and cache line contention. It covers alternatives for accessing native code like JNA, JNI, and shared memory. Critical JNI is presented as a faster option than regular JNI.

![Soft-realtime: why the JVM?

❖ Balance (99% ‘usual code’, 1% soft realtime)

❖ crossing JVM boundaries is expensive.

❖ don’t do this. [if you can]](https://image.slidesharecdn.com/ll2016-160331151644/75/Java-low-latency-applications-6-2048.jpg)

![Softrealtime & JVM: Programming techniques.

❖ Don’t guess, know.

❖ Measure

❖ profiling [not always practical];

❖ sampling (JVM option: -agentlib:hprof=cpu=samples, JDK 8 and earlier) [jvisualvm, jconsole, Flight Recorder]

❖ log safepoint / gc pauses

❖ Experiments

❖ benchmarks: http://openjdk.java.net/projects/code-tools/jmh/

❖ critical path: N2N flow.

❖ Know the details of how code is executed.](https://image.slidesharecdn.com/ll2016-160331151644/75/Java-low-latency-applications-7-2048.jpg)
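The "Measure" advice can be sketched with the standard library alone. Time each operation with `System.nanoTime()` and keep the samples in a preallocated array. Then report high percentiles rather than the mean, alongside accumulated GC time from `GarbageCollectorMXBean`.

`LatencyRecorder` and `LatencyDemo` are hypothetical names. For micro-benchmarks, JMH (linked above) remains the more rigorous tool. Safepoint and GC pauses can also be logged by the JVM itself, with `-Xlog:gc,safepoint` on JDK 9+ or `-XX:+PrintGCApplicationStoppedTime` on JDK 8.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.Arrays;

// Hypothetical helper: stores latency samples in a preallocated array,
// so recording a sample does not allocate.
final class LatencyRecorder {
    private final long[] samples;
    private int count;

    LatencyRecorder(int capacity) {
        samples = new long[capacity];
    }

    // Records one latency sample (nanoseconds); samples beyond capacity are dropped.
    void record(long nanos) {
        if (count < samples.length) {
            samples[count++] = nanos;
        }
    }

    int count() {
        return count;
    }

    // Nearest-rank percentile, p in (0, 100]; sorts a copy, so call it after the run.
    long percentile(double p) {
        if (count == 0) {
            throw new IllegalStateException("no samples recorded");
        }
        long[] sorted = Arrays.copyOf(samples, count);
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * count); // 1-based rank
        return sorted[Math.min(Math.max(rank, 1), count) - 1];
    }

    // Accumulated collection time (ms) over all collectors; undefined values (-1) are skipped.
    static long totalGcMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = gc.getCollectionTime();
            if (t > 0) {
                total += t;
            }
        }
        return total;
    }
}

class LatencyDemo {
    public static void main(String[] args) {
        LatencyRecorder rec = new LatencyRecorder(100_000);
        long gcBefore = LatencyRecorder.totalGcMillis();
        for (int i = 0; i < 100_000; i++) {
            long t0 = System.nanoTime();
            // ... operation under test goes here ...
            rec.record(System.nanoTime() - t0);
        }
        System.out.println("p50 = " + rec.percentile(50) + " ns, p99.9 = " + rec.percentile(99.9) + " ns");
        System.out.println("GC time during run: " + (LatencyRecorder.totalGcMillis() - gcBefore) + " ms");
    }
}
```

Tail percentiles (p99, p99.9) are usually what a soft-realtime budget is stated in. A mean hides exactly the GC and safepoint outliers the slide asks to log.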

![Programming techniques: Concurrent models

❖ Concurrent flows:

❖ minimise context switches:

❖ don’t switch [number of flows < number of processor cores].

❖ Java: main thread + service thread + listener thread [user threads: cores - 3];

❖ pin thread to core [thread affinity]

❖ via JNI [JNA]; OSS libraries exist [OpenHFT]](https://image.slidesharecdn.com/ll2016-160331151644/75/Java-low-latency-applications-8-2048.jpg)
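The thread budget above can be sketched in plain Java. Reserve three threads (main, service, listener) and size a fixed worker pool to `cores - 3`. Wait by busy-spinning rather than blocking, so a waiting thread is not descheduled.

`ThreadBudget` and its methods are hypothetical. `availableProcessors()` counts logical CPUs, hyperthreads included, which may not match the physical cores the slide has in mind. Pinning a thread to a core needs native code, for example OpenHFT's Java-Thread-Affinity library, and is not shown here.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BooleanSupplier;

// Hypothetical helper following the slide's split:
// main + service + listener threads, and (cores - 3) user threads.
final class ThreadBudget {
    static final int RESERVED_THREADS = 3; // main, service, listener

    // Worker threads left over for user flows; never fewer than one.
    static int userThreads(int cores) {
        return Math.max(1, cores - RESERVED_THREADS);
    }

    // Fixed-size pool: threads are created once and never grow past the budget.
    static ExecutorService newWorkerPool() {
        int n = userThreads(Runtime.getRuntime().availableProcessors());
        AtomicInteger seq = new AtomicInteger();
        ThreadFactory factory = task -> {
            Thread t = new Thread(task, "worker-" + seq.incrementAndGet());
            t.setDaemon(true);
            return t;
        };
        return Executors.newFixedThreadPool(n, factory);
    }

    // Busy-spins instead of blocking, so the waiting thread keeps its core
    // rather than being descheduled (trades CPU time for latency).
    static void spinUntil(BooleanSupplier ready) {
        while (!ready.getAsBoolean()) {
            Thread.onSpinWait(); // Java 9+ hint to the CPU, e.g. PAUSE on x86
        }
    }
}
```

Spinning only pays off when the number of spinning threads stays below the number of cores. Otherwise the OS switches them anyway, which is why the budget and the spin-wait belong together.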

![Programming techniques: memory issues

❖ GC

❖ young generation (relatively short)

❖ full.

❖ [stop-the-world], pause grows with heap size

❖ Contention

❖ flushing cache lines is expensive](https://image.slidesharecdn.com/ll2016-160331151644/75/Java-low-latency-applications-10-2048.jpg)
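The "Contention" point covers false sharing among other cases. When two cores write to different variables that share one cache line, each write invalidates the other core's copy. A common mitigation, sketched below, pads a hot field with unused longs. This is the same idea as `rpadding`/`wpadding` in the `BufferAccess` struct later in the deck.

The class names are hypothetical, and a 64-byte line is an assumption that is typical on x86. The layout relies on HotSpot placing superclass fields before subclass fields, which is common practice but not guaranteed by the JVM specification. The JDK also has a `@Contended` annotation (`sun.misc.Contended` in JDK 8, `jdk.internal.vm.annotation.Contended` later). It only takes effect in user code with `-XX:-RestrictContended`.

```java
// Padding via class hierarchy: HotSpot lays out superclass fields before subclass
// fields, so 'value' has 7 unused longs (56 bytes) on each side.
// A common trick, not a layout guaranteed by the JVM specification.
abstract class LhsPadding {
    long p1, p2, p3, p4, p5, p6, p7;
}

abstract class CounterValue extends LhsPadding {
    volatile long value;
}

final class PaddedCounter extends CounterValue {
    long p9, p10, p11, p12, p13, p14, p15;

    long get() {
        return value;
    }

    // Single-writer increment: a read plus a volatile write, not an atomic read-modify-write.
    void increment() {
        value = value + 1;
    }
}

final class FalseSharingDemo {
    // Two threads, each owning one counter. Without padding, both counters could
    // share one cache line, and every write would invalidate the other core's copy.
    static void runPair(PaddedCounter a, PaddedCounter b, int iterations) throws InterruptedException {
        Thread ta = new Thread(() -> { for (int i = 0; i < iterations; i++) a.increment(); });
        Thread tb = new Thread(() -> { for (int i = 0; i < iterations; i++) b.increment(); });
        ta.start();
        tb.start();
        ta.join();
        tb.join();
    }
}
```

The counts are correct with or without padding; padding only changes how fast the two writers run. Comparing against an unpadded variant under JMH is the way to see the effect on a given machine.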

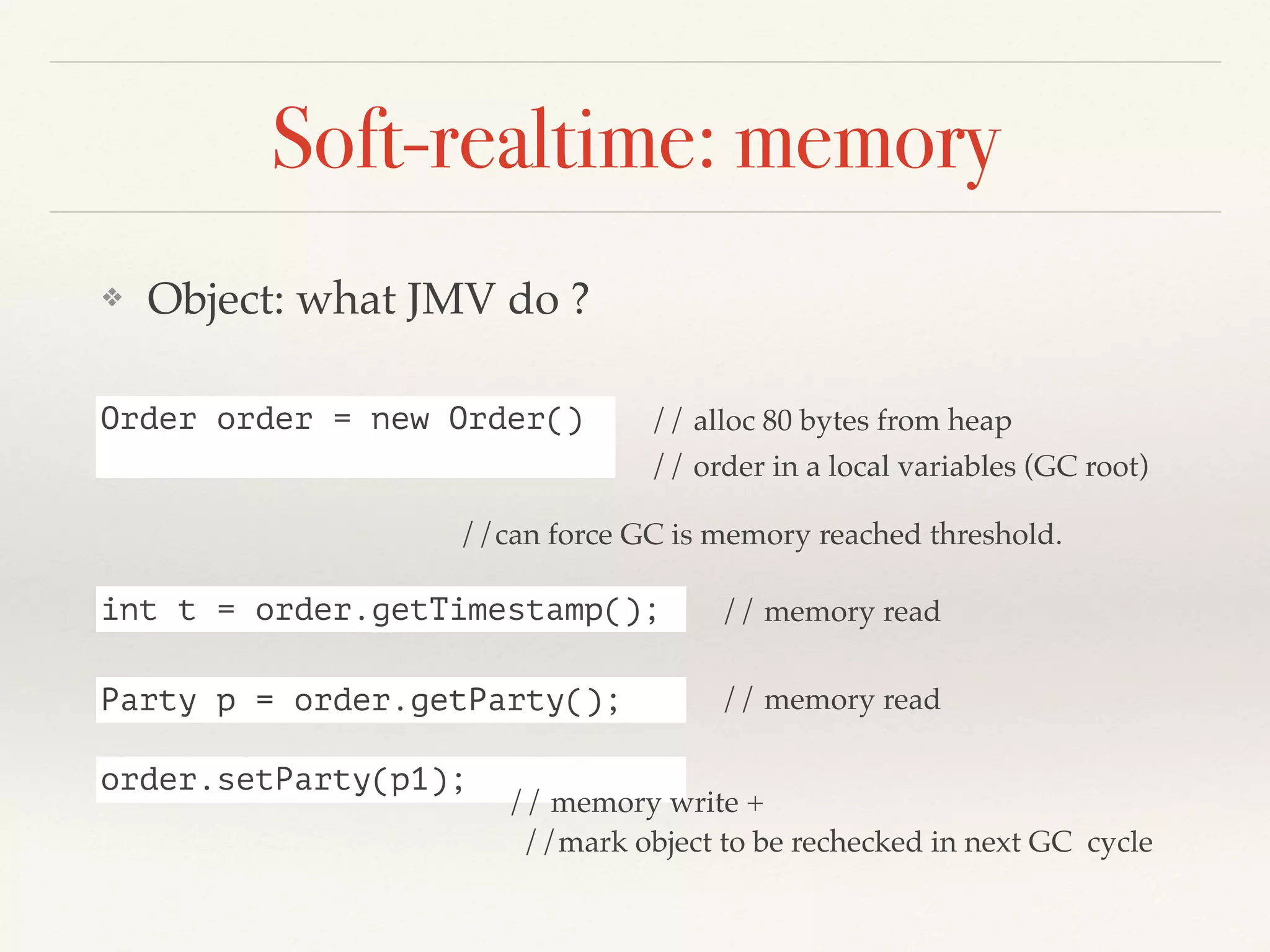

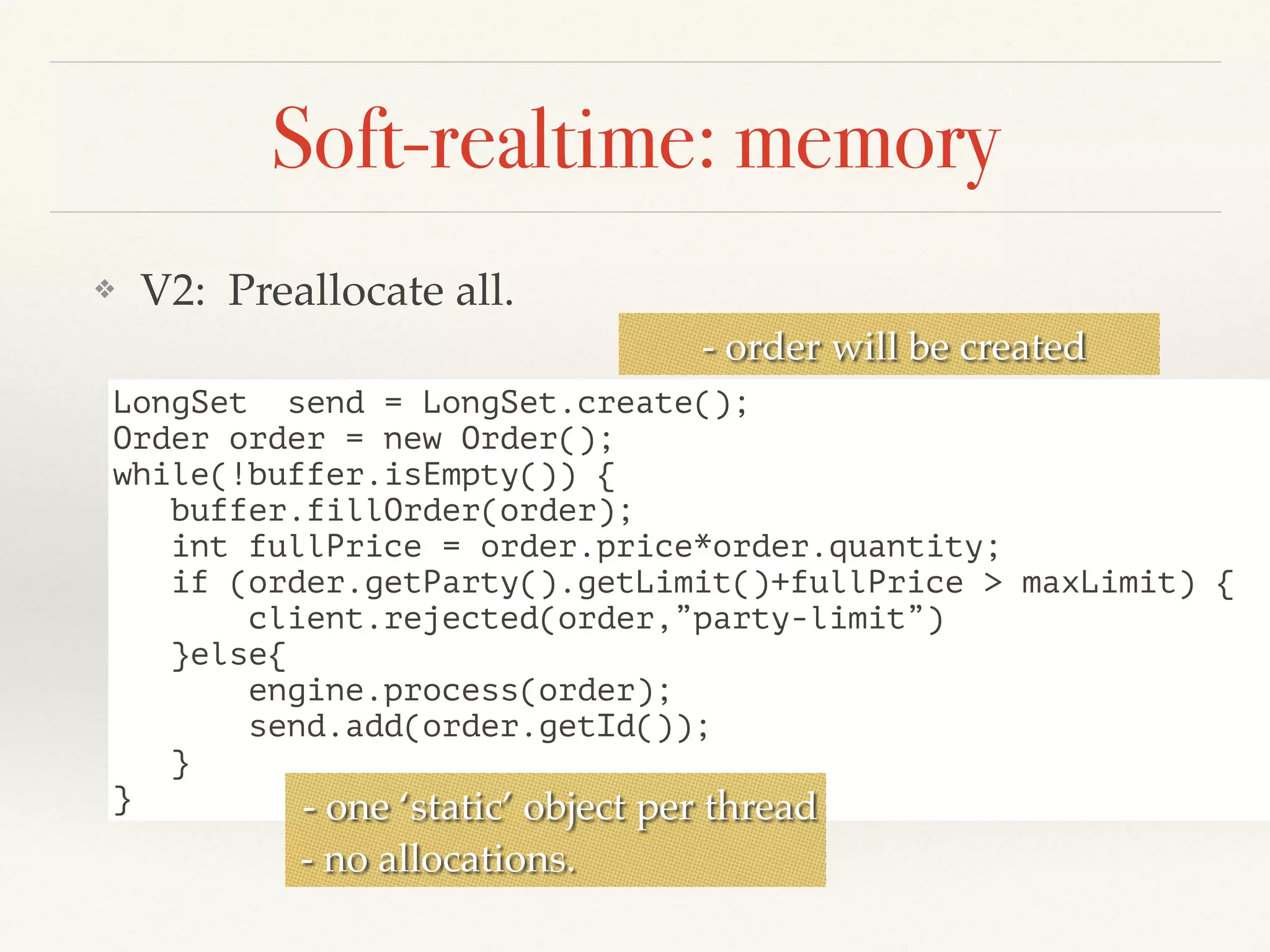

![Soft-realtime: memory

❖ Object: what is a Java object for the JVM?

class Order { long id; int timestamp; String symbol; long price; int quantity; Side side; boolean limit; Party party; Venue venue; }

Example values: t, “APPL”, p, q, Buy/Sell, Owner, Venue.

Order instance layout (bits): header [64], class [32|64], id [64], timestamp [32], symbol [32|64], price [64], quantity [32], side [32|64], limit [32], party [32|64], venue [32|64], … padding [0-63].

Referenced objects, each with its own header + class [128]: data [64] “APPL”; ………..; ………..

Reference fields hold pointers (direct or compressed); direct = address in memory.](https://image.slidesharecdn.com/ll2016-160331151644/75/Java-low-latency-applications-12-2048.jpg)
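The layout above shows the cost of an `Order` graph. Each order carries its own header and class word, and its symbol, side, party and venue are reached through pointers to further objects with headers of their own.

One alternative is to skip the object graph and read fields directly from a flat buffer through a reusable "flyweight" view. A sketch follows, with several assumptions: the offsets, the 48-byte record size, `'B'`/`'S'` for the side, int ids for party and venue, and an 8-byte ASCII symbol are all choices made for this example. `OrderFlyweight` is a hypothetical name.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical flyweight over fixed-size Order records stored back to back in a ByteBuffer.
// No per-order headers or pointers: side is a byte, party/venue are int ids,
// symbol is 8 ASCII bytes.
final class OrderFlyweight {
    static final int ID = 0, PRICE = 8, TIMESTAMP = 16, QUANTITY = 20,
            PARTY_ID = 24, VENUE_ID = 28, SYMBOL = 32, SYMBOL_LEN = 8,
            SIDE = 40, LIMIT = 41, RECORD_SIZE = 48; // byte offsets; 42..47 unused

    private ByteBuffer buf;
    private int base;

    // Re-points this view at record 'index'; allocates nothing.
    OrderFlyweight wrap(ByteBuffer buf, int index) {
        this.buf = buf;
        this.base = index * RECORD_SIZE;
        return this;
    }

    long id() { return buf.getLong(base + ID); }
    OrderFlyweight id(long v) { buf.putLong(base + ID, v); return this; }

    long price() { return buf.getLong(base + PRICE); }
    OrderFlyweight price(long v) { buf.putLong(base + PRICE, v); return this; }

    int timestamp() { return buf.getInt(base + TIMESTAMP); }
    OrderFlyweight timestamp(int v) { buf.putInt(base + TIMESTAMP, v); return this; }

    int quantity() { return buf.getInt(base + QUANTITY); }
    OrderFlyweight quantity(int v) { buf.putInt(base + QUANTITY, v); return this; }

    int partyId() { return buf.getInt(base + PARTY_ID); }
    OrderFlyweight partyId(int v) { buf.putInt(base + PARTY_ID, v); return this; }

    int venueId() { return buf.getInt(base + VENUE_ID); }
    OrderFlyweight venueId(int v) { buf.putInt(base + VENUE_ID, v); return this; }

    char side() { return (char) buf.get(base + SIDE); } // 'B' or 'S'
    OrderFlyweight side(char c) { buf.put(base + SIDE, (byte) c); return this; }

    boolean limit() { return buf.get(base + LIMIT) != 0; }
    OrderFlyweight limit(boolean v) { buf.put(base + LIMIT, (byte) (v ? 1 : 0)); return this; }

    // Writes up to 8 ASCII bytes, zero-padded (getBytes allocates; acceptable for a sketch).
    OrderFlyweight symbol(String s) {
        byte[] b = s.getBytes(StandardCharsets.US_ASCII);
        if (b.length > SYMBOL_LEN) throw new IllegalArgumentException("symbol too long: " + s);
        for (int i = 0; i < SYMBOL_LEN; i++) {
            buf.put(base + SYMBOL + i, i < b.length ? b[i] : (byte) 0);
        }
        return this;
    }

    // Decodes the symbol into a new String (allocates; a hot path would compare bytes instead).
    String symbol() {
        StringBuilder sb = new StringBuilder(SYMBOL_LEN);
        for (int i = 0; i < SYMBOL_LEN; i++) {
            byte c = buf.get(base + SYMBOL + i);
            if (c == 0) break;
            sb.append((char) c);
        }
        return sb.toString();
    }
}
```

A single `OrderFlyweight` can walk millions of records without creating garbage. The buffer can also be direct or memory-mapped, so the same bytes are readable from native code. The trade-off is the verbose accessor API.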

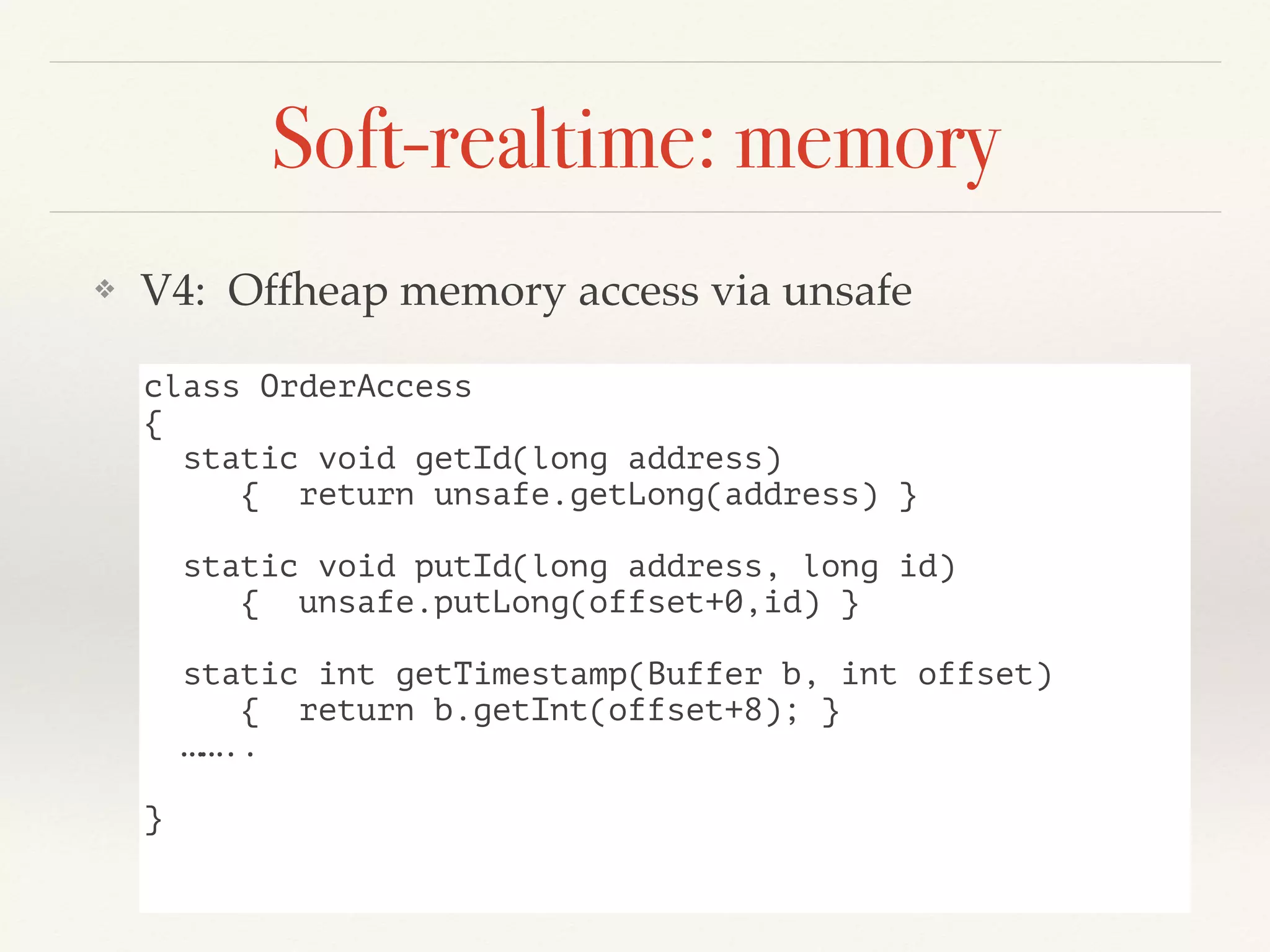

![Soft-realtime: memory

❖ V3, V4 Problem: Verbose API

- bytecode transformation from more convenient API

- Metaprogramming within high-level JVM languages.

http://scala-miniboxing.org/ildl/

https://github.com/densh/scala-offheap

- Integration with unmanaged languages

- JVM with time-limited GC pauses. [Azul Systems]](https://image.slidesharecdn.com/ll2016-160331151644/75/Java-low-latency-applications-23-2048.jpg)

![Memory access API in next JVM versions

❖ VarHandles (like MethodHandles)

❖ inject custom logic for variable access

❖ JEP 193 http://openjdk.java.net/jeps/193

❖ Value objects.

❖ can be located on stack

❖ JEP 169. http://openjdk.java.net/jeps/169

❖ Custom intrinsics [Project Panama]

❖ insert assembler instructions into JIT code.

❖ http://openjdk.java.net/projects/panama/

❖ JEP 191. http://openjdk.java.net/jeps/191

❖ ObjectLayout [Arrays 2.0]

❖ better contention

❖ http://objectlayout.github.io/ObjectLayout/](https://image.slidesharecdn.com/ll2016-160331151644/75/Java-low-latency-applications-24-2048.jpg)
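Of the items above, VarHandles did ship, in Java 9 as `java.lang.invoke.VarHandle` (JEP 193). A minimal sketch follows; `VarHandleSequence` is a hypothetical name. The field is declared plain, and each call site picks its memory ordering: acquire/release, or an atomic compare-and-set. Getting these orderings used to require `sun.misc.Unsafe` or `Atomic*FieldUpdater`.

```java
import java.lang.invoke.MethodHandles;
import java.lang.invoke.VarHandle;

// Sketch: a counter whose plain field is accessed through a VarHandle (JEP 193, Java 9+).
// Each call site chooses its own memory ordering instead of the field declaring it.
final class VarHandleSequence {
    private long value; // plain field; ordering comes from the VarHandle access mode used

    private static final VarHandle VALUE;

    static {
        try {
            VALUE = MethodHandles.lookup().findVarHandle(VarHandleSequence.class, "value", long.class);
        } catch (ReflectiveOperationException e) {
            throw new ExceptionInInitializerError(e);
        }
    }

    long getAcquire() {
        return (long) VALUE.getAcquire(this);
    }

    void setRelease(long v) {
        VALUE.setRelease(this, v);
    }

    boolean compareAndSet(long expected, long next) {
        return VALUE.compareAndSet(this, expected, next);
    }

    long getAndAdd(long delta) {
        return (long) VALUE.getAndAdd(this, delta);
    }
}
```

A single-writer sequence would typically publish with `setRelease` while readers poll with `getAcquire`. `compareAndSet` and `getAndAdd` are only needed when several threads update the same value.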

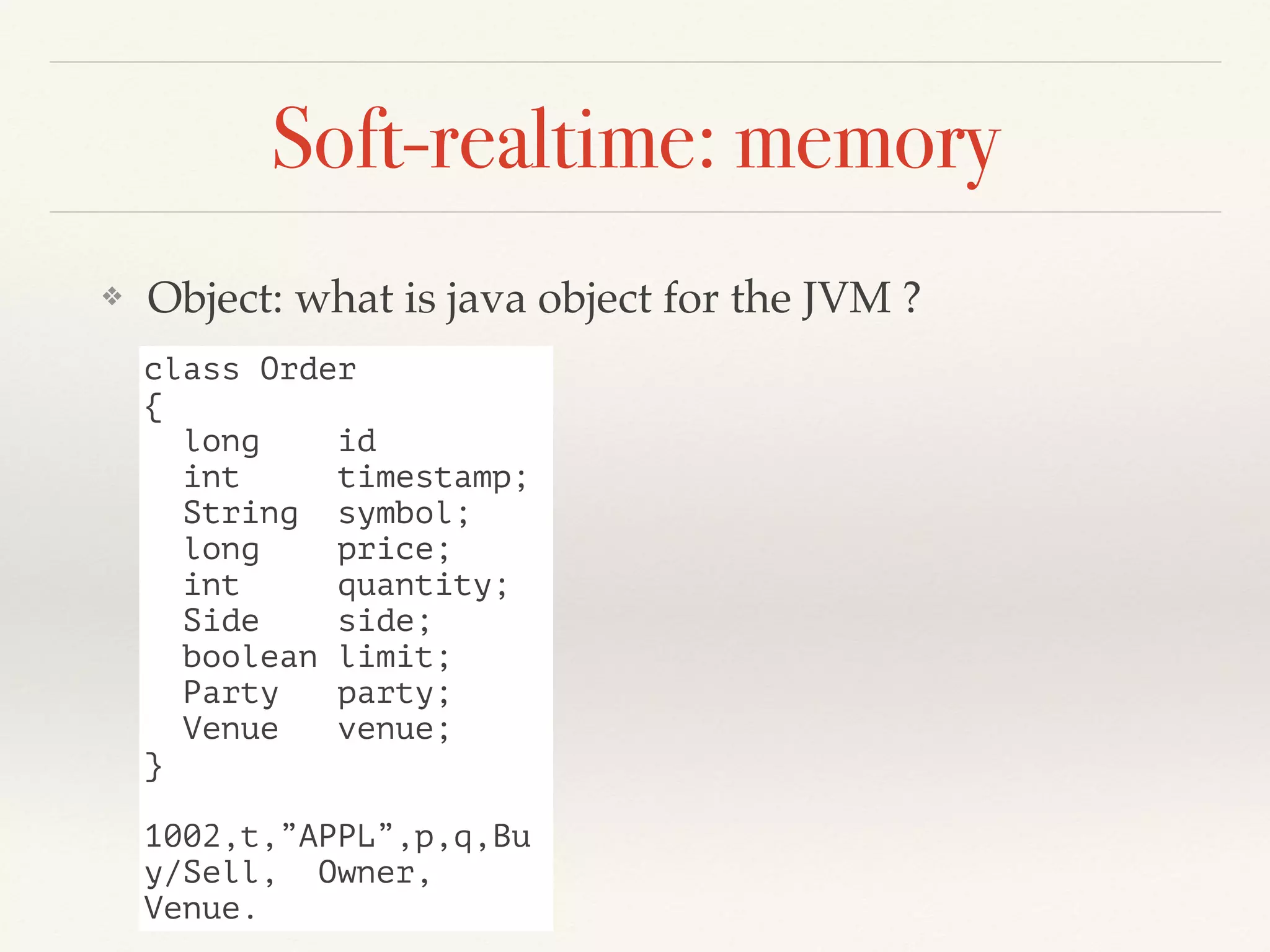

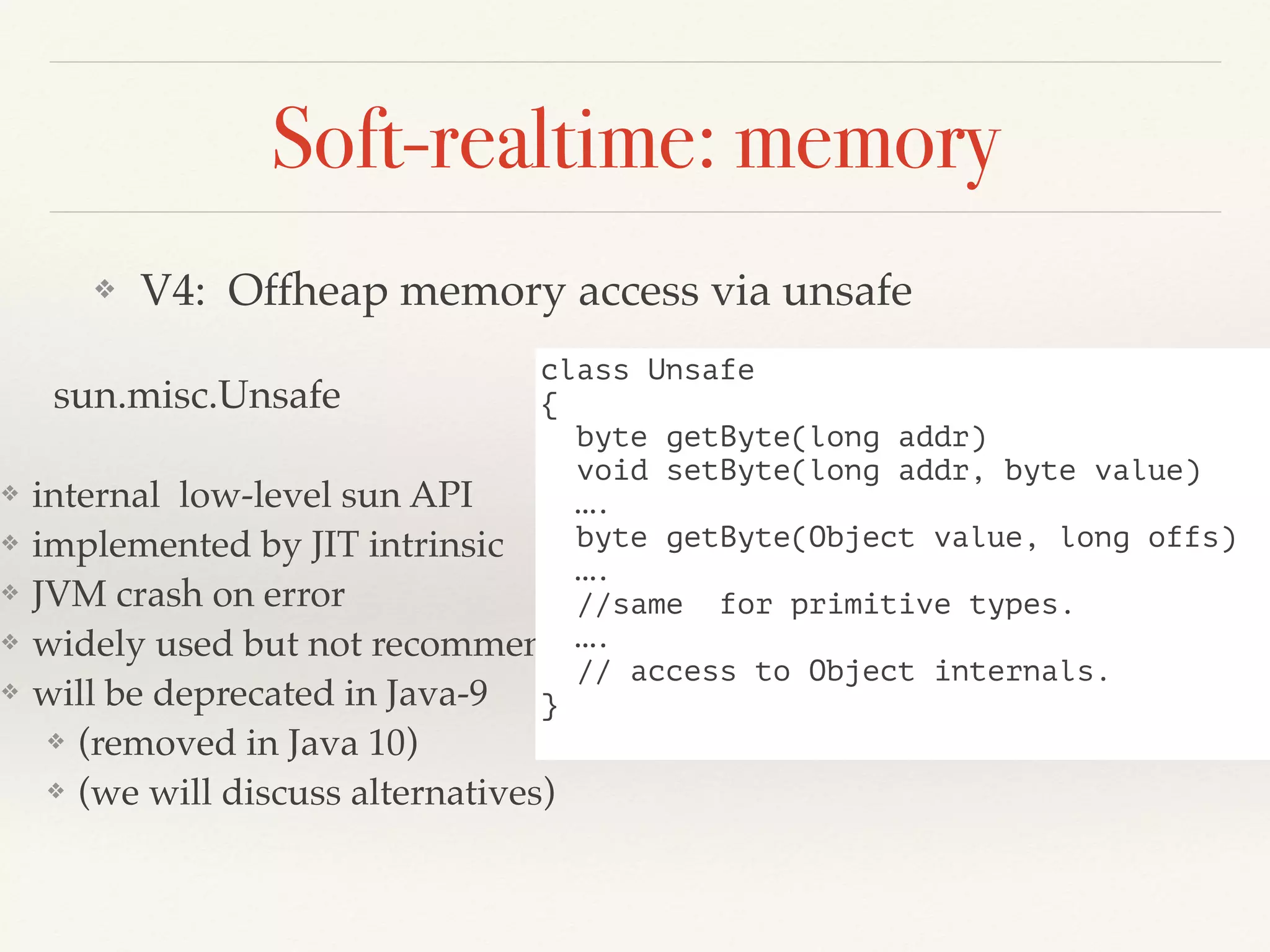

![Low latency Java/Native code data exchange

Buffer space with a shared access structure at its base address.

C:

struct BufferAccess
{
    volatile long readIndex;
    long rpadding[7];
    volatile long writeIndex;
    long wpadding[7];
};

Java (example):

interface BufferAccessAccess
{
    long getReadAddress(long baseAddr);
    void setReadAddress(long baseAddr, long readAddr);
    long getWriteAddress(long baseAddr);
}](https://image.slidesharecdn.com/ll2016-160331151644/75/Java-low-latency-applications-31-2048.jpg)
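The slide's Java side works with a raw base address, which suggests `sun.misc.Unsafe`-style access. The same header can be sketched with public APIs only: view it through `MethodHandles.byteBufferViewVarHandle` and use acquire/release accesses. The offsets follow from the C struct on an LP64 platform: `readIndex` at 0, `writeIndex` at 64 (8 bytes + 7 * 8 bytes of padding), for a 128-byte header.

`BufferAccessView` and its method names are hypothetical. In a real Java/native exchange, the buffer would come from shared native memory, for example a `MappedByteBuffer` over a shared file or JNI's `NewDirectByteBuffer`. The C side should then use C11 atomics with matching acquire/release ordering, since C `volatile` alone does not order memory between threads.

```java
import java.lang.invoke.MethodHandles;
import java.lang.invoke.VarHandle;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical Java-side view of the C struct BufferAccess (LP64: long = 8 bytes).
final class BufferAccessView {
    static final int READ_INDEX_OFFSET = 0;   // volatile long readIndex
    static final int WRITE_INDEX_OFFSET = 64; // after readIndex + long rpadding[7]
    static final int HEADER_SIZE = 128;       // writeIndex + long wpadding[7]

    // Views a ByteBuffer as longs in native byte order, so C and Java agree on the bytes.
    private static final VarHandle LONG_VIEW =
            MethodHandles.byteBufferViewVarHandle(long[].class, ByteOrder.nativeOrder());

    private final ByteBuffer header;

    BufferAccessView(ByteBuffer header) {
        // Acquire/release modes need a direct buffer with 8-byte-aligned offsets.
        if (!header.isDirect() || header.capacity() < HEADER_SIZE) {
            throw new IllegalArgumentException("need a direct buffer of at least " + HEADER_SIZE + " bytes");
        }
        this.header = header;
    }

    long readIndexAcquire() { return (long) LONG_VIEW.getAcquire(header, READ_INDEX_OFFSET); }
    void readIndexRelease(long v) { LONG_VIEW.setRelease(header, READ_INDEX_OFFSET, v); }

    long writeIndexAcquire() { return (long) LONG_VIEW.getAcquire(header, WRITE_INDEX_OFFSET); }
    void writeIndexRelease(long v) { LONG_VIEW.setRelease(header, WRITE_INDEX_OFFSET, v); }

    // Java-only stand-in for shared native memory: a direct buffer sliced to an 8-byte-aligned address.
    static ByteBuffer allocateHeader() {
        return ByteBuffer.allocateDirect(HEADER_SIZE + 8).alignedSlice(8);
    }
}
```

The padding keeps `readIndex` and `writeIndex` on separate 64-byte lines. The consumer's updates to one therefore do not invalidate the producer's cached copy of the other.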