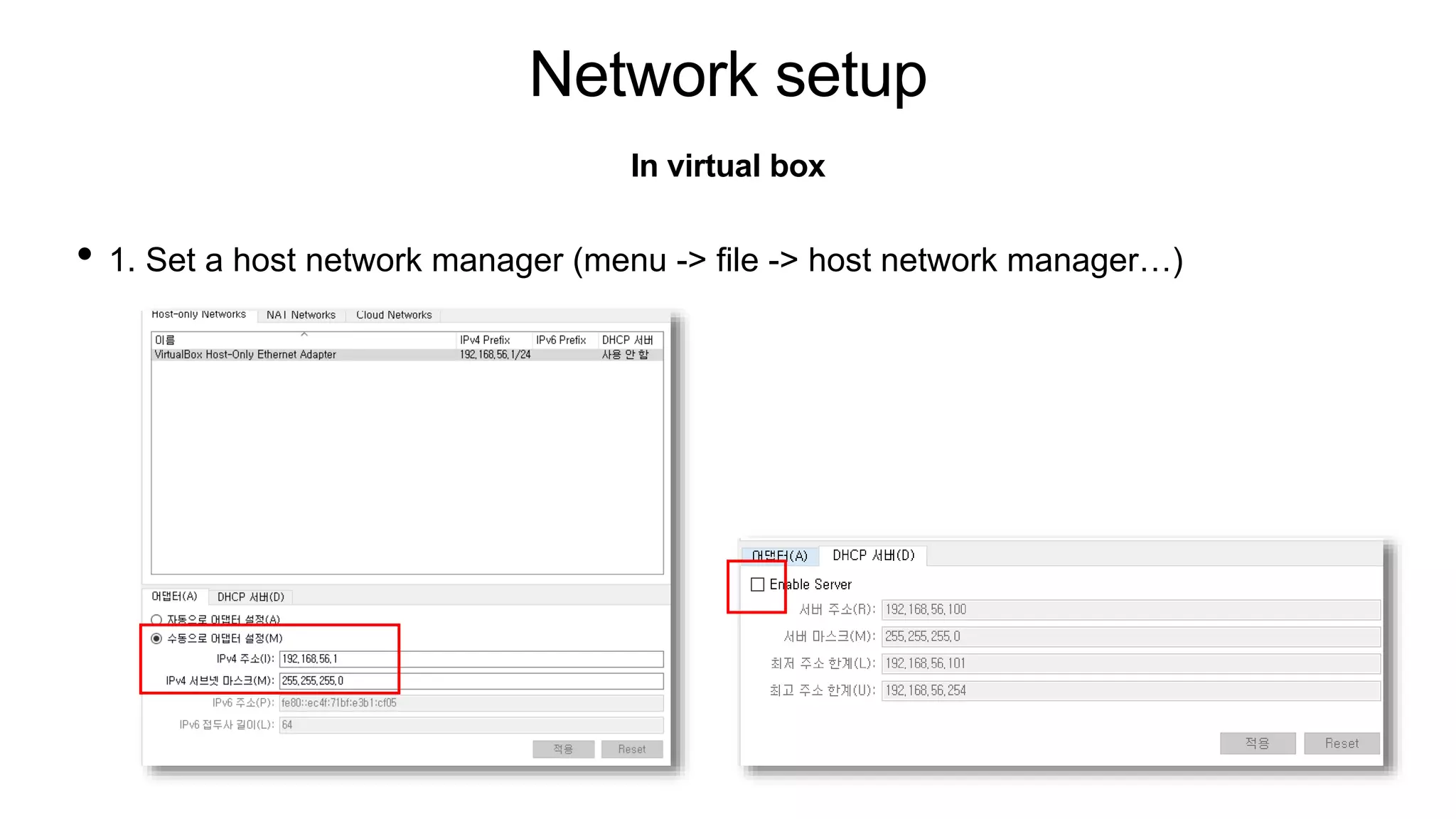

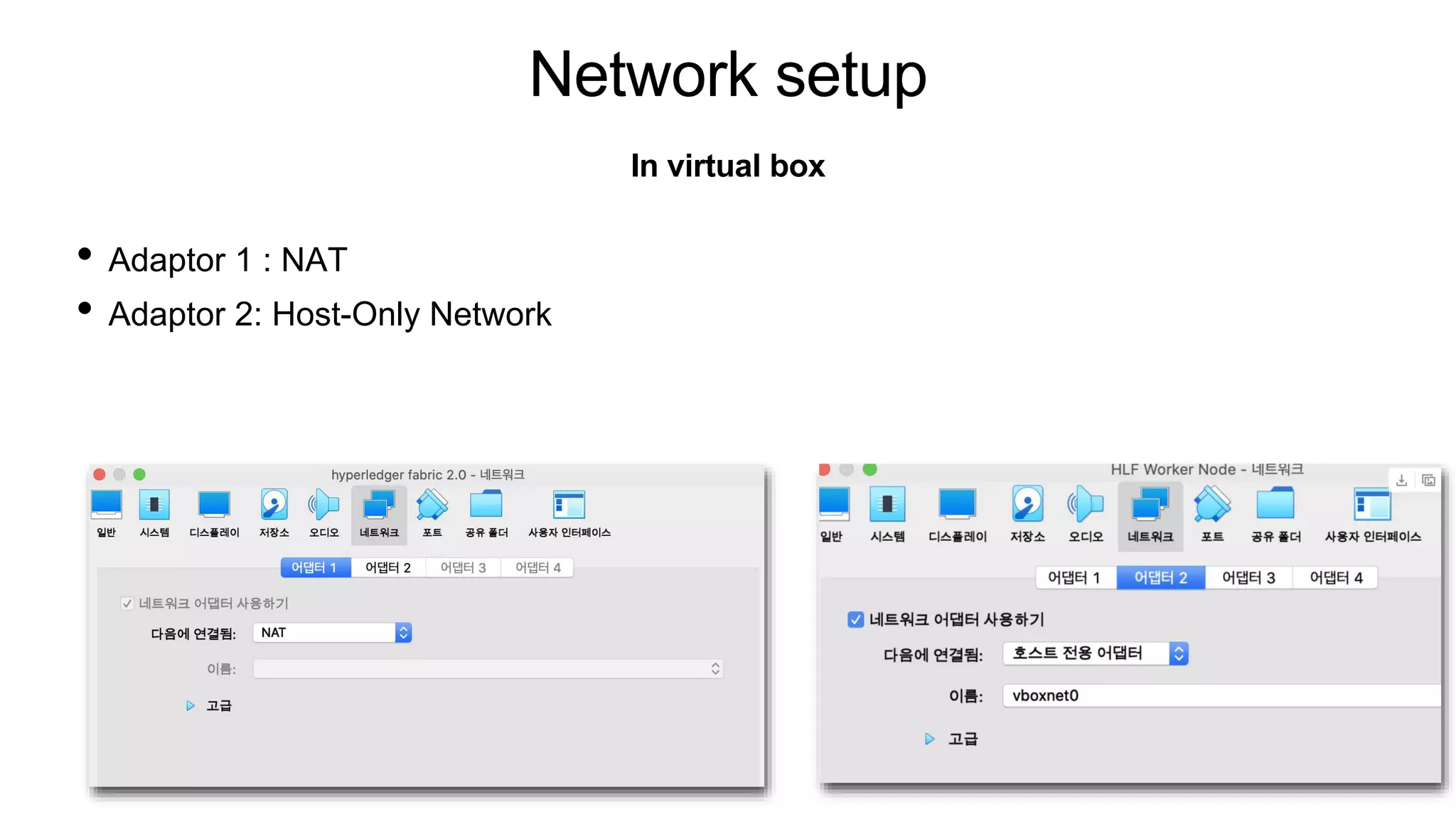

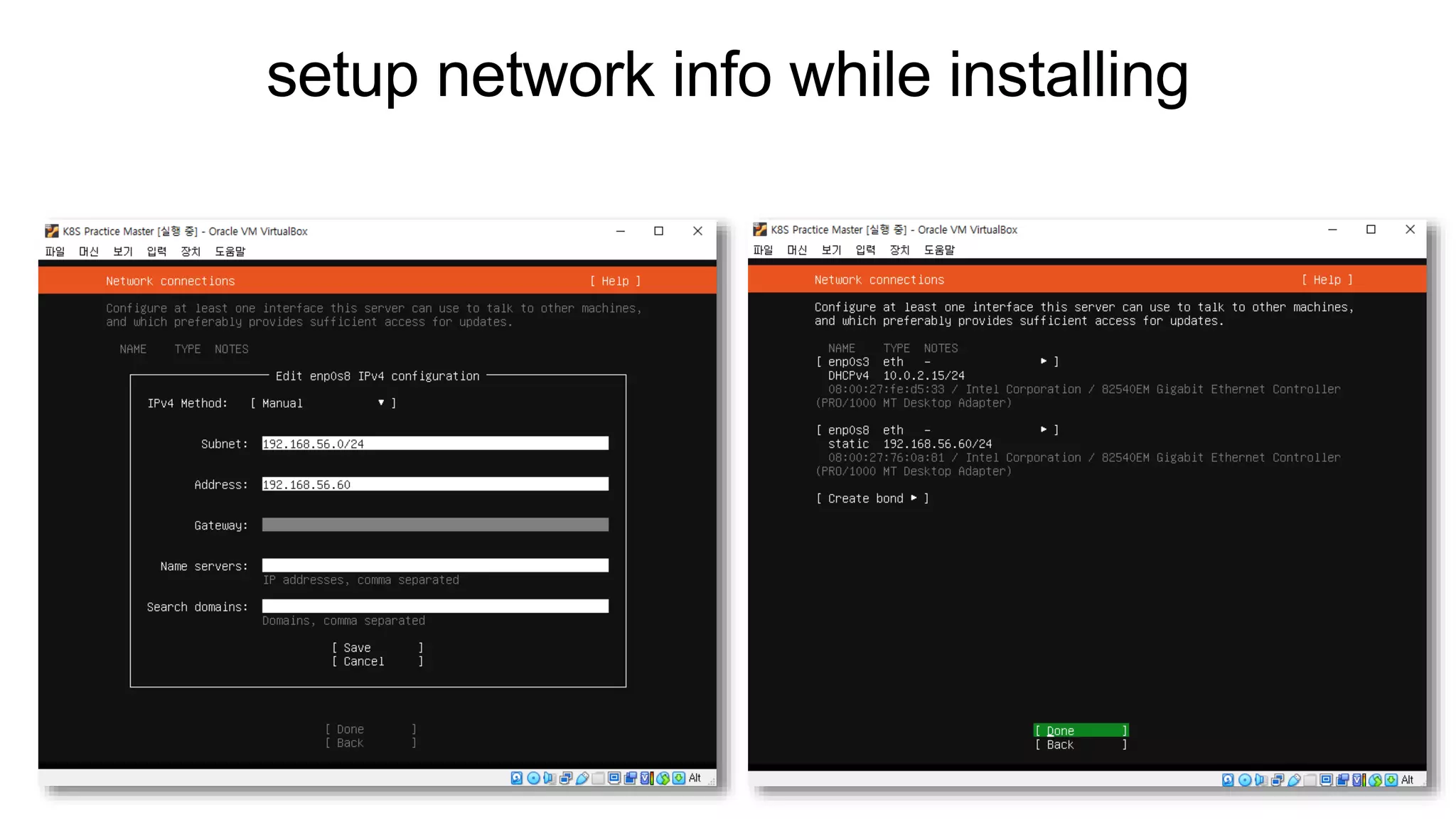

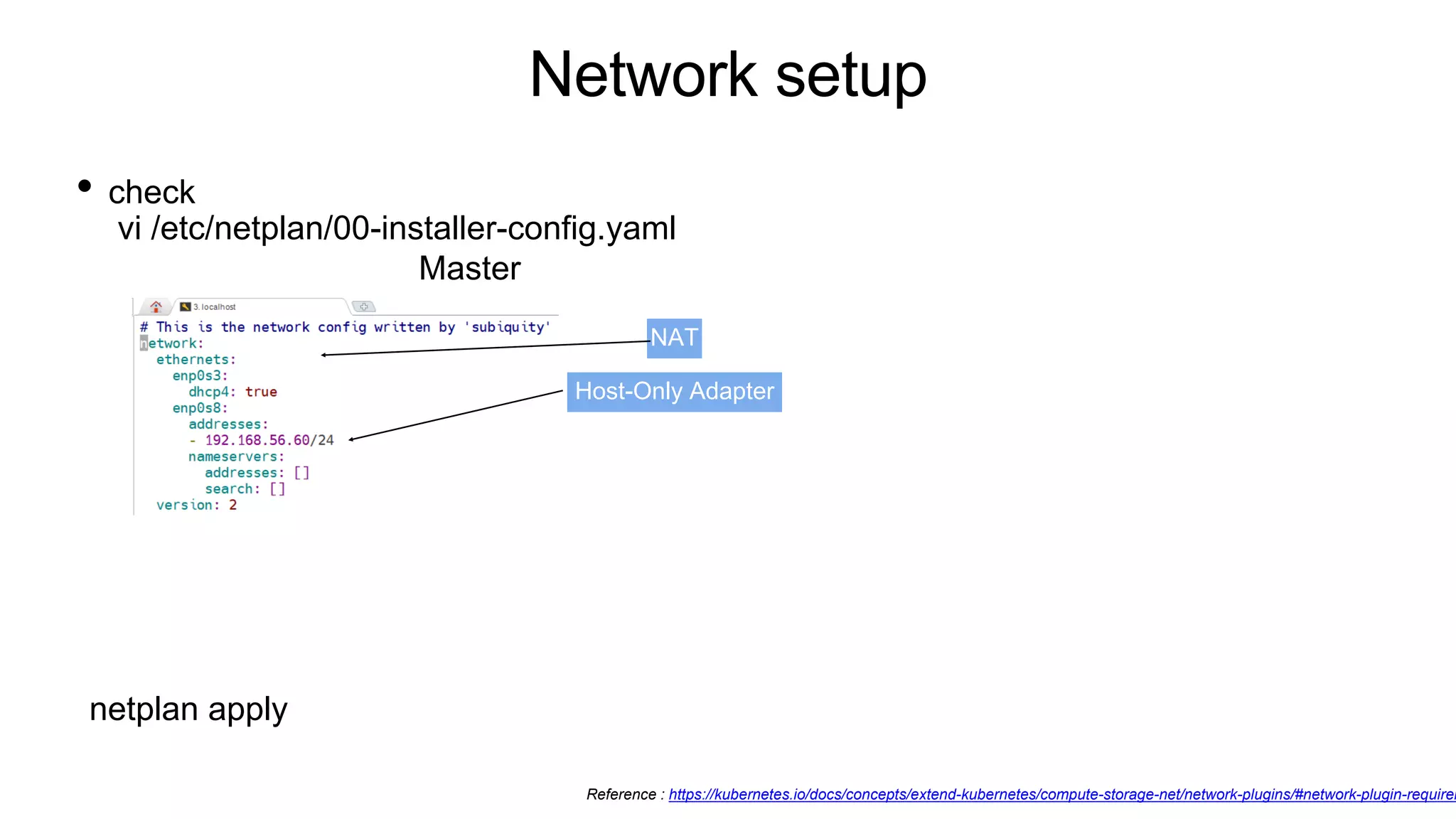

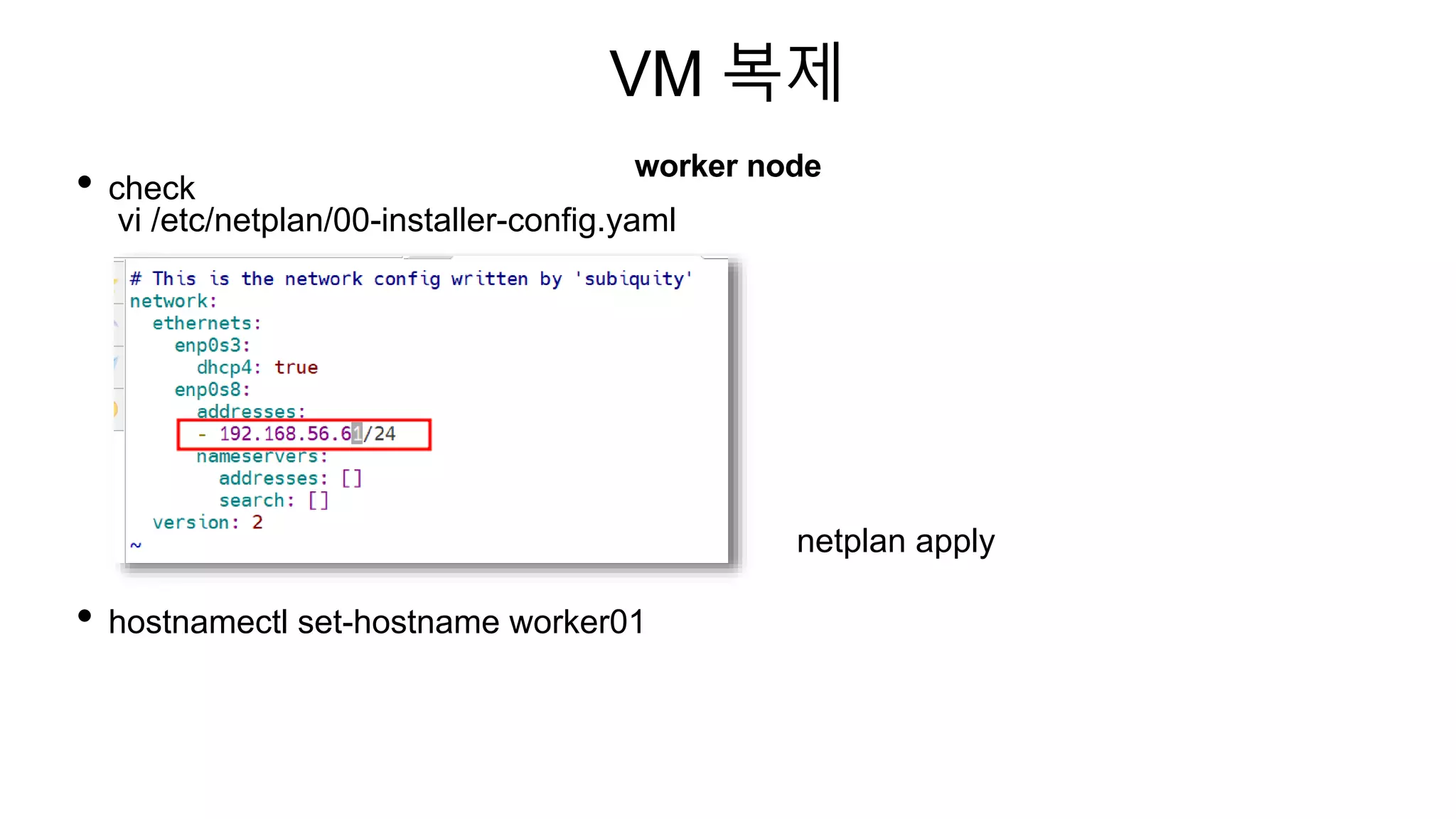

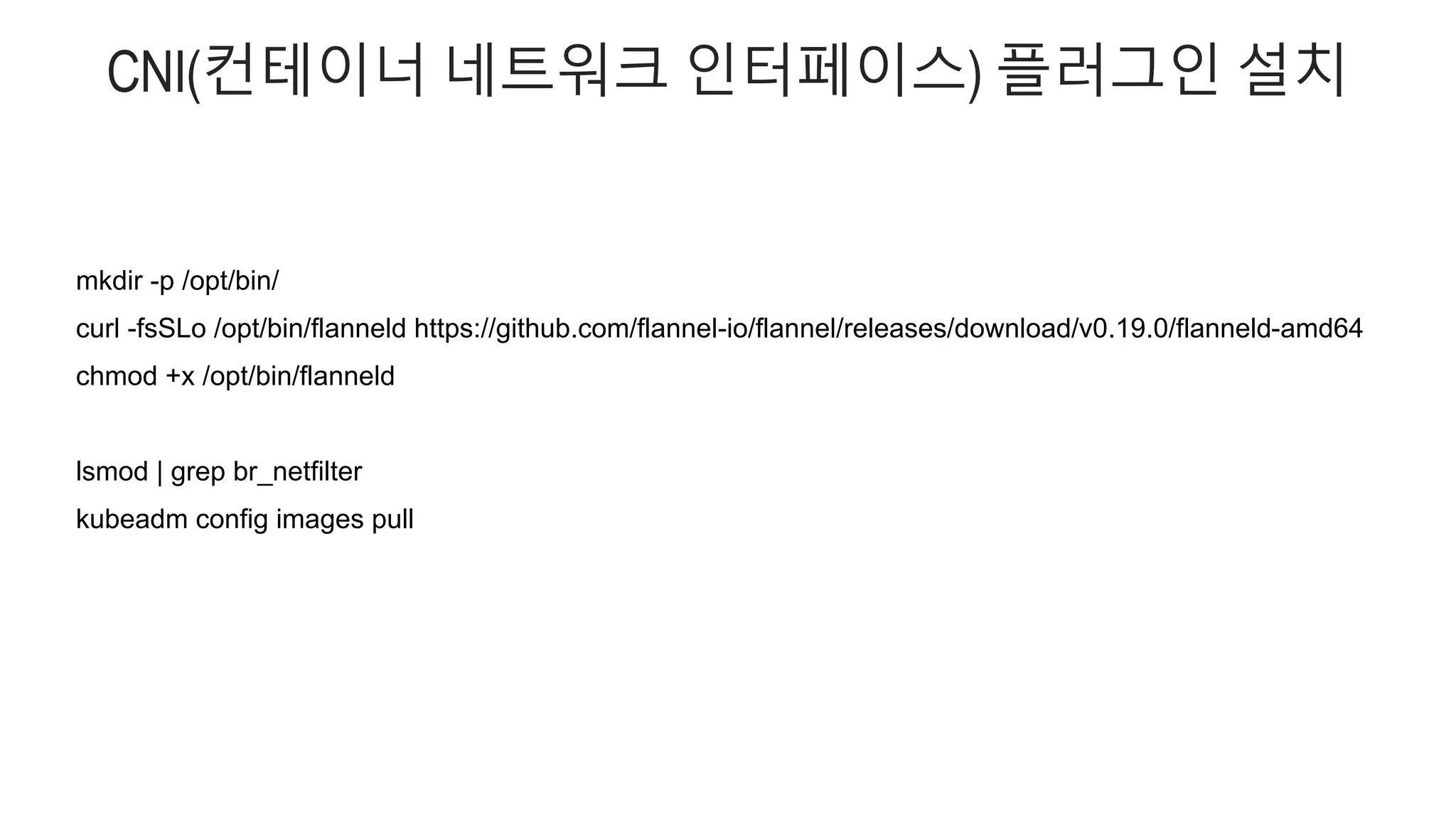

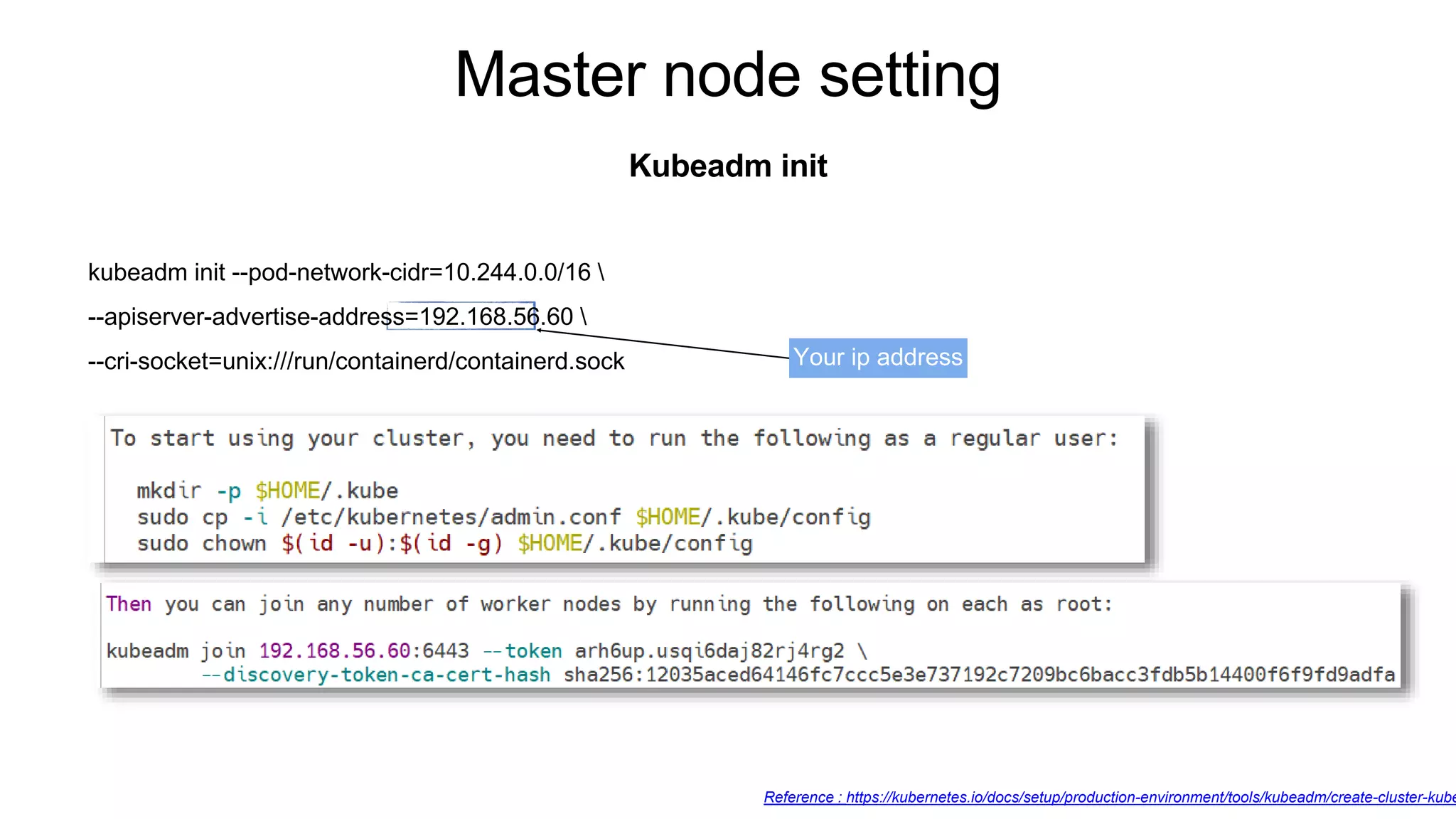

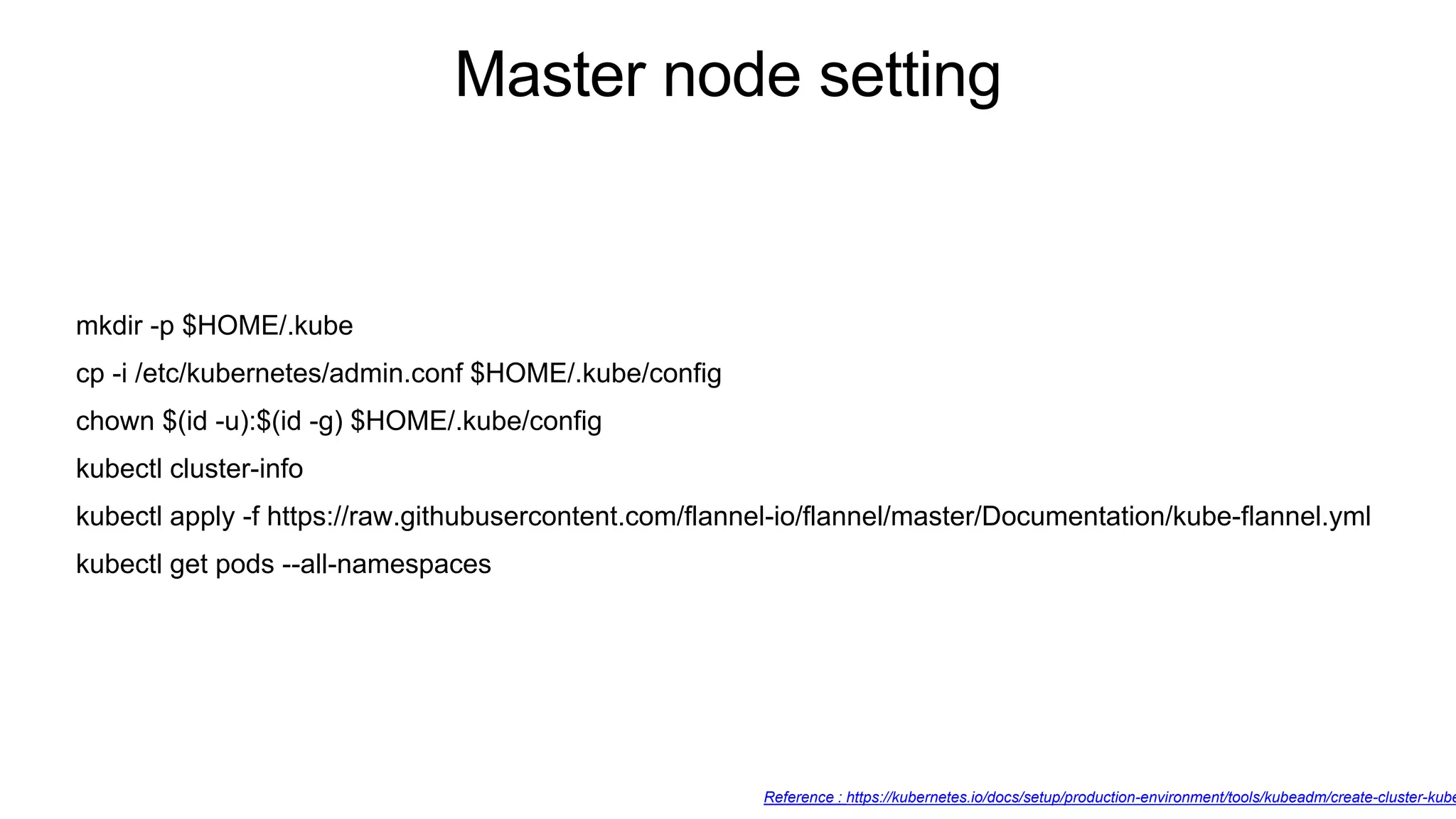

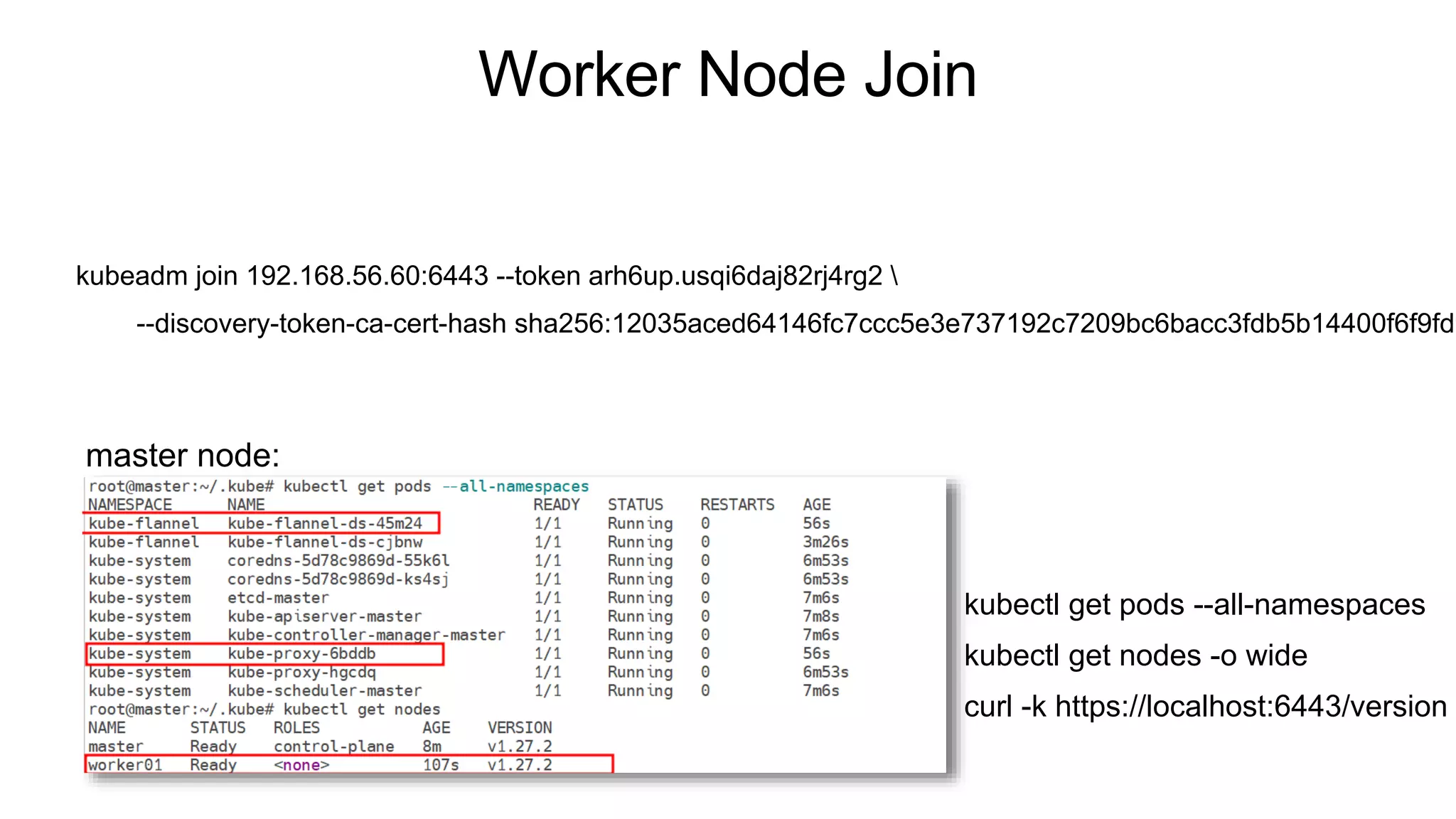

This document explains how to set up Kubernetes on two VirtualBox VMs: one master (control plane) node and one worker node. It lists the minimum requirements for the VMs and walks through configuring networking and installing Kubernetes, a container runtime (containerd), and a CNI plugin (Flannel). The steps are: setting up NAT and host-only networking in VirtualBox, configuring the hosts file, installing the Kubernetes packages (kubeadm, kubelet, kubectl), initializing the master node with kubeadm, joining the worker node to the cluster, and deploying a sample pod.
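The initialization and join steps named in the summary can be sketched as a small dry-run helper. This is only an illustration under stated assumptions: `MASTER_IP` is a hypothetical host-only address (use the one assigned to your master VM), and the pod CIDR is the value Flannel's default manifest expects. The script prints the commands rather than running them.

```shell
#!/bin/sh
# Dry-run sketch: prints the kubeadm commands instead of executing them.
# MASTER_IP is a hypothetical host-only address; replace it with your own.
MASTER_IP=${MASTER_IP:-192.168.56.10}
# Flannel's default manifest uses this pod network CIDR.
POD_CIDR=${POD_CIDR:-10.244.0.0/16}

# Command to run on the master node.
init_cmd() {
    echo "kubeadm init --apiserver-advertise-address=$MASTER_IP --pod-network-cidr=$POD_CIDR"
}

# Command to run on the master node afterwards; it prints the full
# "kubeadm join ..." line that is then executed on the worker node.
join_hint_cmd() {
    echo "kubeadm token create --print-join-command"
}

init_cmd
join_hint_cmd
```

Advertising the host-only address matters in this setup because the NAT adapter address is typically not reachable from the other VM.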

![Port Open

[Tip] VirtualBox port forwarding for a range of ports

1. Register the VM with VirtualBox (if needed): VBoxManage registervm "/Users/sf29/VirtualBox VMs/Worker/Worker.vbox"

2. for i in {30000..32767}; do VBoxManage modifyvm "Worker" --natpf1 "tcp-port$i,tcp,,$i,,$i"; done

Reference: https://kubernetes.io/docs/reference/networking/ports-and-protocols/

Master (control plane) node

ufw allow "OpenSSH"

ufw enable

ufw allow 6443/tcp

ufw allow 2379:2380/tcp

ufw allow 10250/tcp

ufw allow 10259/tcp

ufw allow 10257/tcp

ufw status

Worker node

ufw allow "OpenSSH"

ufw enable

ufw status

ufw allow 10250/tcp

ufw allow 30000:32767/tcp

ufw status](https://image.slidesharecdn.com/k8spractice2023-230610150819-0475d323/75/k8s-practice-2023-pptx-14-2048.jpg)
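The port-forwarding loop on the slide can be wrapped in a helper that previews the rules before applying thousands of them. The sketch below is an assumption-laden illustration: the function names `natpf_rule` and `forward_range` are hypothetical, and the script only prints commands unless `DRY_RUN=0` is set. Note that `VBoxManage modifyvm` expects the VM to be powered off.

```shell
#!/bin/sh
# Sketch: forward a range of host TCP ports to the same guest ports on a
# VirtualBox NAT adapter. Dry-run by default; set DRY_RUN=0 to apply.

# Build one --natpf1 rule: name,protocol,host-ip,host-port,guest-ip,guest-port
natpf_rule() {
    printf 'tcp-port%s,tcp,,%s,,%s' "$1" "$1" "$1"
}

# forward_range VM FIRST_PORT LAST_PORT
forward_range() {
    vm=$1
    port=$2
    last=$3
    while [ "$port" -le "$last" ]; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            # Print the command instead of running it.
            echo VBoxManage modifyvm "$vm" --natpf1 "$(natpf_rule "$port")"
        else
            VBoxManage modifyvm "$vm" --natpf1 "$(natpf_rule "$port")"
        fi
        port=$((port + 1))
    done
}

# Preview the first three rules for the "Worker" VM.
forward_range Worker 30000 30002
```

To cover the whole NodePort range opened by the worker's `ufw allow 30000:32767/tcp` rule, call `DRY_RUN=0 forward_range Worker 30000 32767` once the preview looks right.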

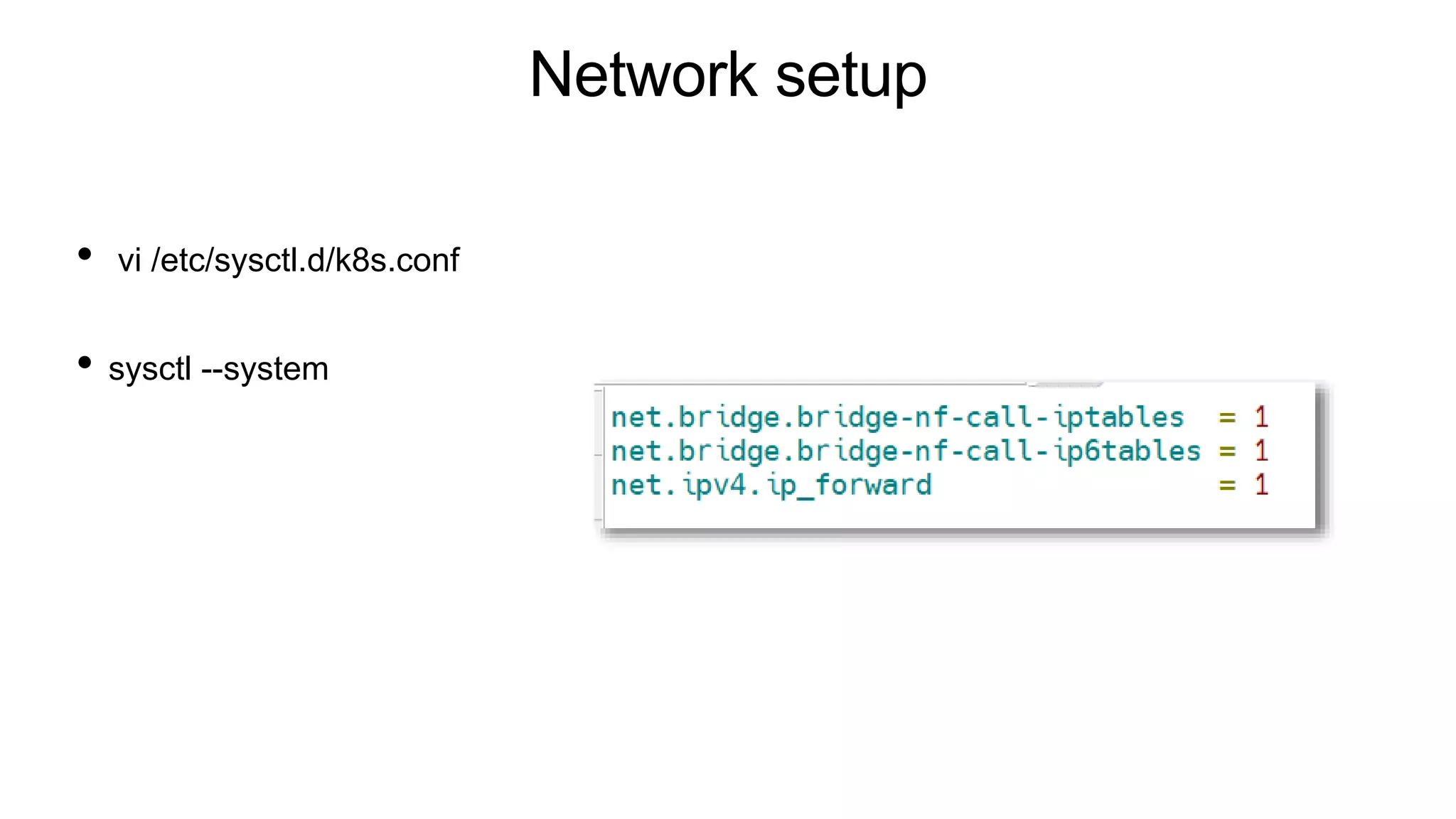

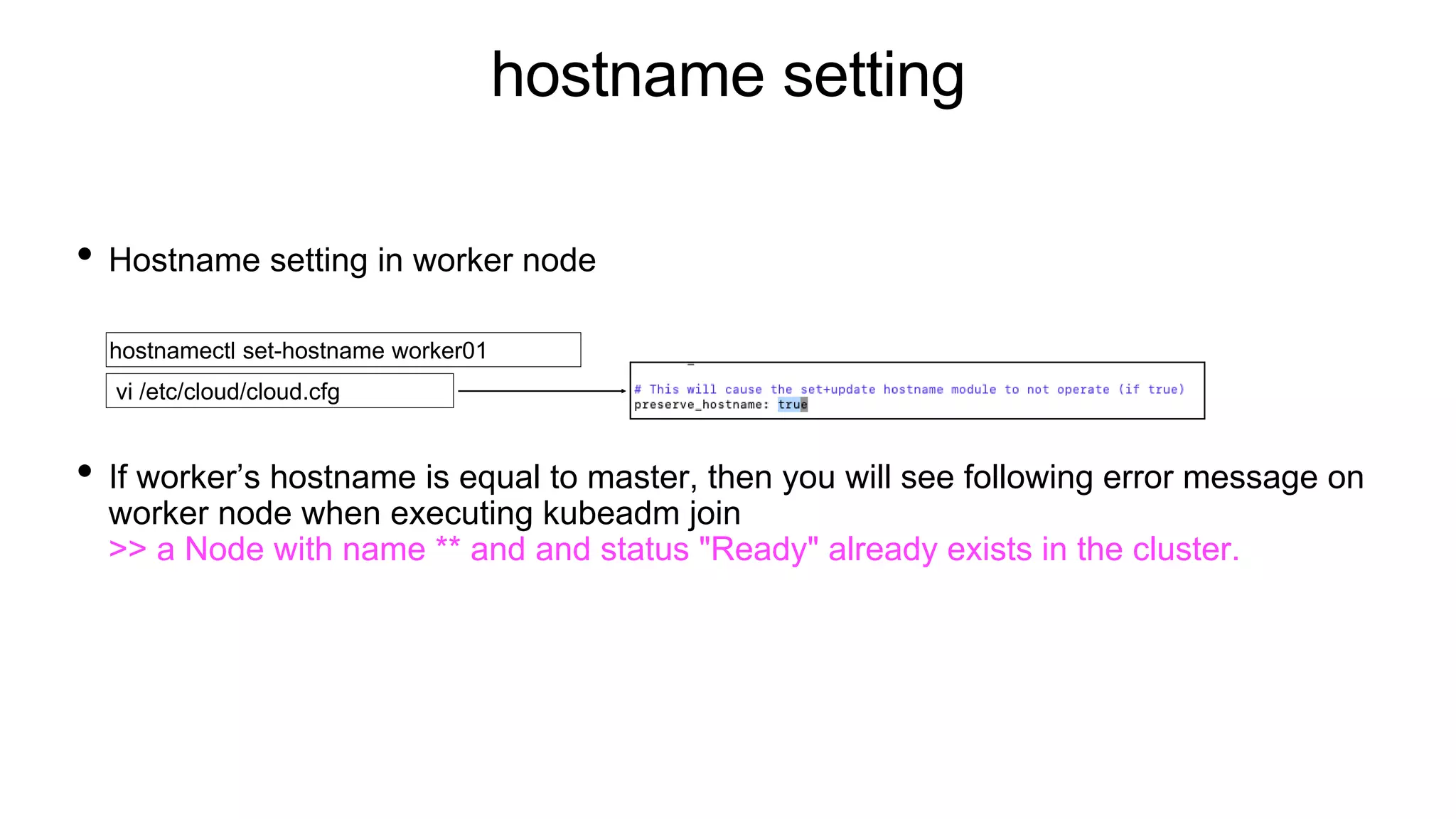

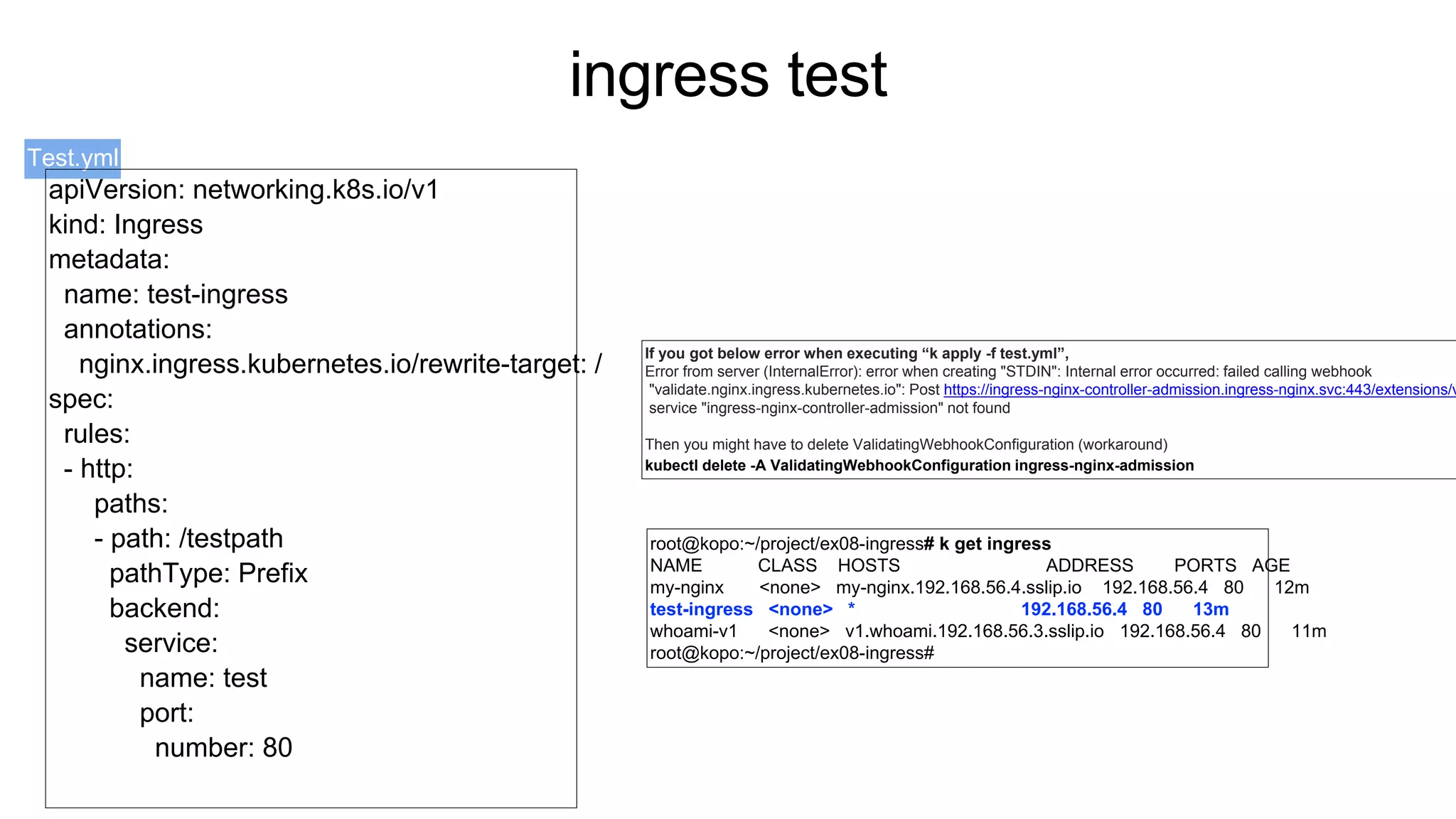

![Kubernetes setup

Install containerd

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

apt update

apt install containerd.io

systemctl stop containerd

mv /etc/containerd/config.toml /etc/containerd/config.toml.orig

containerd config default > /etc/containerd/config.toml

vi /etc/containerd/config.toml   # change the SystemdCgroup line to:

SystemdCgroup = true

systemctl start containerd

systemctl is-enabled containerd

systemctl status containerd](https://image.slidesharecdn.com/k8spractice2023-230610150819-0475d323/75/k8s-practice-2023-pptx-16-2048.jpg)
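The manual `vi` edit above can also be done non-interactively, which is convenient when the same change has to be made on both nodes. The sketch below assumes the generated config contains a line of the form `SystemdCgroup = false`; the helper name `enable_systemd_cgroup` is hypothetical.

```shell
#!/bin/sh
# Sketch: flip SystemdCgroup from false to true in a containerd config file
# without opening an editor. Assumes the file has a "SystemdCgroup = false"
# line, as typically produced by `containerd config default`.
enable_systemd_cgroup() {
    cfg=$1
    tmp="$cfg.tmp"
    # Keep the original indentation and spacing, replace only the value.
    sed 's/^\([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*\)false/\1true/' "$cfg" > "$tmp" &&
        mv "$tmp" "$cfg"
}

# Usage on a node (as root), between the stop and start steps:
#   enable_systemd_cgroup /etc/containerd/config.toml
#   grep SystemdCgroup /etc/containerd/config.toml
```

The setting makes containerd use the systemd cgroup driver, which should match the driver the kubelet uses.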

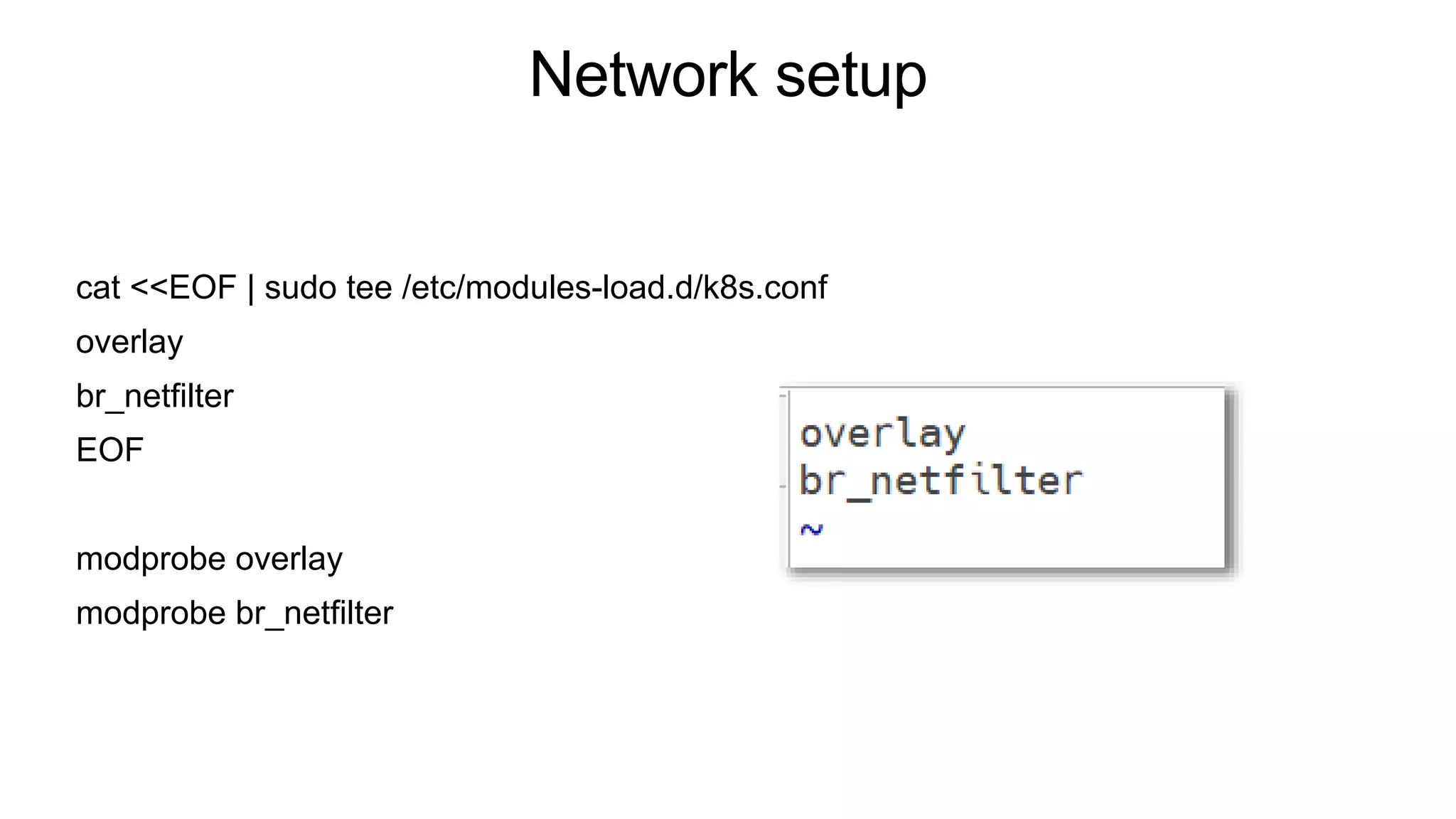

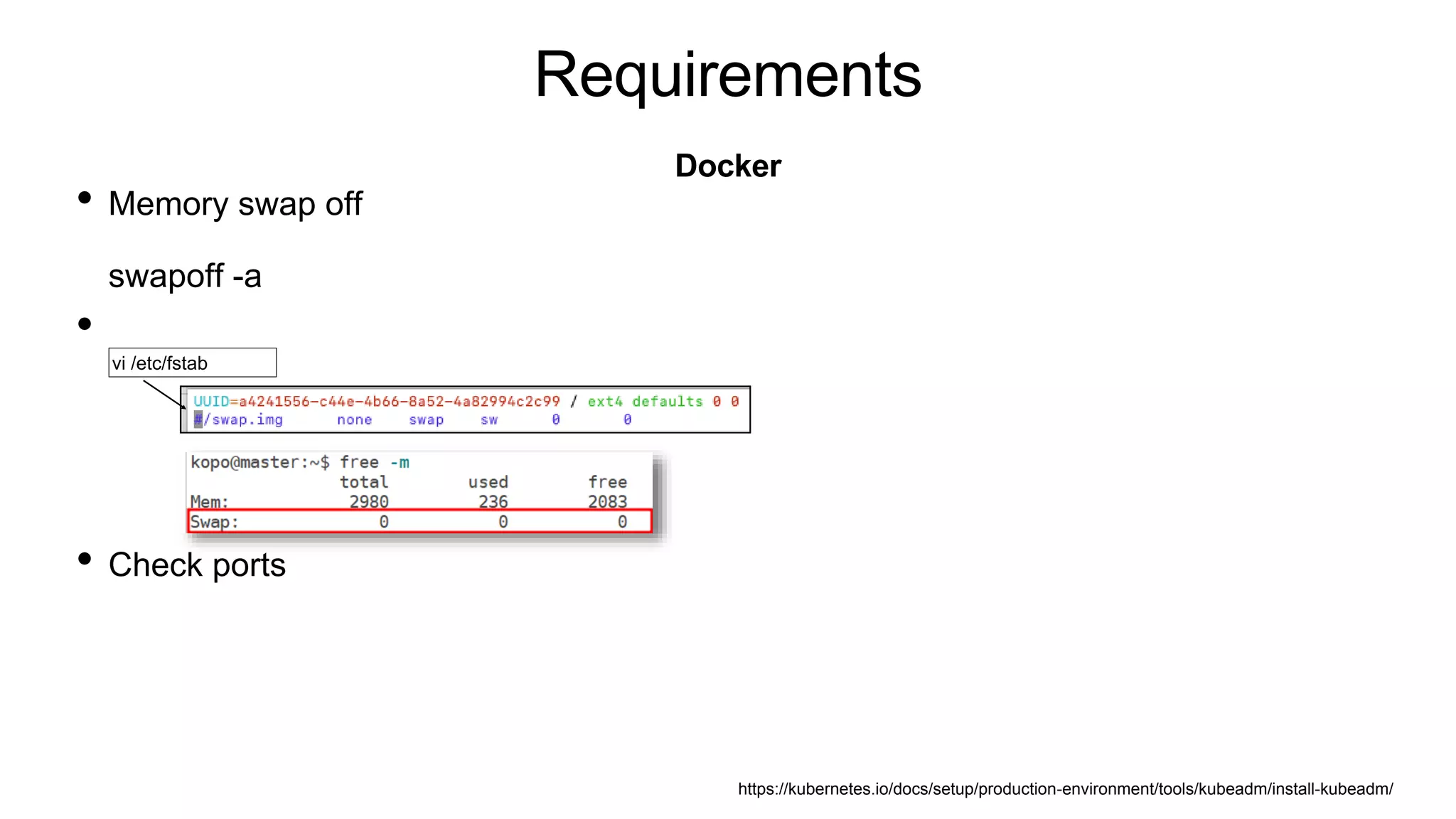

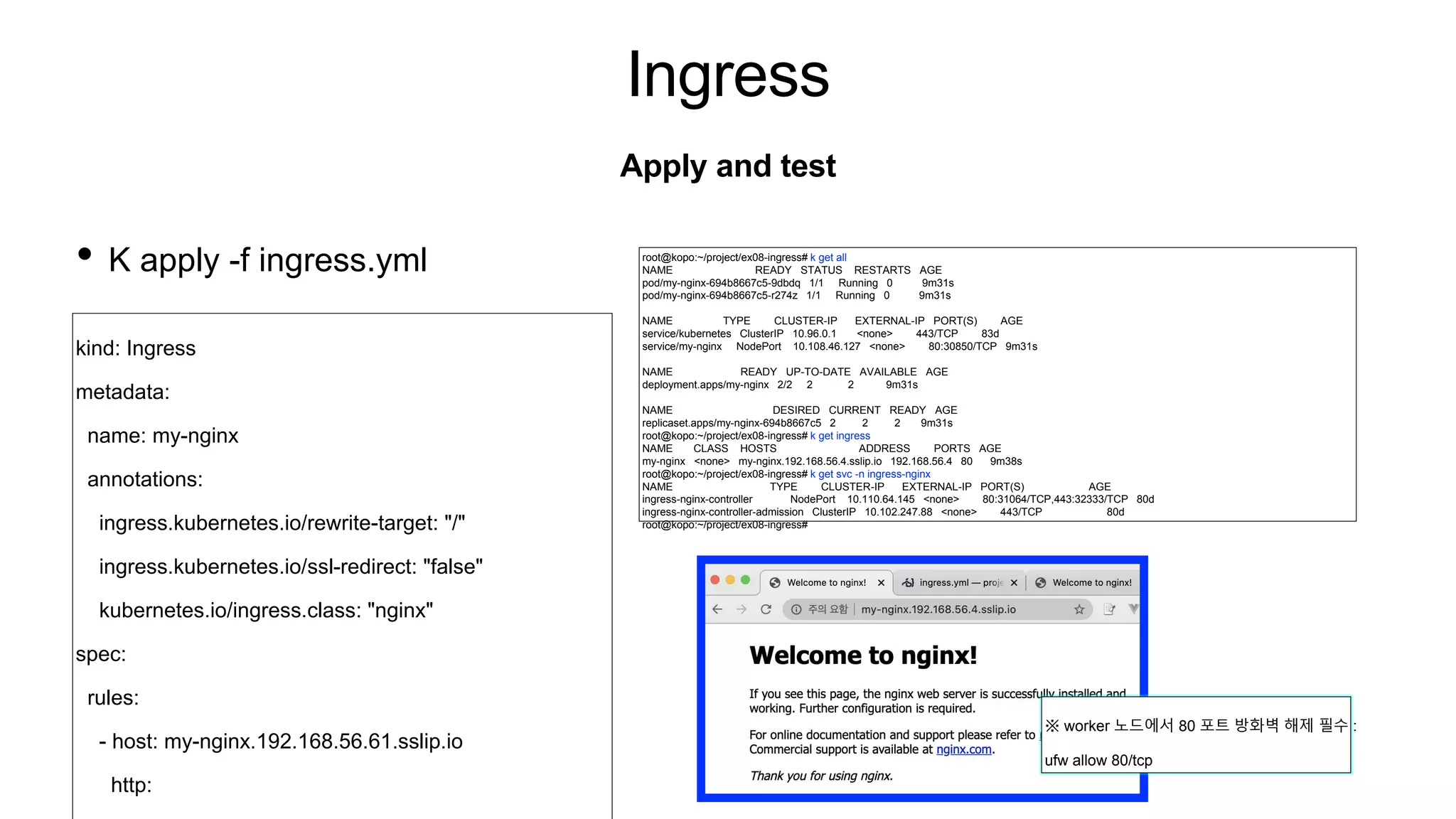

![Kubernetes setup

Install kubernetes

apt install apt-transport-https ca-certificates curl -y

# curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key --keyring /usr/share/keyrings/cloud.google.gpg add -

#echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

apt update

apt install kubelet kubeadm kubectl

apt-mark hold kubelet kubeadm kubectl # pin the packages so they are not upgraded automatically

kubeadm version

kubelet --version

kubectl version

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/](https://image.slidesharecdn.com/k8spractice2023-230610150819-0475d323/75/k8s-practice-2023-pptx-17-2048.jpg)
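The repository commands on this slide use `apt-key` and the legacy `apt.kubernetes.io` repository. `apt-key` is deprecated on current Debian and Ubuntu releases, and the Kubernetes project has since frozen the legacy repository in favor of `pkgs.k8s.io`. The sketch below prints the equivalent setup commands for the newer repository. The minor version `v1.30` and the keyring path are assumptions; substitute the release you actually want.

```shell
#!/bin/sh
# Sketch: print the setup commands for the community-owned Kubernetes
# package repository (pkgs.k8s.io). Nothing is executed here.
# K8S_MINOR is an assumption; pick the minor release you want to install.
K8S_MINOR=${K8S_MINOR:-v1.30}
KEYRING=/etc/apt/keyrings/kubernetes-apt-keyring.gpg

repo_url() {
    echo "https://pkgs.k8s.io/core:/stable:/$K8S_MINOR/deb/"
}

# The line that goes into /etc/apt/sources.list.d/kubernetes.list
repo_line() {
    echo "deb [signed-by=$KEYRING] $(repo_url) /"
}

# Commands to run on each node (shown, not executed):
echo "curl -fsSL $(repo_url)Release.key | sudo gpg --dearmor -o $KEYRING"
echo "echo '$(repo_line)' | sudo tee /etc/apt/sources.list.d/kubernetes.list"
```

After adding the repository, the `apt update`, `apt install kubelet kubeadm kubectl`, and `apt-mark hold` steps stay the same.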

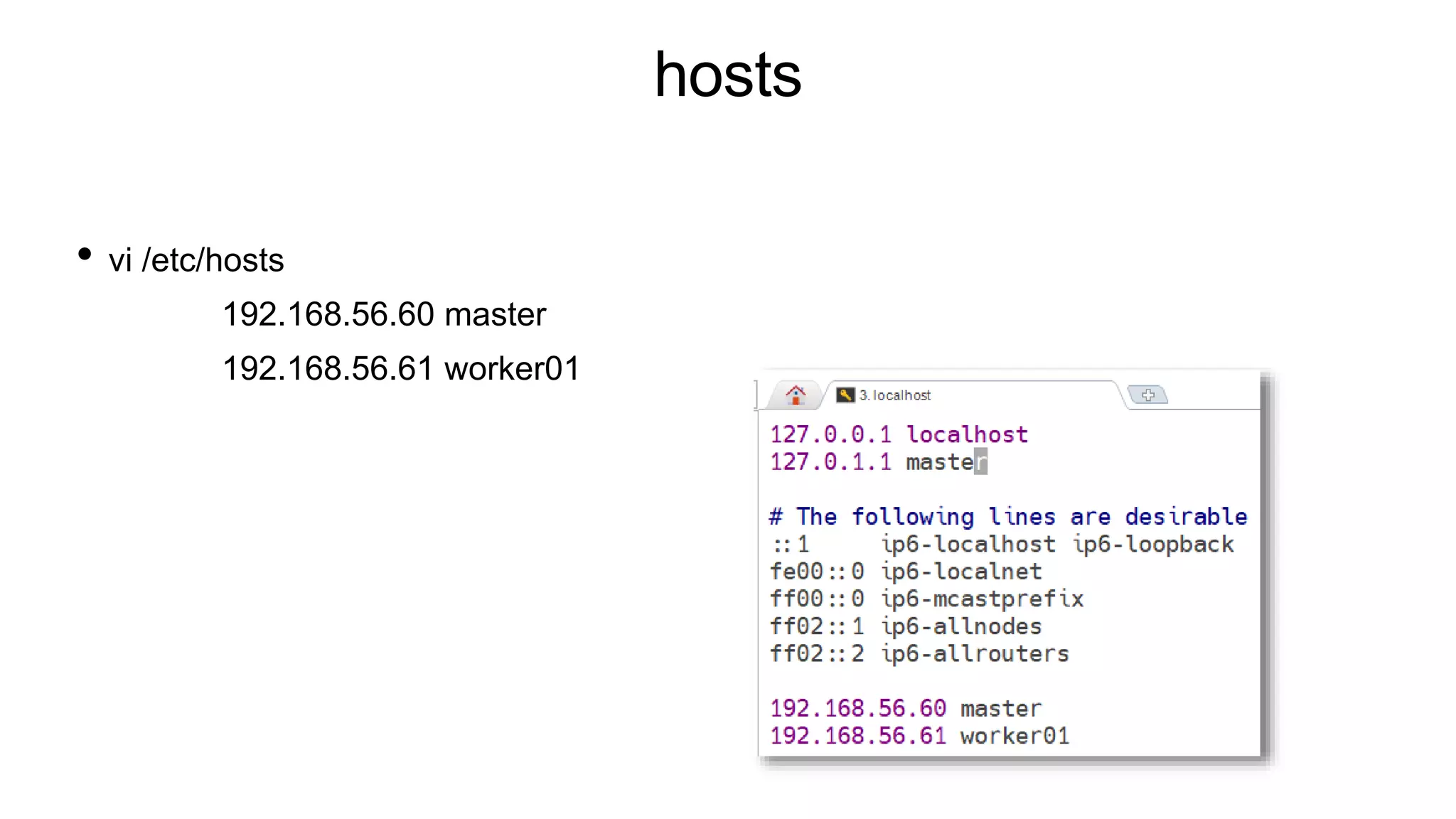

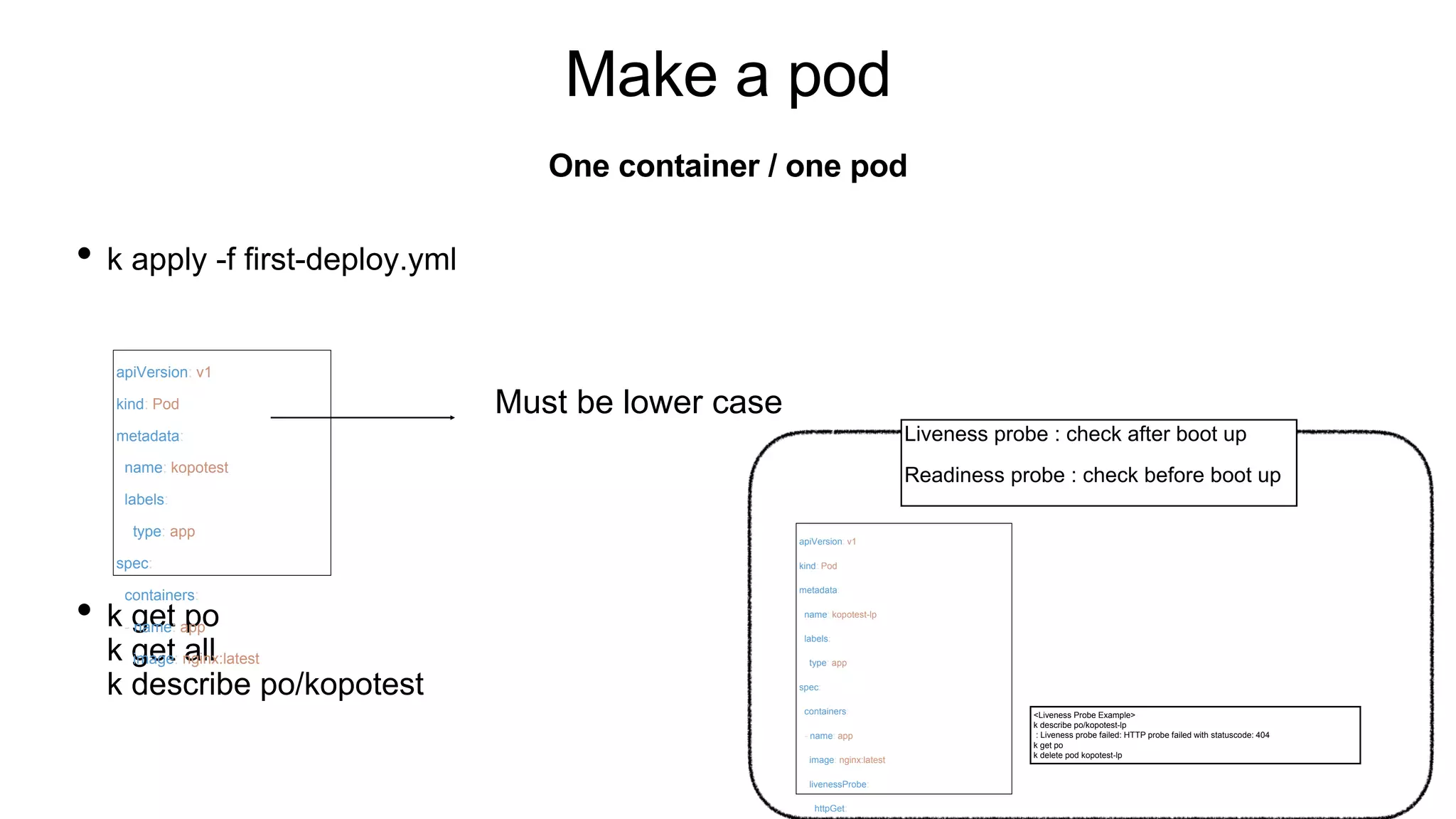

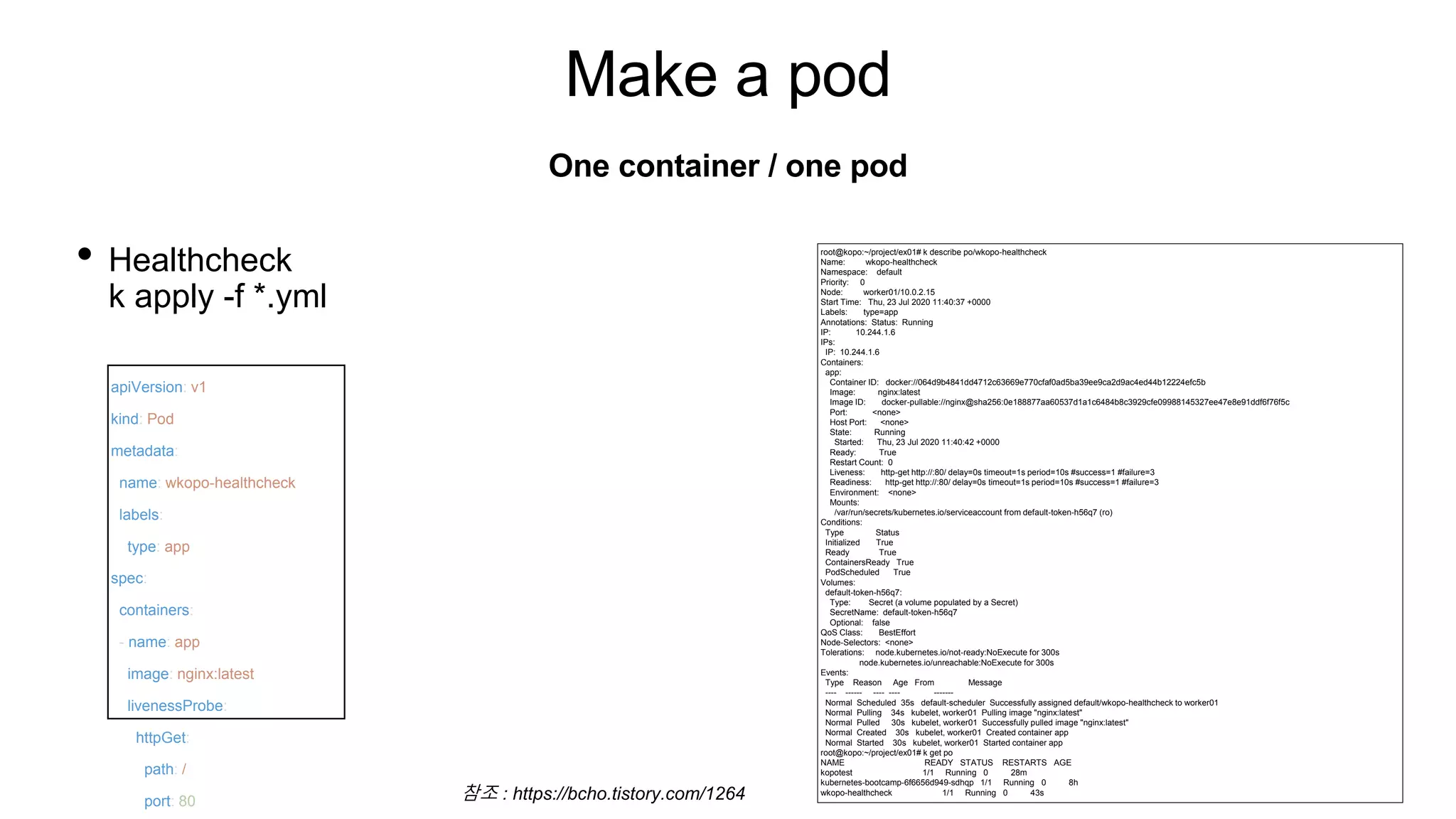

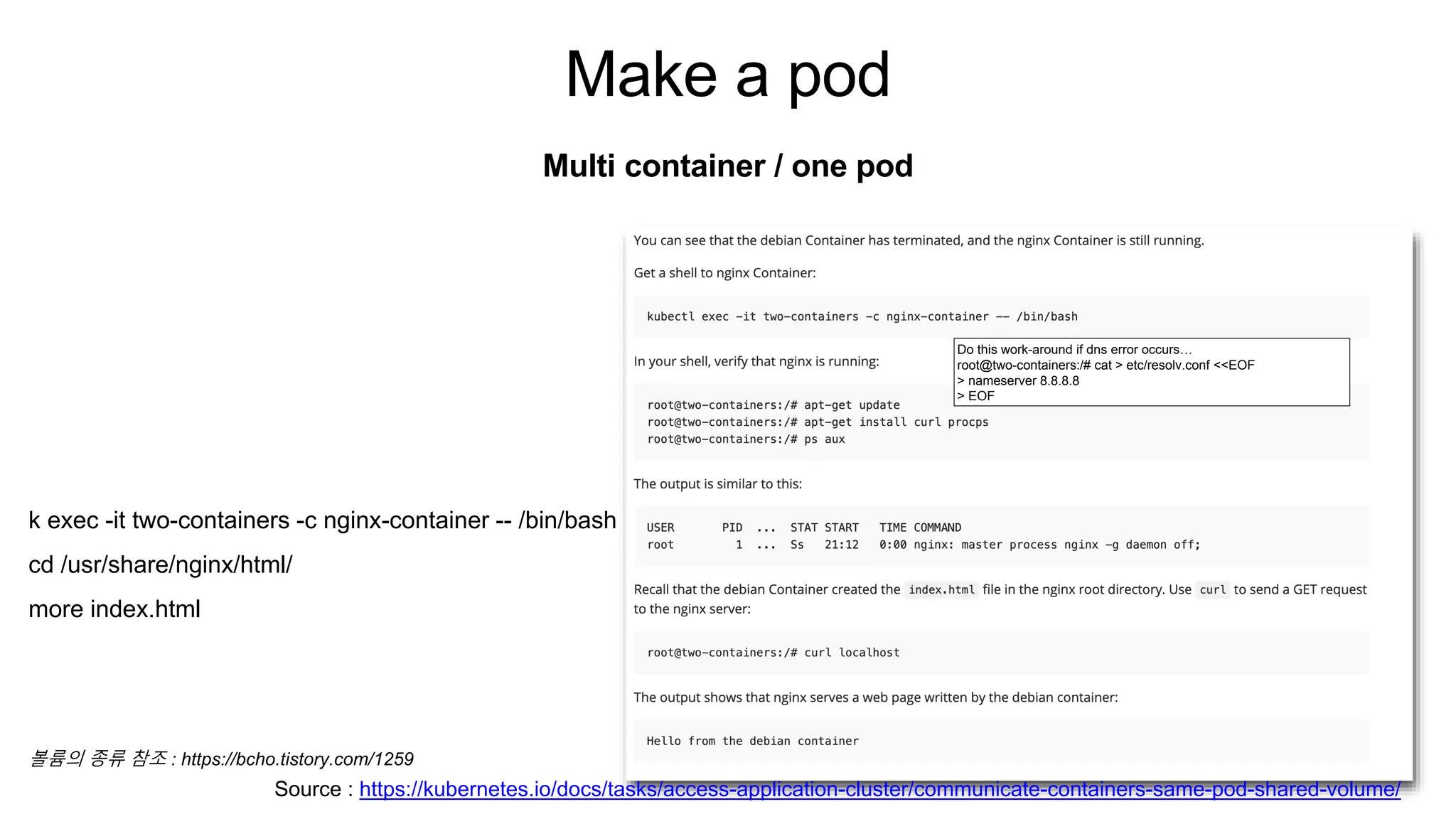

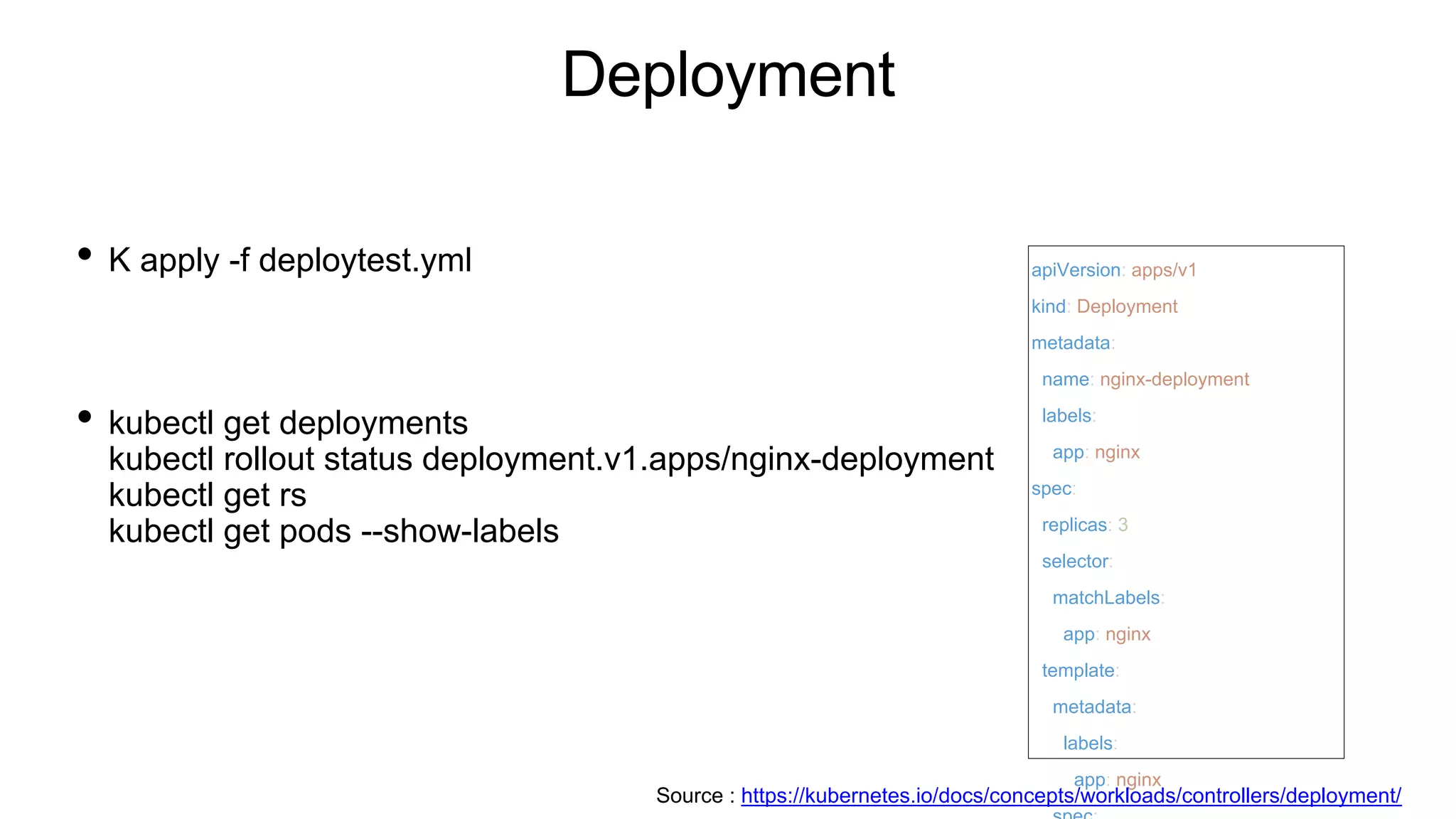

![Make a pod

Multi container / one pod

• k apply -f multi-container.yml

apiVersion: v1
kind: Pod
metadata:
  name: two-containers
spec:
  restartPolicy: Never
  volumes:
  - name: shared-data
    emptyDir: {}
  containers:
  - name: nginx-container
    image: nginx
    volumeMounts:
    - name: shared-data

root@kopo:~/project/ex02# k get pod
NAME                                   READY   STATUS     RESTARTS   AGE
kubernetes-bootcamp-6f6656d949-sdhqp   1/1     Running    0          10h
two-containers                         1/2     NotReady   0          34m

root@kopo:~/project/ex02# k logs po/two-containers
error: a container name must be specified for pod two-containers, choose one of: [nginx-container debian-container]

root@kopo:~/project/ex02# k logs po/two-containers nginx-container
/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration
/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/
/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh
10-listen-on-ipv6-by-default.sh: Getting the checksum of /etc/nginx/conf.d/default.conf
10-listen-on-ipv6-by-default.sh: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf
/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh
/docker-entrypoint.sh: Configuration complete; ready for start up
127.0.0.1 - - [23/Jul/2020:12:52:56 +0000] "GET / HTTP/1.1" 200 42 "-" "curl/7.64.0" "-"

Source: https://kubernetes.io/docs/tasks/access-application-cluster/communicate-containers-same-pod-shared-volume/](https://image.slidesharecdn.com/k8spractice2023-230610150819-0475d323/75/k8s-practice-2023-pptx-32-2048.jpg)
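The manifest on the slide is cut off after the first `volumeMounts` entry. A complete version might look like the sketch below, which writes the file from a shell heredoc. The `debian-container` section and both `mountPath` values are not on the slide; they are modeled on the Kubernetes task page cited above and should be treated as assumptions.

```shell
#!/bin/sh
# Sketch: write a complete two-container pod manifest to a file.
# The debian-container section and the mountPath values are modeled on the
# Kubernetes task page cited on the slide; they do not appear on the slide.
write_manifest() {
    cat > "$1" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: two-containers
spec:
  restartPolicy: Never
  volumes:
  - name: shared-data
    emptyDir: {}
  containers:
  - name: nginx-container
    image: nginx
    volumeMounts:
    - name: shared-data
      mountPath: /usr/share/nginx/html
  - name: debian-container
    image: debian
    volumeMounts:
    - name: shared-data
      mountPath: /pod-data
    command: ["/bin/sh"]
    args: ["-c", "echo Hello from the debian container > /pod-data/index.html"]
EOF
}

write_manifest multi-container.yml
# Then, on a machine with cluster access:
#   kubectl apply -f multi-container.yml
#   kubectl logs two-containers -c nginx-container
```

With a manifest like this, the `1/2 NotReady` status in the output above is expected rather than a failure: the debian container writes one file and exits, and `restartPolicy: Never` keeps it from being restarted, so only the nginx container remains running.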

![[Reference] Docker vs. containerd

Source: https://kubernetes.io/blog/2018/05/24/kubernetes-containerd-integration-goes-ga/#containerd-1-0-cri-containerd-end-of-li](https://image.slidesharecdn.com/k8spractice2023-230610150819-0475d323/75/k8s-practice-2023-pptx-36-2048.jpg)

![Log search

• kubectl logs [pod name] -n kube-system

Example: kubectl logs coredns-383838-fh38fh8 -n kube-system

• kubectl describe nodes](https://image.slidesharecdn.com/k8spractice2023-230610150819-0475d323/75/k8s-practice-2023-pptx-59-2048.jpg)
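When it is not yet clear which system pod is misbehaving, the per-pod `kubectl logs` command above can be looped over a whole namespace. The helper below is a minimal sketch; the function name `tail_namespace_logs` and its defaults are hypothetical, and it assumes `kubectl` is already configured for the cluster.

```shell
#!/bin/sh
# Sketch: print the last N log lines of every pod in a namespace.
# tail_namespace_logs [NAMESPACE] [LINES]
tail_namespace_logs() {
    ns=${1:-kube-system}
    lines=${2:-20}
    # "-o name" prints entries such as "pod/coredns-..." one per line.
    for pod in $(kubectl get pods -n "$ns" -o name); do
        echo "=== $pod ==="
        # --all-containers avoids the "a container name must be specified"
        # error for pods with more than one container.
        kubectl logs "$pod" -n "$ns" --all-containers --tail="$lines"
    done
}

# Usage:
#   tail_namespace_logs kube-system 50
```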