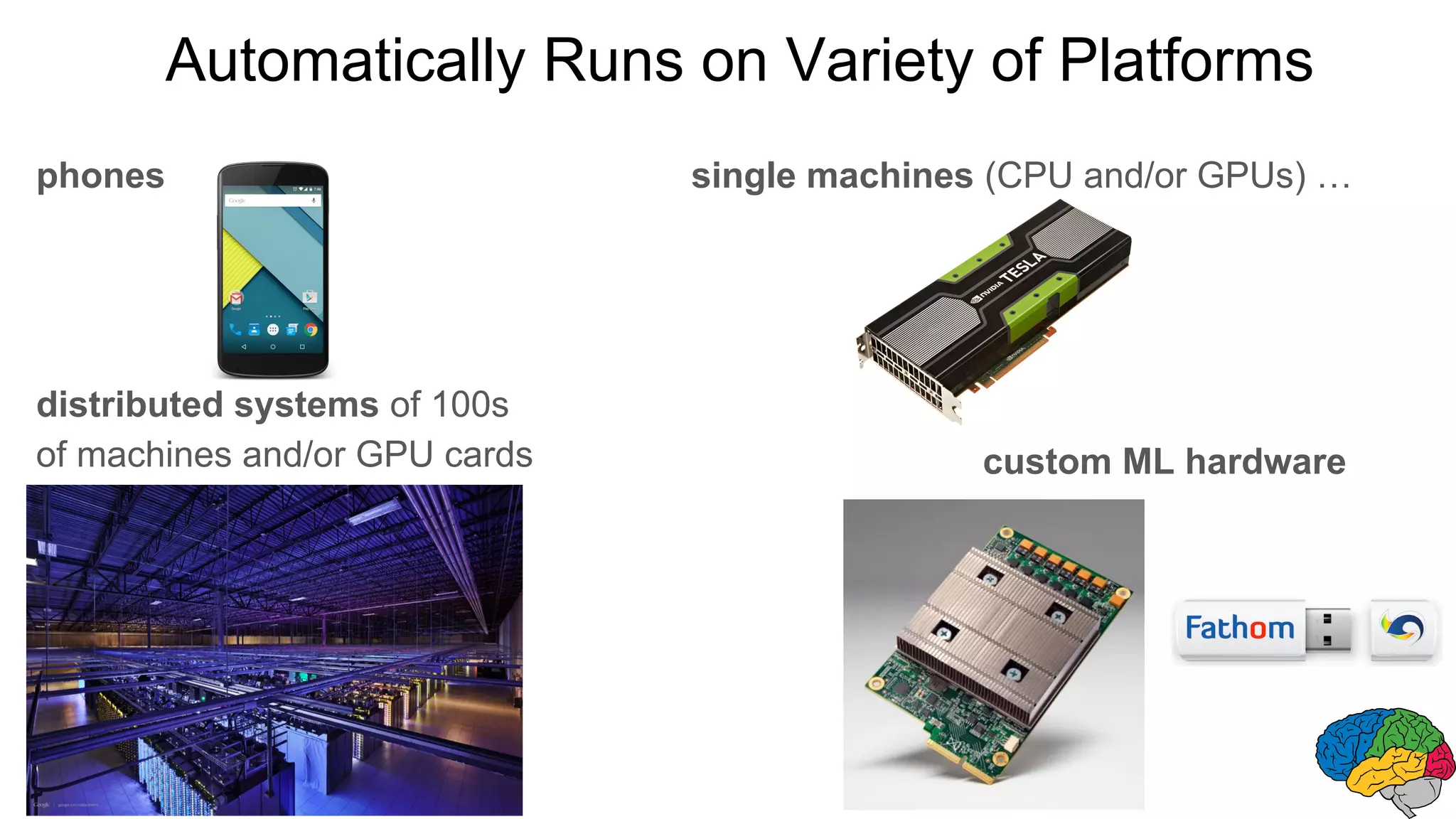

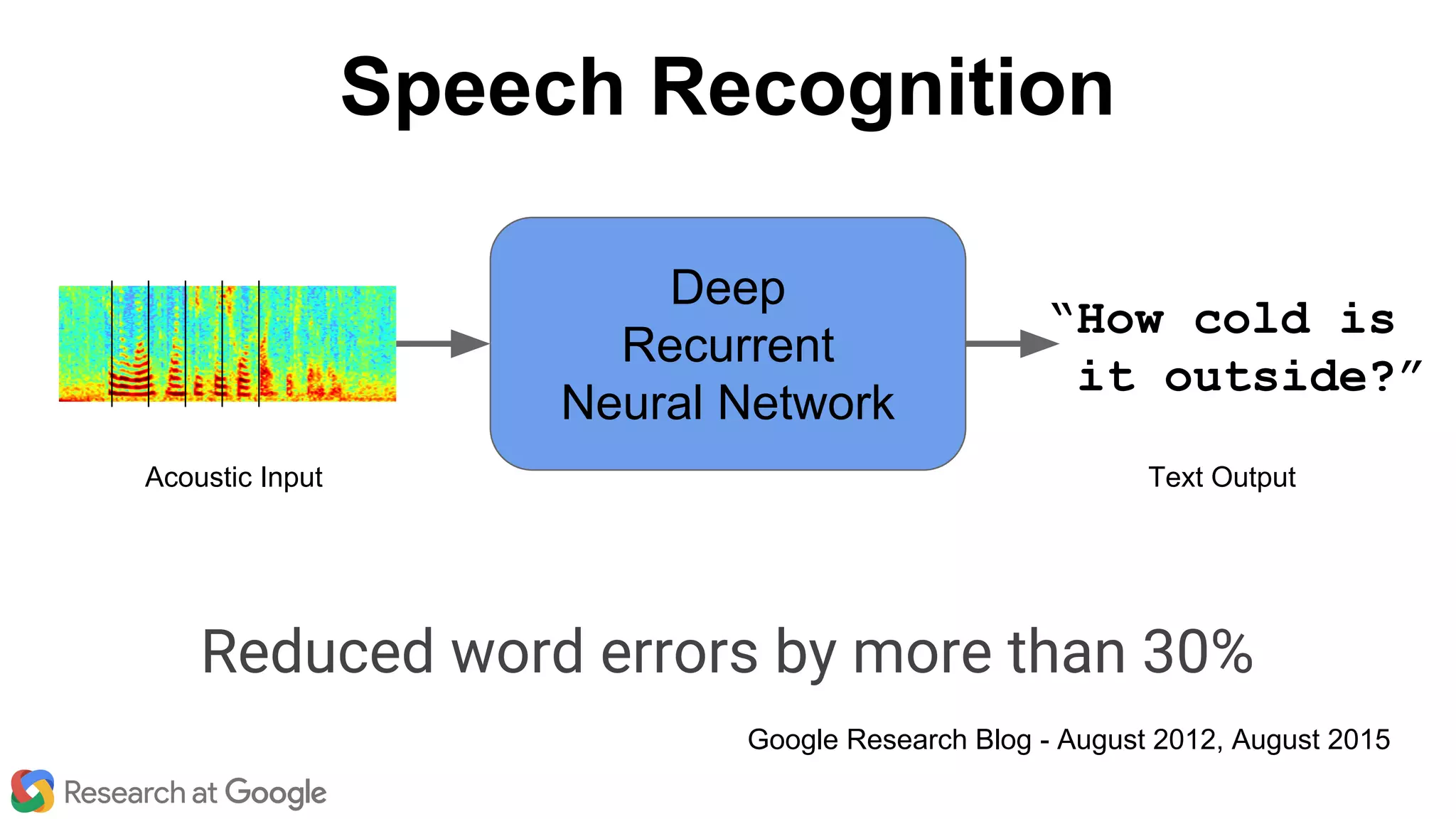

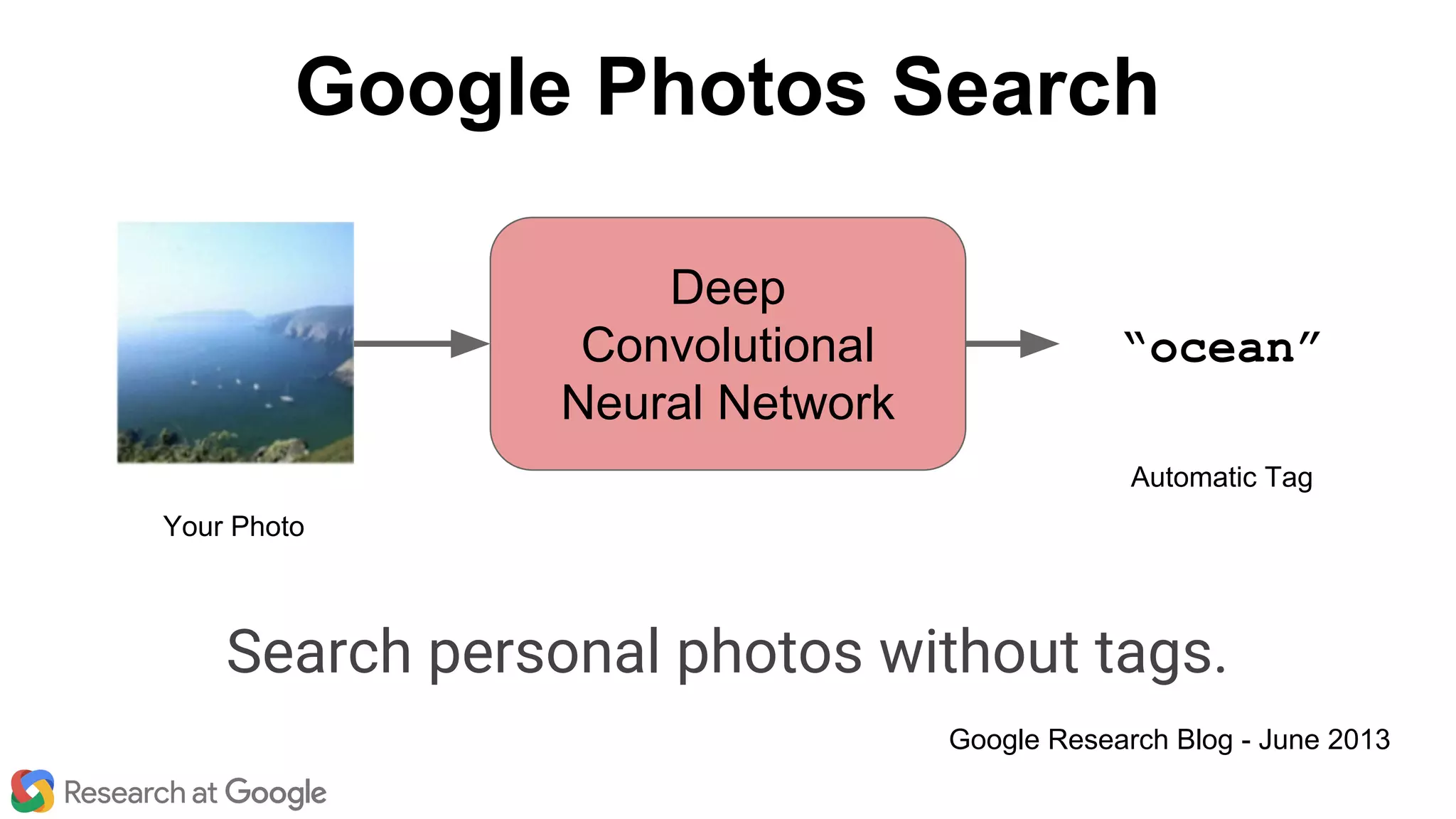

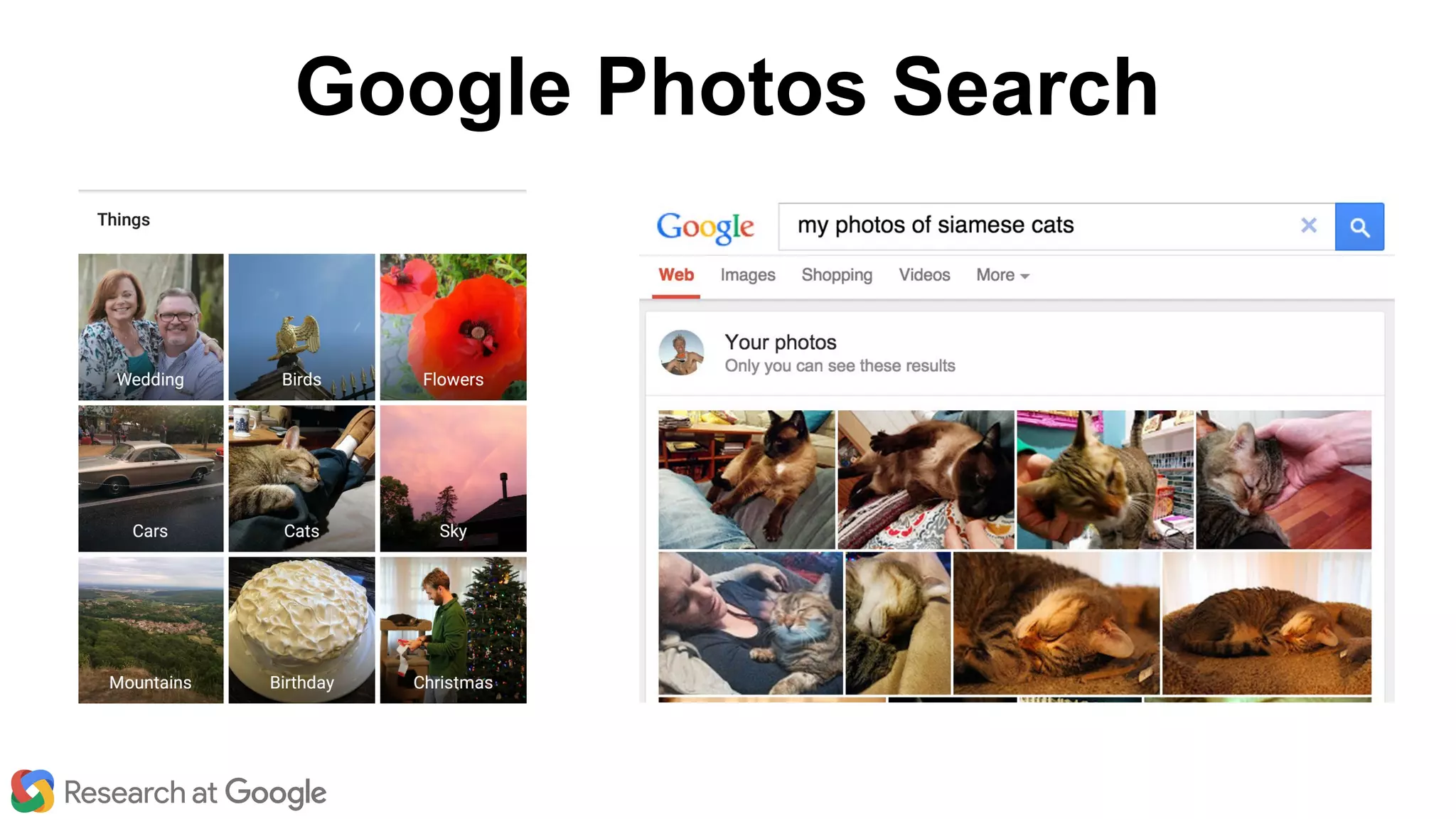

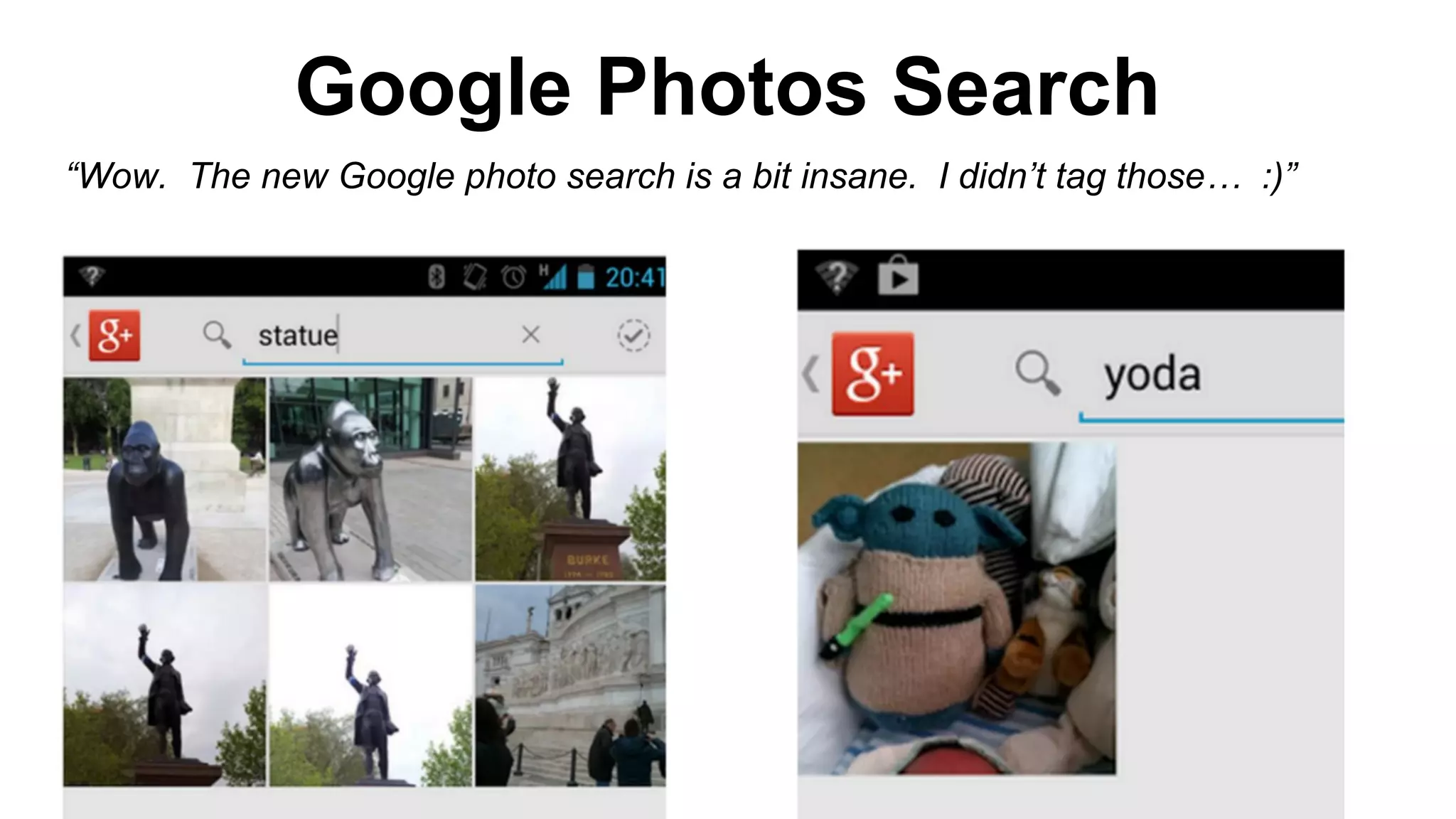

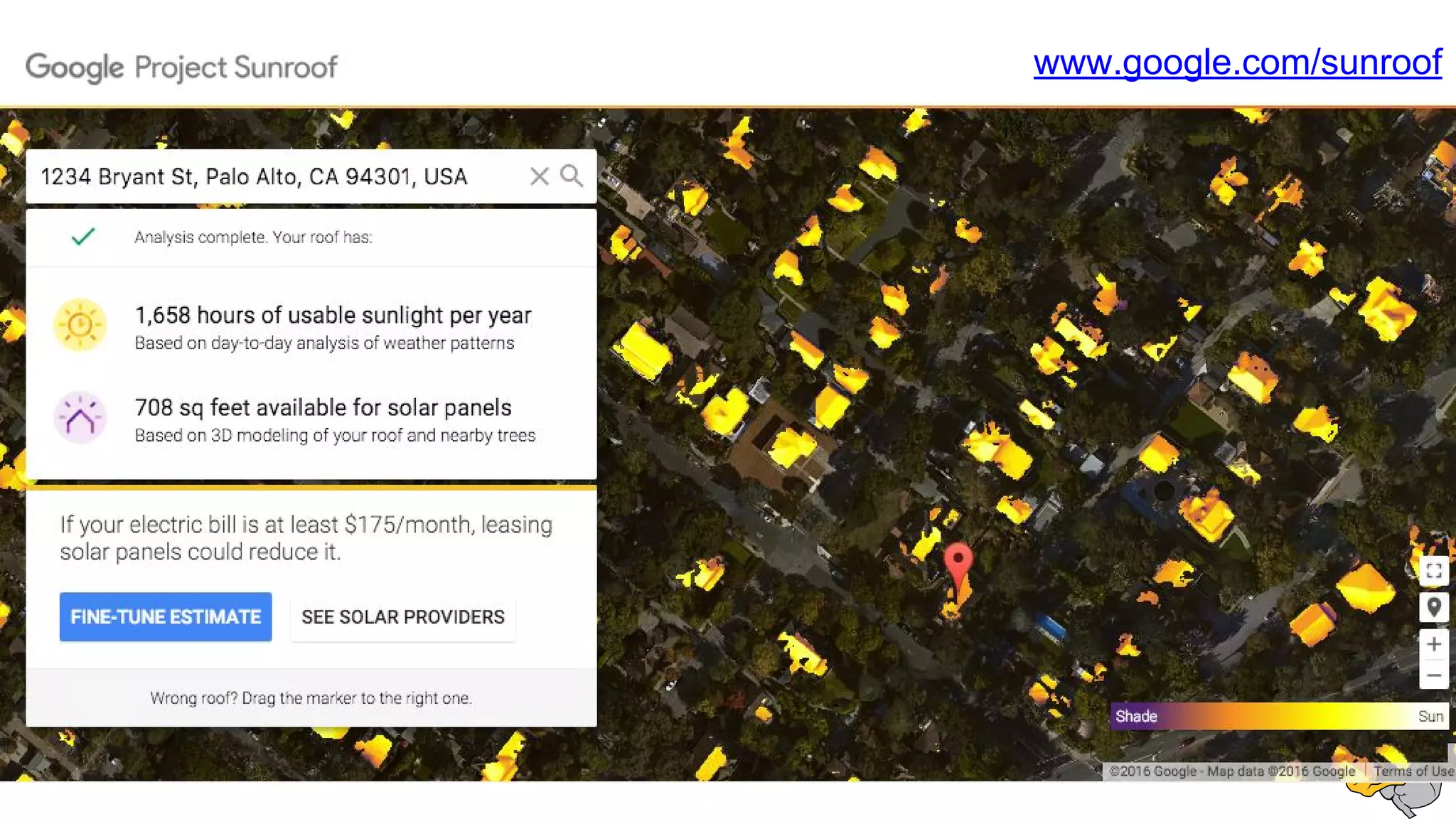

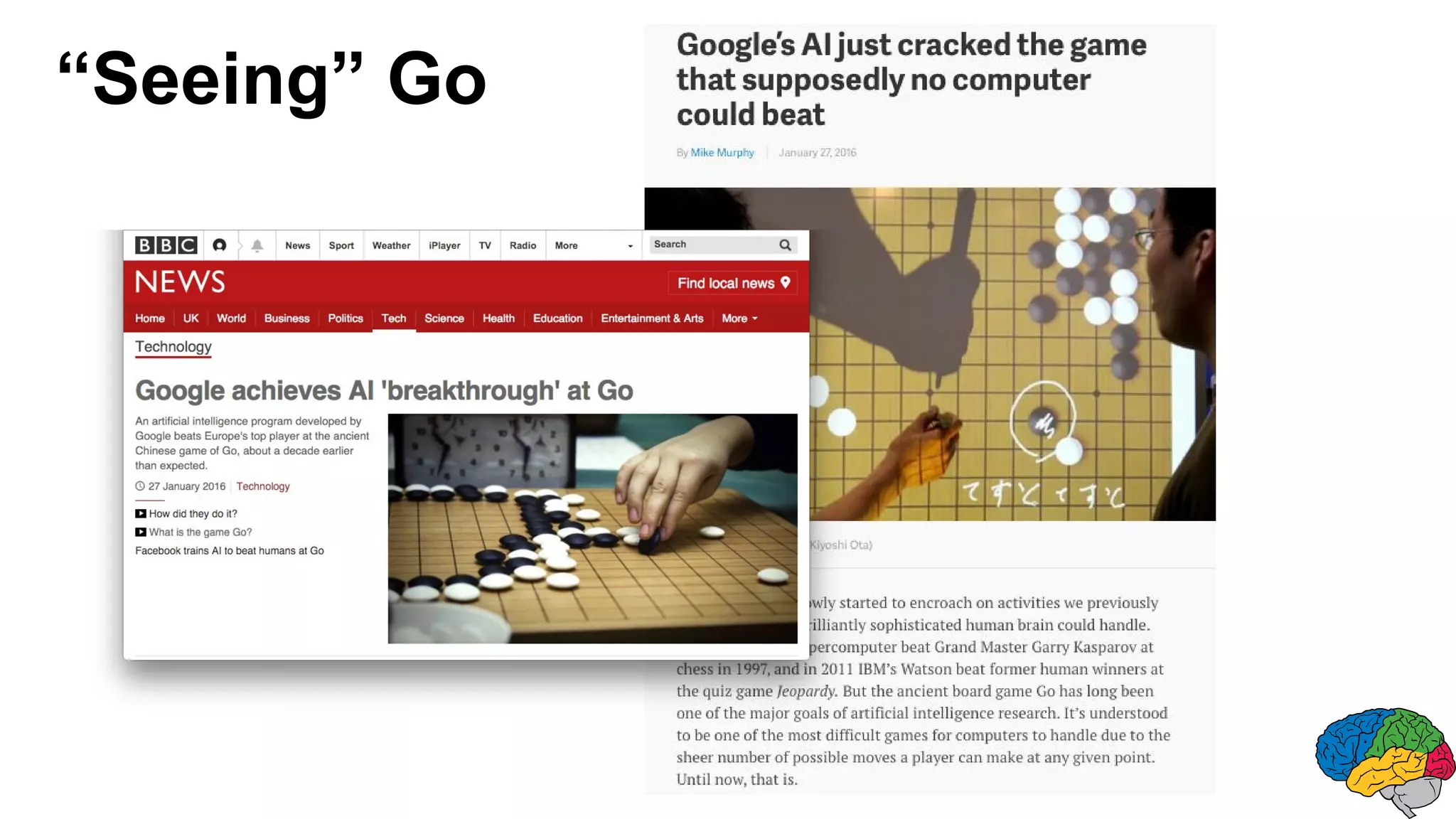

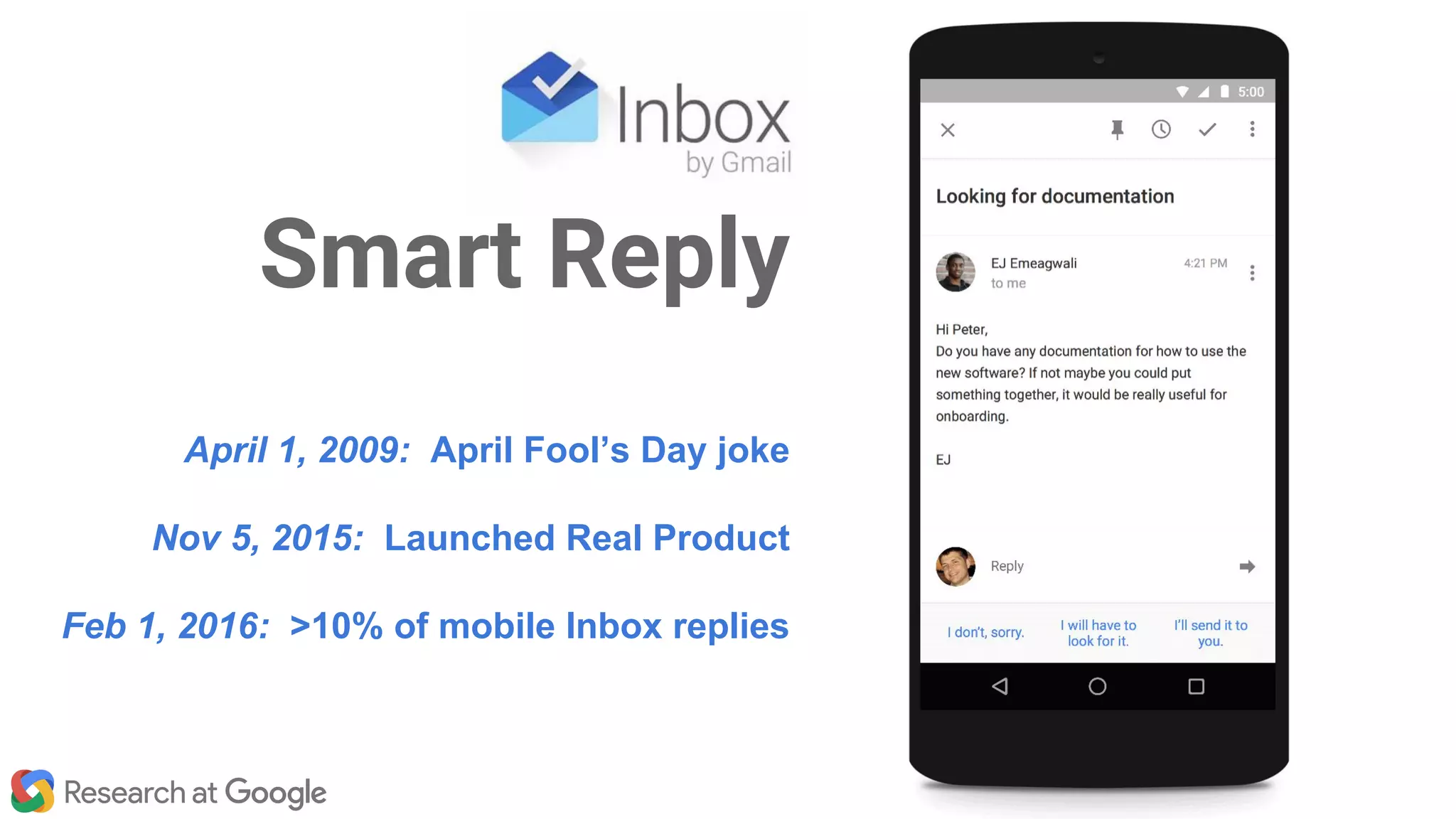

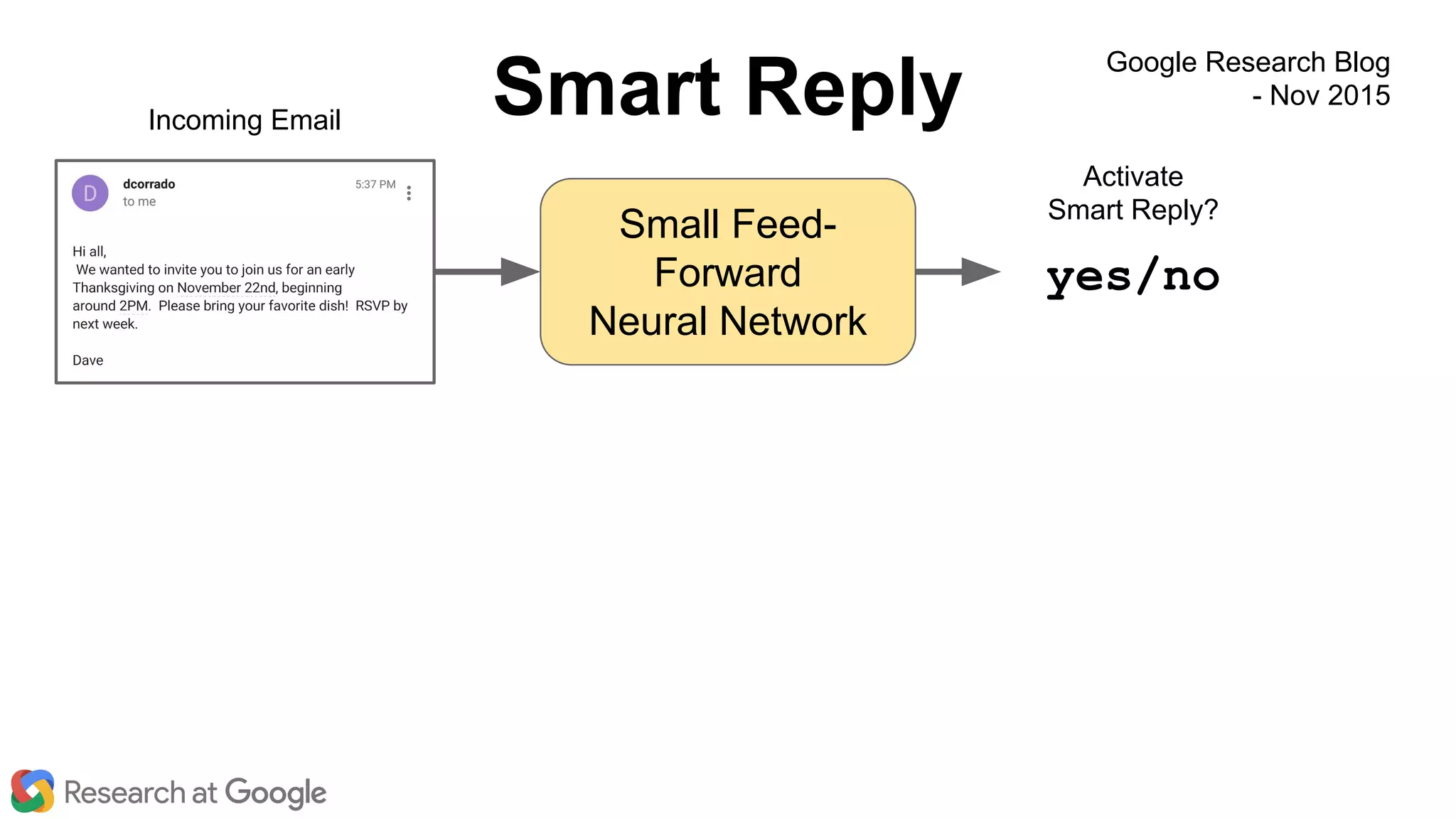

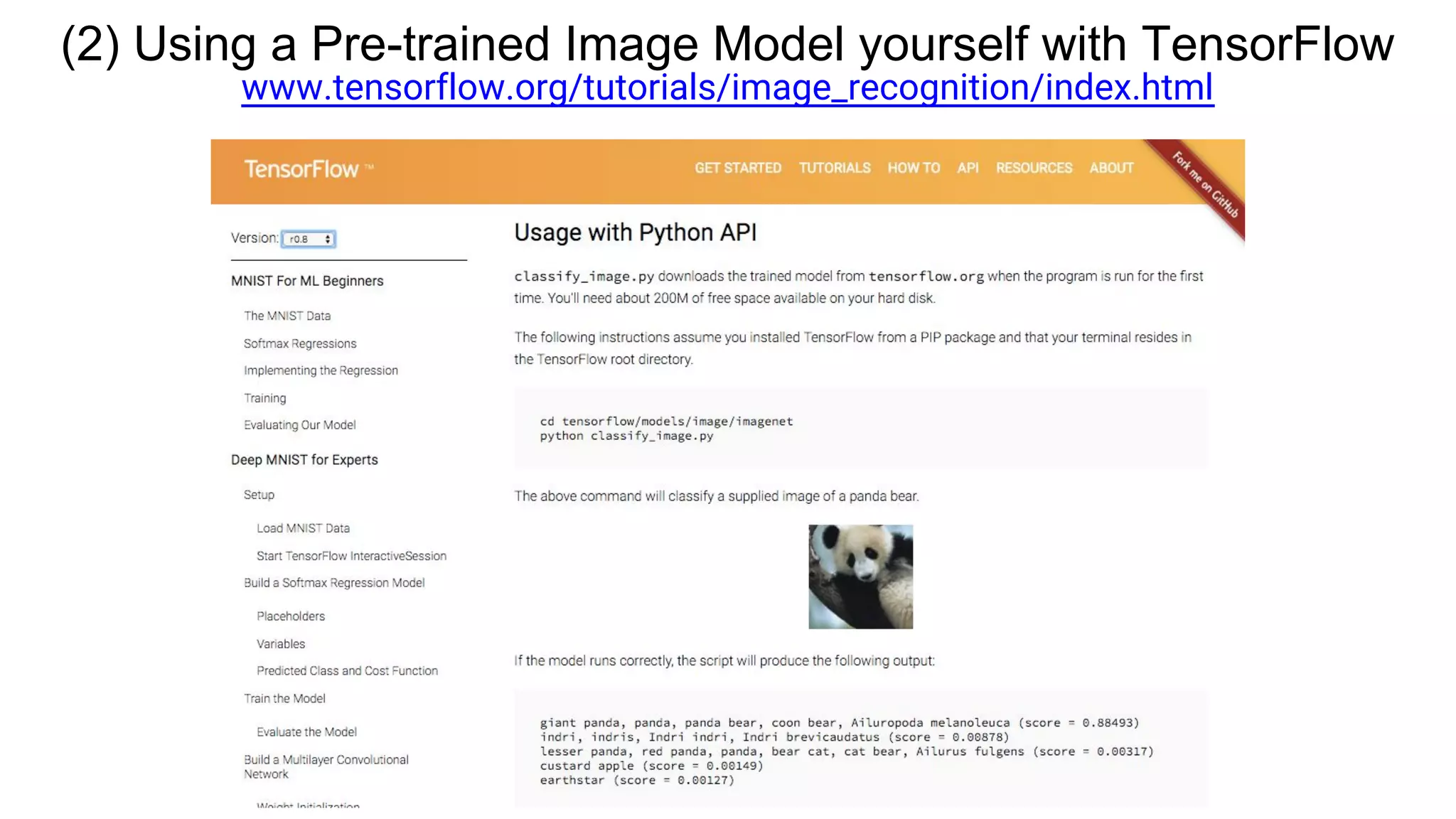

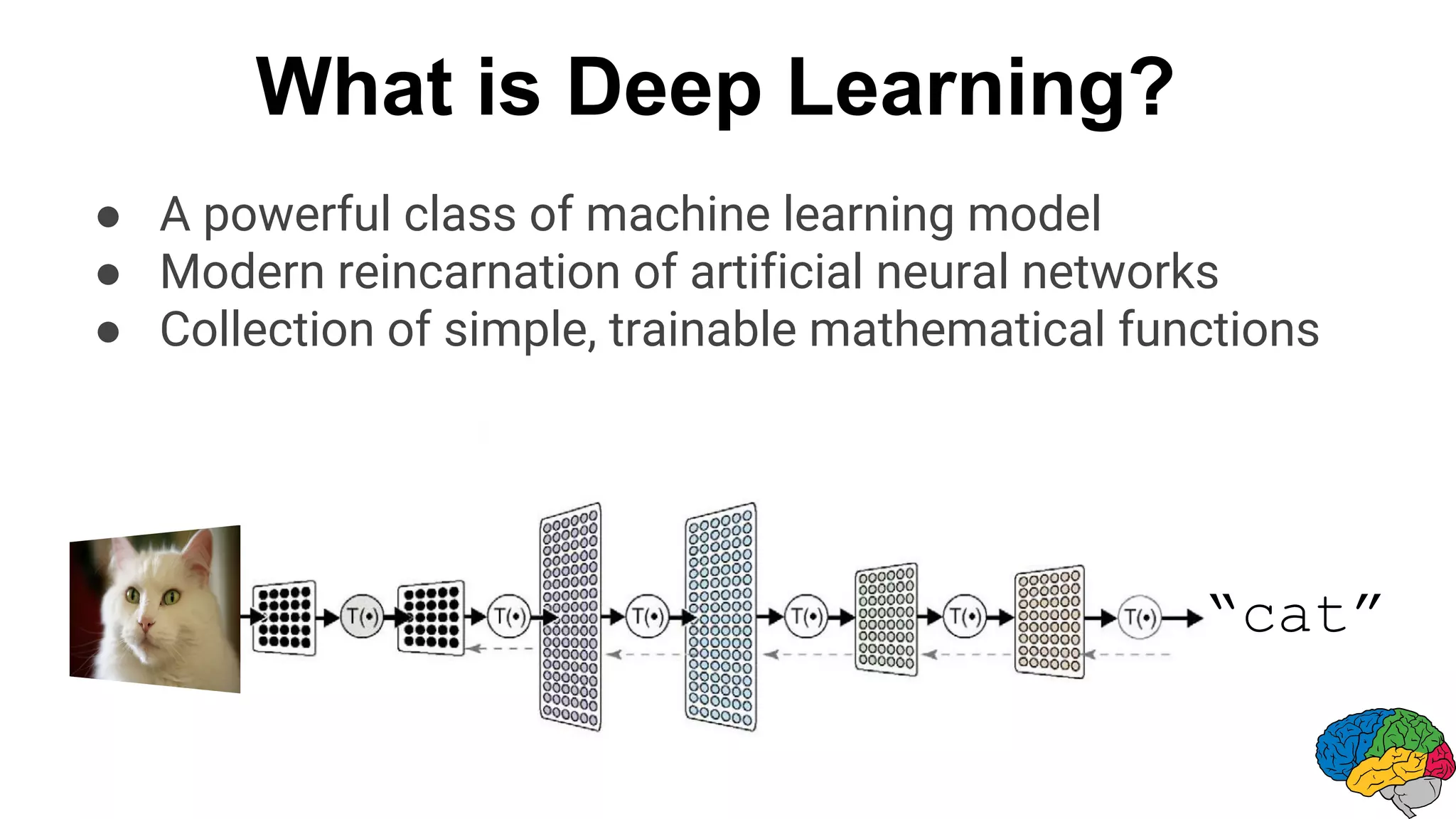

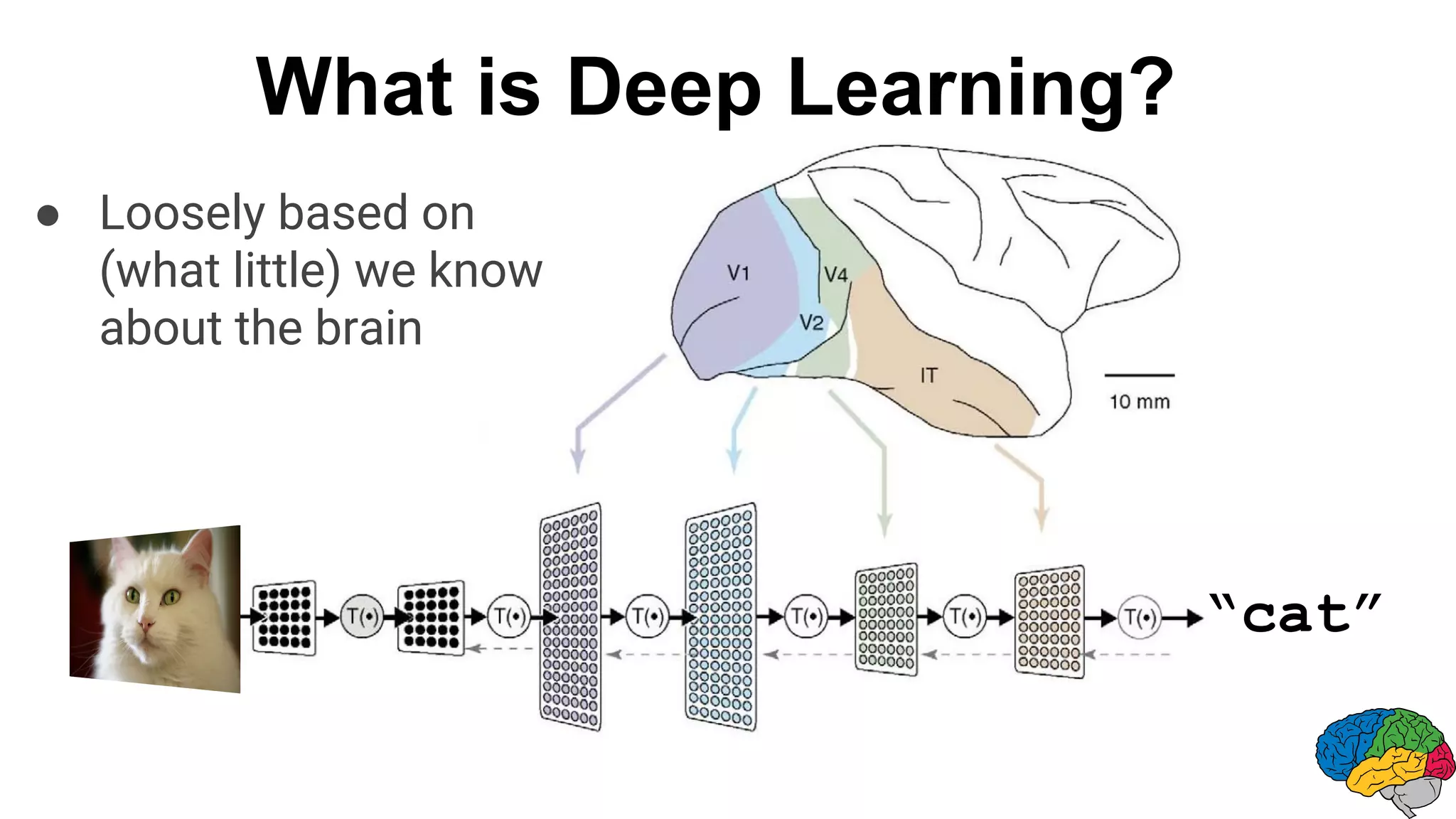

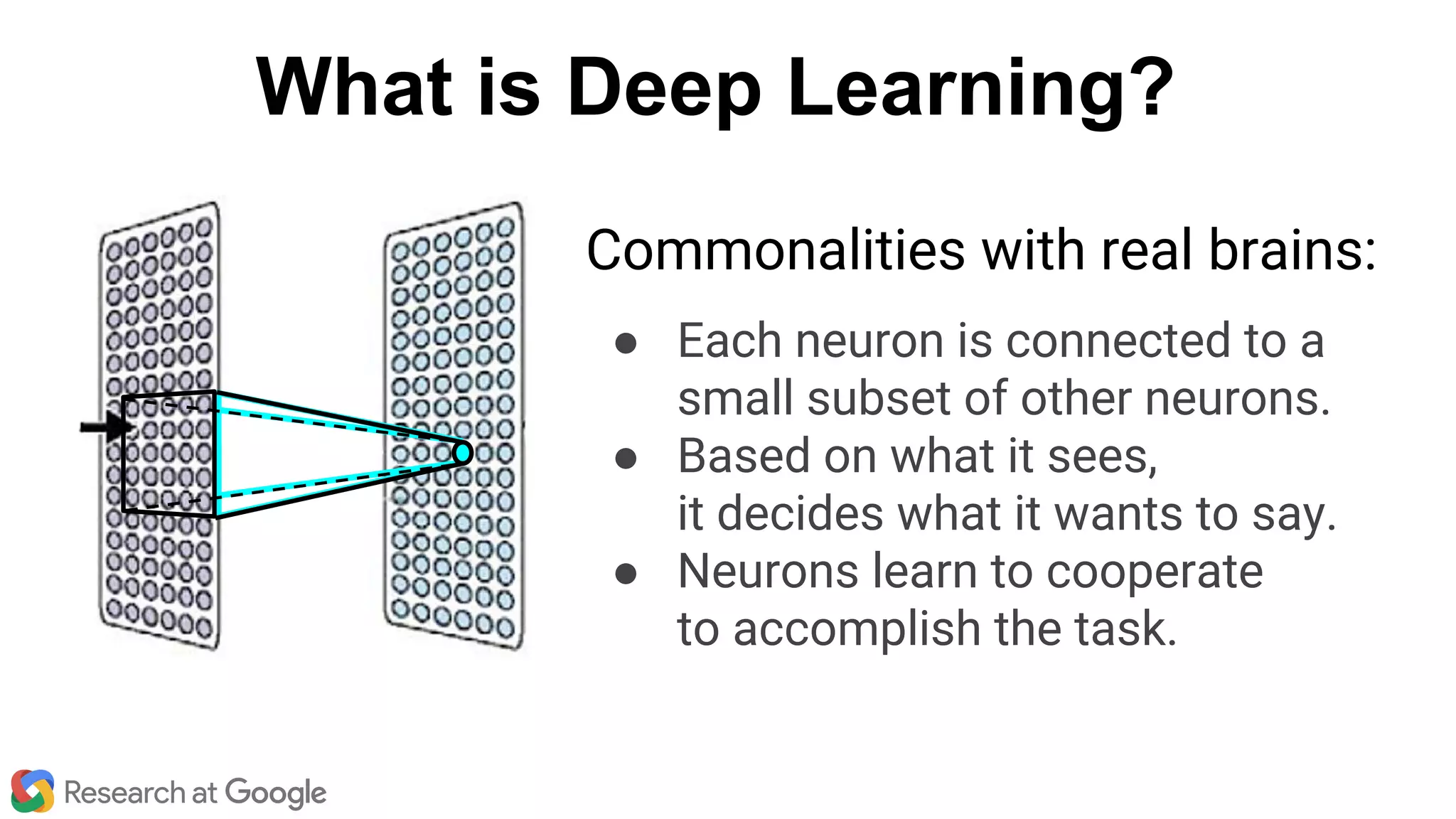

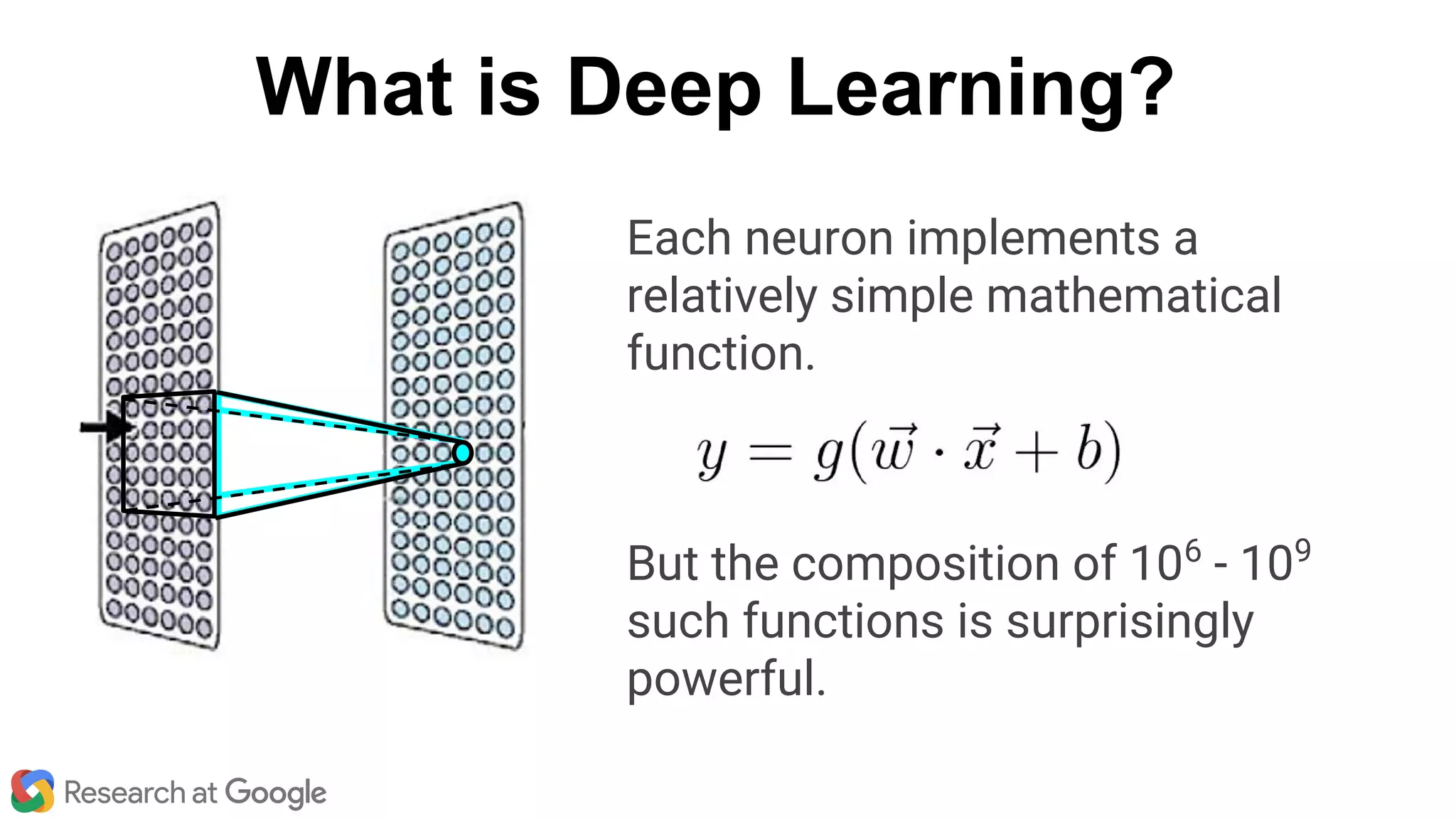

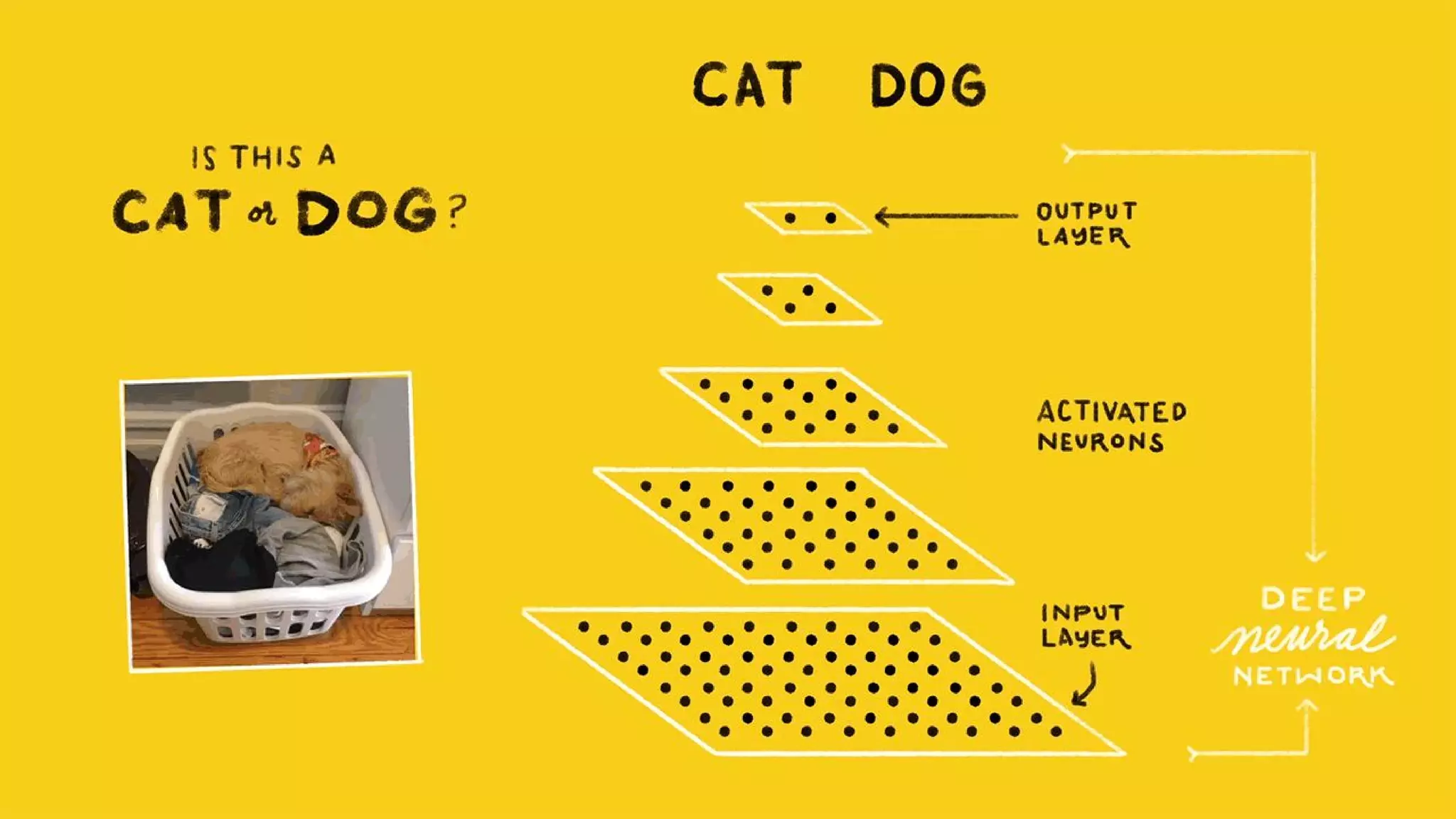

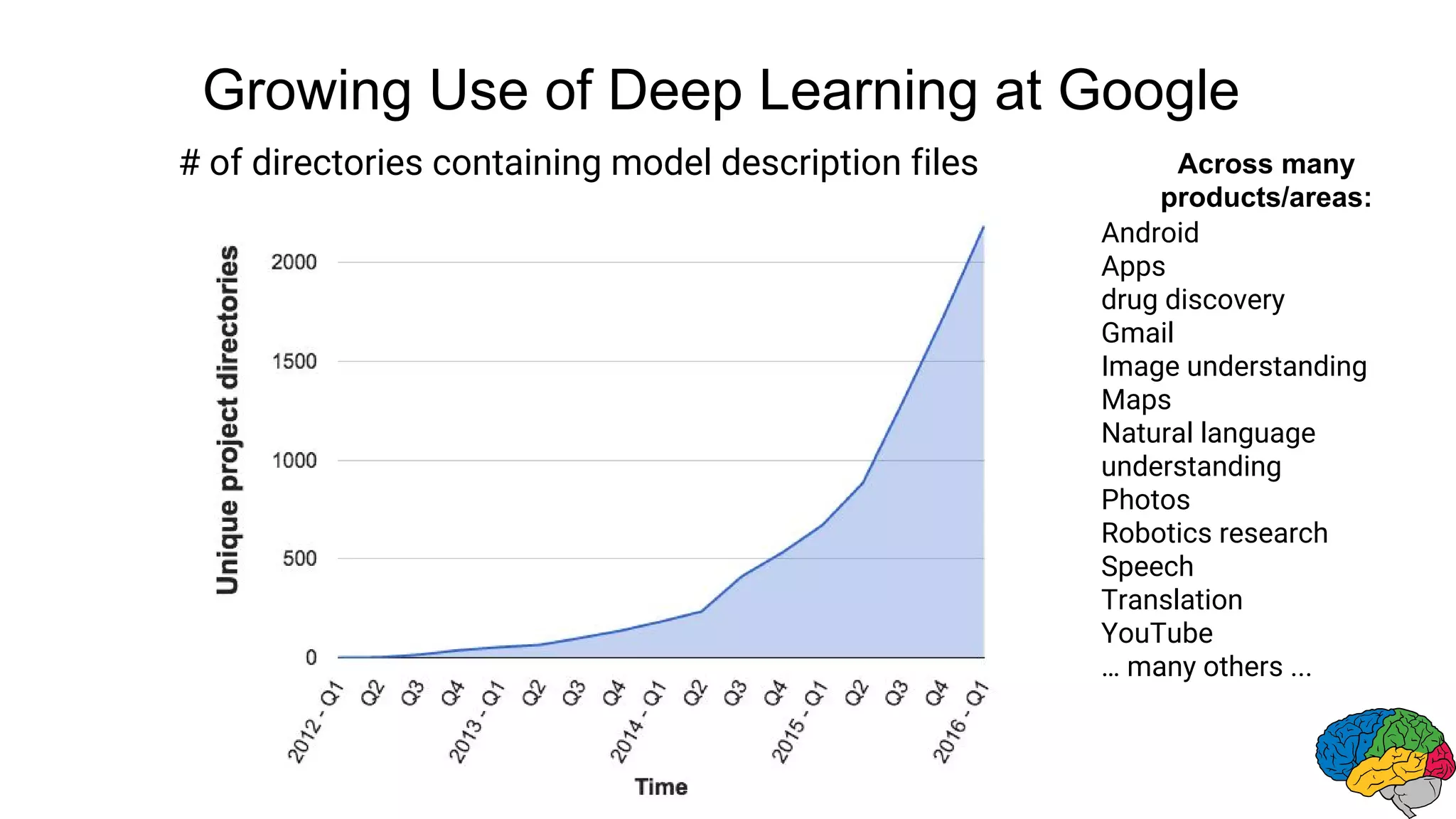

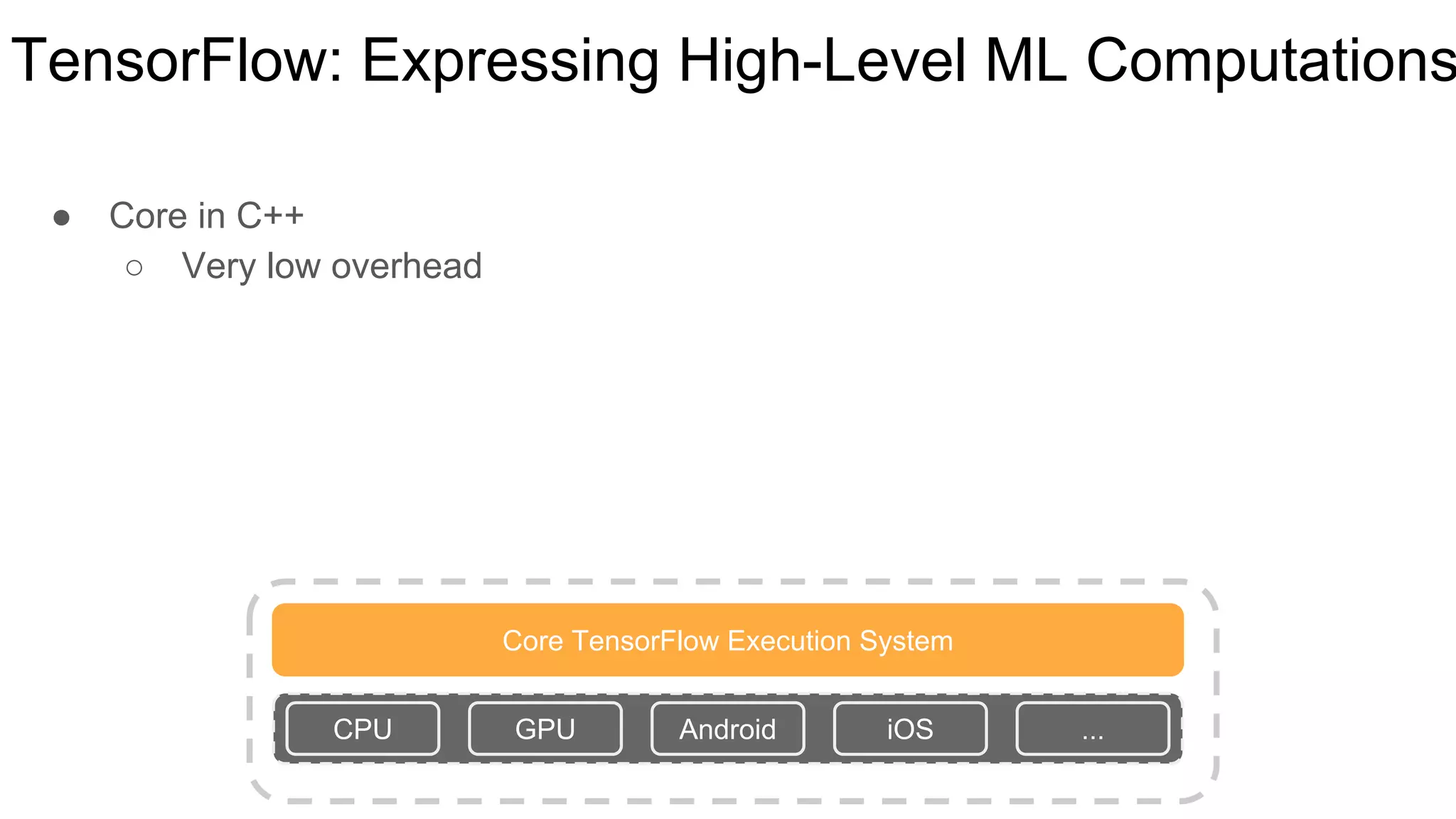

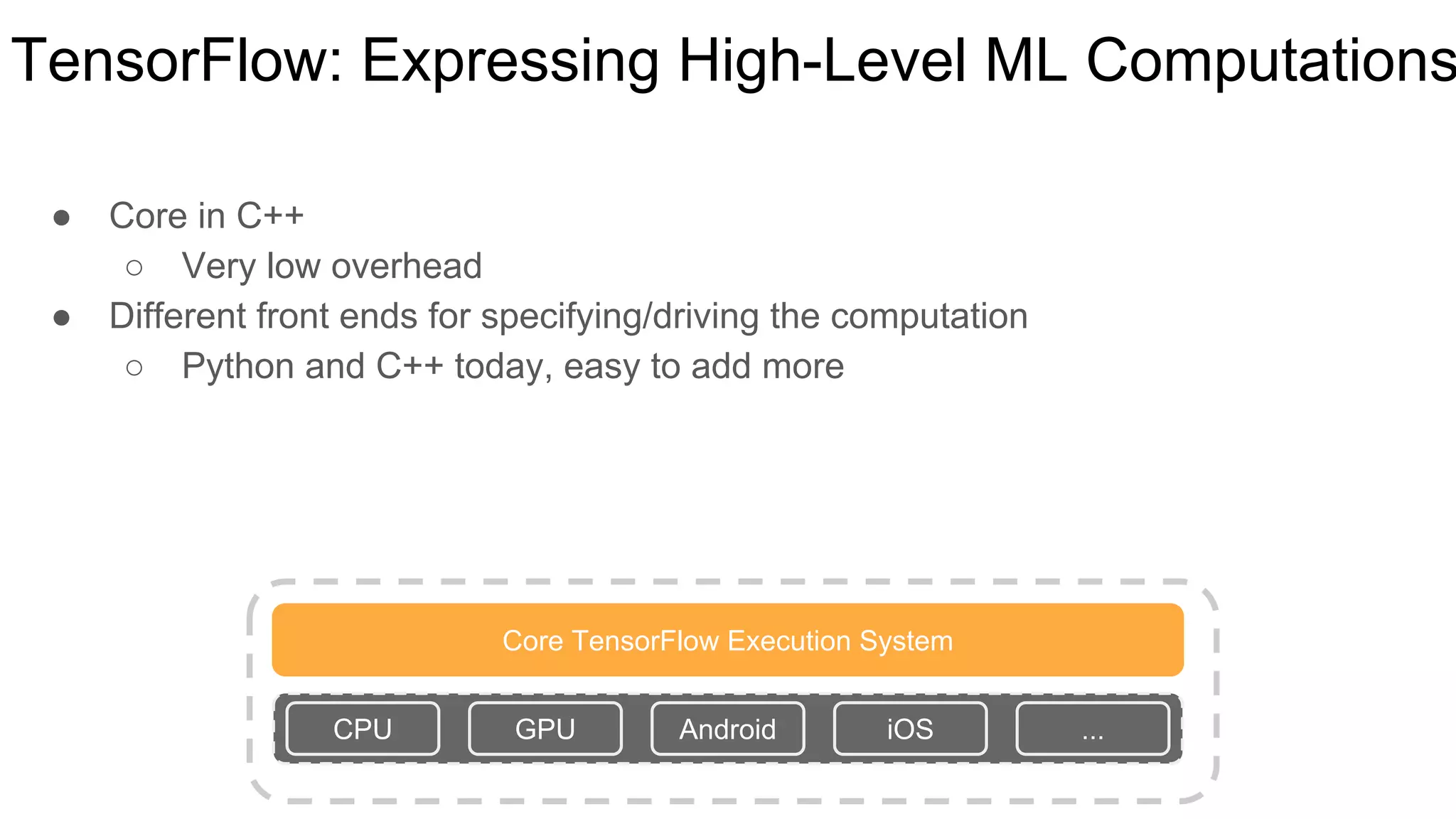

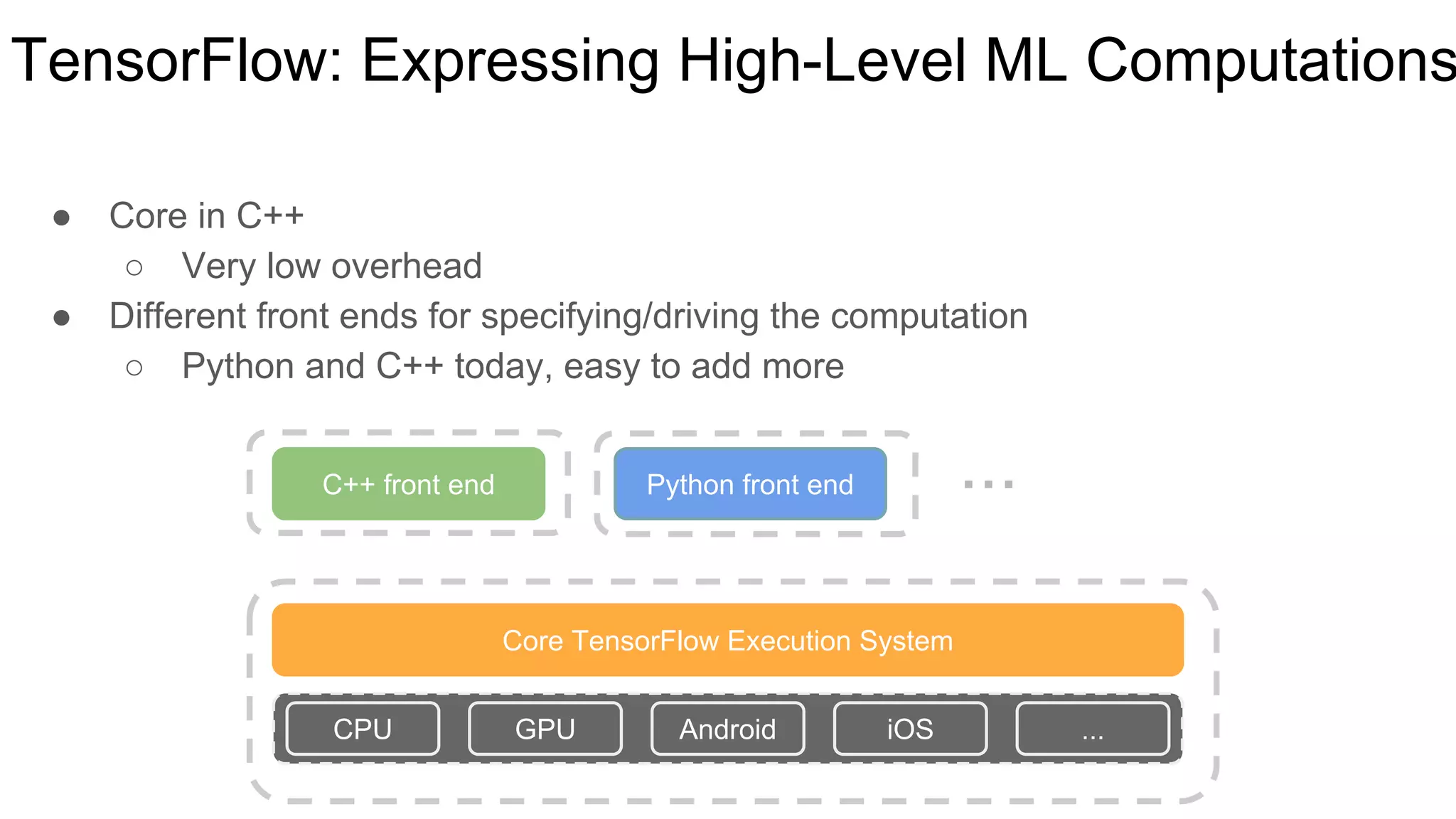

Large-scale deep learning with TensorFlow means storing and computing over large datasets in order to build computer systems that can understand data. Deep learning models such as neural networks are loosely based on what is known about the brain, and they become more powerful with more data, larger models, and more computation. At Google, deep learning is applied across many products and areas, including speech recognition, image understanding, and machine translation. TensorFlow is an open-source software library for machine learning that has been widely adopted both inside Google and externally.

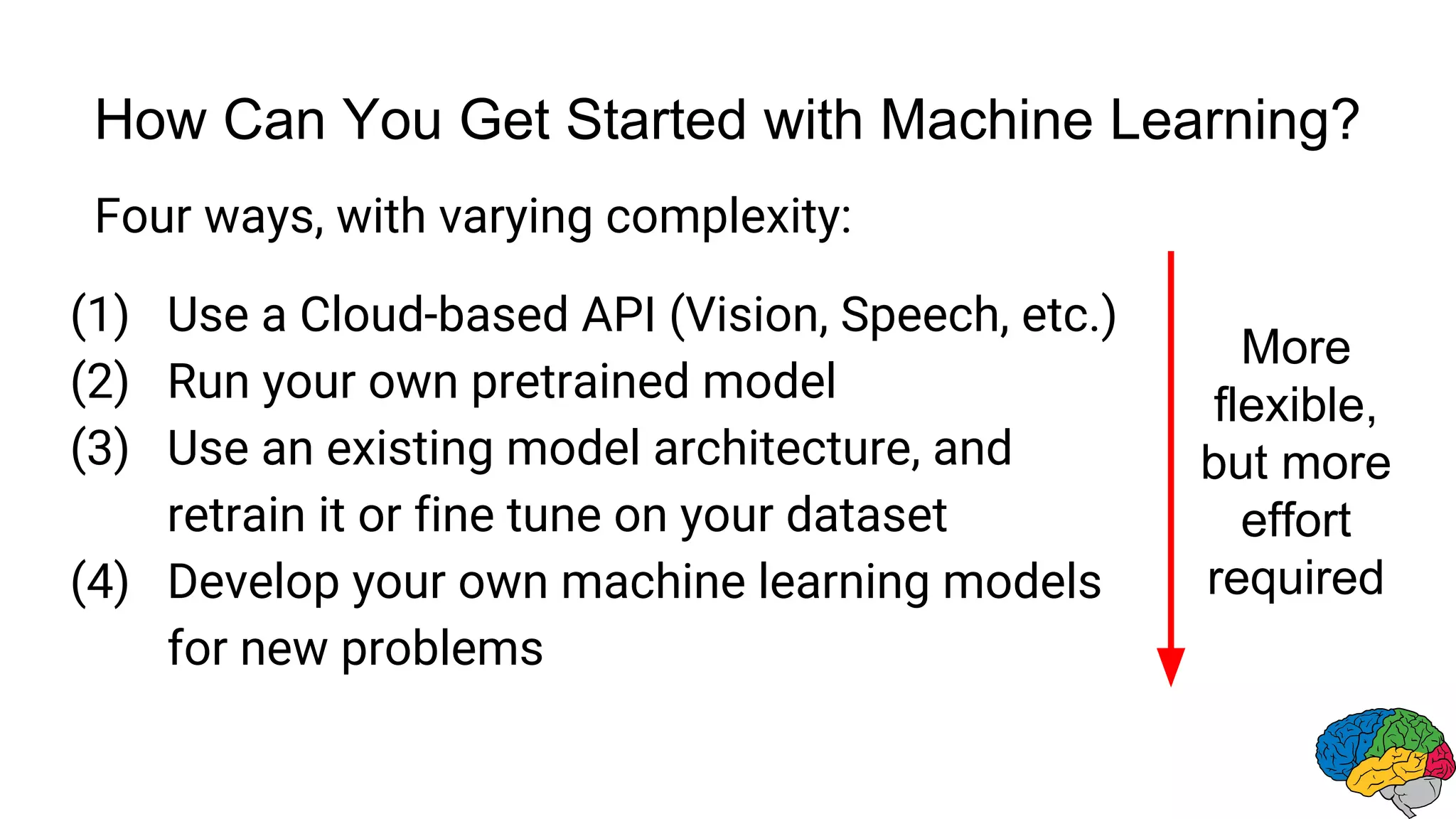

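![Example TensorFlow fragment](https://image.slidesharecdn.com/k2jeffdean-160609173832/75/Large-Scale-Deep-Learning-with-TensorFlow-23-2048.jpg)

- Build a graph computing a neural net inference.

```python
import tensorflow as tf  # TensorFlow 1.x graph-mode API
from tensorflow.examples.tutorials.mnist import input_data

# Load MNIST with one-hot labels.
mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

# Input placeholder: any number of flattened 28x28 images (784 pixels each).
x = tf.placeholder("float", shape=[None, 784])

# Model parameters, initialized to zero.
W = tf.Variable(tf.zeros([784, 10]))
b = tf.Variable(tf.zeros([10]))

# Predicted class probabilities for the 10 digit classes.
y = tf.nn.softmax(tf.matmul(x, W) + b)
```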
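To make the inference graph above concrete, here is a minimal NumPy sketch of the value that `y` takes once a batch is fed in for `x`. It is not TensorFlow code and does not come from the slides: the helper names `softmax` and `infer` are hypothetical, and a random batch stands in for real MNIST images.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def infer(x, W, b):
    """Same arithmetic as tf.nn.softmax(tf.matmul(x, W) + b)."""
    return softmax(x @ W + b)

# Shapes mirror the slide: 784 input pixels, 10 output classes.
W = np.zeros((784, 10))
b = np.zeros(10)

# Hypothetical stand-in for a batch of 5 flattened 28x28 images.
rng = np.random.default_rng(0)
x = rng.random((5, 784))

y = infer(x, W, b)
print(y.shape)  # (5, 10)
```

Because `W` and `b` start at zero, every logit is zero and each of the 10 classes receives probability 0.1 until training updates the parameters.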

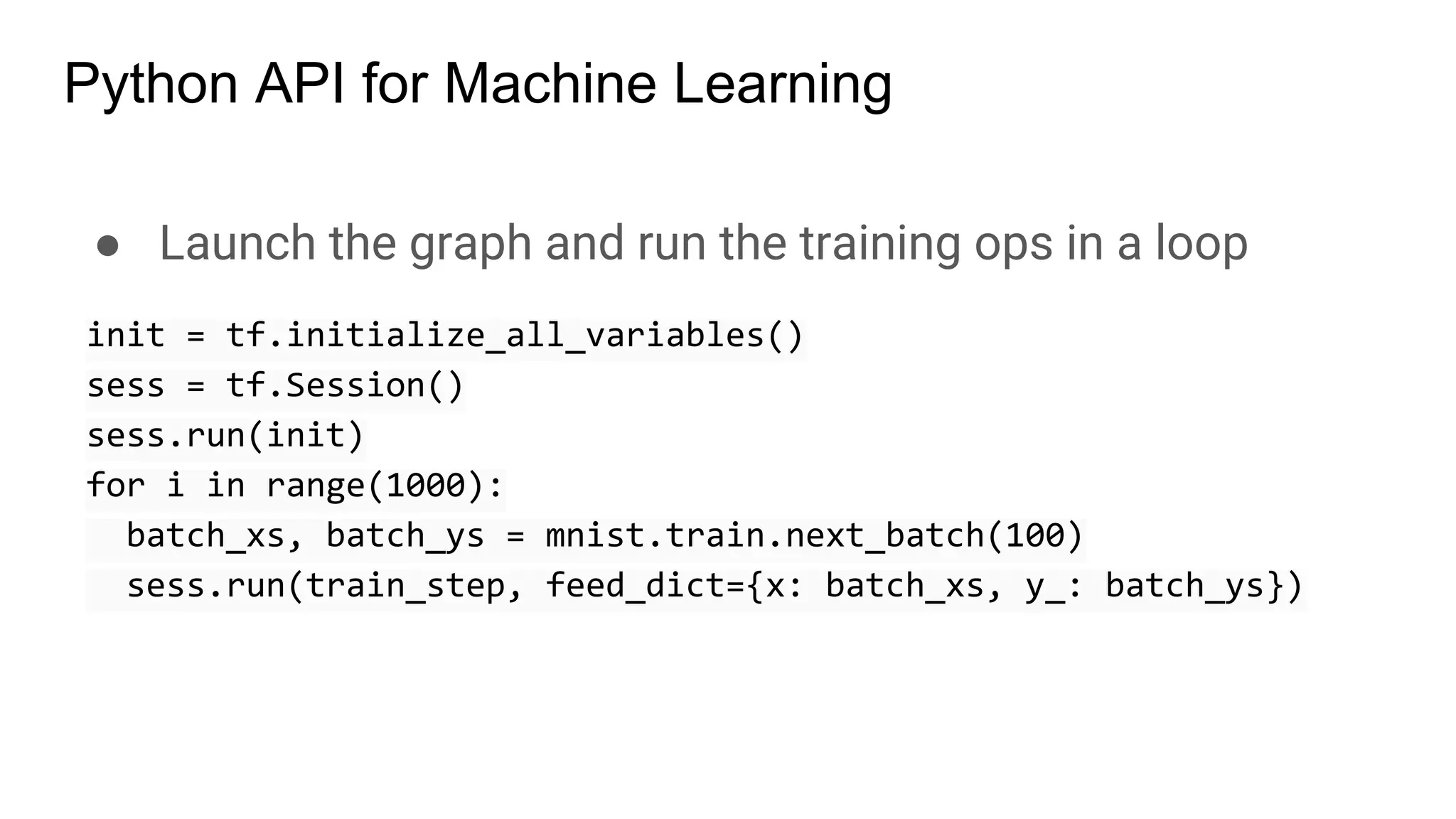

![Python API for Machine Learning](https://image.slidesharecdn.com/k2jeffdean-160609173832/75/Large-Scale-Deep-Learning-with-TensorFlow-24-2048.jpg)

- Automatically add ops to calculate symbolic gradients of variables w.r.t. loss function.
- Apply these gradients with an optimization algorithm.

```python
# Placeholder for the true one-hot labels.
y_ = tf.placeholder(tf.float32, [None, 10])

# Cross-entropy between the labels y_ and the predictions y.
cross_entropy = -tf.reduce_sum(y_ * tf.log(y))

# Gradient descent with learning rate 0.01; minimize() adds the gradient and update ops.
opt = tf.train.GradientDescentOptimizer(0.01)
train_op = opt.minimize(cross_entropy)
```
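The two steps on this slide, computing gradients of the loss and applying them with gradient descent, can be sketched by hand in NumPy. This is an illustration under stated assumptions, not what TensorFlow does internally: TensorFlow derives the gradient ops symbolically, whereas here the gradient is written out manually. The toy problem (20 random samples, 4 features, 3 classes) and the names `cross_entropy` and `train_step` are hypothetical; only the summed cross-entropy loss and the 0.01 learning rate come from the slide.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(y, y_true):
    """Summed cross-entropy, matching -tf.reduce_sum(y_ * tf.log(y))."""
    return -np.sum(y_true * np.log(y))

def train_step(x, y_true, W, b, learning_rate=0.01):
    """One gradient-descent update; returns new W, new b, and the loss before the update."""
    y = softmax(x @ W + b)
    loss = cross_entropy(y, y_true)
    # For softmax followed by summed cross-entropy, d(loss)/d(logits) = y - y_true.
    grad_logits = y - y_true
    grad_W = x.T @ grad_logits
    grad_b = grad_logits.sum(axis=0)
    return W - learning_rate * grad_W, b - learning_rate * grad_b, loss

# Hypothetical toy data: 20 samples, 4 features, 3 classes with random labels.
rng = np.random.default_rng(0)
x = rng.standard_normal((20, 4))
labels = rng.integers(0, 3, size=20)
y_true = np.eye(3)[labels]  # one-hot encode the labels

W = np.zeros((4, 3))
b = np.zeros(3)

losses = []
for _ in range(100):
    W, b, loss = train_step(x, y_true, W, b)
    losses.append(loss)

print(losses[0], losses[-1])  # the loss should fall over the 100 steps
```

In the TensorFlow fragment, `opt.minimize(cross_entropy)` plays the role of `train_step`: it builds both the gradient computation and the parameter update, so the gradient formula never has to be written by hand.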