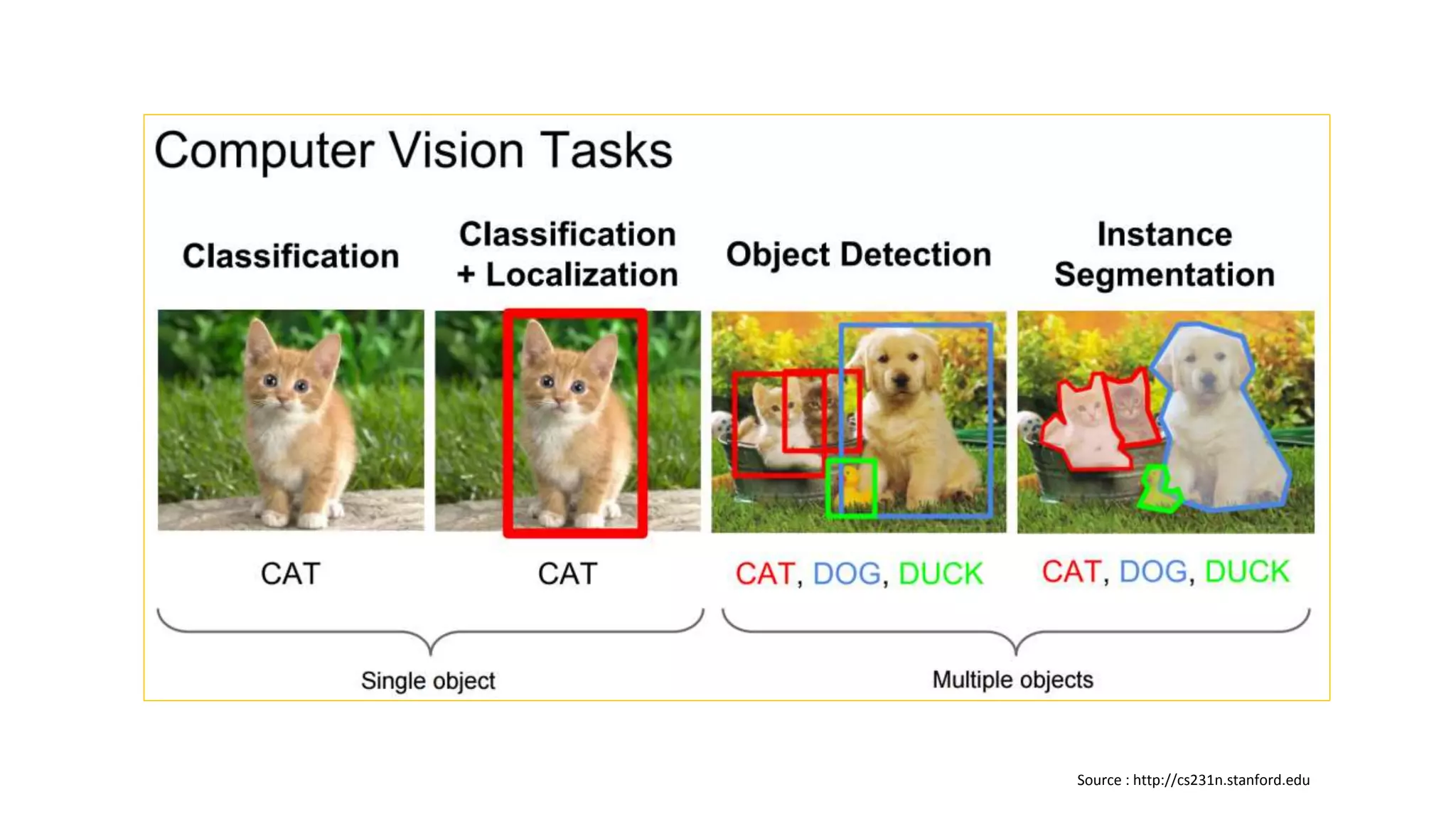

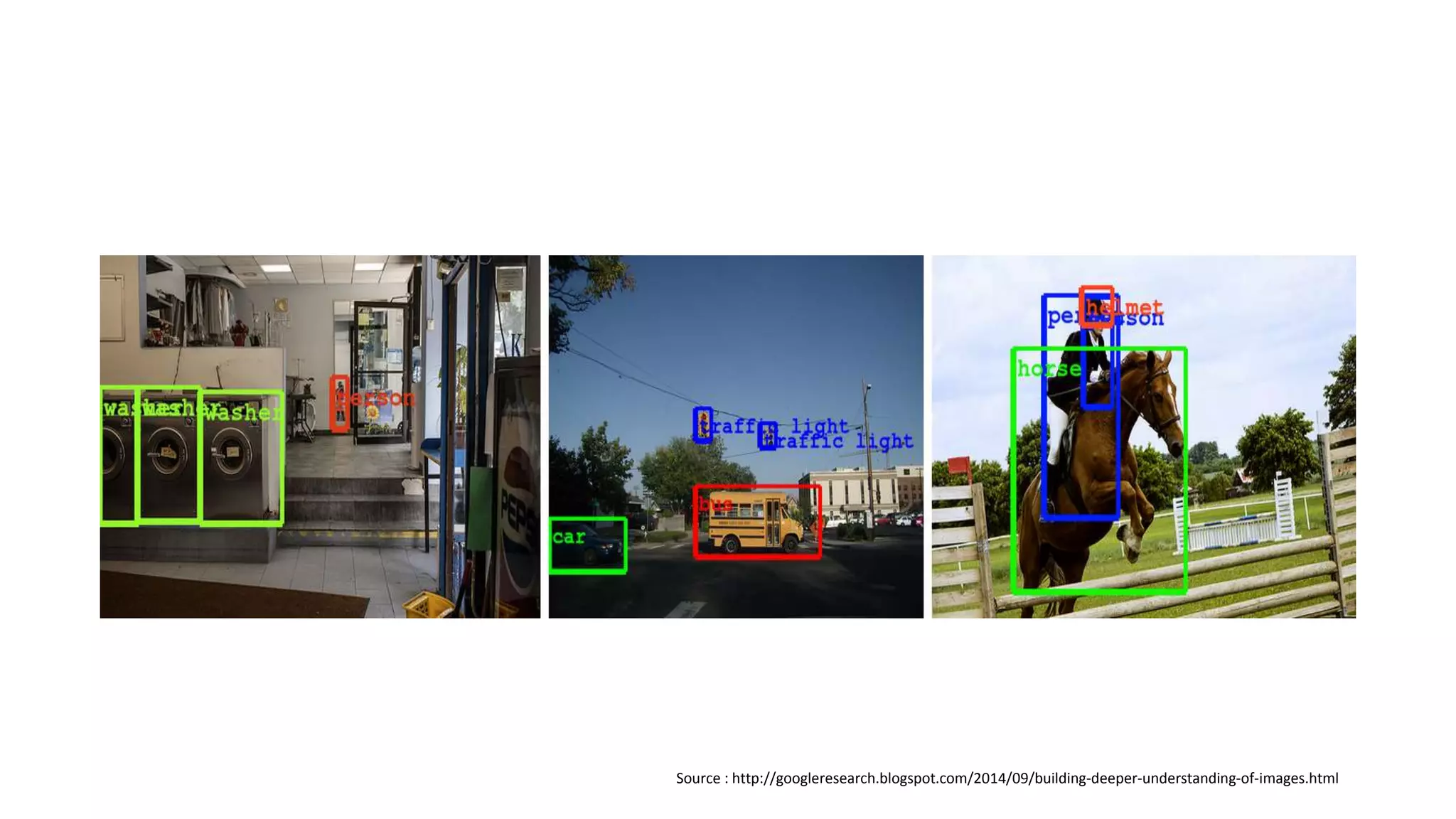

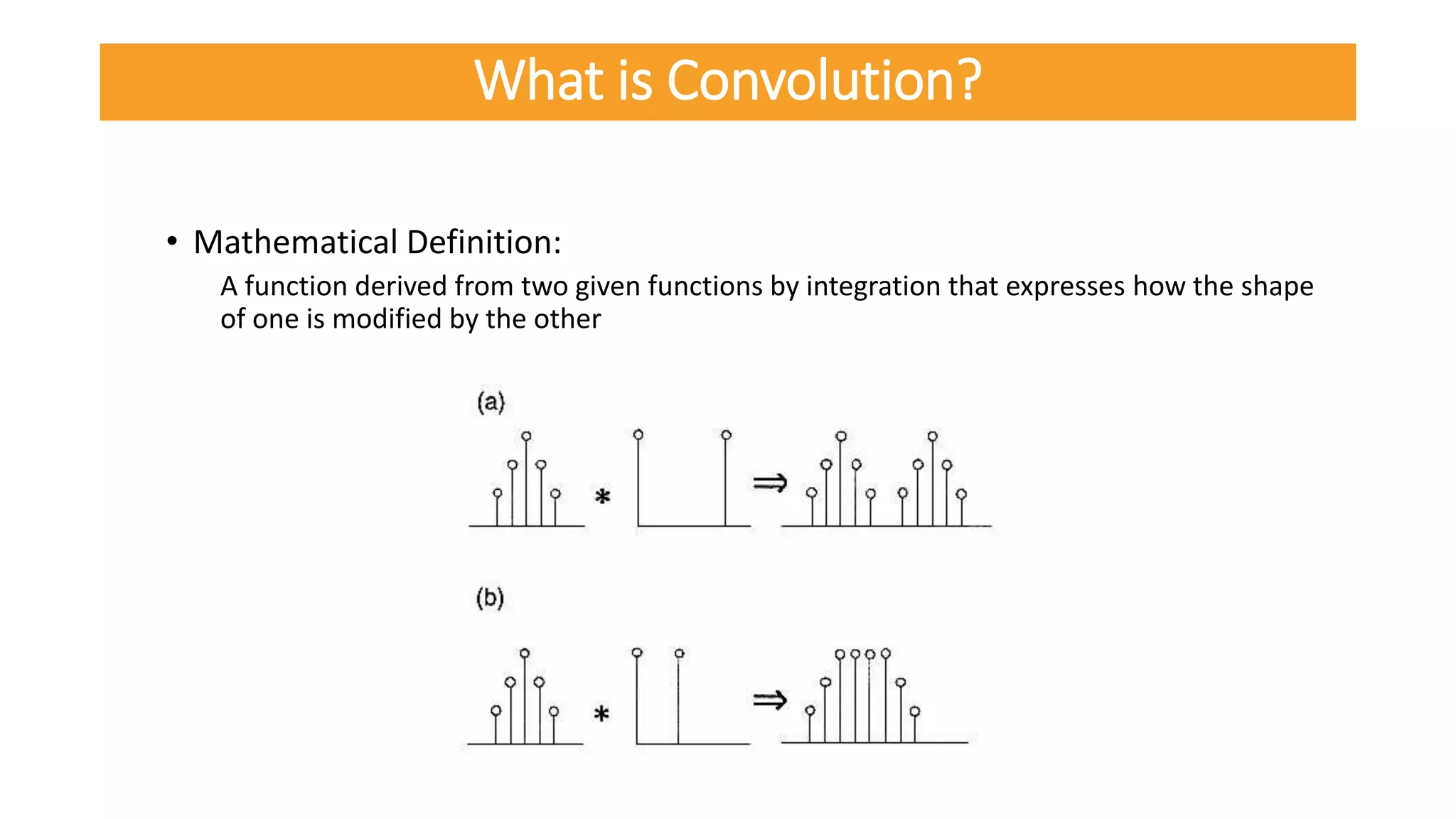

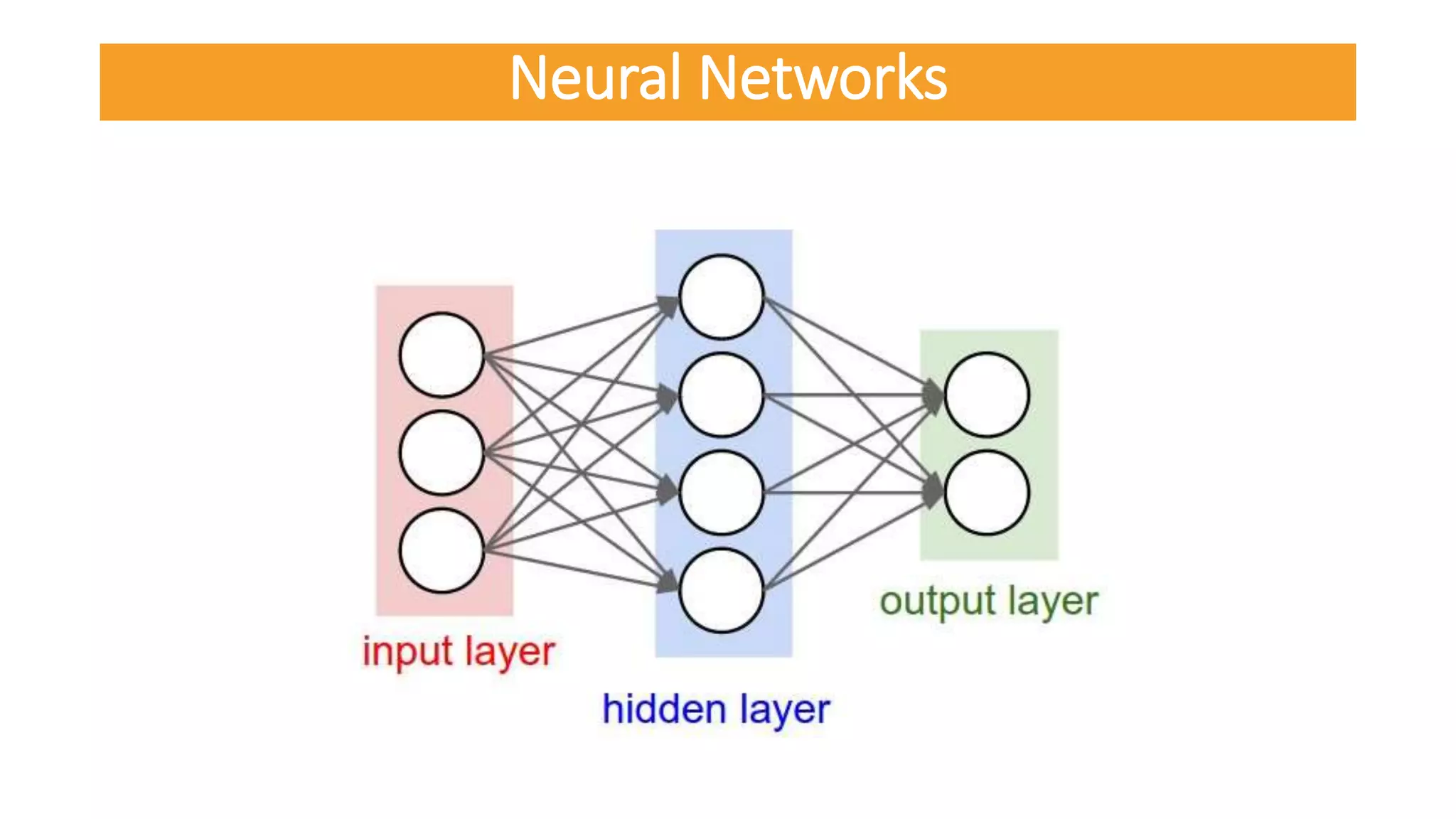

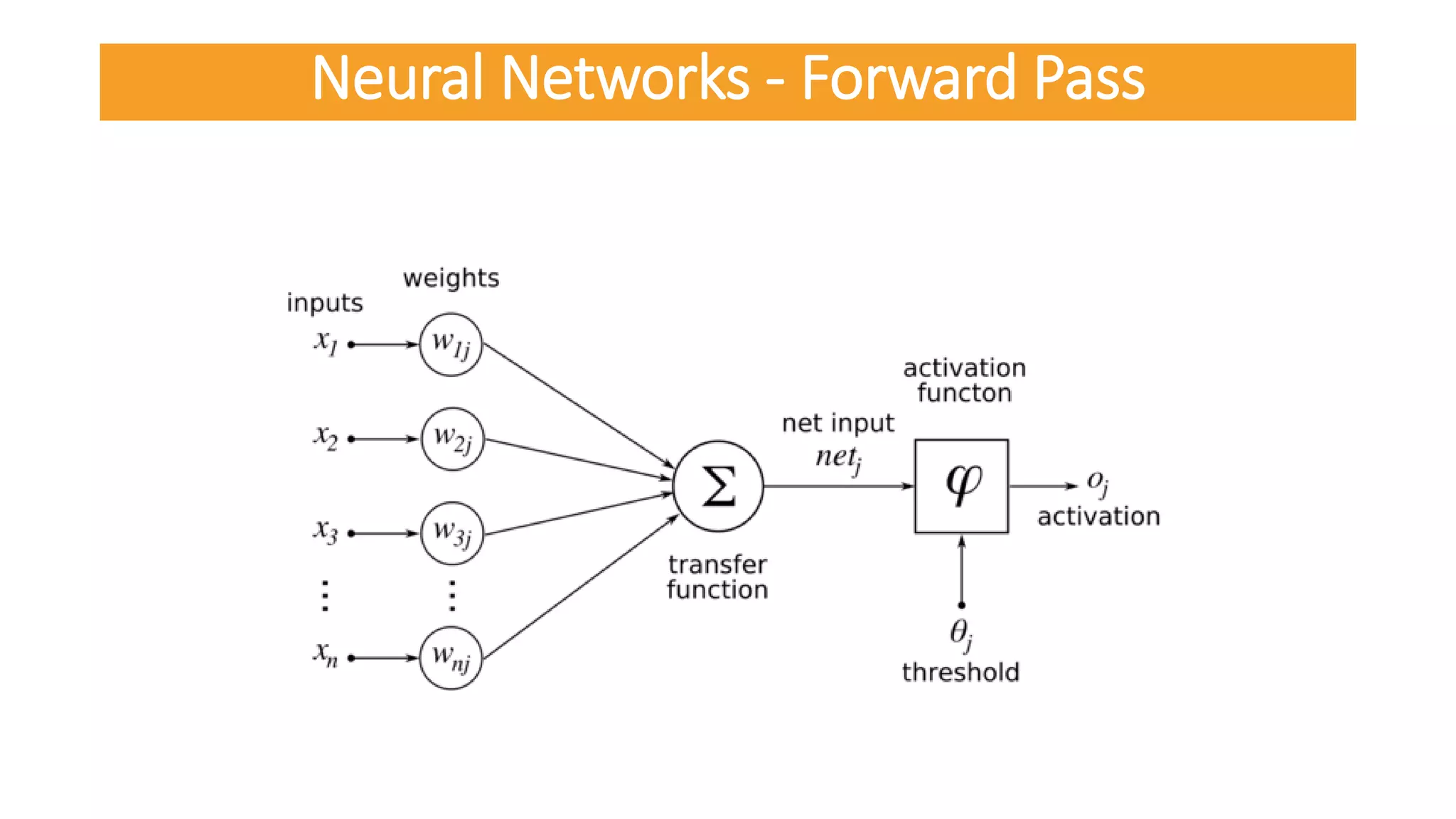

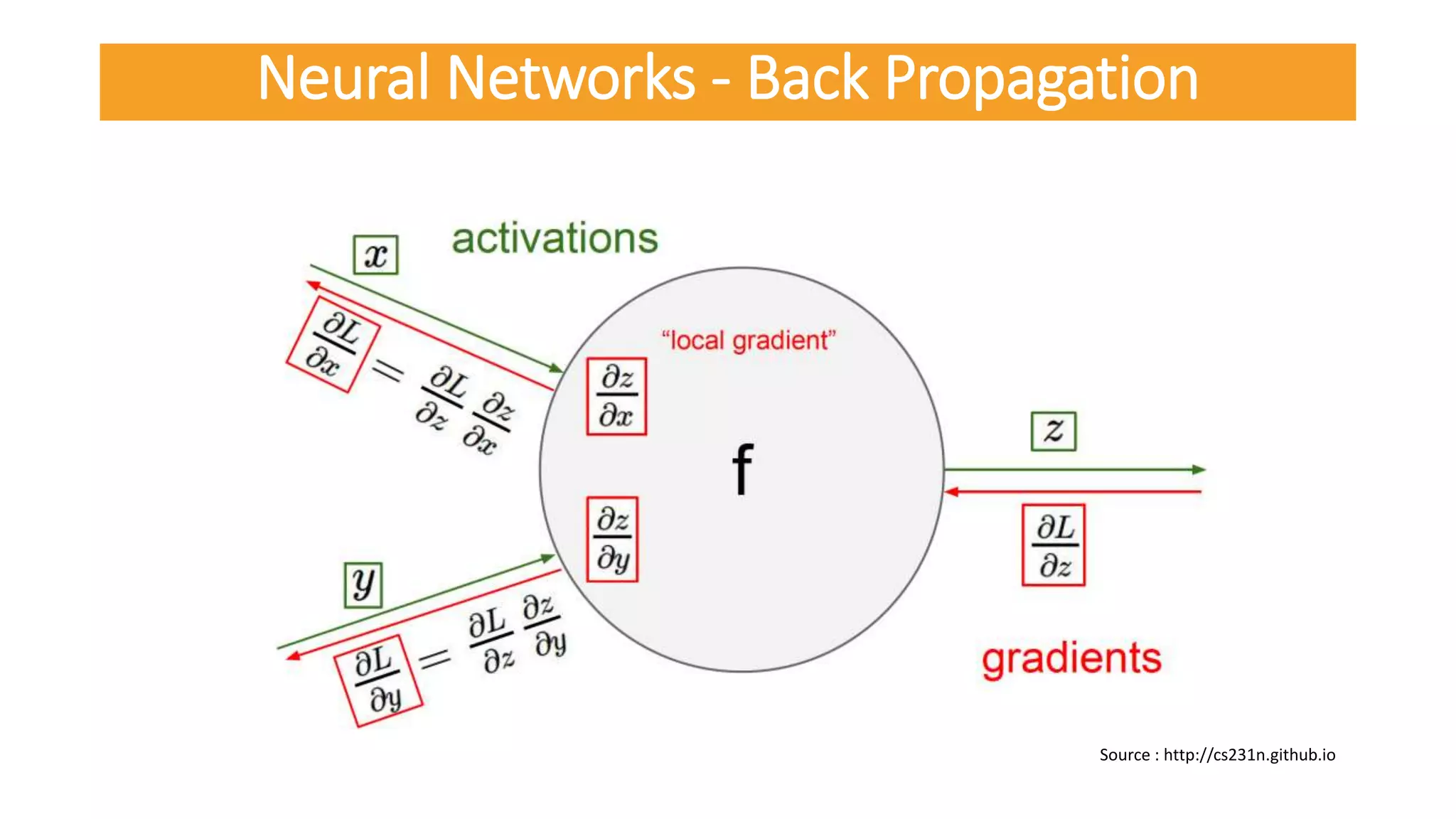

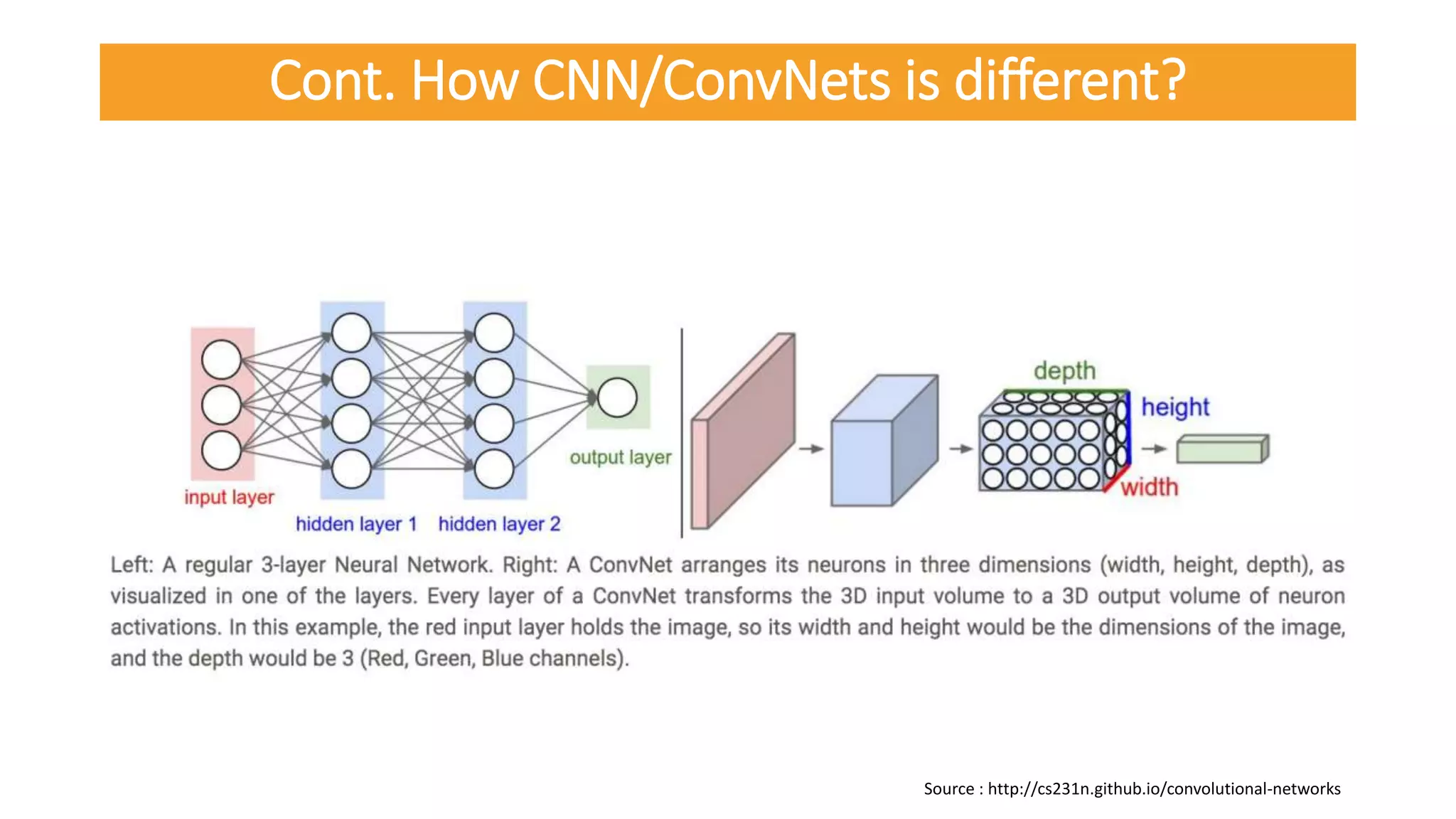

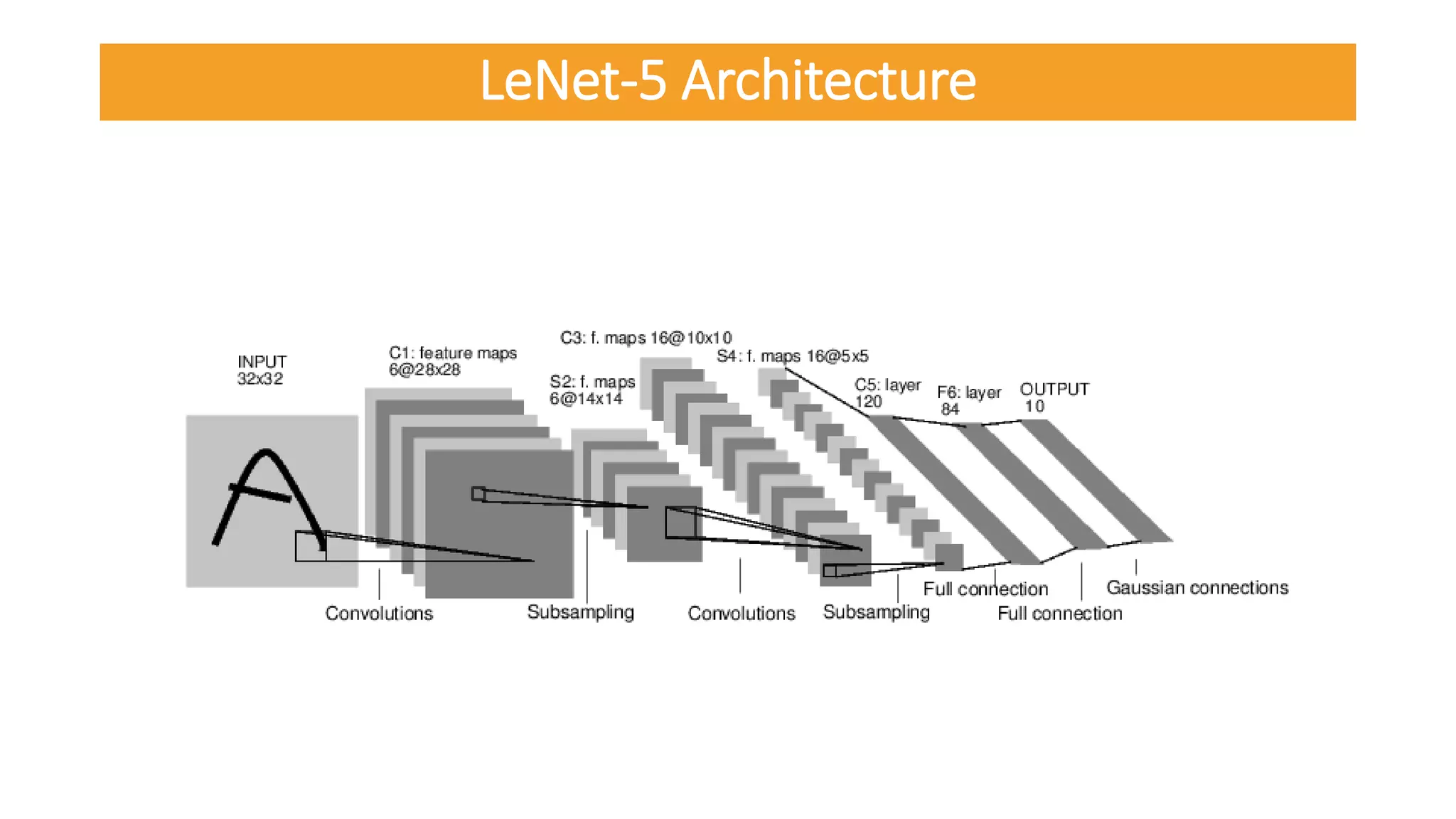

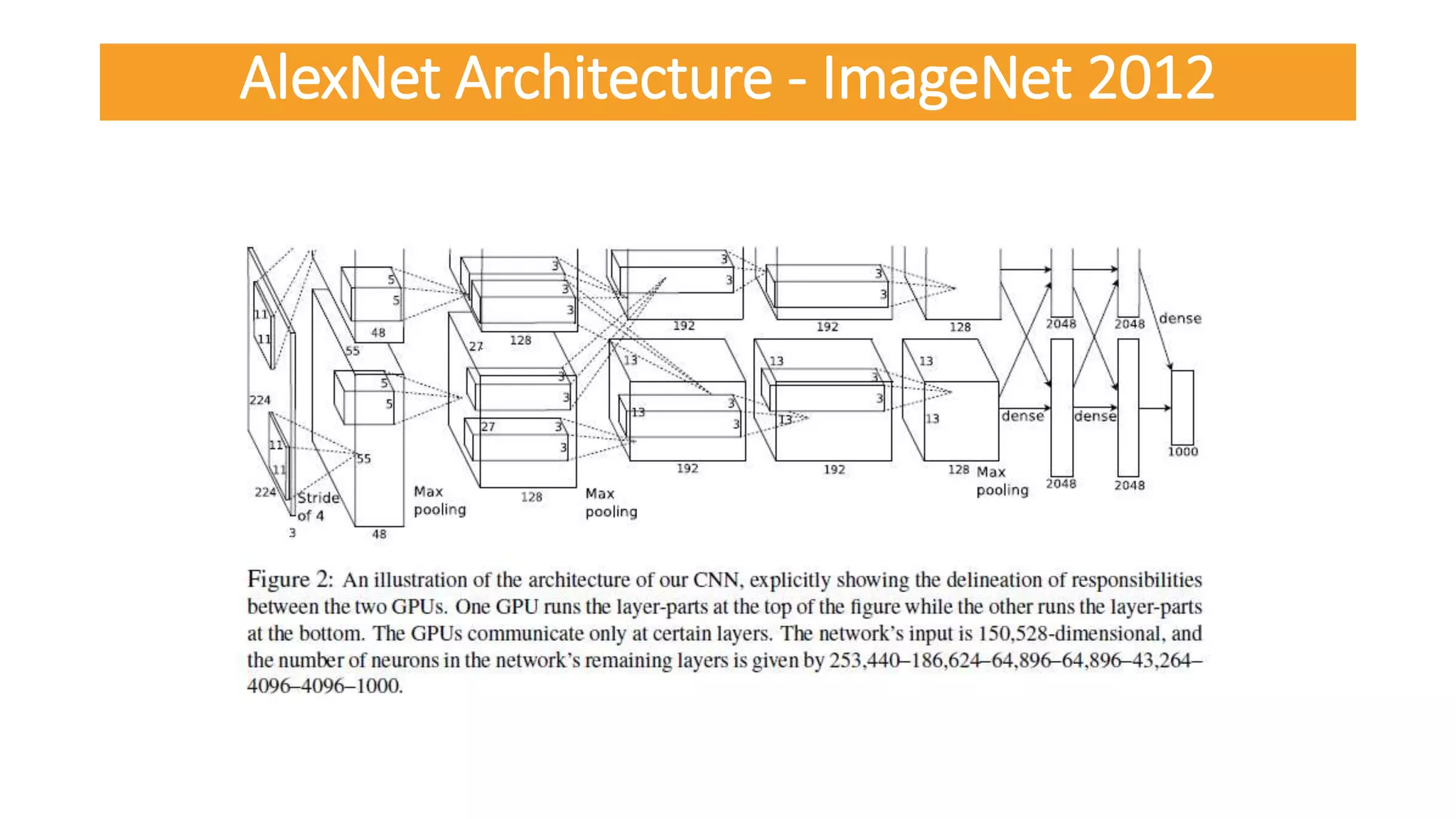

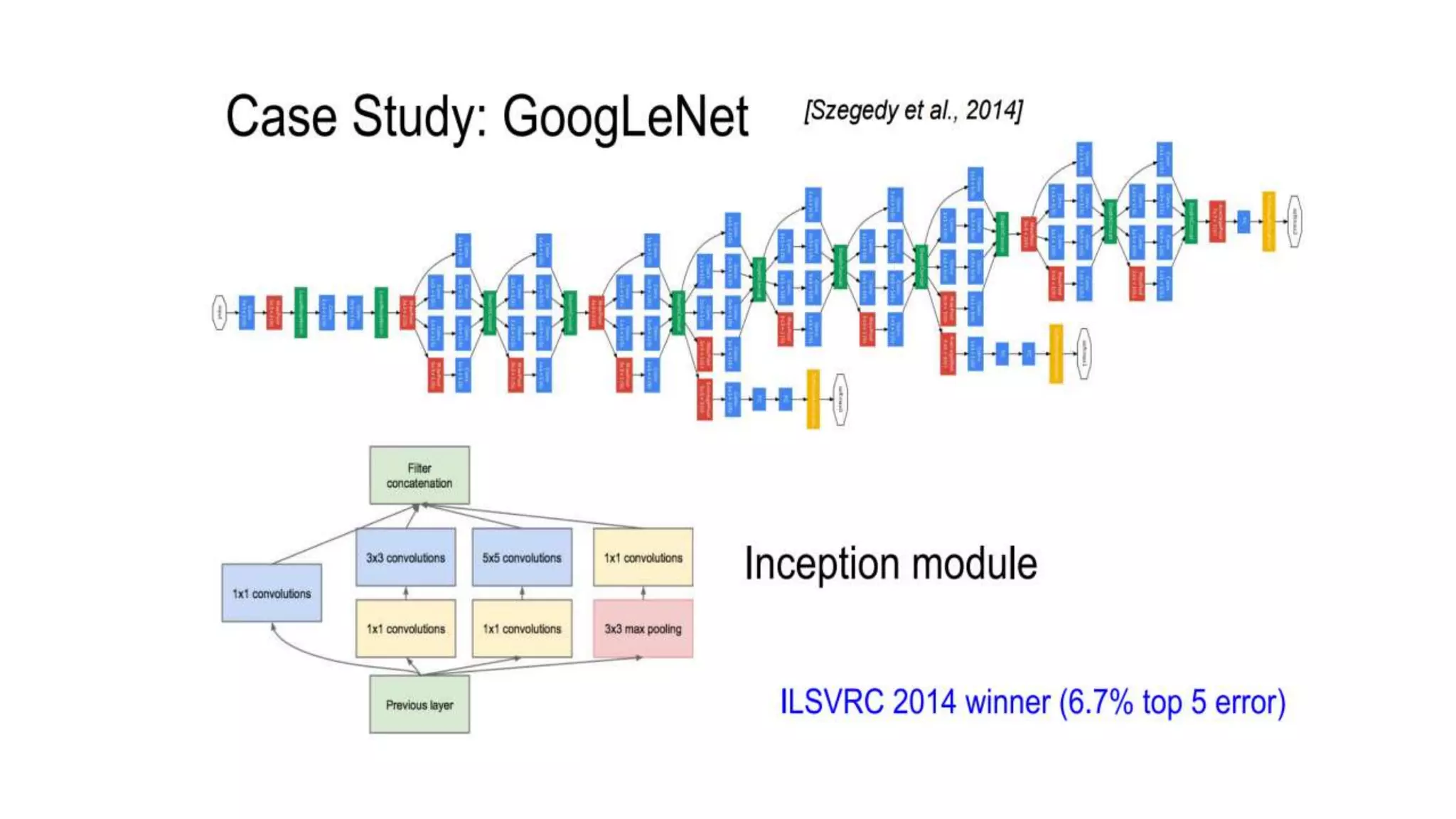

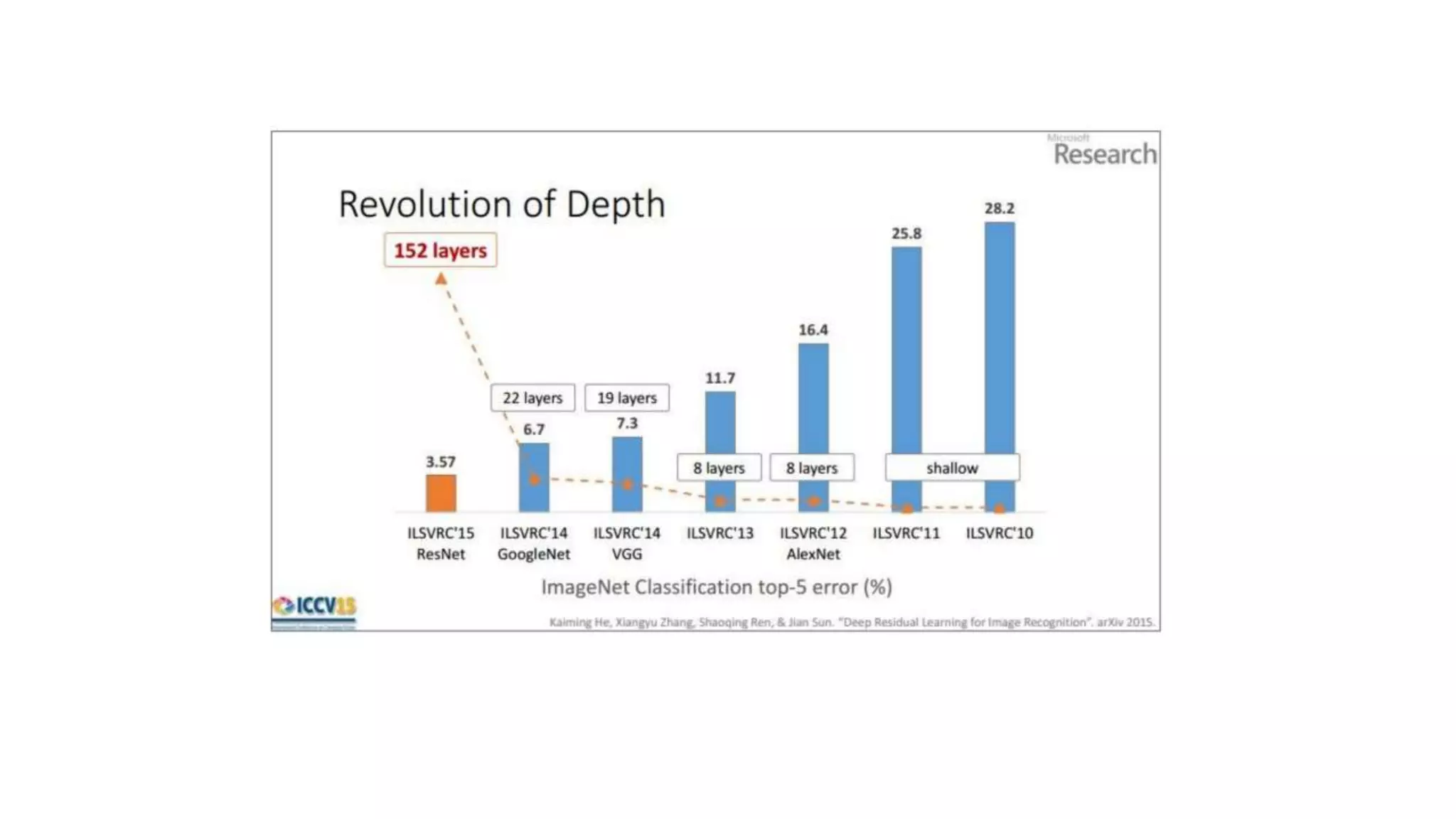

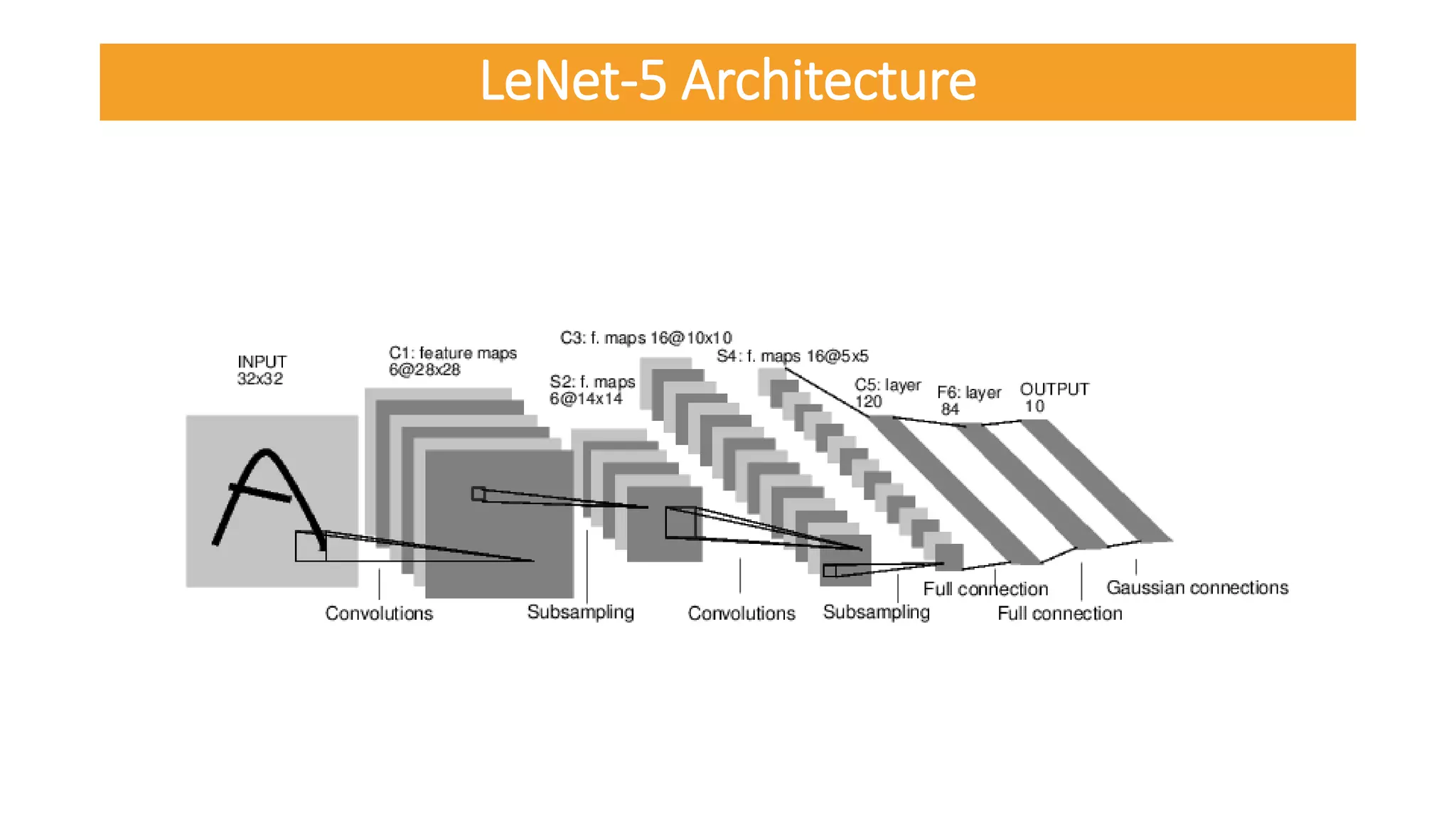

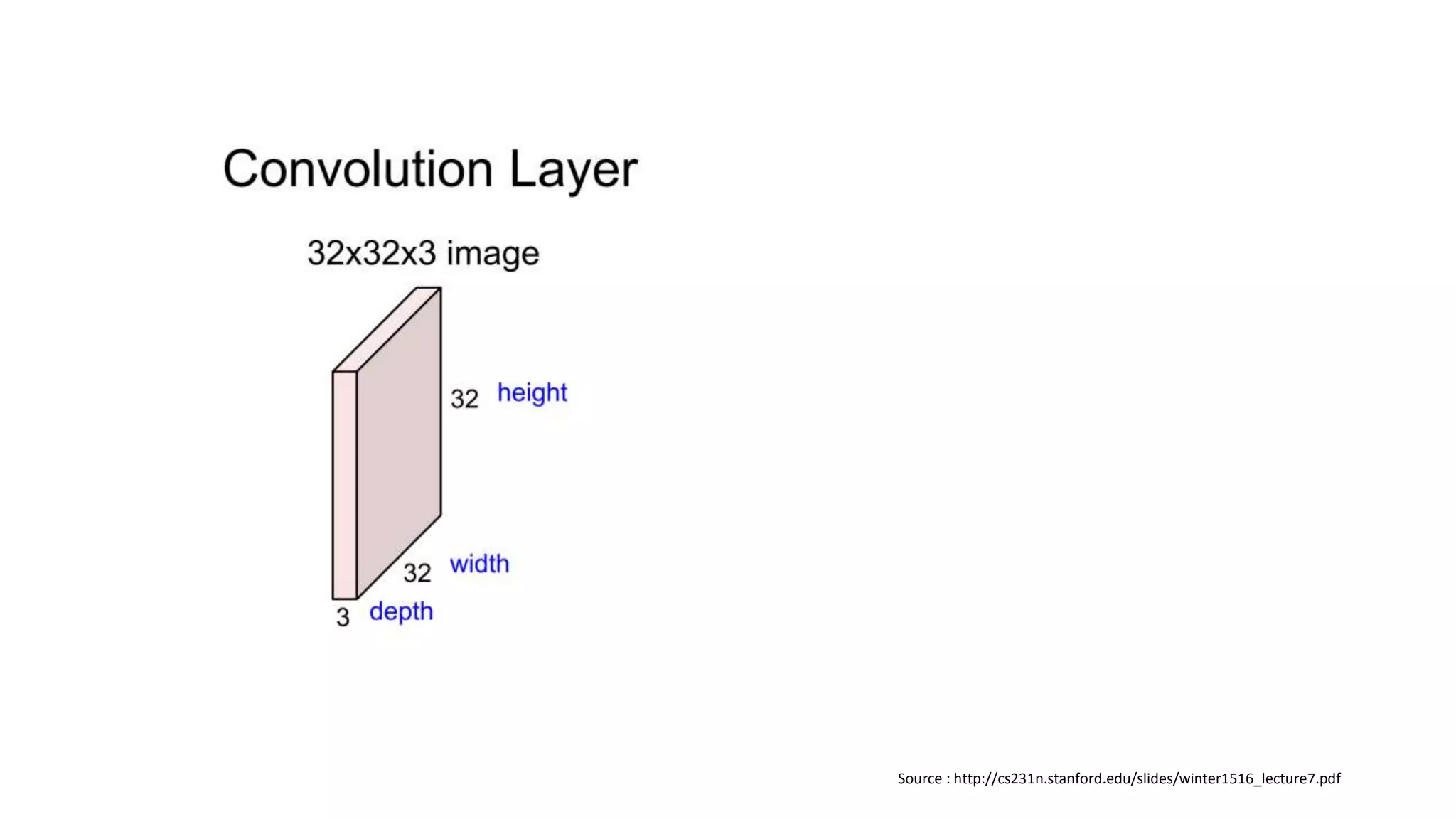

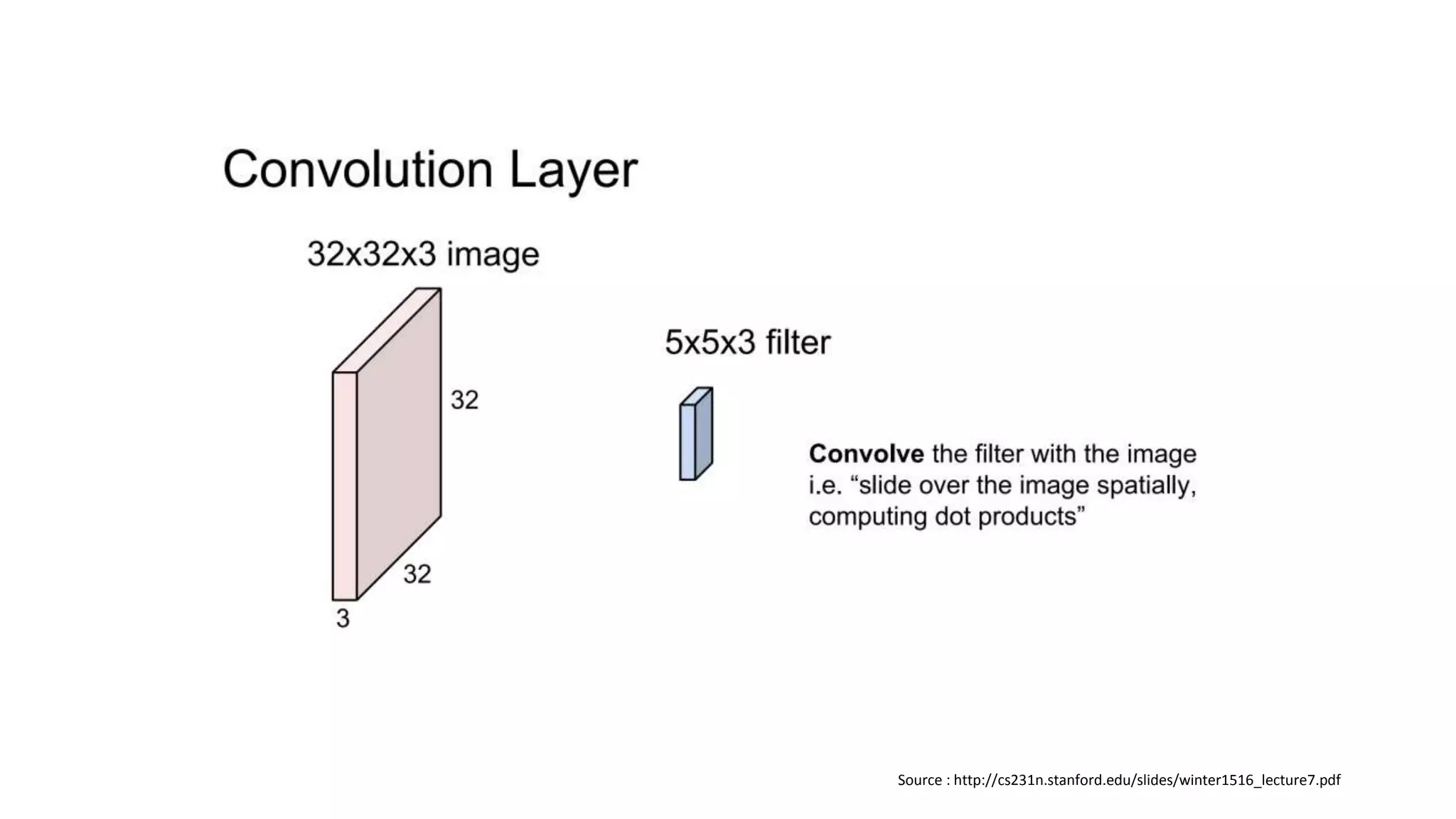

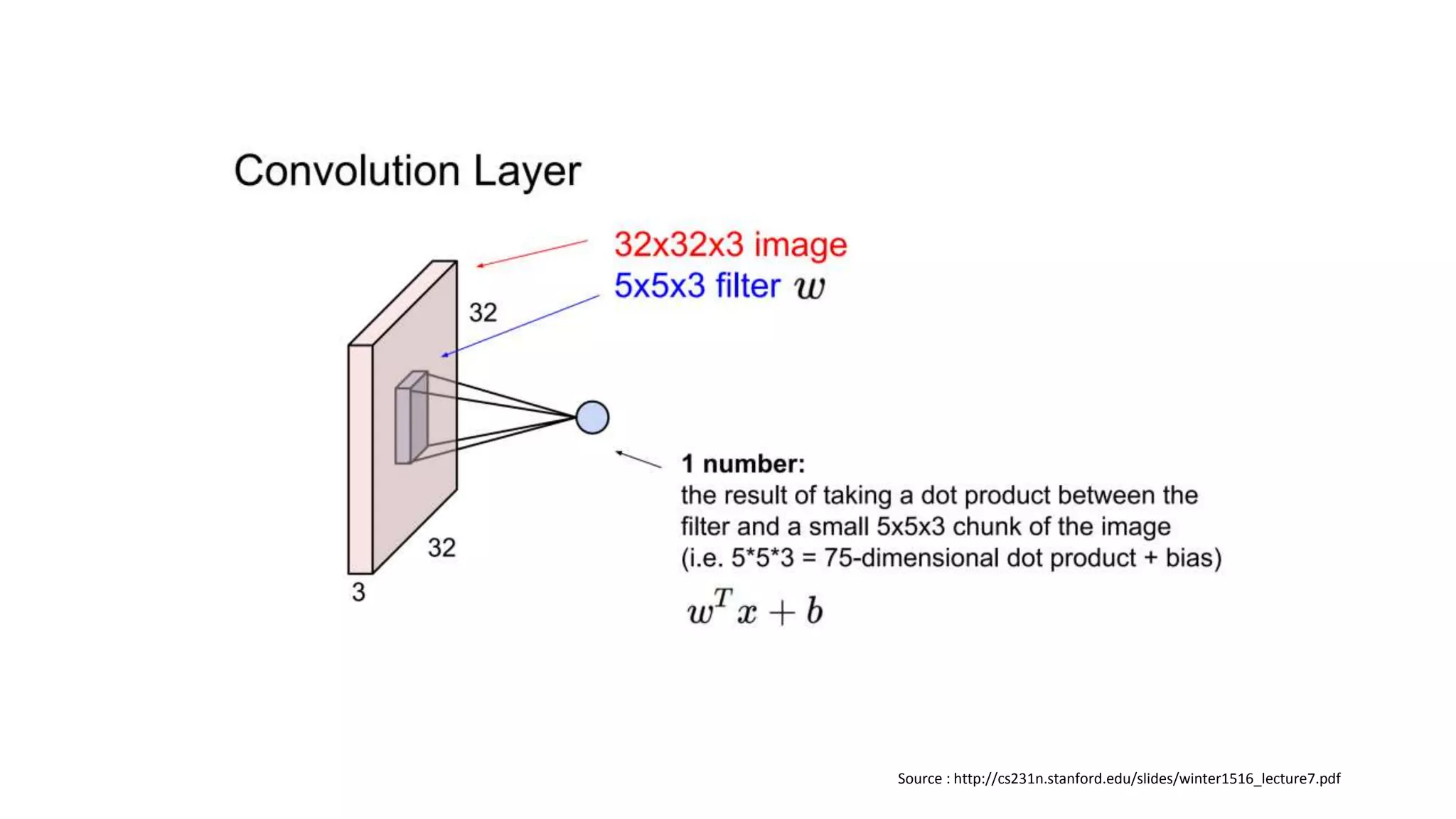

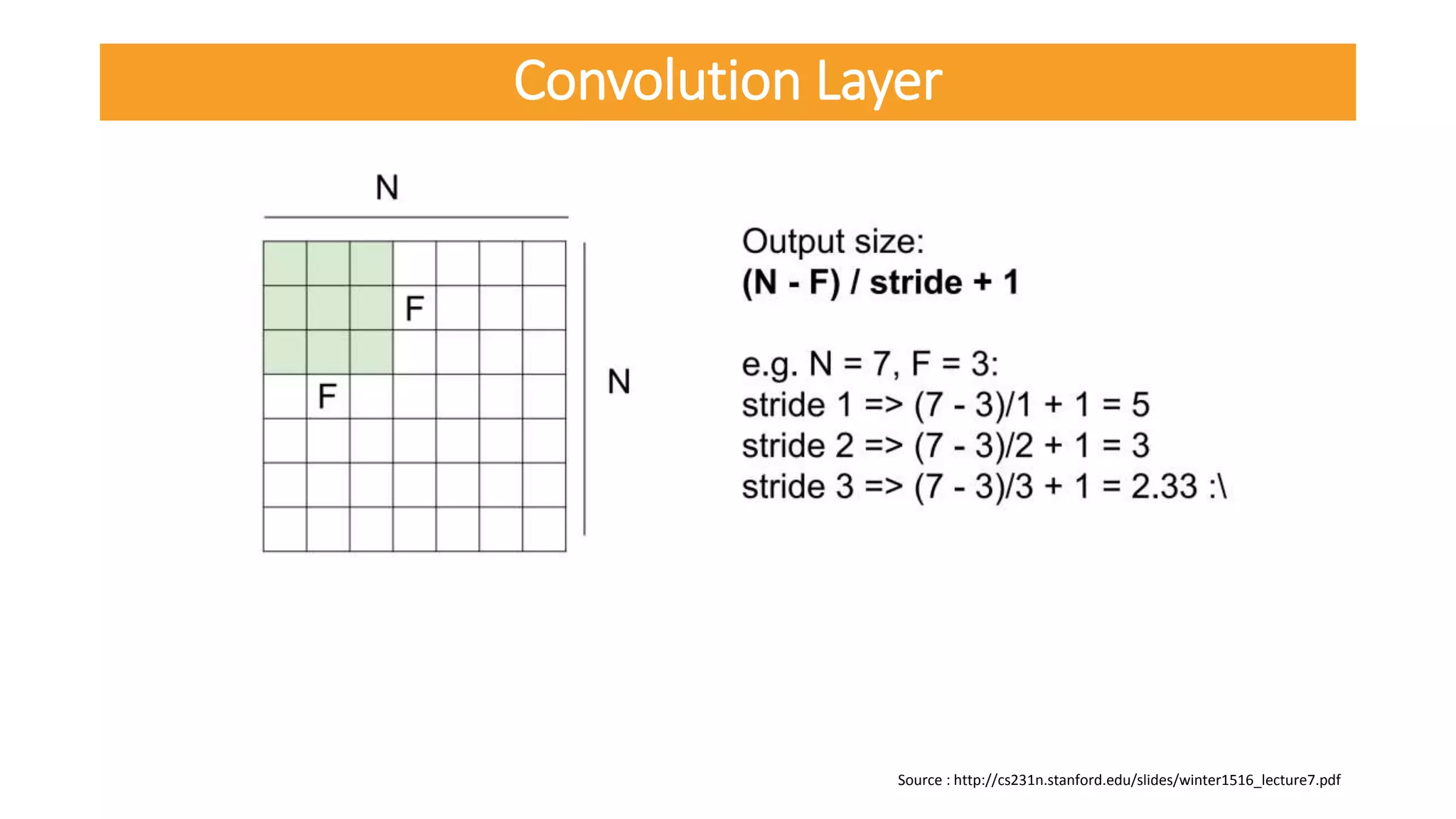

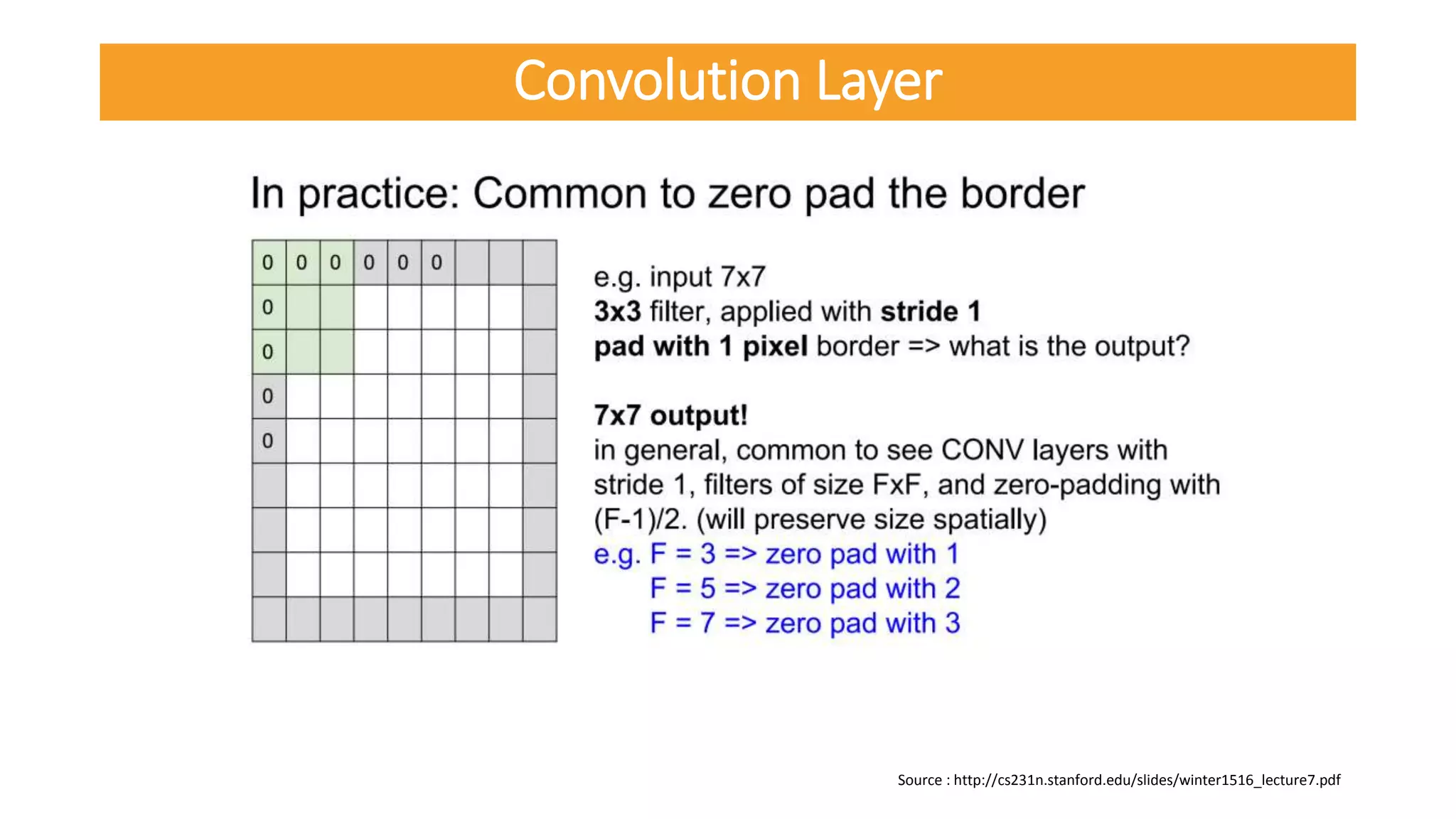

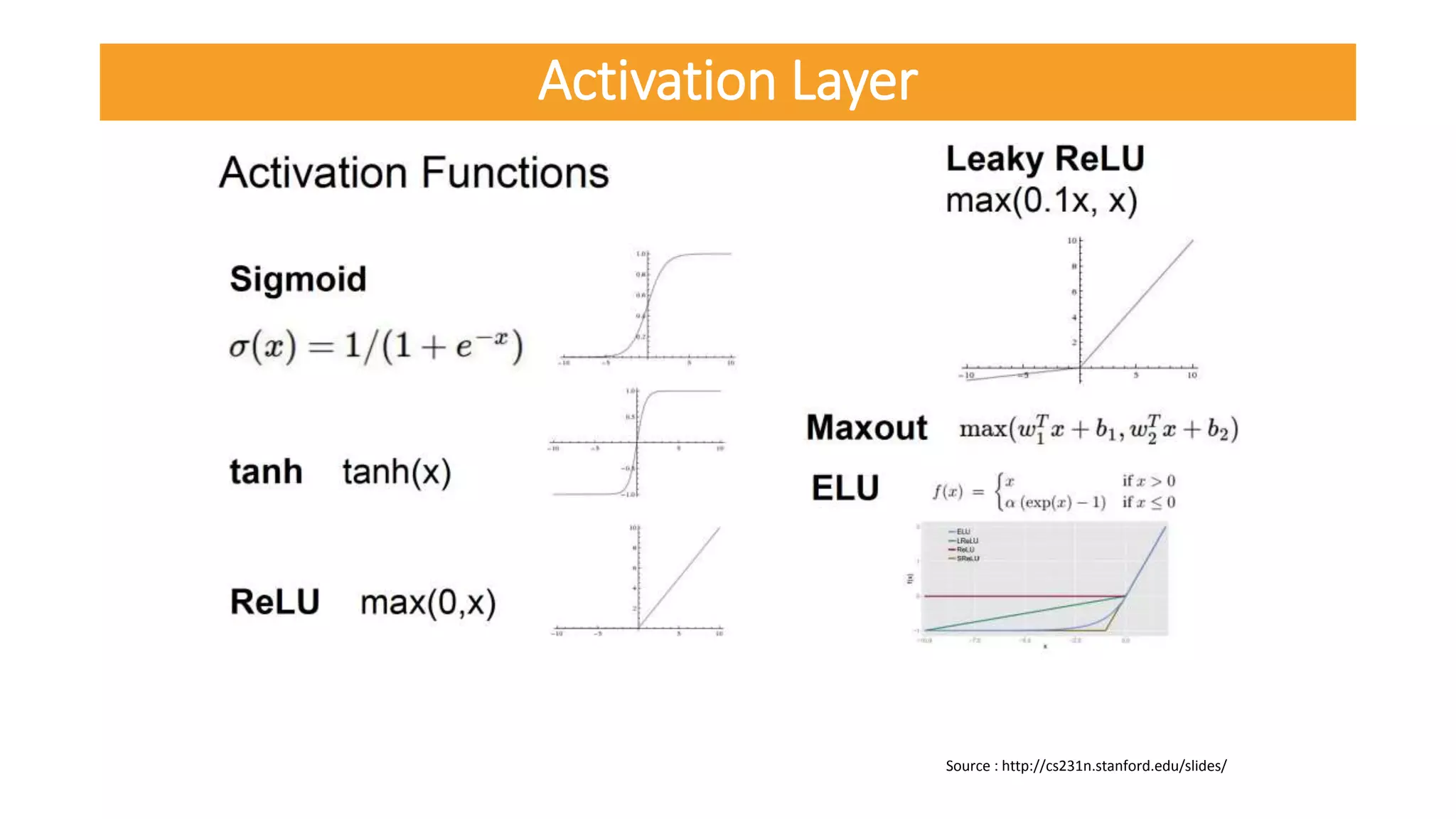

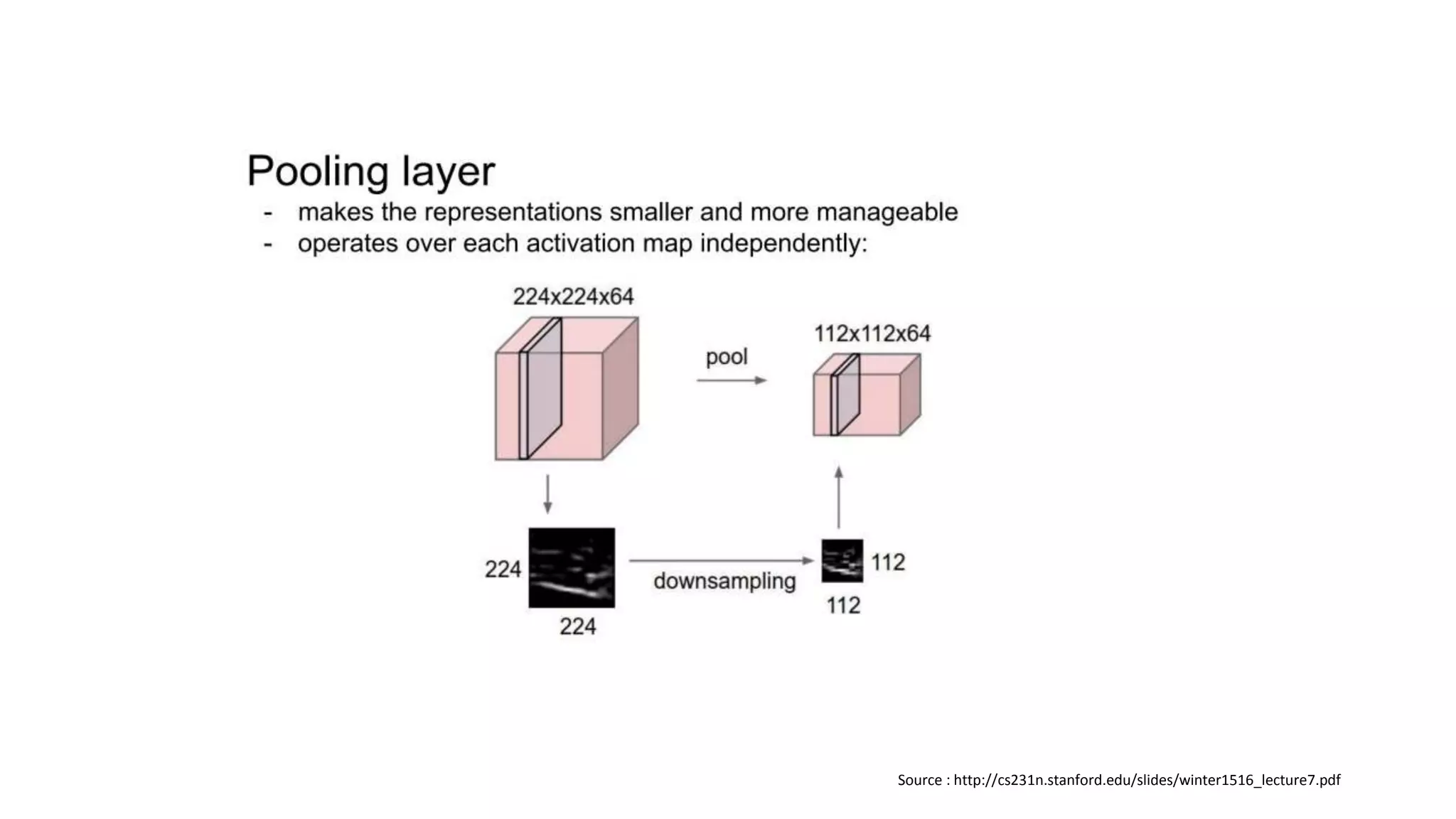

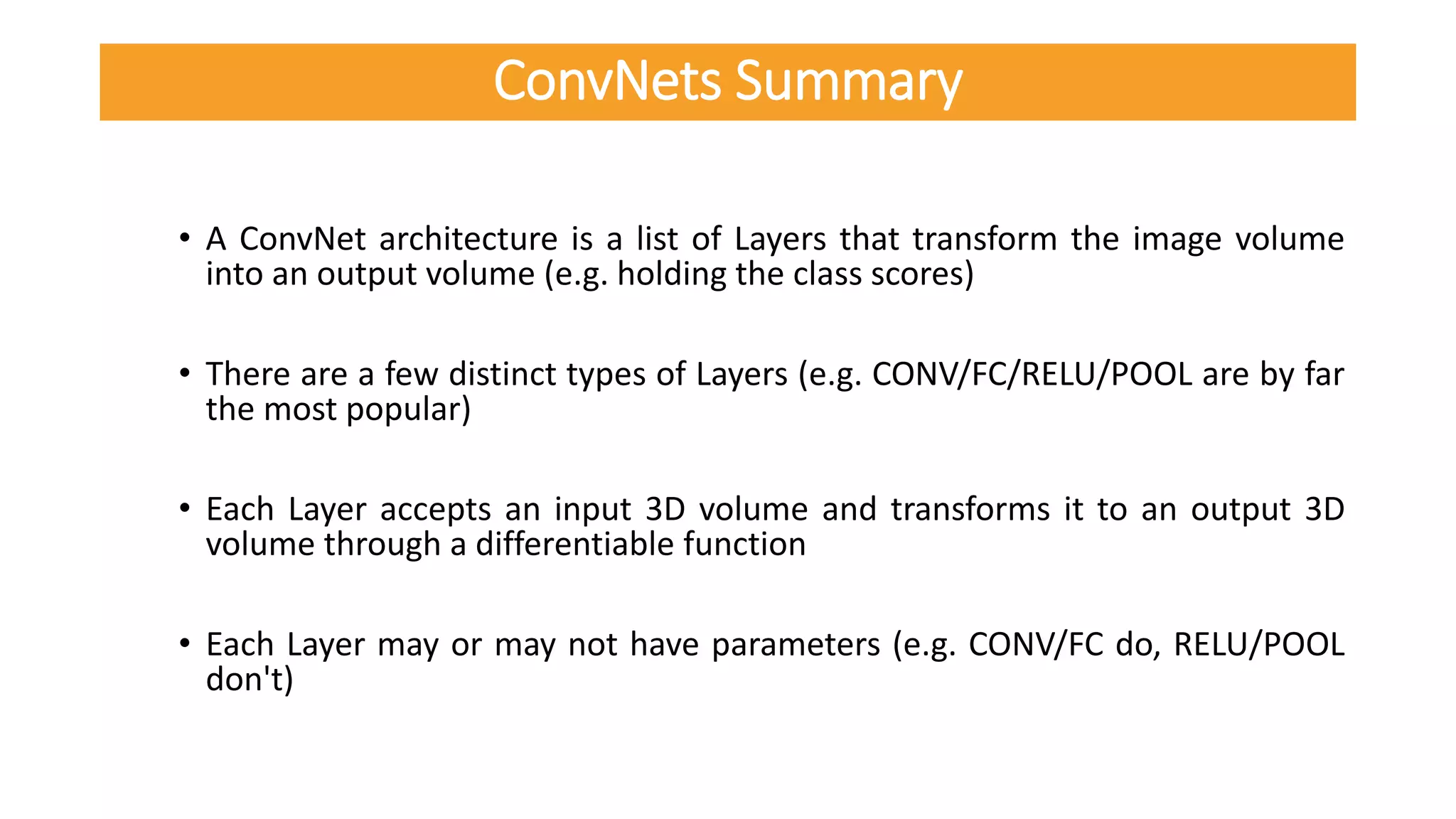

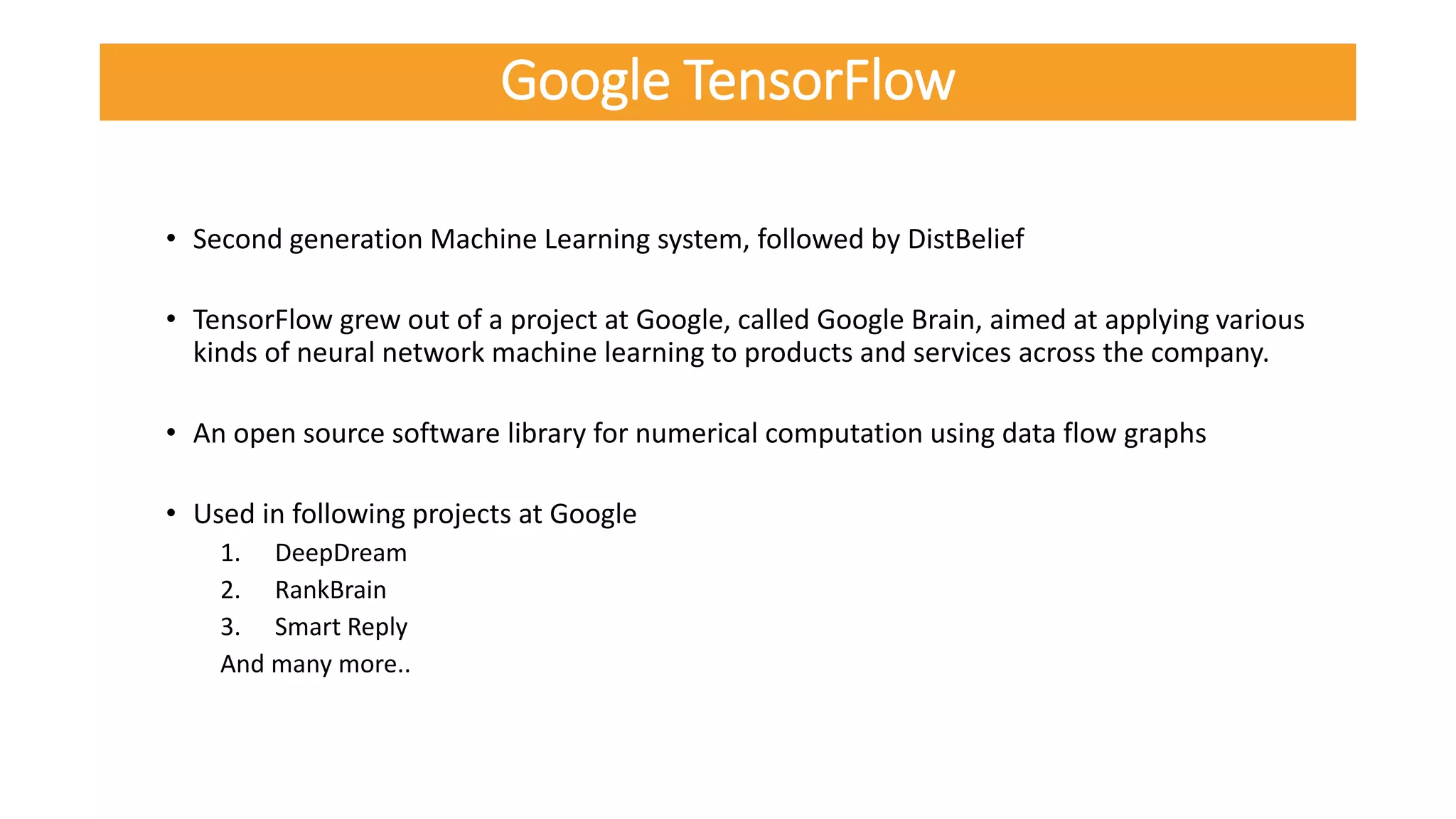

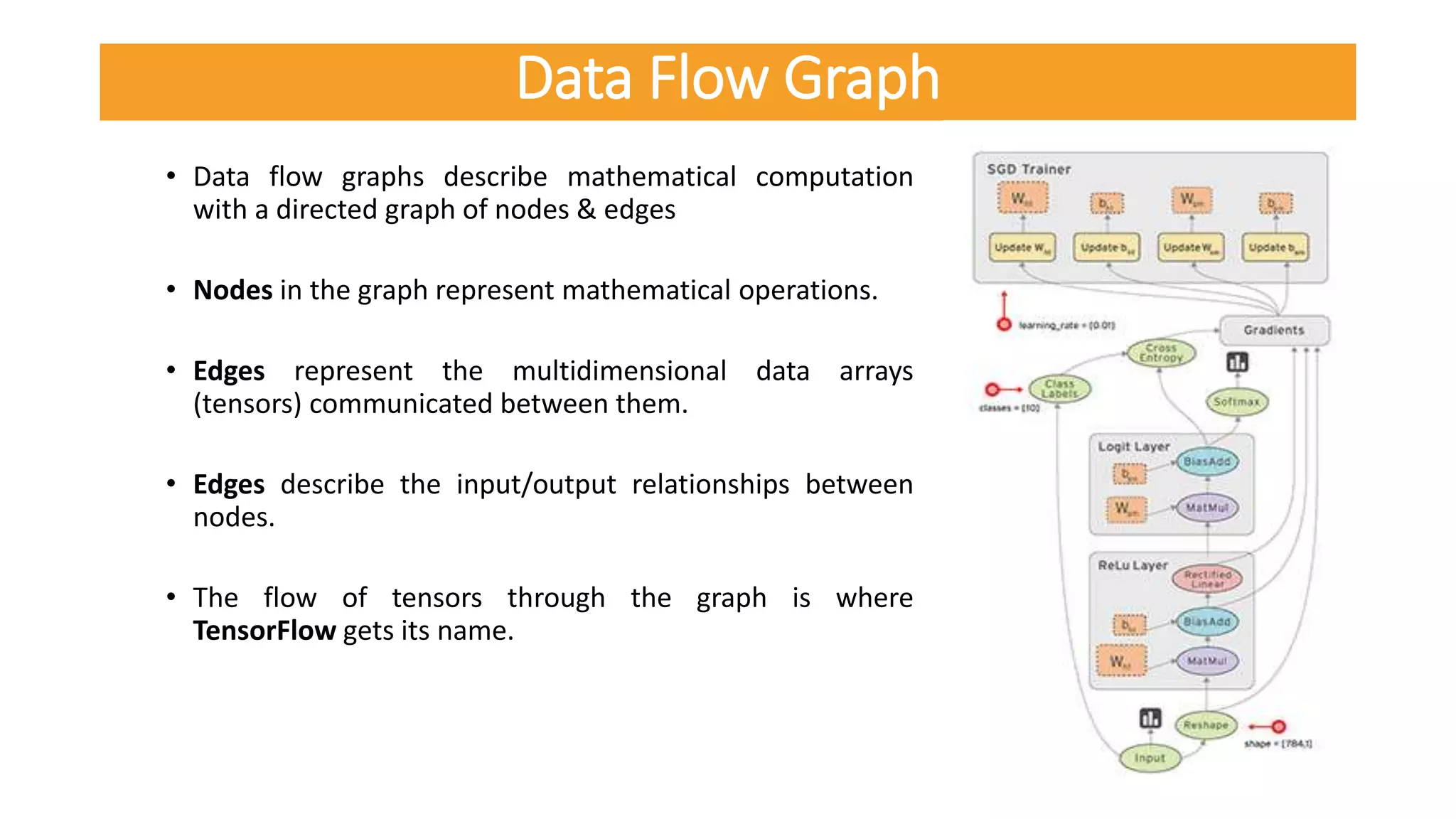

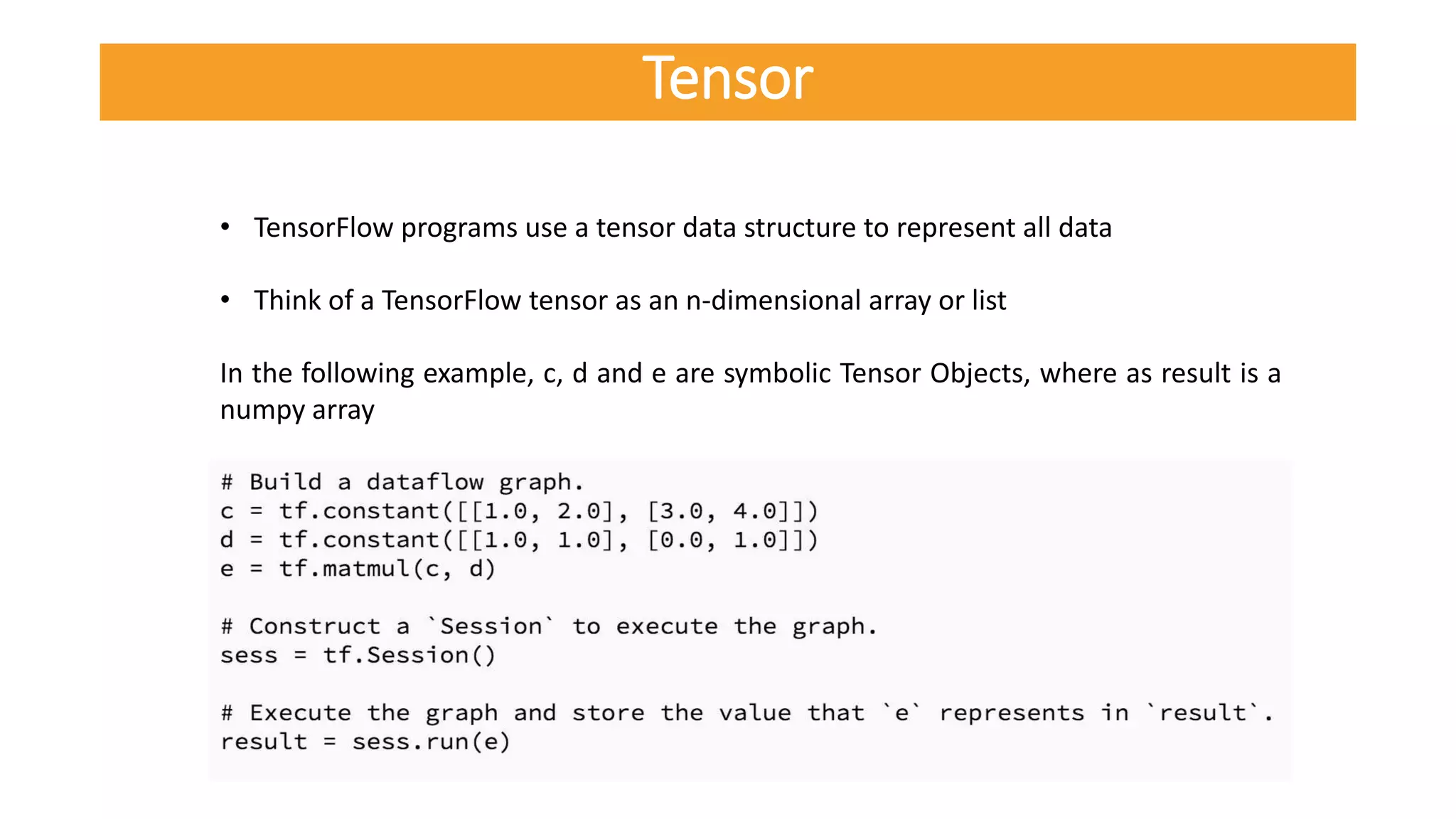

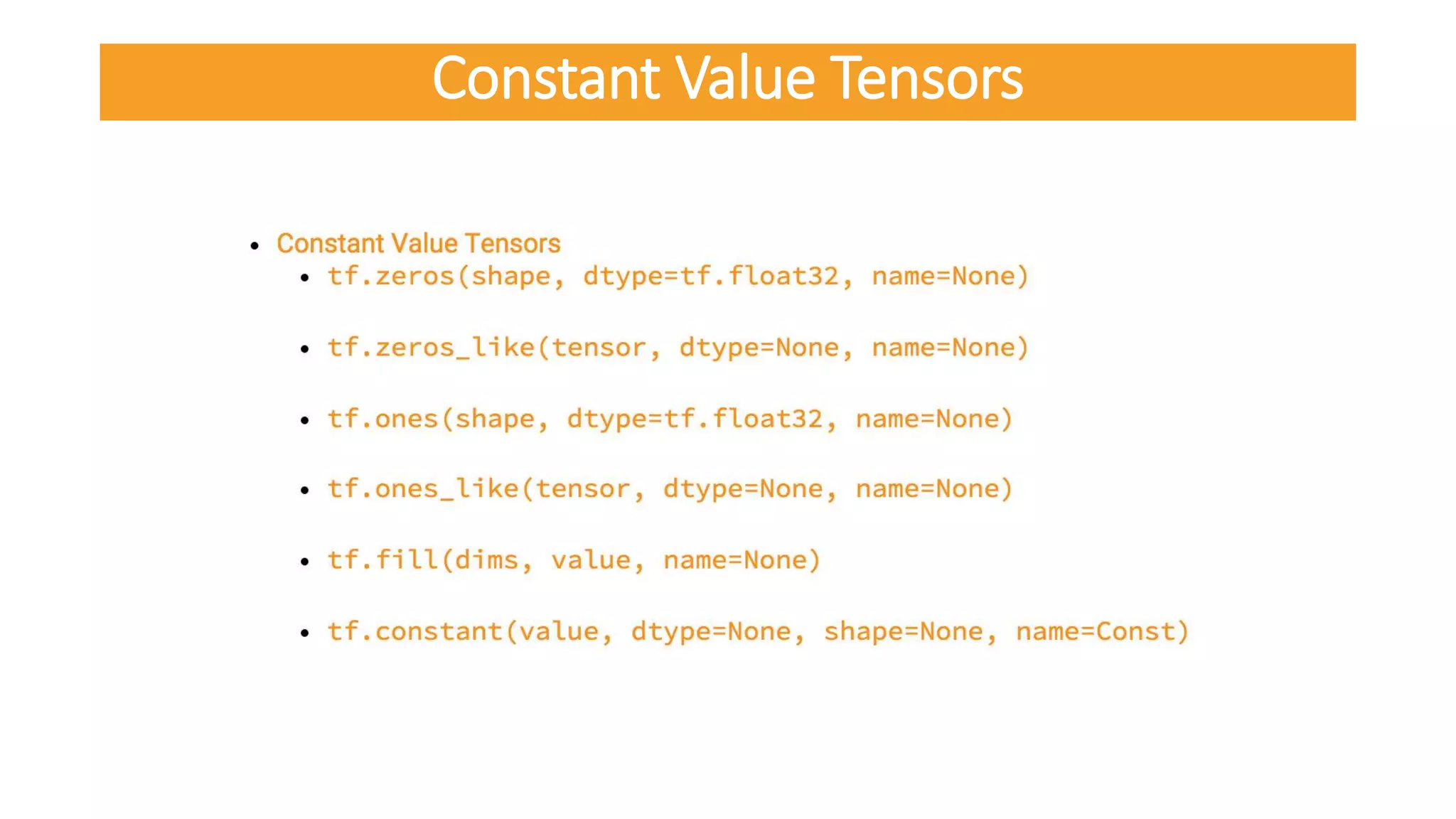

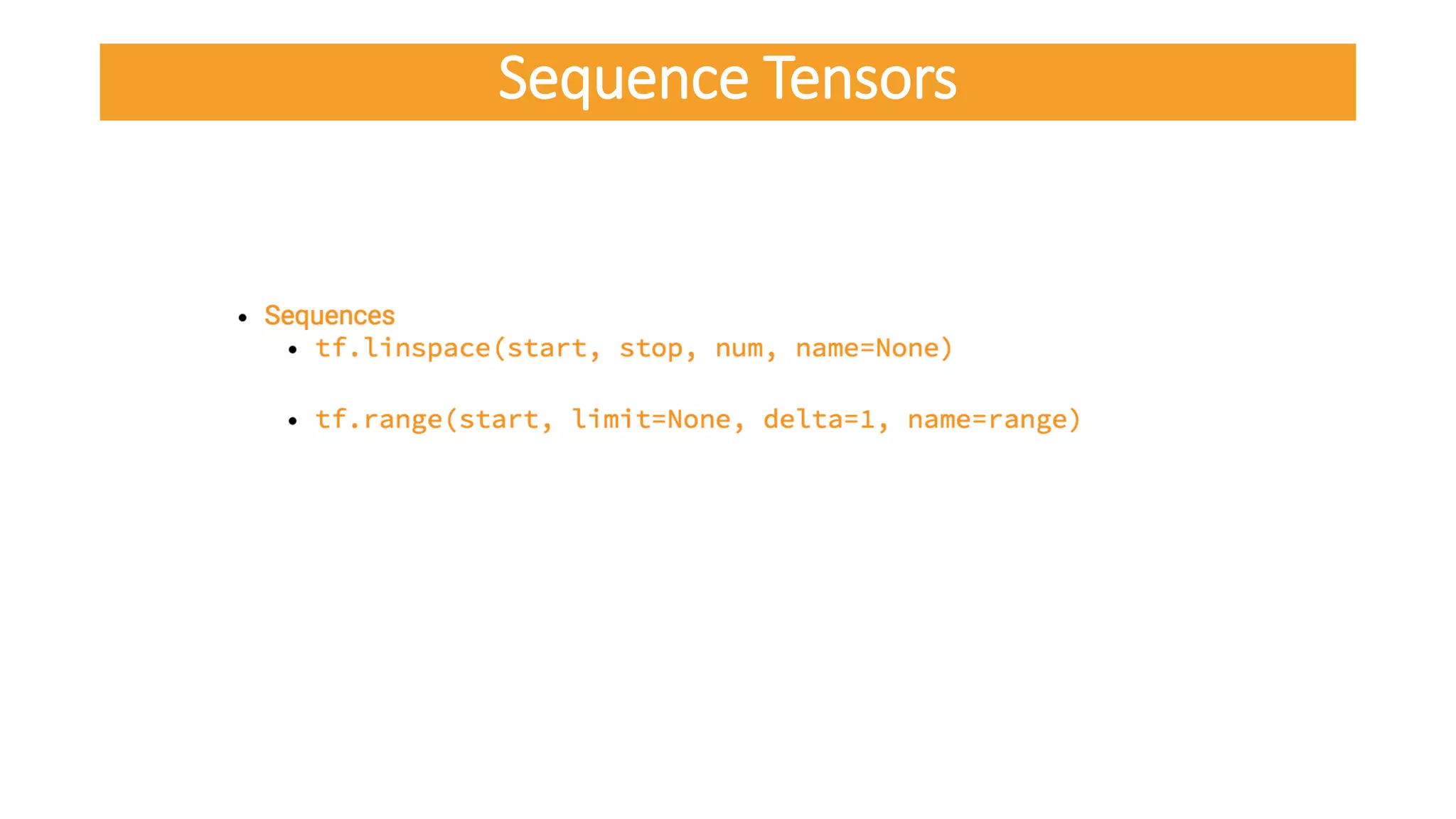

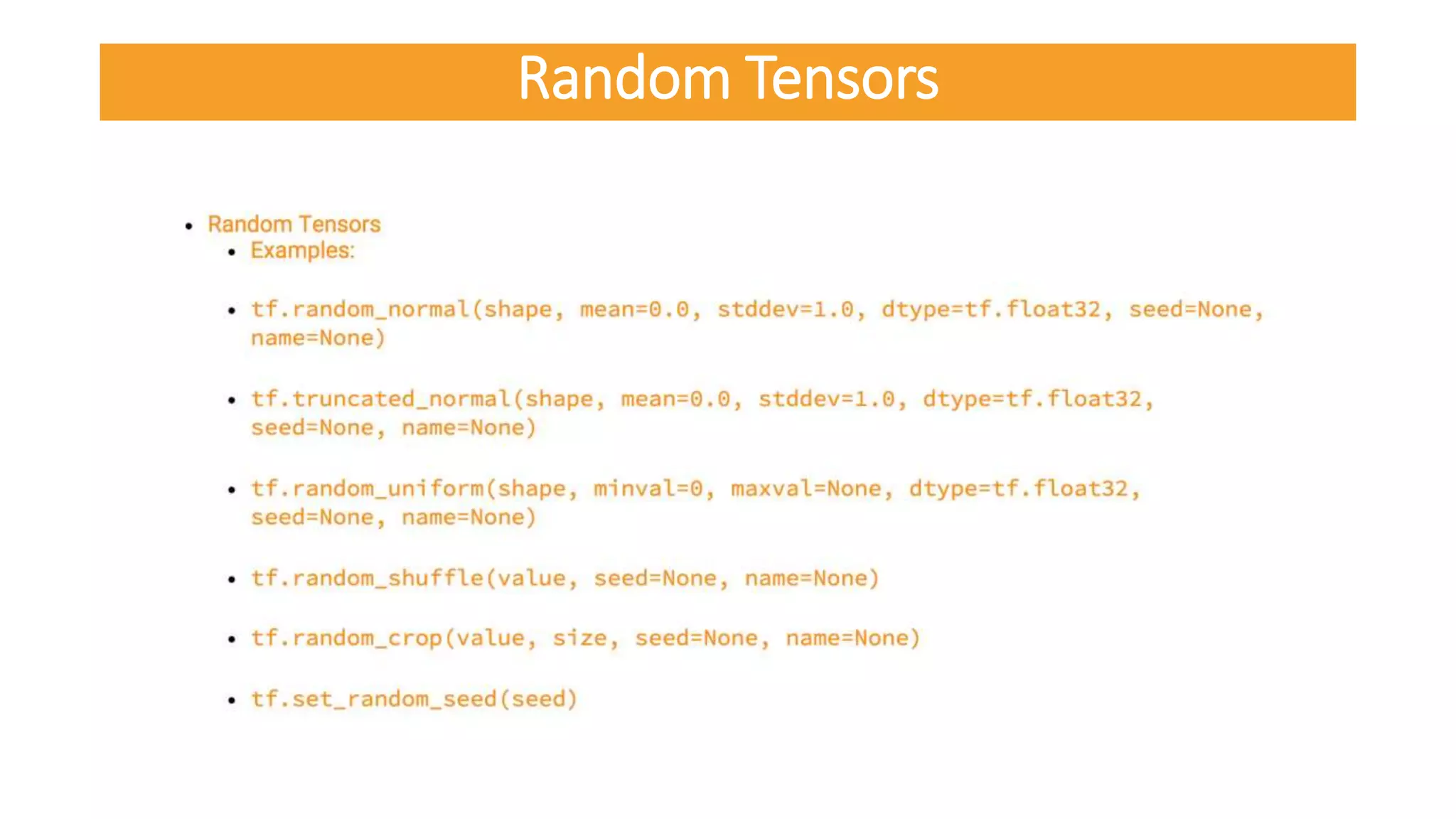

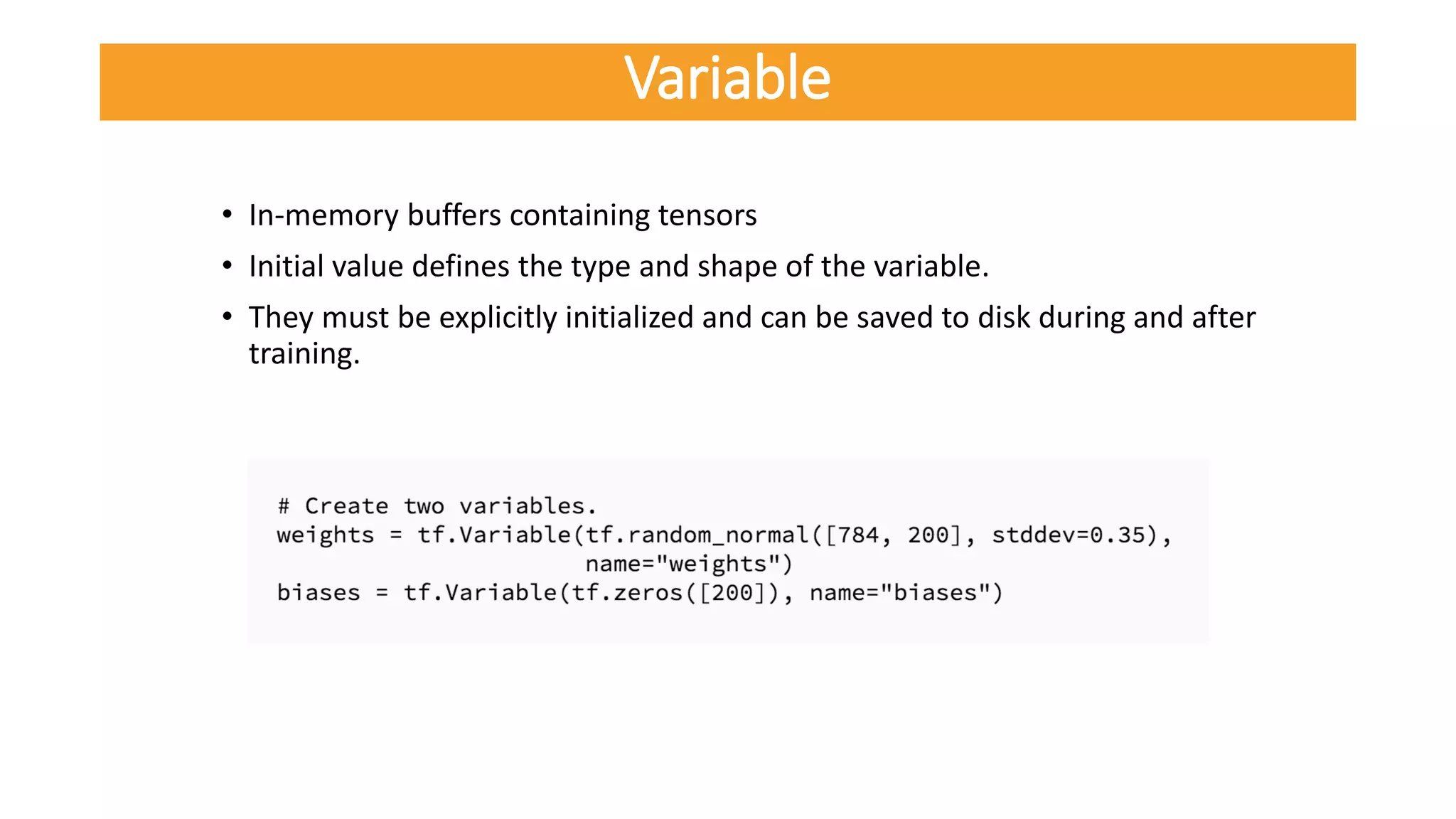

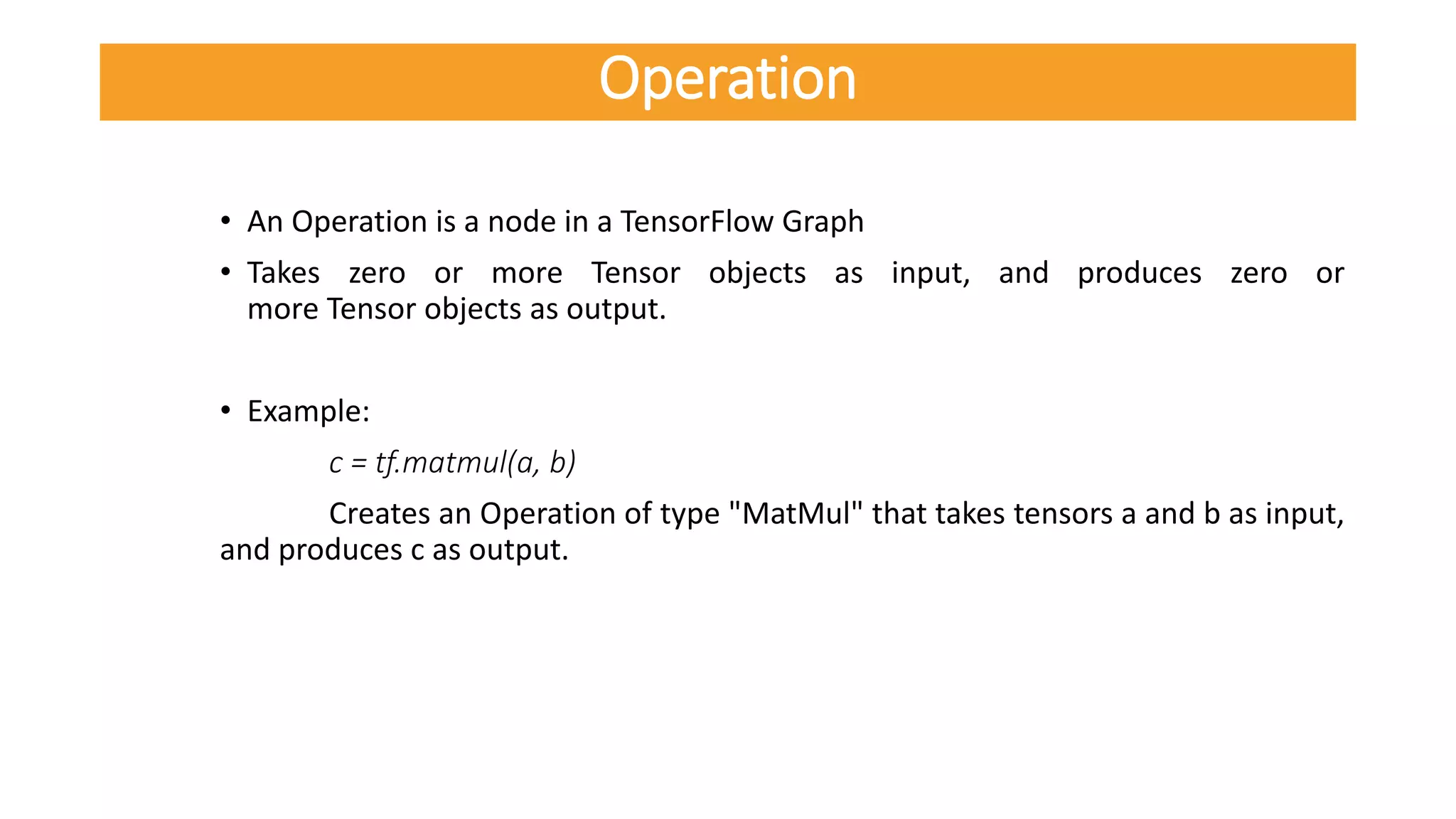

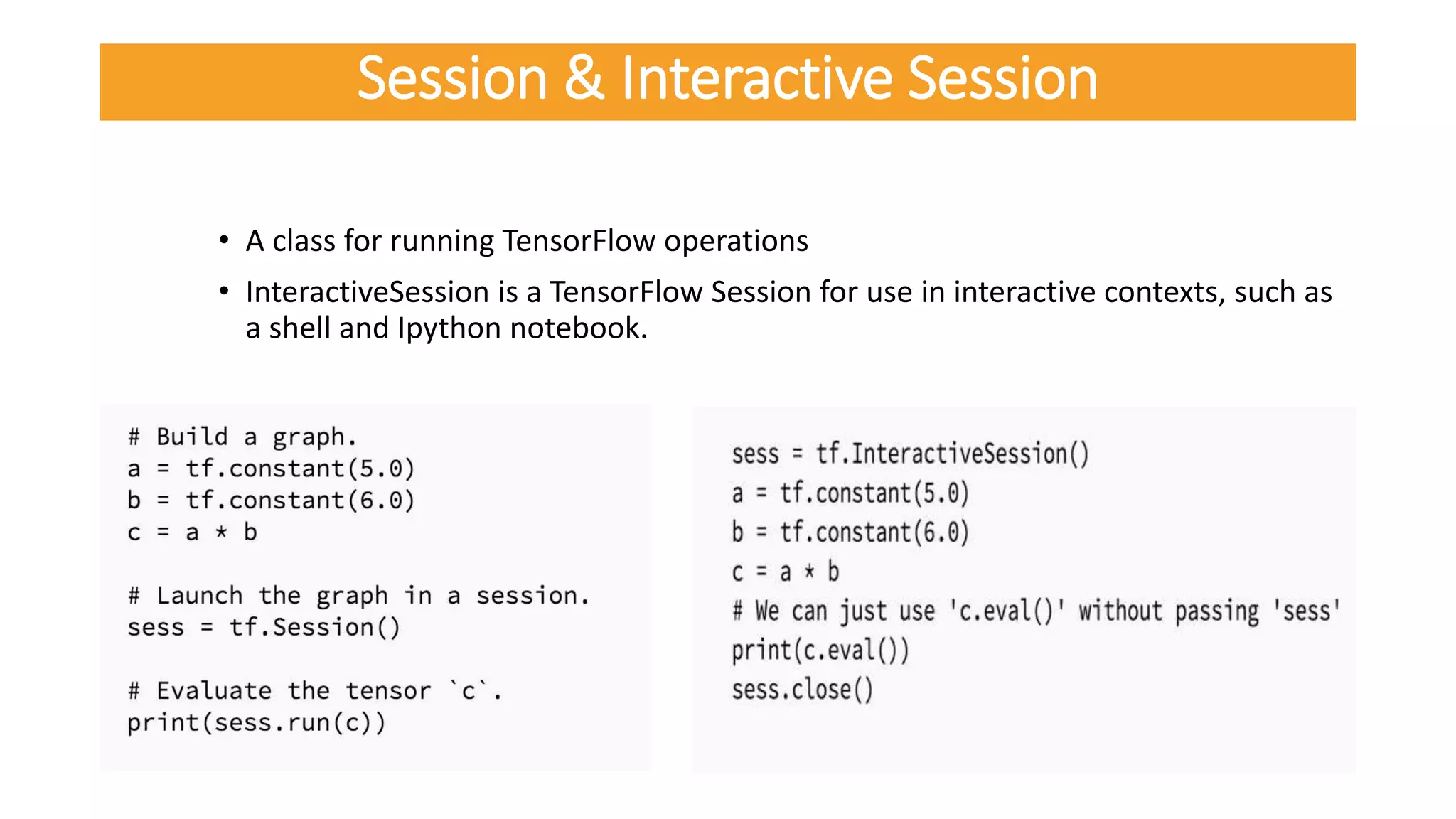

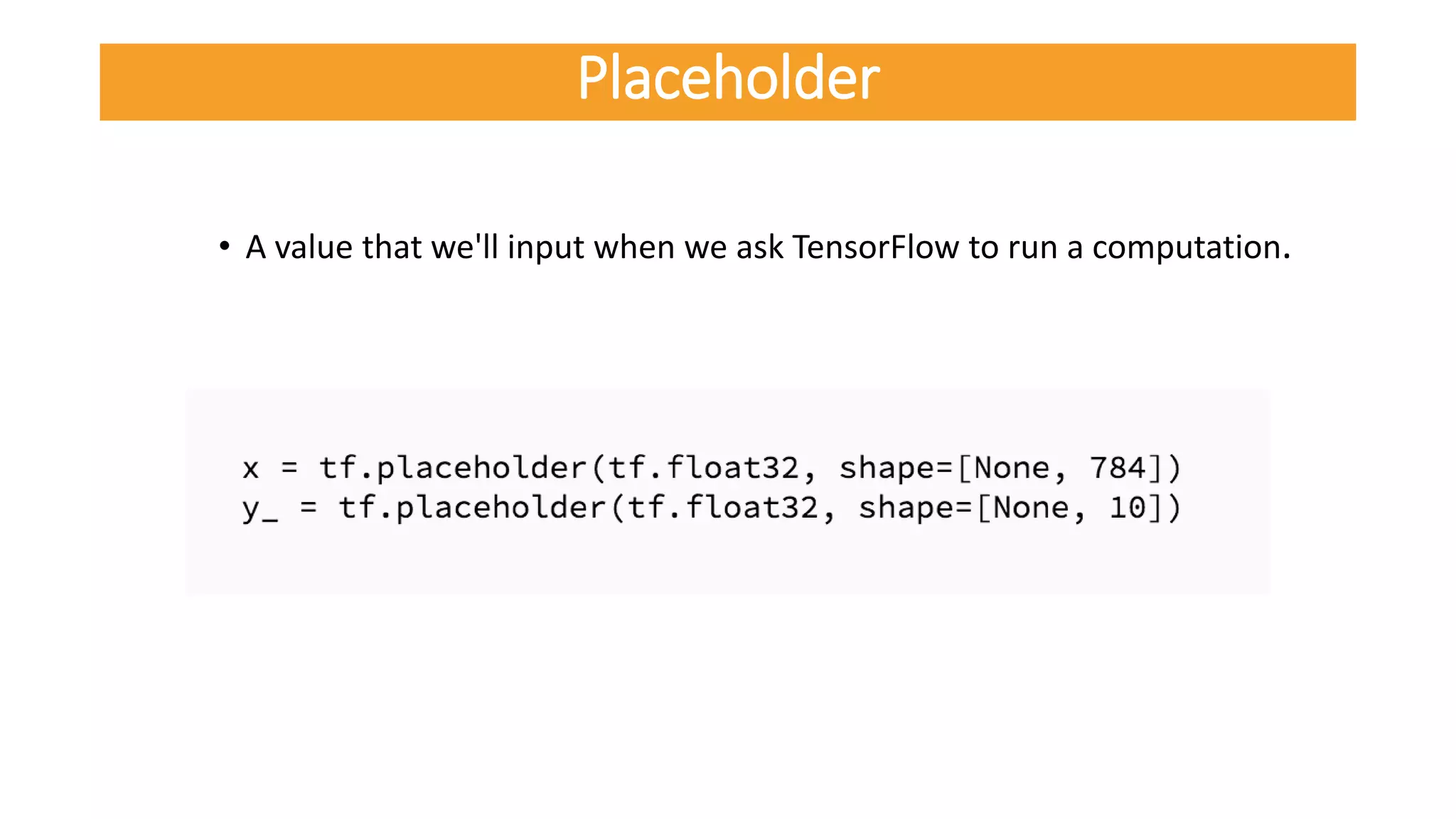

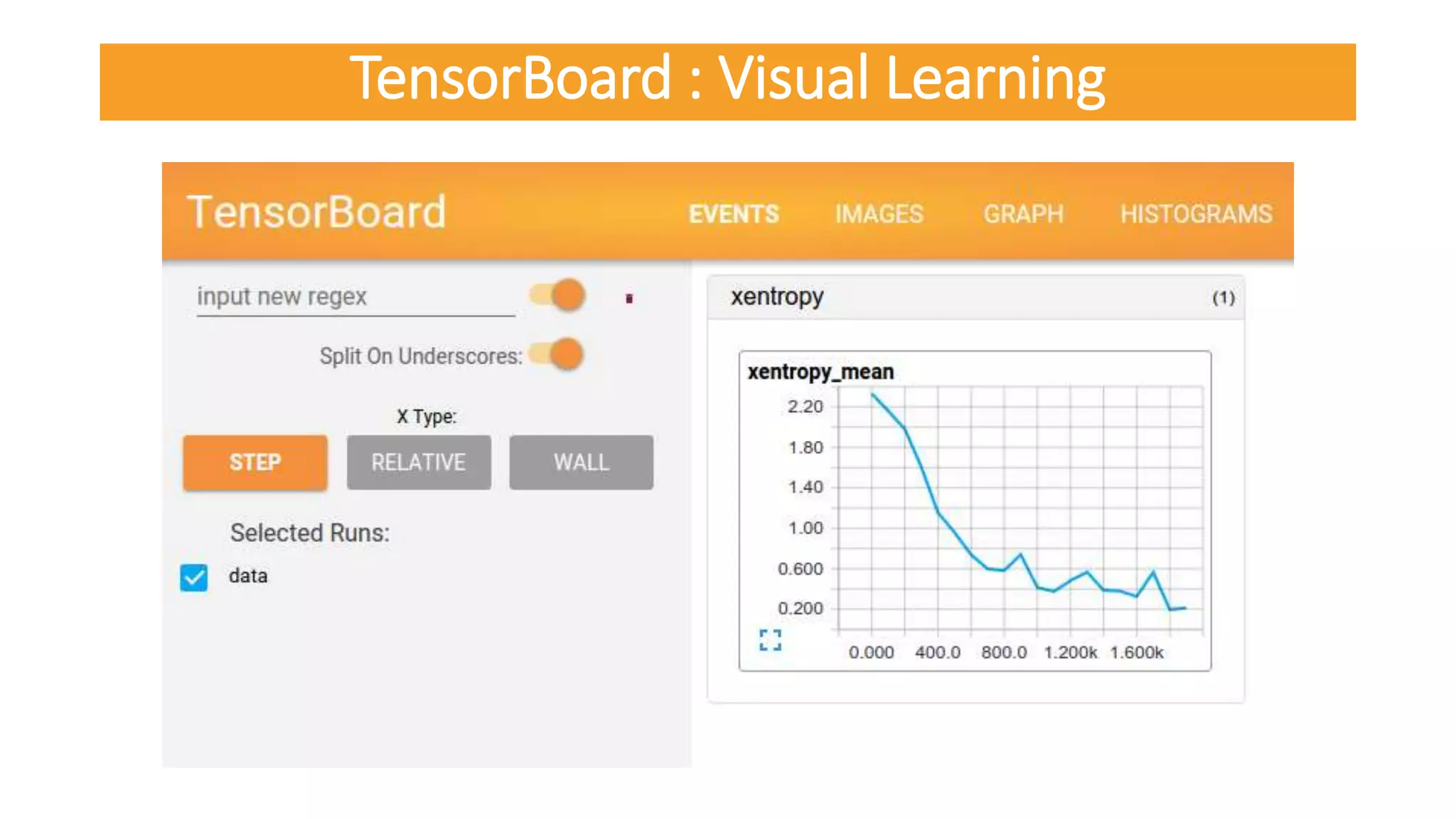

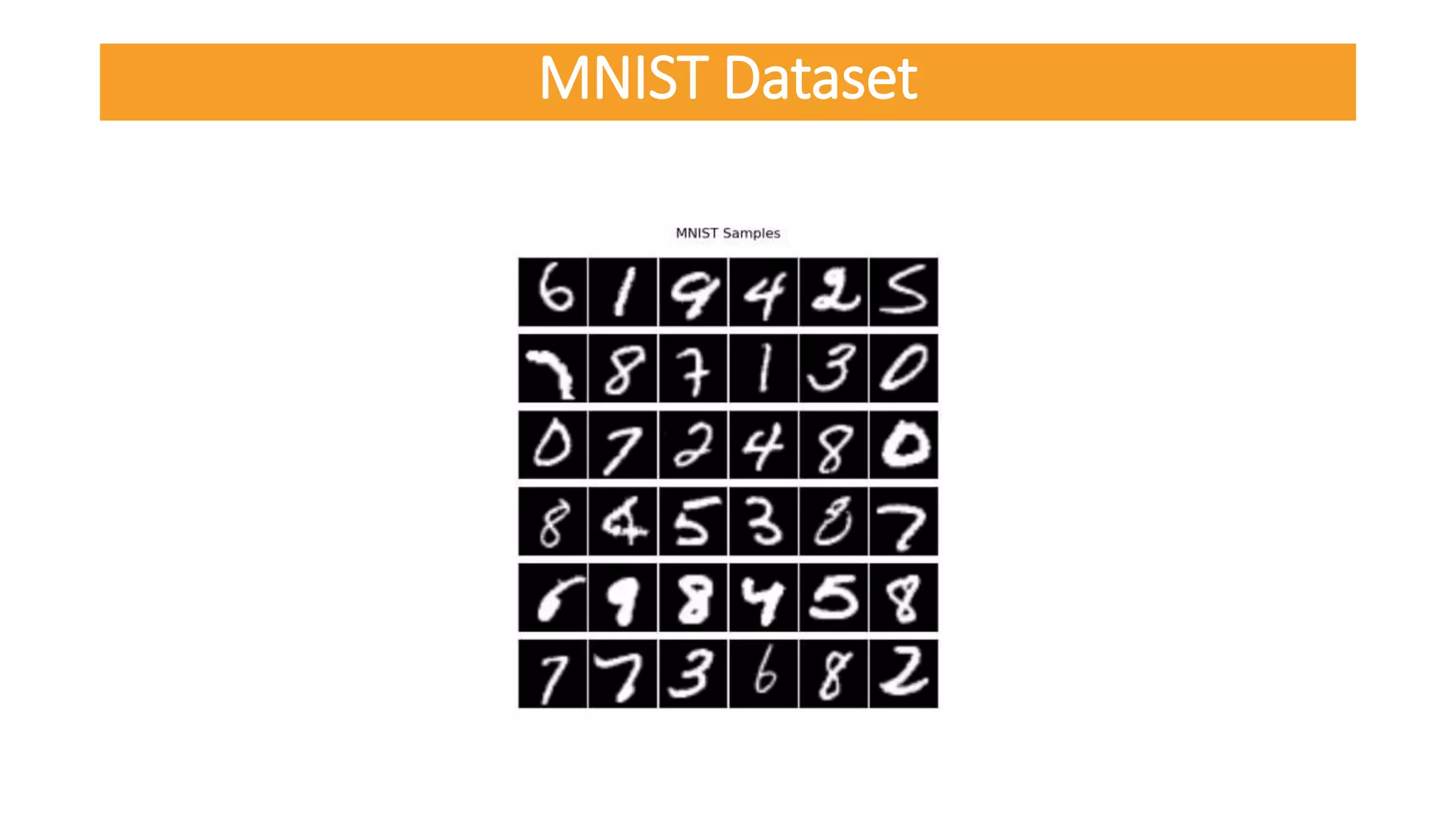

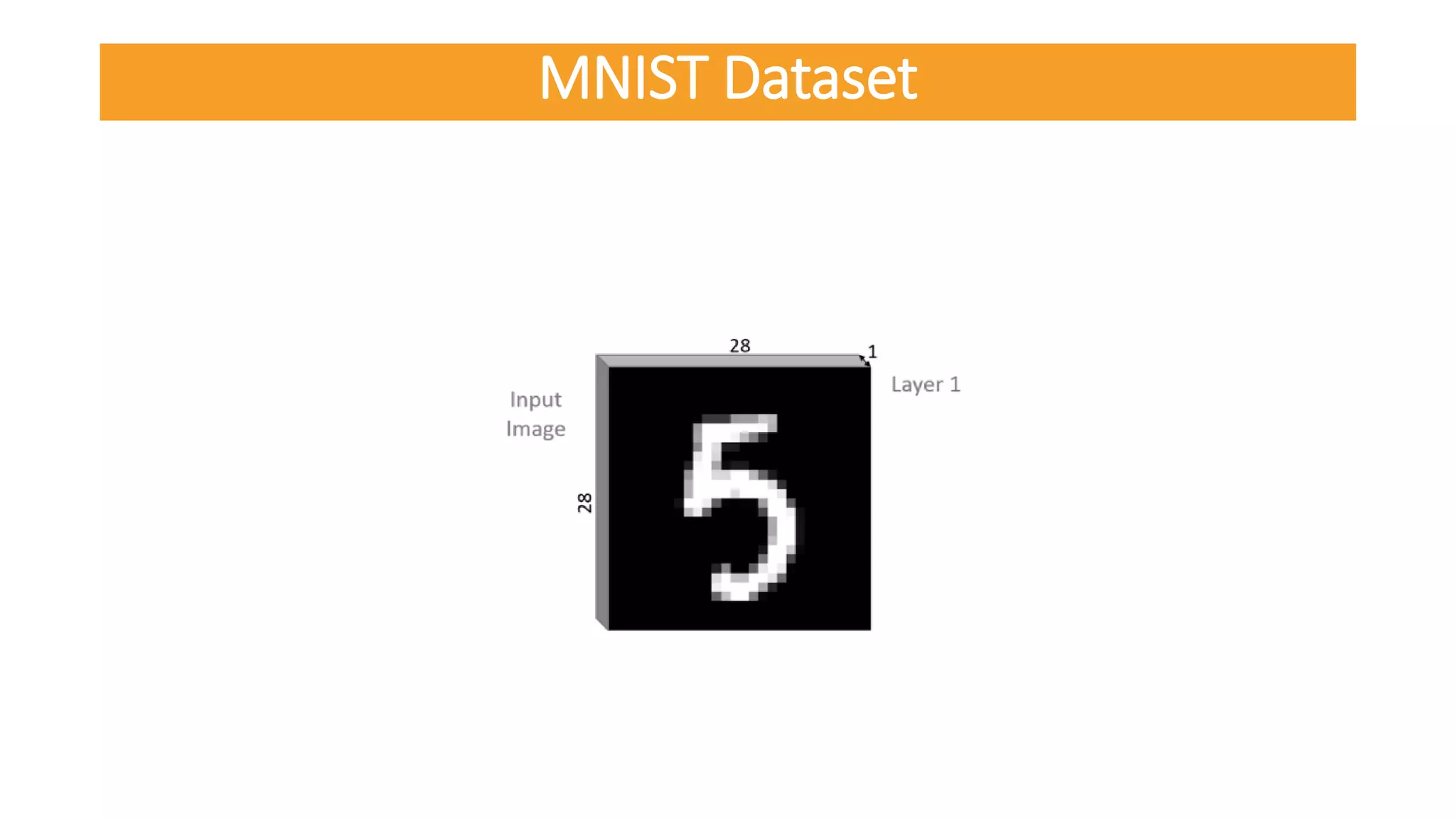

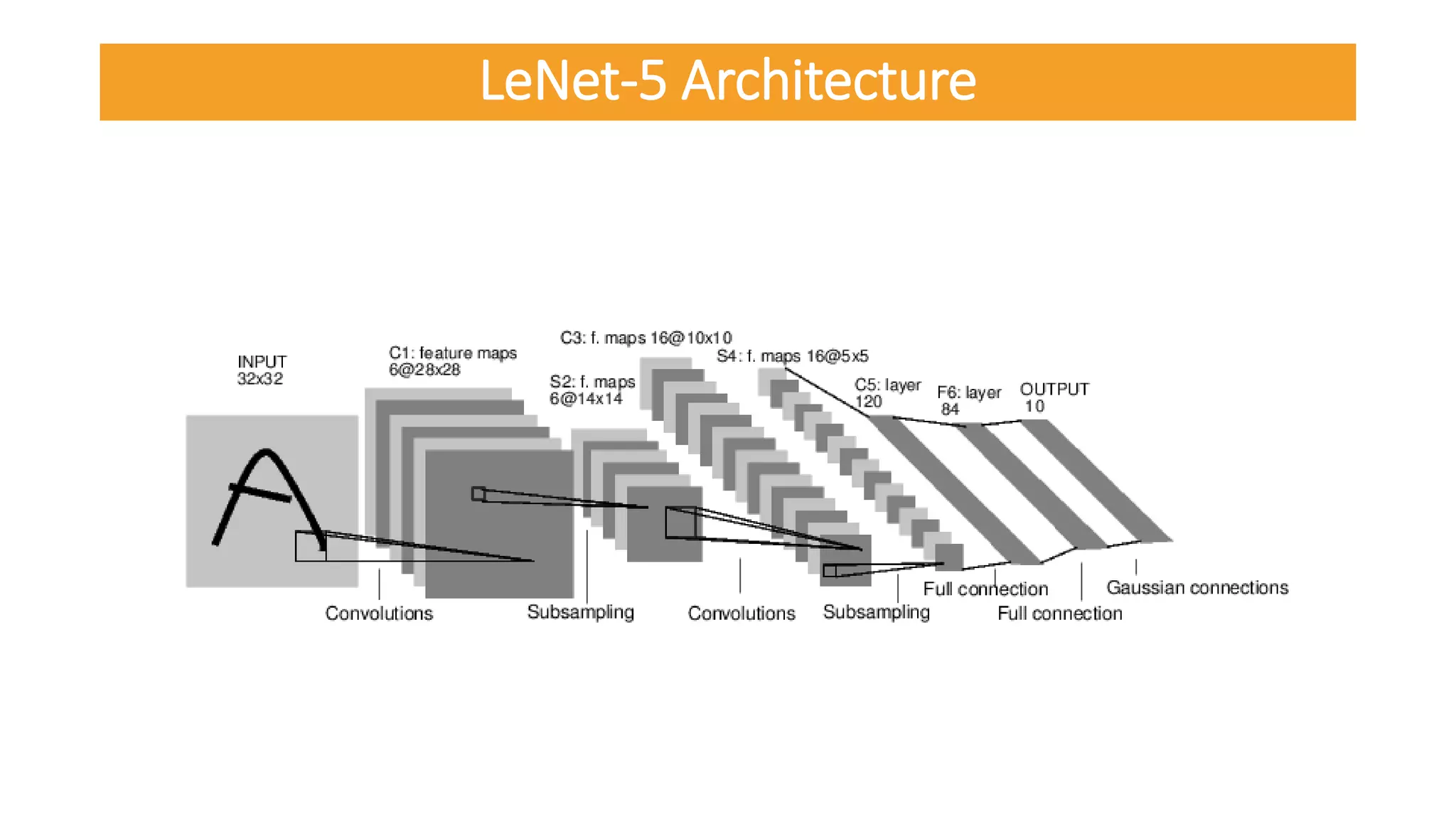

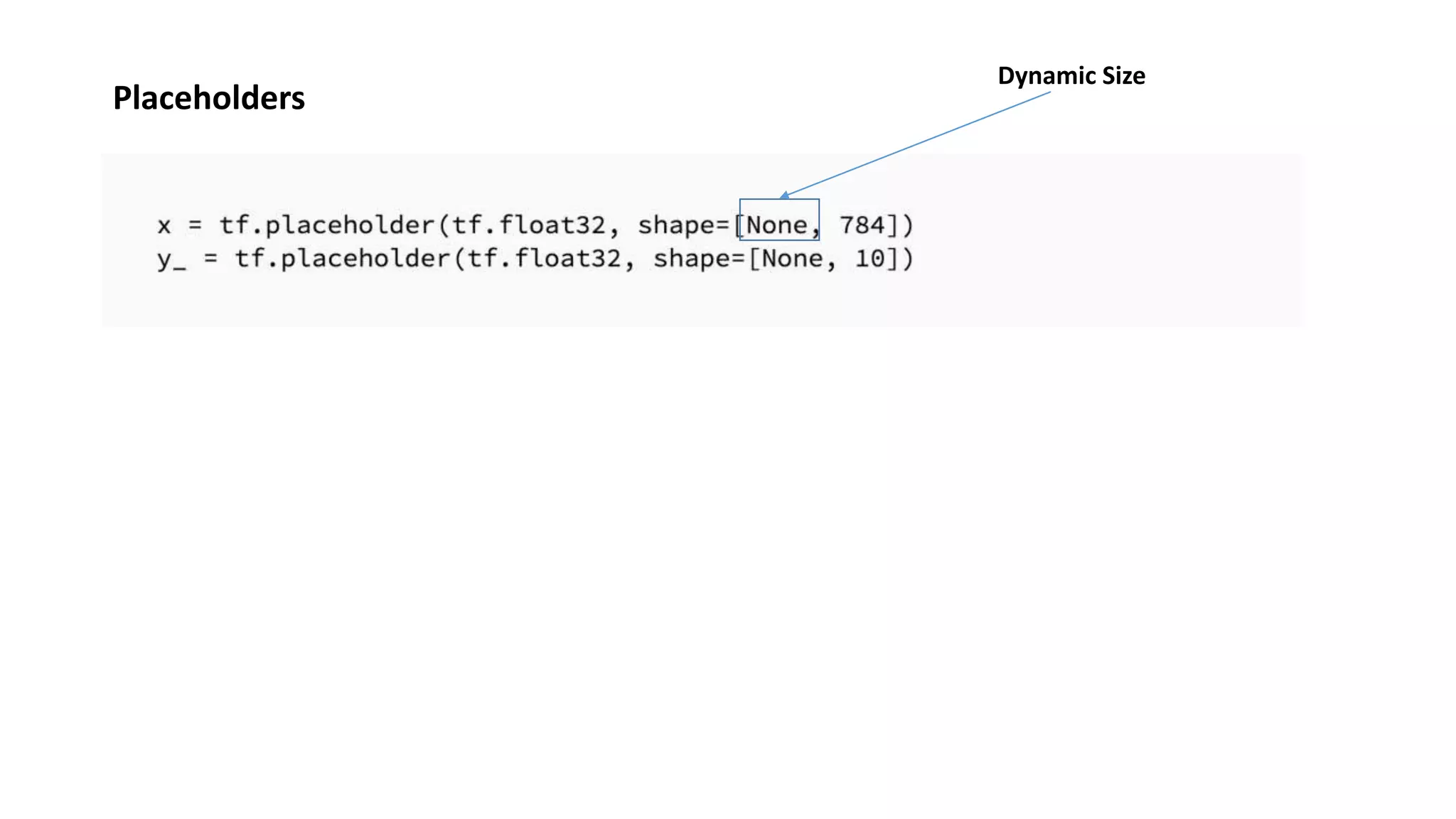

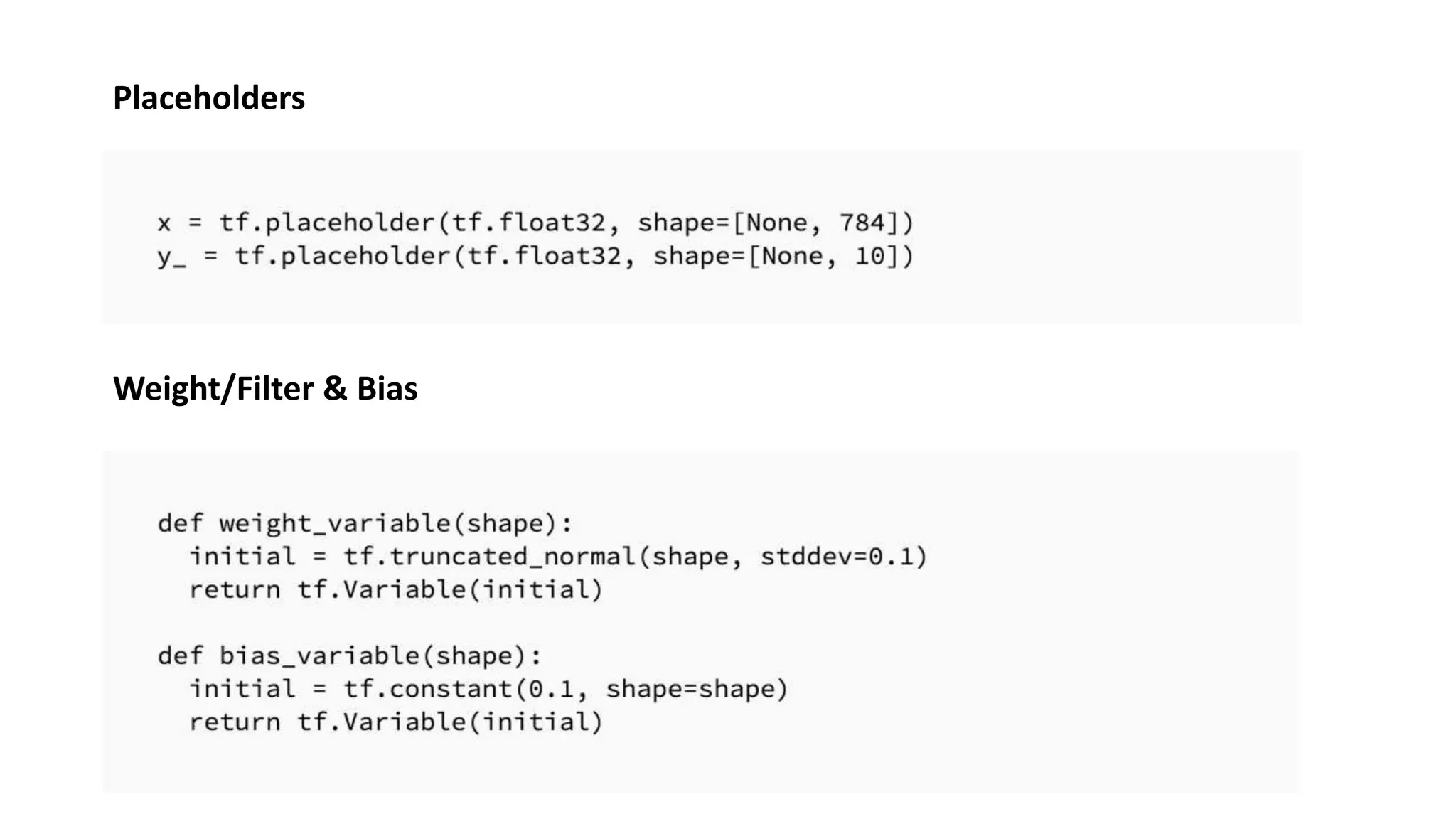

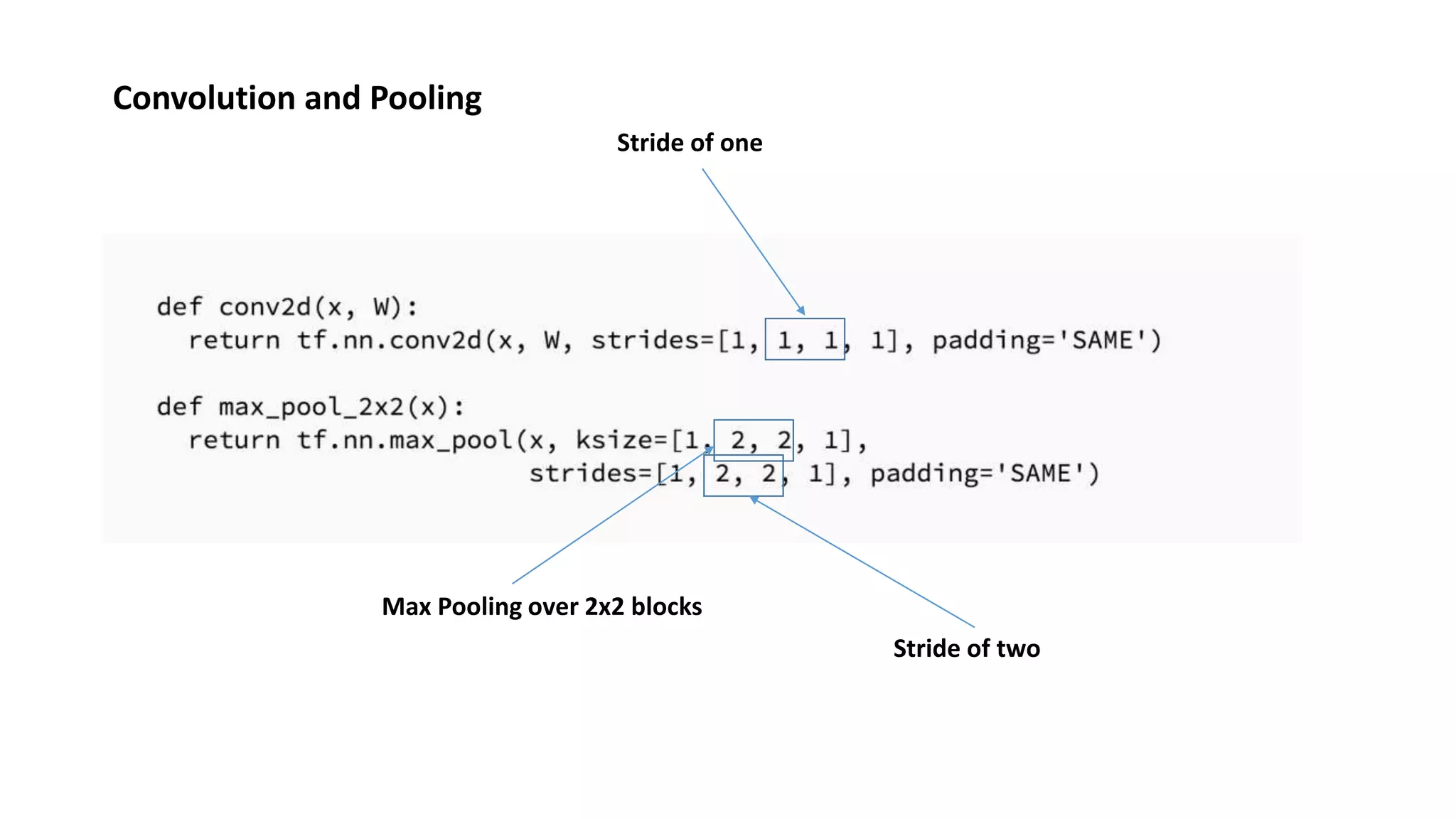

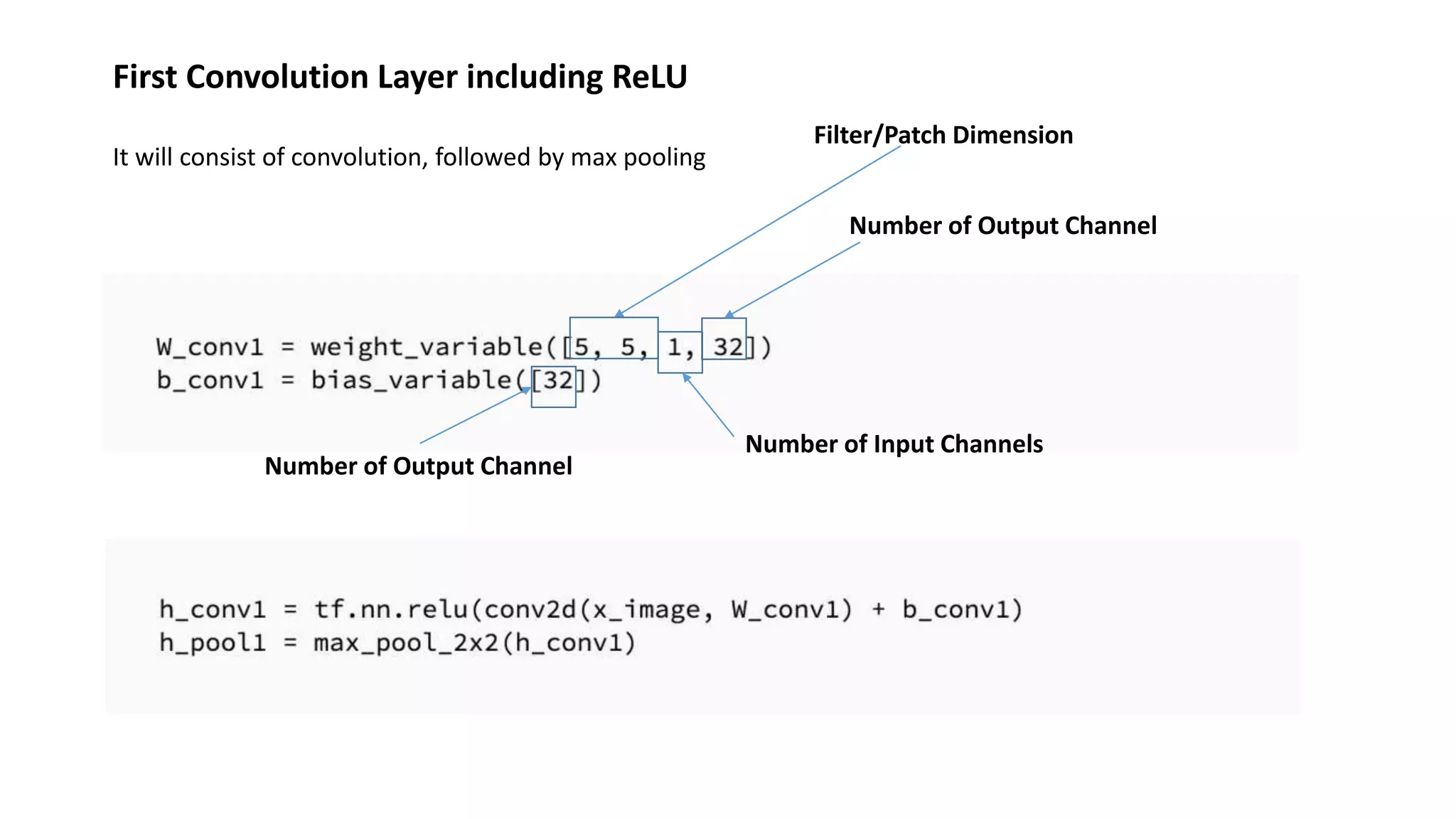

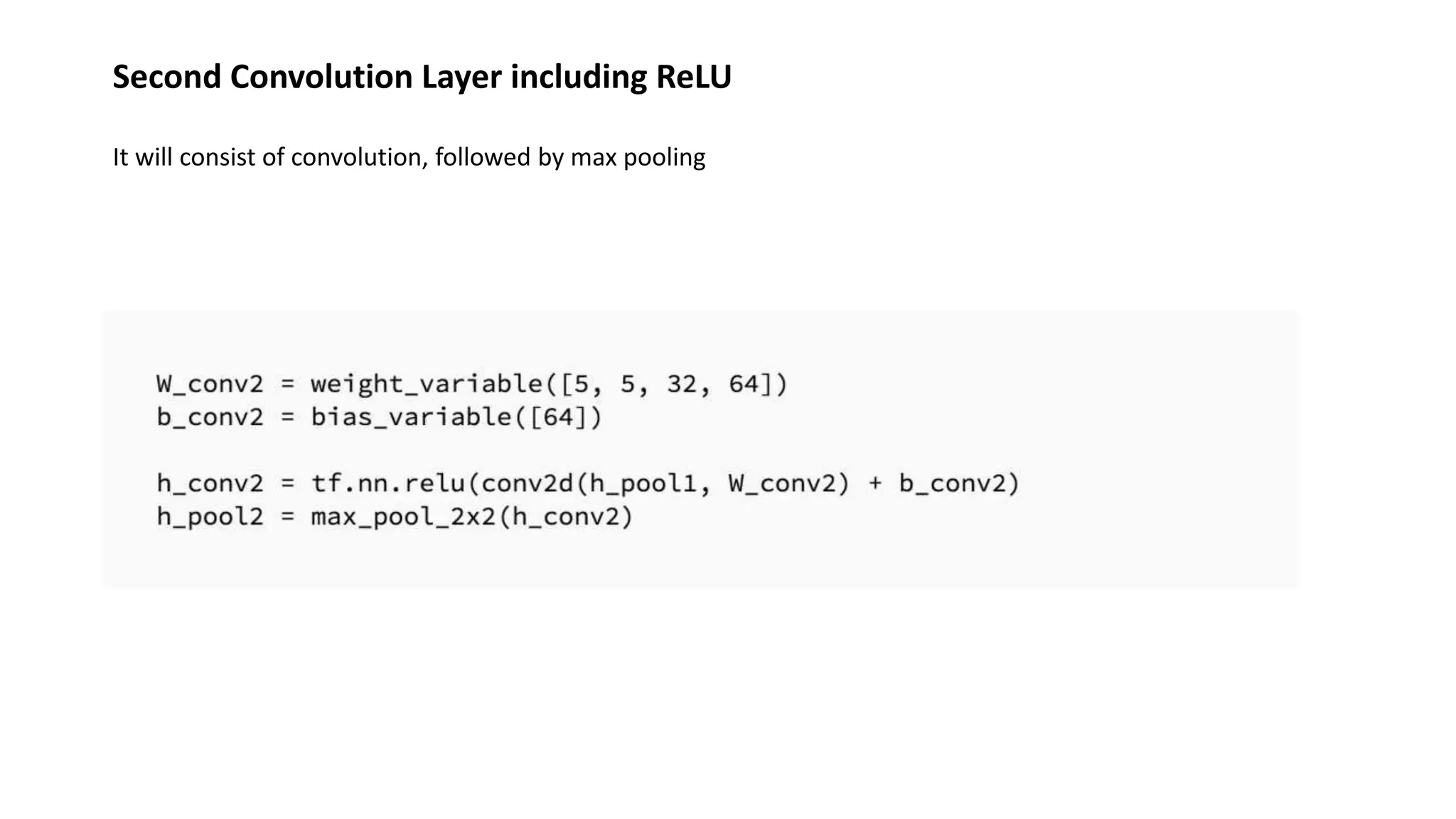

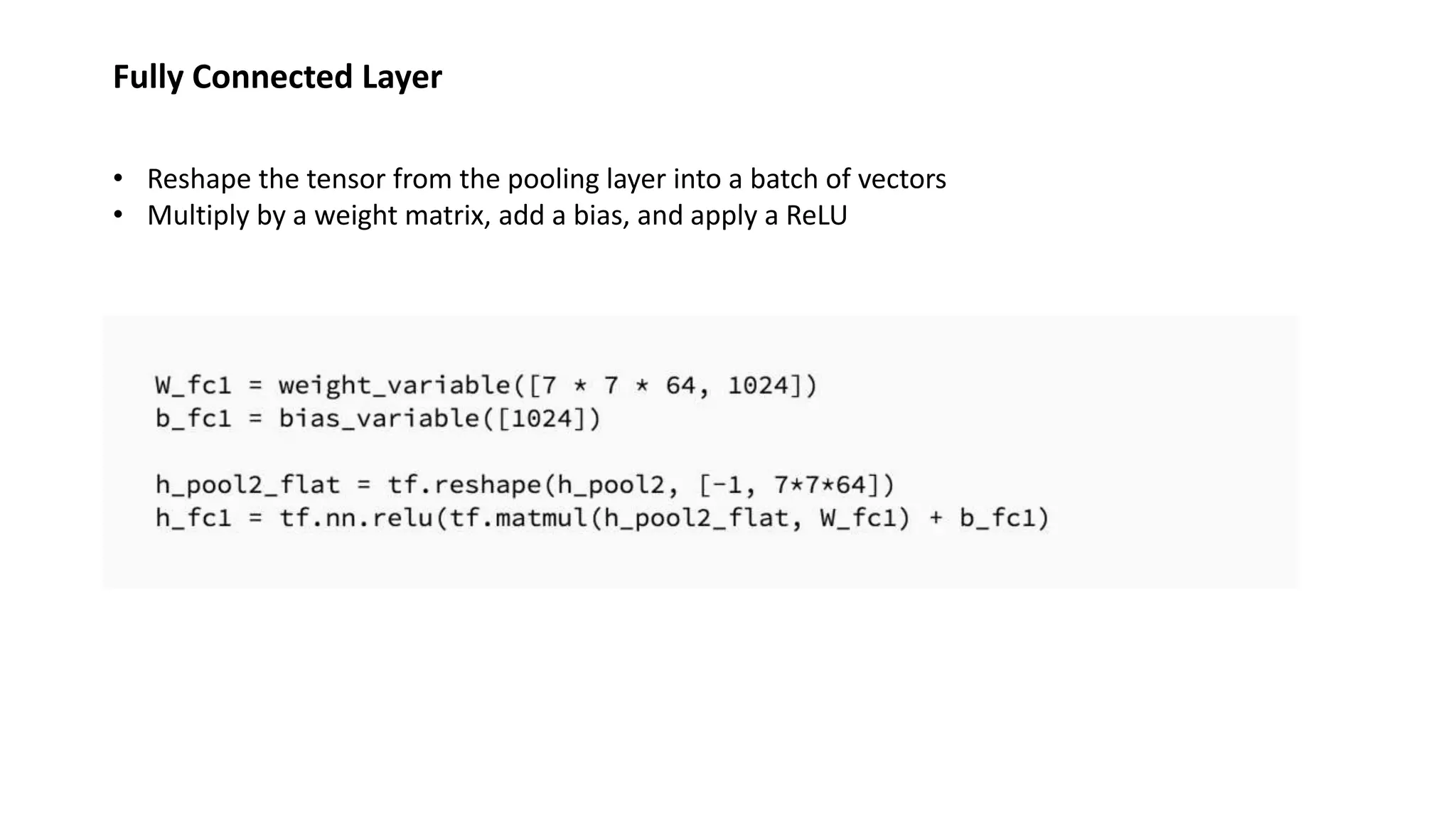

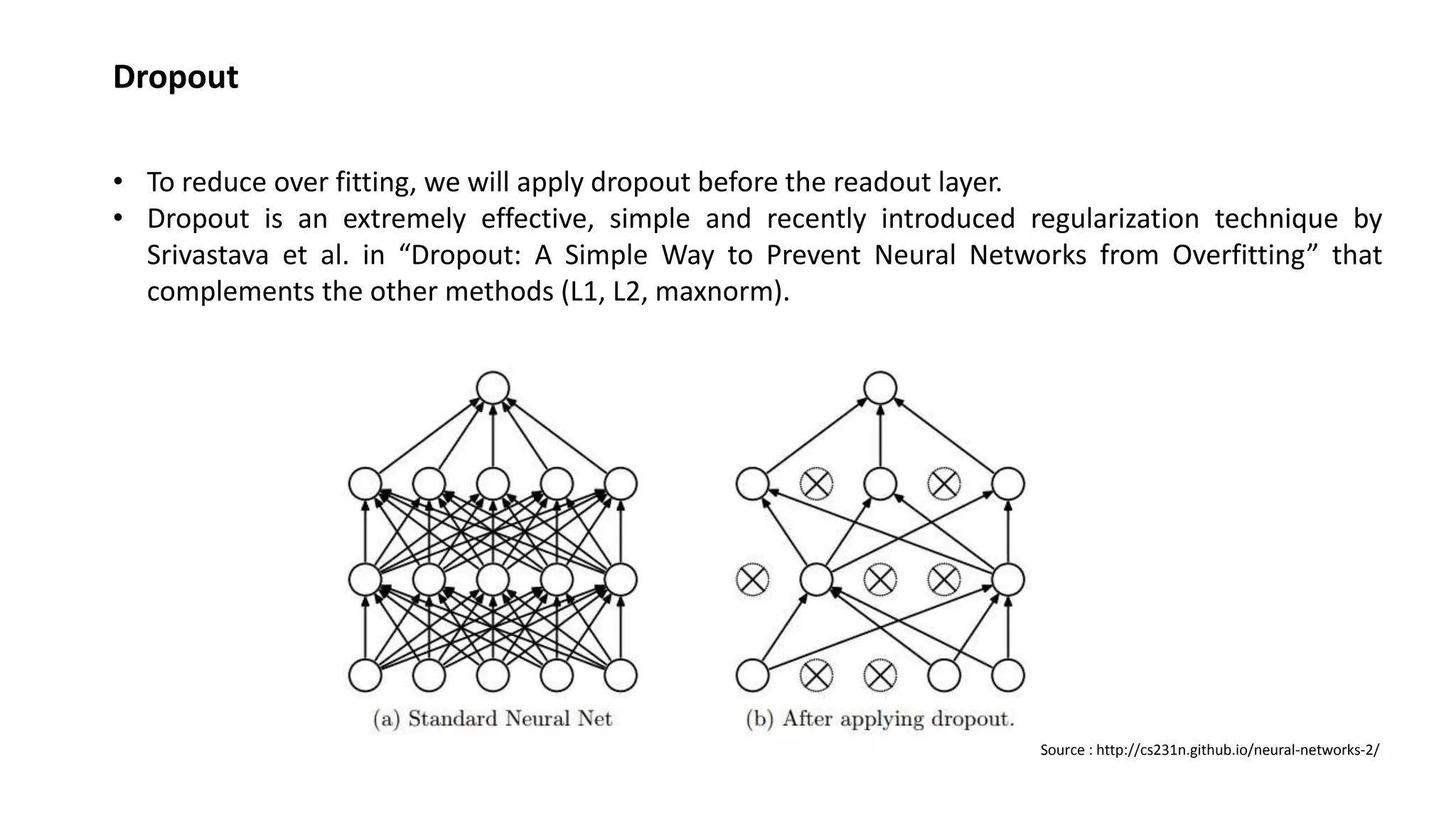

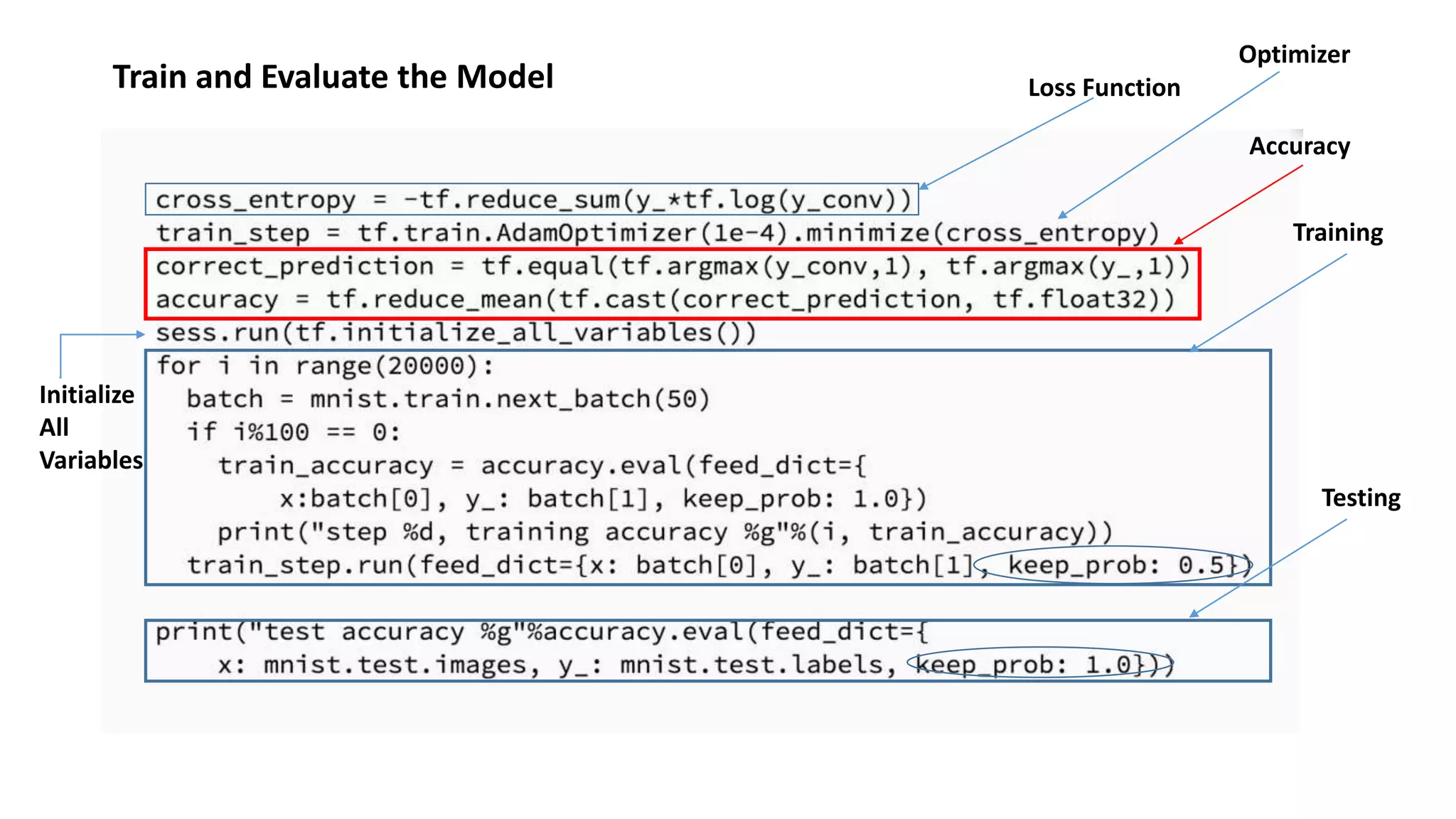

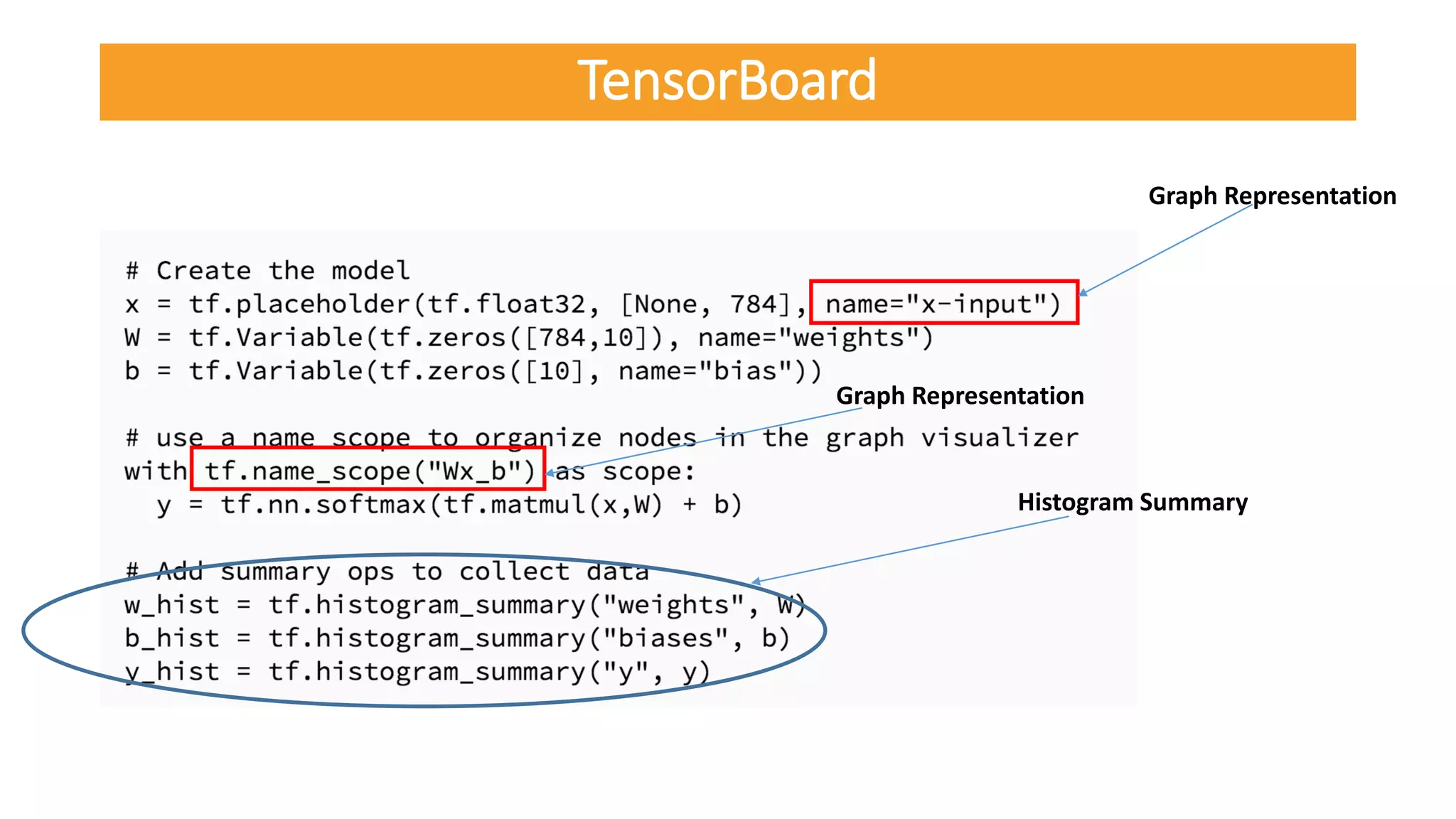

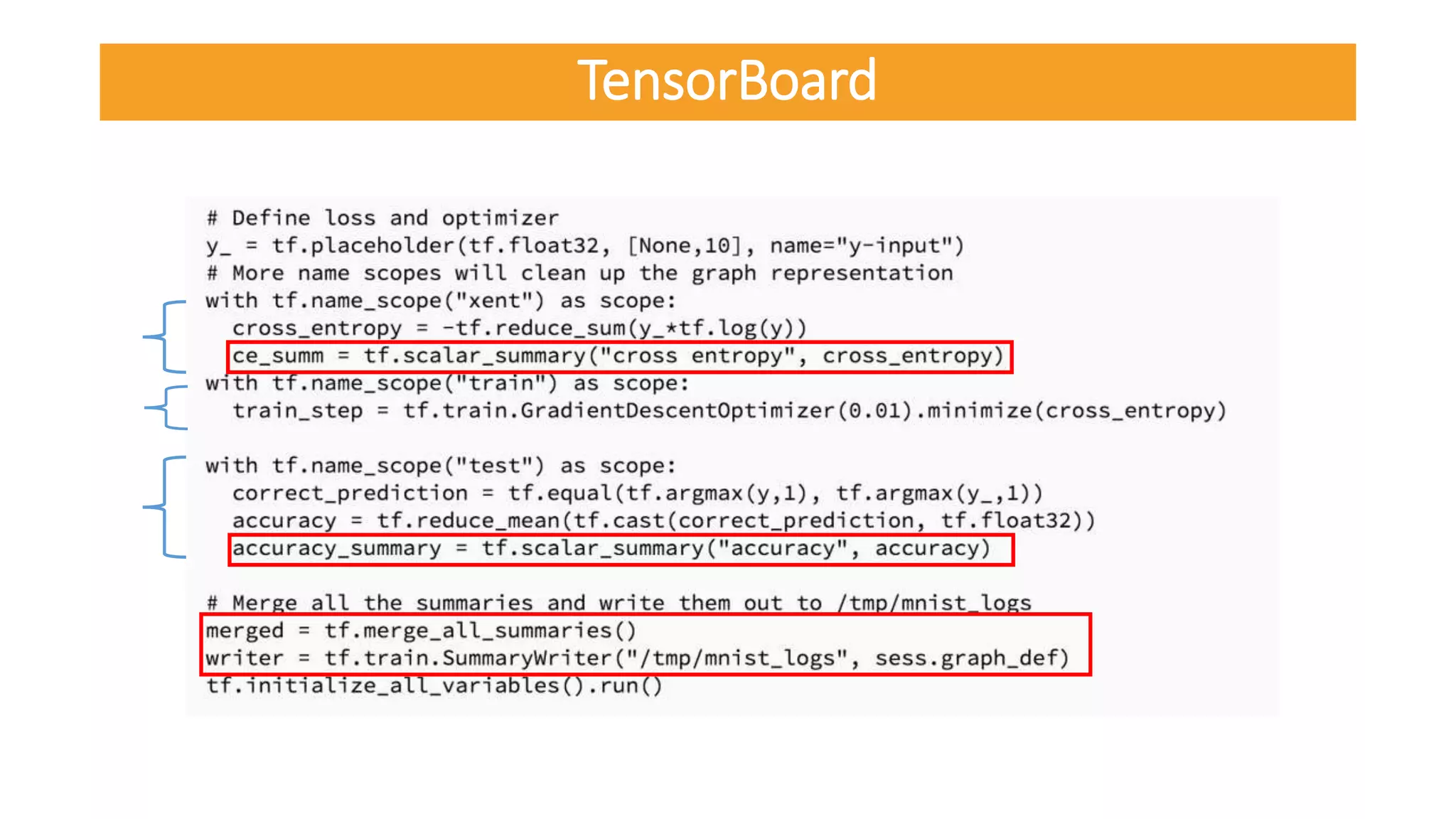

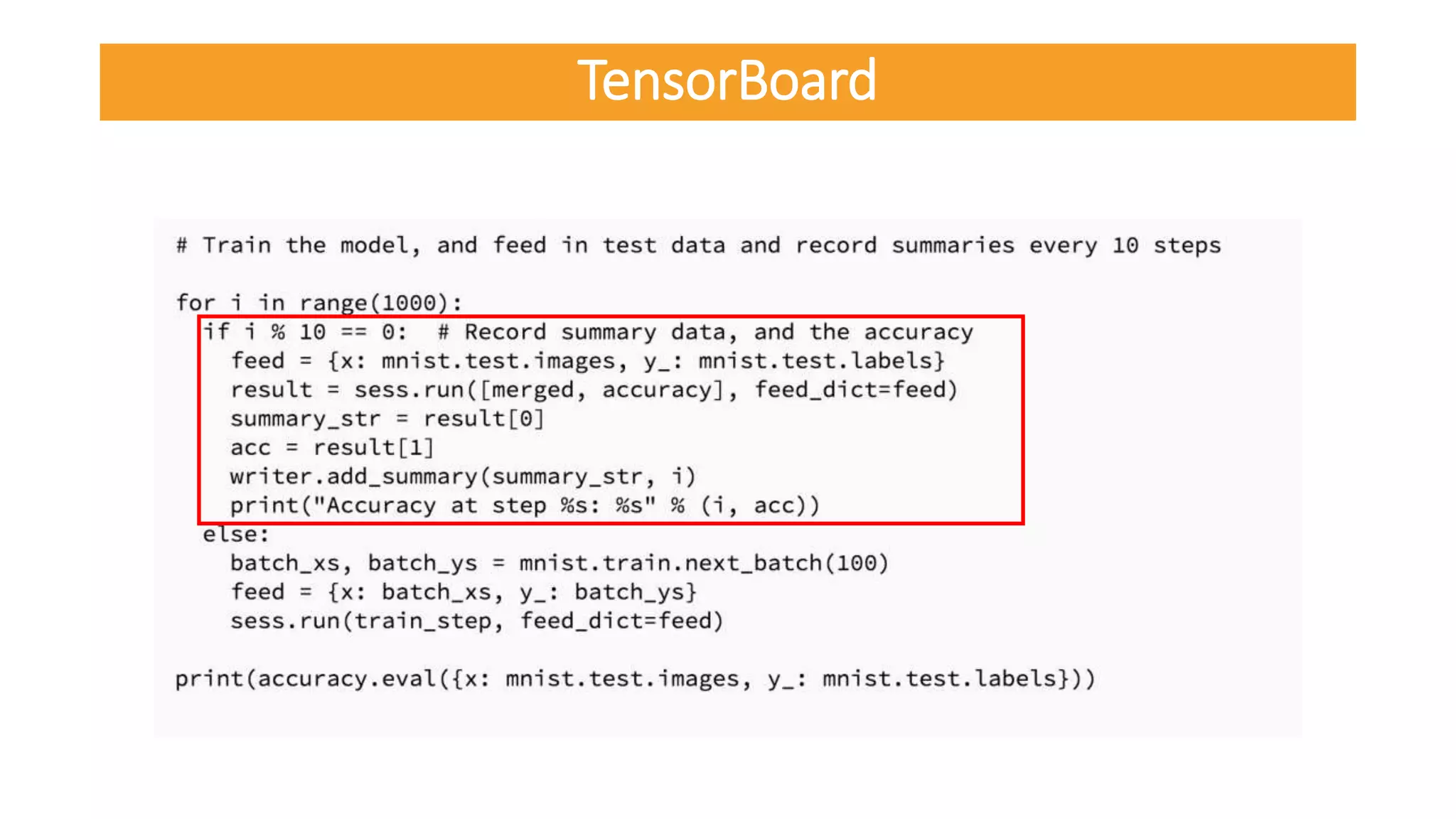

The document provides an overview of neural networks, particularly convolutional neural networks (CNNs) built with Google's TensorFlow, covering their architecture and the underlying mathematics. It introduces key elements of TensorFlow, including tensors, operations, and sessions, and explains dropout, a technique for preventing overfitting in neural networks. It also discusses how TensorFlow is used in various projects at Google and introduces TensorBoard for visualizing the learning process.
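Dropout, mentioned above as a guard against overfitting, is easy to state in code. The following is a minimal sketch in plain Python, not TensorFlow's implementation. It assumes the common "inverted dropout" variant: during training each activation is zeroed with probability `rate` and the survivors are scaled by 1 / (1 - rate), so the expected activation stays the same and inference can skip dropout entirely. The function name `dropout` and its parameters are illustrative choices, not an API from the slides.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], rate: float, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Illustrative inverted dropout (hypothetical helper, not a library API).

    Each unit is zeroed with probability `rate`; surviving units are scaled
    by 1 / (1 - rate) so the expected value of every unit is unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At inference time dropout is a no-op: the training-time scaling
        # already keeps activations at the same expected magnitude.
        return list(activations)
    rng = rng or random.Random()
    keep_prob = 1.0 - rate
    # Keep a unit with probability keep_prob and rescale it; otherwise drop it.
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


if __name__ == "__main__":
    layer = [0.5, 1.2, -0.3, 0.8, 2.0, -1.1]
    print(dropout(layer, rate=0.5, rng=random.Random(0)))  # training: some units zeroed
    print(dropout(layer, rate=0.5, training=False))         # inference: unchanged
```

Because a different random subset of units is dropped on every training step, no single unit can rely on the presence of specific others, which is the intuition behind dropout's regularizing effect.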