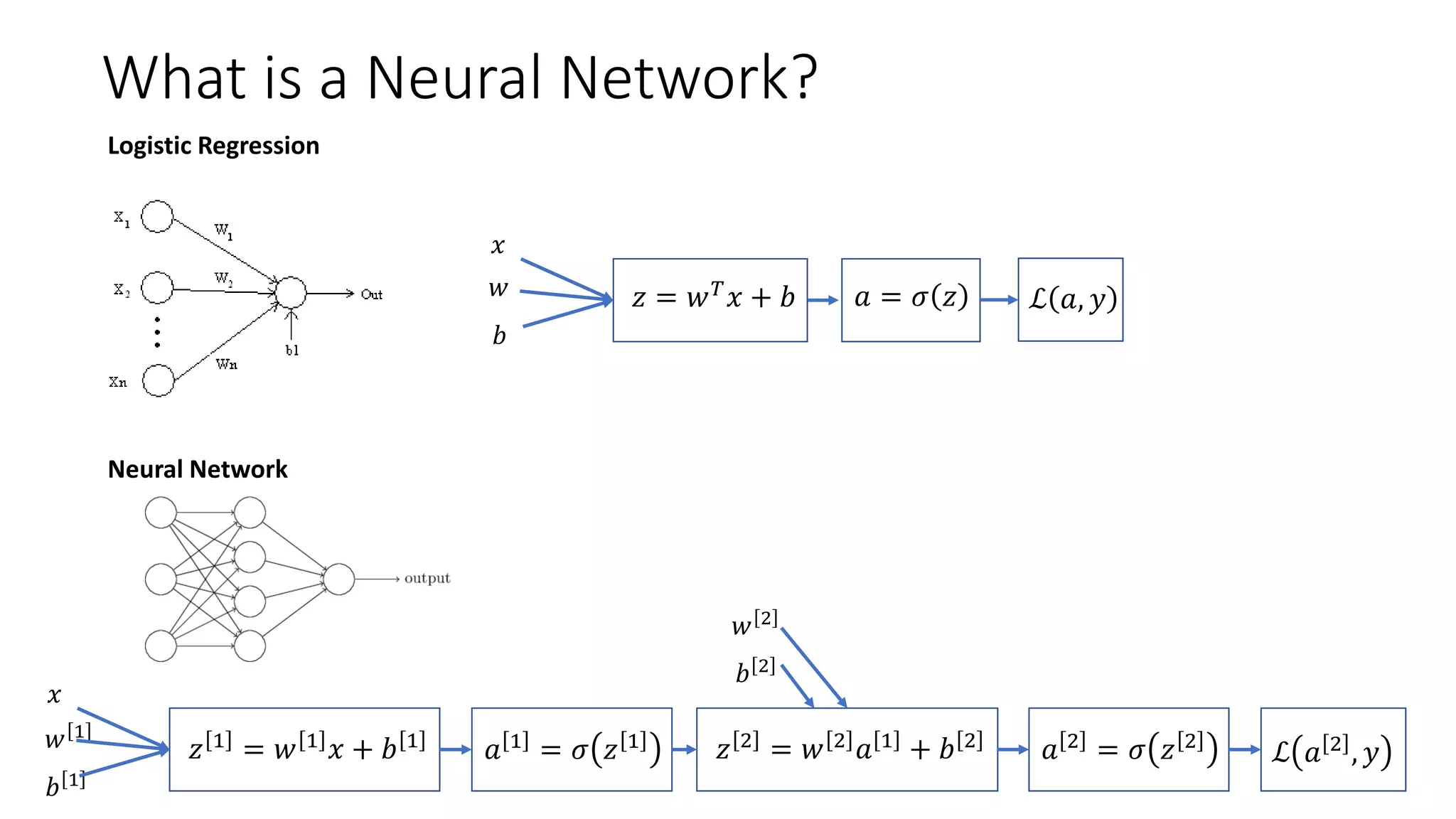

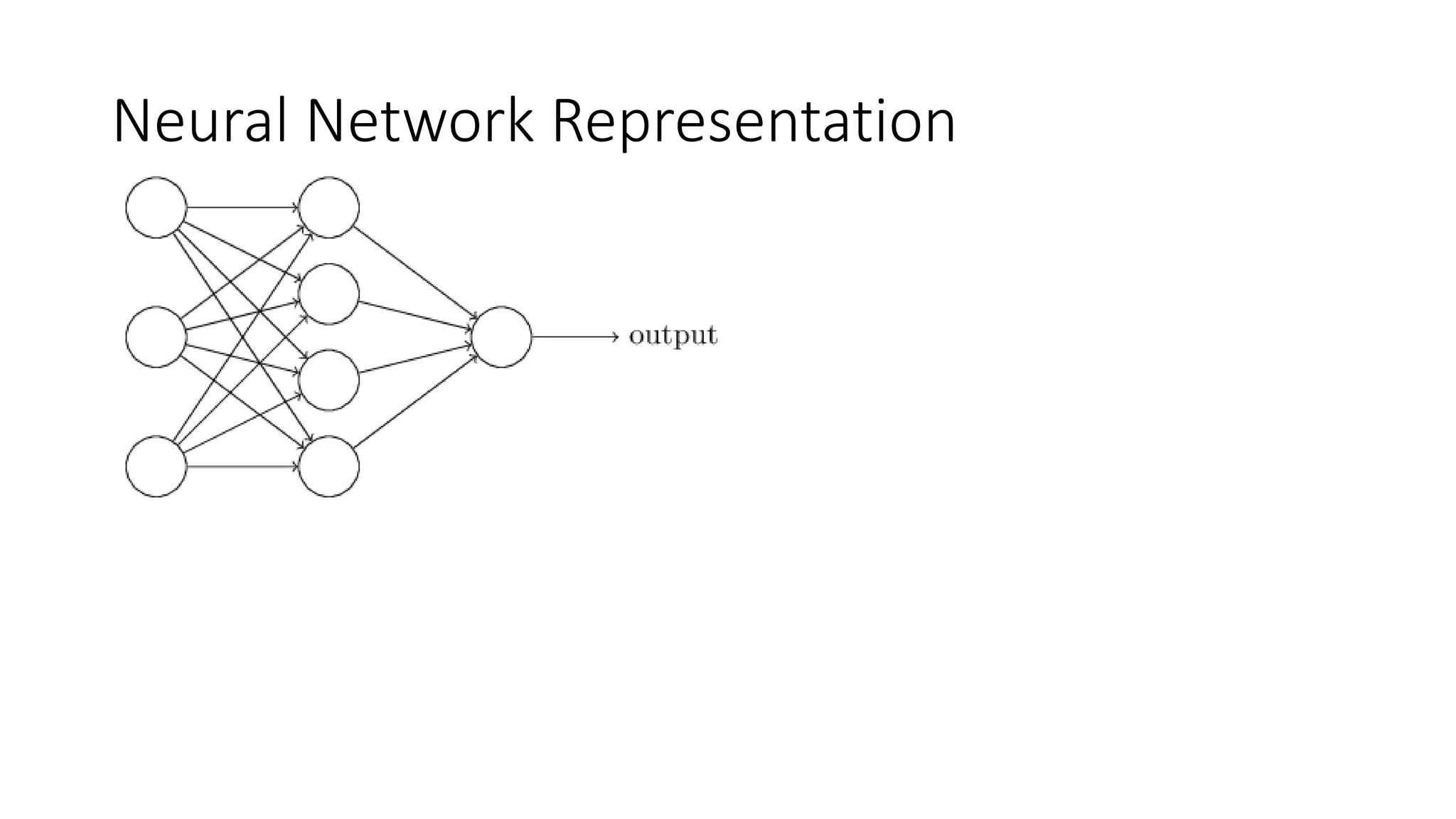

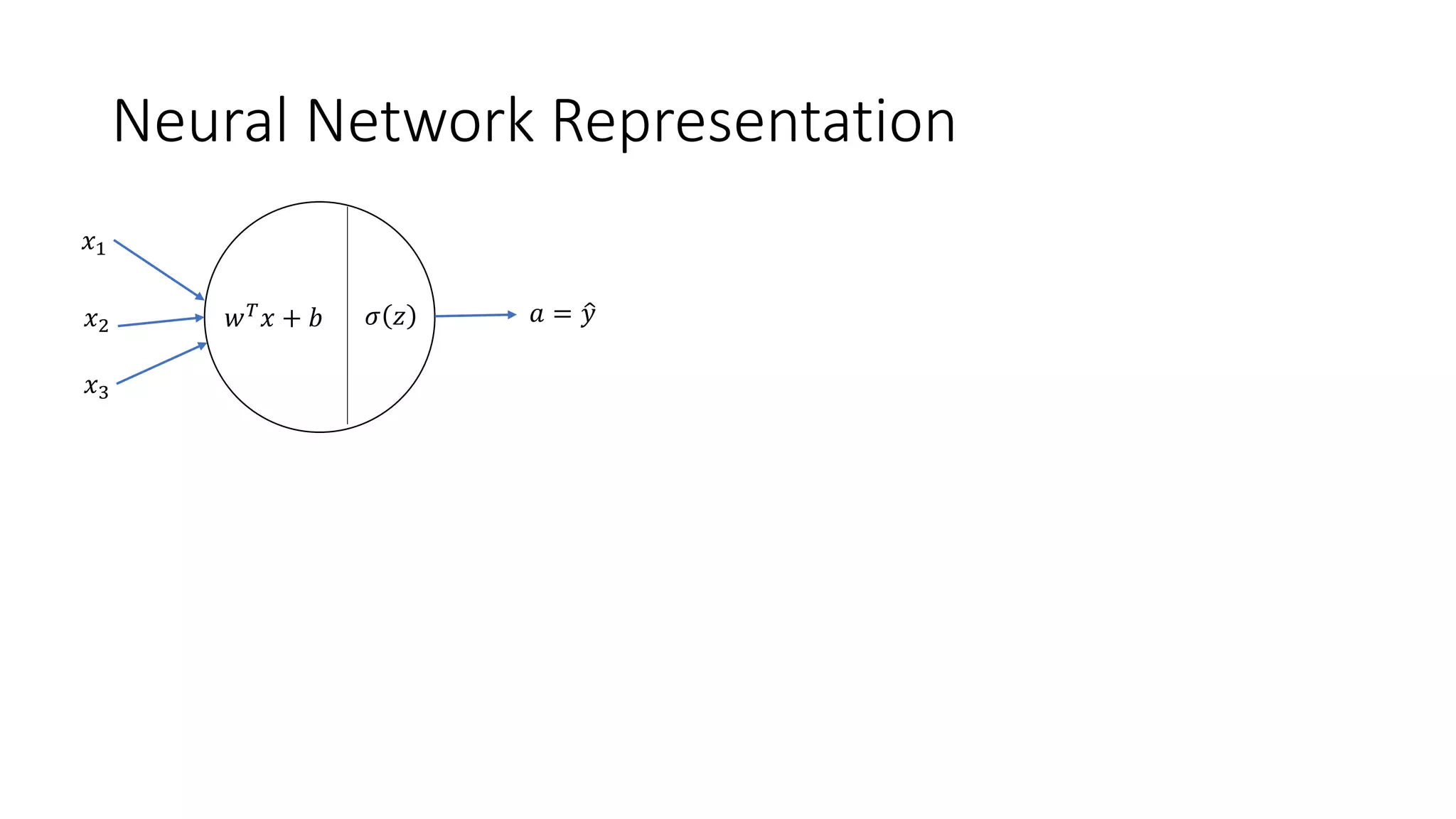

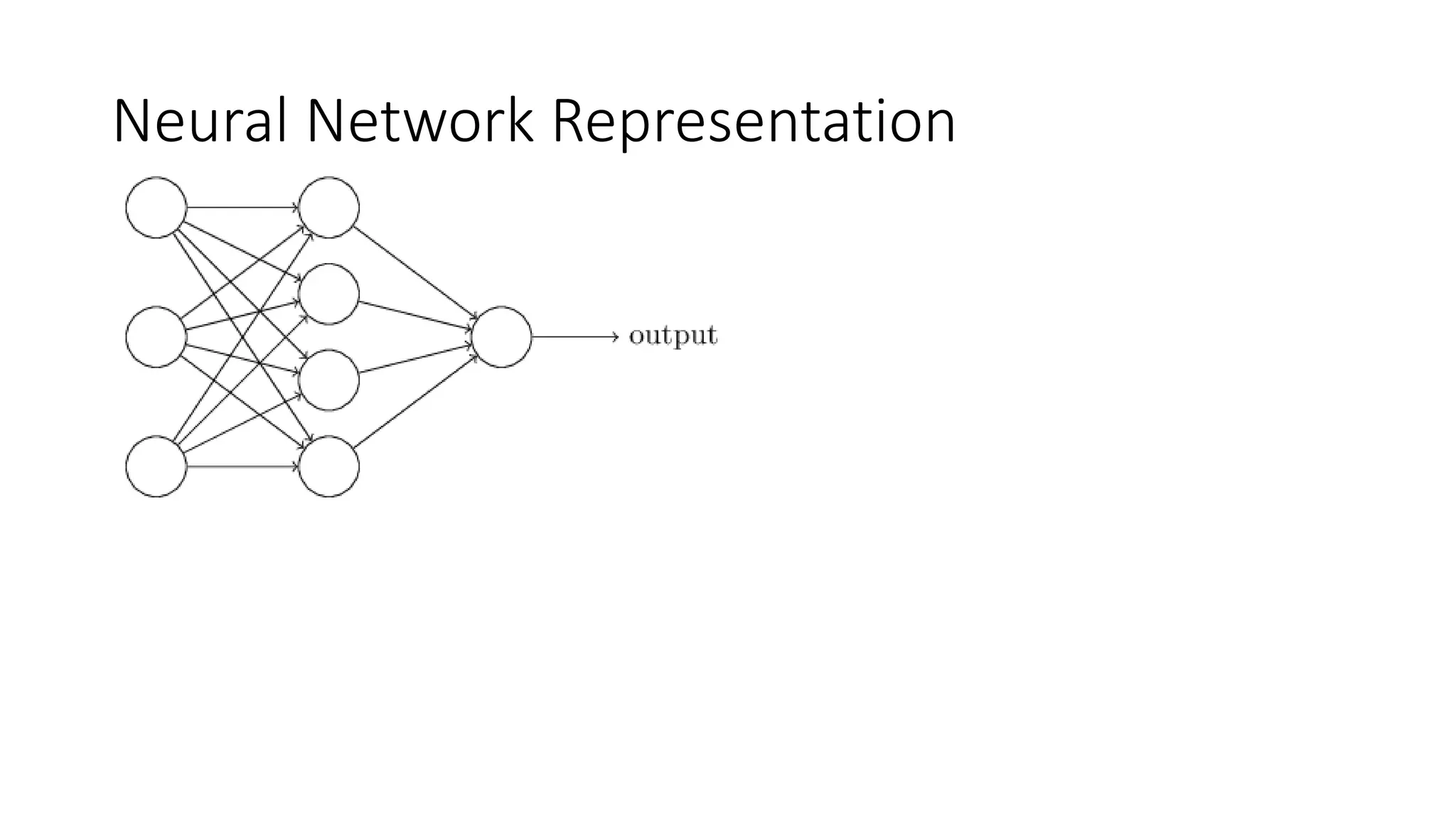

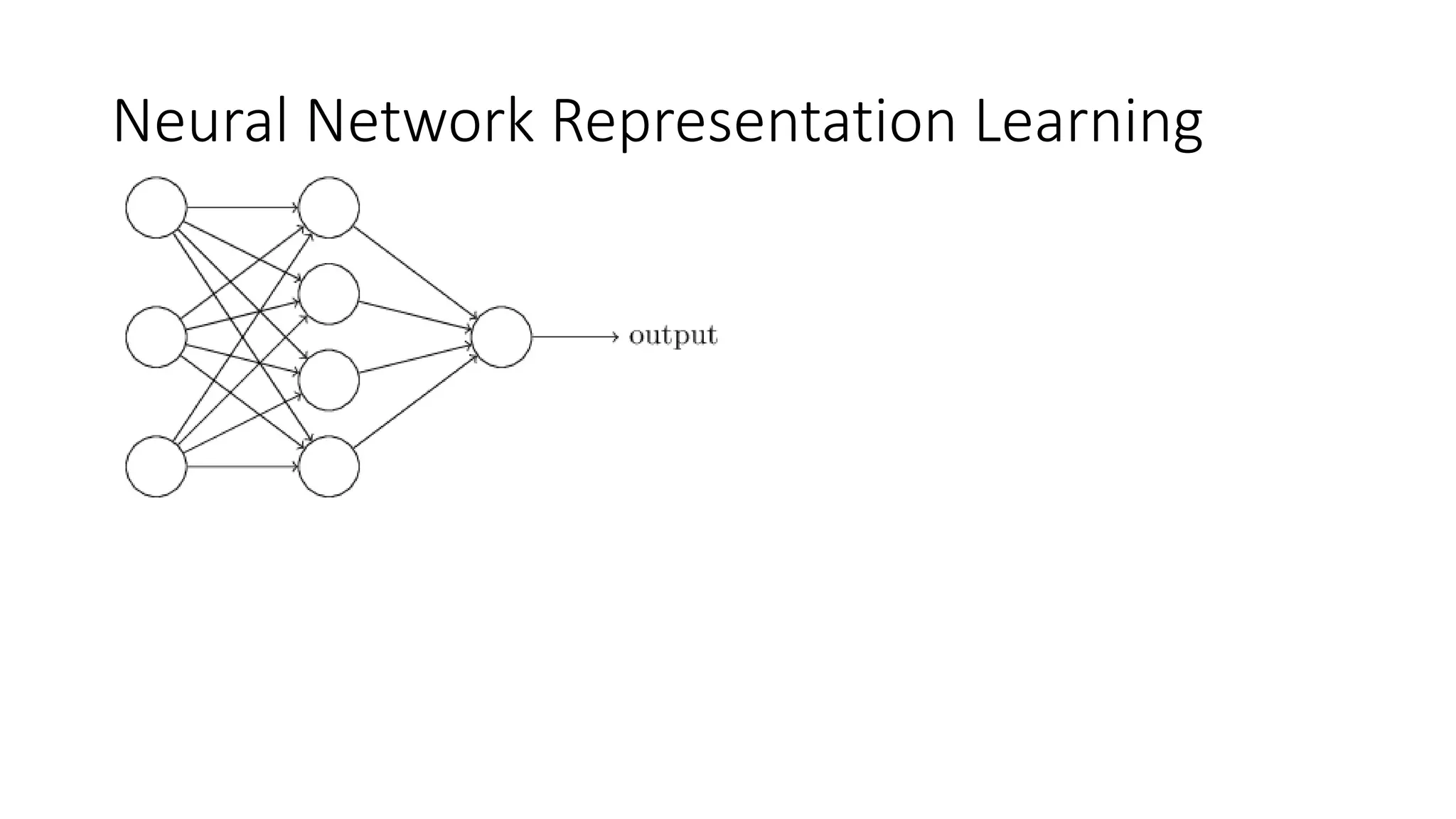

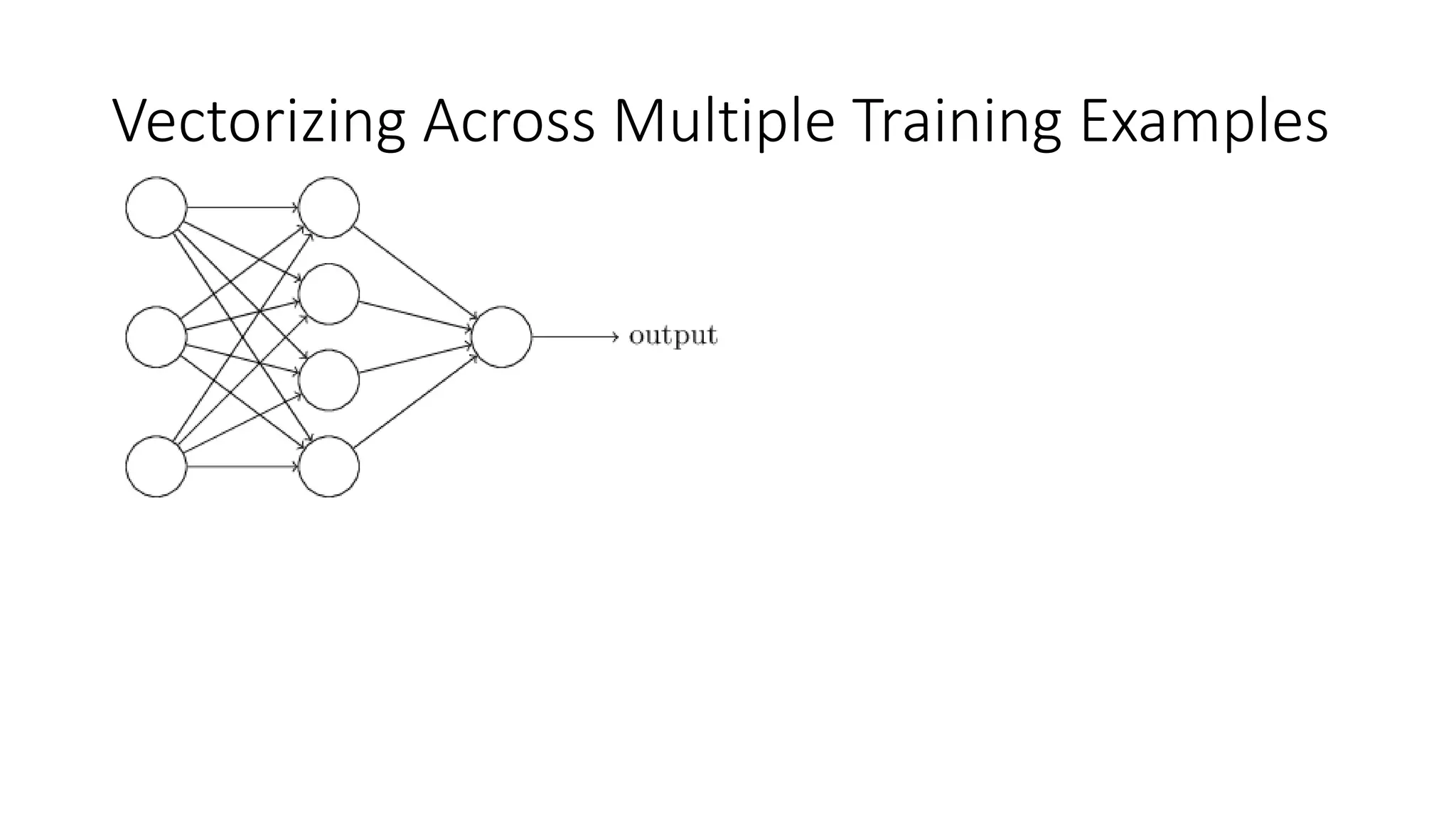

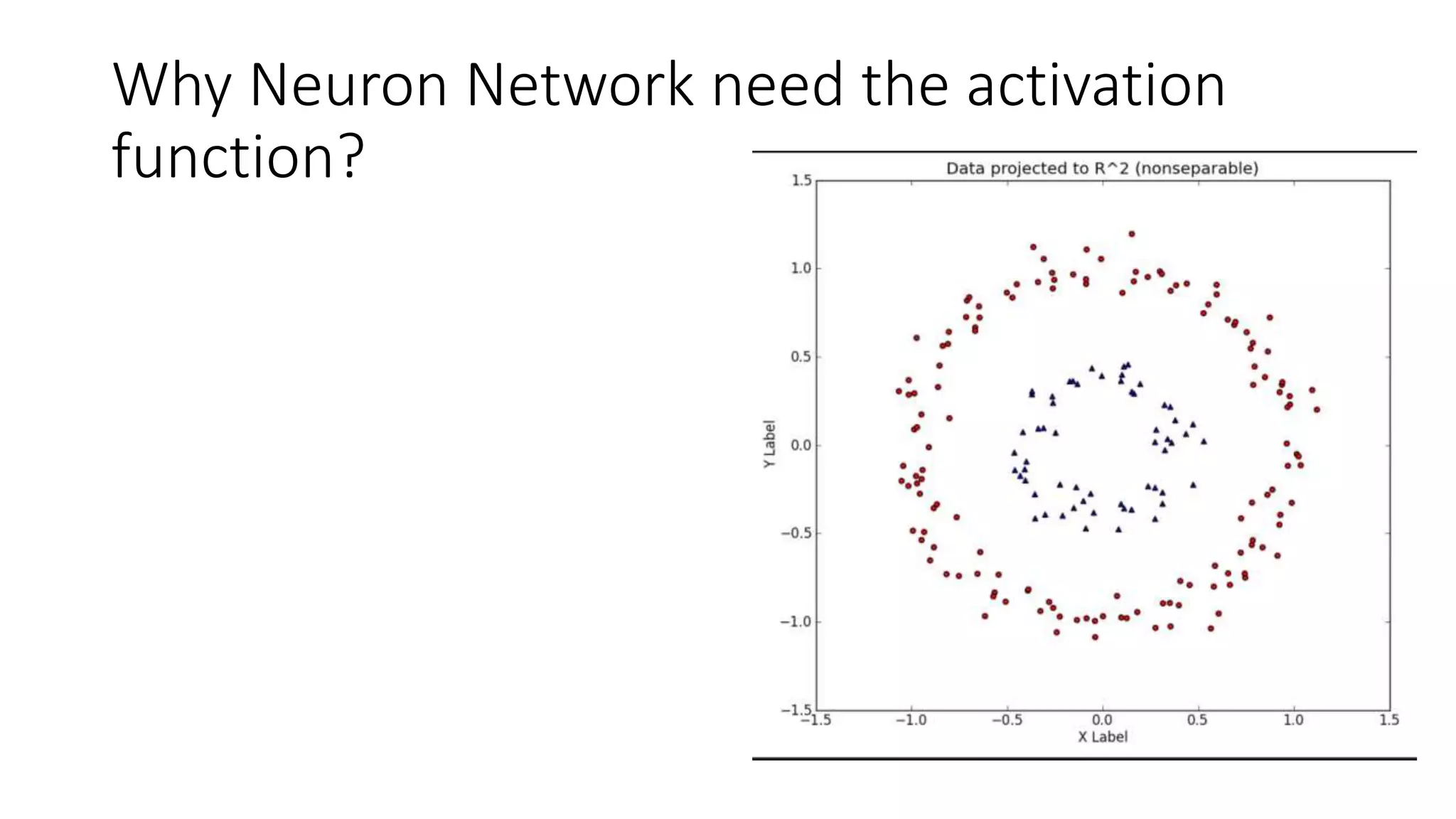

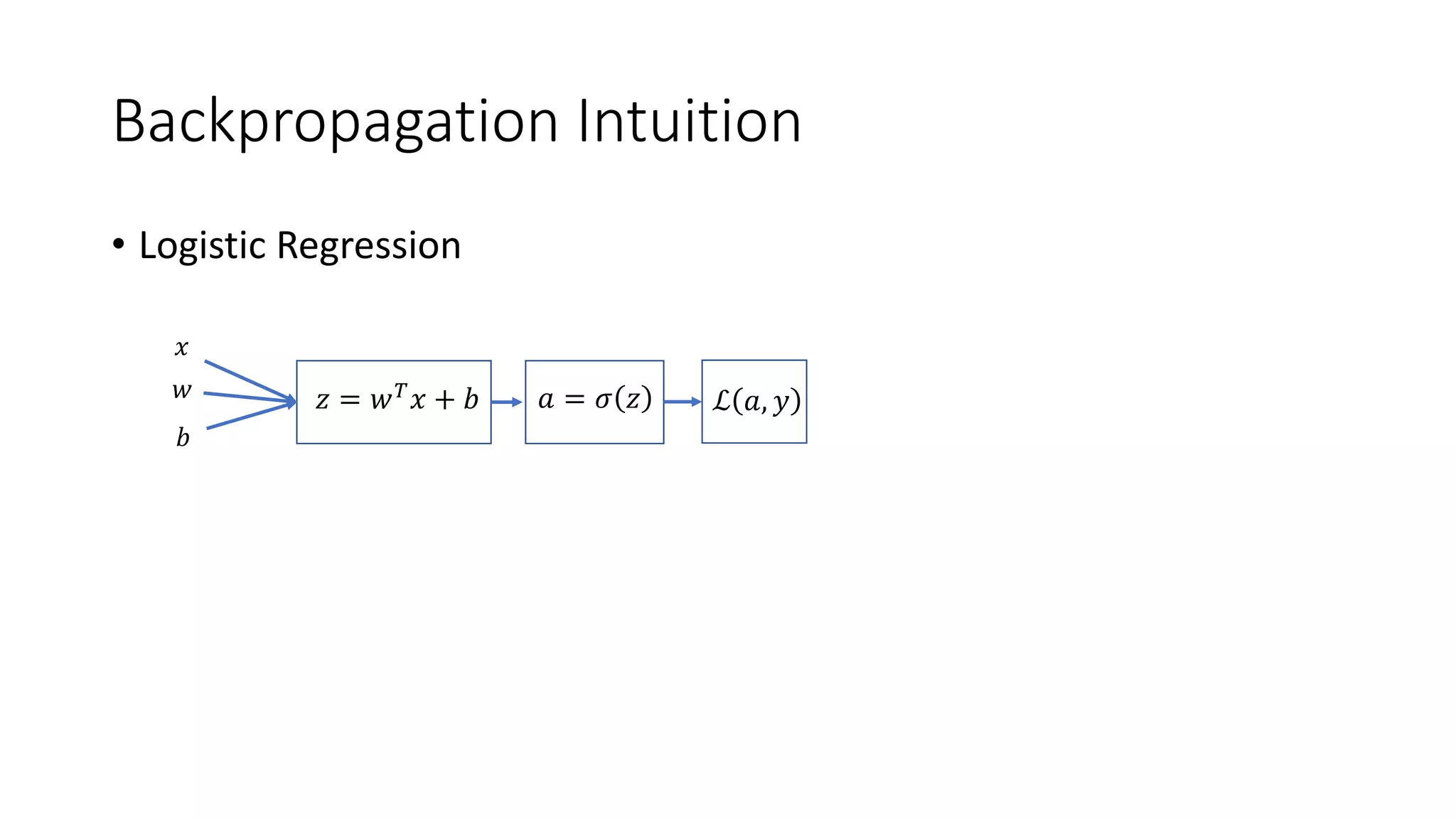

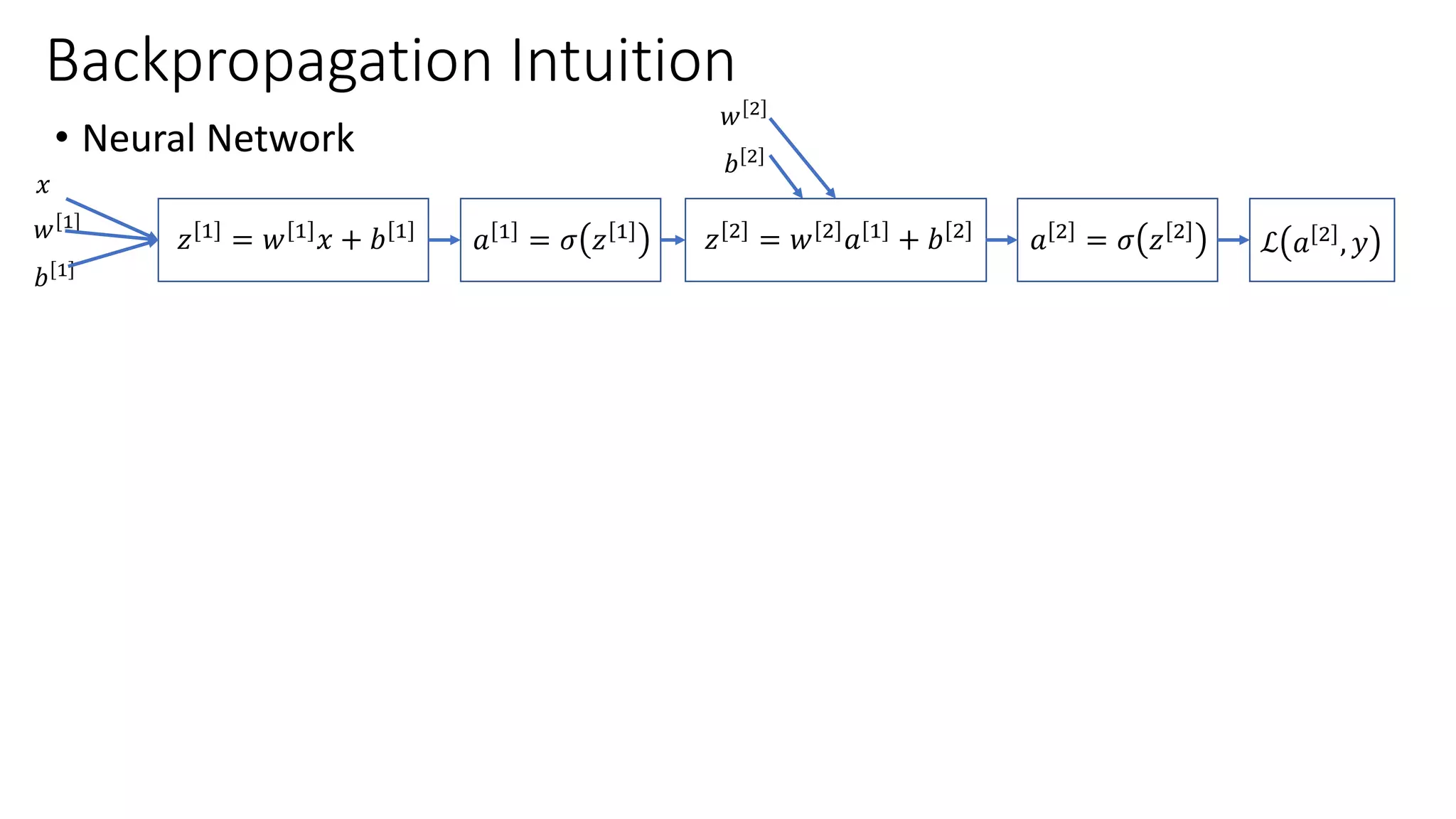

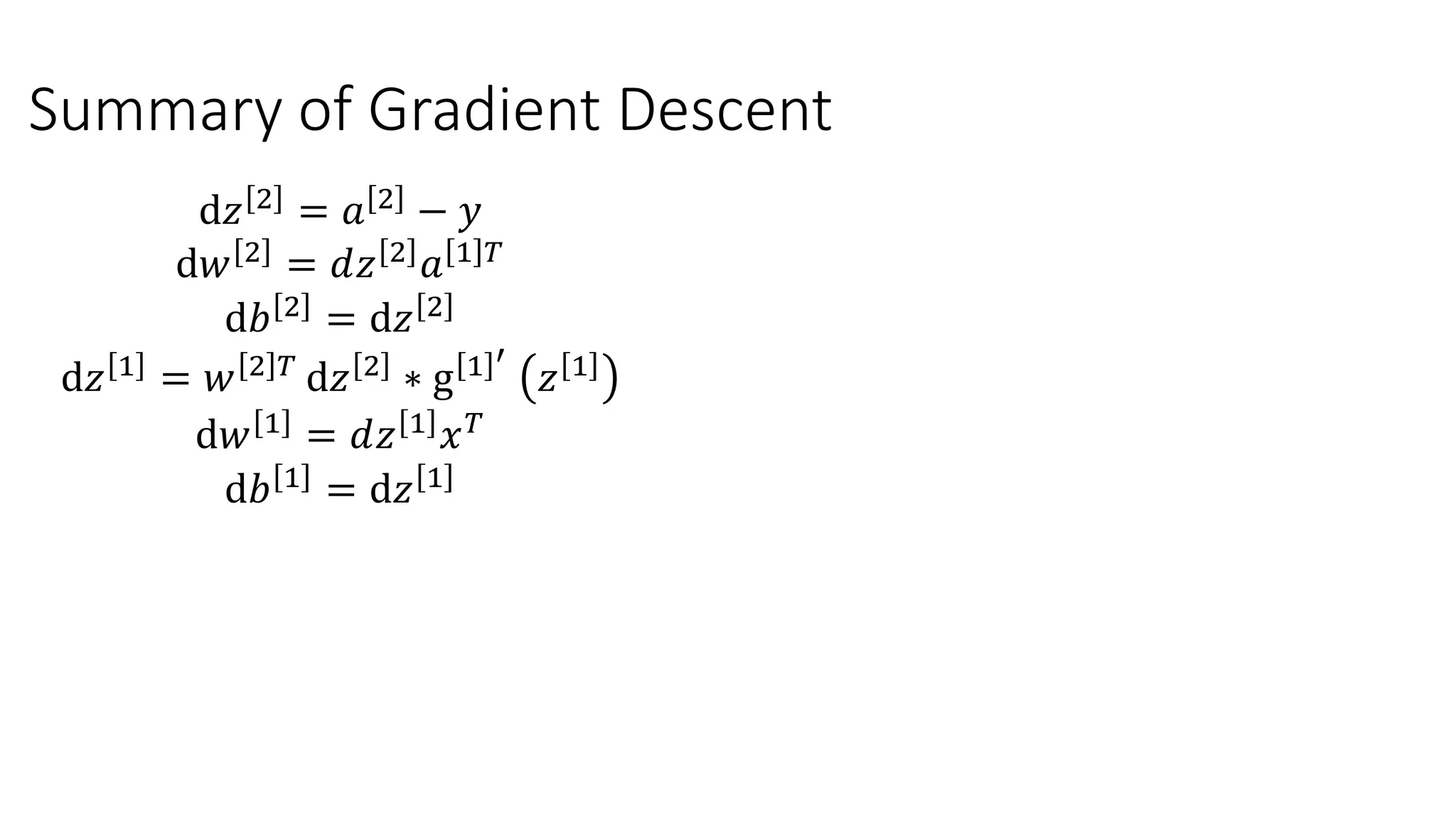

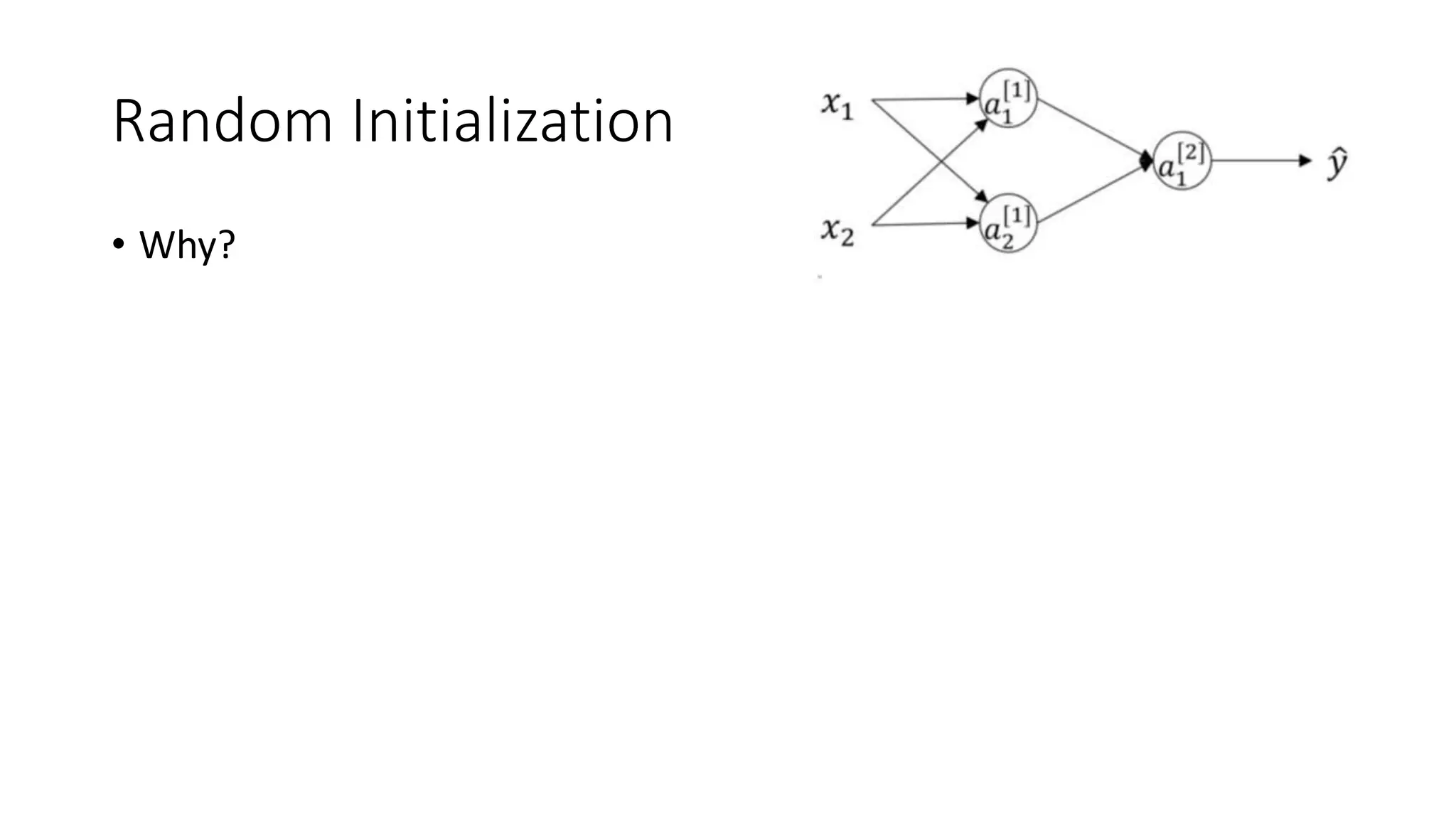

This lecture covers shallow neural networks, that is, networks with a single hidden layer. It introduces how such a network is represented, how training examples are vectorized so a whole batch is processed at once, and the common activation functions: sigmoid, tanh, and ReLU. Activation functions supply the non-linearity a network depends on; without them, stacked layers collapse into a single linear map. The lecture also covers backpropagation for computing gradients and gradient descent for updating the weights. Finally, it explains why weights should be initialized randomly: identical initial weights make every hidden unit compute the same function, and random values break that symmetry.
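The pieces named above can be put together in a short NumPy sketch. It is an illustration under stated assumptions, not the lecture's own code: the function names (`init_params`, `forward`, `backward`, `train`), the tanh hidden layer with a sigmoid output, the cross-entropy cost, the initialization scale of 0.01, and the learning rate are all choices made here for the example. Training examples are stacked as columns of `X`, so one matrix product handles the whole batch.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(n_x, n_h, n_y, rng, scale=0.01):
    # Small random weights break the symmetry between hidden units.
    # Biases can start at zero. `scale` is an assumed value.
    return {
        "W1": rng.standard_normal((n_h, n_x)) * scale,
        "b1": np.zeros((n_h, 1)),
        "W2": rng.standard_normal((n_y, n_h)) * scale,
        "b2": np.zeros((n_y, 1)),
    }

def forward(X, p):
    # X has shape (n_x, m): one training example per column.
    A1 = np.tanh(p["W1"] @ X + p["b1"])    # hidden layer, non-linear
    A2 = sigmoid(p["W2"] @ A1 + p["b2"])   # output layer, values in (0, 1)
    return A1, A2

def cost(A2, Y):
    # Mean binary cross-entropy; eps guards against log(0).
    eps = 1e-12
    return float(-np.mean(Y * np.log(A2 + eps) + (1 - Y) * np.log(1 - A2 + eps)))

def backward(X, Y, p, A1, A2):
    # Backpropagation for the cost above, averaged over m examples.
    m = X.shape[1]
    dZ2 = A2 - Y                                 # sigmoid + cross-entropy
    dZ1 = (p["W2"].T @ dZ2) * (1.0 - A1 ** 2)    # tanh'(z) = 1 - tanh(z)^2
    return {
        "W1": dZ1 @ X.T / m,
        "b1": dZ1.sum(axis=1, keepdims=True) / m,
        "W2": dZ2 @ A1.T / m,
        "b2": dZ2.sum(axis=1, keepdims=True) / m,
    }

def train(X, Y, p, lr=0.5, steps=200):
    # Plain batch gradient descent; returns a new parameter dict.
    p = dict(p)
    for _ in range(steps):
        A1, A2 = forward(X, p)
        grads = backward(X, Y, p, A1, A2)
        for name in p:
            p[name] = p[name] - lr * grads[name]
    return p

if __name__ == "__main__":
    # Toy data (XOR), chosen only for illustration.
    X = np.array([[0.0, 0.0, 1.0, 1.0],
                  [0.0, 1.0, 0.0, 1.0]])
    Y = np.array([[0.0, 1.0, 1.0, 0.0]])
    rng = np.random.default_rng(0)

    random_start = init_params(2, 3, 1, rng)
    constant_start = {k: np.full_like(v, 0.5) for k, v in random_start.items()}

    for label, start in [("random", random_start), ("constant", constant_start)]:
        p = train(X, Y, start)
        same = np.allclose(p["W1"], p["W1"][0])
        print(label, "init: hidden units identical after training?", same)
```

Running the script compares two starting points. With constant initial weights, every hidden unit receives the same gradient at every step, so the rows of `W1` never diverge and the extra units add nothing; the random start avoids this.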