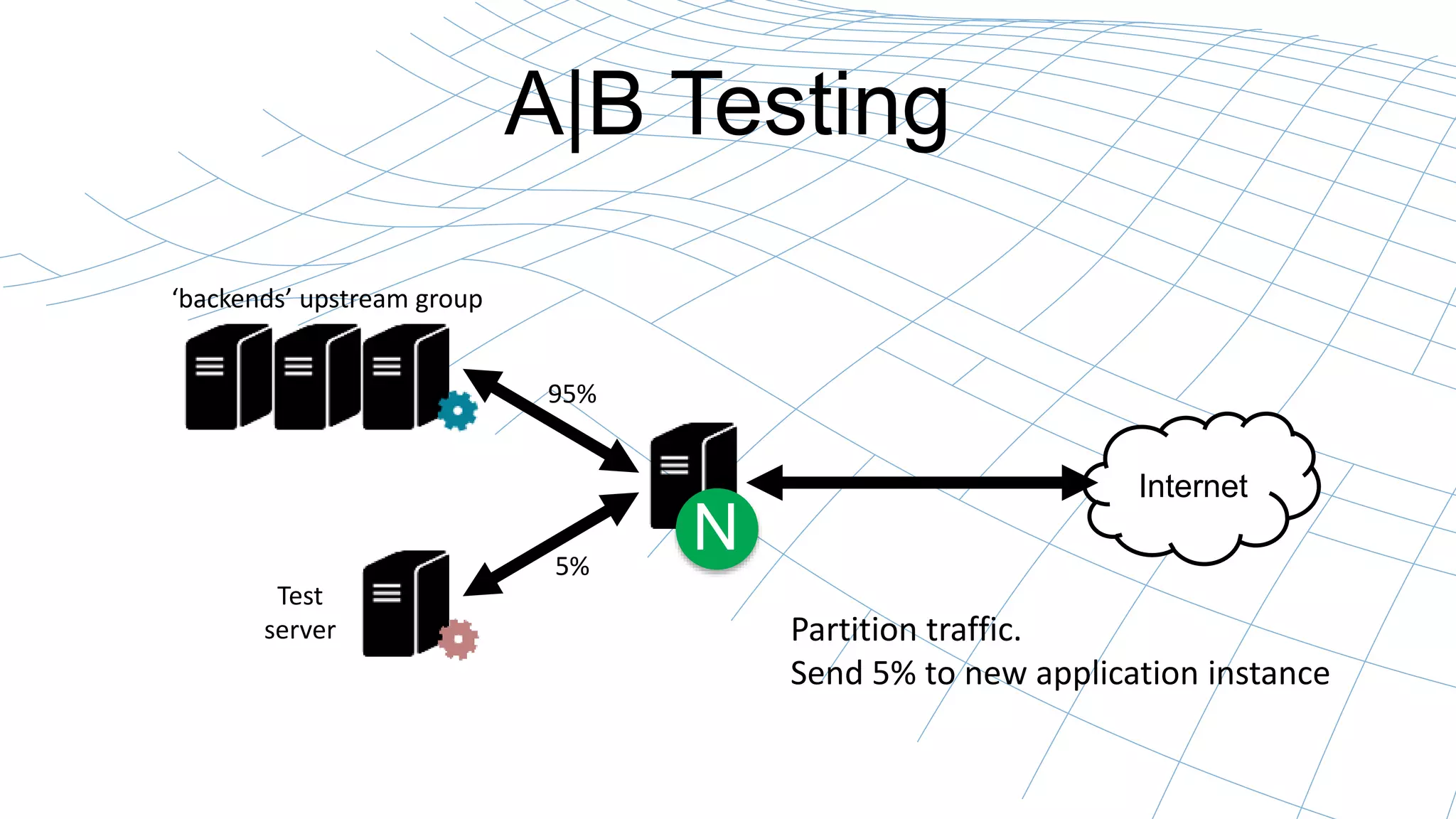

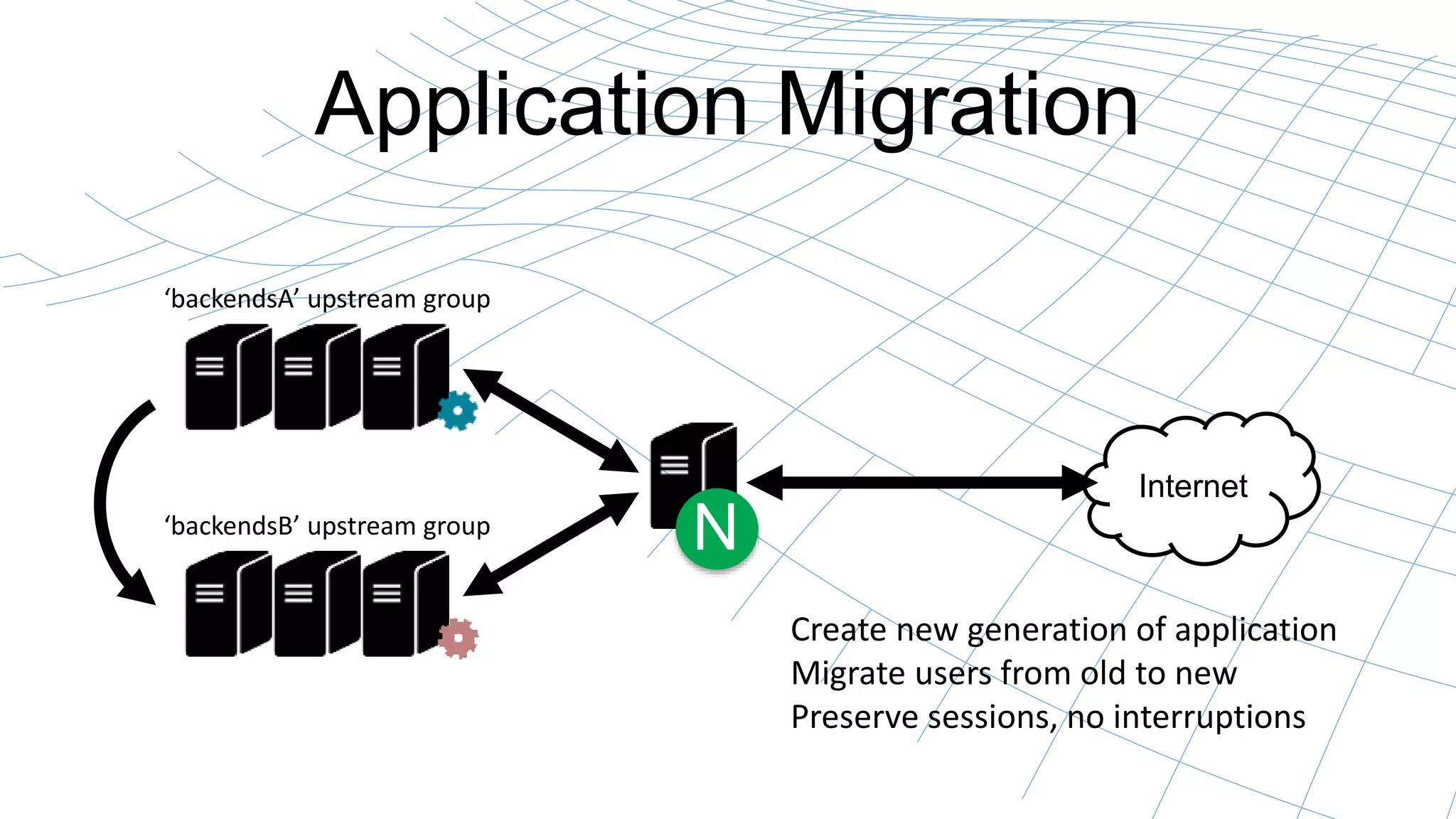

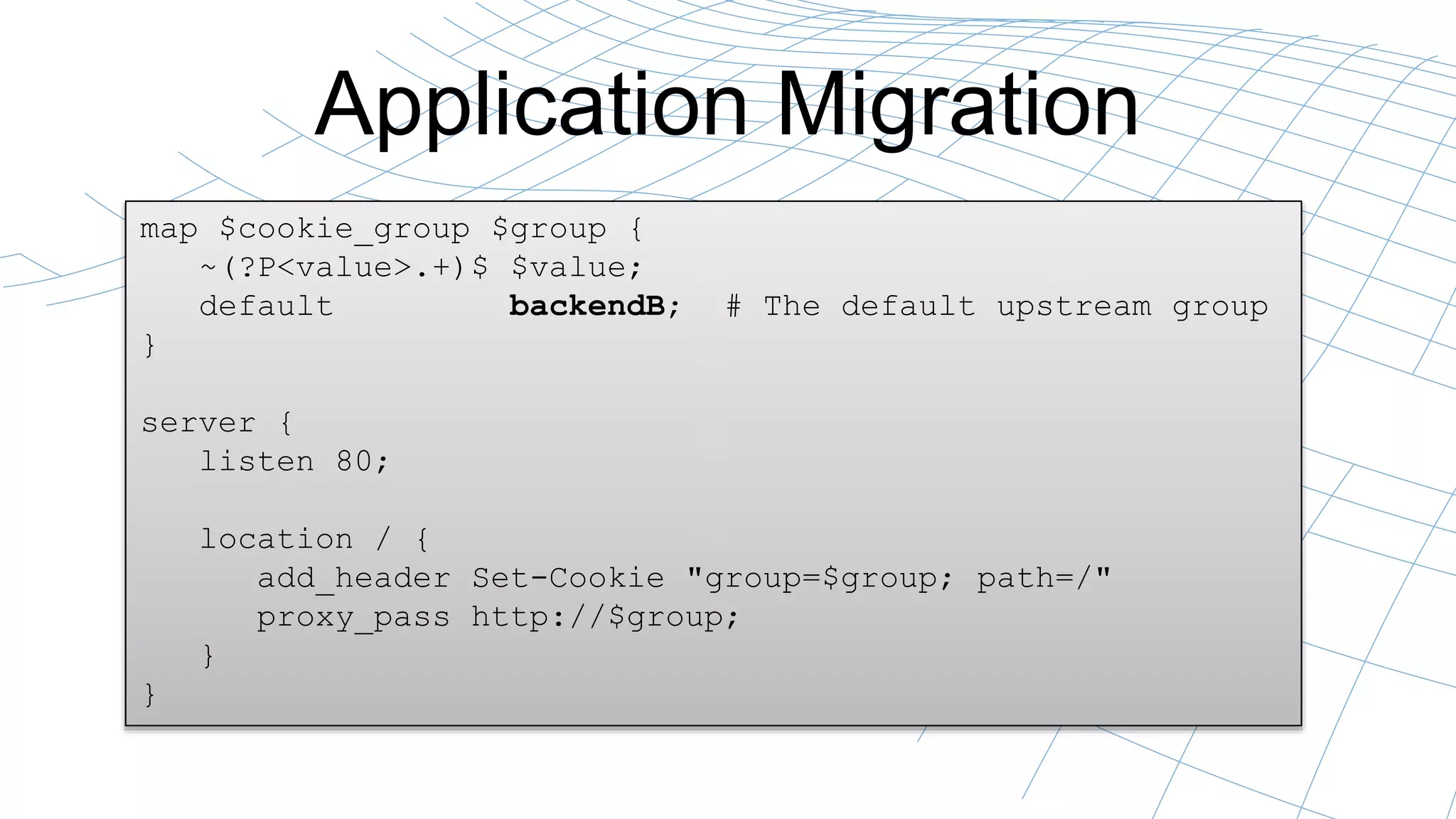

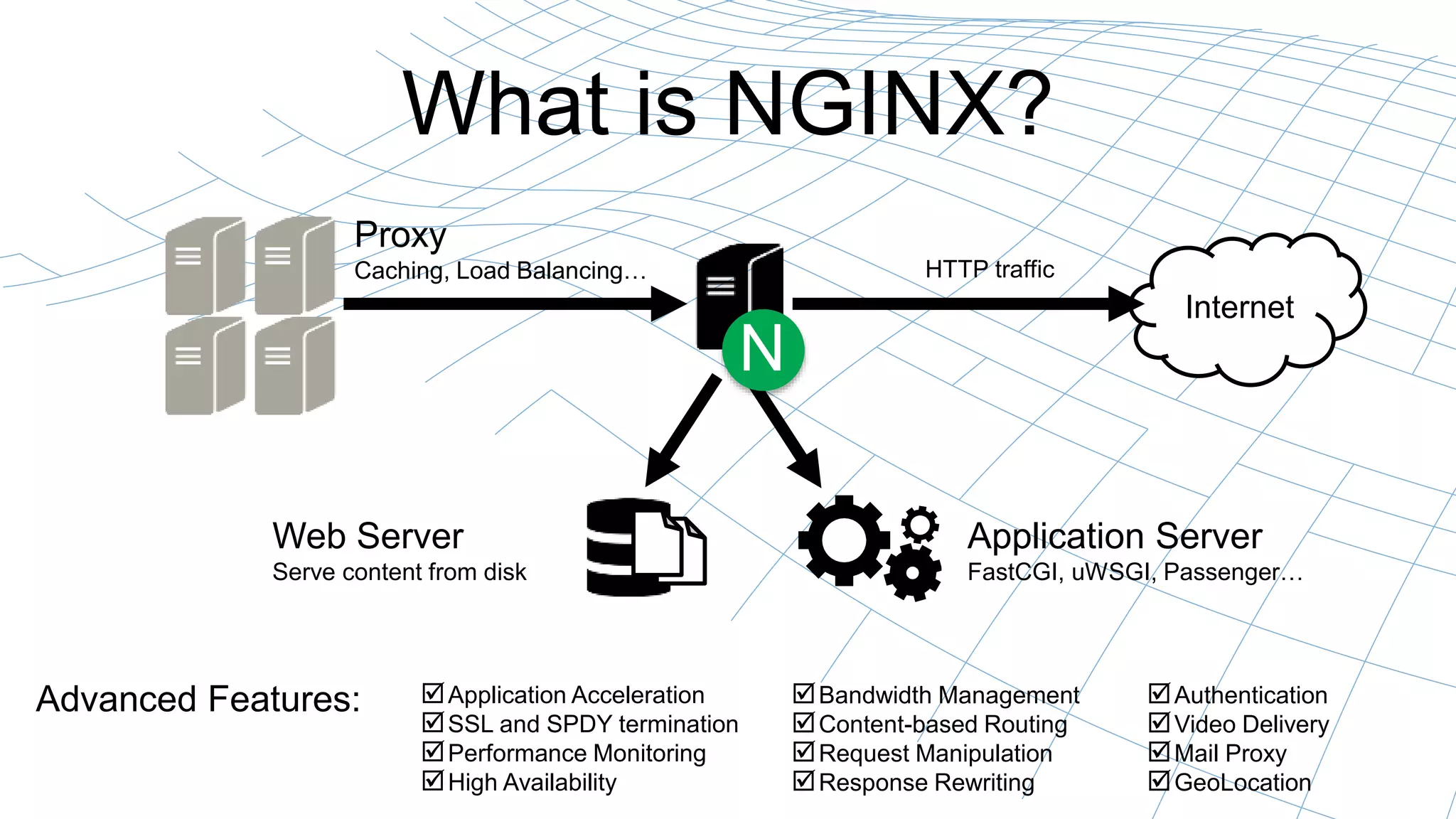

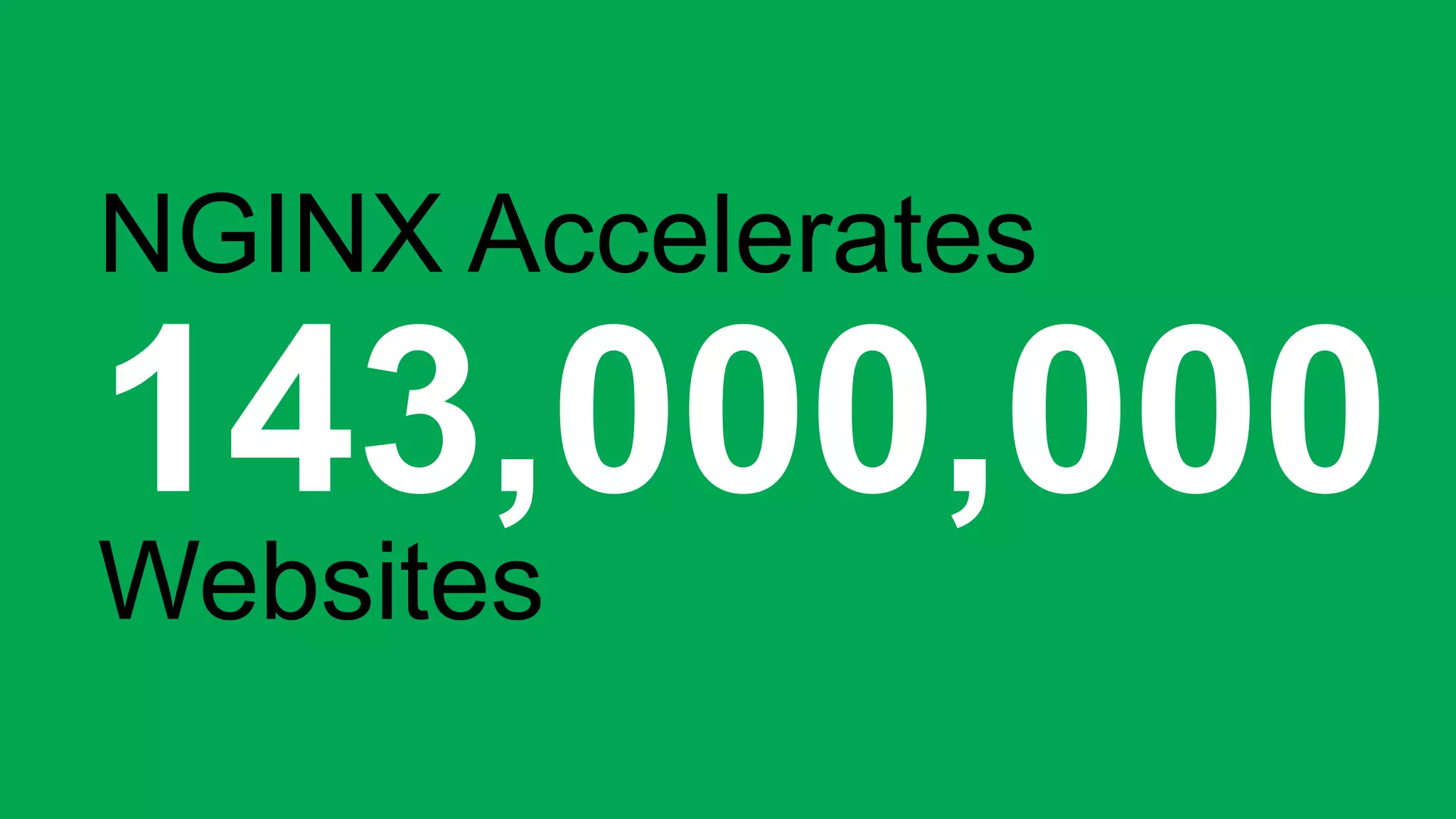

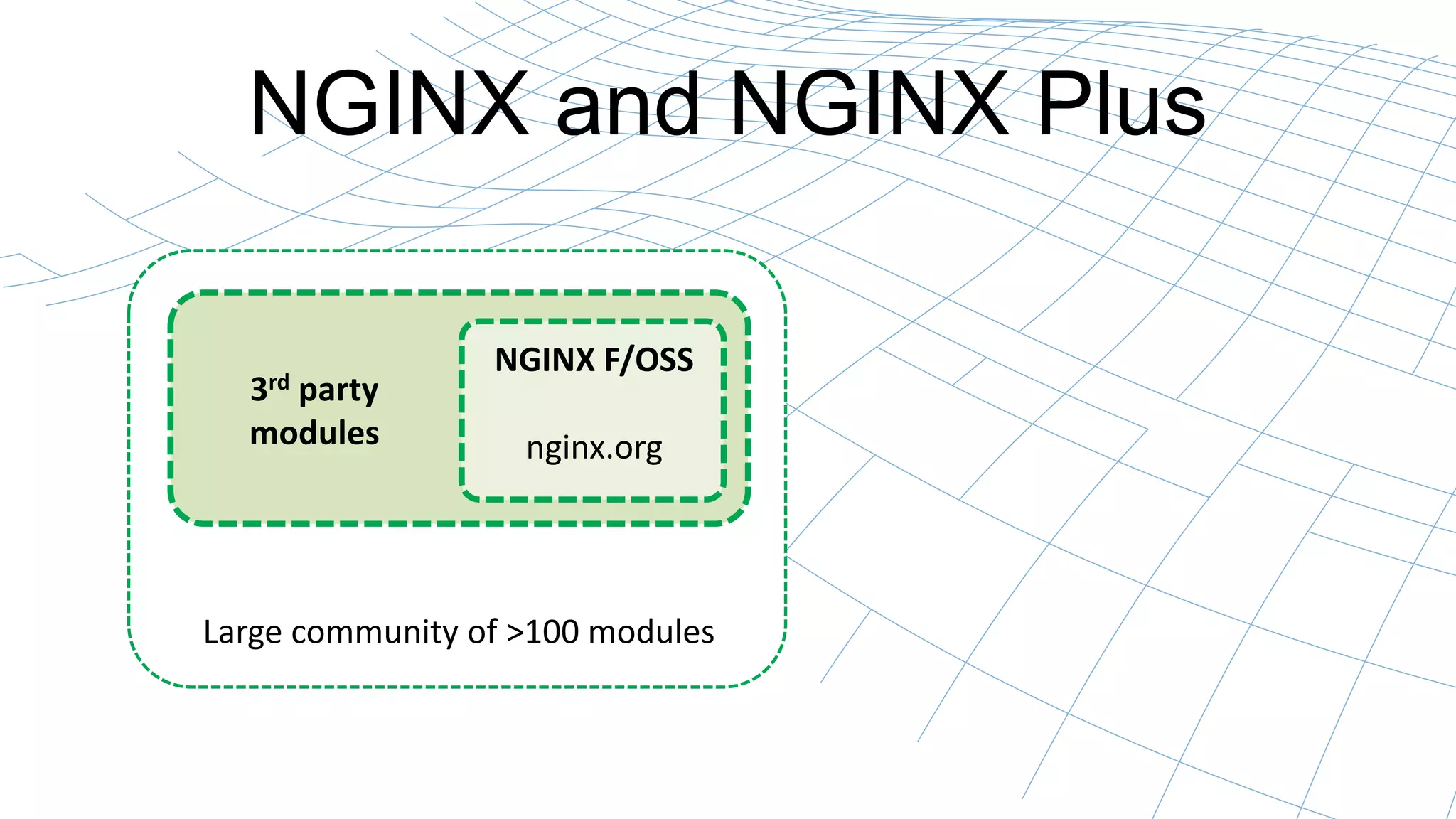

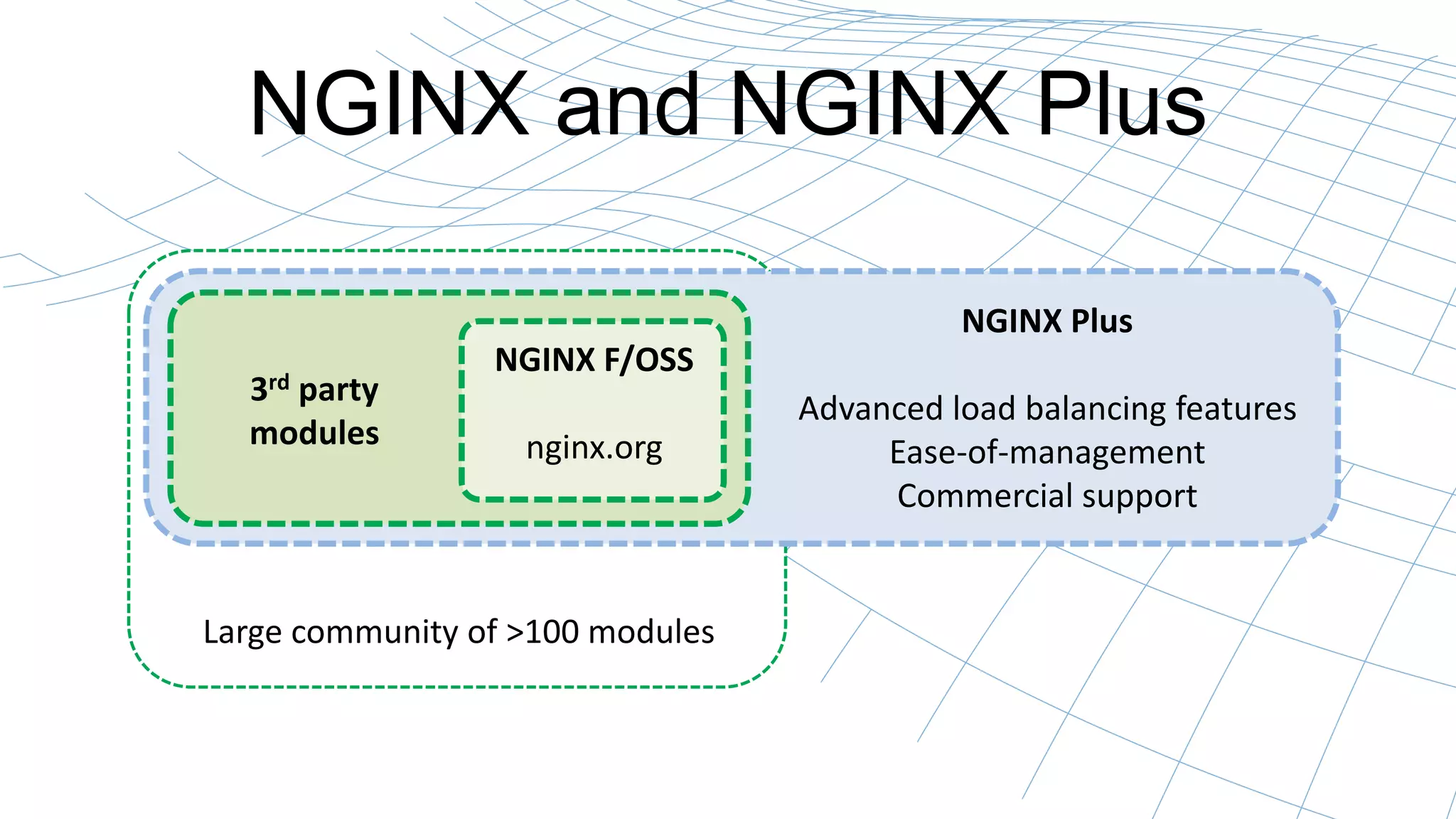

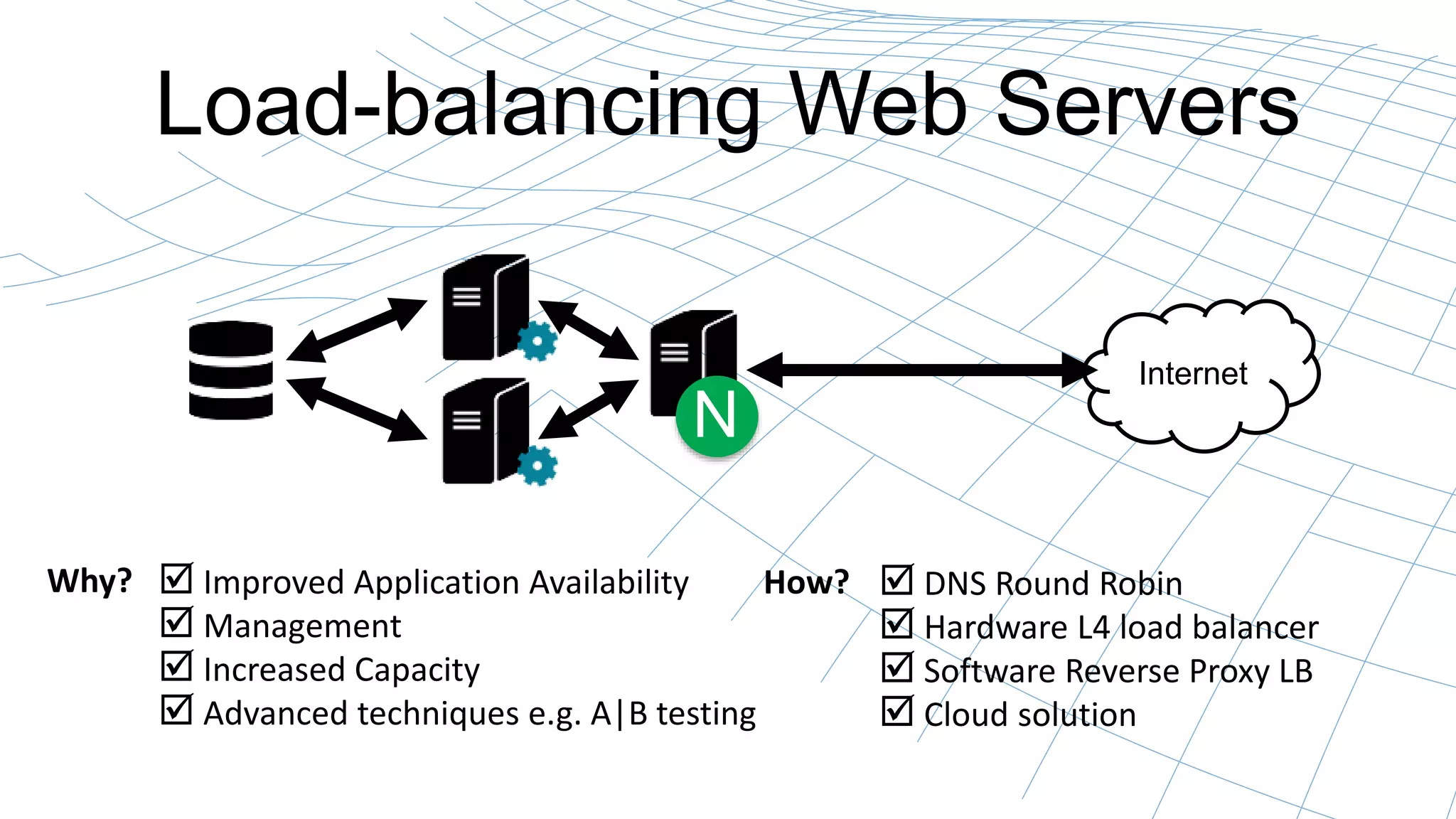

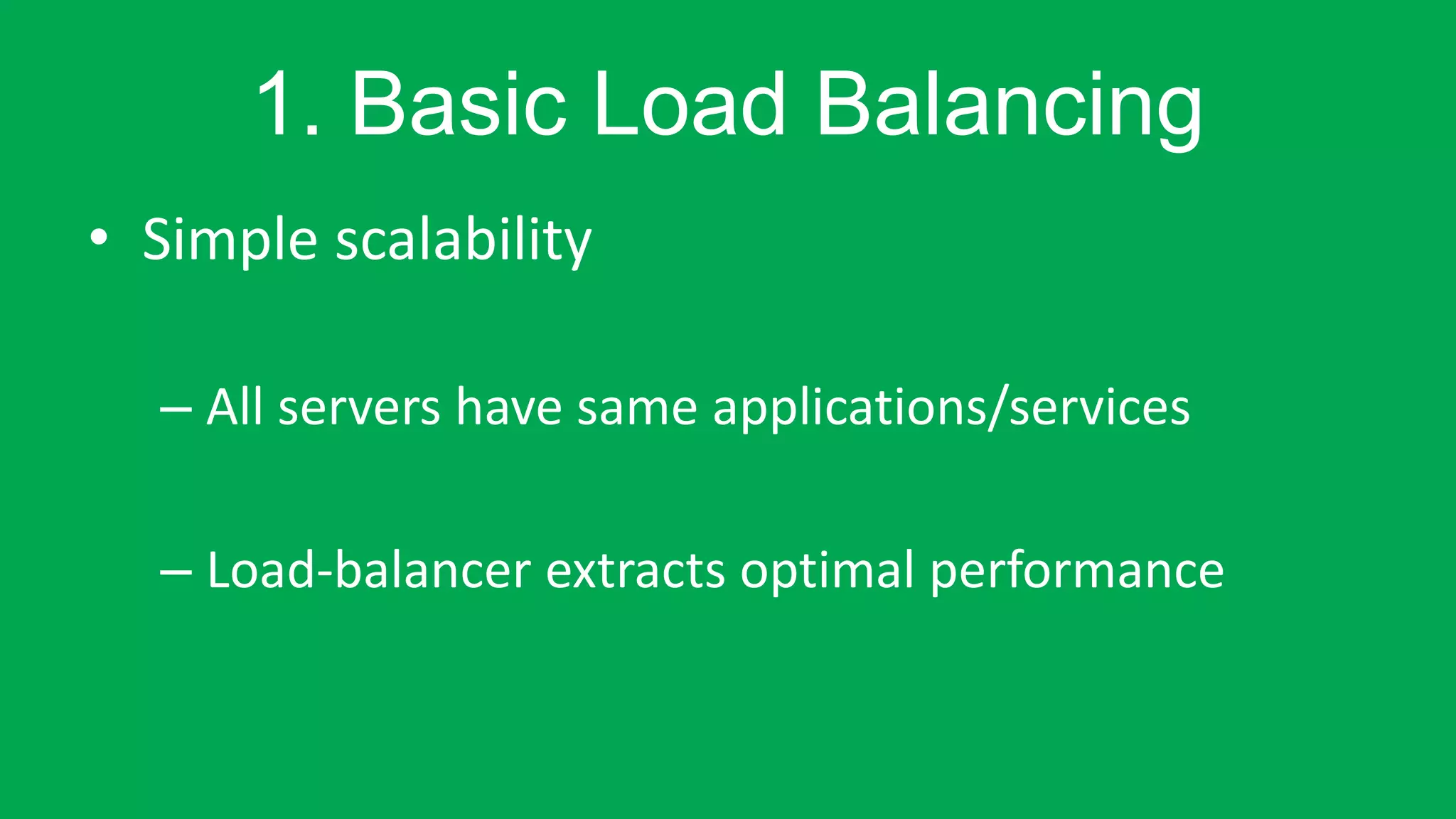

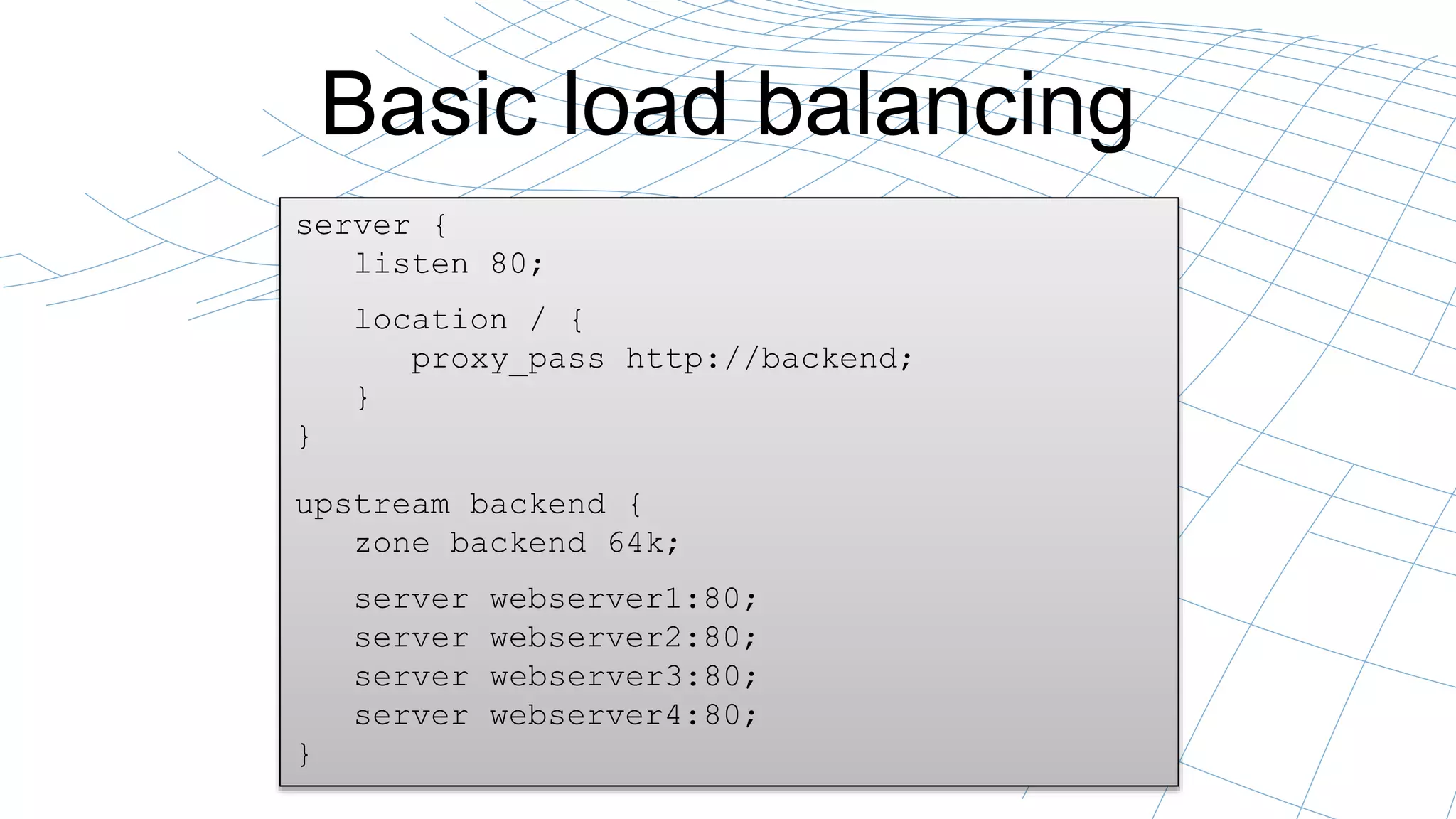

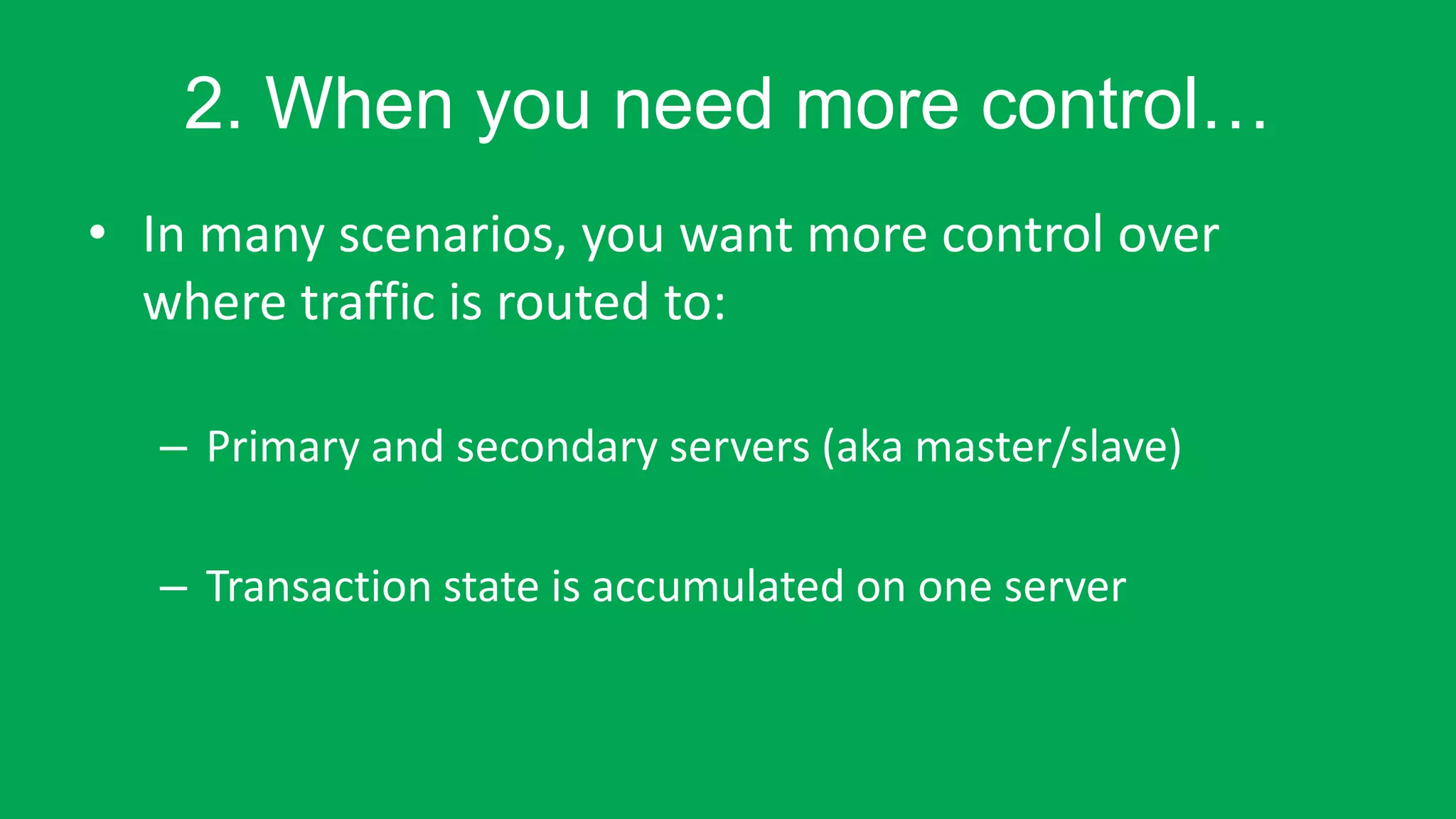

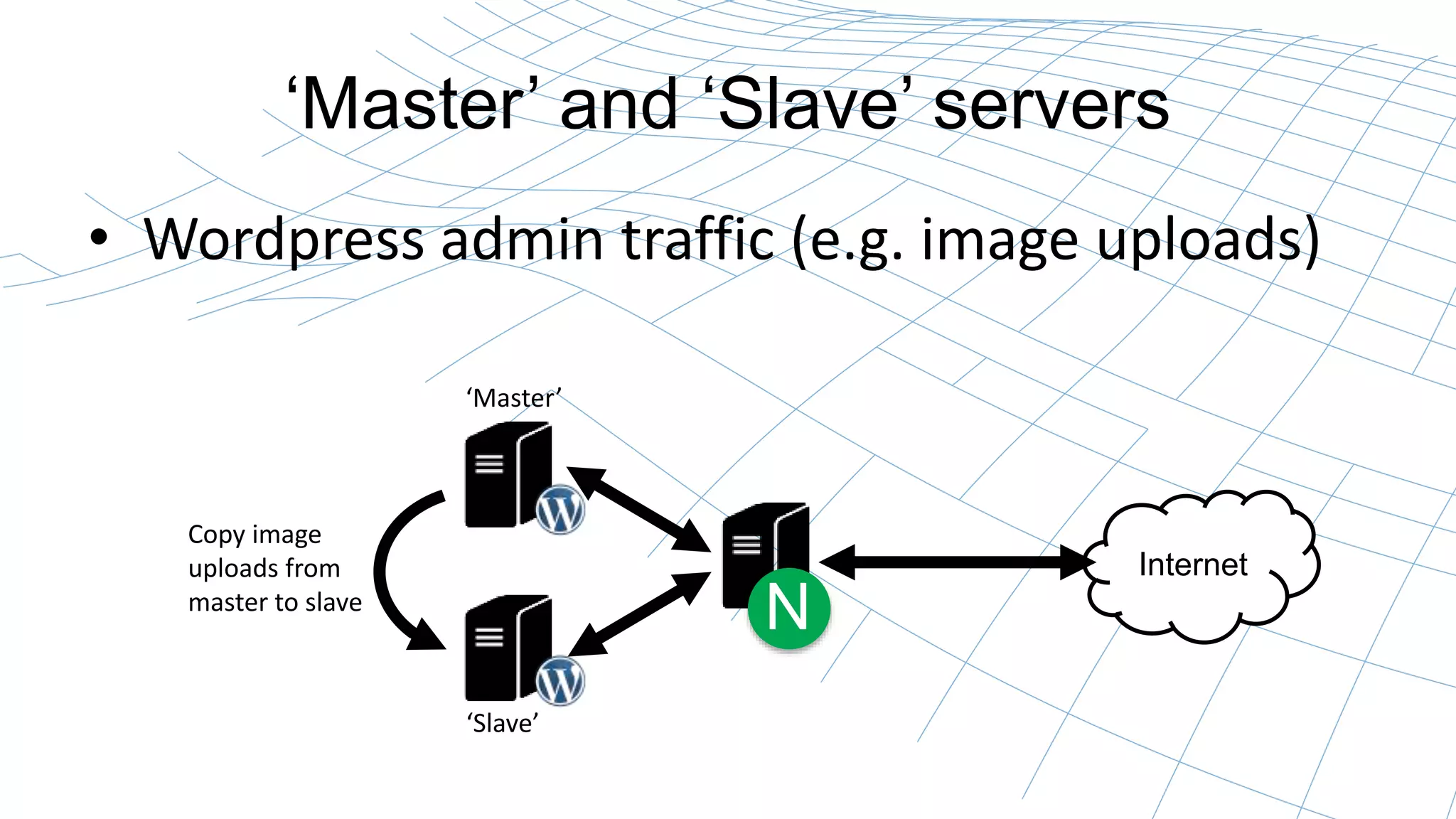

This webinar, presented by Owen Garrett and introduced by Andrew Alexeev, covers how to scale web server capacity with NGINX and its load balancing features. It walks through basic load balancing, session persistence and other advanced configuration, and use cases such as A/B testing and application migration. The presentation also notes how widely NGINX is used among top websites and points viewers to further resources and future webinars.

![Basic load balancing

• Use logging to debug: “$upstream_addr”

log_format combined2 '$remote_addr - $remote_user [$time_local] '
                     '"$request" $status $body_bytes_sent '
                     '"$upstream_addr"';

192.168.56.1 - - [09/Mar/2014:23:08:56 +0000] "GET / HTTP/1.1" 200 30 "127.0.1.1:80"

192.168.56.1 - - [09/Mar/2014:23:08:56 +0000] "GET /favicon.ico HTTP/1.1" 200 30 "127.0.1.2:80"

192.168.56.1 - - [09/Mar/2014:23:08:57 +0000] "GET / HTTP/1.1" 200 30 "127.0.1.3:80"

192.168.56.1 - - [09/Mar/2014:23:08:57 +0000] "GET /favicon.ico HTTP/1.1" 200 30 "127.0.1.4:80"

192.168.56.1 - - [09/Mar/2014:23:08:57 +0000] "GET / HTTP/1.1" 200 30 "127.0.1.1:80"

192.168.56.1 - - [09/Mar/2014:23:08:57 +0000] "GET /favicon.ico HTTP/1.1" 200 30 "127.0.1.2:80"

192.168.56.1 - - [09/Mar/2014:23:08:58 +0000] "GET / HTTP/1.1" 200 30 "127.0.1.3:80"

192.168.56.1 - - [09/Mar/2014:23:08:58 +0000] "GET /favicon.ico HTTP/1.1" 200 30 "127.0.1.4:80"](https://image.slidesharecdn.com/loadbalancingwithnginx-141120212150-conversion-gate01/75/Load-Balancing-and-Scaling-with-NGINX-15-2048.jpg)
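The logging slide can be read as the following configuration sketch. The `log_format` definition is taken from the slide, and the backend addresses come from its sample log output. The listen port and log file path are assumptions added for illustration, not details from the webinar.

```nginx
# Sketch only: these directives belong inside the http {} context.

# Same format as on the slide: the standard fields plus the address
# of the upstream server that handled each request.
log_format combined2 '$remote_addr - $remote_user [$time_local] '
                     '"$request" $status $body_bytes_sent '
                     '"$upstream_addr"';

# Backend addresses as they appear in the sample log output.
# With no balancing method specified, NGINX uses round robin.
upstream backend {
    server 127.0.1.1:80;
    server 127.0.1.2:80;
    server 127.0.1.3:80;
    server 127.0.1.4:80;
}

server {
    listen 8080;                                      # assumed port

    # Assumed log path; the second argument selects the format above.
    access_log /var/log/nginx/access.log combined2;

    location / {
        proxy_pass http://backend;
    }
}
```

With round robin in effect, the last field of consecutive log entries should cycle through the four backends in order, which matches the pattern in the sample output.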

![Managing the Upstream Group

• Direct config editing:

– nginx -s reload

– upstream.conf file:

upstream backend {
    server webserver1:80;
    server webserver2:80;
    server webserver3:80;
    server webserver4:80;
}

• On-the-fly Reconfiguration [NGINX Plus only]

$ curl 'http://localhost/upstream_conf?upstream=backend&id=3&down=1'](https://image.slidesharecdn.com/loadbalancingwithnginx-141120212150-conversion-gate01/75/Load-Balancing-and-Scaling-with-NGINX-17-2048.jpg)
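A minimal sketch of how the two approaches on this slide might be wired up. The `zone` size and the access rules are assumptions, not details from the slide. The `upstream_conf` directive belongs to the NGINX Plus releases of that period; later releases replaced it with a different API module, so treat this as illustrative rather than current.

```nginx
# Sketch only: these directives belong inside the http {} context.

# Direct config editing: edit this block (for example in an included
# upstream.conf file), then check and apply it with:
#   nginx -t
#   nginx -s reload
upstream backend {
    # Shared memory zone, required for on-the-fly changes
    # (NGINX Plus only; the 64k size is an assumption).
    zone backend 64k;

    server webserver1:80;
    server webserver2:80;
    server webserver3:80;
    server webserver4:80;
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;
    }

    # On-the-fly reconfiguration endpoint (NGINX Plus only).
    # The location name matches the URL in the slide's curl command.
    location /upstream_conf {
        upstream_conf;

        # Assumed access policy: local administration only.
        allow 127.0.0.1;
        deny all;
    }
}
```

With this in place, the `curl` request from the slide marks the server with ID 3 in the `backend` group as down without a reload.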

![Session Persistence [NGINX Plus only]

• For when transaction state is accumulated on one server

– Shopping carts

– Advanced interactions

– Non-RESTful Applications

• NGINX Plus offers two methods:

– sticky cookie

– sticky route

“Session persistence also helps performance”](https://image.slidesharecdn.com/loadbalancingwithnginx-141120212150-conversion-gate01/75/Load-Balancing-and-Scaling-with-NGINX-21-2048.jpg)
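The two methods named on this slide might be configured roughly as follows. This is a sketch under stated assumptions: the cookie name `srv_id`, the expiry, the `route` values, the upstream group names, and the `jsessionid` pattern are all illustrative and do not come from the webinar. Both `sticky` forms require NGINX Plus.

```nginx
# Sketch only: these directives belong inside the http {} context.

# Method 1: sticky cookie.
# NGINX Plus sets a cookie (hypothetical name srv_id) on the first
# response and uses it to send later requests to the same server.
upstream backend_cookie {
    server webserver1:80;
    server webserver2:80;
    sticky cookie srv_id expires=1h path=/;
}

# Method 2: sticky route.
# Each server gets a route label, and the route is extracted from the
# request. Here it is assumed to be the suffix of a JSESSIONID value
# found either in a cookie or in the request URI.
map $cookie_jsessionid $route_cookie {
    ~.+\.(?P<route>\w+)$ $route;
}

map $request_uri $route_uri {
    ~jsessionid=.+\.(?P<route>\w+)$ $route;
}

upstream backend_route {
    server webserver1:80 route=a;
    server webserver2:80 route=b;
    # The first non-empty variable selects the server.
    sticky route $route_cookie $route_uri;
}
```

Sticky cookie needs no cooperation from the application, while sticky route relies on the application already embedding a server identifier in its session ID.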