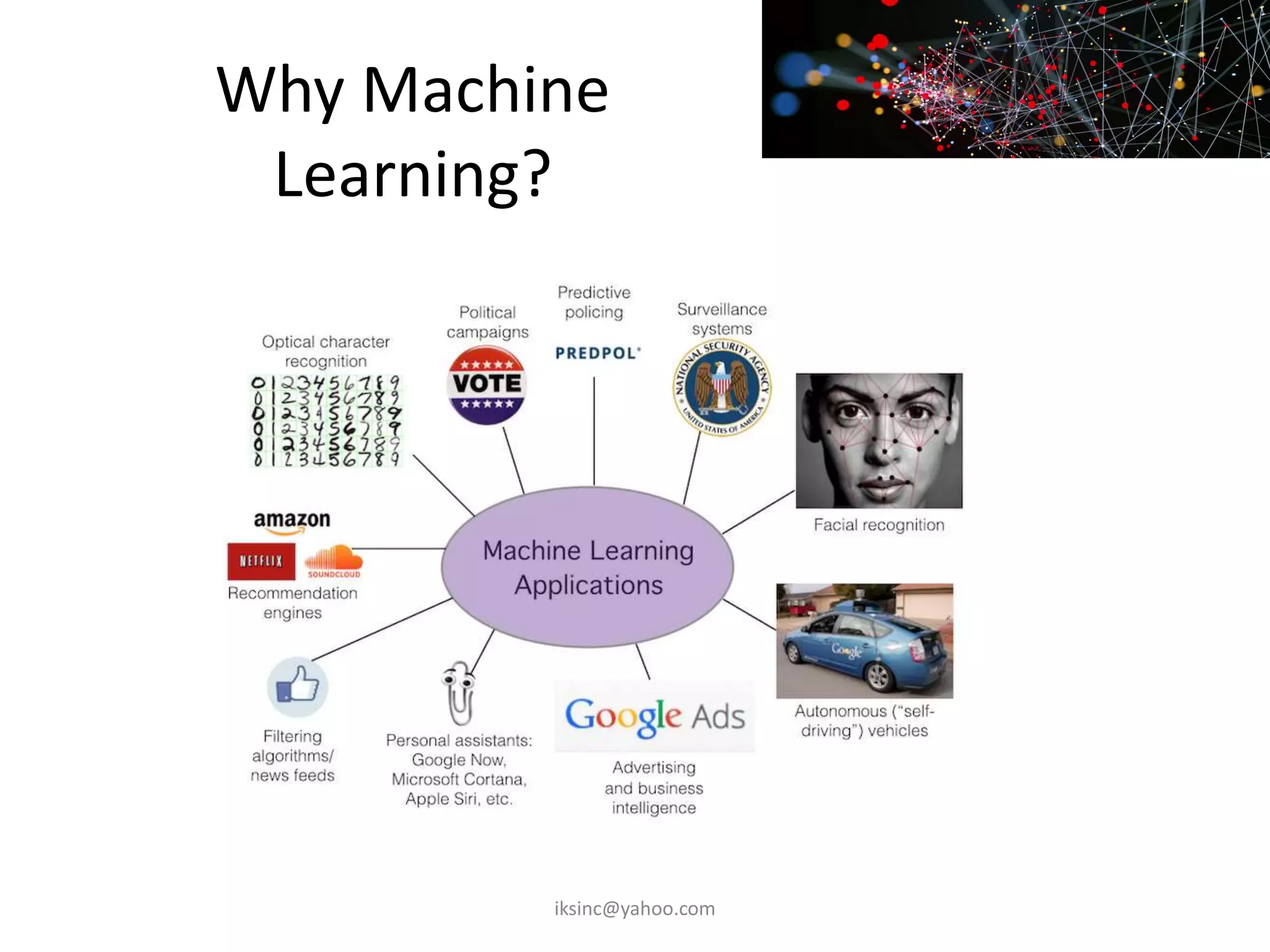

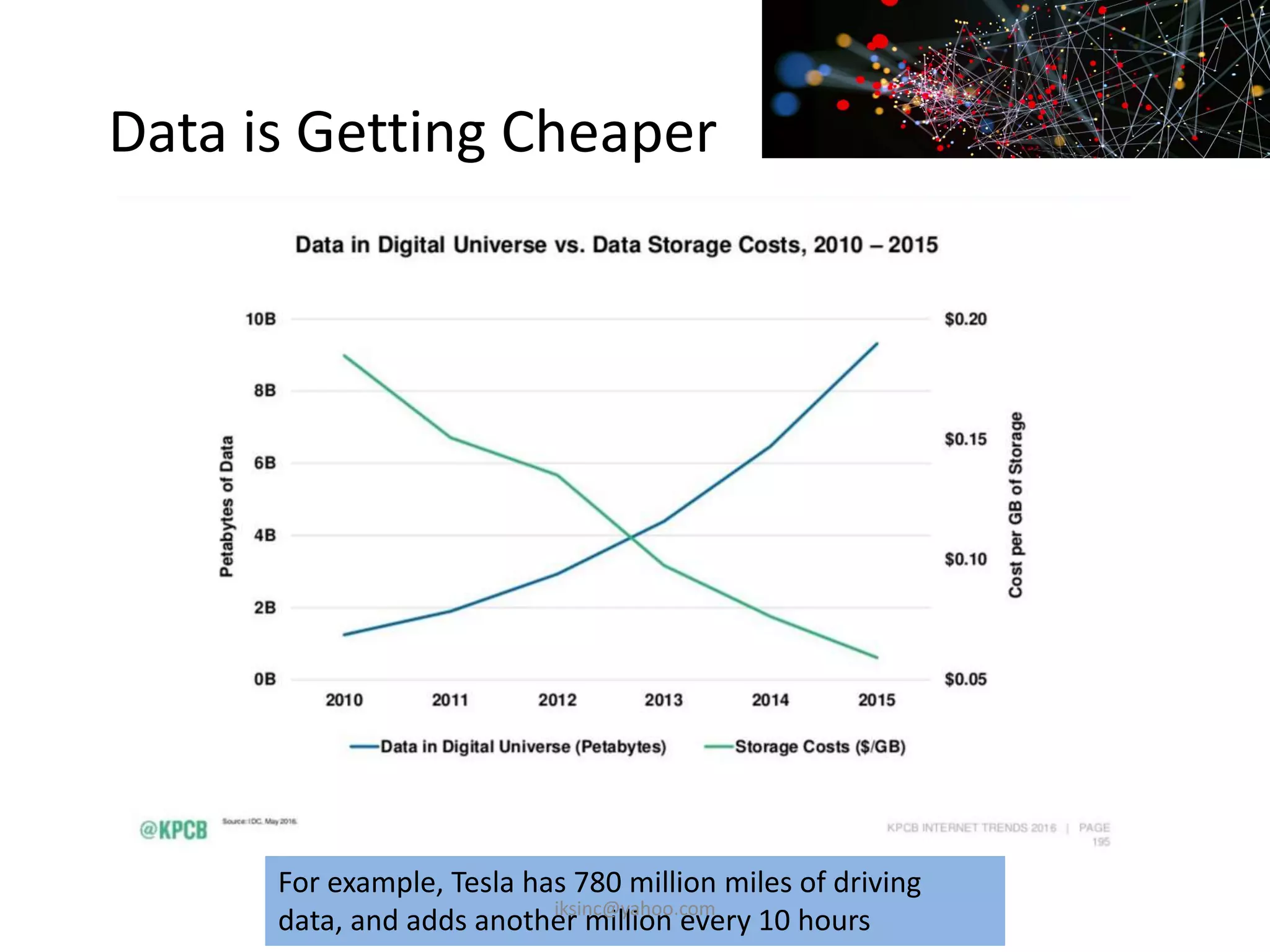

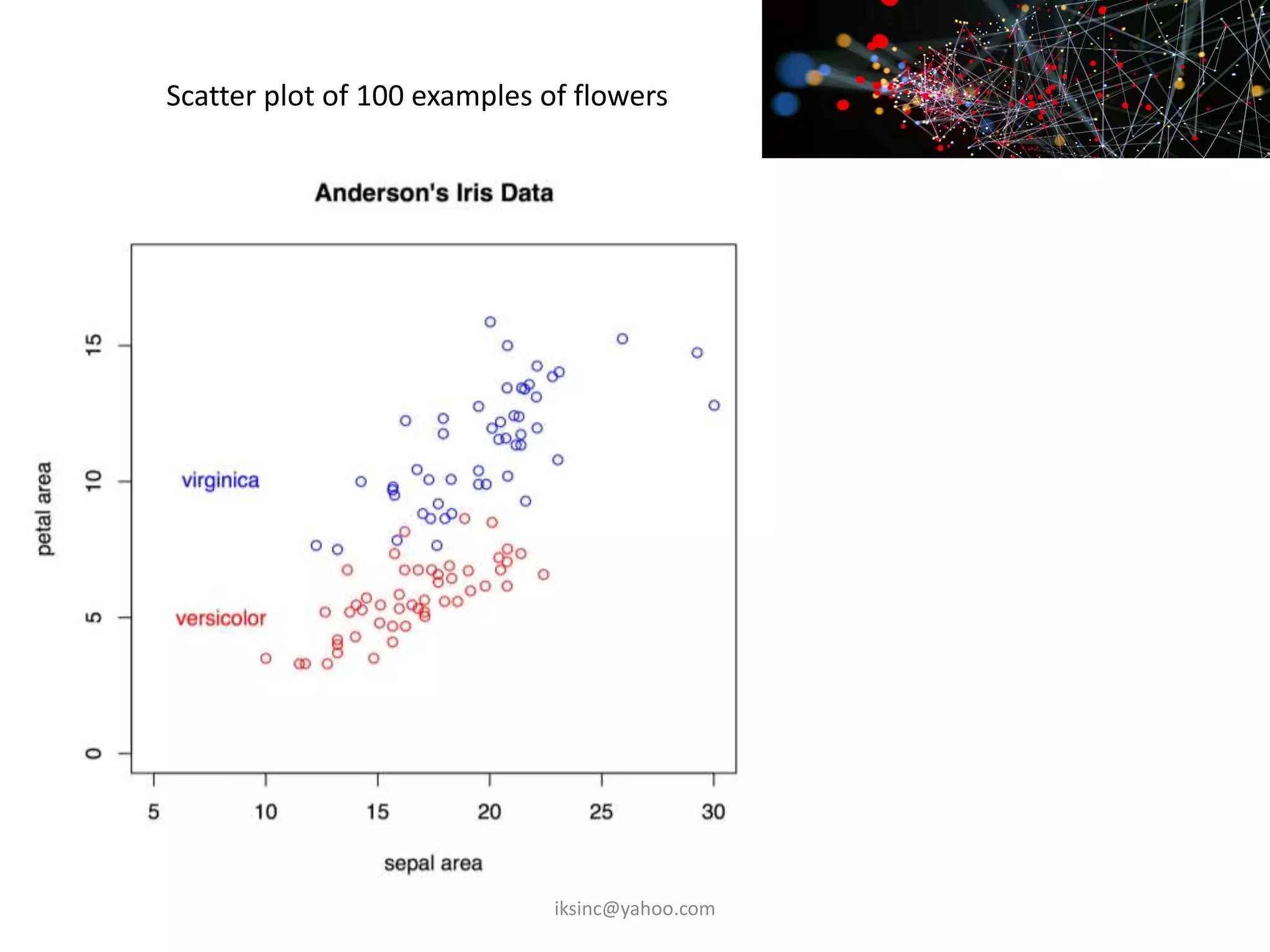

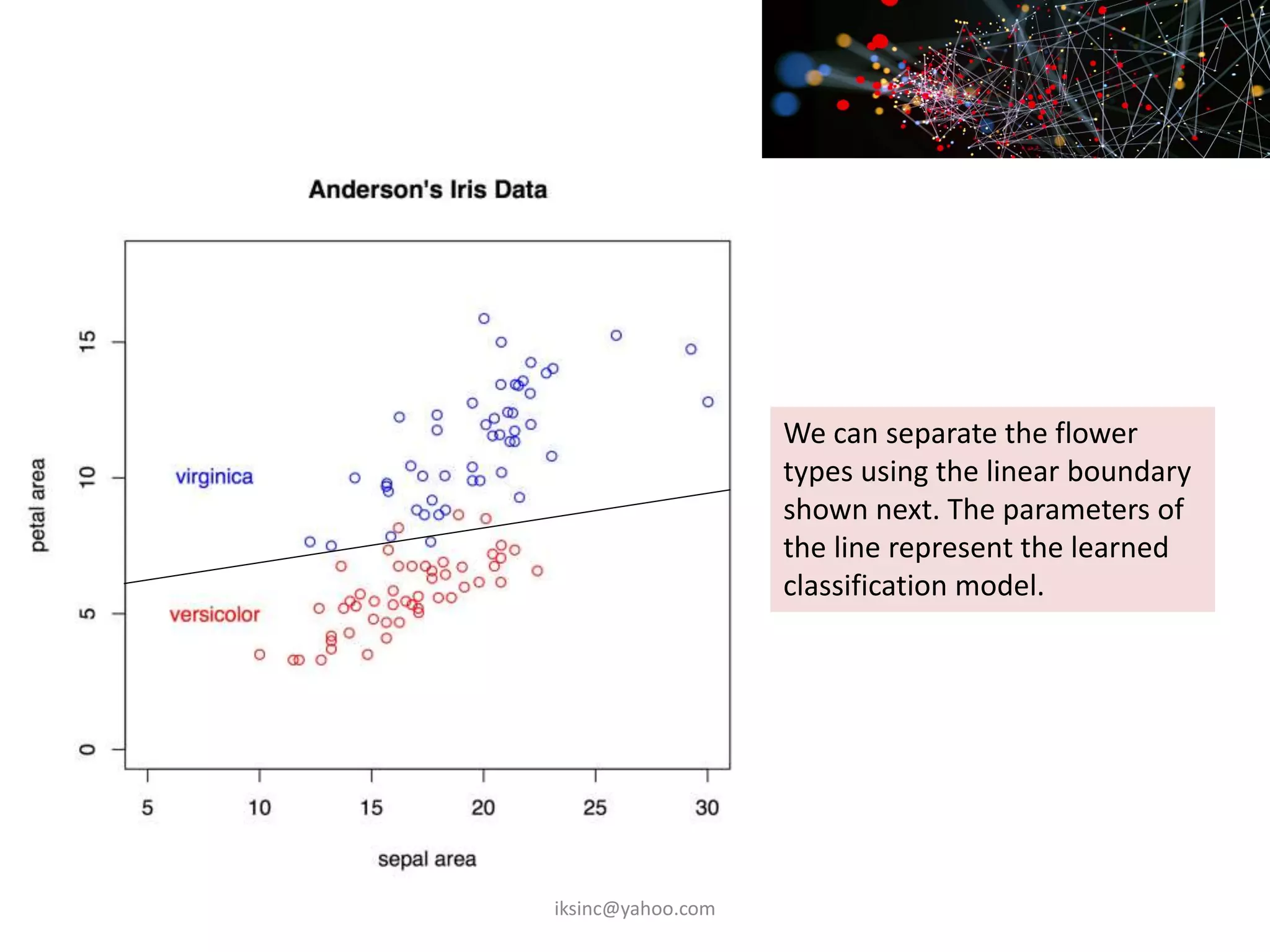

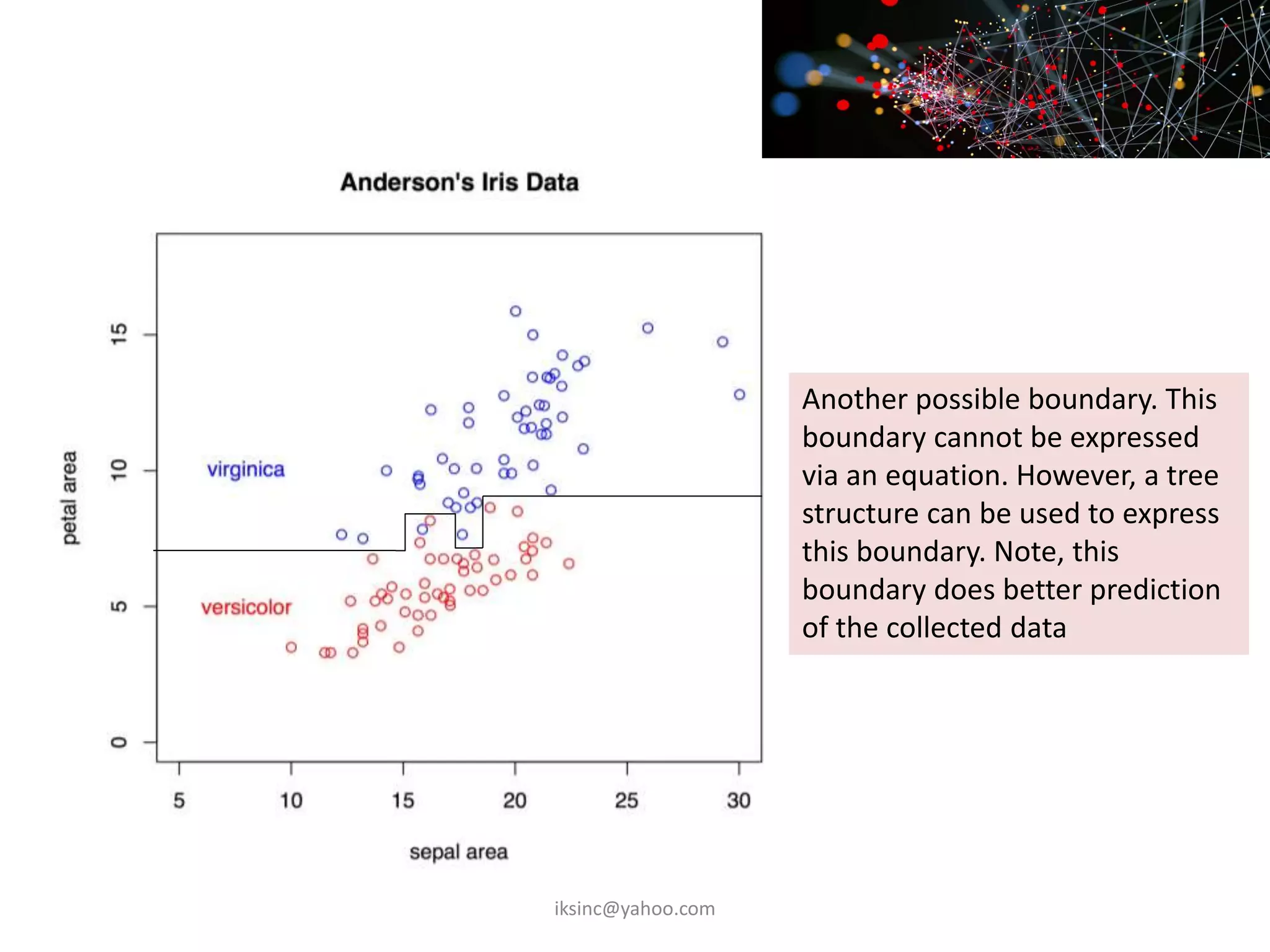

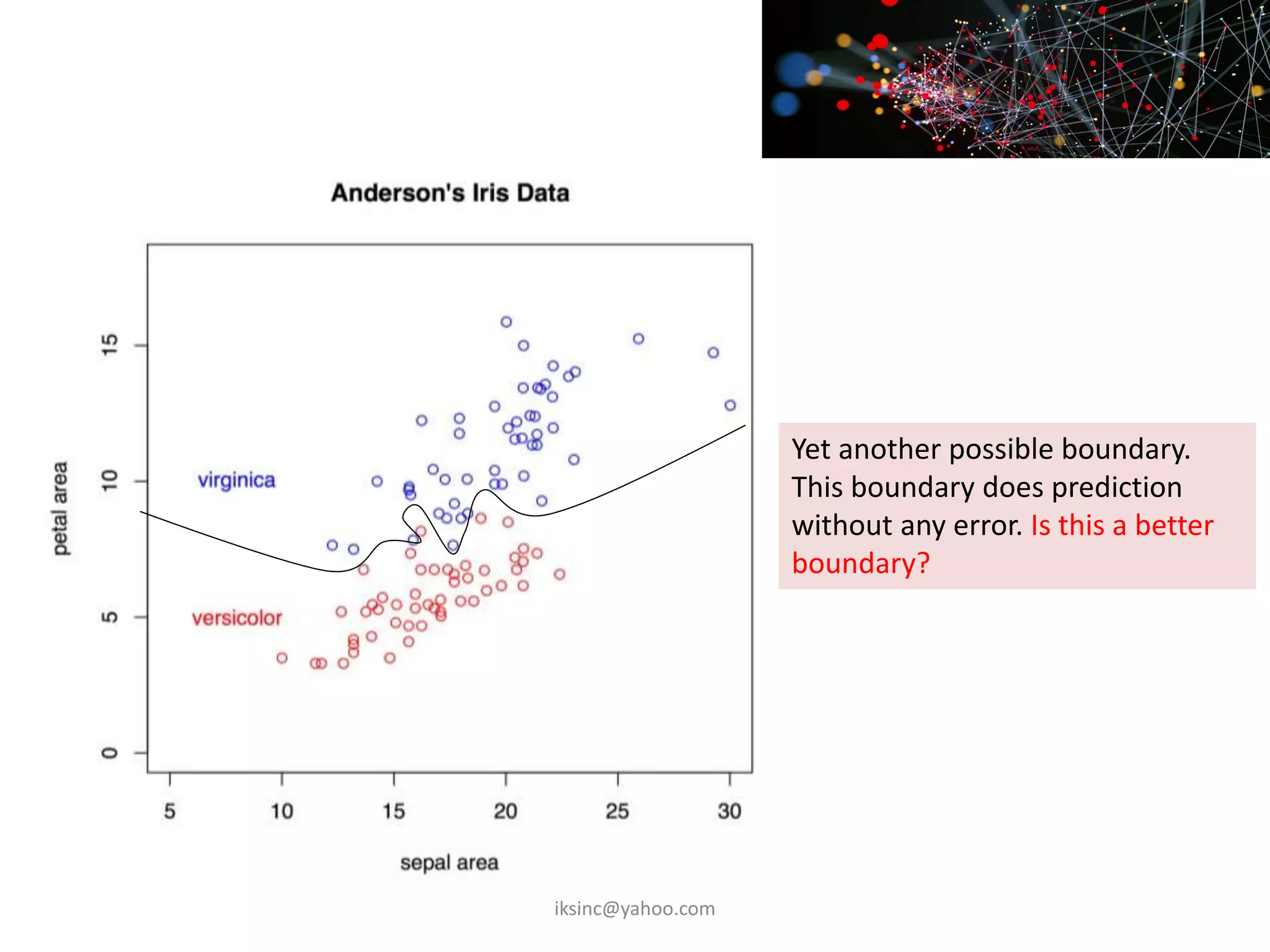

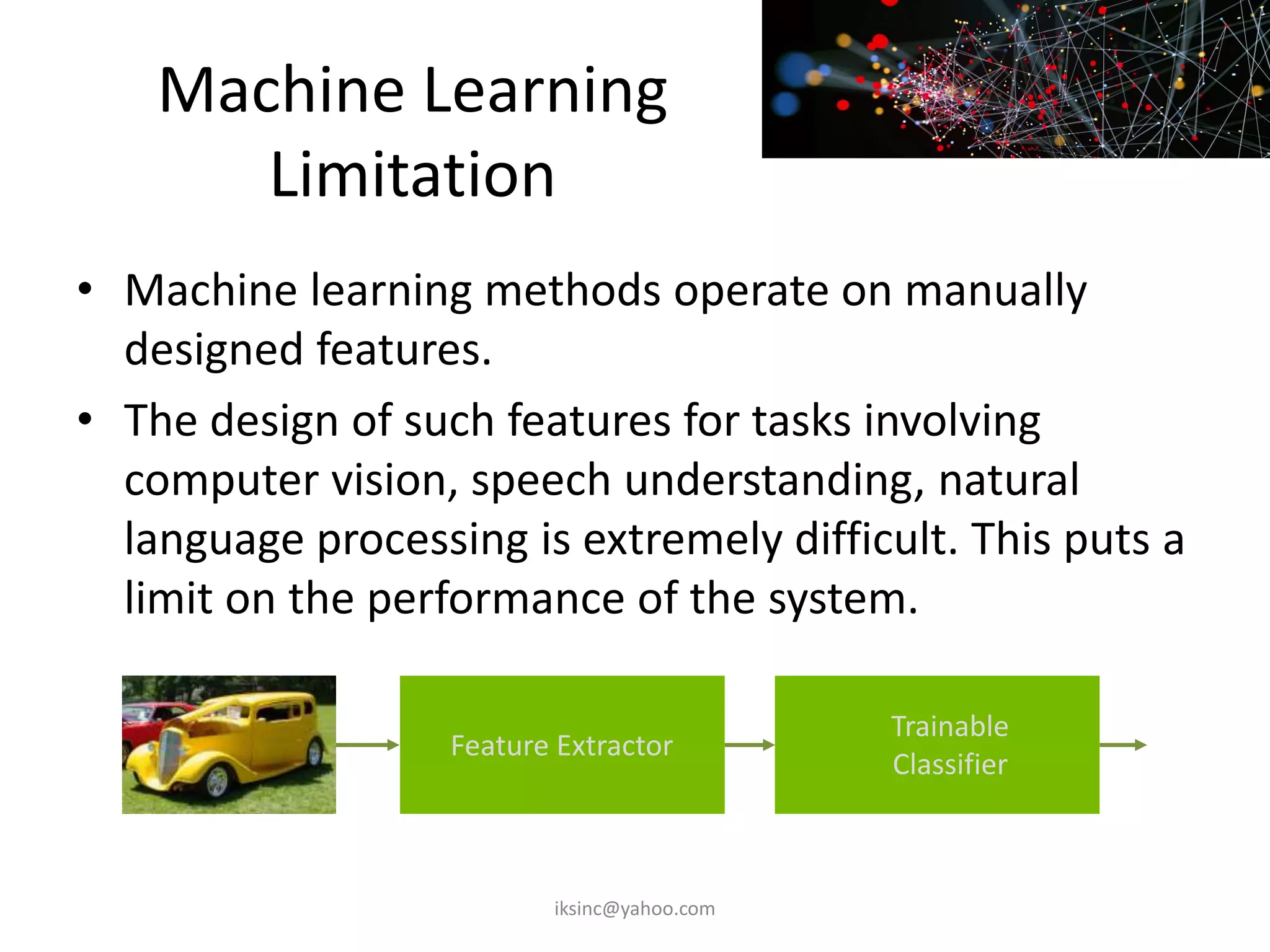

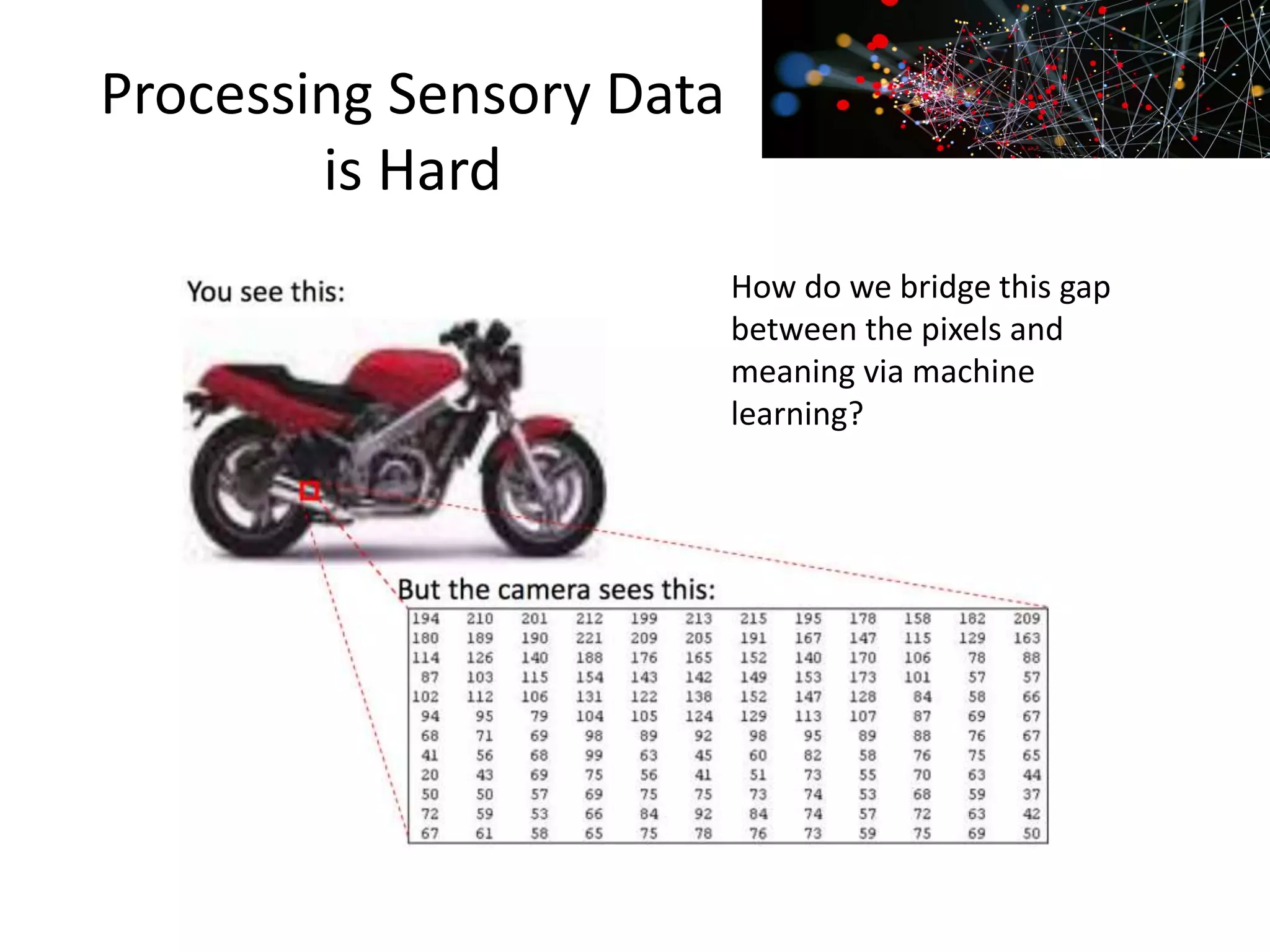

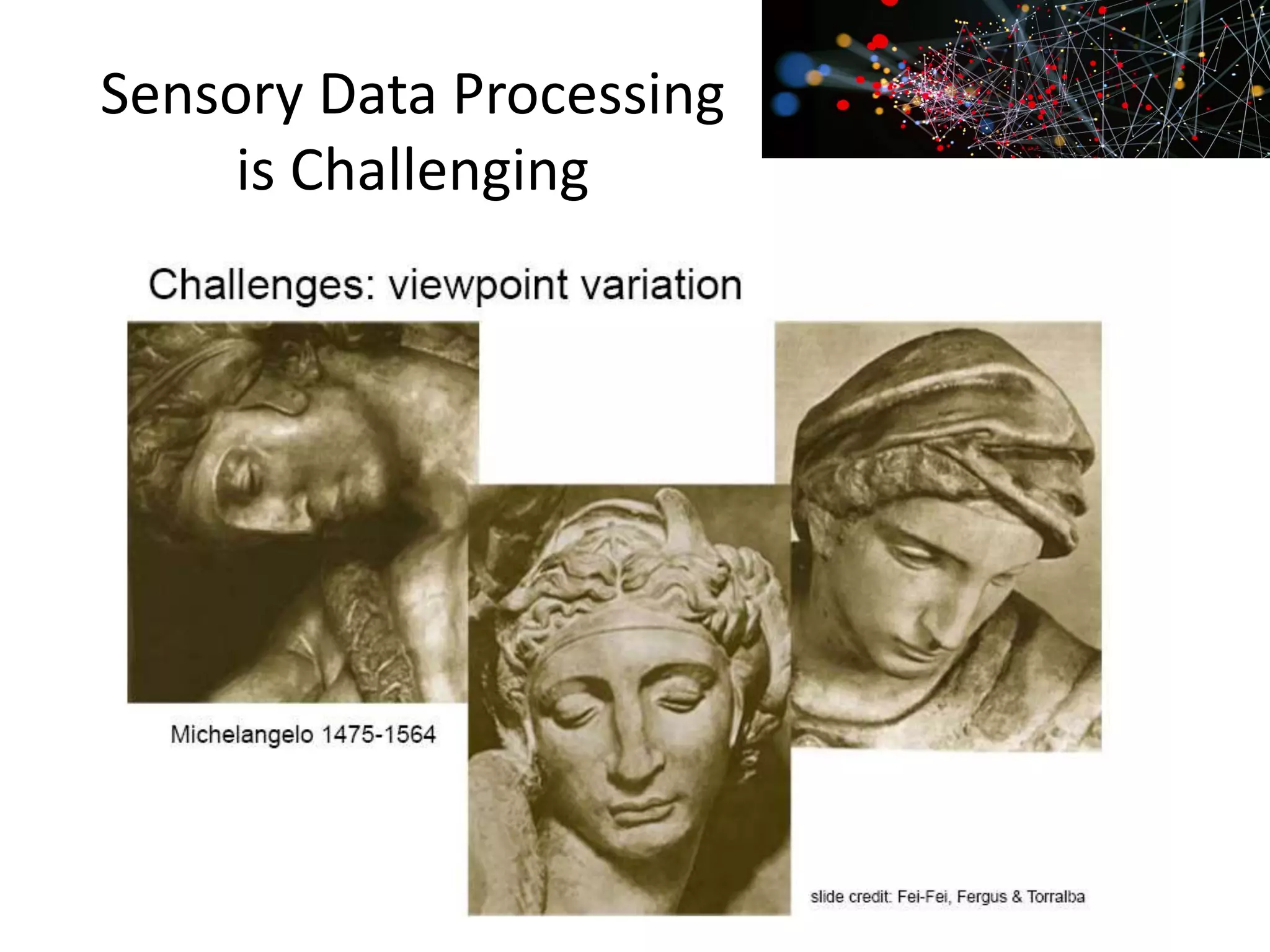

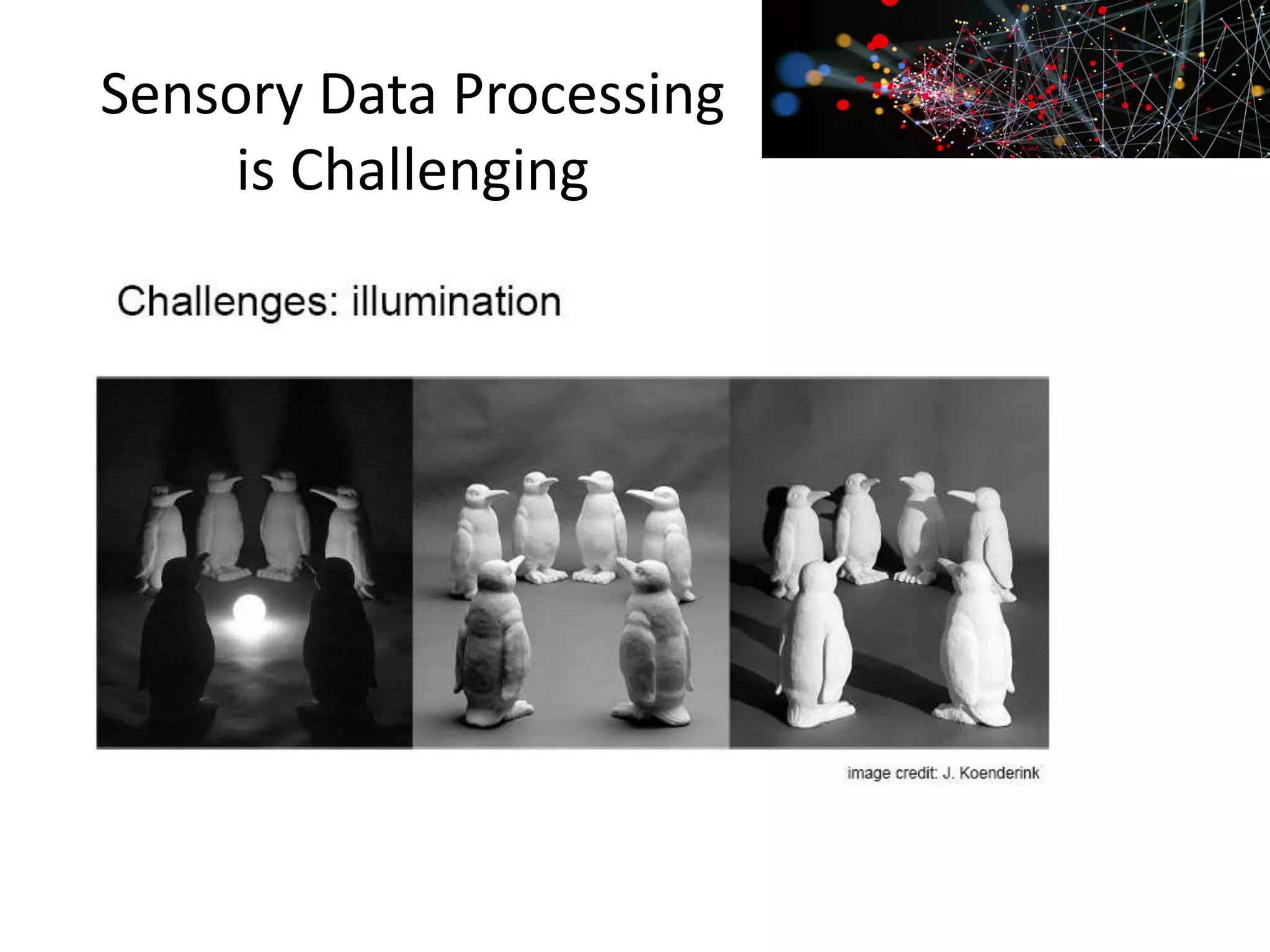

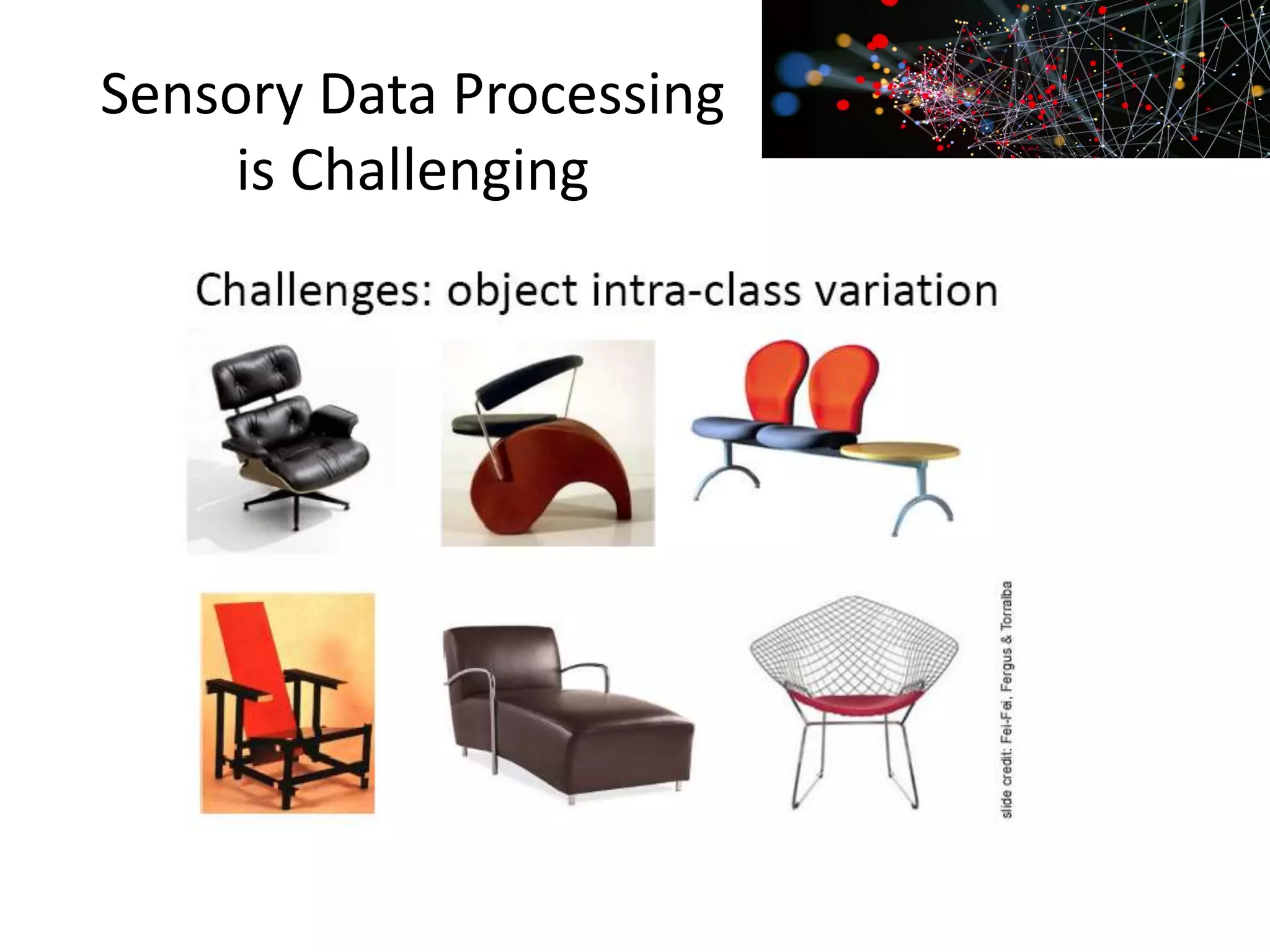

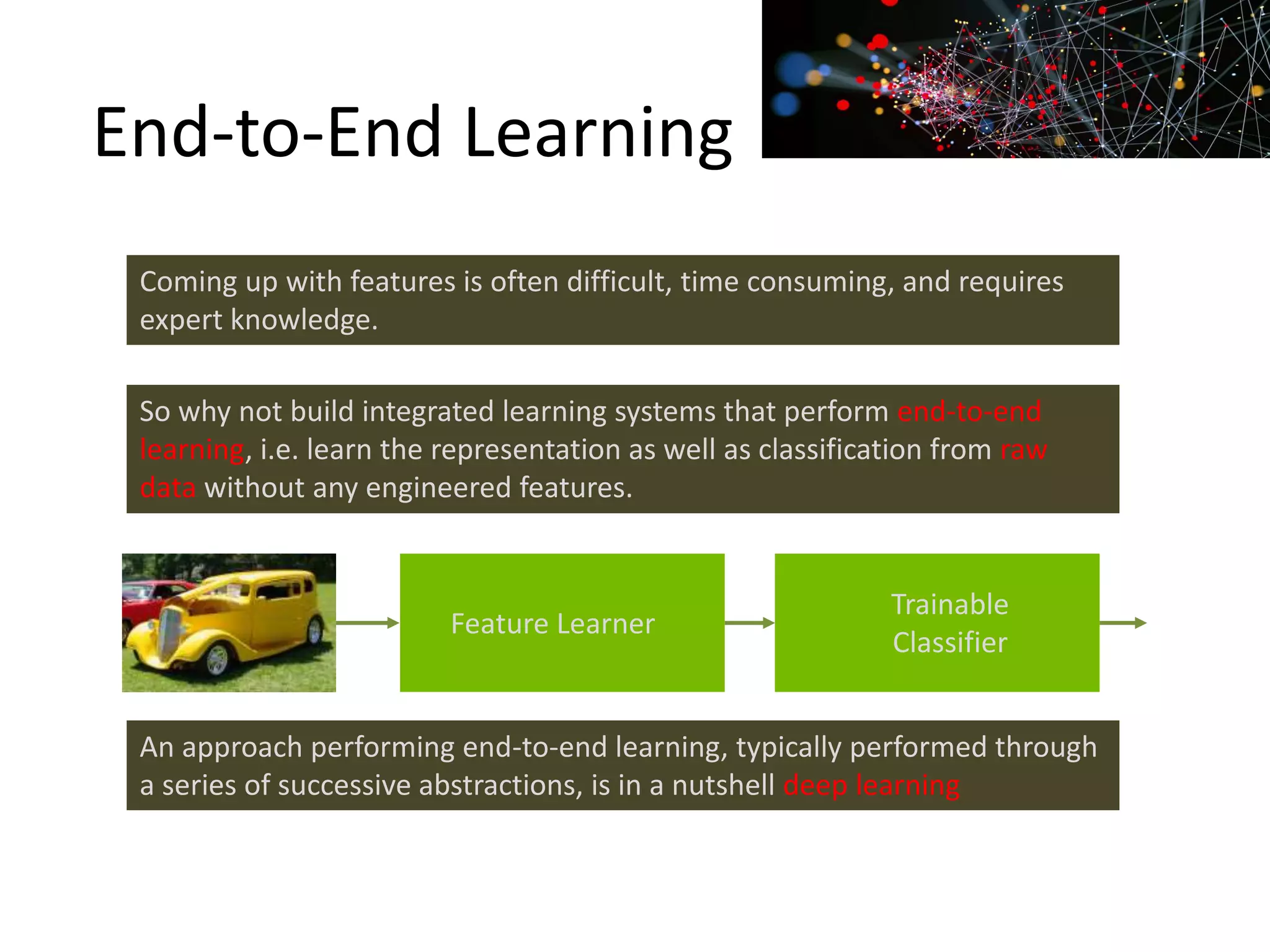

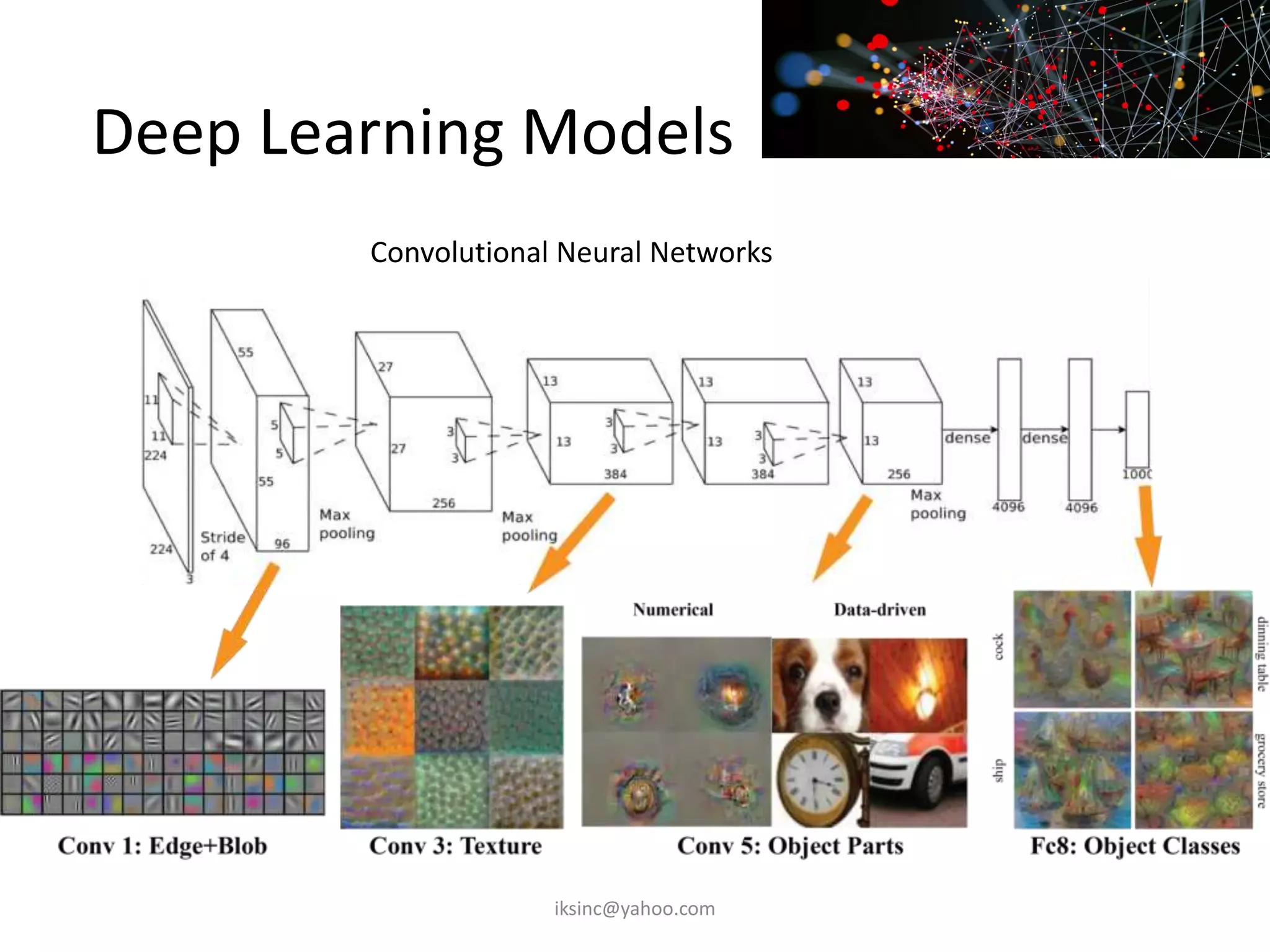

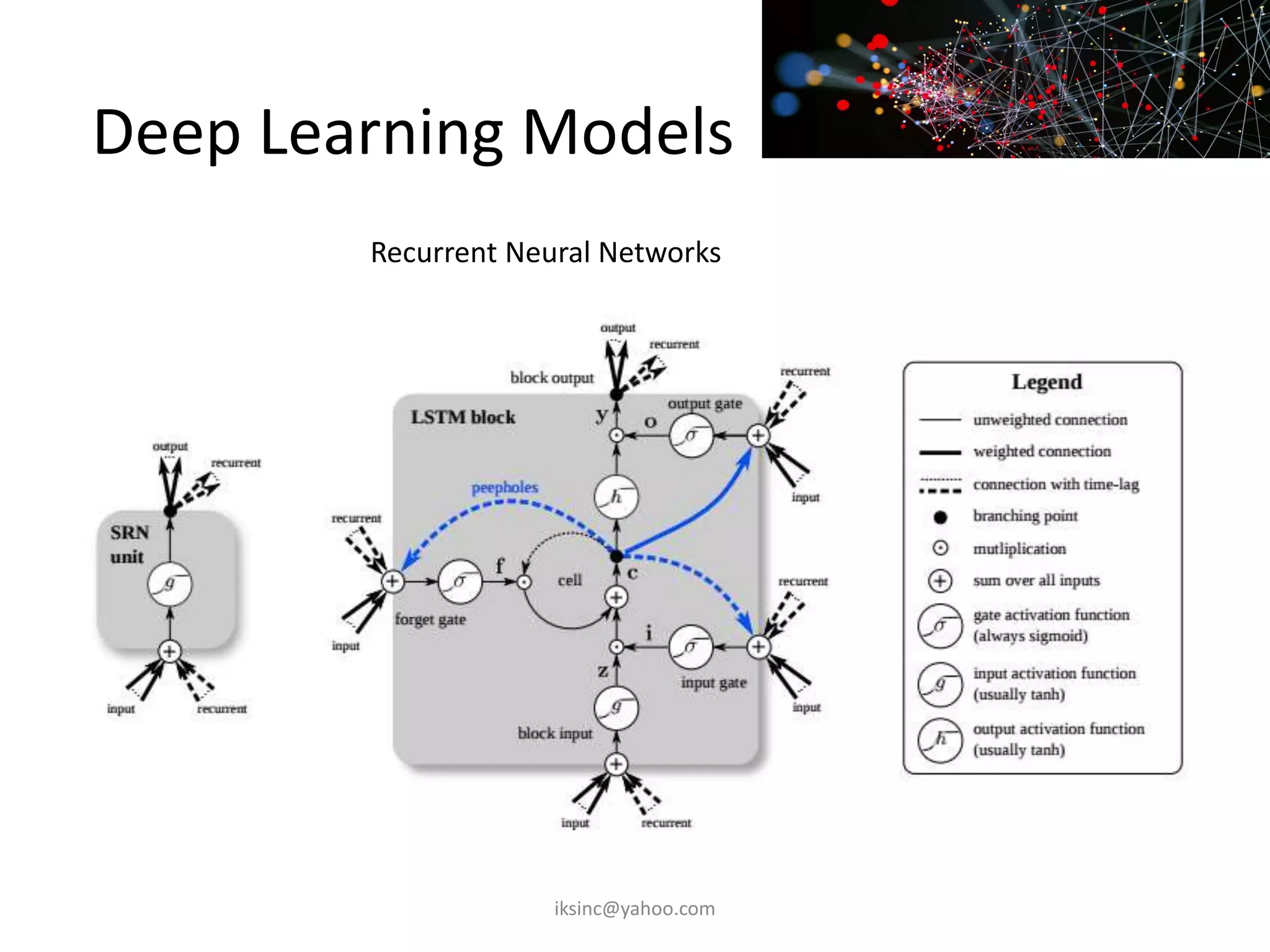

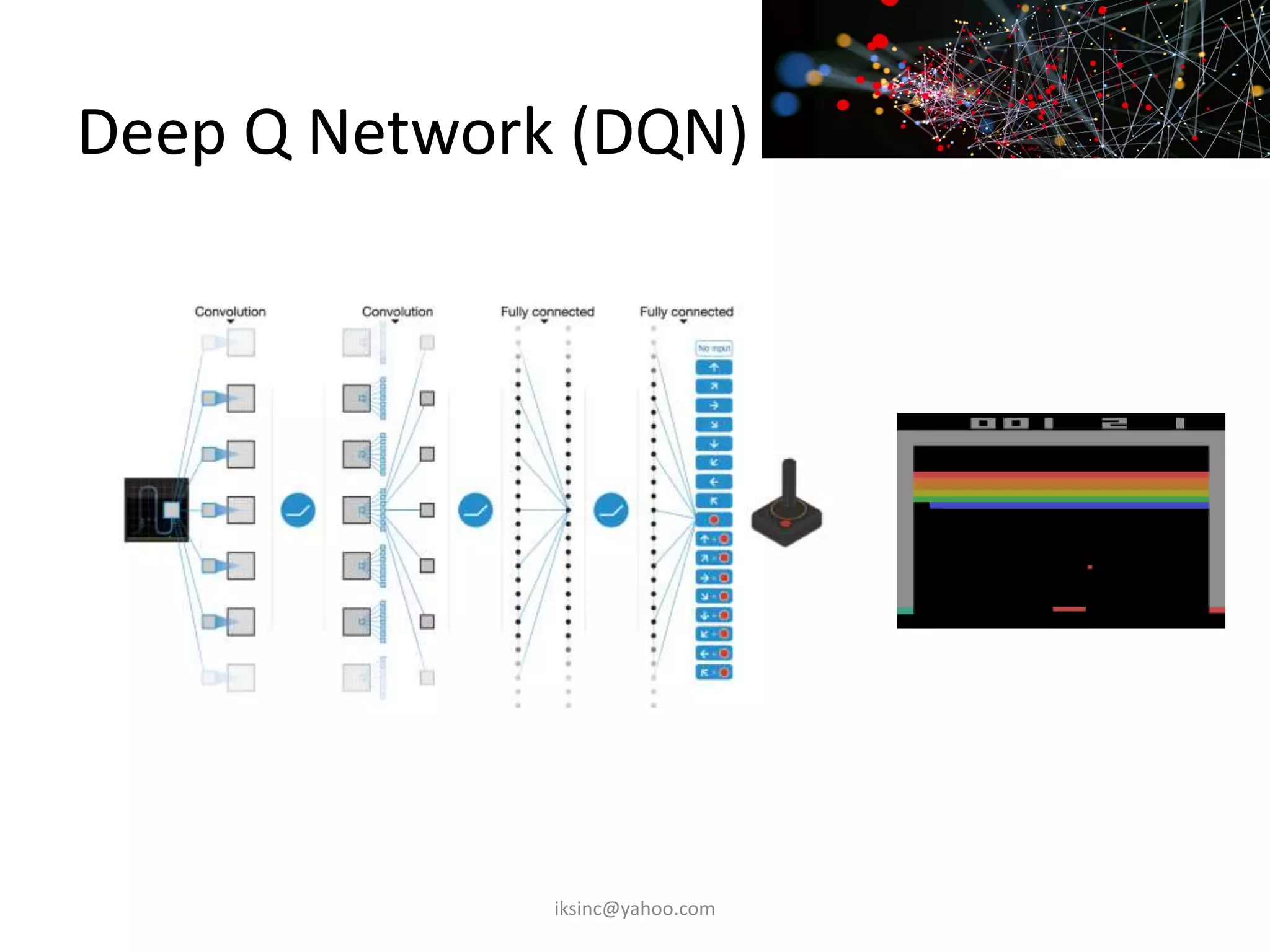

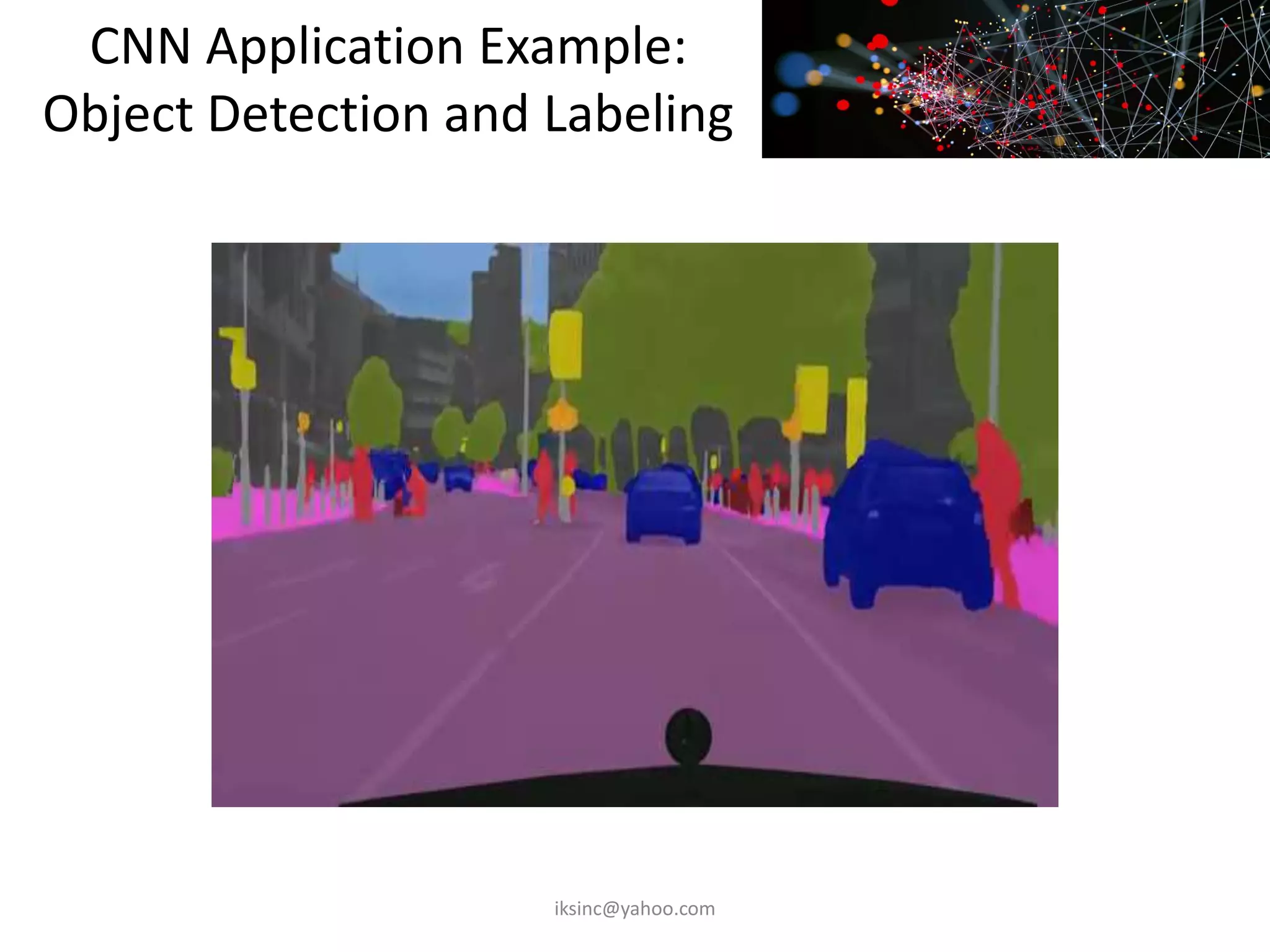

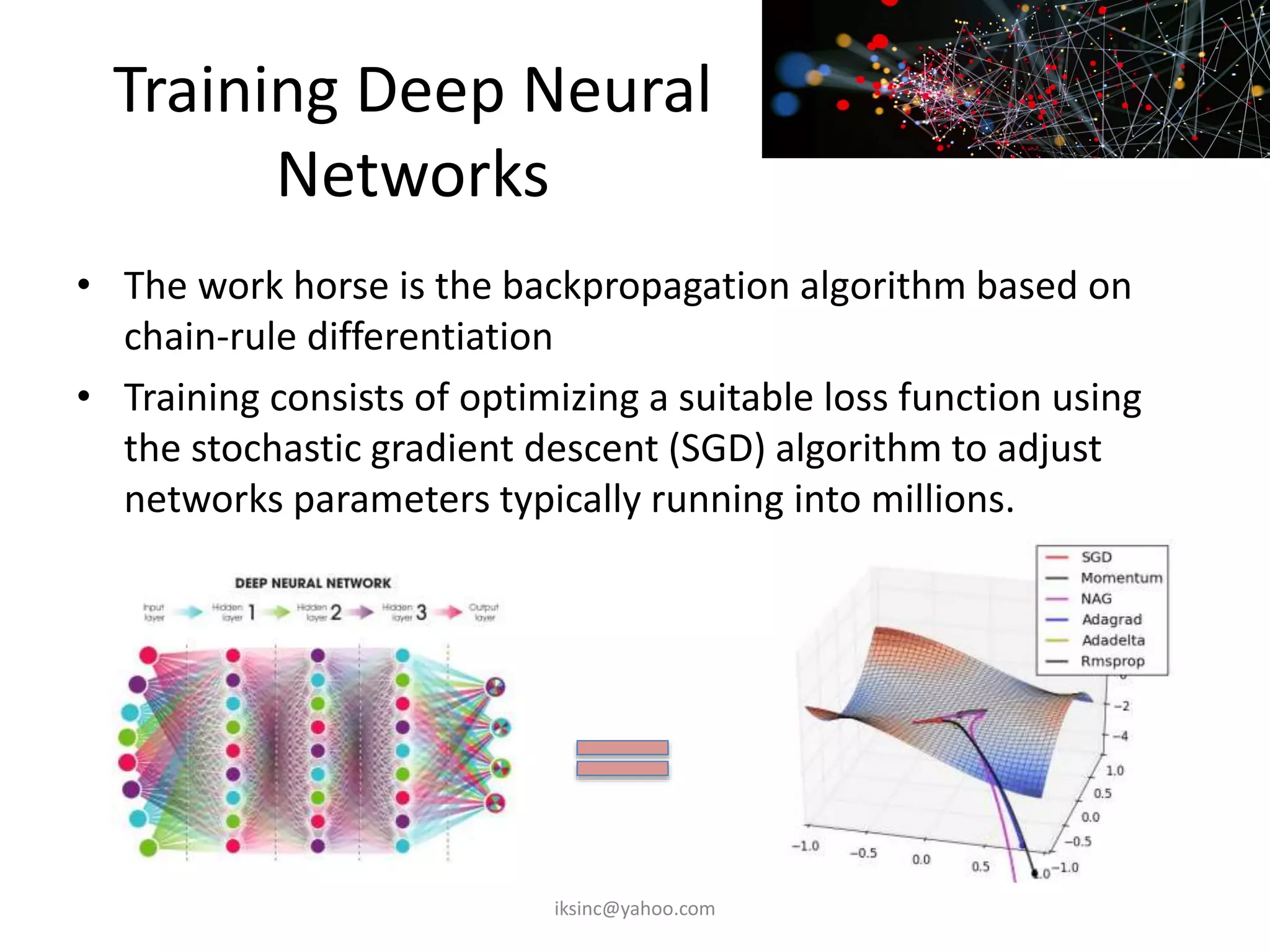

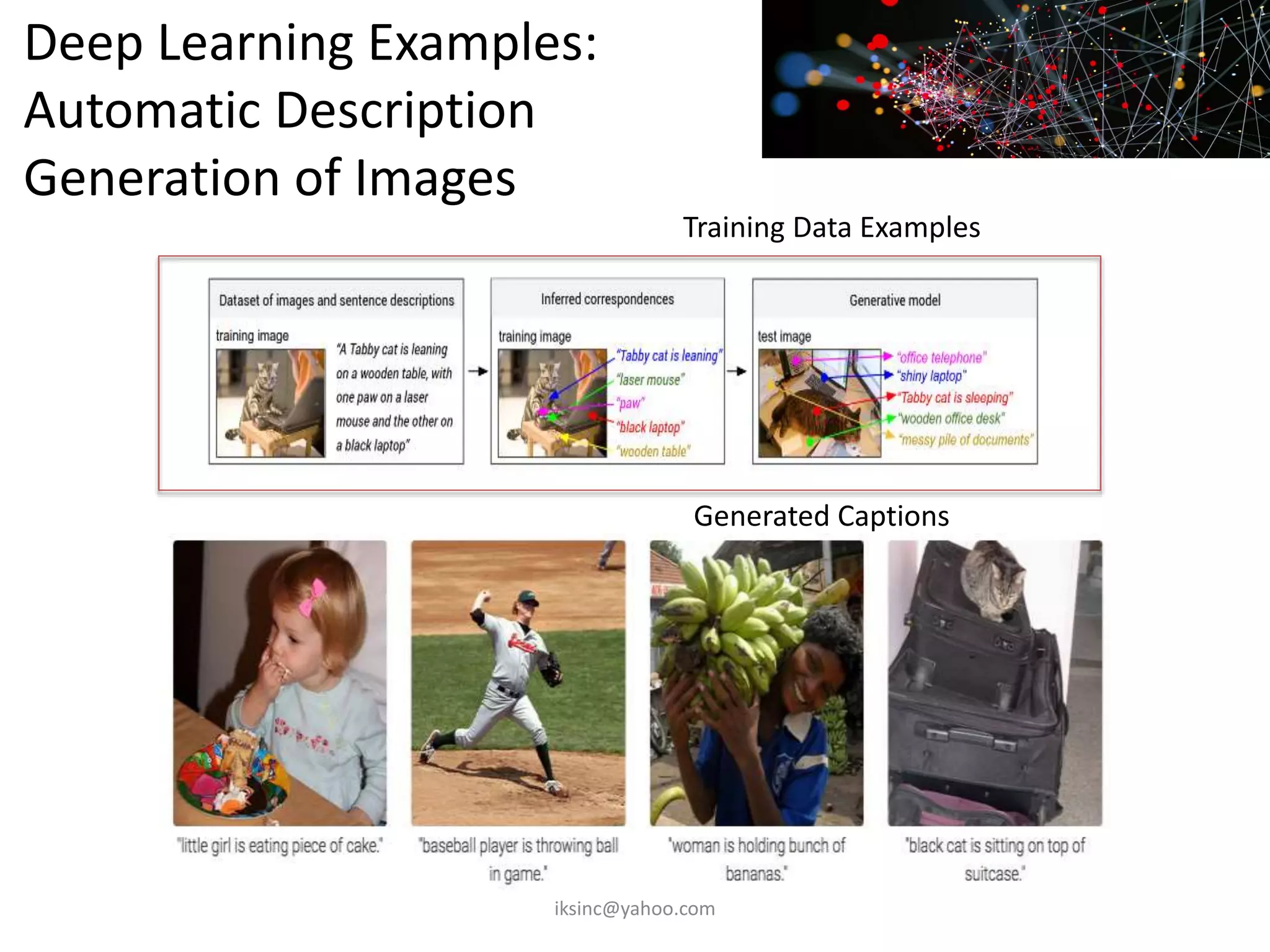

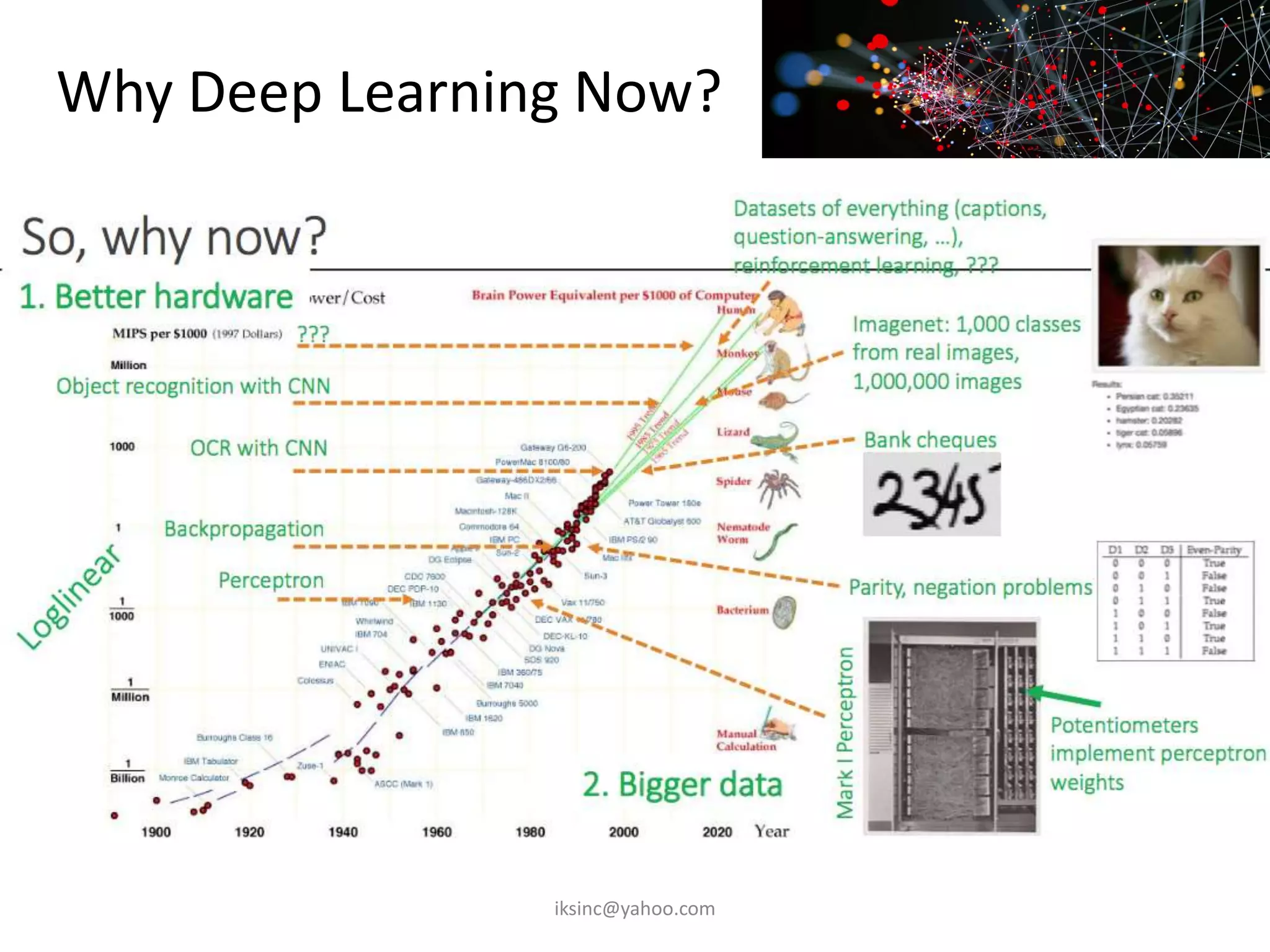

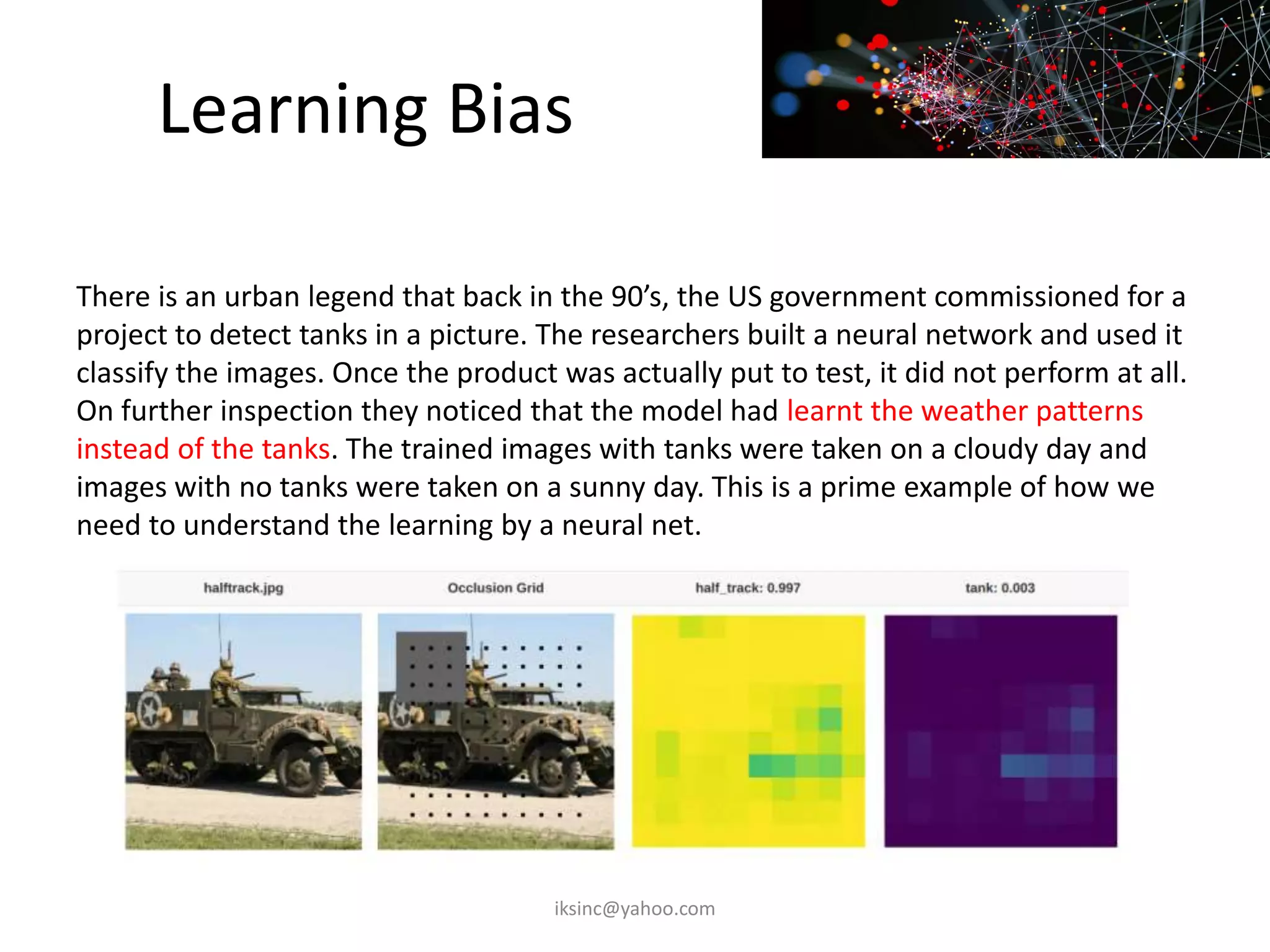

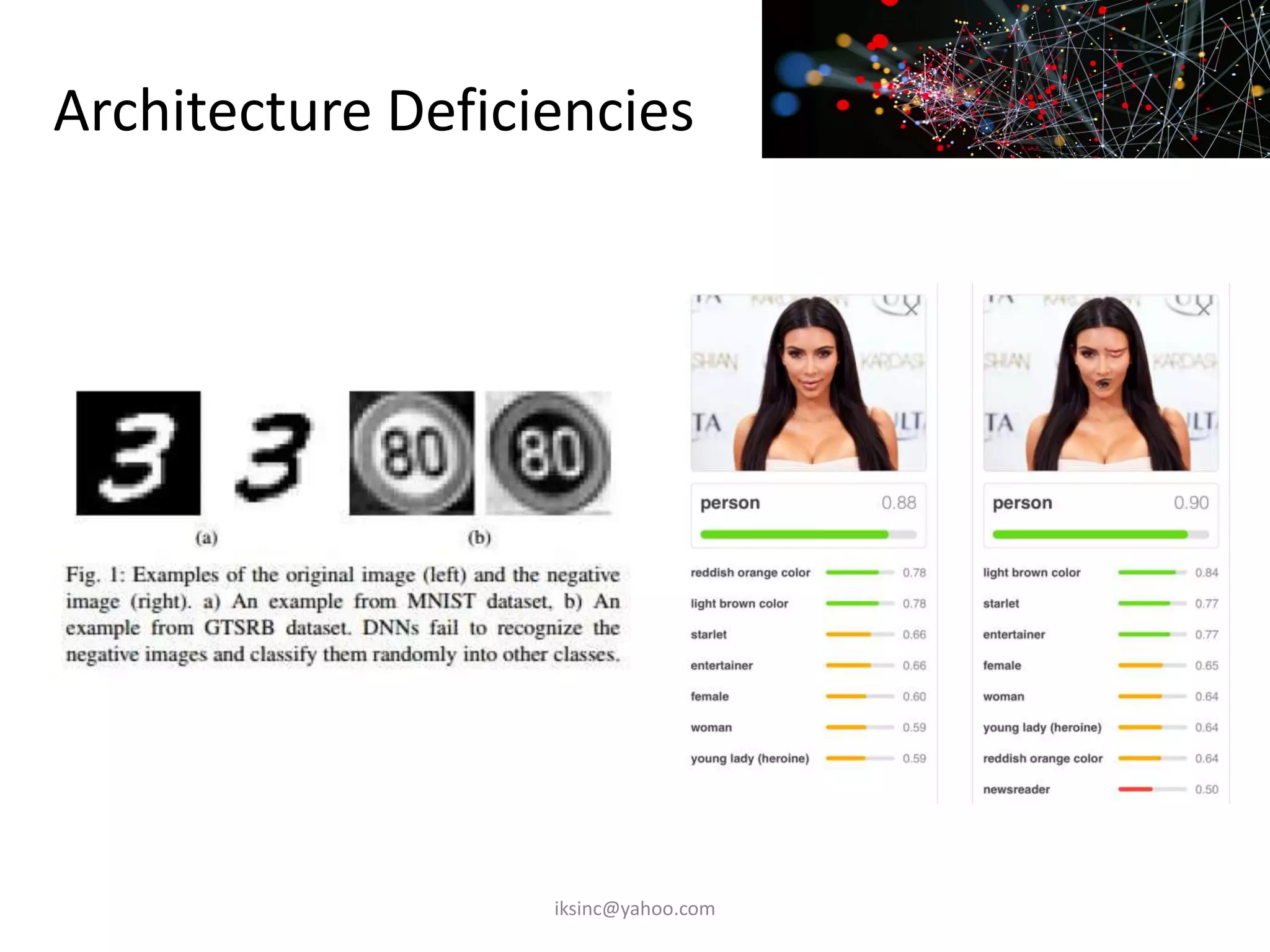

The document provides an introduction to machine learning and deep learning. It explains that machine learning lets computers learn patterns from data instead of being explicitly programmed, whereas deep learning uses neural networks with many layers to learn end-to-end from raw data, without hand-engineered features. Because it can learn useful representations directly from raw data, deep learning has achieved remarkable success in computer vision, speech recognition, and natural language processing. The document also outlines popular deep learning models, such as convolutional neural networks and recurrent neural networks, and gives example applications including image classification and heart attack prediction.
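To make "learning patterns from data" and "many layers" concrete, here is a minimal sketch in plain Python, not taken from the slides. It trains a tiny network with one hidden layer by full-batch gradient descent on the XOR truth table. XOR is chosen only for illustration: it is a classic pattern that a single linear layer cannot represent but a network with a hidden layer can. The function name `train_mlp` and its parameters (`hidden`, `lr`, `epochs`, `seed`) are hypothetical. Whether it fits XOR exactly depends on the random initialisation.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_mlp(data, hidden=8, lr=0.5, epochs=5000, seed=0):
    """Hypothetical helper: 1 tanh hidden layer + sigmoid output, trained with BCE loss."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # W1[j][i] is the weight from input i to hidden unit j.
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        # Hidden layer: learned features computed from the raw inputs.
        h = [math.tanh(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
        return h, y

    def loss():
        # Mean binary cross-entropy over the dataset.
        total = 0.0
        for x, t in data:
            _, y = forward(x)
            y = min(max(y, 1e-12), 1 - 1e-12)
            total -= t * math.log(y) + (1 - t) * math.log(1 - y)
        return total / len(data)

    initial = loss()
    n = len(data)
    for _ in range(epochs):
        # Accumulate gradients over the full batch (backpropagation).
        gW1 = [[0.0] * n_in for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gW2 = [0.0] * hidden
        gb2 = 0.0
        for x, t in data:
            h, y = forward(x)
            dz2 = y - t  # gradient of BCE w.r.t. the output logit
            gb2 += dz2
            for j in range(hidden):
                gW2[j] += dz2 * h[j]
                dz1 = dz2 * W2[j] * (1 - h[j] ** 2)  # chain rule through tanh
                gb1[j] += dz1
                for i in range(n_in):
                    gW1[j][i] += dz1 * x[i]
        # Gradient-descent update of every parameter.
        for j in range(hidden):
            W2[j] -= lr * gW2[j] / n
            b1[j] -= lr * gb1[j] / n
            for i in range(n_in):
                W1[j][i] -= lr * gW1[j][i] / n
        b2 -= lr * gb2 / n

    def predict(x):
        return forward(x)[1]

    return predict, initial, loss()


XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

if __name__ == "__main__":
    predict, initial_loss, final_loss = train_mlp(XOR)
    print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
    for x, t in XOR:
        print(x, "target", t, "prediction", round(predict(x), 3))
```

No rule for XOR is written anywhere in the code. The network adjusts its weights from the four labelled examples alone, which is the "learning from data" idea in miniature. Deep learning stacks many such layers and trains them on far larger datasets.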