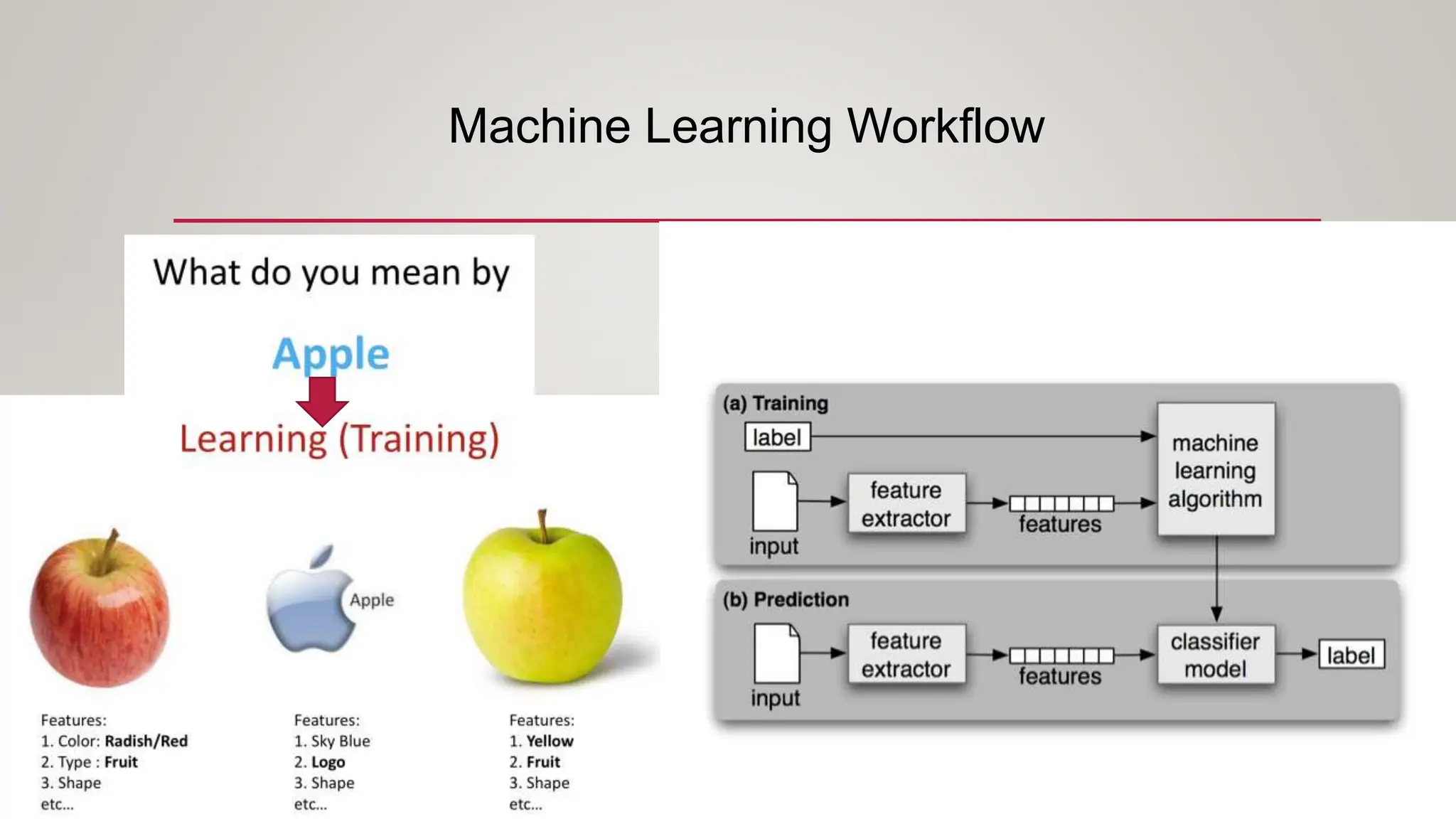

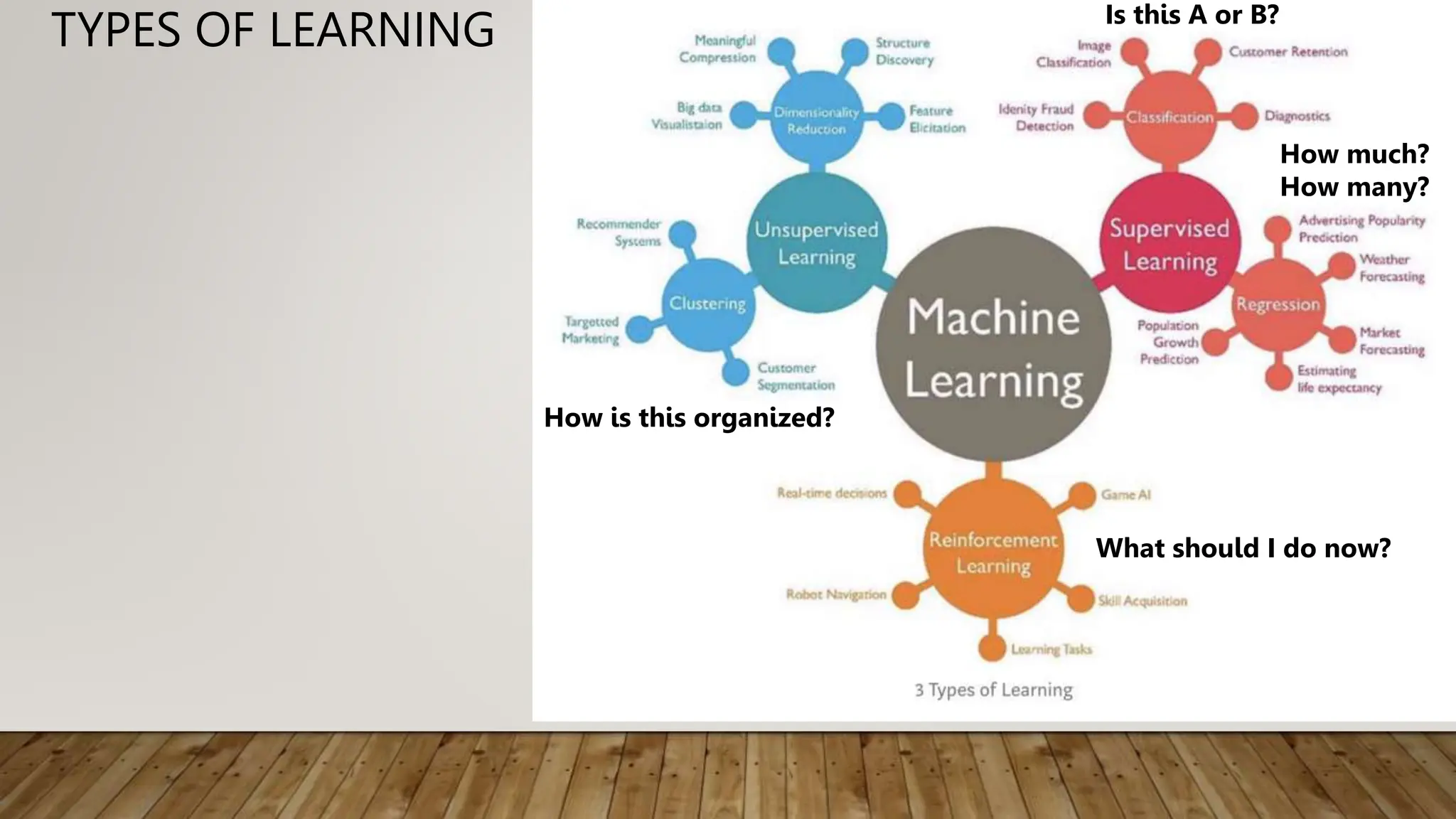

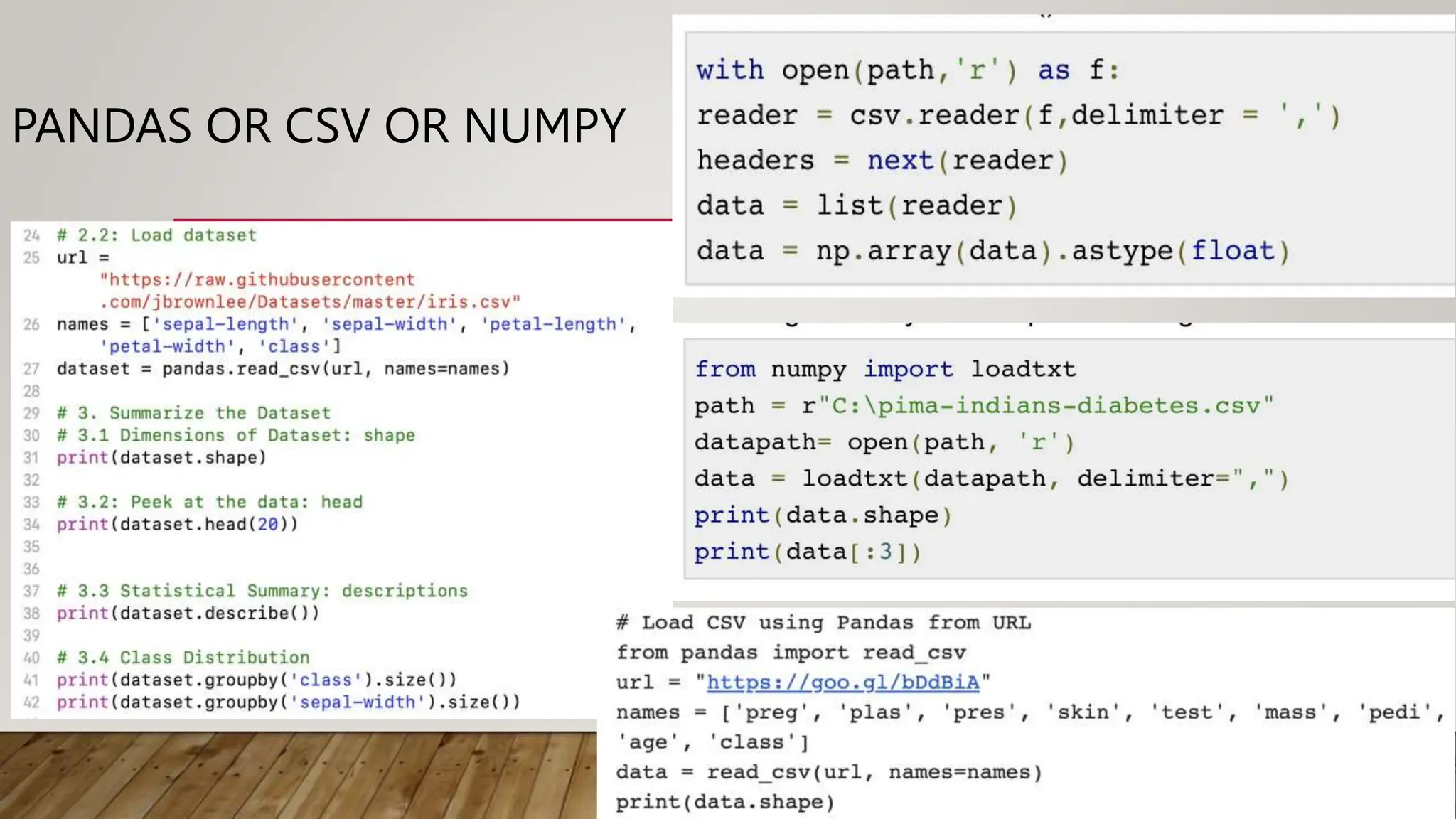

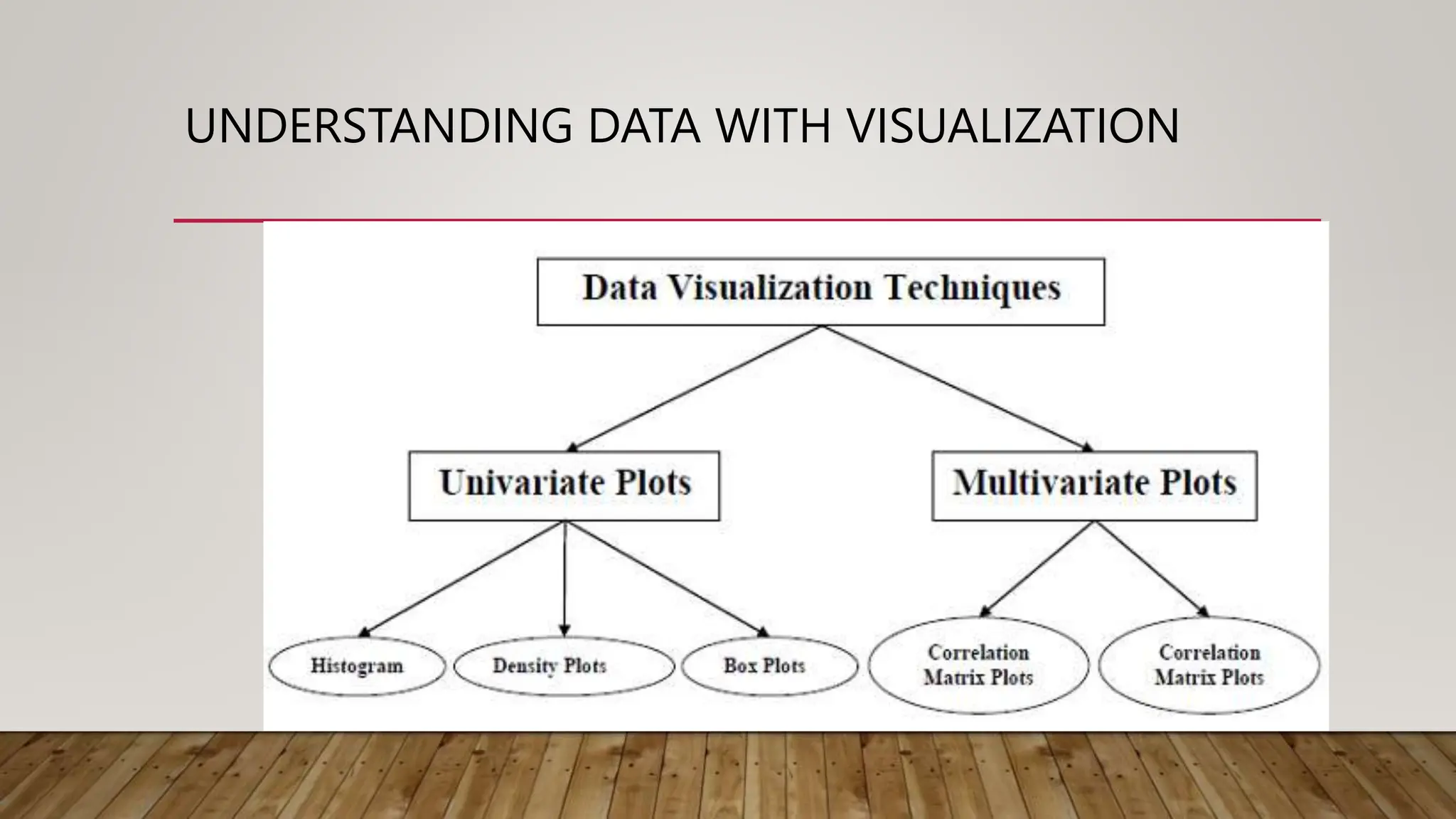

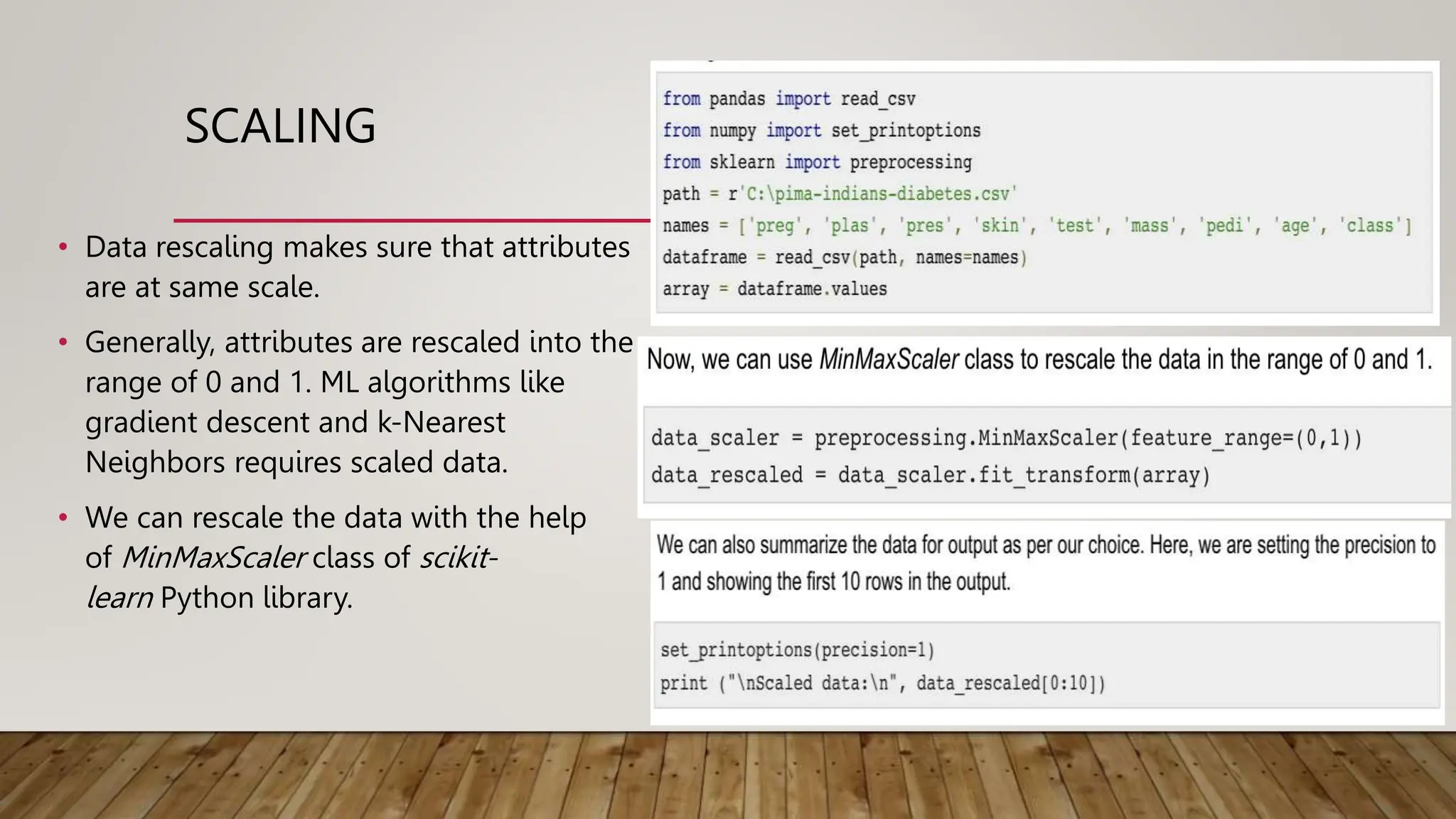

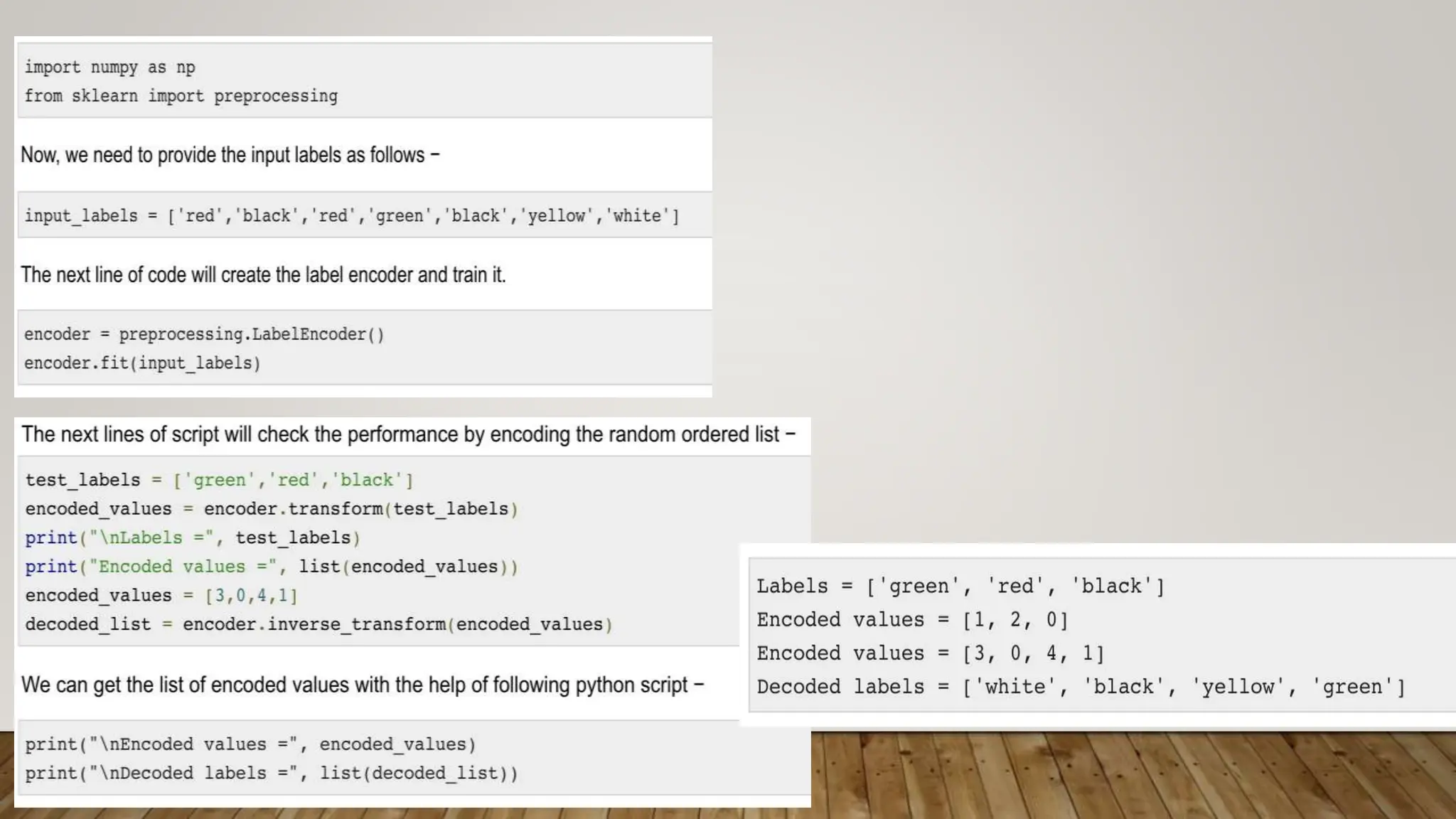

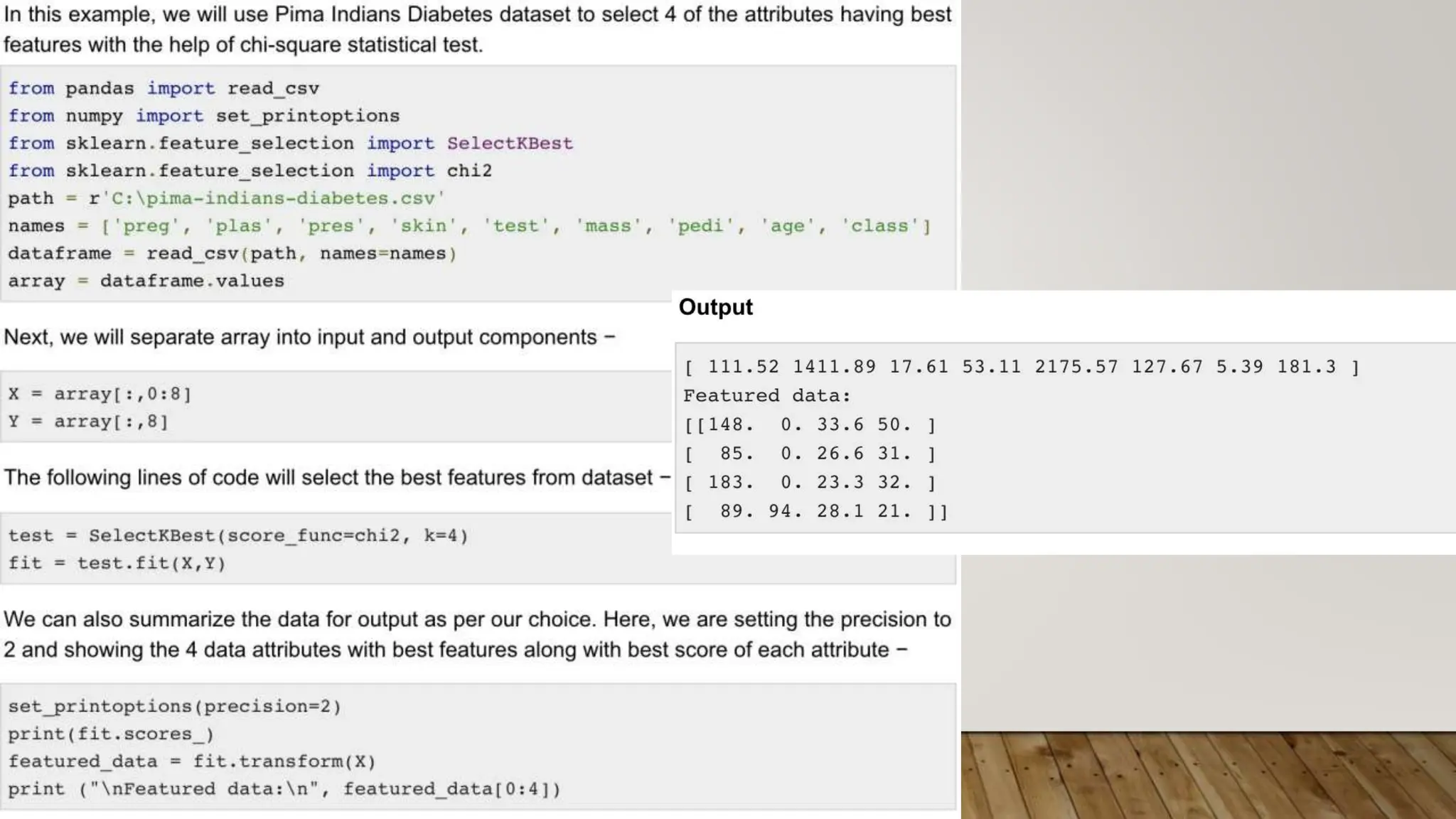

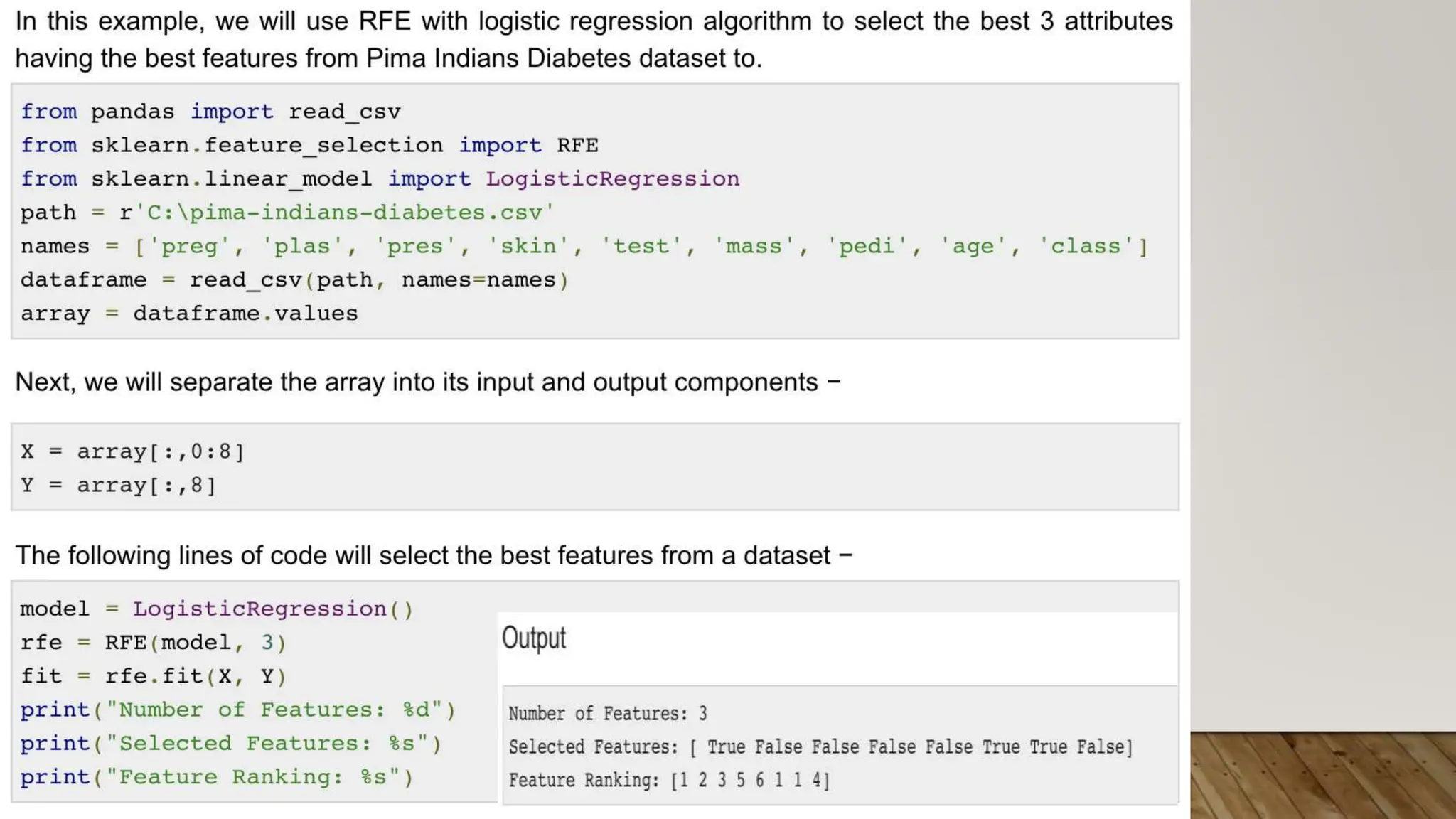

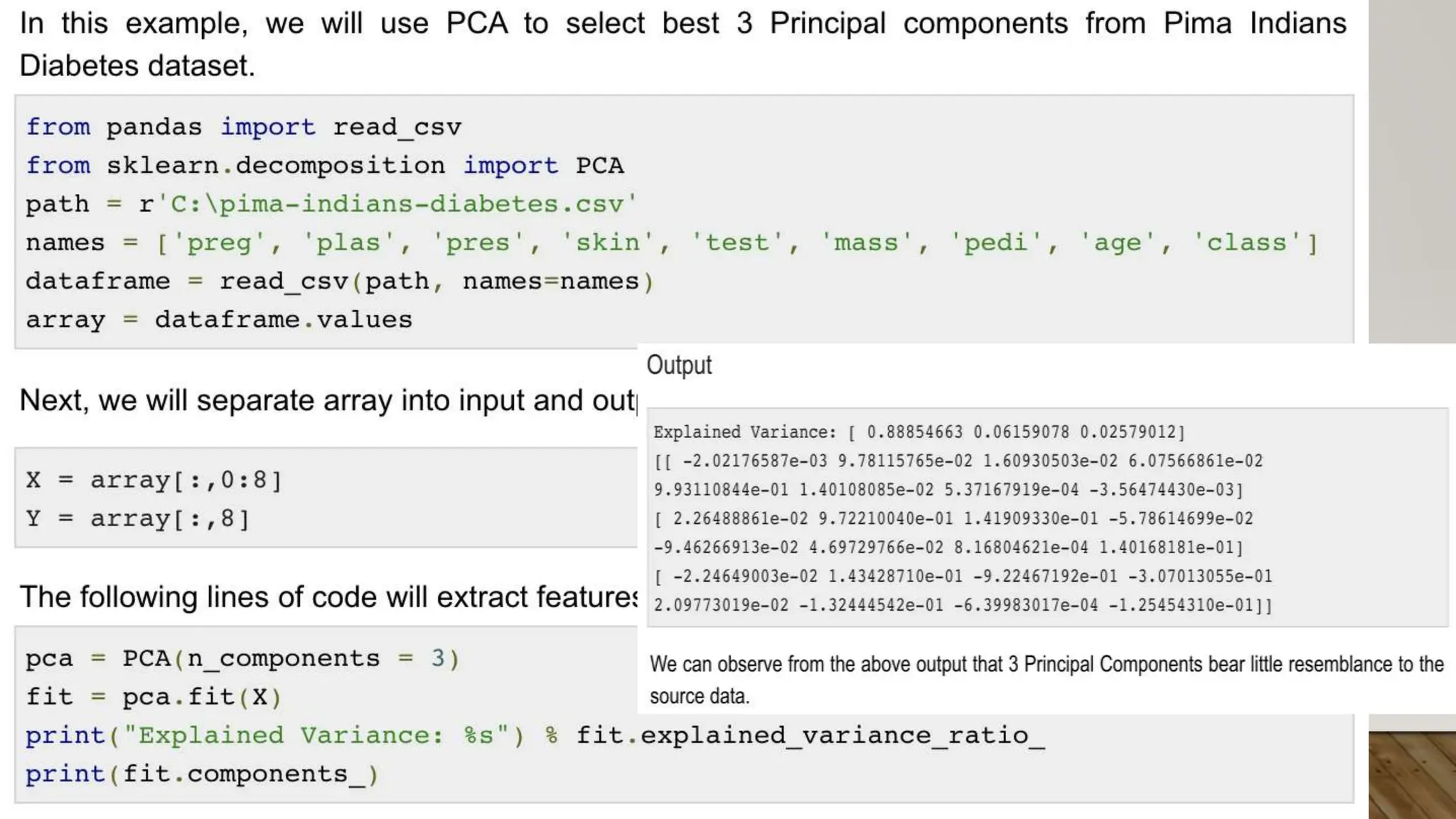

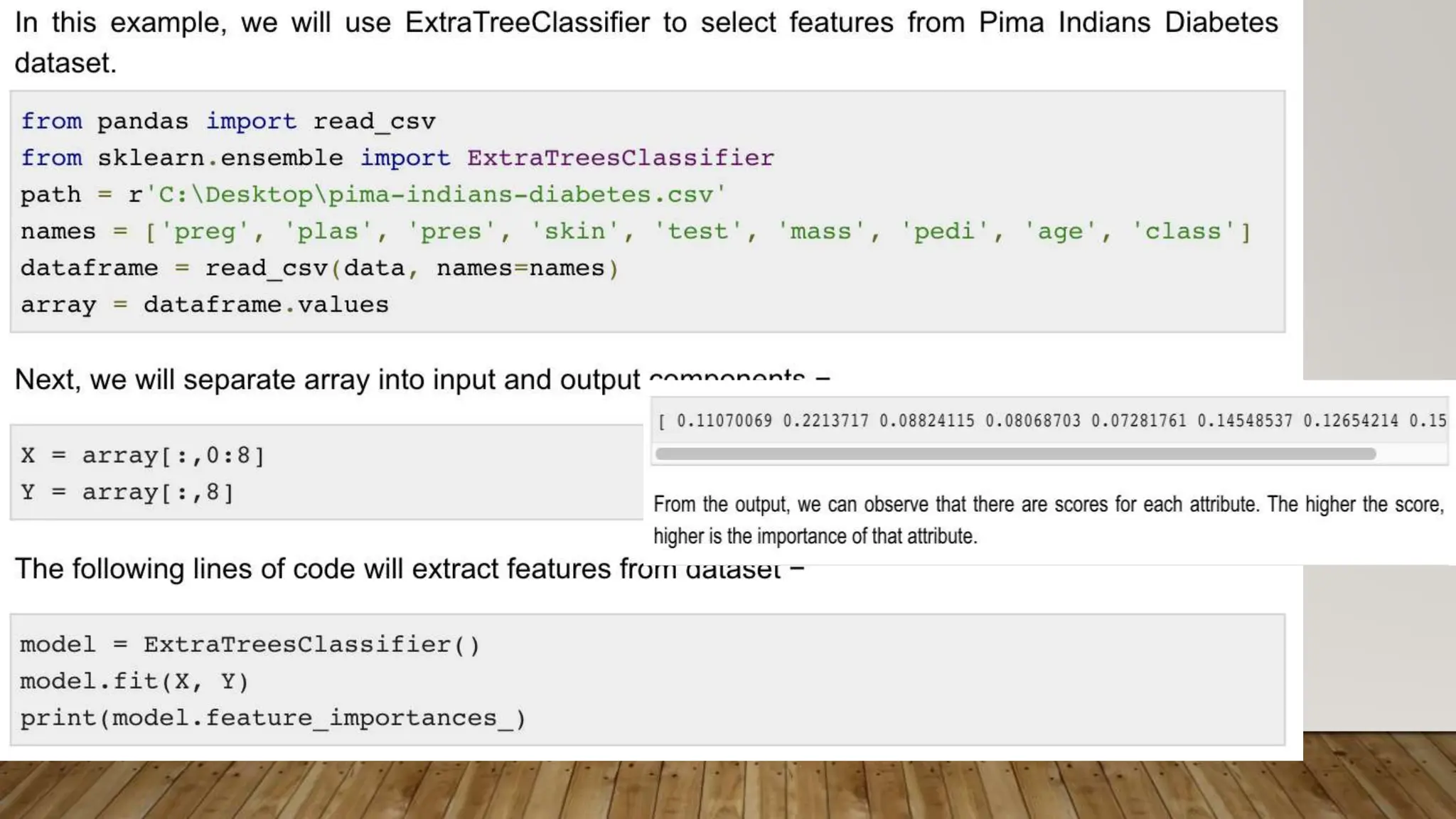

This document gives an overview of machine learning. It defines the key concepts of task, experience, and performance, and walks through a common workflow: loading data, understanding it through statistics and visualization, preparing it with techniques such as scaling and normalization, and selecting features. It also covers the applications and types of machine learning, with examples of typical challenges and of data preparation and feature selection techniques.
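
To make the data preparation step concrete, here is a minimal sketch of two common techniques. The summary does not say which variants the document uses, so the choice of min-max scaling and L2 normalization is an assumption, and the function names and sample values are made up for illustration.

```python
import math

def min_max_scale(values, low=0.0, high=1.0):
    """Rescale a feature column linearly into the range [low, high]."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # A constant column carries no spread; map every value to `low`.
        return [low for _ in values]
    span = v_max - v_min
    return [low + (v - v_min) * (high - low) / span for v in values]

def l2_normalize(row):
    """Rescale one sample (row) so that its Euclidean length is 1."""
    norm = math.sqrt(sum(x * x for x in row))
    if norm == 0:
        # An all-zero row cannot be normalized; return it unchanged.
        return list(row)
    return [x / norm for x in row]

# Hypothetical data, not taken from the document.
ages = [20, 30, 40, 50, 60]
scaled_ages = min_max_scale(ages)

sample = [3.0, 4.0]
unit_sample = l2_normalize(sample)
```

Scaling works on one feature column at a time, so that features measured in different units become comparable. Normalization, in this sketch, works on one sample at a time, so that each row has unit length.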