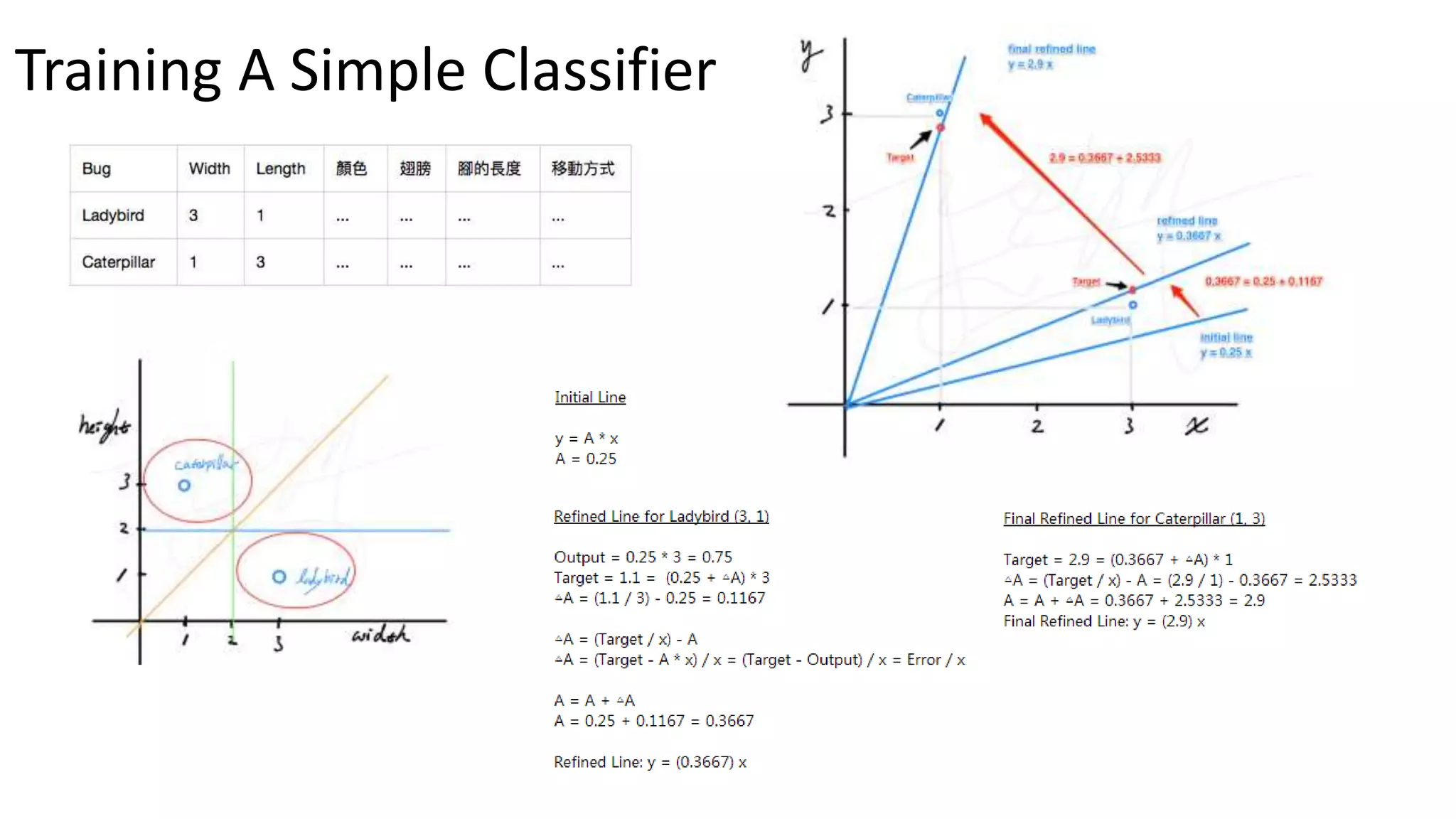

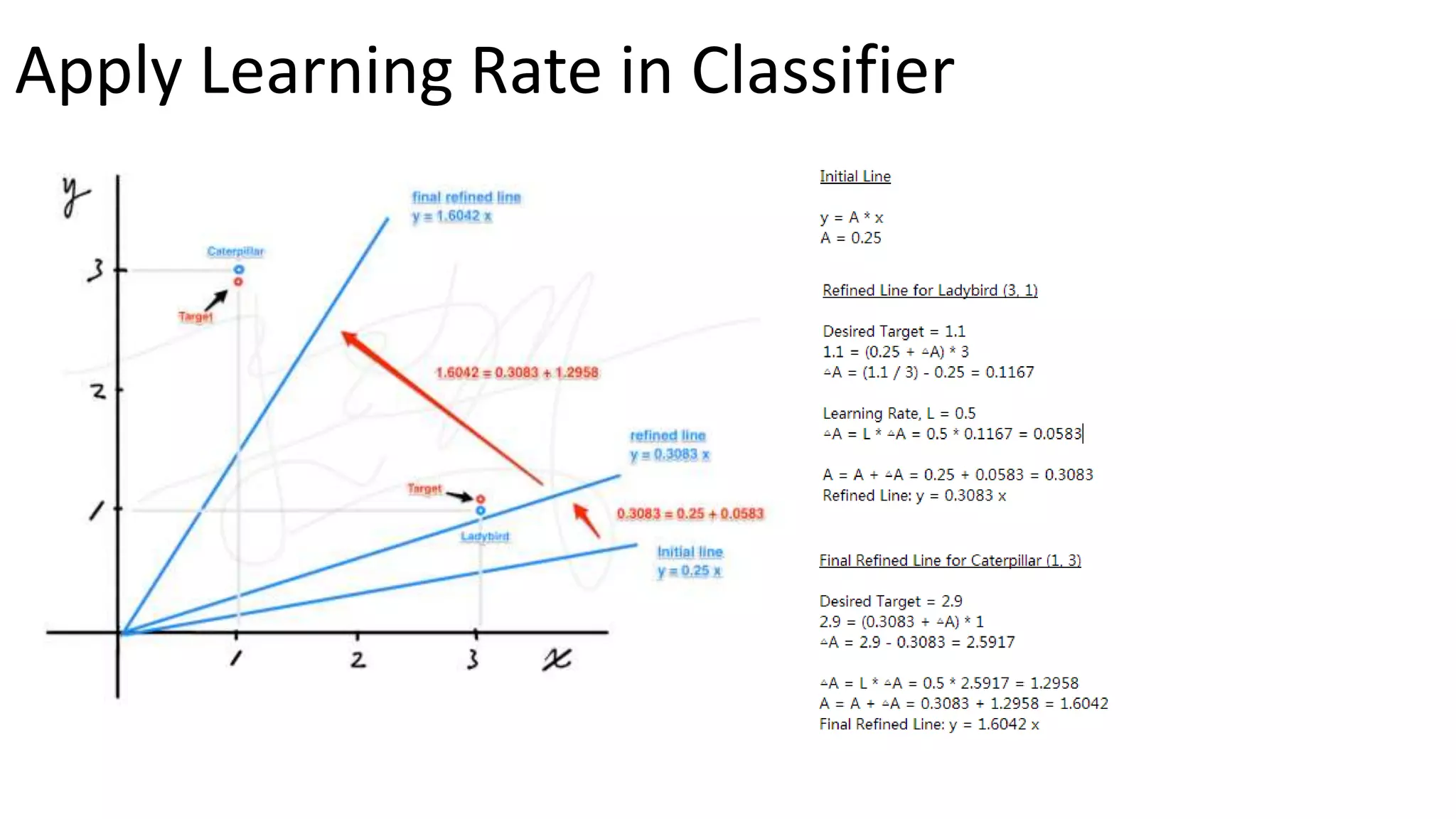

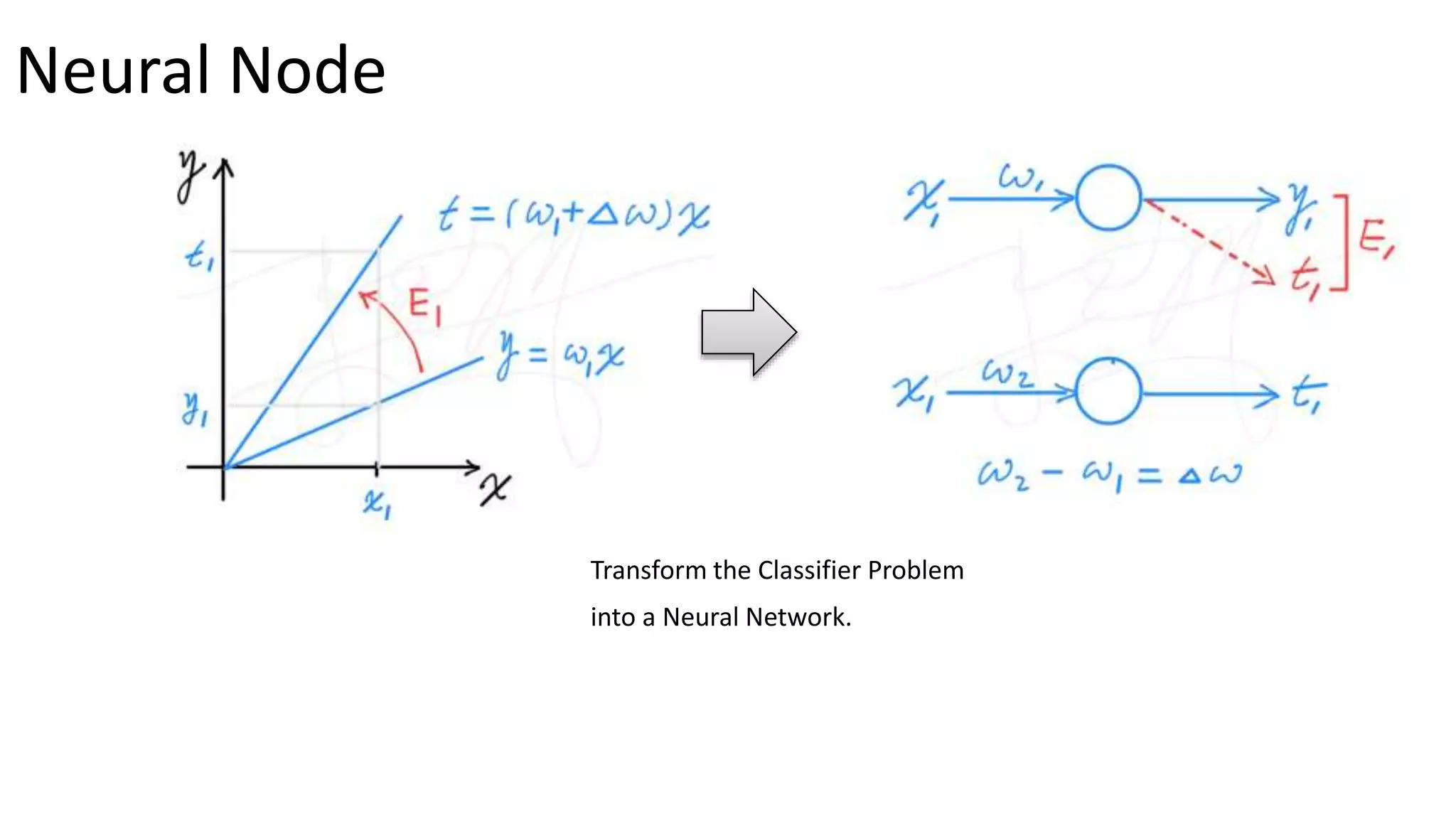

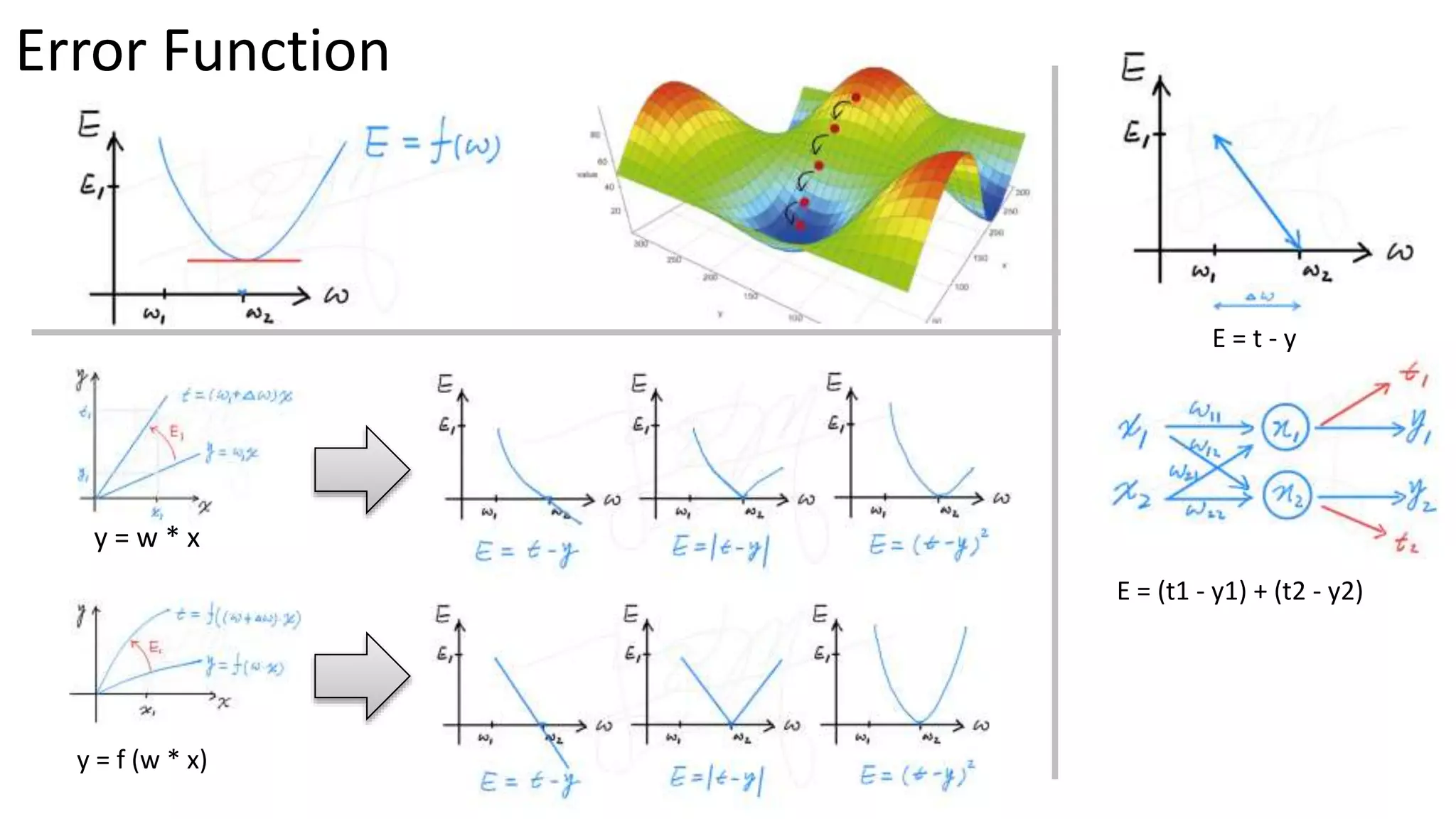

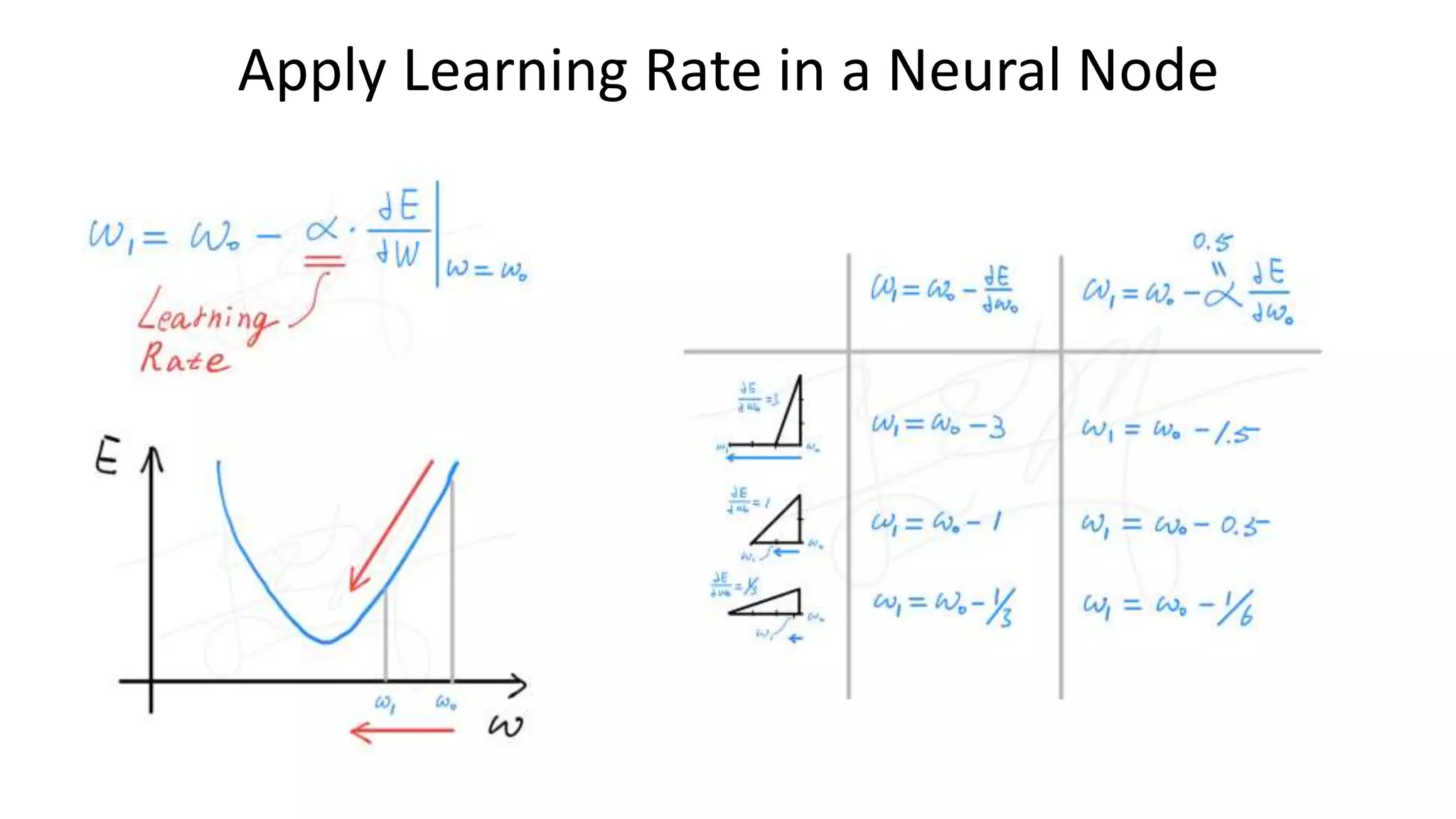

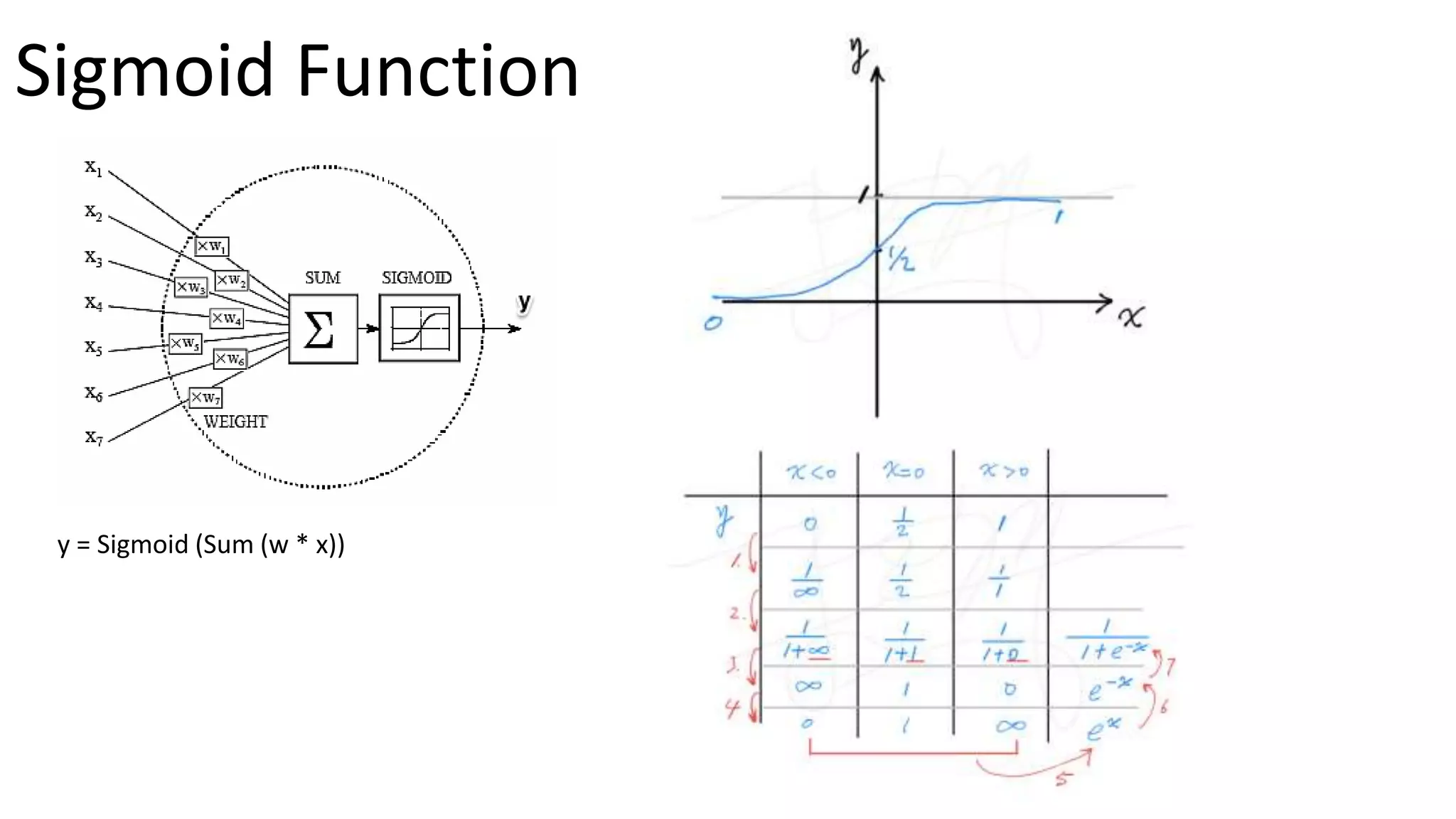

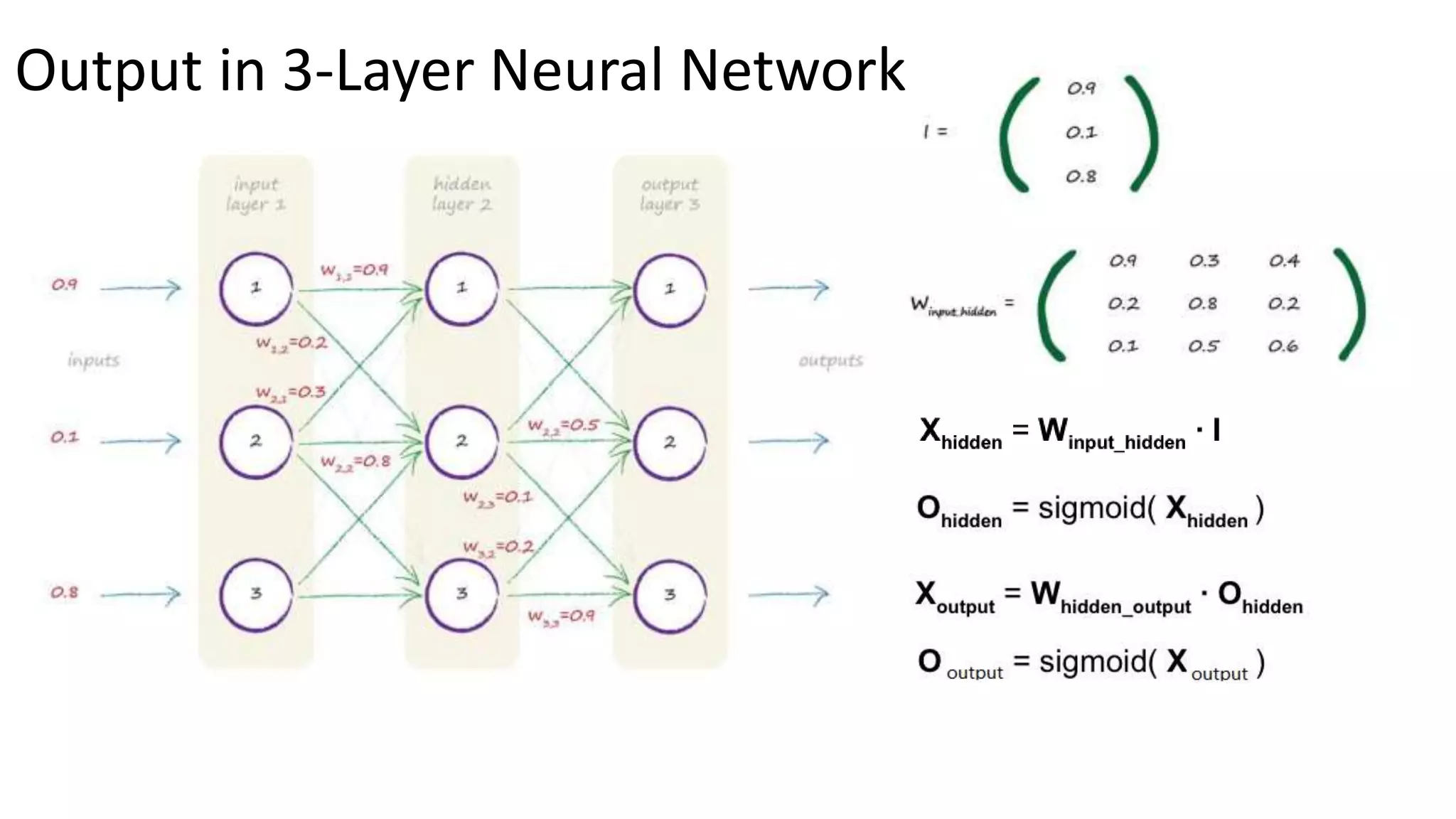

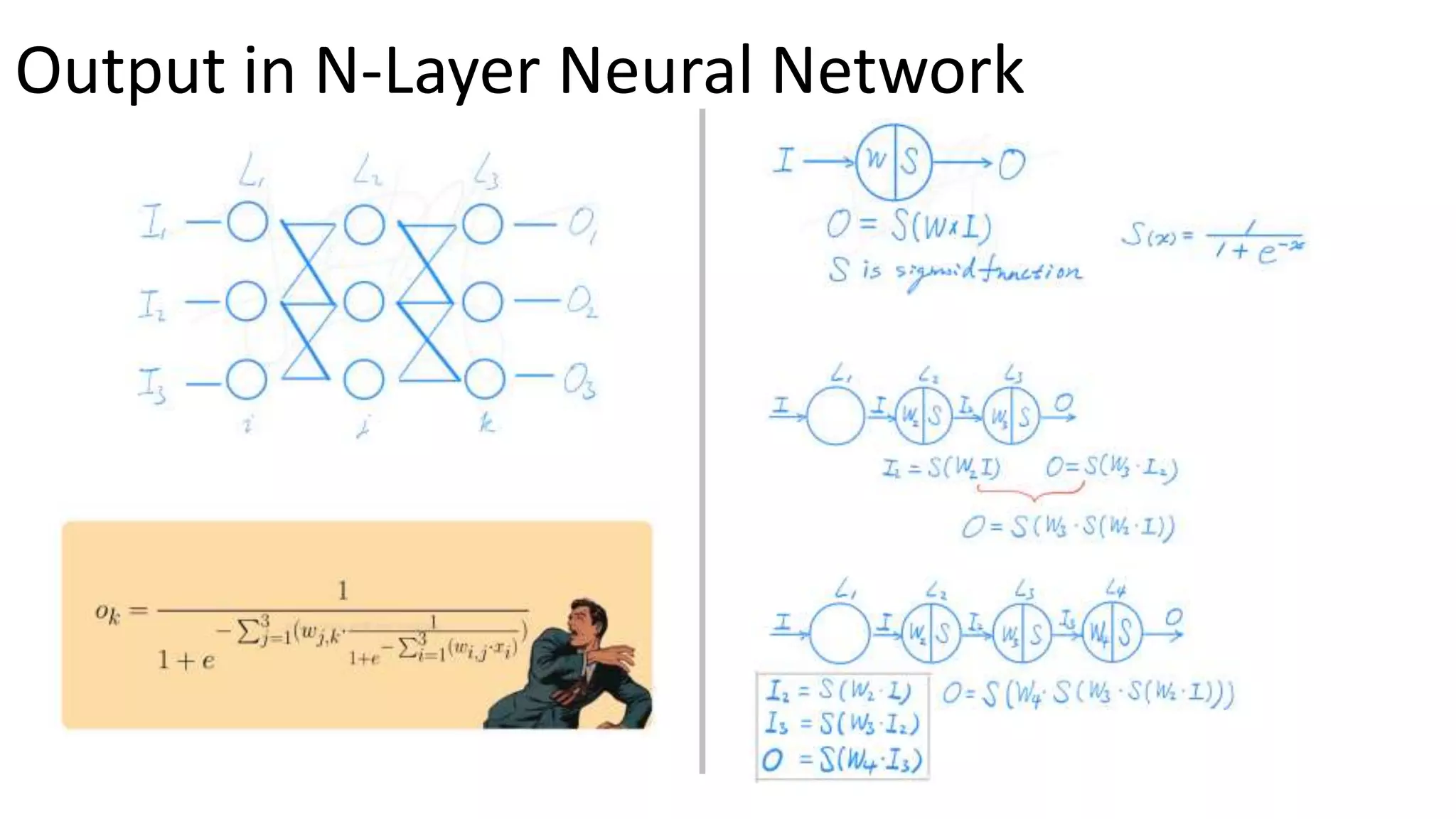

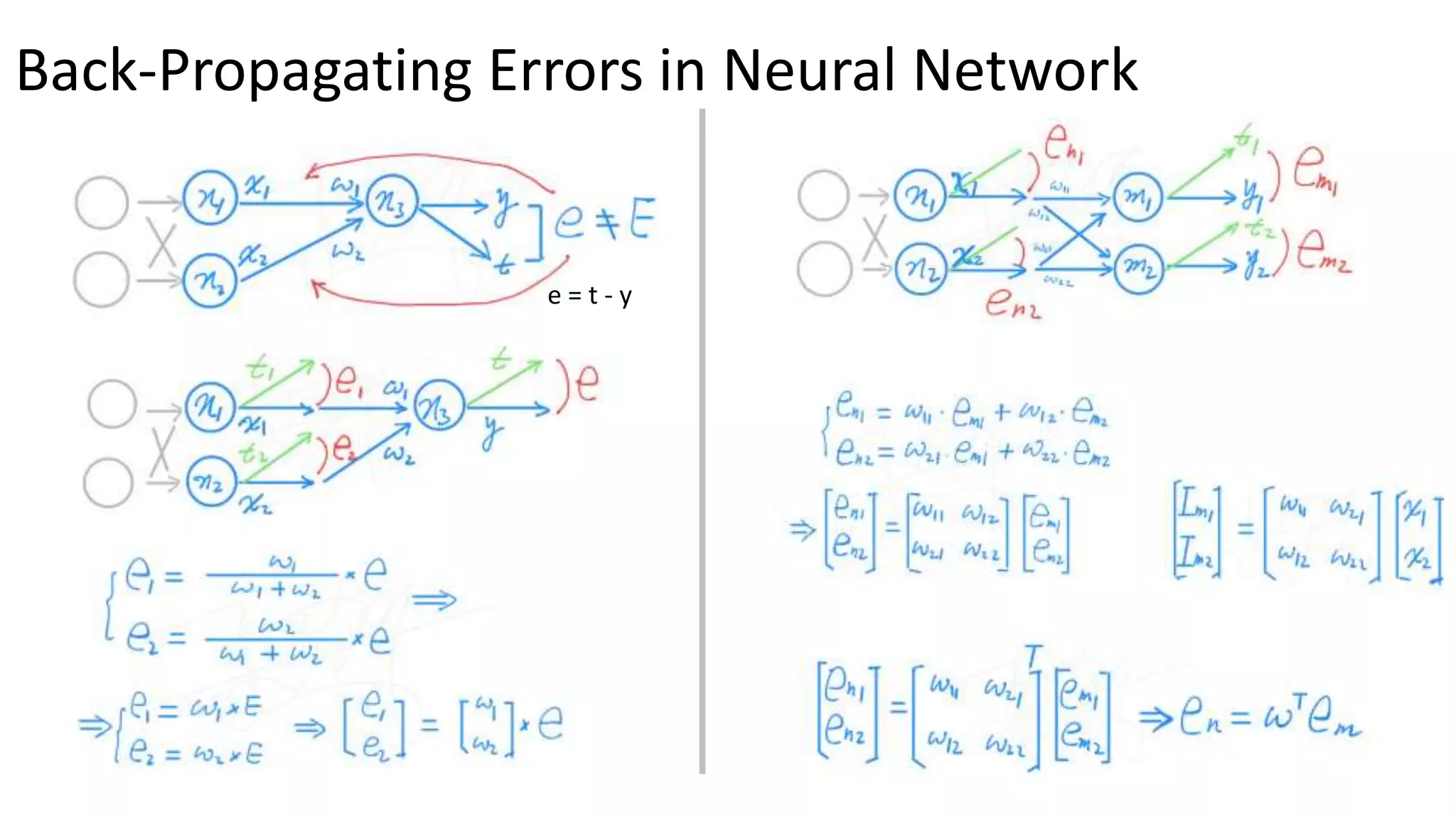

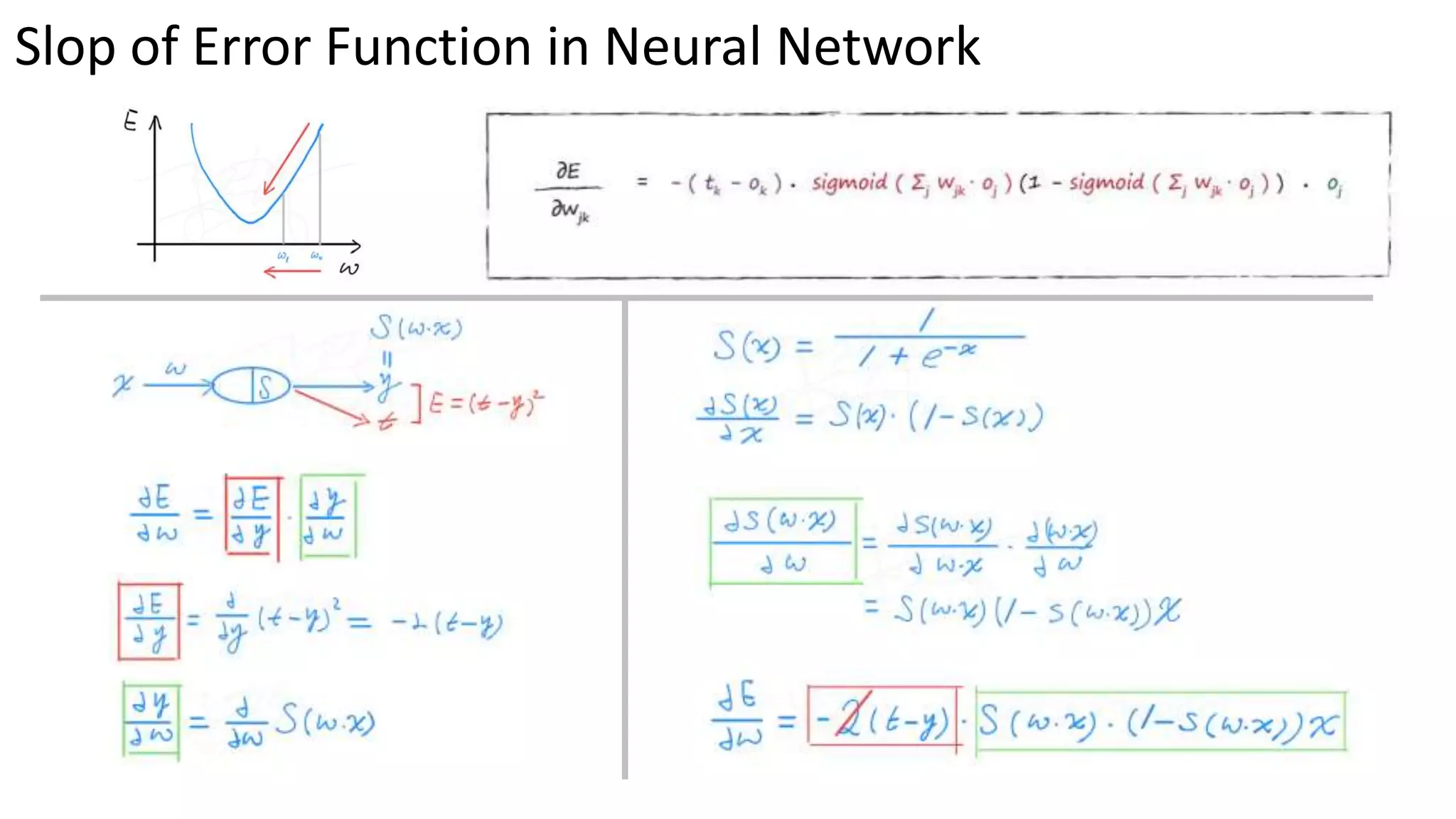

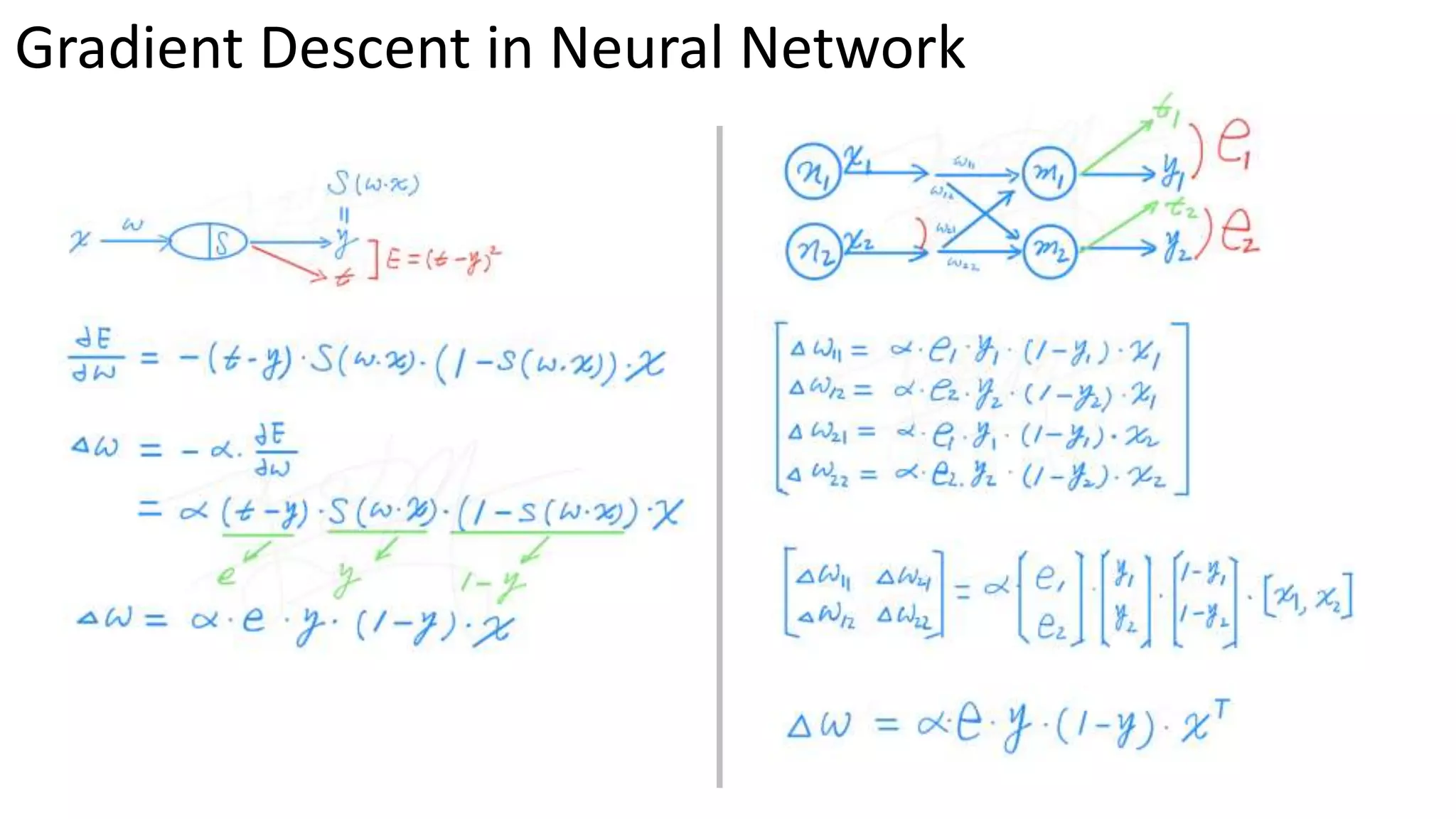

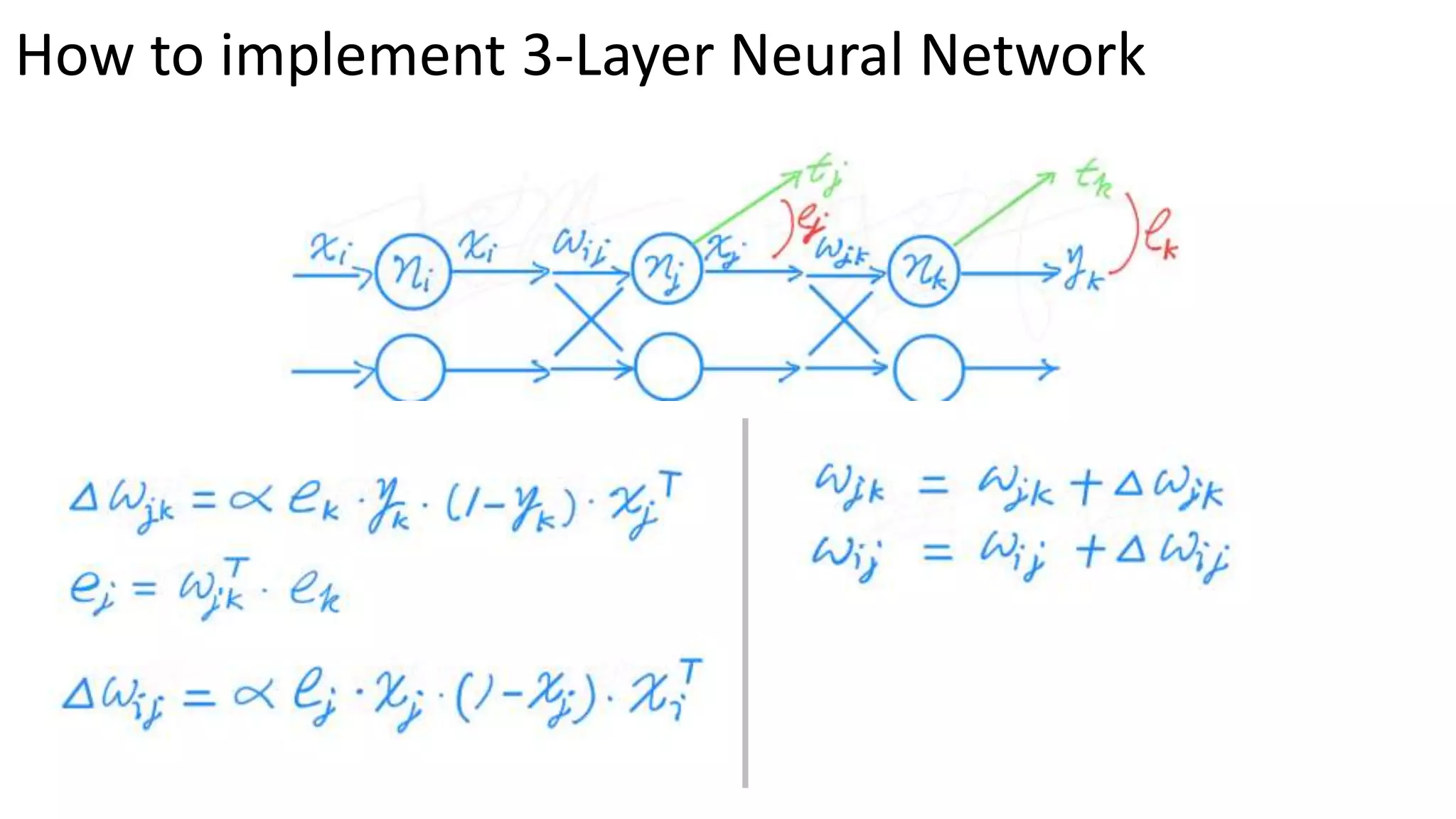

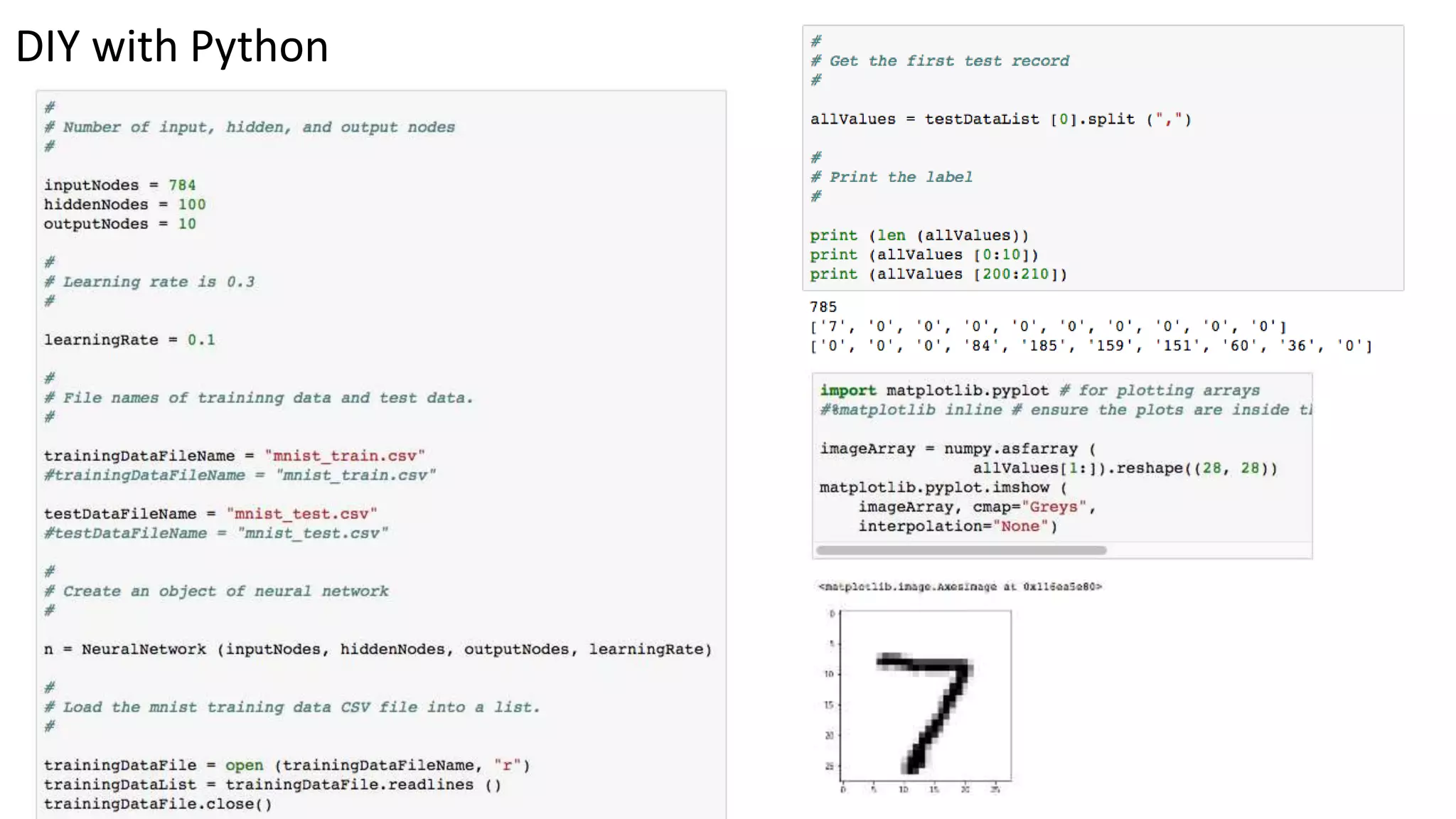

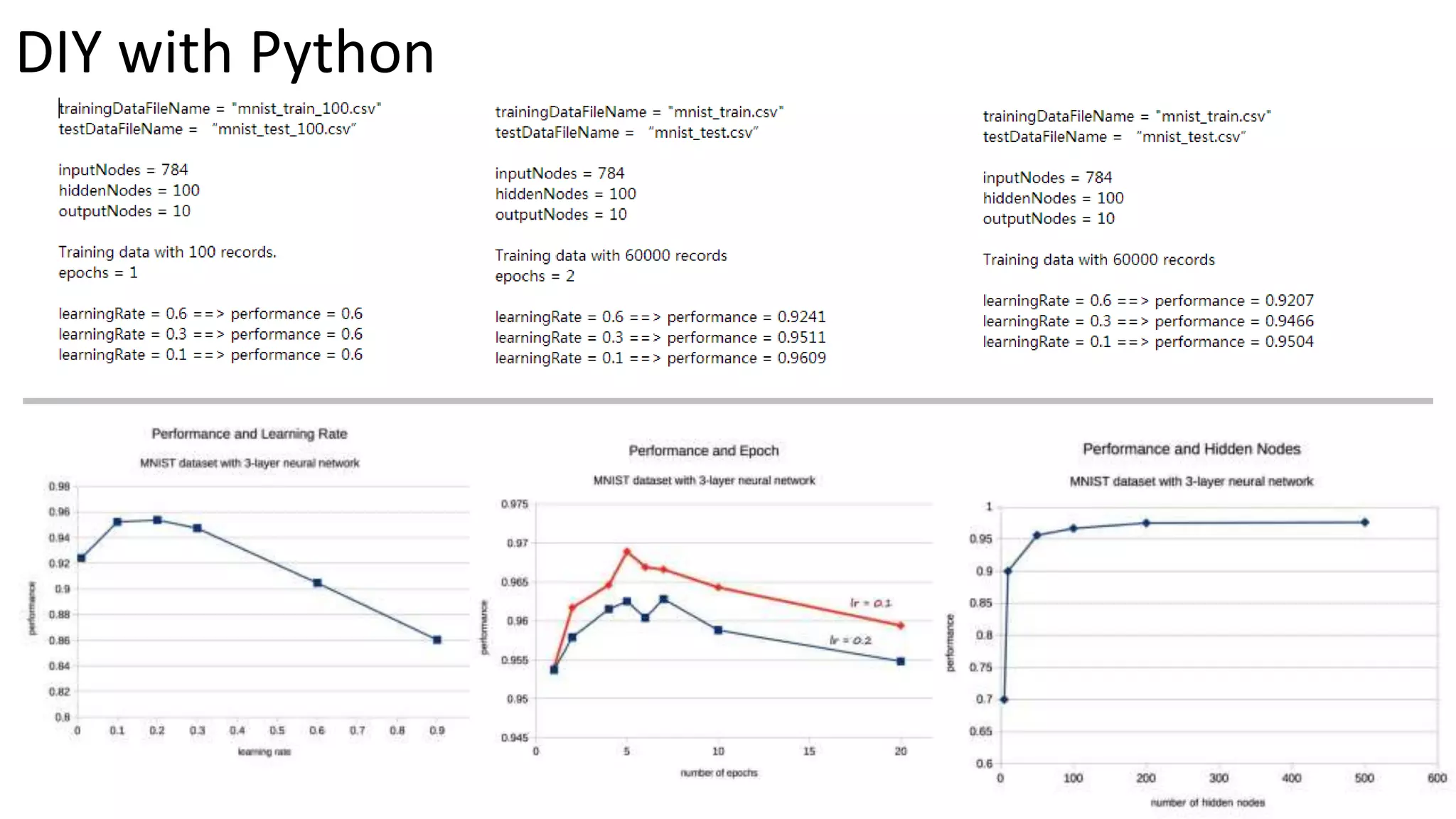

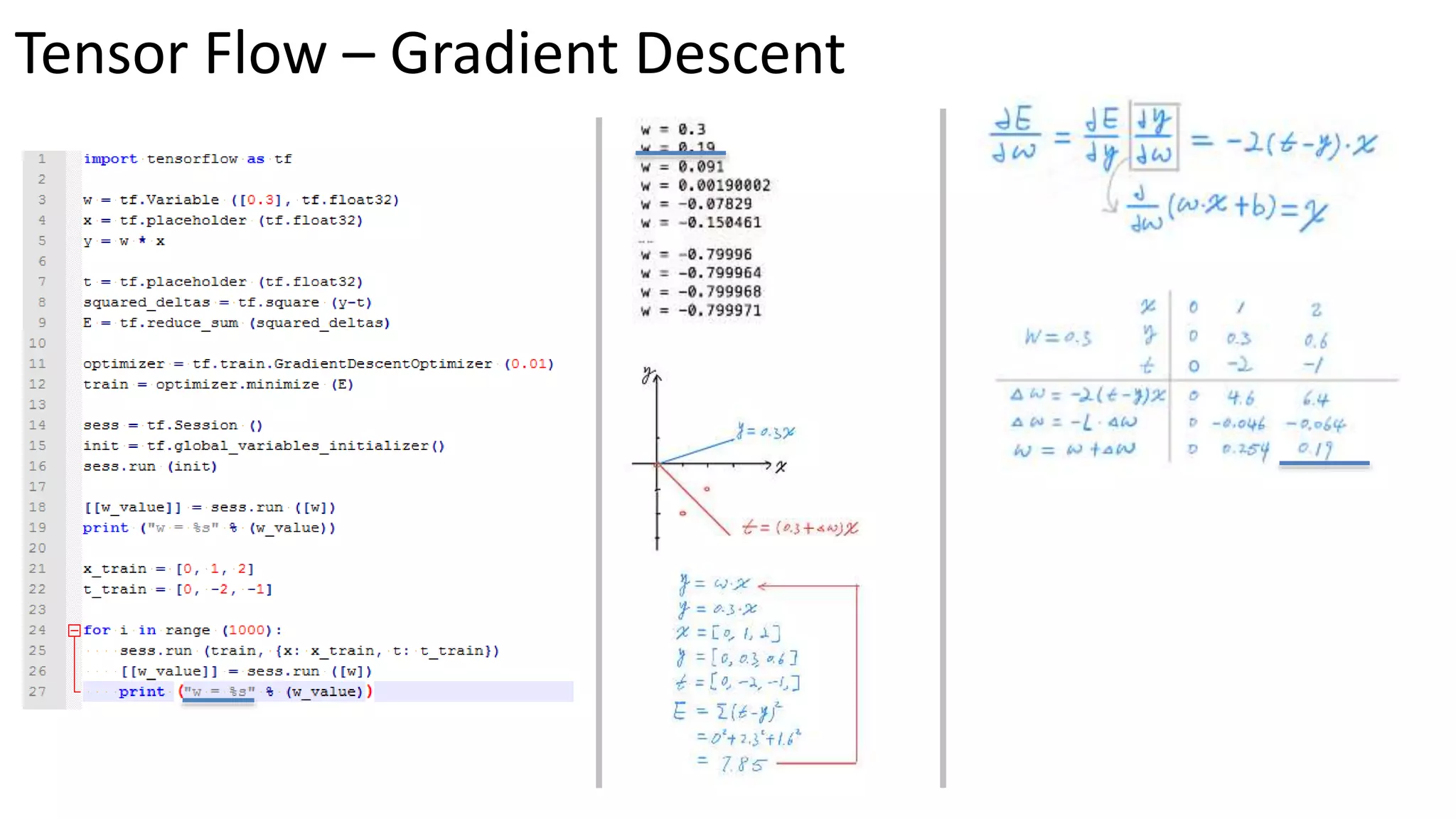

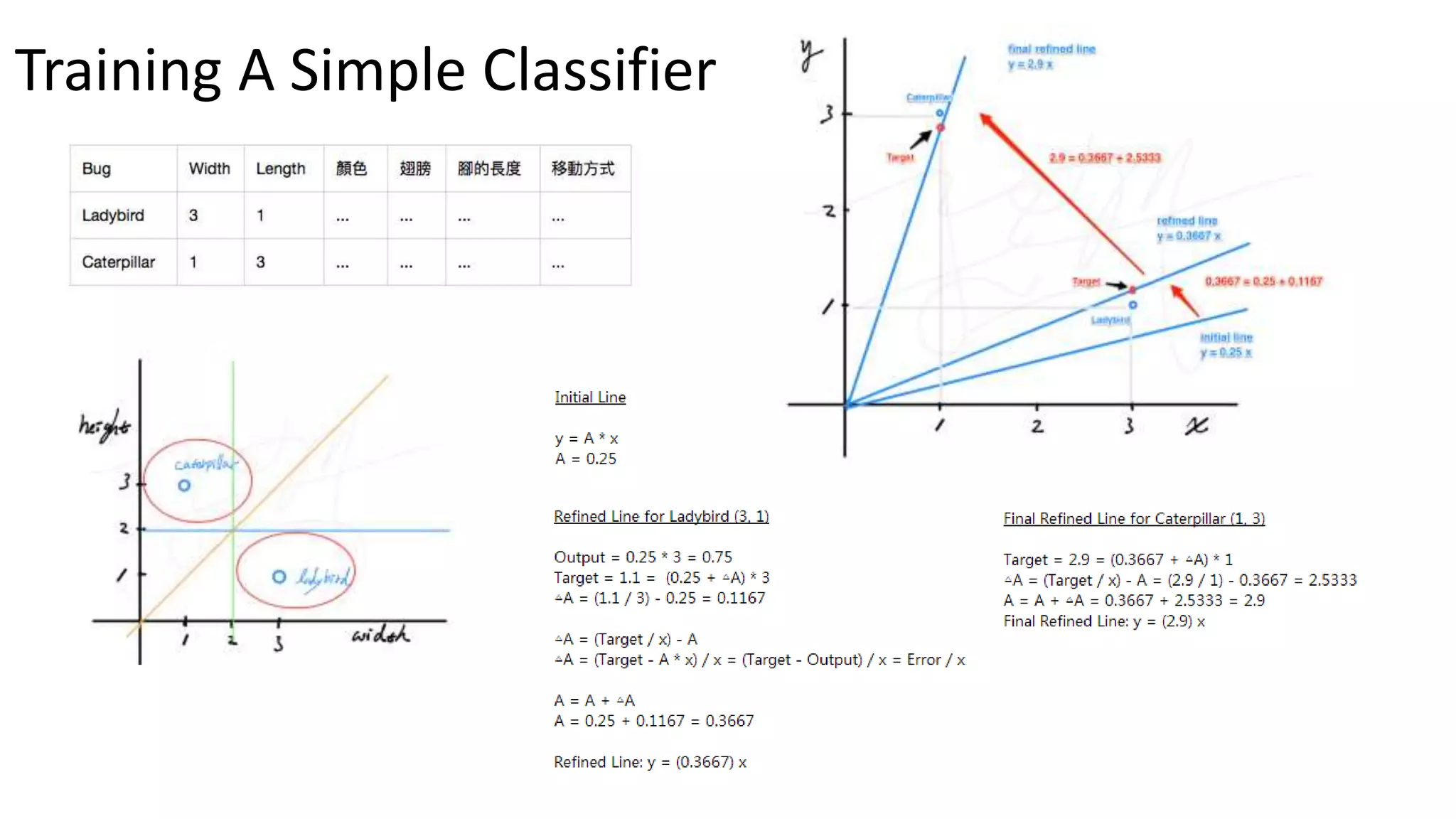

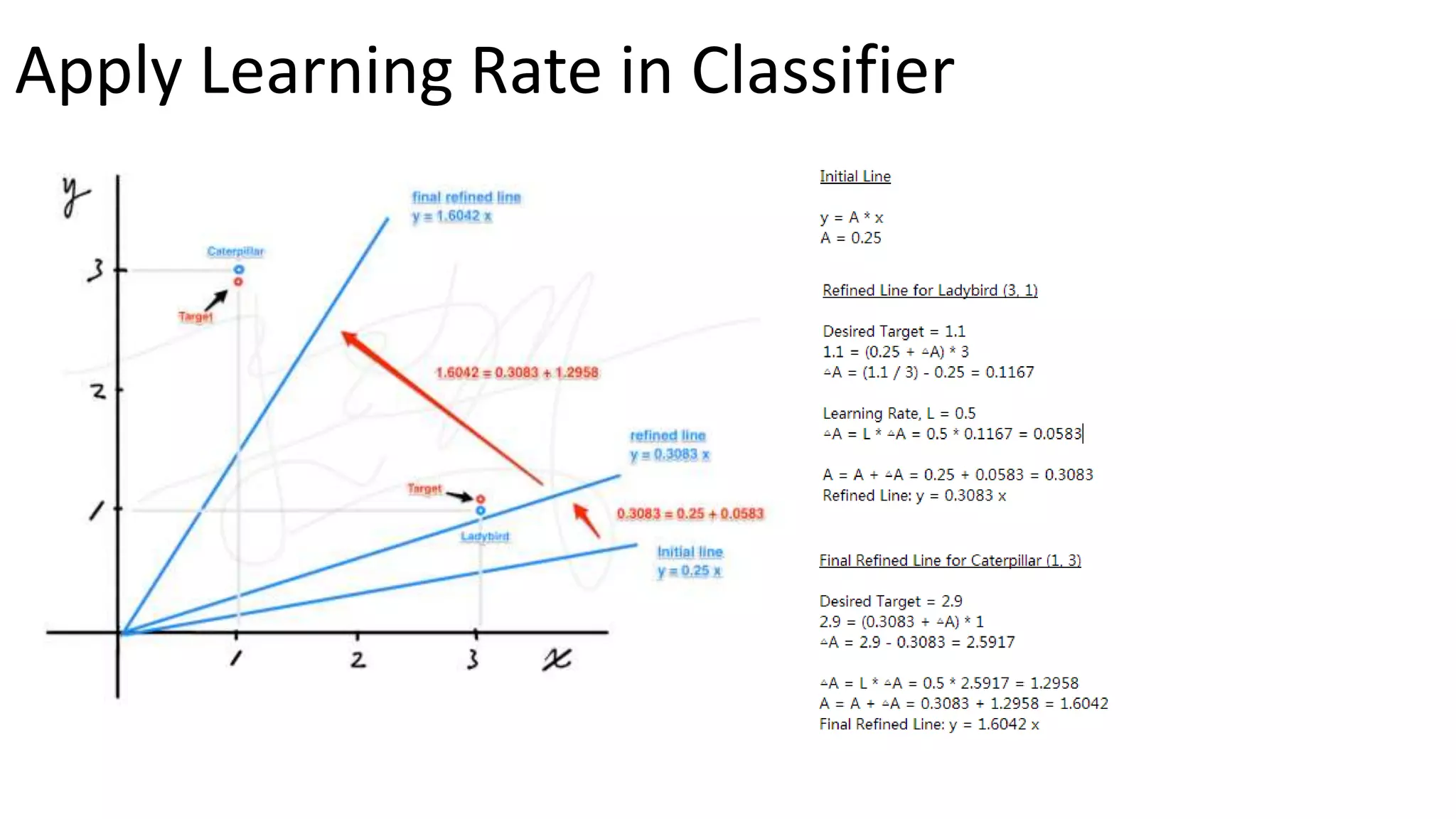

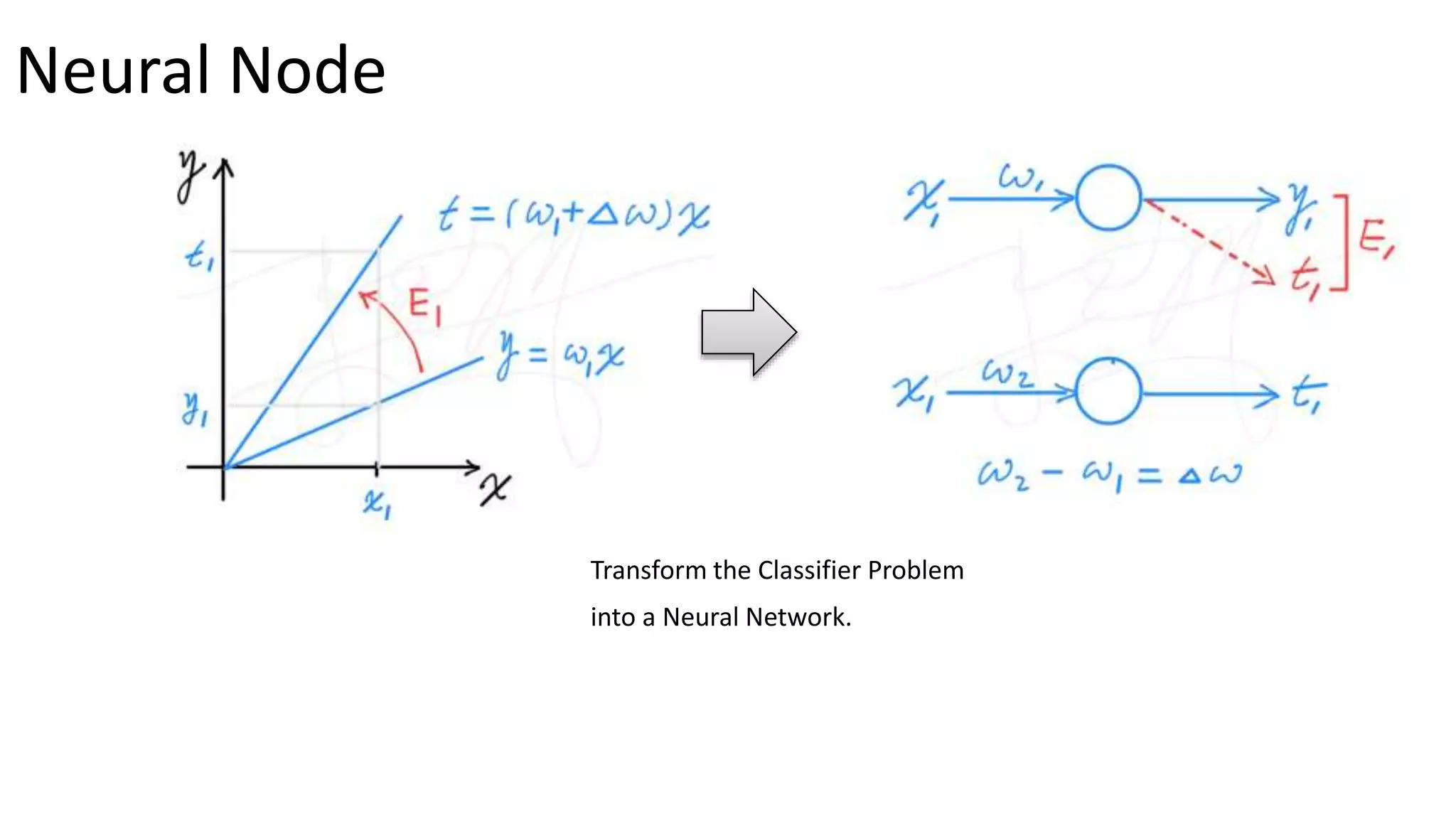

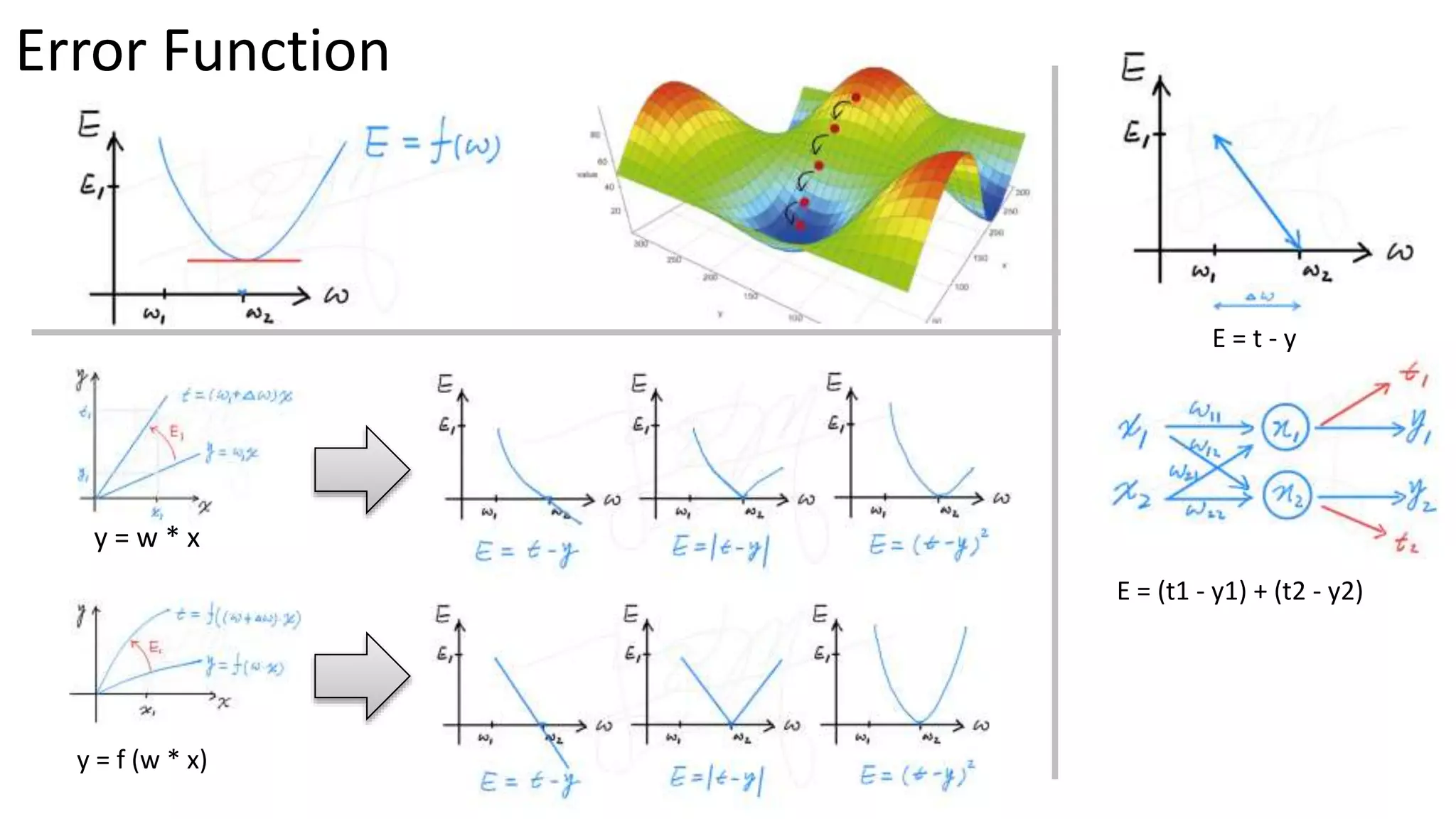

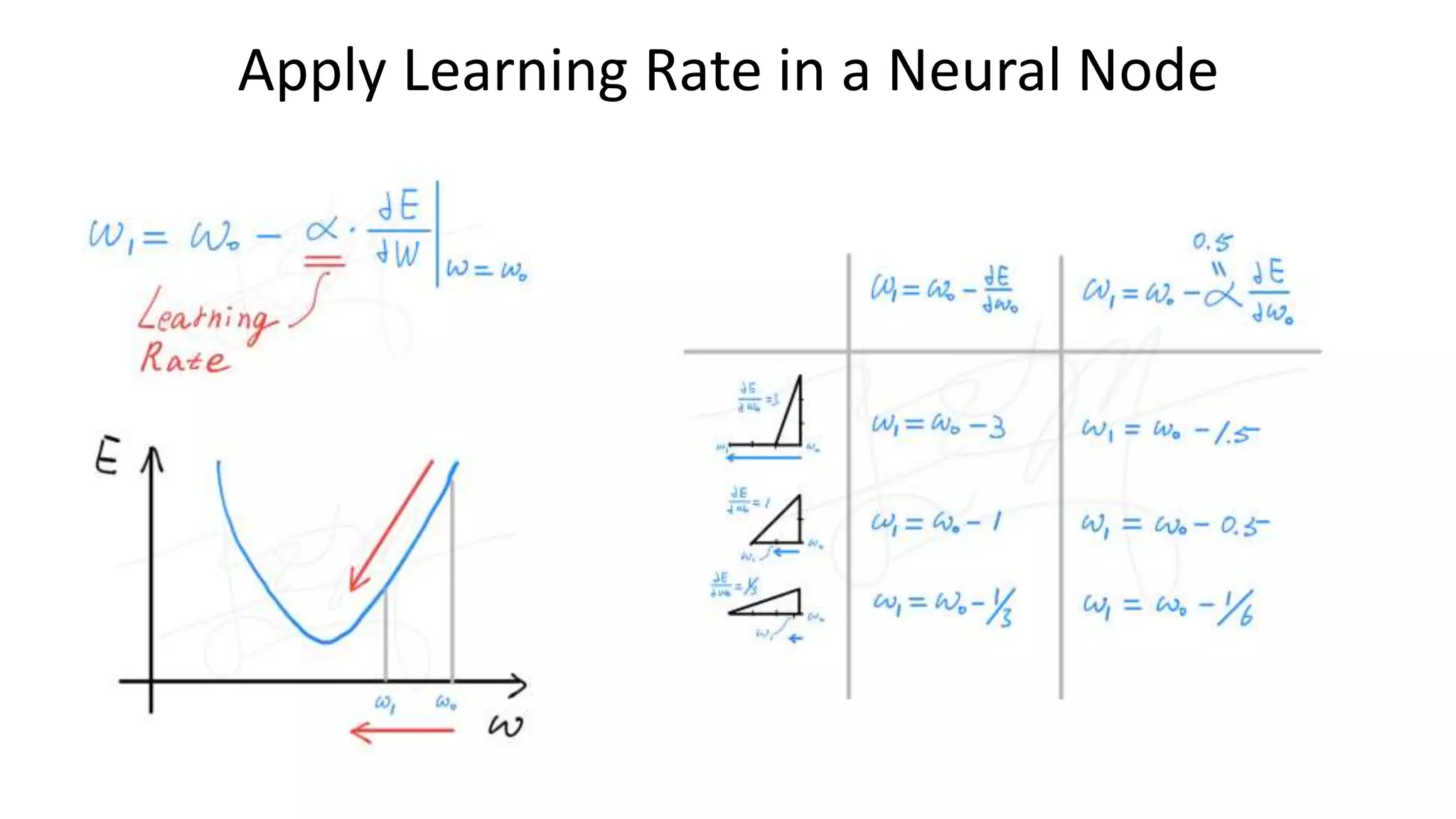

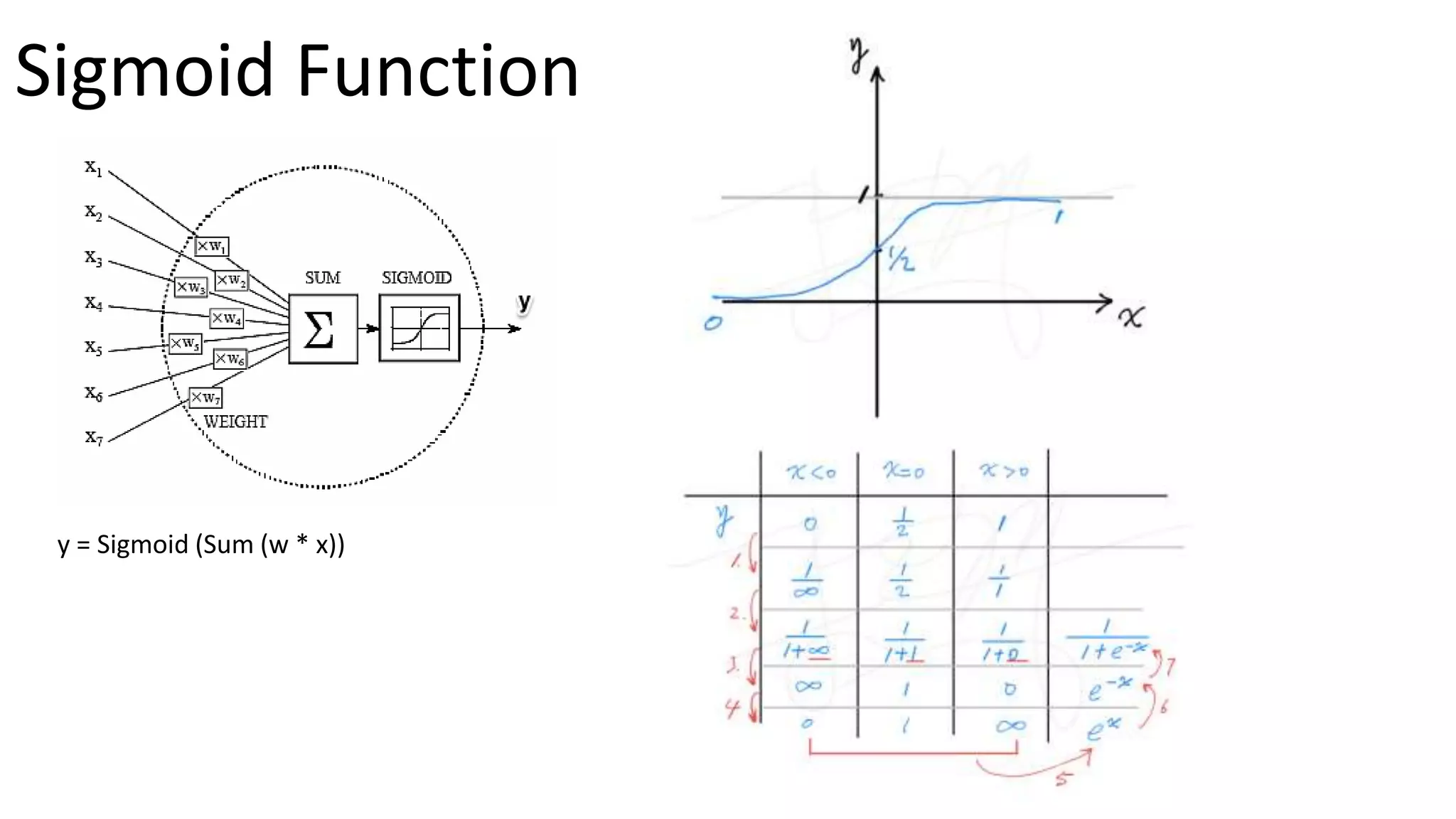

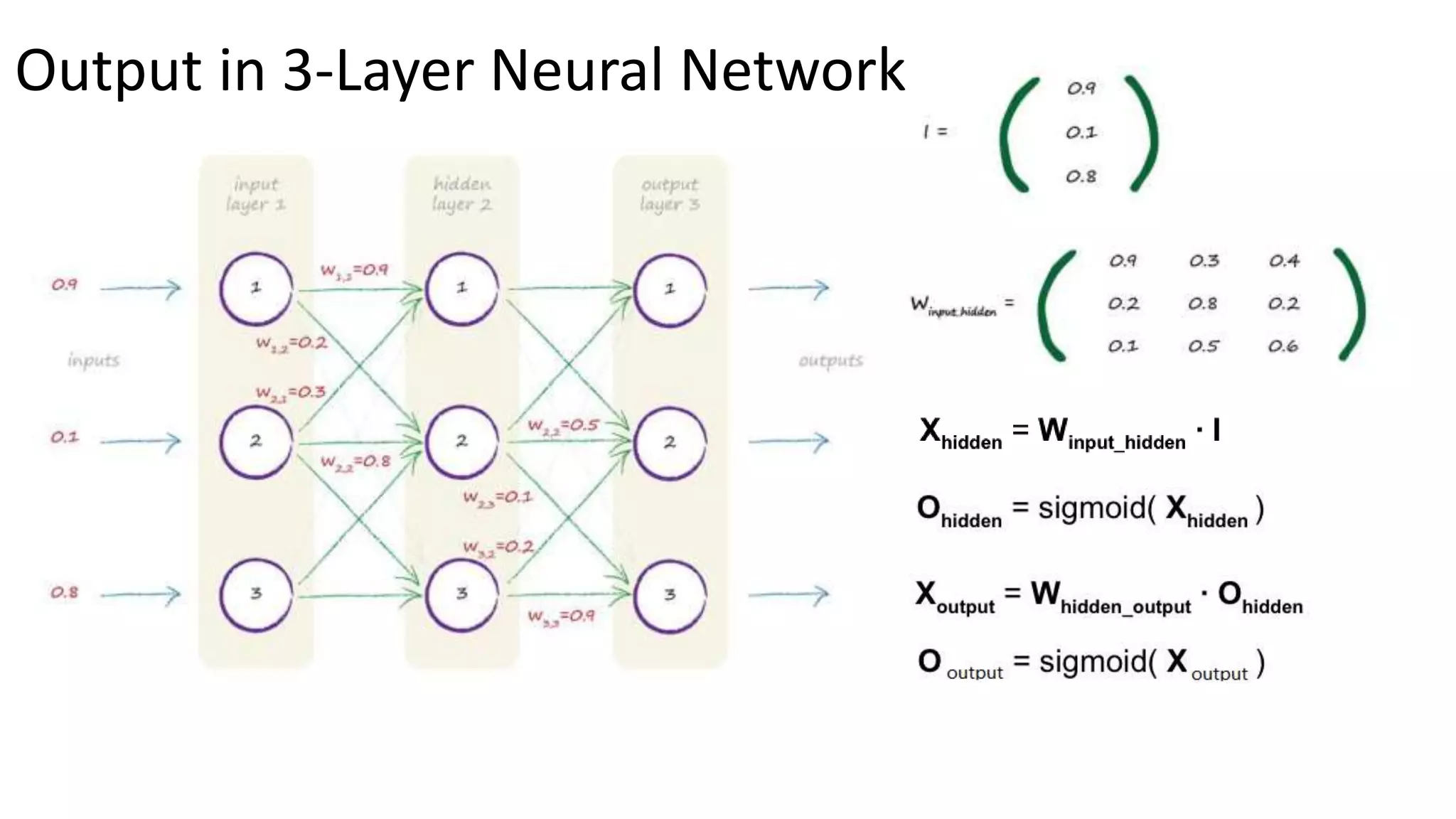

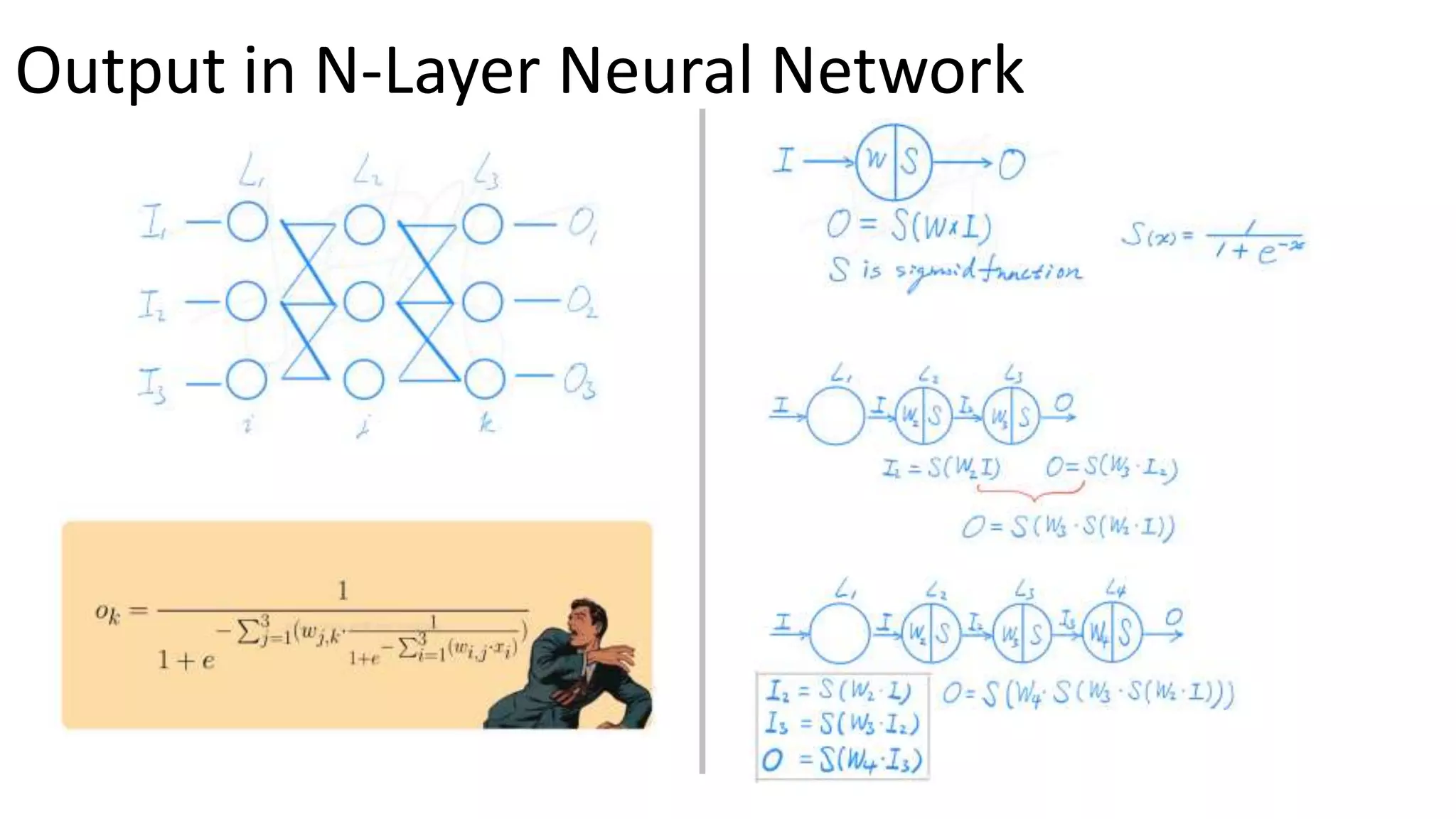

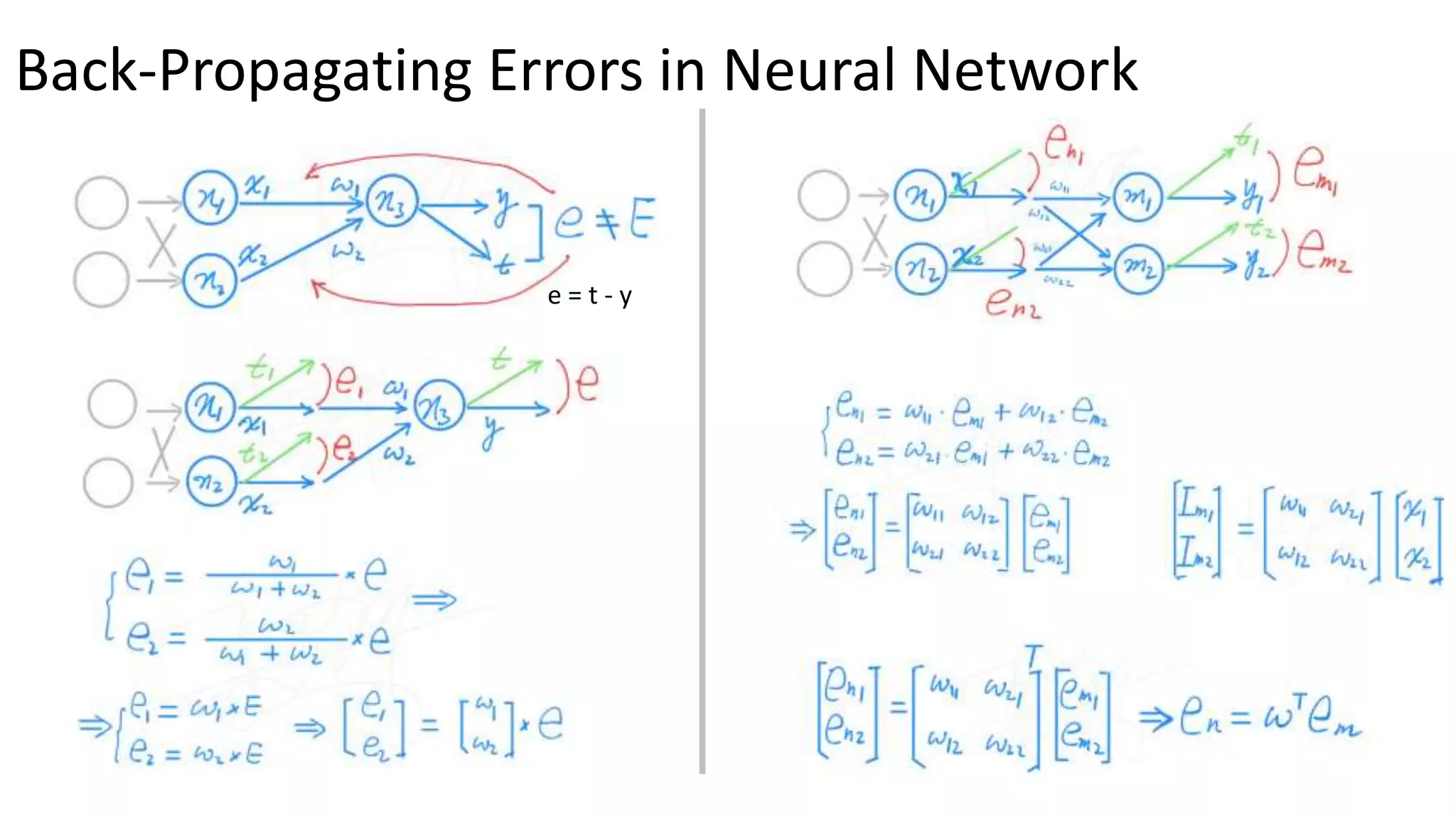

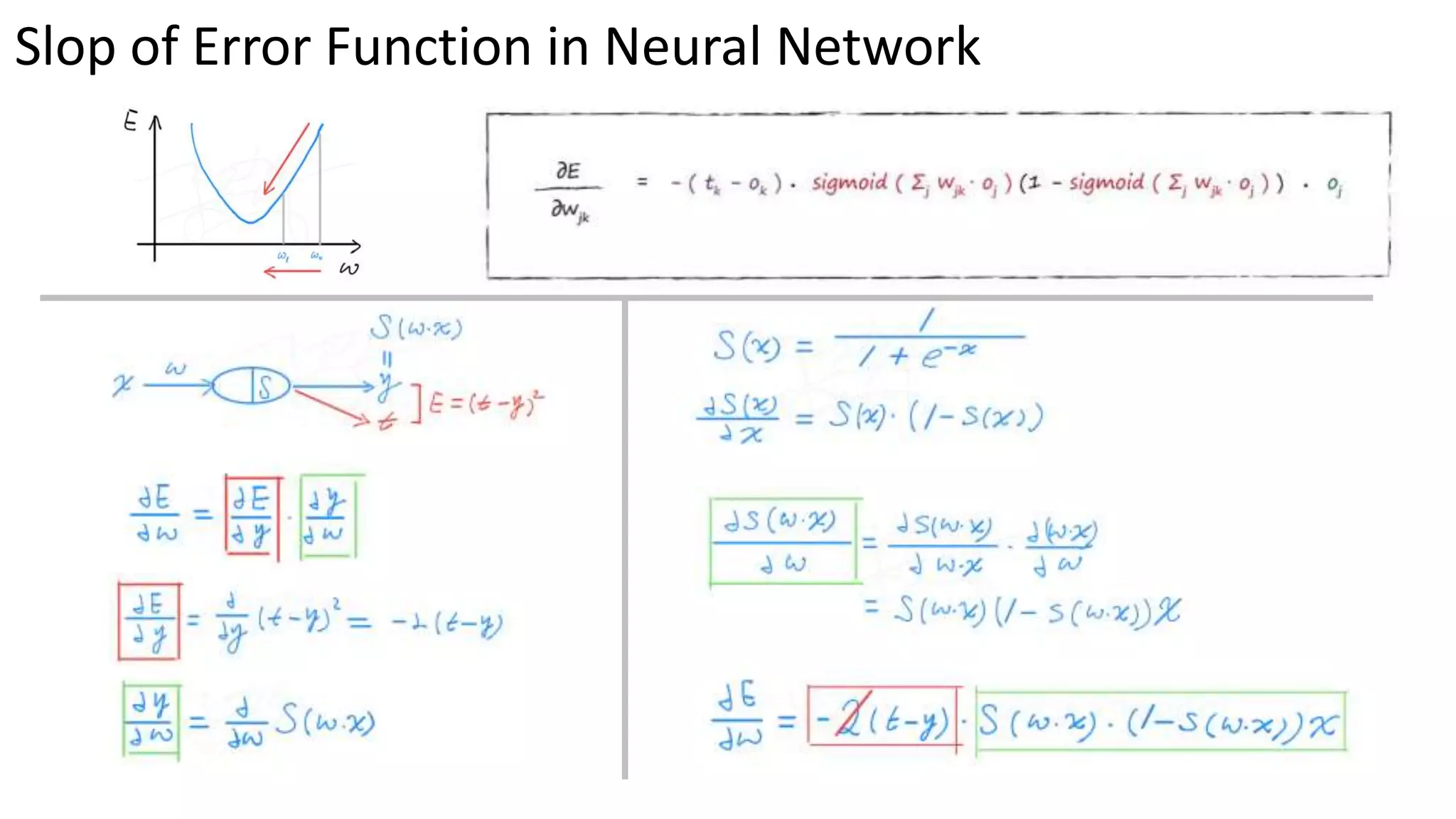

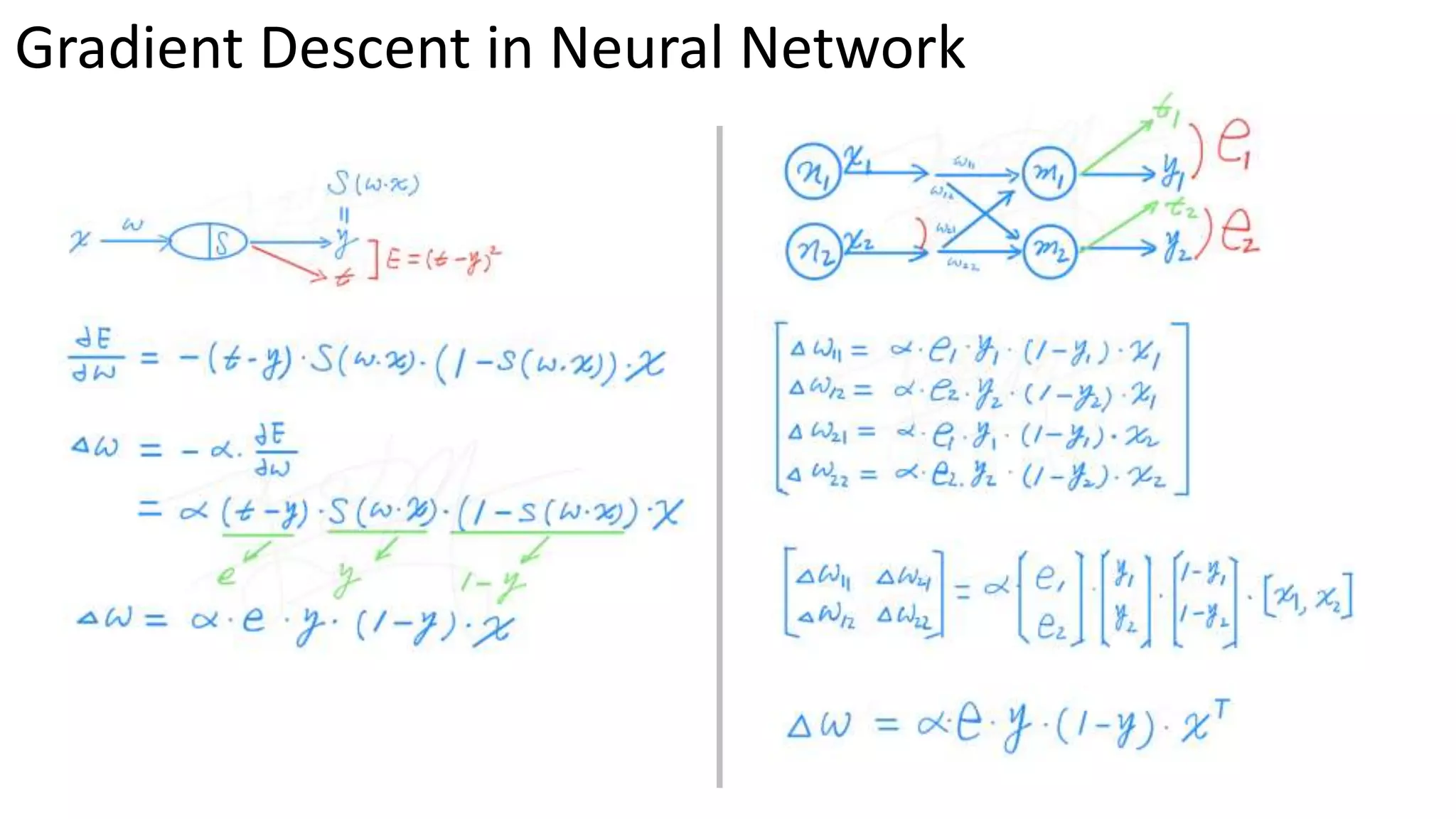

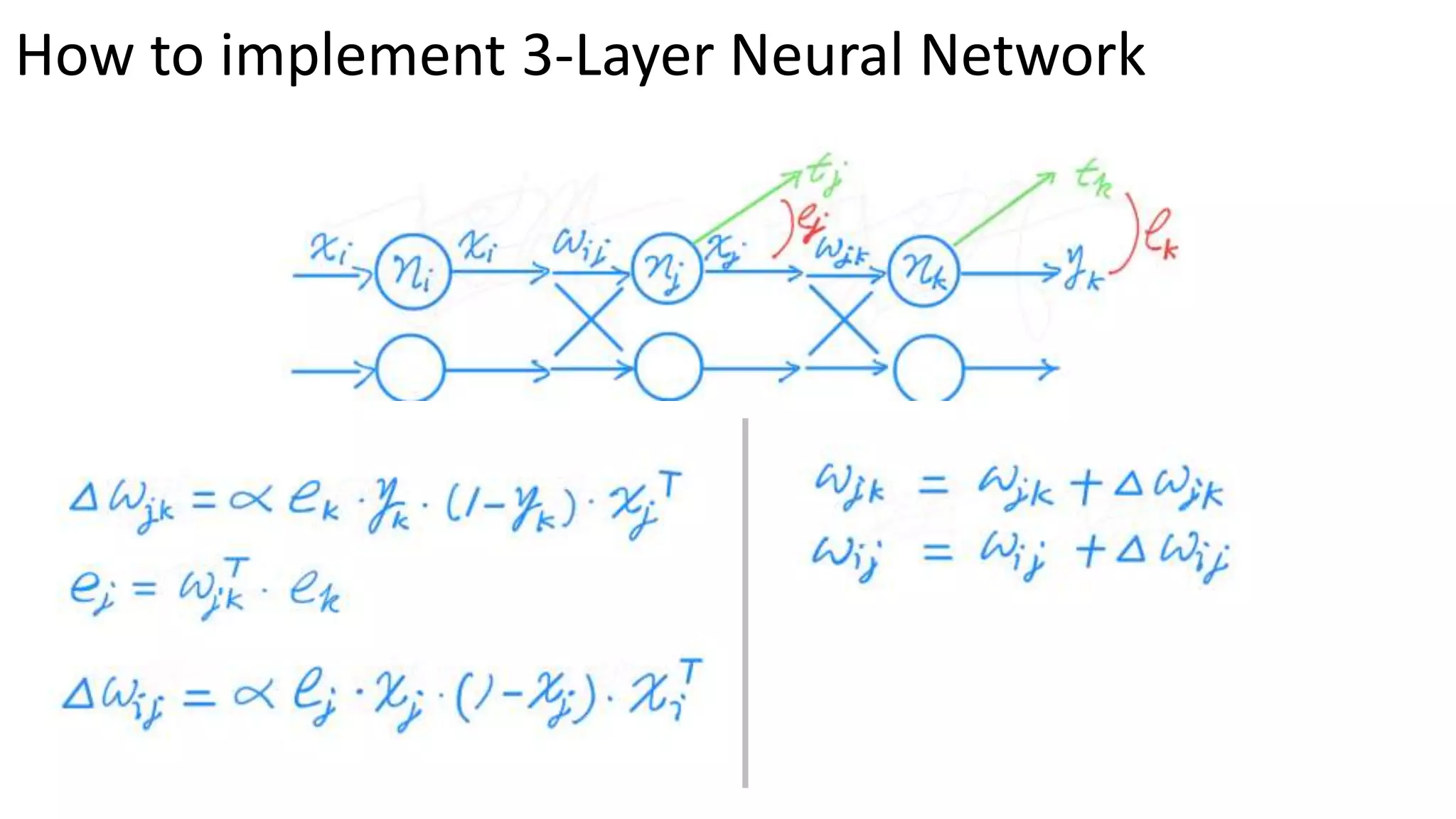

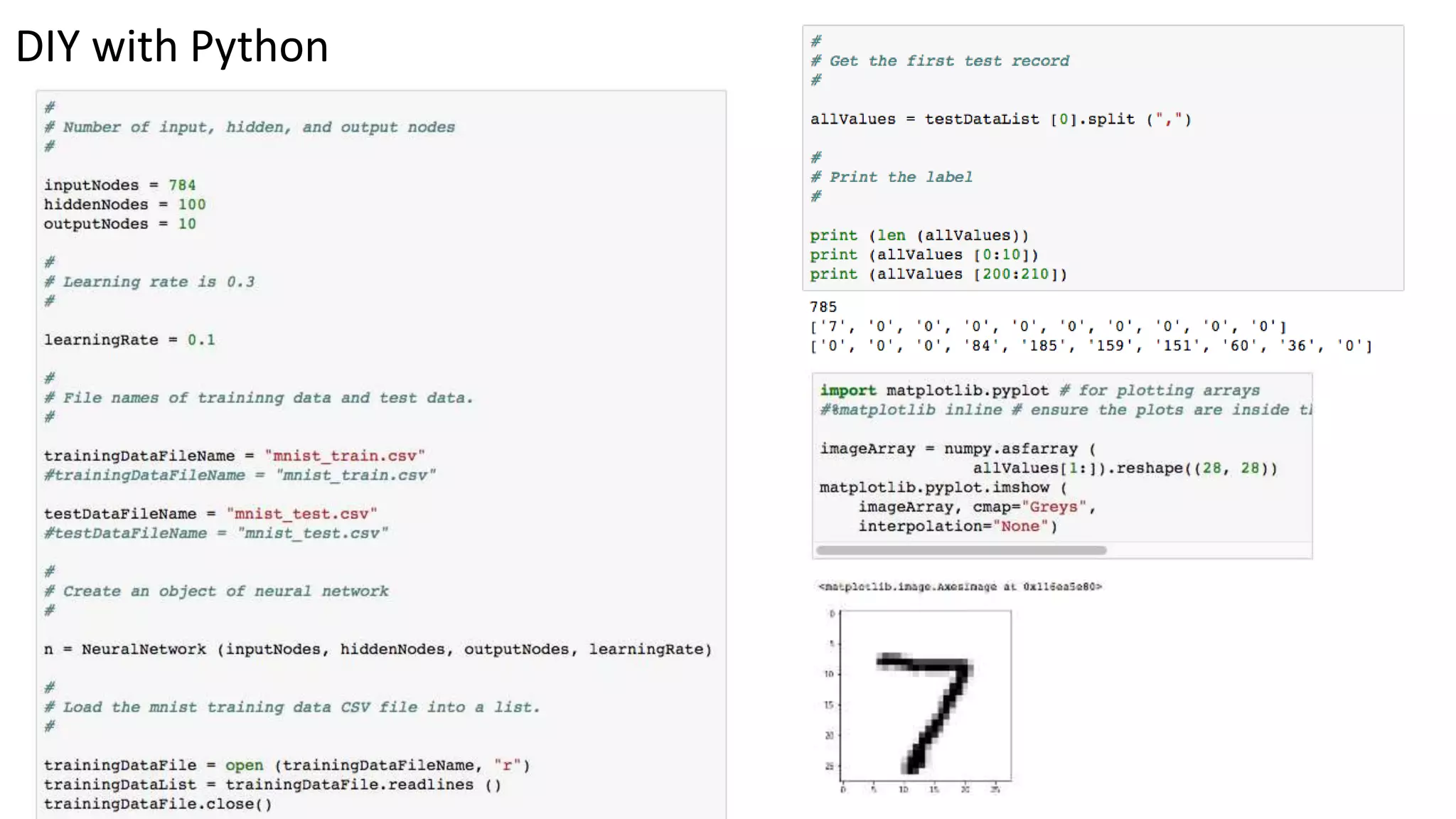

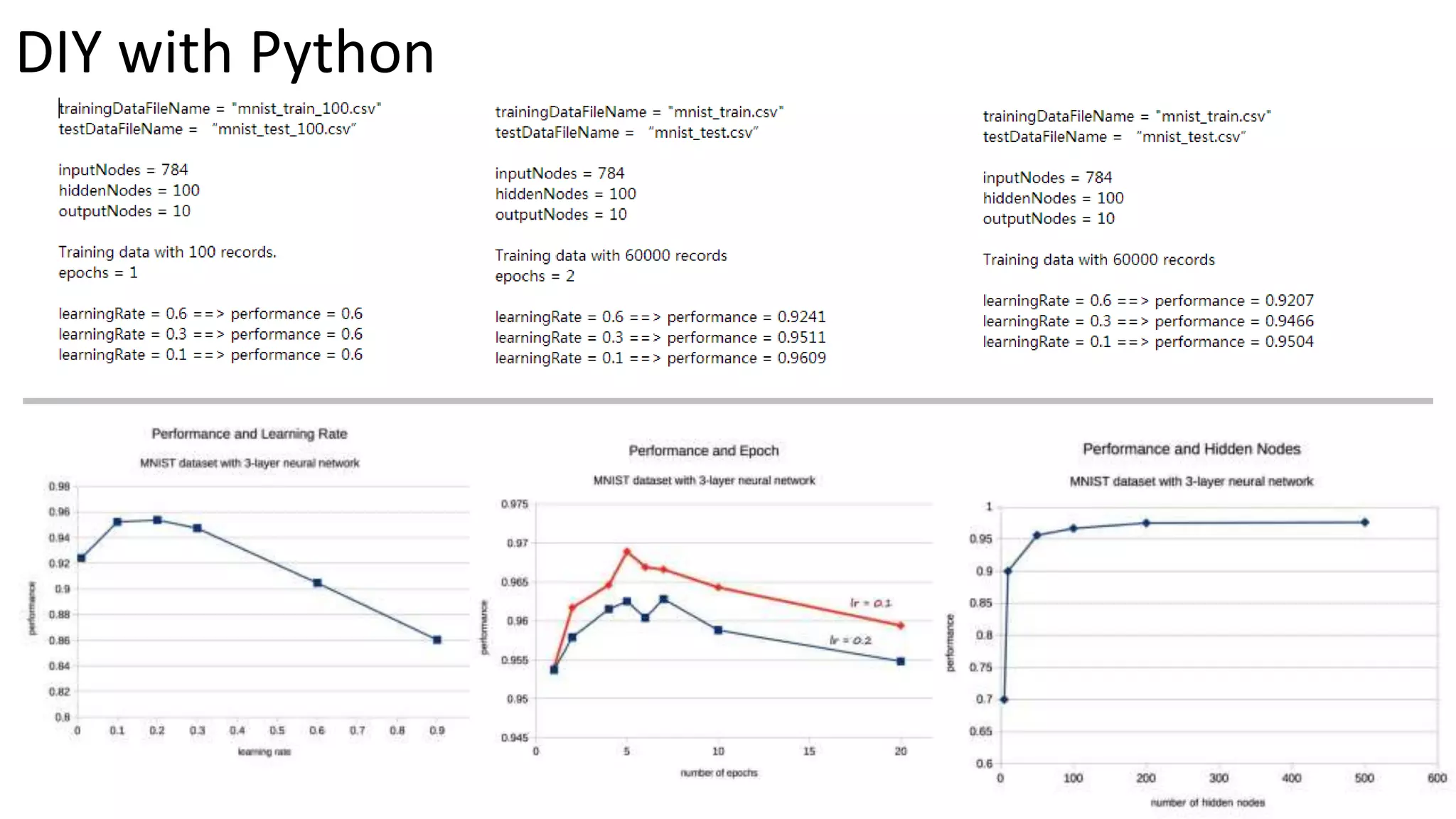

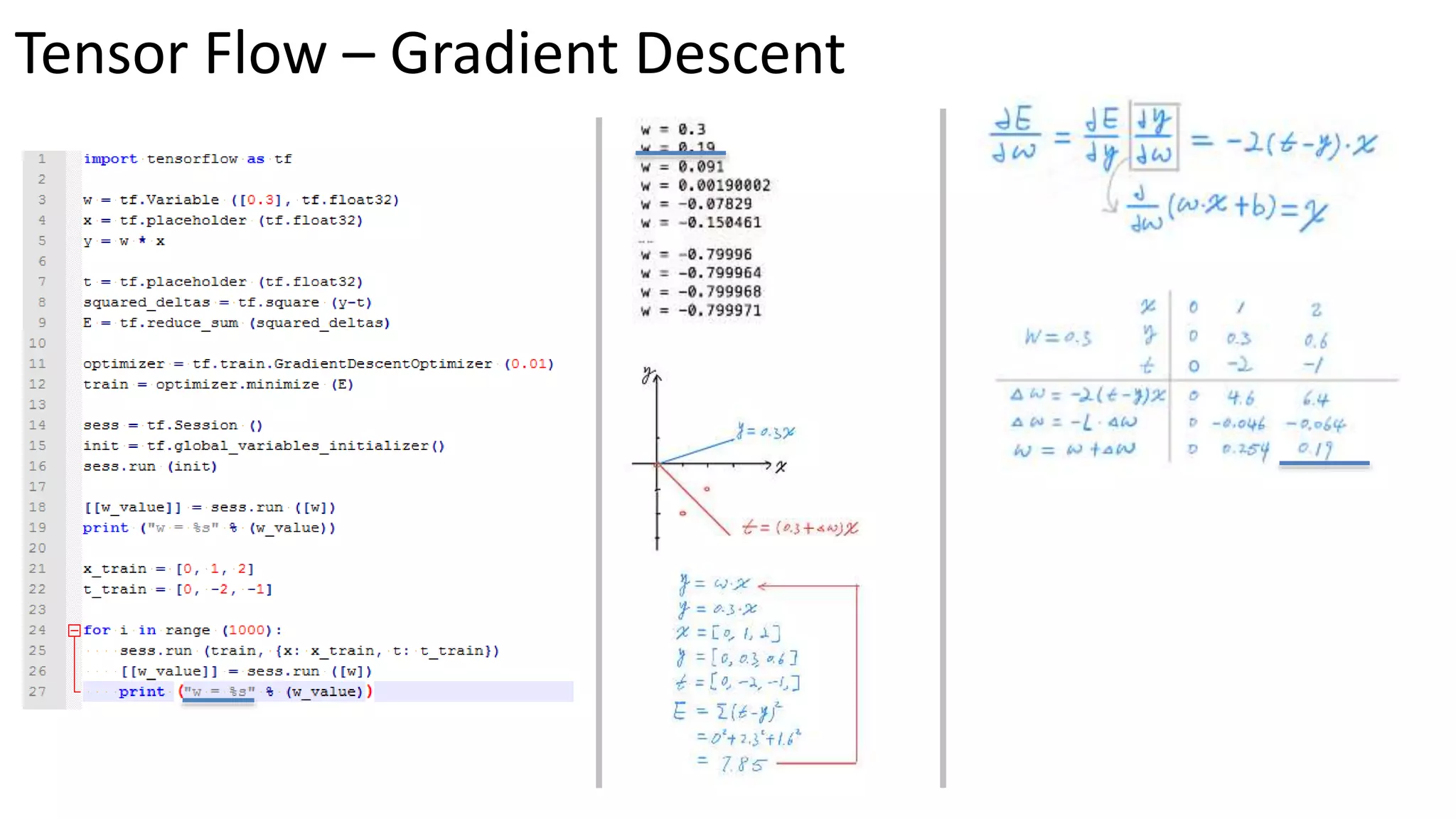

The document discusses the construction and training of neural networks, focusing on simple classifiers and key concepts like learning rates, error functions, and the sigmoid function. It provides an overview of 3-layer and n-layer neural networks, detailing the back-propagation of errors and the process of gradient descent. Additionally, it includes implementation resources for building neural networks using Python and TensorFlow.
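The training cycle the summary describes can be sketched in plain Python. It uses sigmoid nodes, an error function, errors back-propagated from the output layer to the hidden layer, and gradient-descent weight updates scaled by a learning rate. This is a minimal sketch and not the slides' own code. The class name `ThreeLayerNet`, the random weight initialisation, the squared-error function and the omission of bias terms are all assumptions. It also uses only the standard library rather than TensorFlow.

```python
import math
import random


def sigmoid(x):
    """Logistic function: maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


class ThreeLayerNet:
    """Input -> hidden -> output network with sigmoid nodes and no bias terms.

    Assumptions (not taken from the slides): weights start uniform in
    [-0.5, 0.5], the error function is E = 1/2 * sum((target - output)^2),
    and training is plain per-example gradient descent.
    """

    def __init__(self, n_in, n_hidden, n_out, learning_rate=0.3, seed=0):
        rng = random.Random(seed)
        self.lr = learning_rate
        # w_ih[j][i] links input node i to hidden node j.
        self.w_ih = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                     for _ in range(n_hidden)]
        # w_ho[k][j] links hidden node j to output node k.
        self.w_ho = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
                     for _ in range(n_out)]

    @staticmethod
    def _layer(weights, inputs):
        # Each node sums its weighted inputs, then applies the sigmoid.
        return [sigmoid(sum(w * x for w, x in zip(row, inputs))) for row in weights]

    def query(self, inputs):
        """Forward pass only: returns the output-layer activations."""
        return self._layer(self.w_ho, self._layer(self.w_ih, inputs))

    def train(self, inputs, targets):
        """One gradient-descent step; returns the sum of squared errors before the step."""
        hidden = self._layer(self.w_ih, inputs)
        outputs = self._layer(self.w_ho, hidden)
        out_err = [t - o for t, o in zip(targets, outputs)]
        # Output deltas: error times the sigmoid slope o * (1 - o).
        d_out = [e * o * (1.0 - o) for e, o in zip(out_err, outputs)]
        # Back-propagate: each hidden node collects the output deltas weighted
        # by the links it feeds (computed before any weight is changed).
        hid_err = [sum(self.w_ho[k][j] * d_out[k] for k in range(len(d_out)))
                   for j in range(len(hidden))]
        d_hid = [e * h * (1.0 - h) for e, h in zip(hid_err, hidden)]
        # Weight update: w += learning_rate * delta * (signal entering the link).
        for k, row in enumerate(self.w_ho):
            for j in range(len(row)):
                row[j] += self.lr * d_out[k] * hidden[j]
        for j, row in enumerate(self.w_ih):
            for i in range(len(row)):
                row[i] += self.lr * d_hid[j] * inputs[i]
        return sum(e * e for e in out_err)
```

For example, repeatedly calling `net.train([1.0, 0.0], [0.9])` on `ThreeLayerNet(2, 3, 1, learning_rate=0.5)` should shrink the returned error step by step. The hidden-layer deltas here include the sigmoid slope, so each update follows the exact gradient of the assumed squared error. A larger learning rate takes bigger steps but risks overshooting the minimum.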