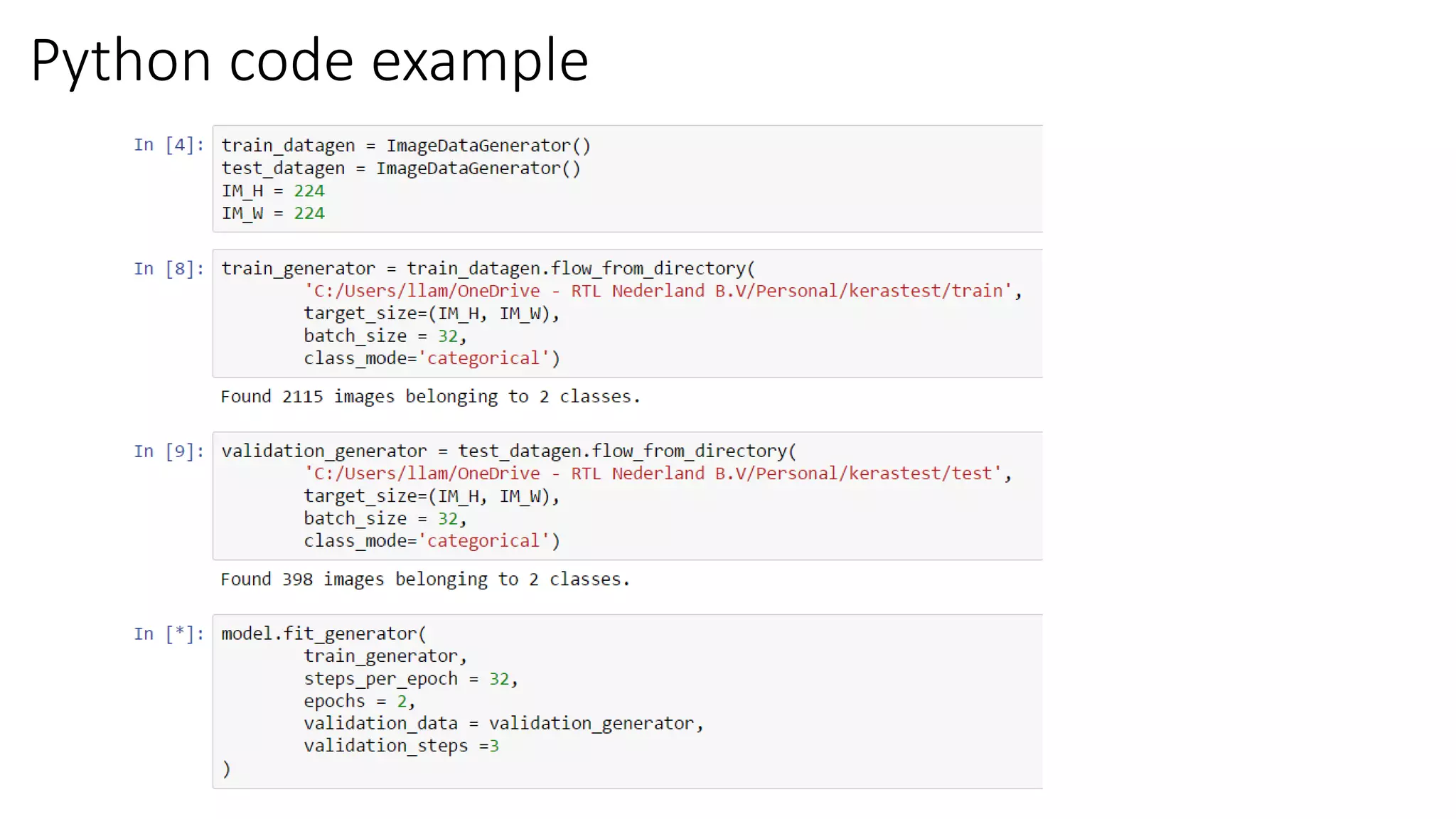

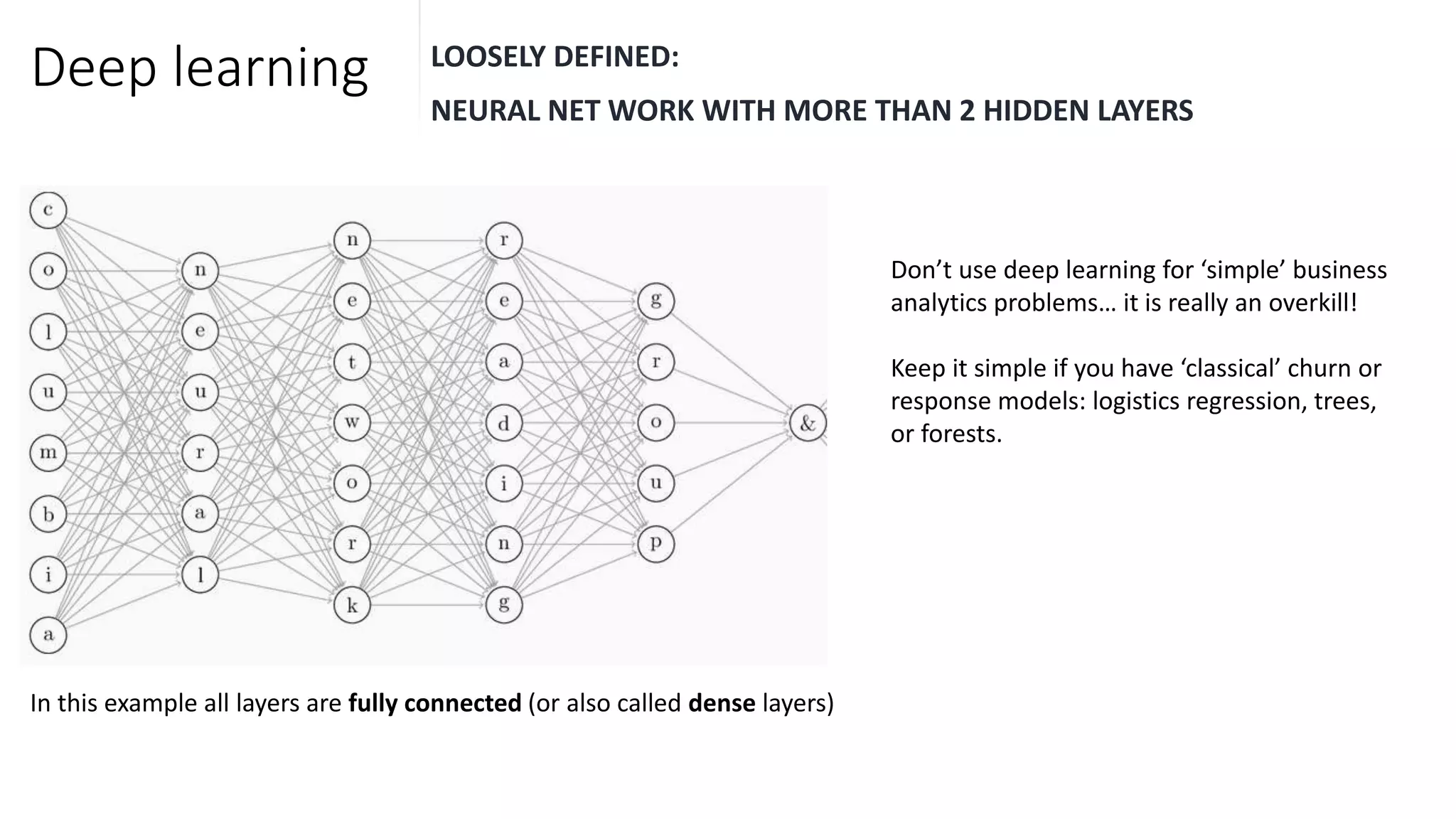

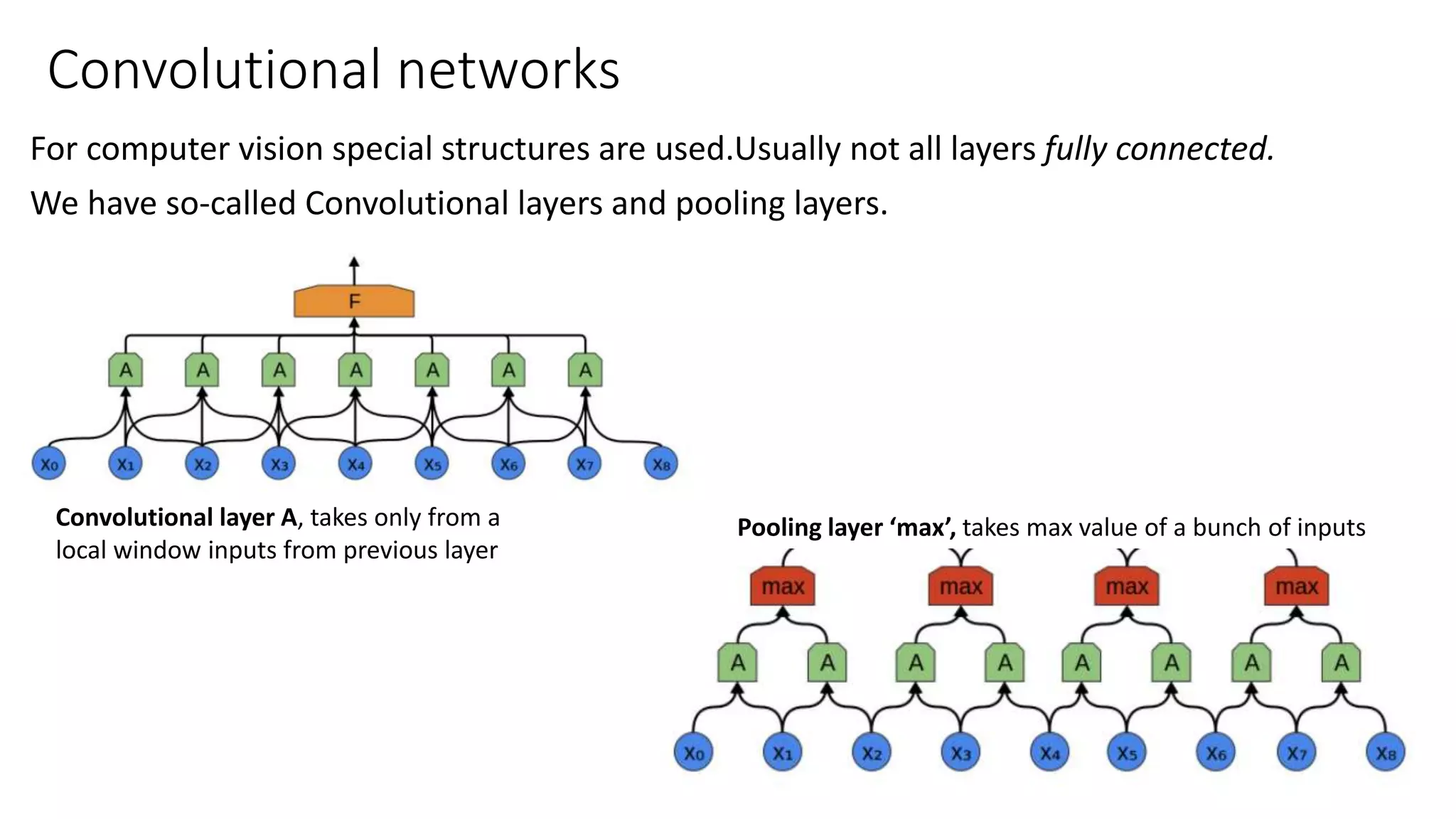

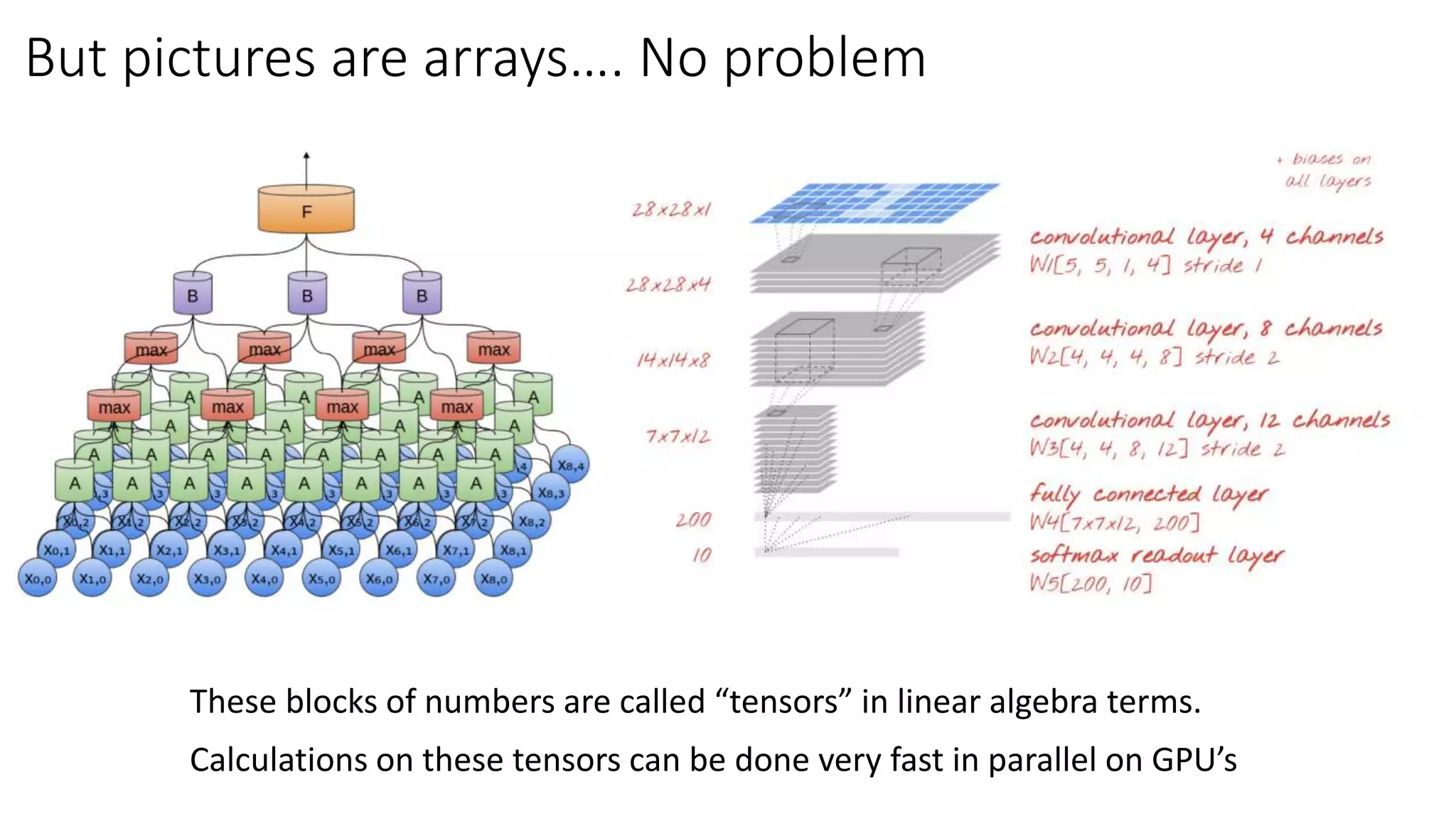

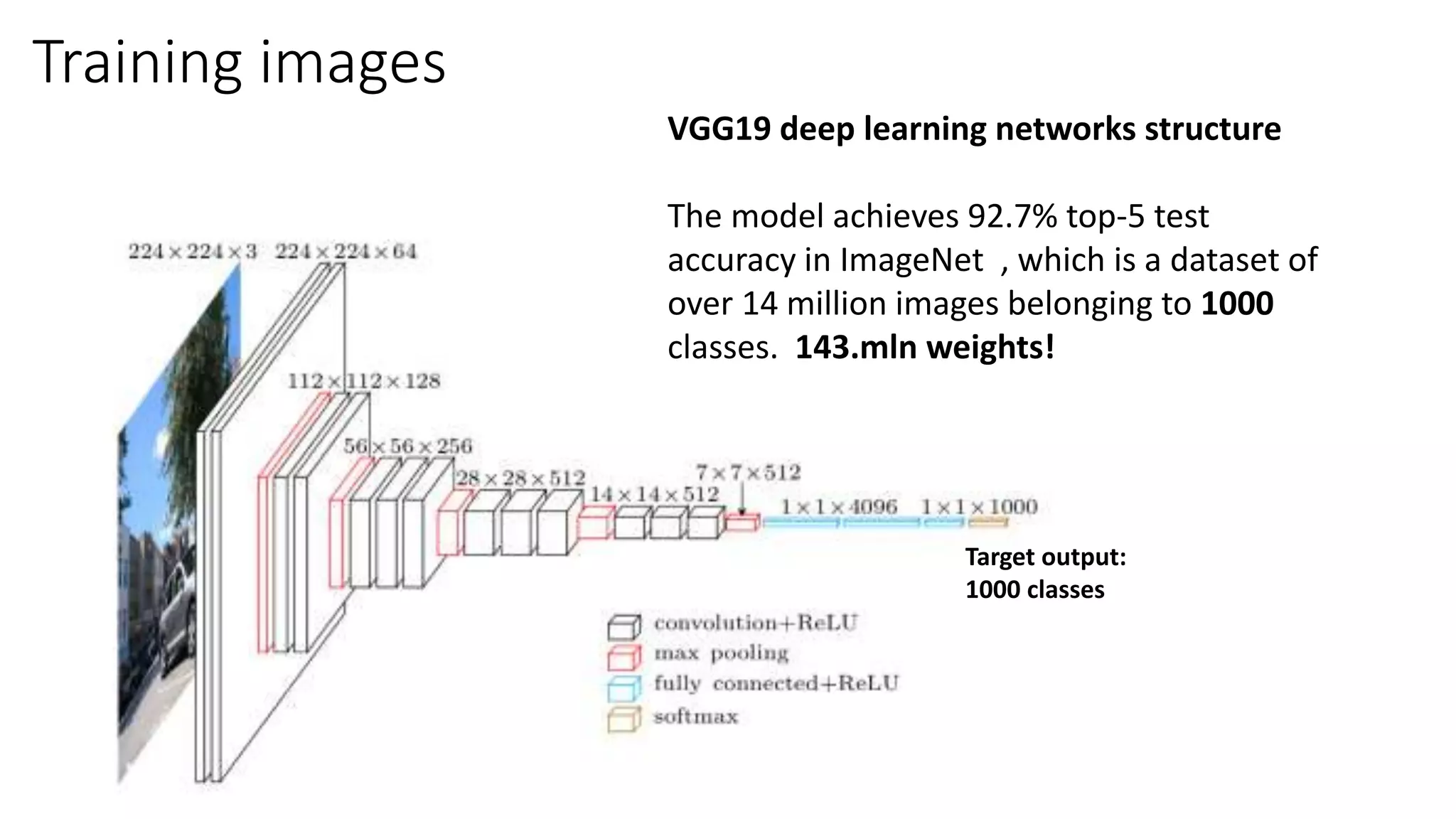

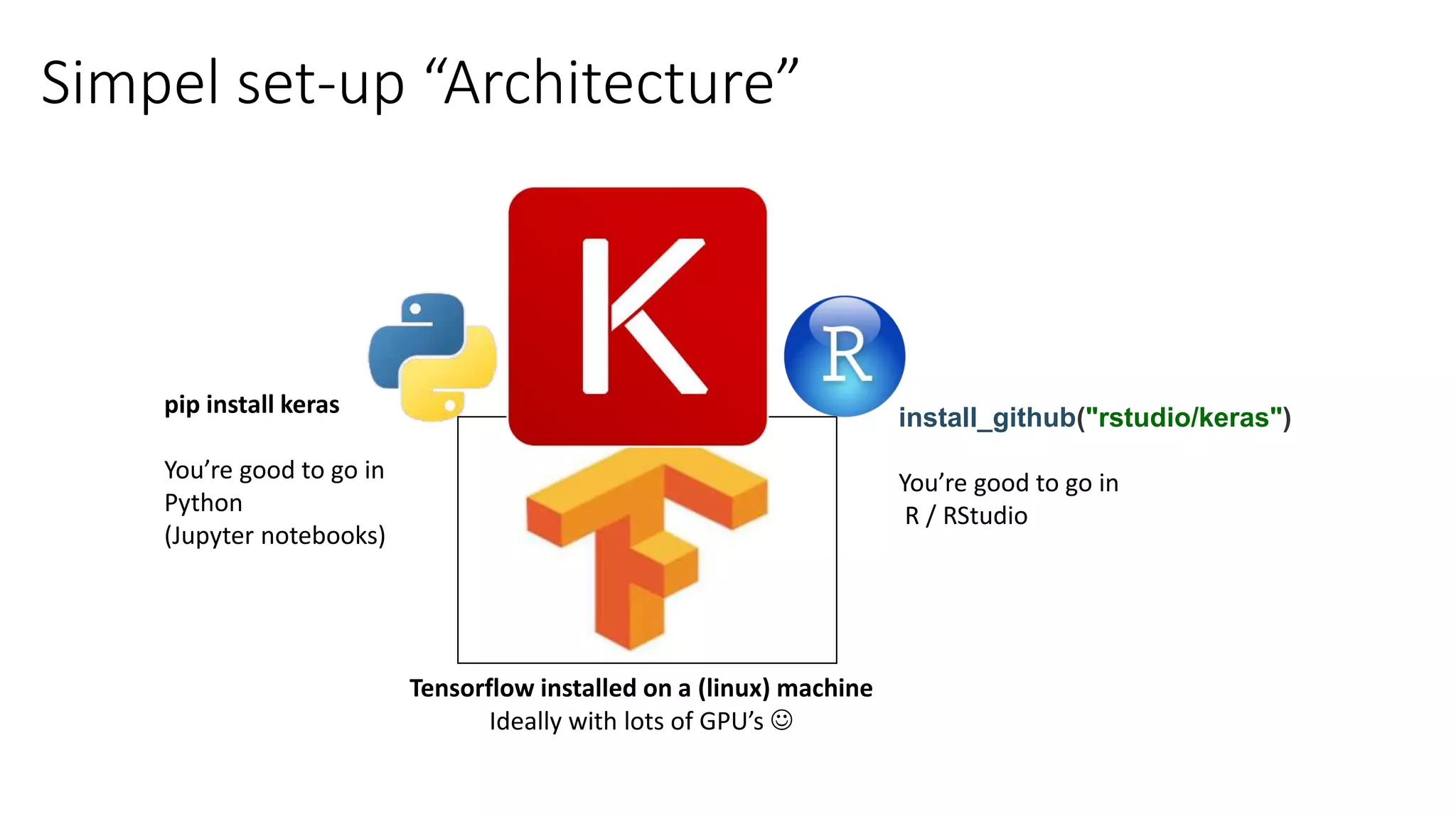

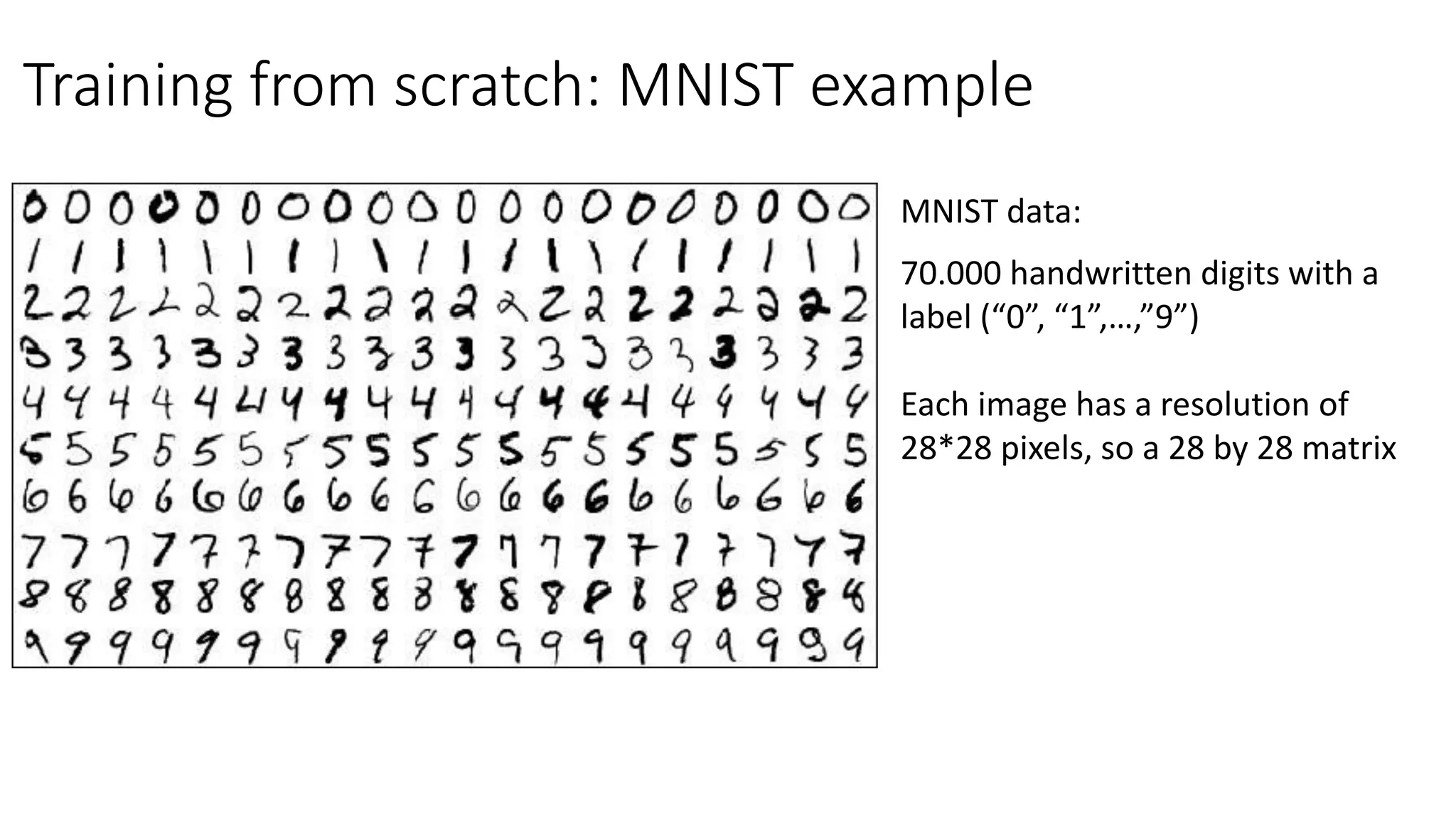

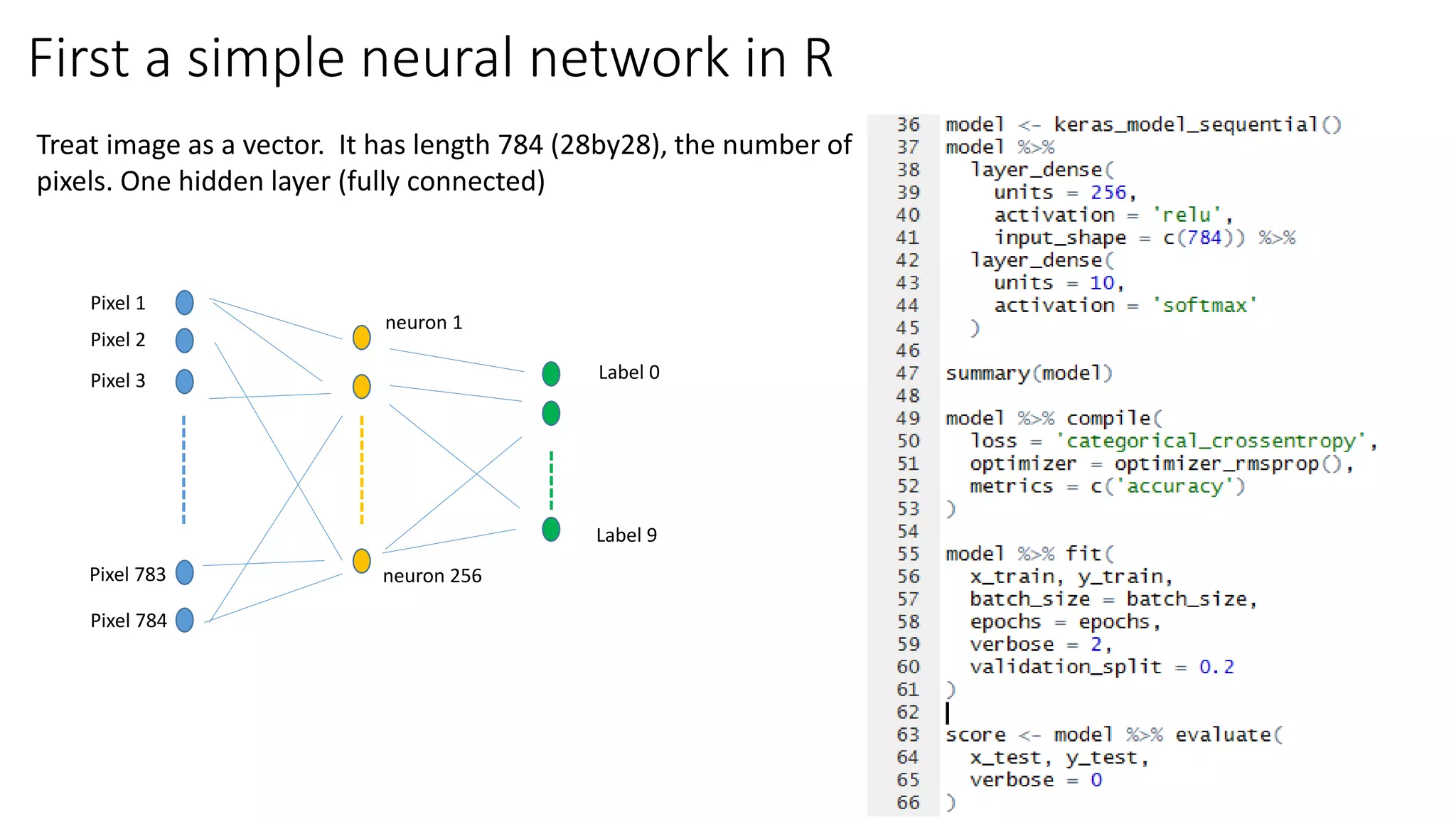

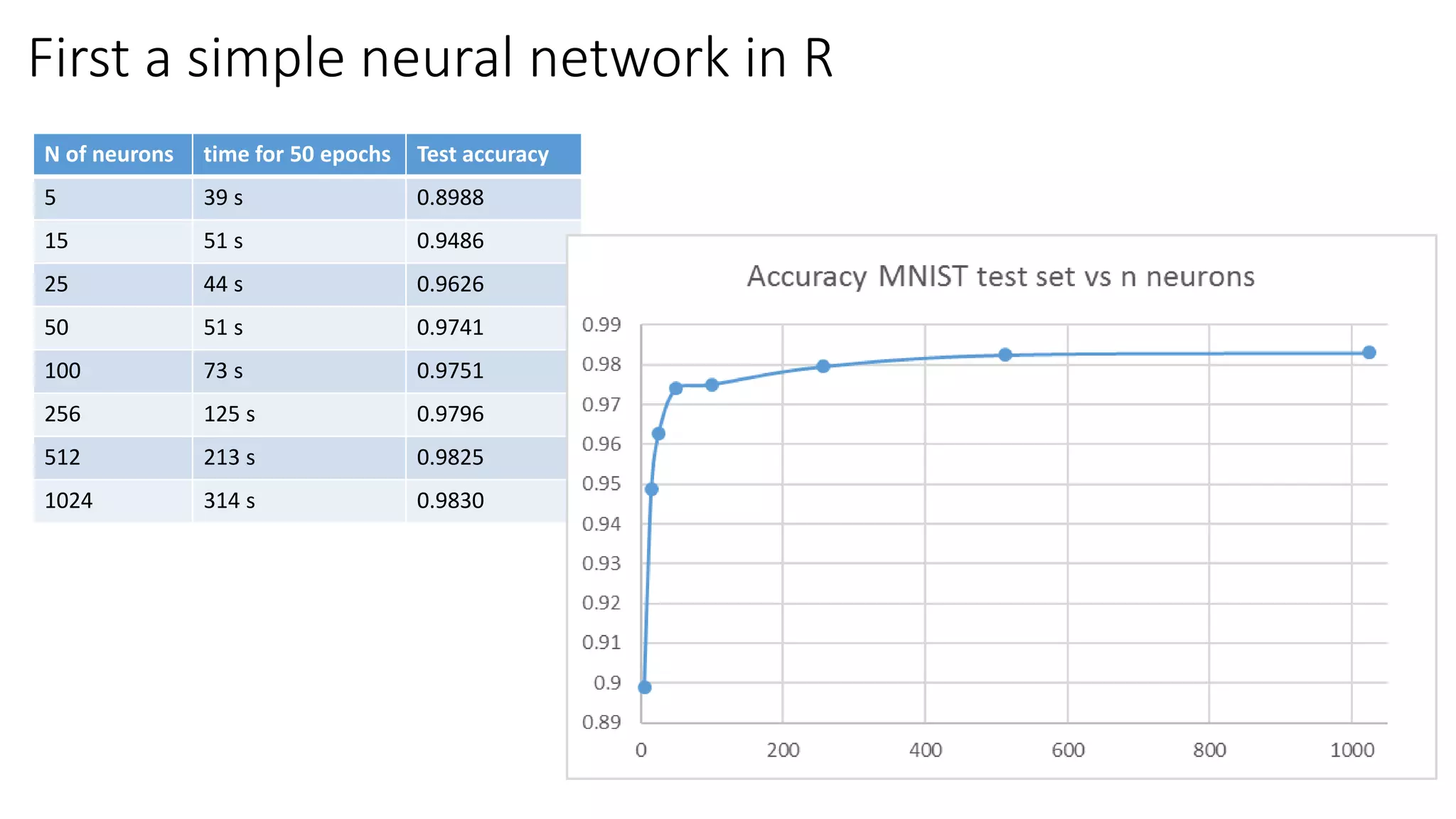

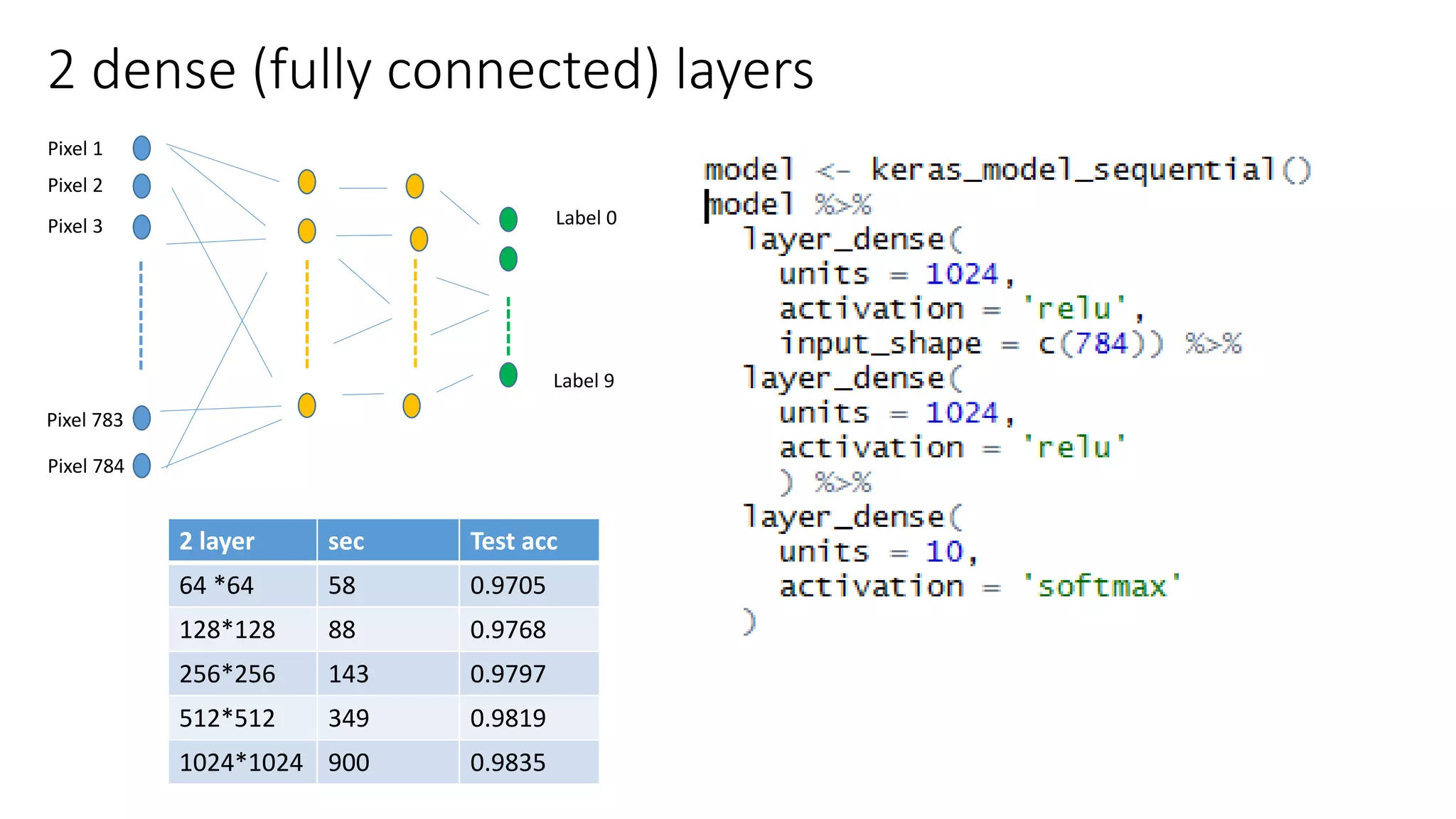

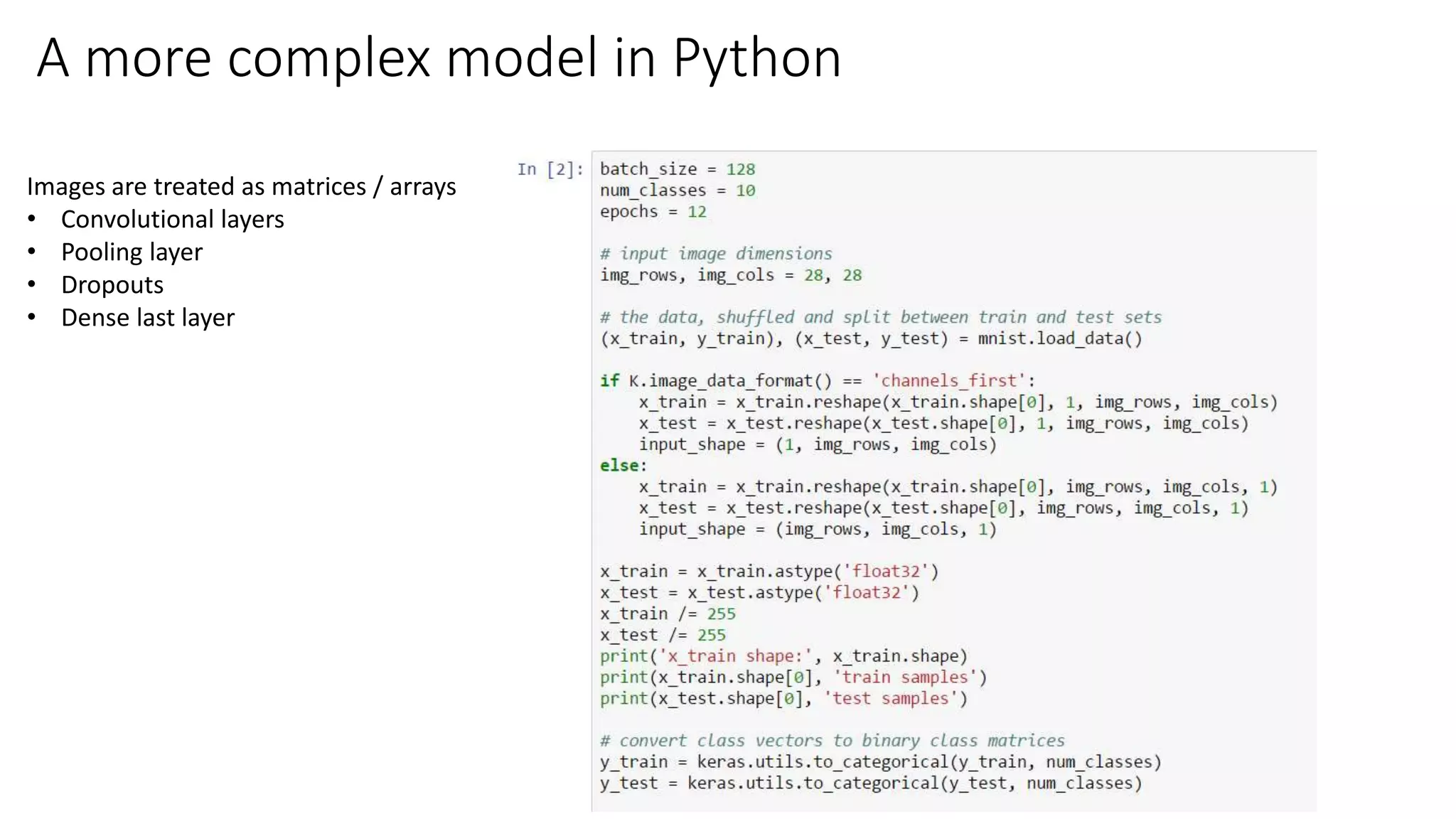

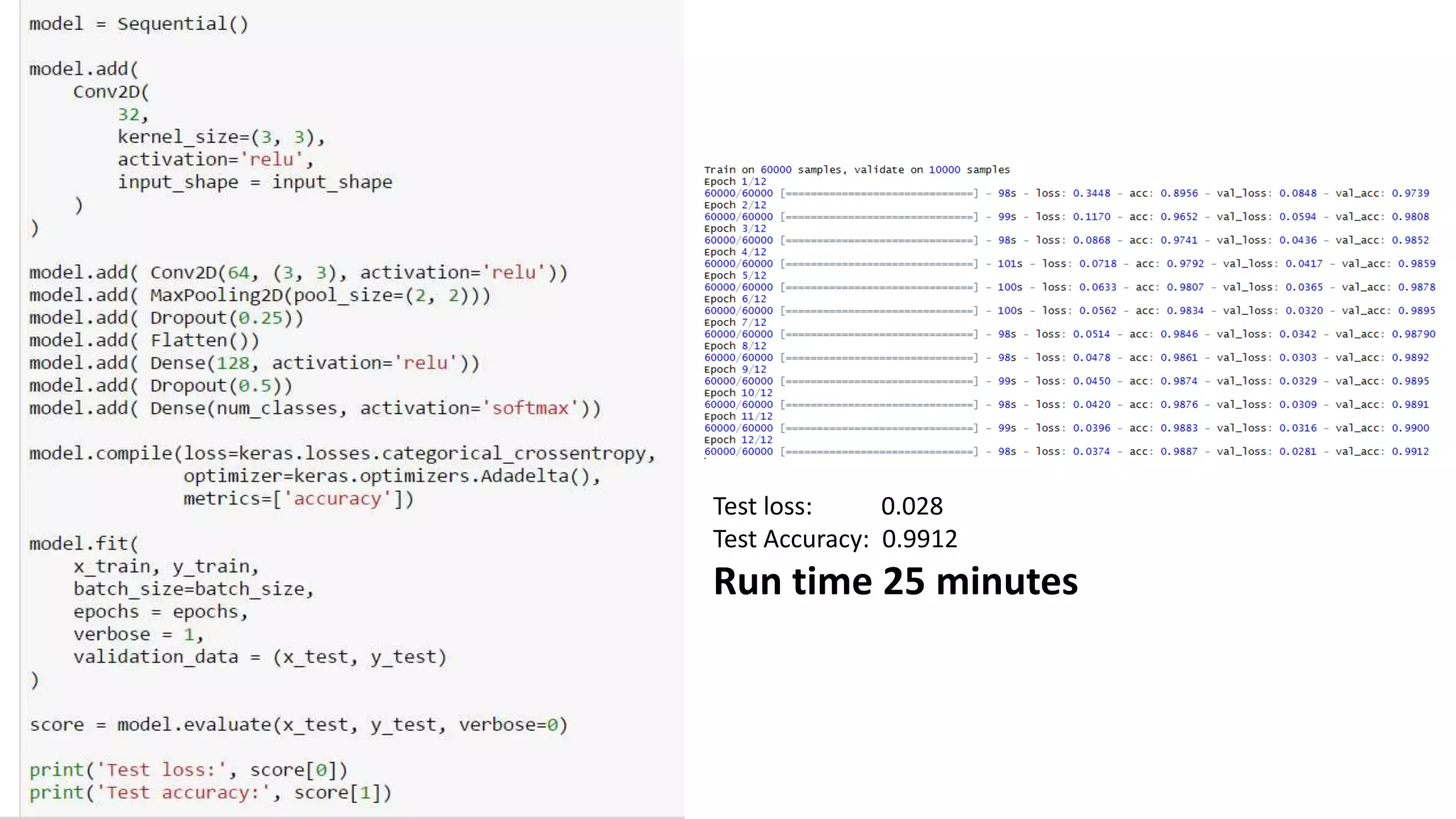

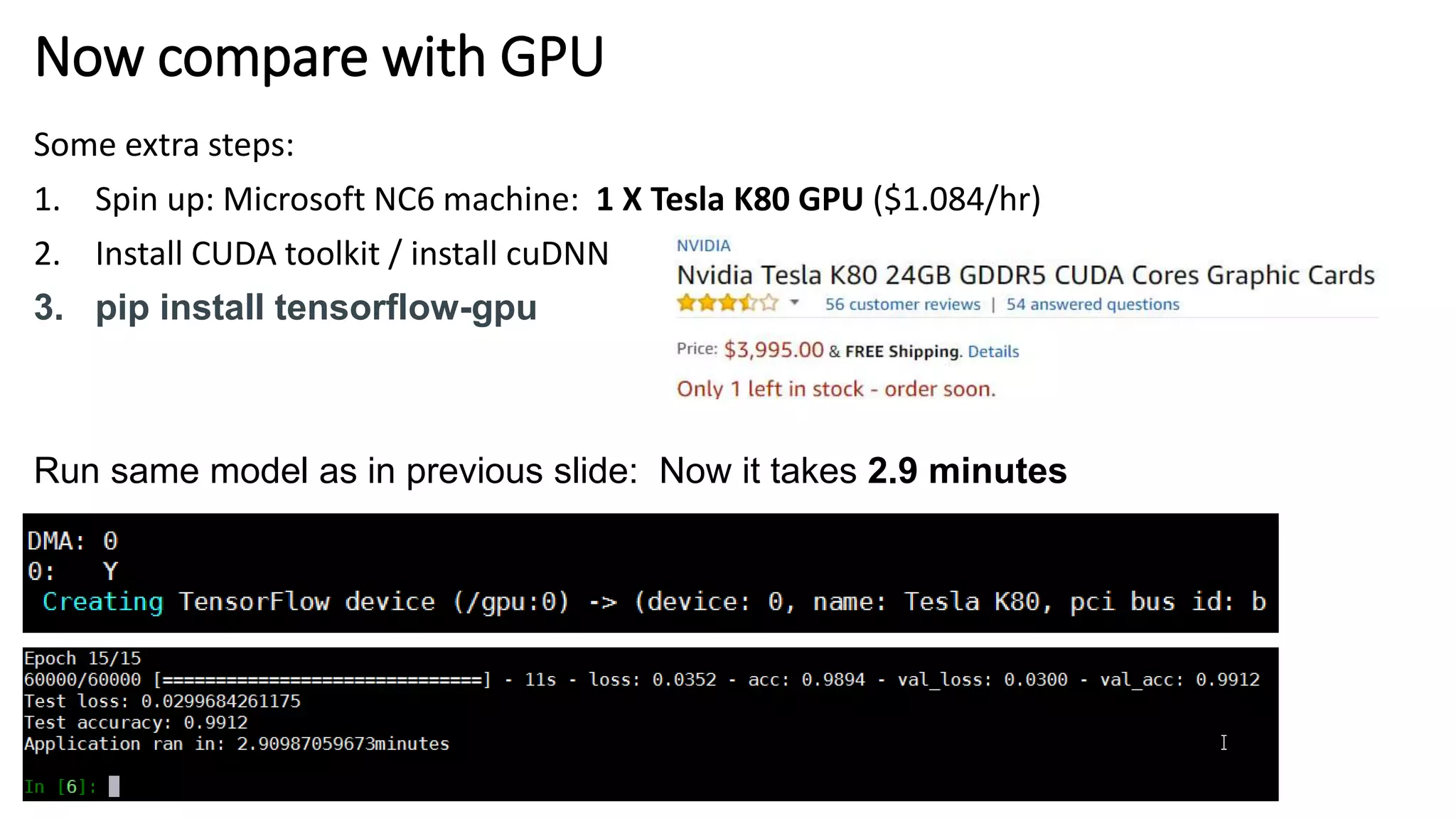

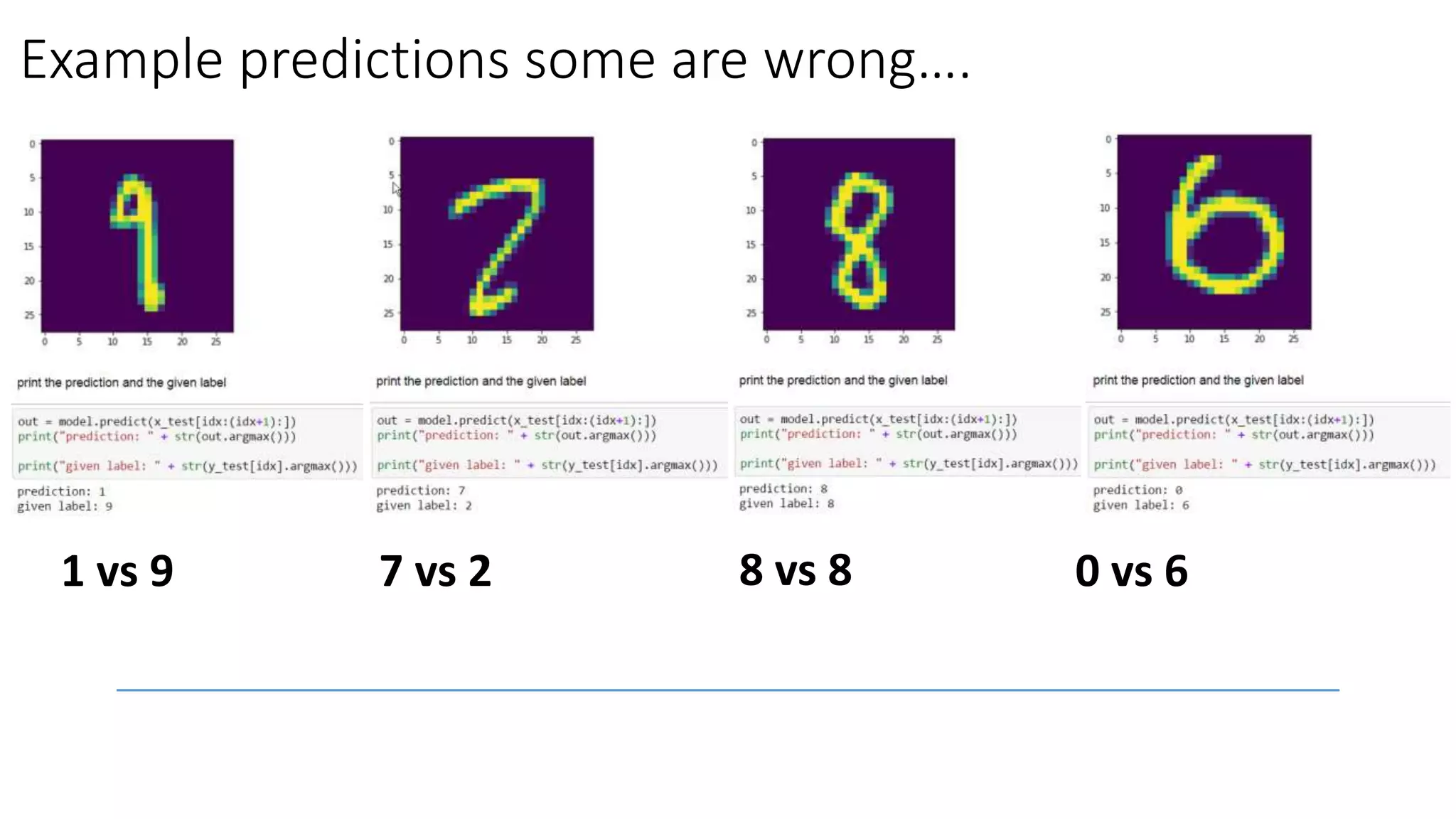

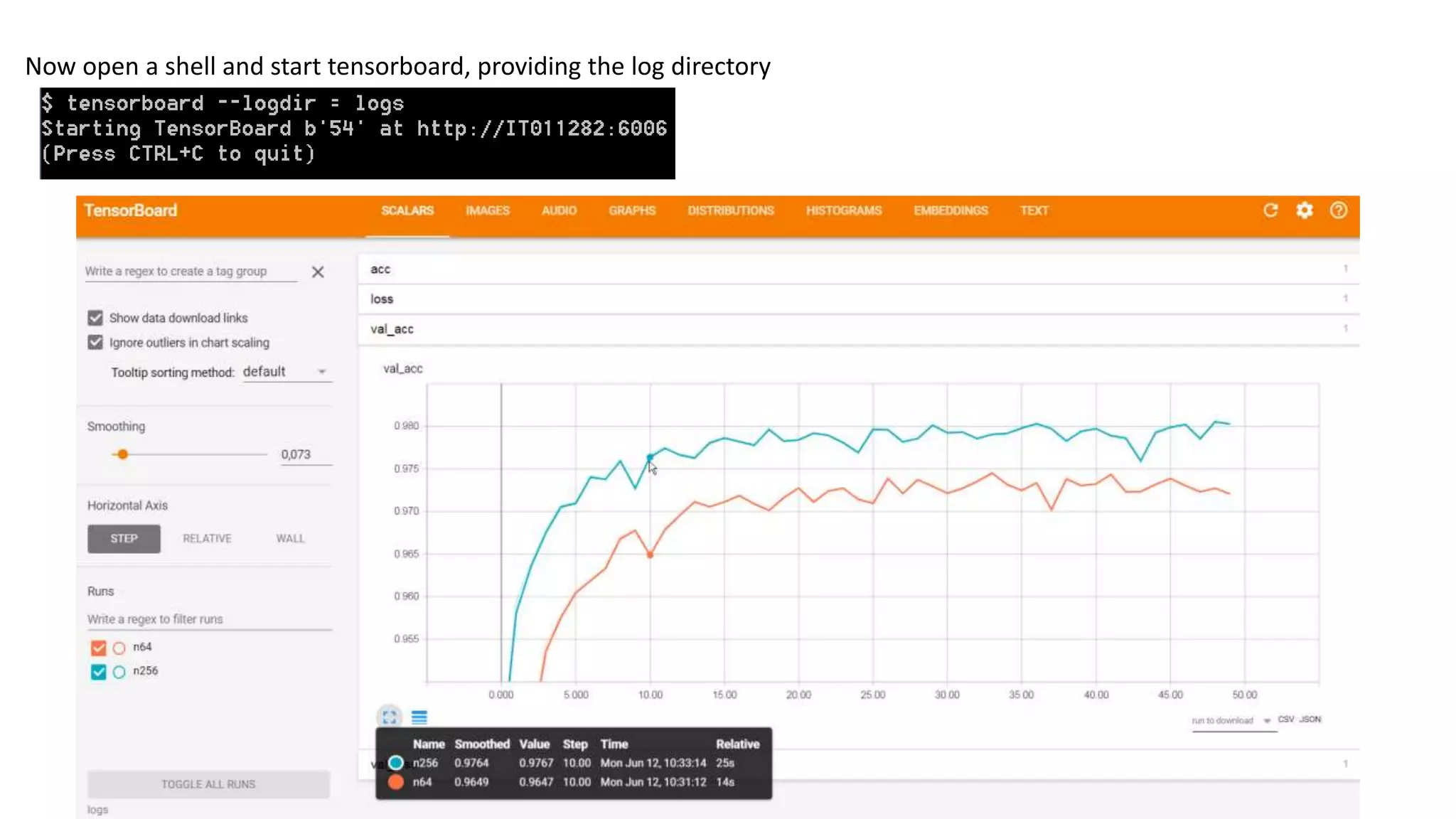

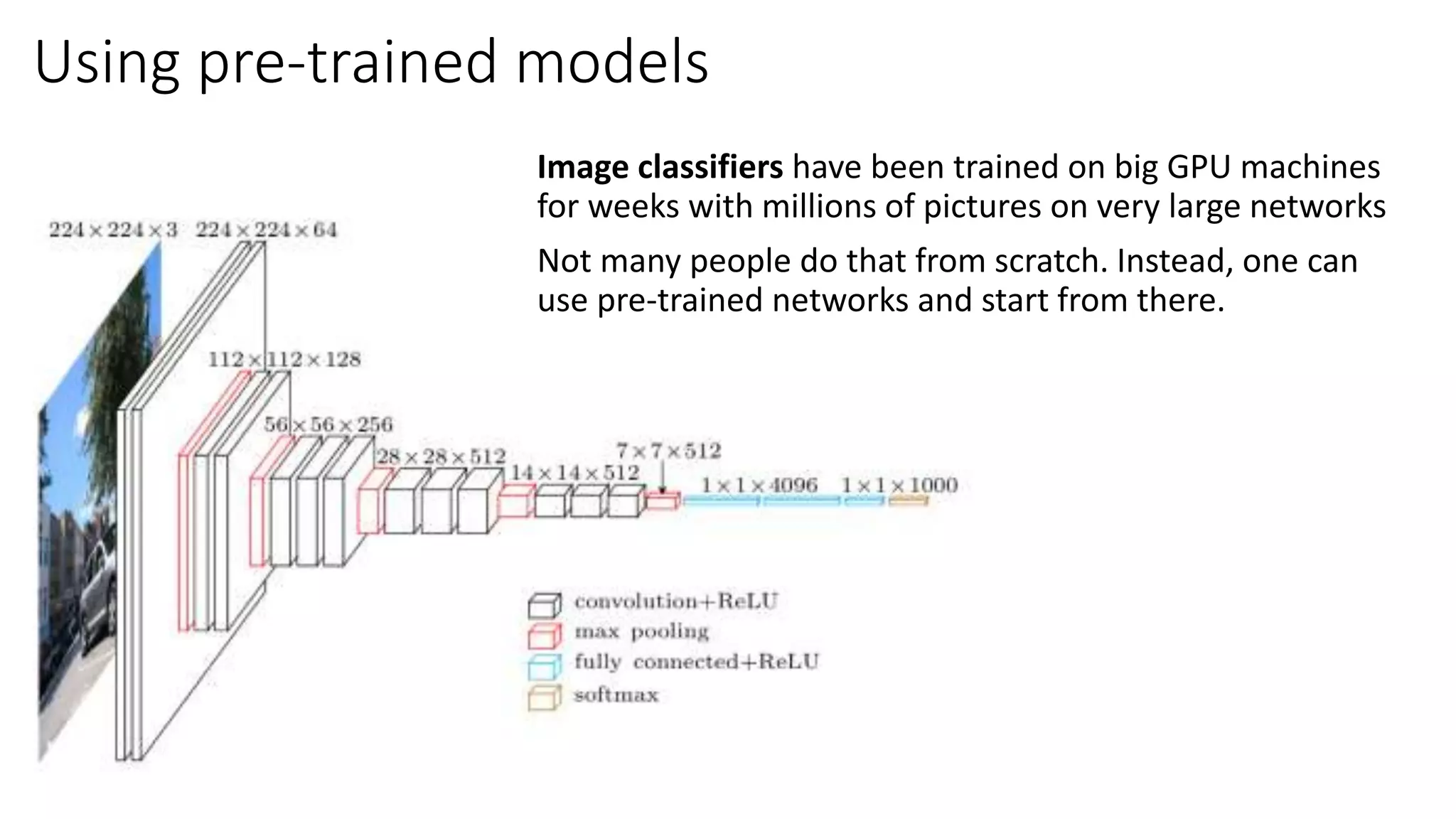

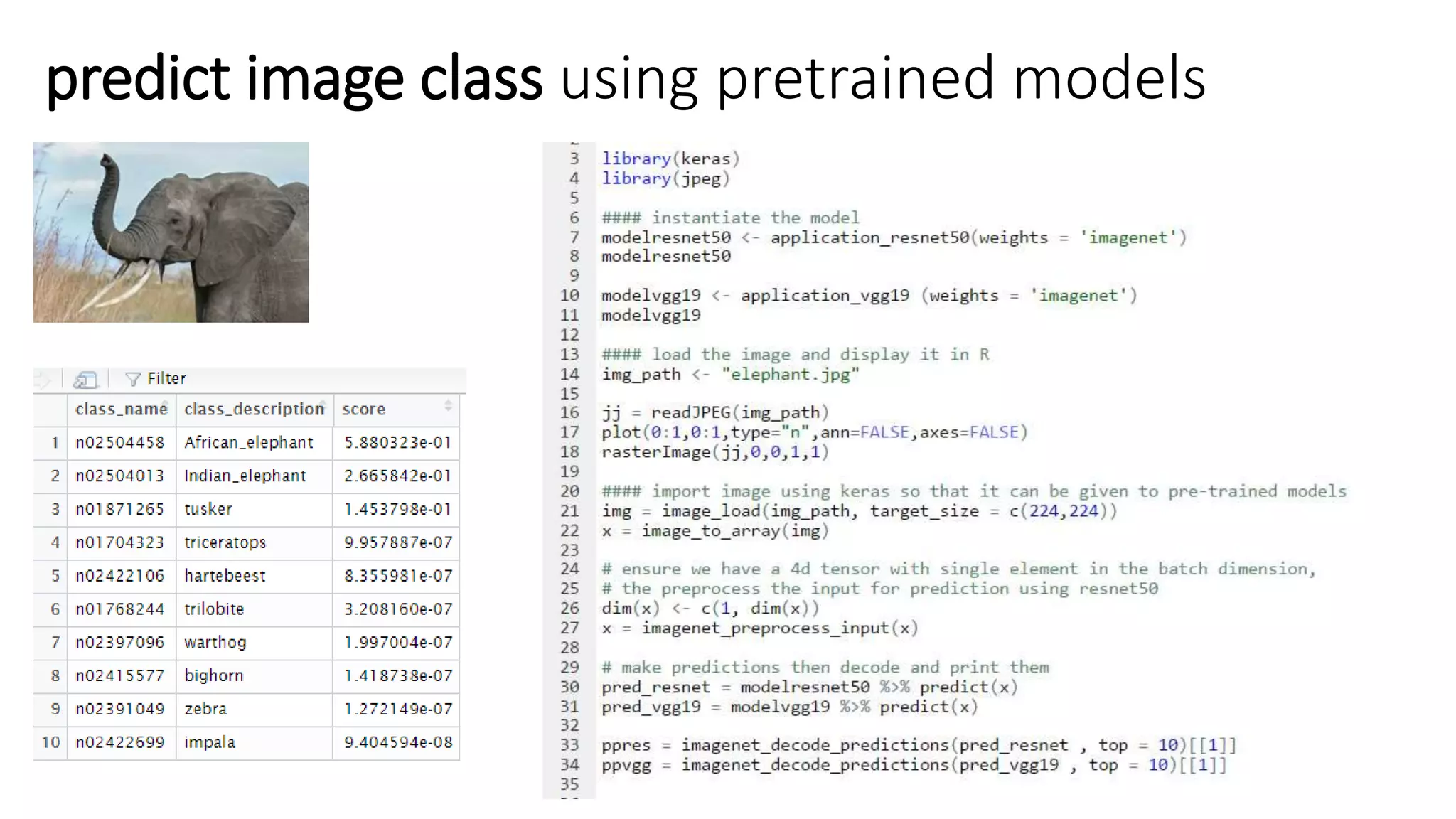

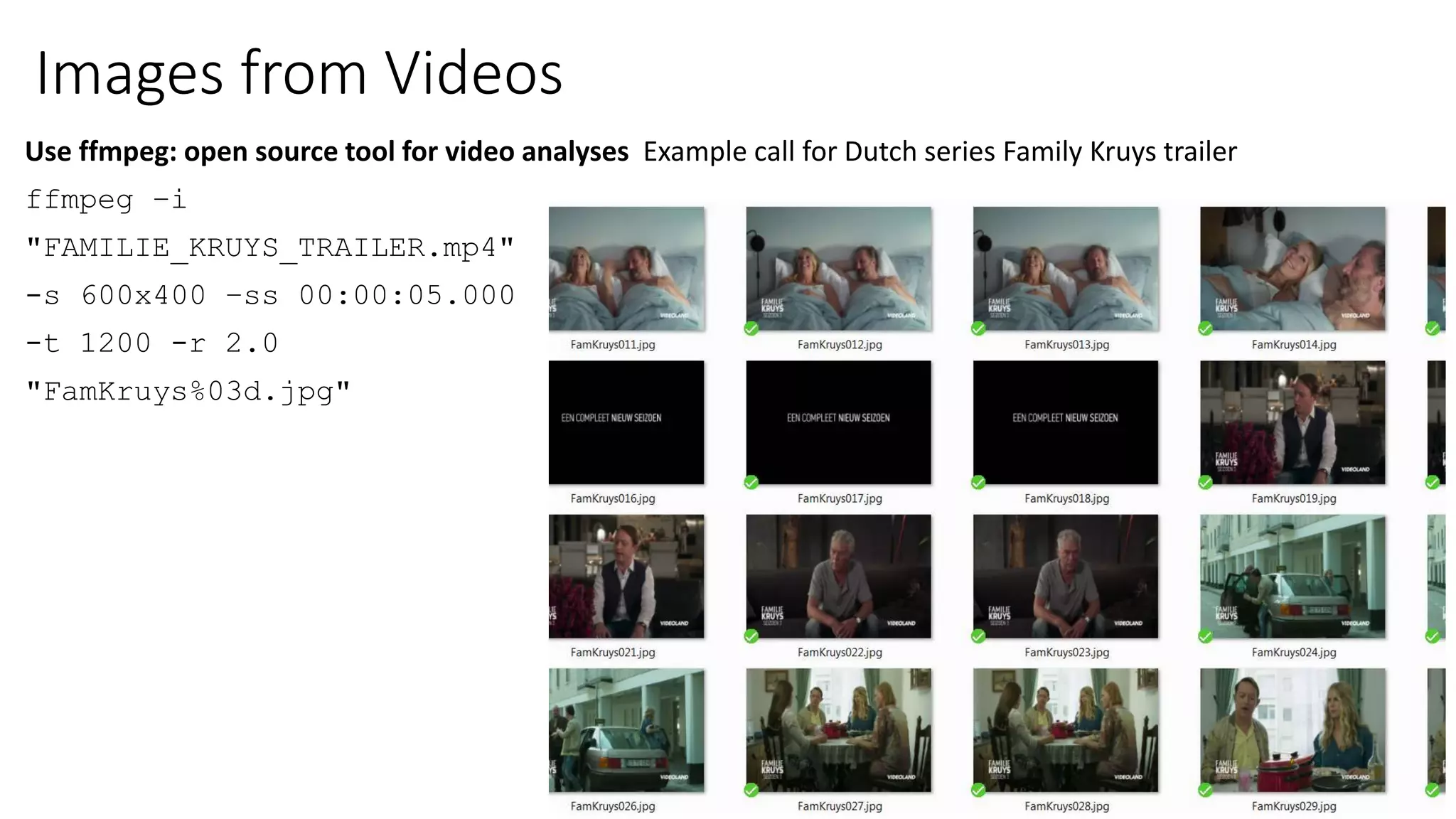

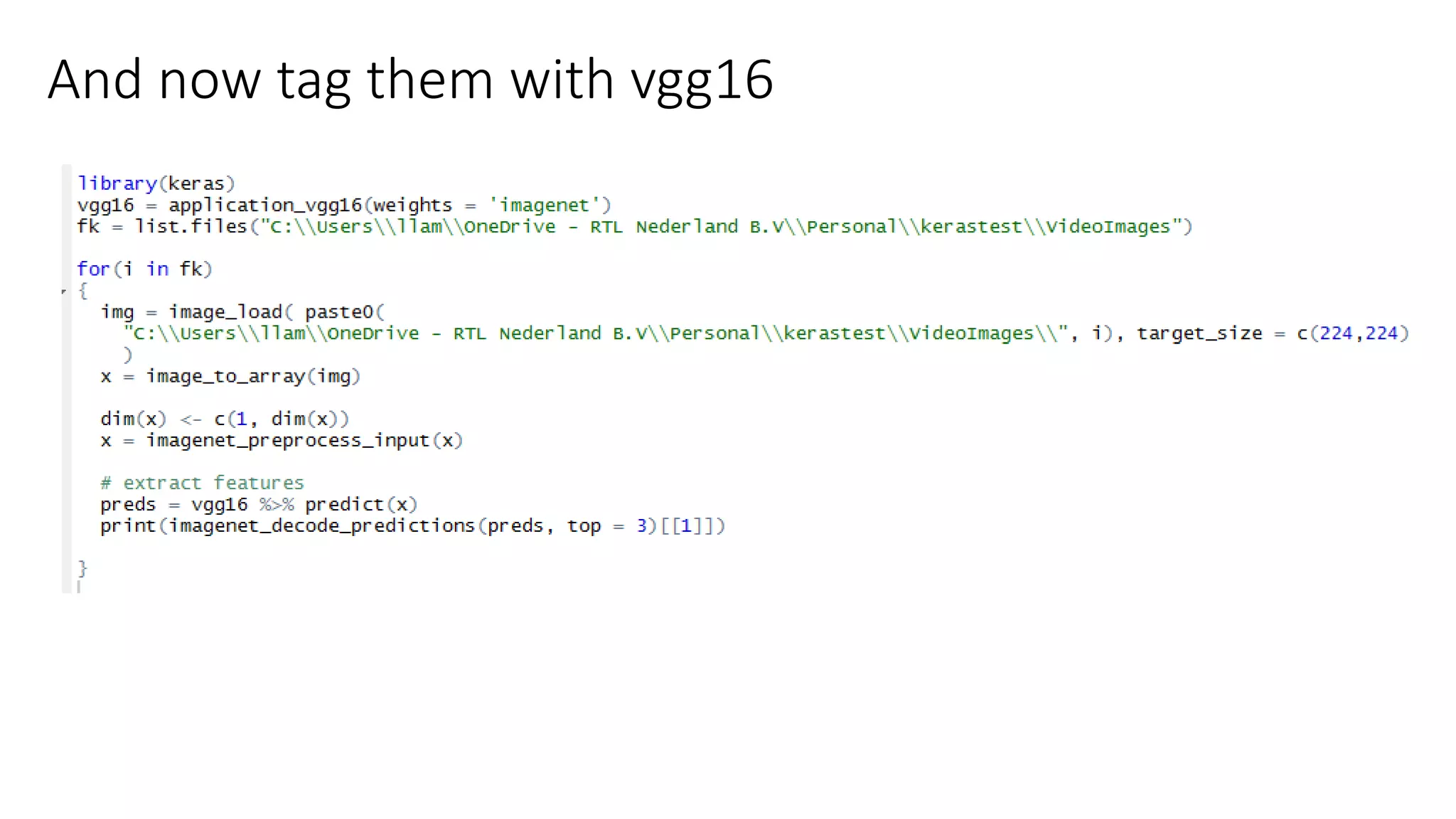

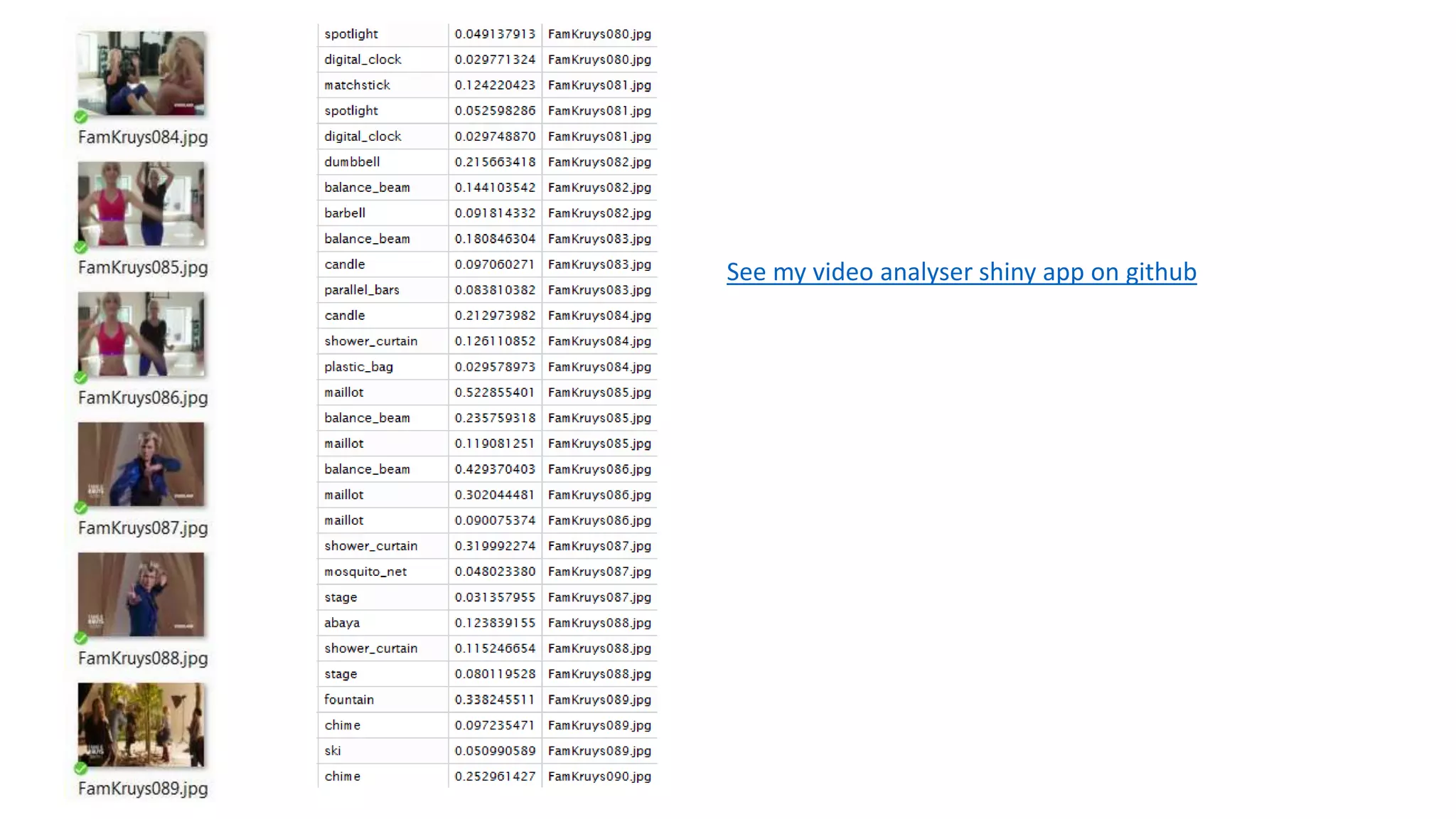

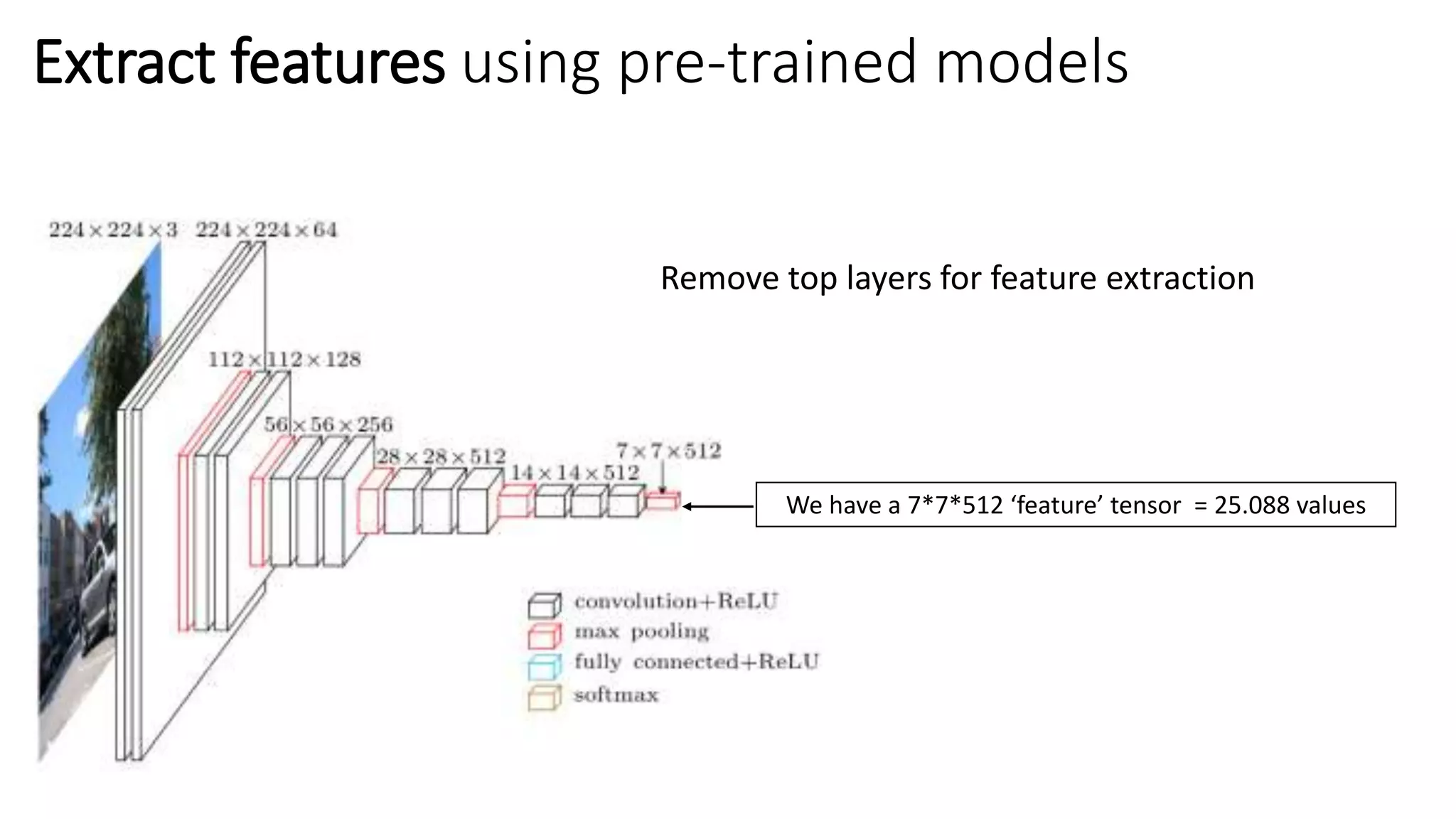

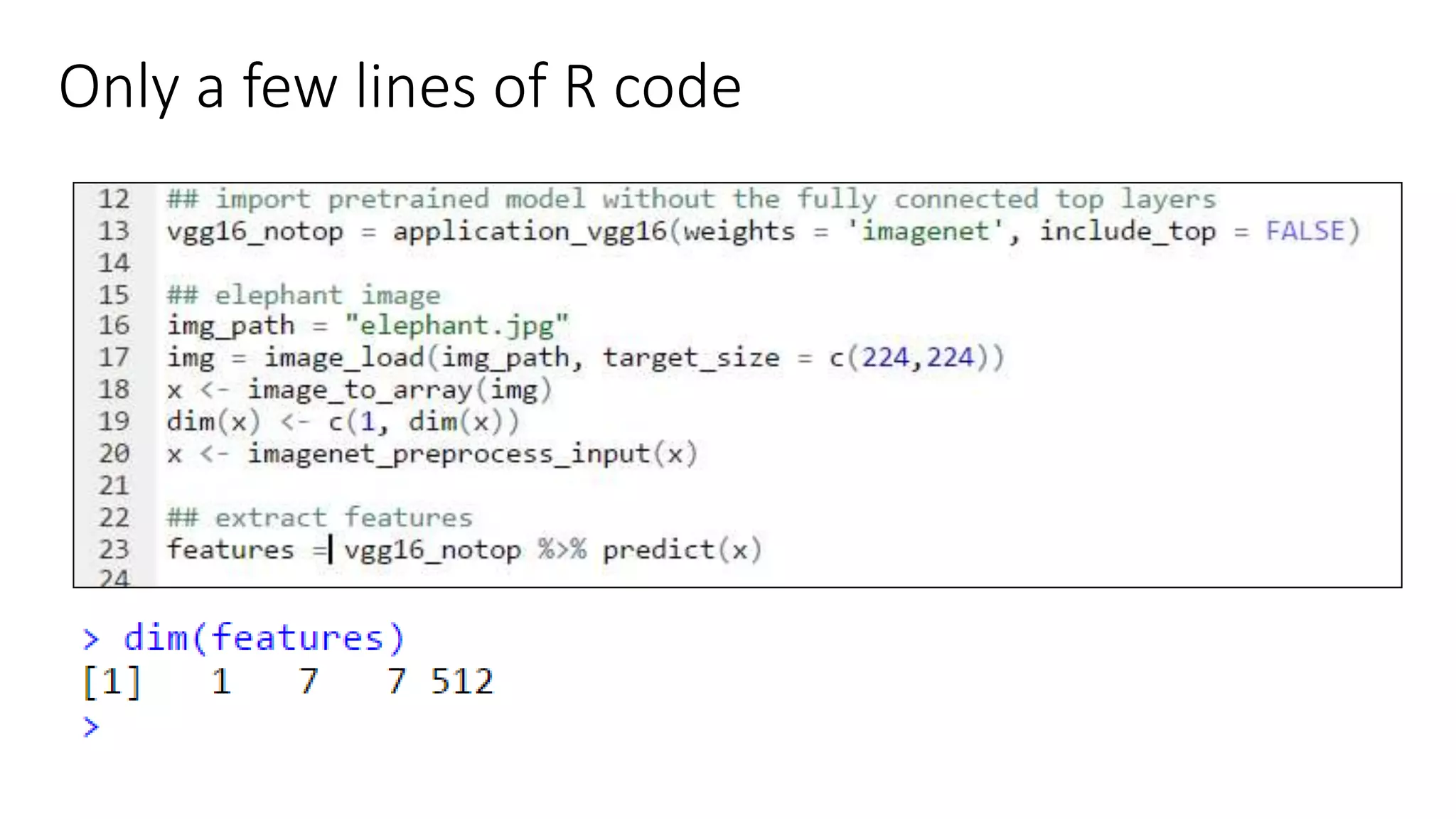

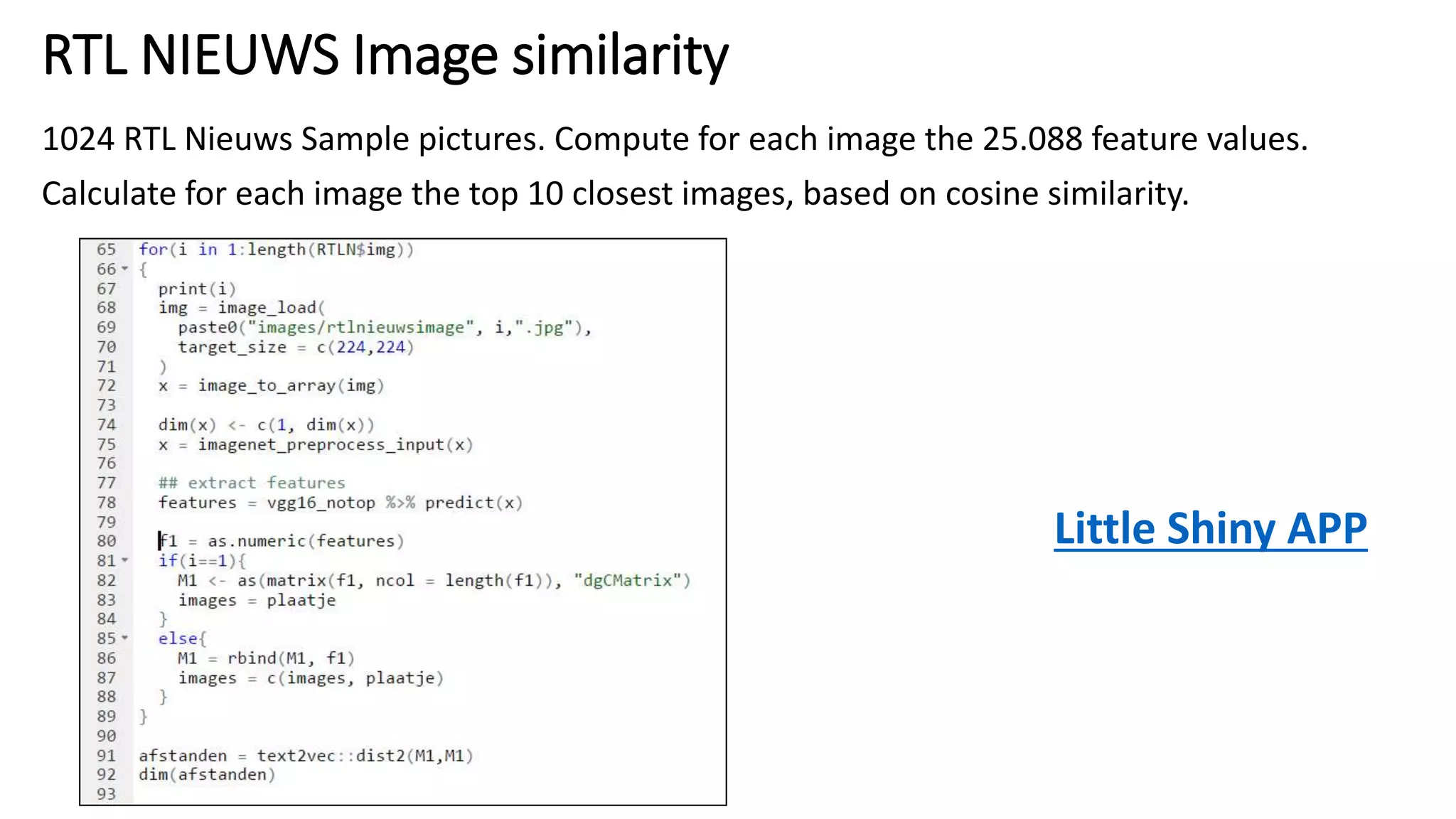

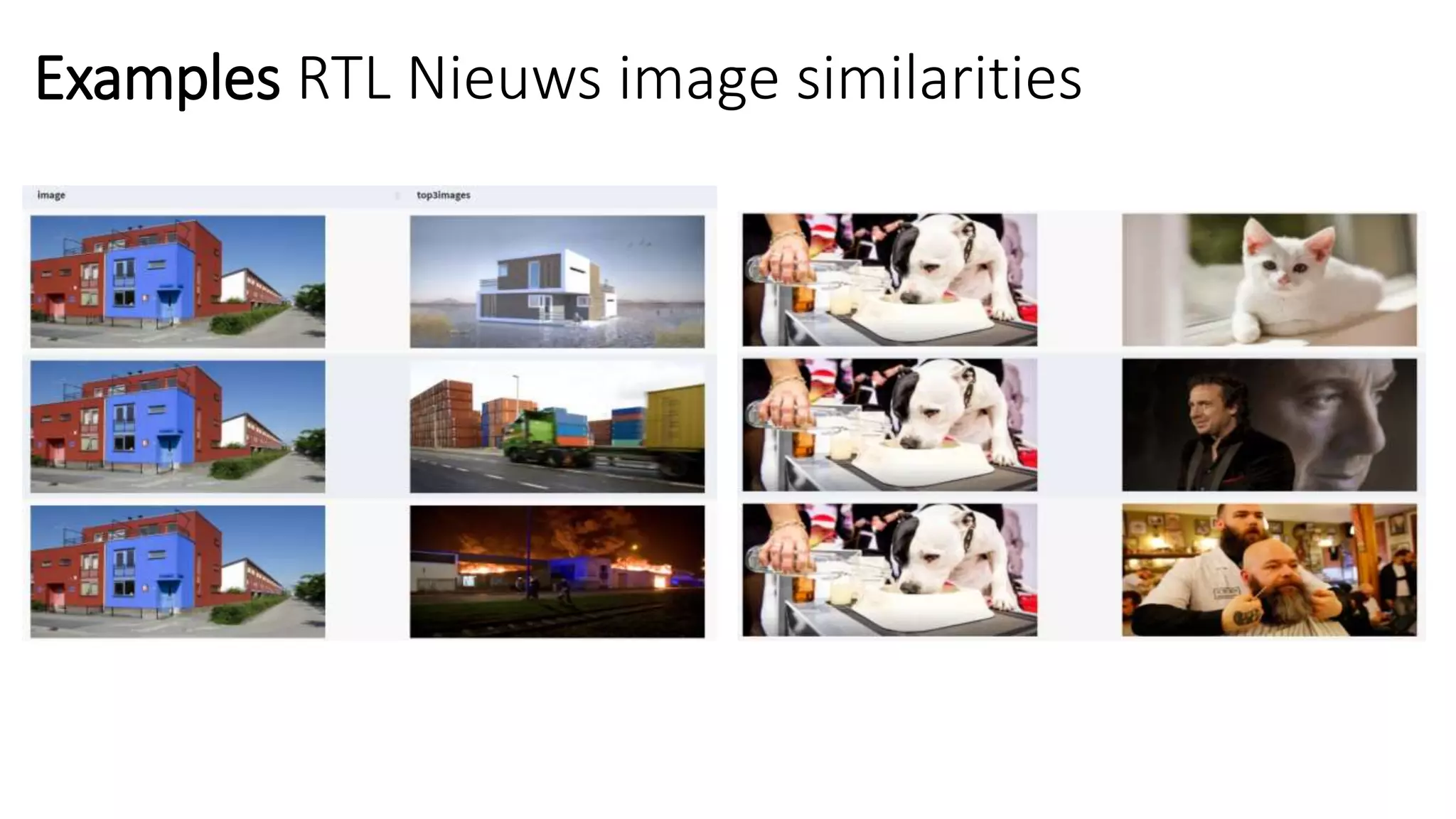

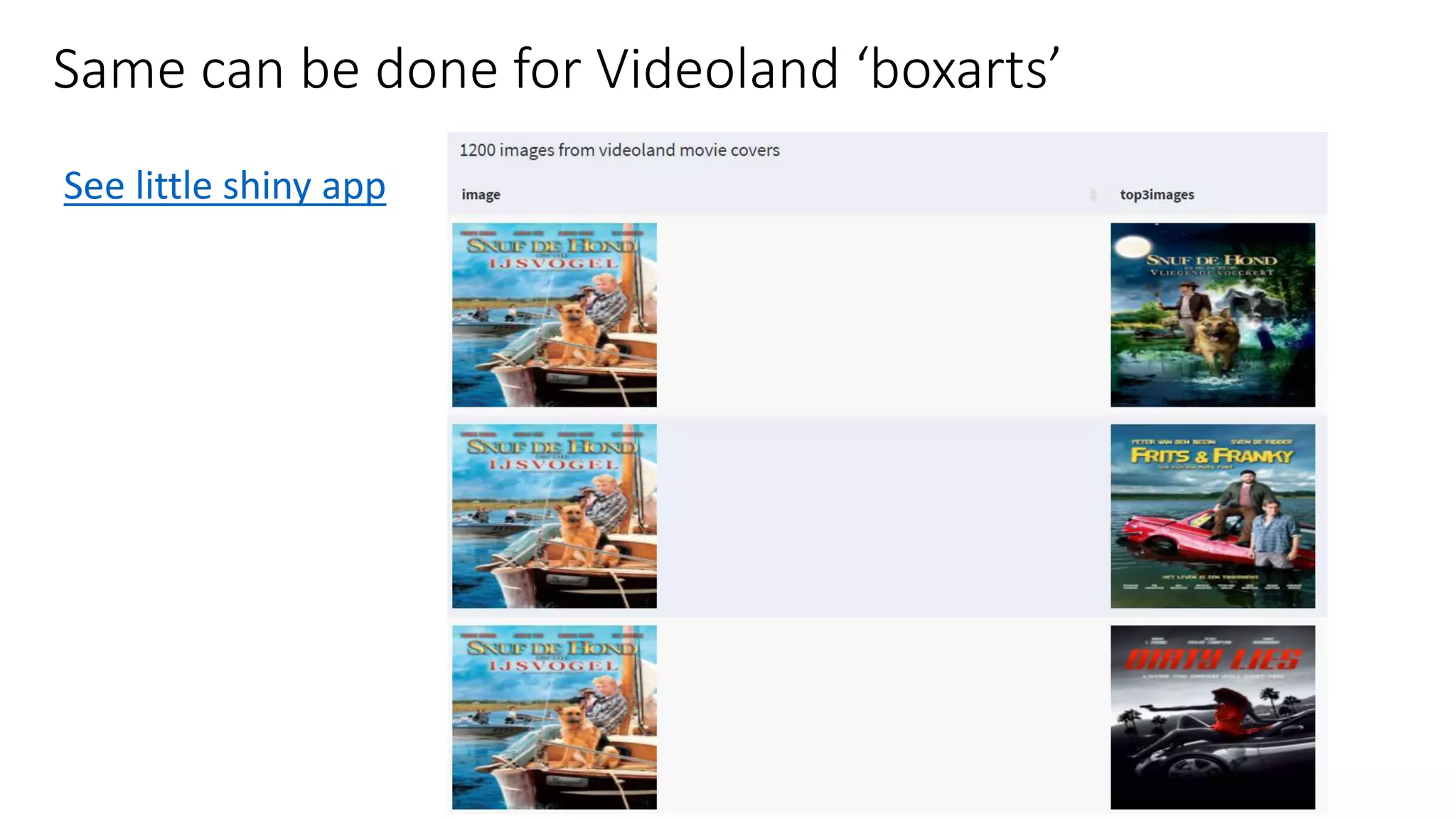

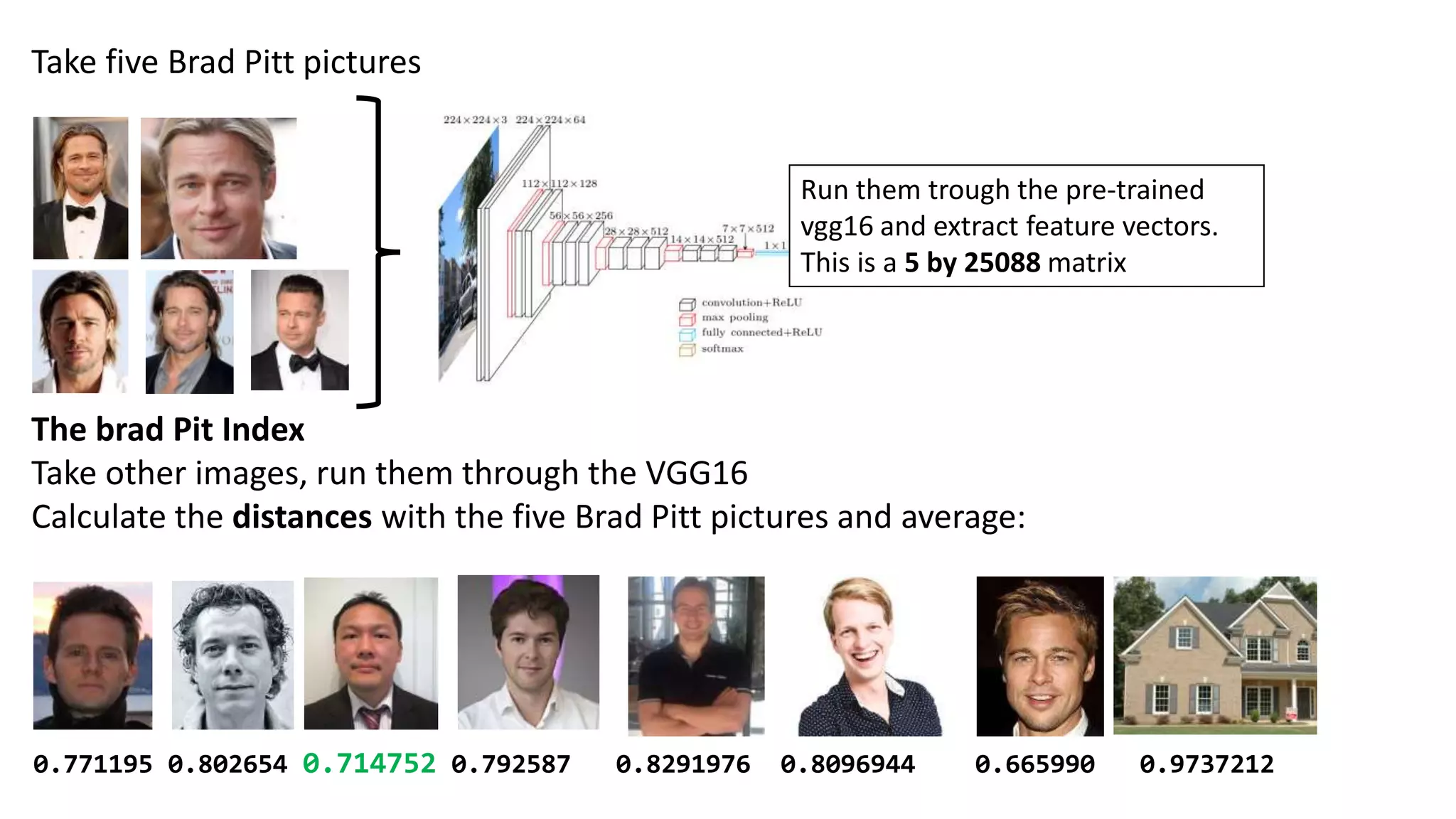

Keras with a TensorFlow backend can be used to build neural networks and deep learning models in both R and Python. The document covers building neural networks from scratch on MNIST data, using pre-trained models such as VGG16 for computer vision tasks, and fine-tuning pre-trained models when only limited data is available. Examples are provided for image classification, feature extraction, and computing image similarity.
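The feature-extraction and image-similarity workflow mentioned above can be sketched roughly as follows. This is a minimal illustration, not the slides' own code: it assumes TensorFlow 2.x with `tensorflow.keras`, and the helper names `build_extractor`, `extract_features`, and `cosine_similarity` are hypothetical. Cosine similarity is one common choice of similarity measure; the slides may use a different one.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length 1-D feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_a * norm_b)


def build_extractor():
    """Return VGG16 without its classifier head, average-pooled to one vector per image.

    Assumption: TensorFlow 2.x is installed; imports are kept local so the
    similarity helper above can be used without it.
    """
    from tensorflow.keras.applications.vgg16 import VGG16

    return VGG16(weights="imagenet", include_top=False, pooling="avg")


def extract_features(model, img_path):
    """Load one image from disk and return its feature vector."""
    import numpy as np
    from tensorflow.keras.applications.vgg16 import preprocess_input
    from tensorflow.keras.utils import img_to_array, load_img

    img = load_img(img_path, target_size=(224, 224))
    batch = np.expand_dims(img_to_array(img), axis=0)  # shape (1, 224, 224, 3)
    return model.predict(preprocess_input(batch))[0]
```

With two image paths of your own, usage would look like `model = build_extractor()` followed by `cosine_similarity(extract_features(model, path_a), extract_features(model, path_b))`. Values closer to 1 indicate more similar feature vectors.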

![Python code example](https://image.slidesharecdn.com/kerasexperiments-170608112900/75/Keras-on-tensorflow-in-R-Python-44-2048.jpg)

```python
from tensorflow.keras.applications import VGG16
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.models import Model

# load VGG16 pre-trained on ImageNet, without its classifier head
base_model = VGG16(weights='imagenet', include_top=False)

# collapse the final feature maps into one vector per image
x = base_model.output
x = GlobalAveragePooling2D()(x)

# let's add a fully-connected layer
x = Dense(256, activation='relu')(x)

# and a softmax output layer -- 2 classes, dogs and cats
predictions = Dense(2, activation='softmax')(x)

# this is the model we will train
model = Model(inputs=base_model.input, outputs=predictions)

# first: train only the top layers (which were randomly initialized)
# i.e. freeze all convolutional layers
for layer in base_model.layers:
    layer.trainable = False

# compile the model (should be done *after* setting layers to non-trainable)
model.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy'])
```
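The comment "first: train only the top layers" implies a second stage that the slide does not show. One common way to continue, sketched here as an assumption rather than as the slides' method, is to unfreeze the last convolutional block and recompile with a small learning rate. The helper names `unfreeze_by_prefix` and `compile_for_fine_tuning` are hypothetical, and so are the `"block5"` prefix (VGG16 names its layers `block1_conv1` through `block5_pool`) and the SGD settings.

```python
def unfreeze_by_prefix(layers, prefix):
    """Make layers whose name starts with `prefix` trainable and freeze the rest.

    Works on any objects with `name` and `trainable` attributes, such as
    Keras layers. Returns the number of layers left trainable.
    """
    trainable_count = 0
    for layer in layers:
        layer.trainable = layer.name.startswith(prefix)
        if layer.trainable:
            trainable_count += 1
    return trainable_count


def compile_for_fine_tuning(model, base_model, prefix="block5", learning_rate=1e-4):
    """Second stage: unfreeze one block of the base model, then recompile.

    Assumptions: TensorFlow 2.x, and `model` / `base_model` built as in the
    slide's example. The low learning rate is meant to avoid wiping out the
    pre-trained weights; the exact value is illustrative.
    """
    from tensorflow.keras.optimizers import SGD

    unfreeze_by_prefix(base_model.layers, prefix)
    # recompile so the changed `trainable` flags take effect
    model.compile(
        optimizer=SGD(learning_rate=learning_rate, momentum=0.9),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Selecting layers by name prefix rather than by index avoids off-by-one mistakes when the layer list includes the input layer. The new dense layers belong to `model` but not to `base_model`, so they stay trainable.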