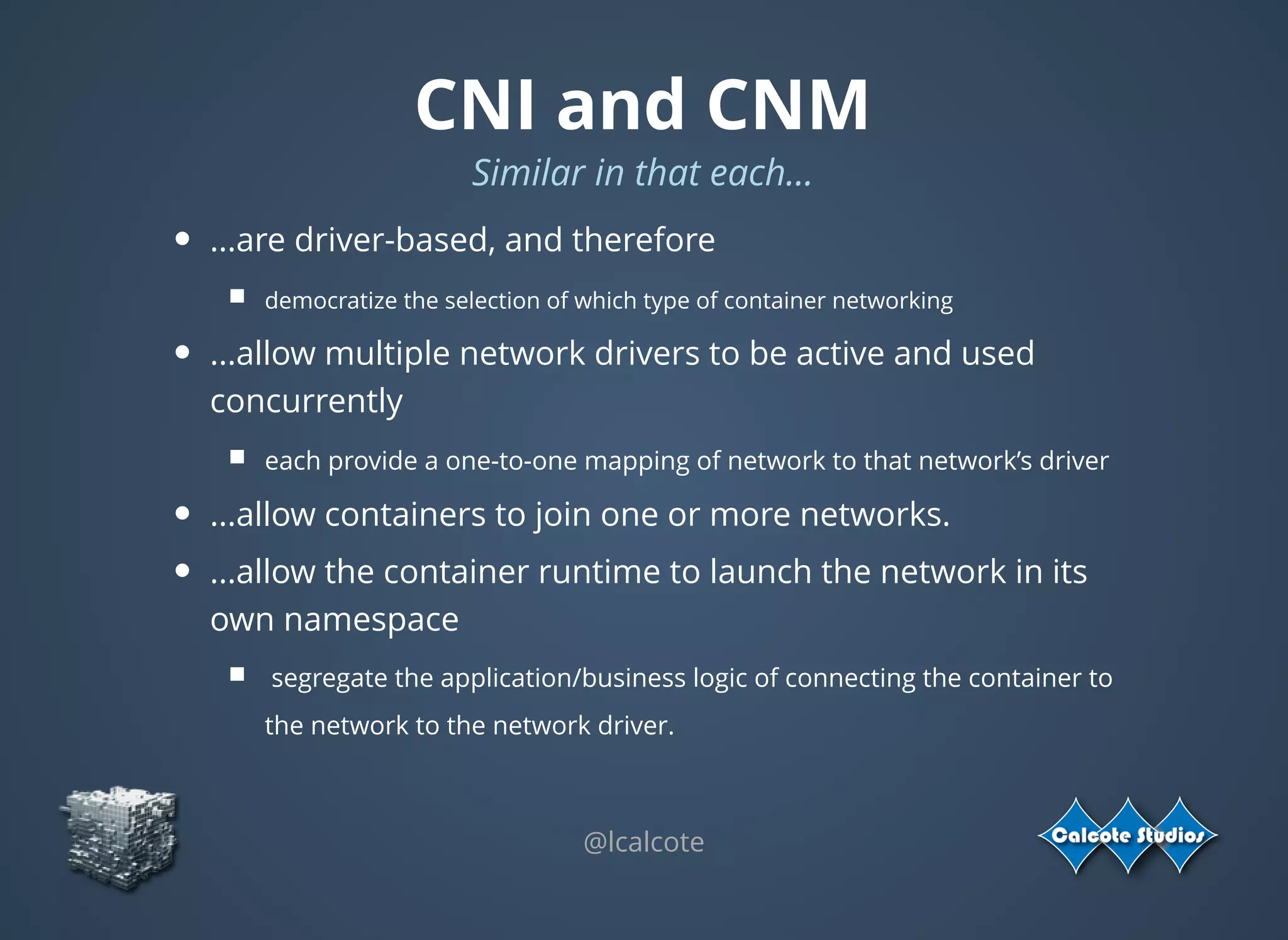

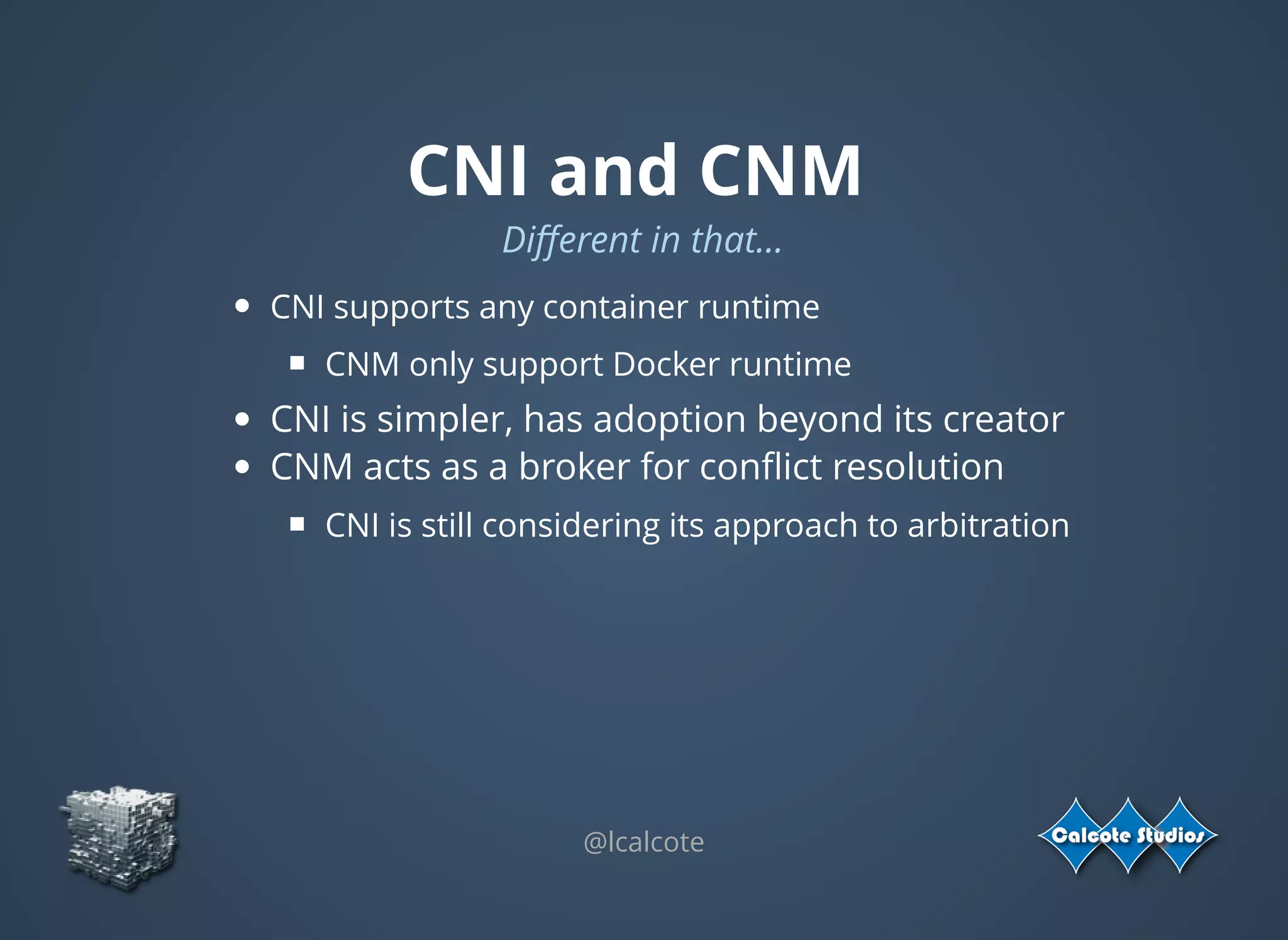

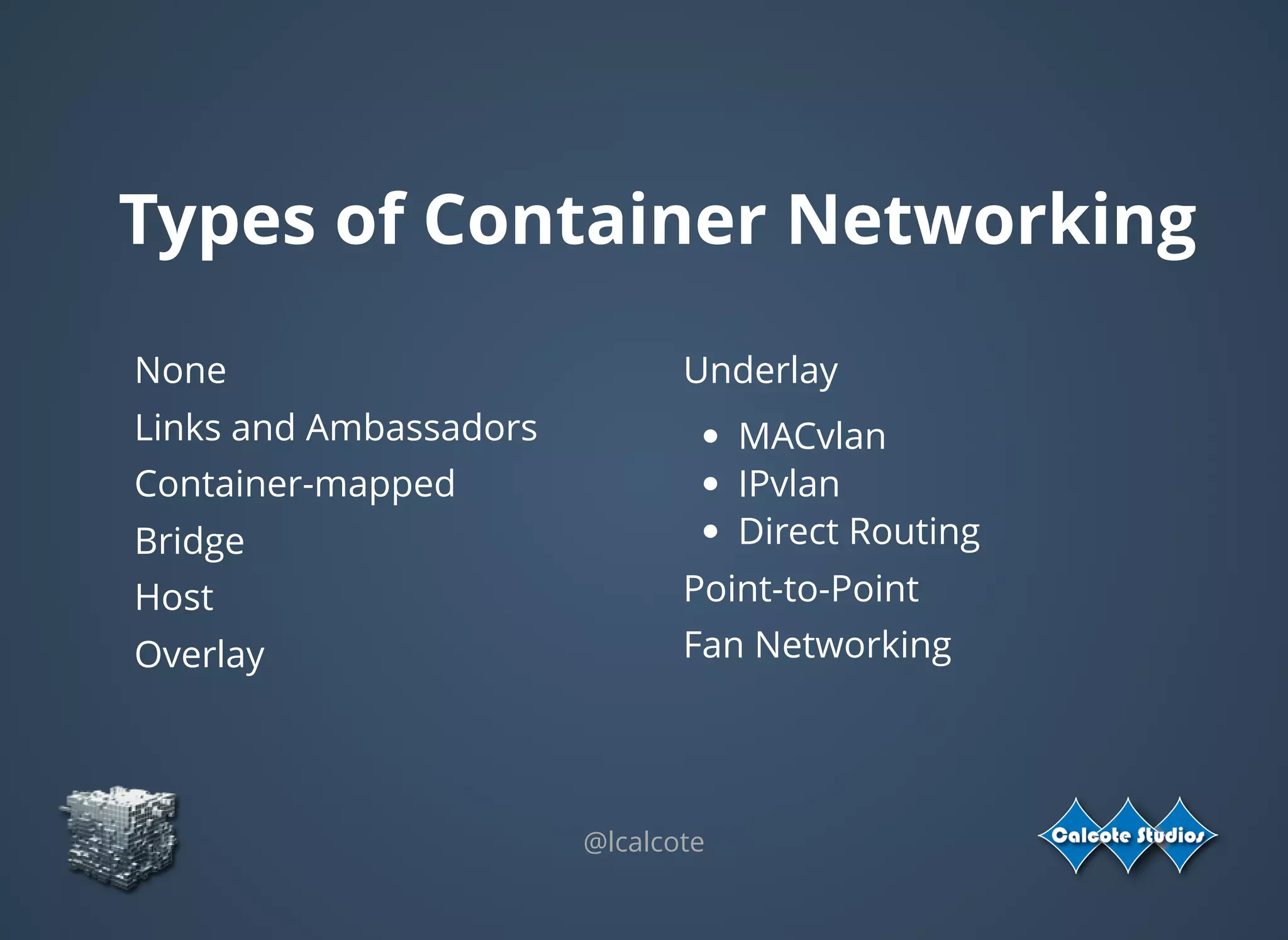

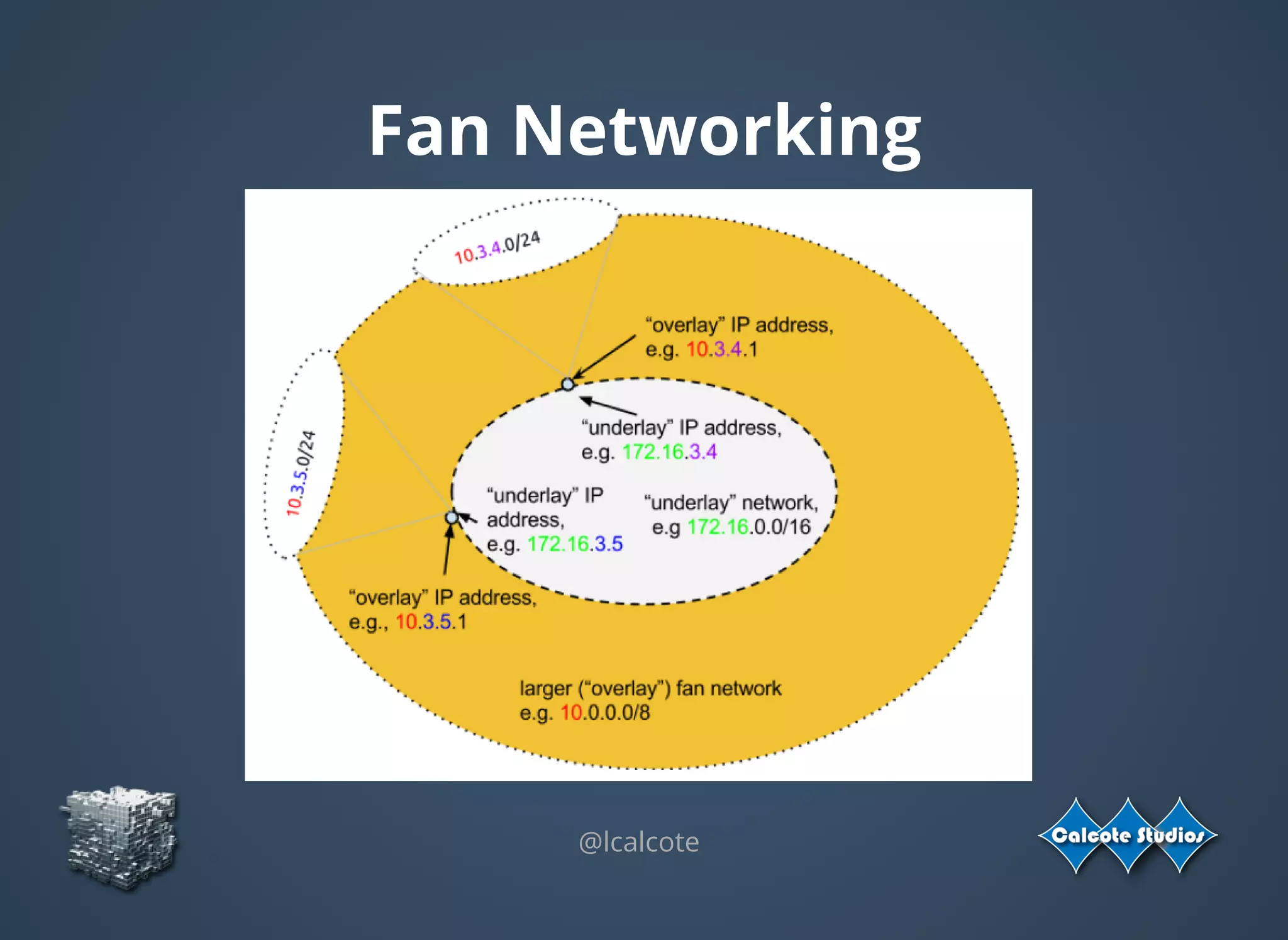

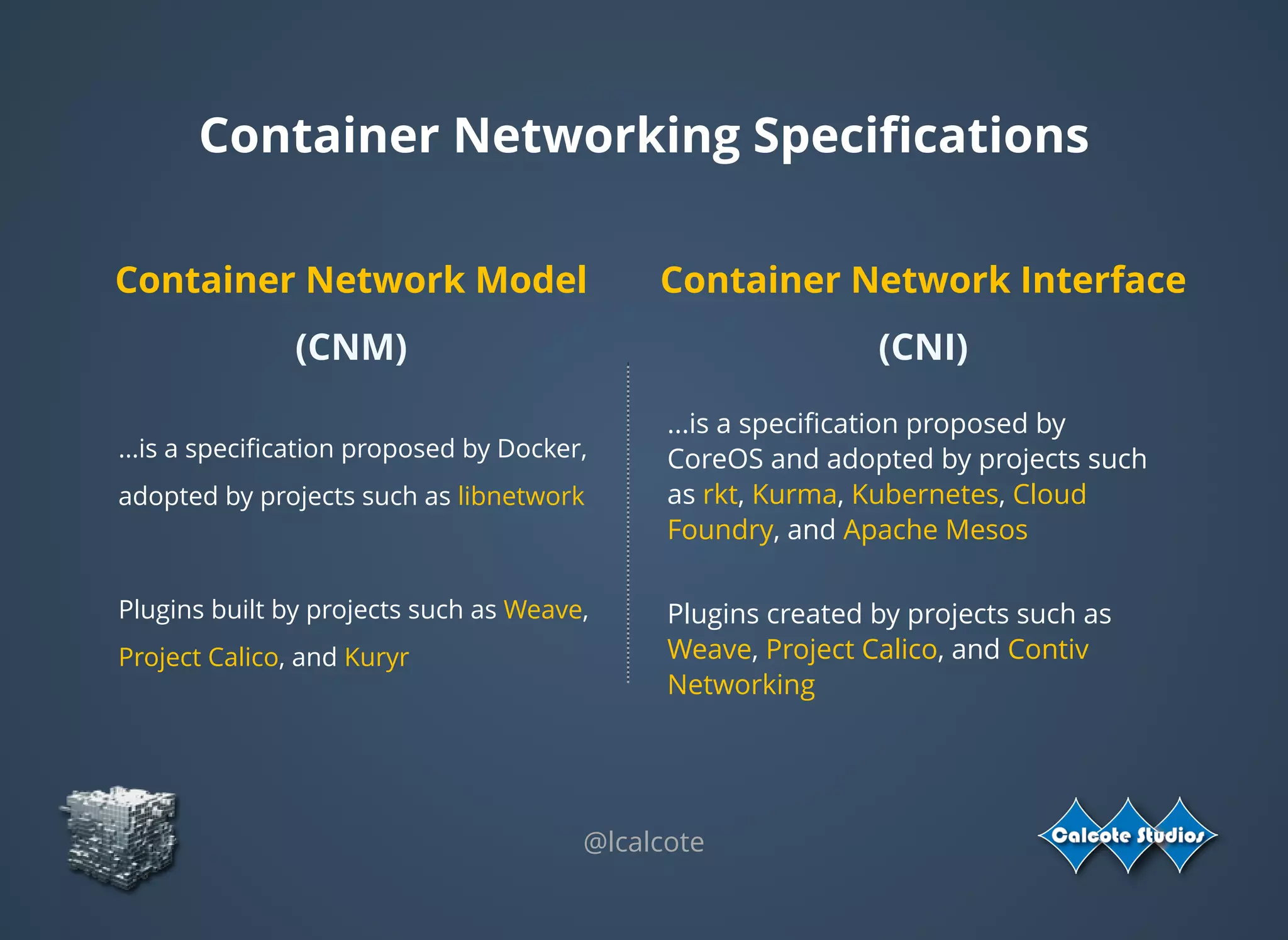

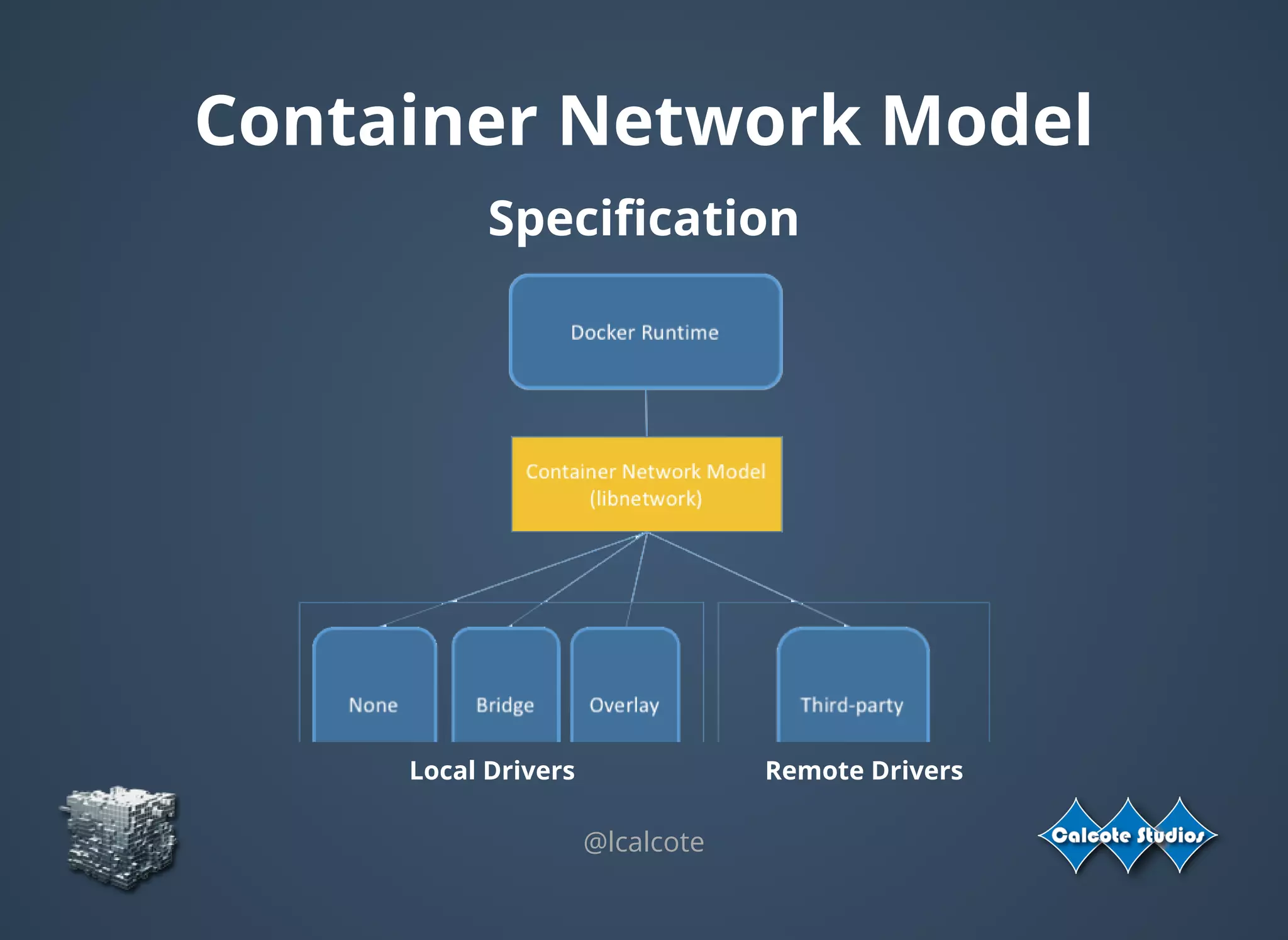

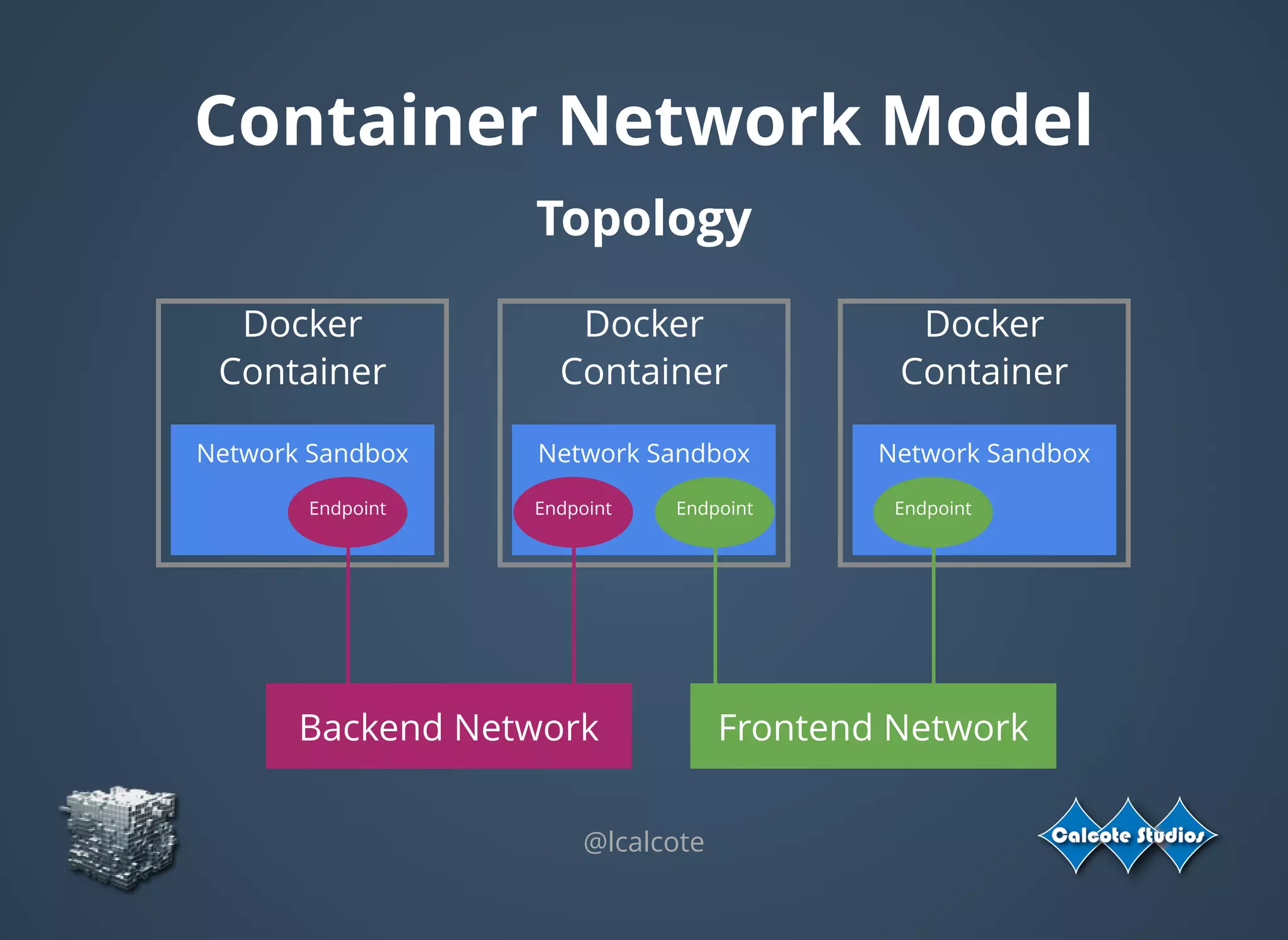

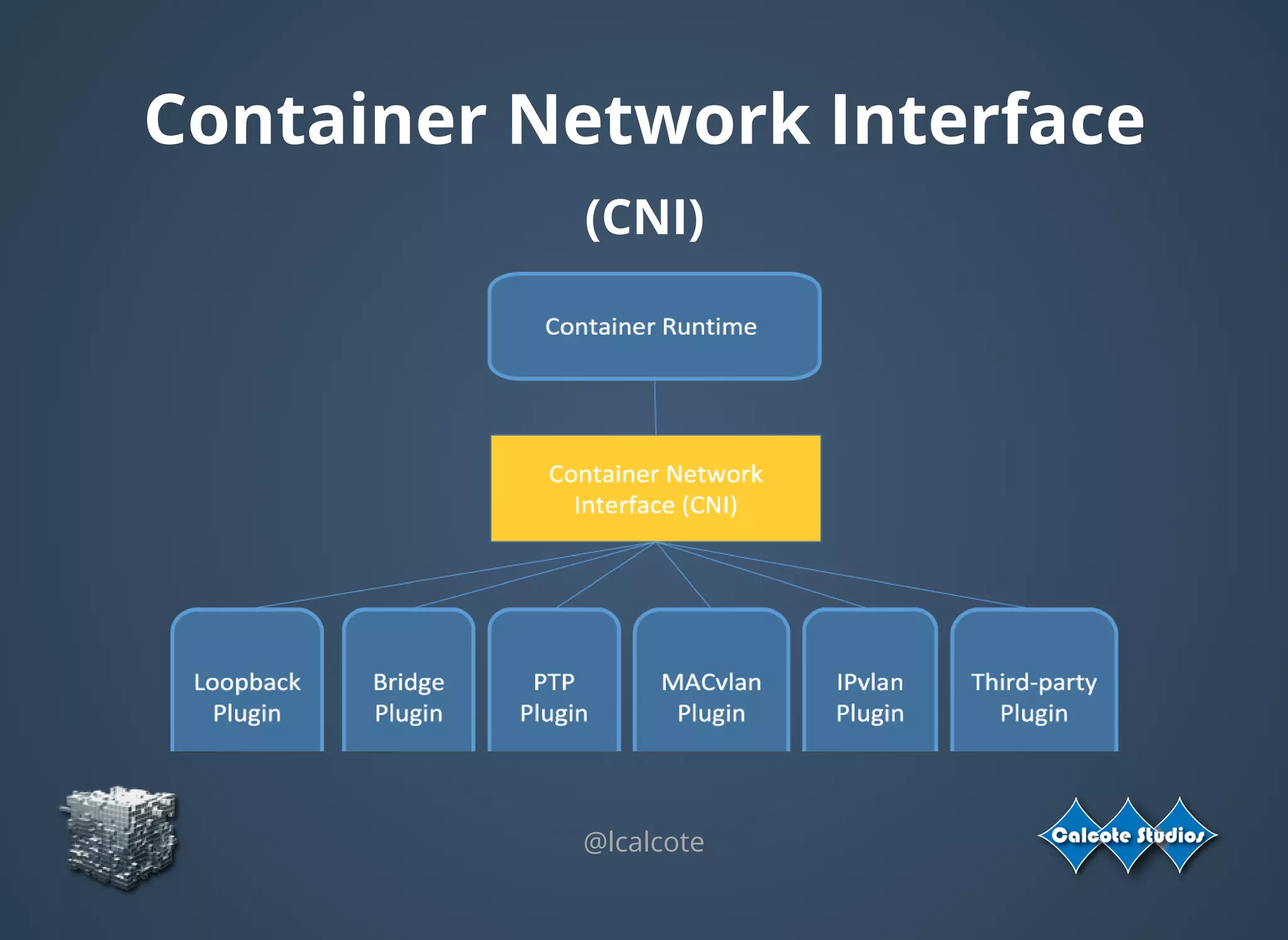

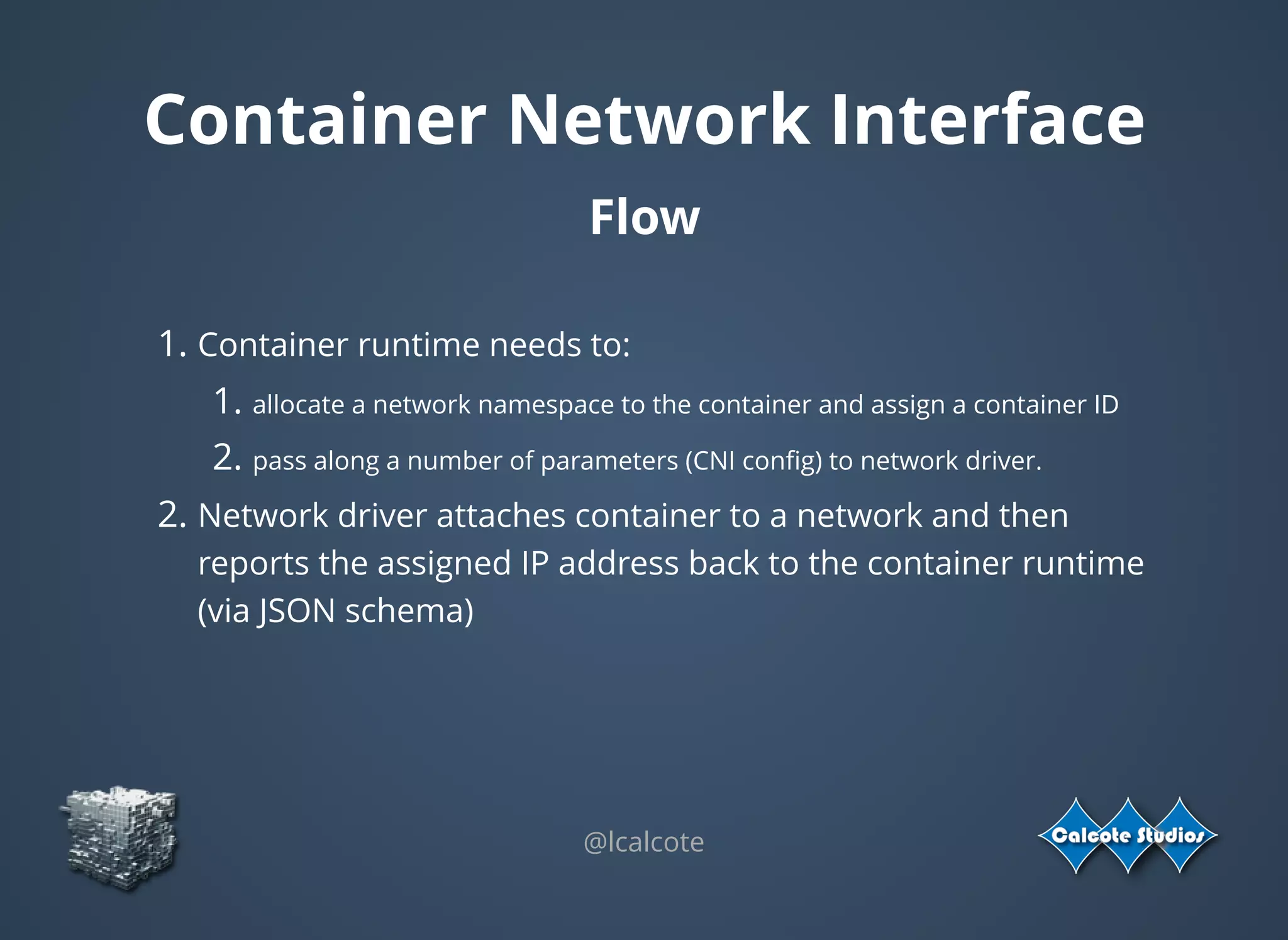

The document discusses the complexities and specifications of container networking, focusing on the Container Network Model (CNM) and the Container Network Interface (CNI). It covers overlay and underlay networks and techniques such as macvlan and ipvlan, analyzing how each works and where it fits. It also highlights network management considerations, including IP address management (IPAM) and the implications of IPv6 support in cloud environments.
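To make "IP address management" concrete, here is a minimal Python sketch of sequential address allocation from a single subnet. This is the kind of bookkeeping an IPAM plugin such as CNI's `host-local` performs. The class `SubnetAllocator` and its in-memory state are hypothetical. Reserving the first usable address for the gateway is an assumption of this sketch, not a statement about any plugin's defaults. Because it relies on the standard `ipaddress` module, the same code handles IPv6 subnets.

```python
import ipaddress


class SubnetAllocator:
    """Hypothetical in-memory allocator handing out addresses from one subnet.

    Works for IPv4 and IPv6. Not the implementation of any real IPAM plugin.
    """

    def __init__(self, subnet, gateway=None):
        self.network = ipaddress.ip_network(subnet)
        # hosts() yields addresses lazily for large networks; iter() also covers
        # /32 and /128, where some Python versions return a list instead.
        self._candidates = iter(self.network.hosts())
        if gateway is None:
            # Assumption: reserve the first usable address for the gateway.
            gateway = next(self._candidates)
        self.gateway = ipaddress.ip_address(gateway)
        if self.gateway not in self.network:
            raise ValueError(f"gateway {self.gateway} is outside {self.network}")
        self._released = []  # addresses returned by release(), reused first
        self.allocated = {}  # container ID -> address

    def allocate(self, container_id):
        """Return the address for container_id, allocating one if needed."""
        if container_id in self.allocated:
            return self.allocated[container_id]
        if self._released:
            addr = self._released.pop()
        else:
            # Take the next never-used address, skipping the gateway.
            addr = next((a for a in self._candidates if a != self.gateway), None)
            if addr is None:
                raise RuntimeError(f"no free addresses left in {self.network}")
        self.allocated[container_id] = addr
        return addr

    def release(self, container_id):
        """Free the address held by container_id, if any."""
        addr = self.allocated.pop(container_id, None)
        if addr is not None:
            self._released.append(addr)
```

For example, `SubnetAllocator("10.22.0.0/16")` reserves `10.22.0.1` as the gateway and hands `10.22.0.2` to the first container. `SubnetAllocator("2001:db8::/64")` works the same way for IPv6. A real CNI IPAM plugin runs as a separate process for each call, so it must persist its allocations between invocations rather than keep them in memory as this sketch does.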

CNI network configuration (JSON), slide by @lcalcote:

```json
{
  "name": "mynet",
  "type": "bridge",
  "bridge": "cni0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.22.0.0/16",
    "routes": [
      { "dst": "0.0.0.0/0" }
    ]
  }
}
```

![CNI network configuration (JSON) slide](https://image.slidesharecdn.com/overlay-underlay-bettingoncontainernetworking-161010022239/75/Overlay-Underlay-Betting-on-Container-Networking-13-2048.jpg)
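The following minimal Python sketch shows how a container runtime might prepare a CNI `ADD` call for this configuration. Under the CNI specification, the runtime executes the plugin binary named by `"type"` (here `bridge`). It passes the network configuration on stdin and the per-container parameters in `CNI_*` environment variables. The bridge plugin then delegates address assignment to the `host-local` IPAM plugin named under `"ipam"`. The function `build_cni_invocation`, the container ID, the namespace path, and the `/opt/cni/bin` plugin directory are illustrative assumptions. Real runtimes treat `CNI_PATH` as a list of directories to search, which this sketch simplifies to a single directory.

```python
import json

# Network configuration from the slide above (bridge plugin + host-local IPAM).
NET_CONF = """
{
  "name": "mynet",
  "type": "bridge",
  "bridge": "cni0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.22.0.0/16",
    "routes": [
      { "dst": "0.0.0.0/0" }
    ]
  }
}
"""


def build_cni_invocation(conf_json, container_id, netns_path,
                         ifname="eth0", cni_path="/opt/cni/bin"):
    """Return (plugin_path, env, stdin_bytes) for a CNI ADD call.

    Hypothetical helper: it only prepares the call and does not run the plugin.
    """
    conf = json.loads(conf_json)
    for key in ("name", "type"):
        if key not in conf:
            raise ValueError(f"network configuration is missing {key!r}")
    # "type" names the plugin executable; simplified to a single directory.
    plugin_path = f"{cni_path.rstrip('/')}/{conf['type']}"
    # Per-container parameters travel in CNI_* environment variables.
    env = {
        "CNI_COMMAND": "ADD",
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,
        "CNI_IFNAME": ifname,
        "CNI_PATH": cni_path,
    }
    # The network configuration itself is written to the plugin's stdin.
    stdin_bytes = json.dumps(conf).encode("utf-8")
    return plugin_path, env, stdin_bytes
```

To actually invoke the plugin, a caller could pass these values to `subprocess.run([plugin_path], input=stdin_bytes, env=env, capture_output=True)`. The plugin reports its result, including the assigned IP configuration, as JSON on stdout.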