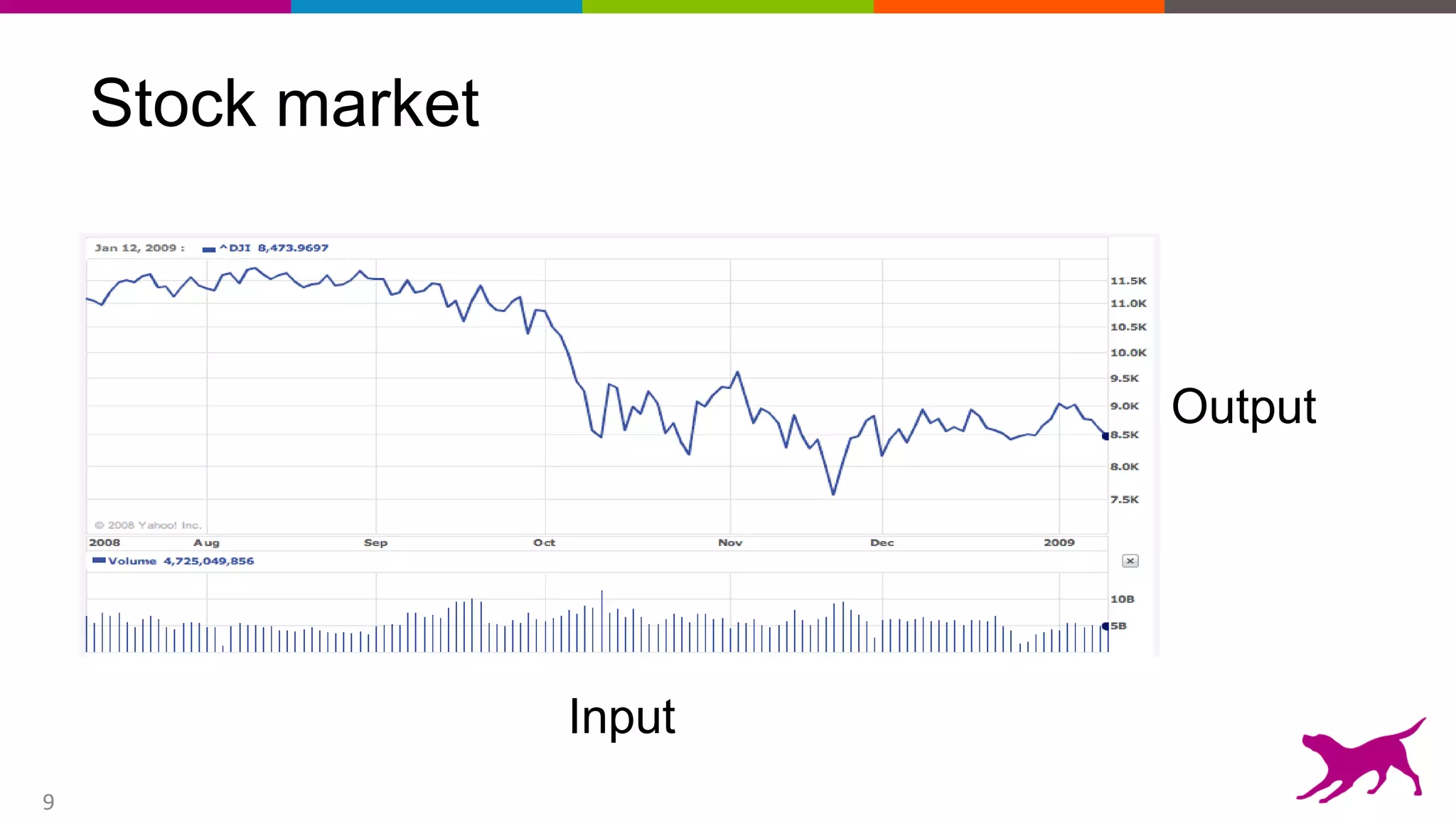

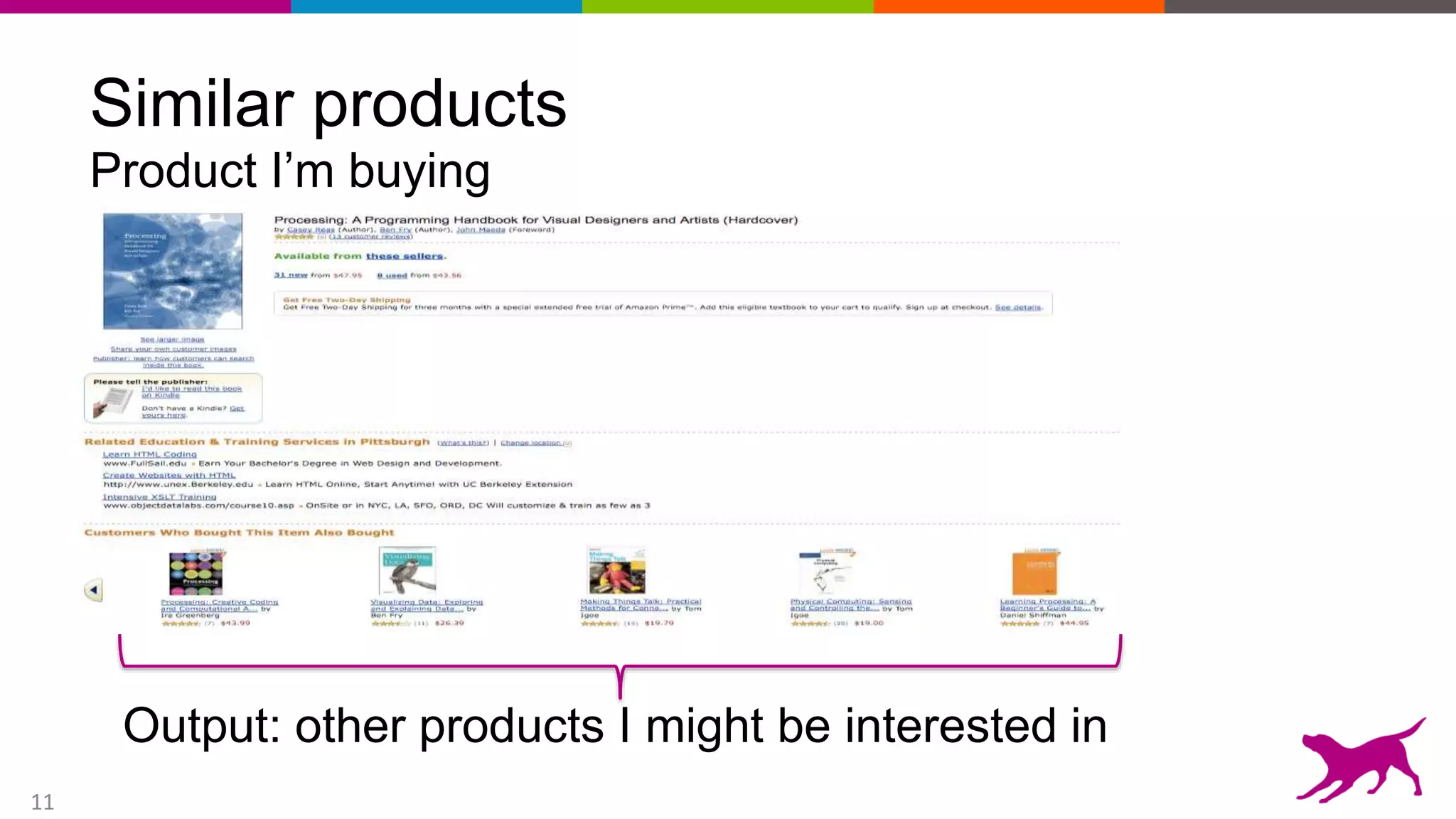

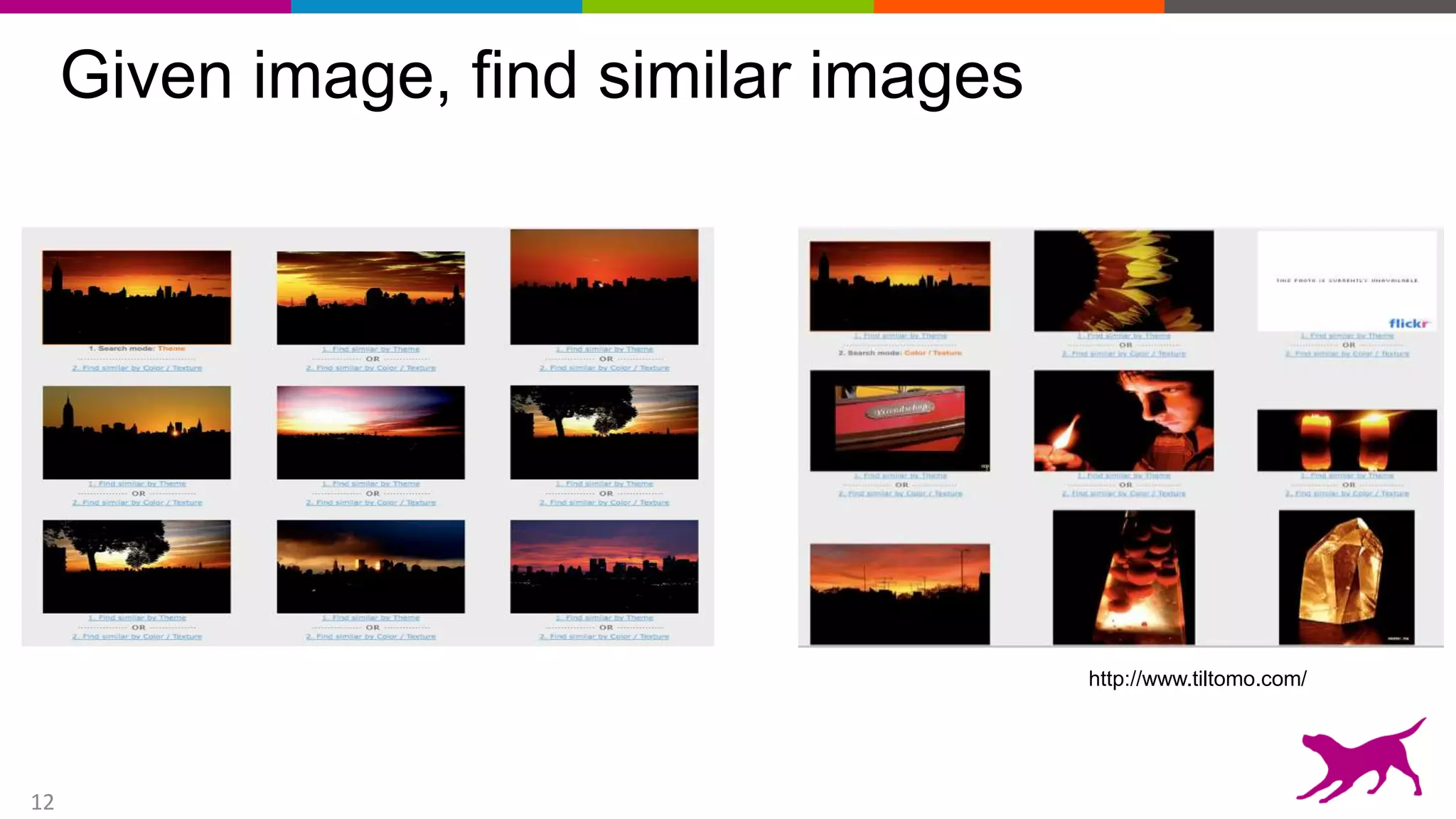

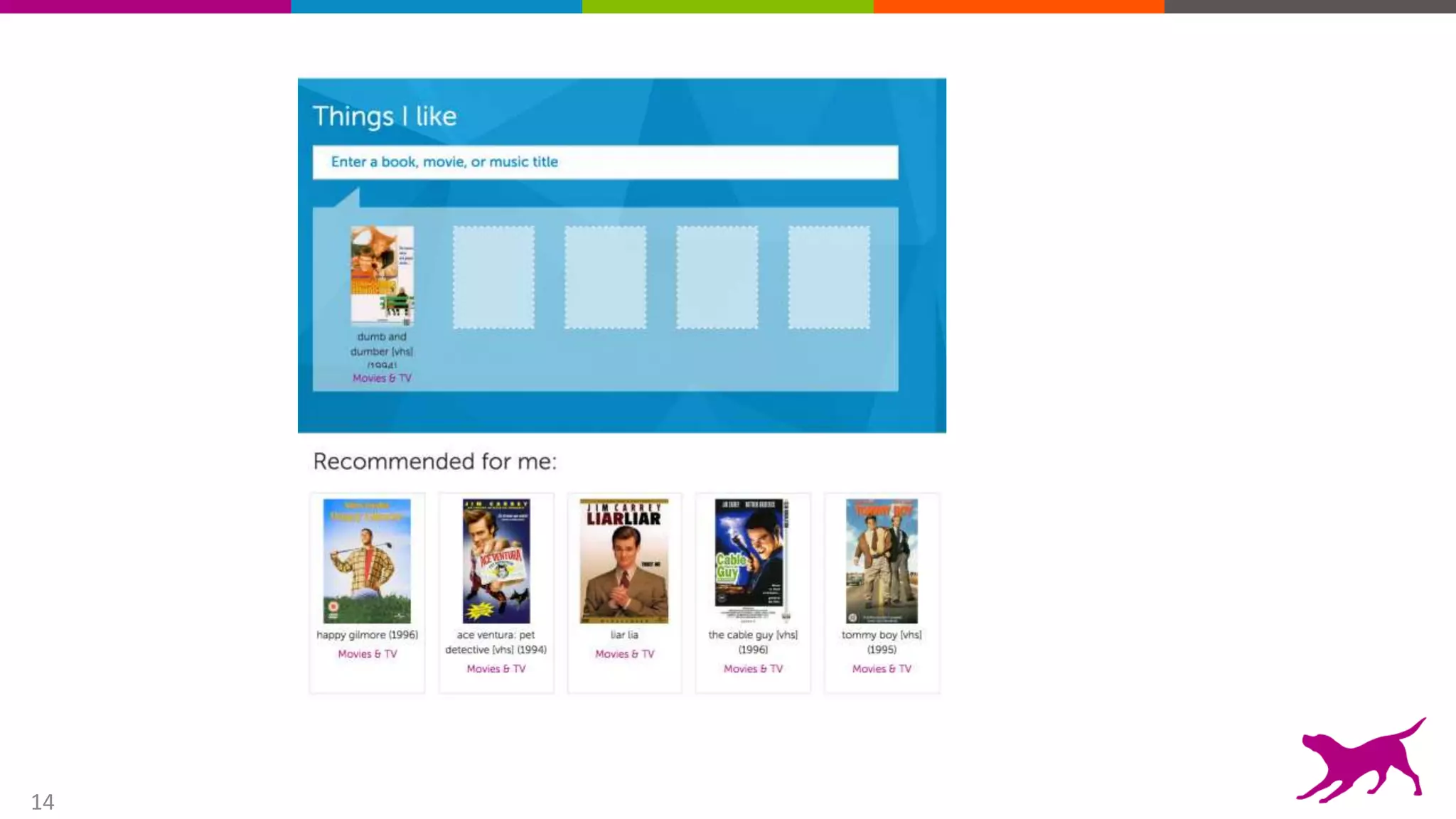

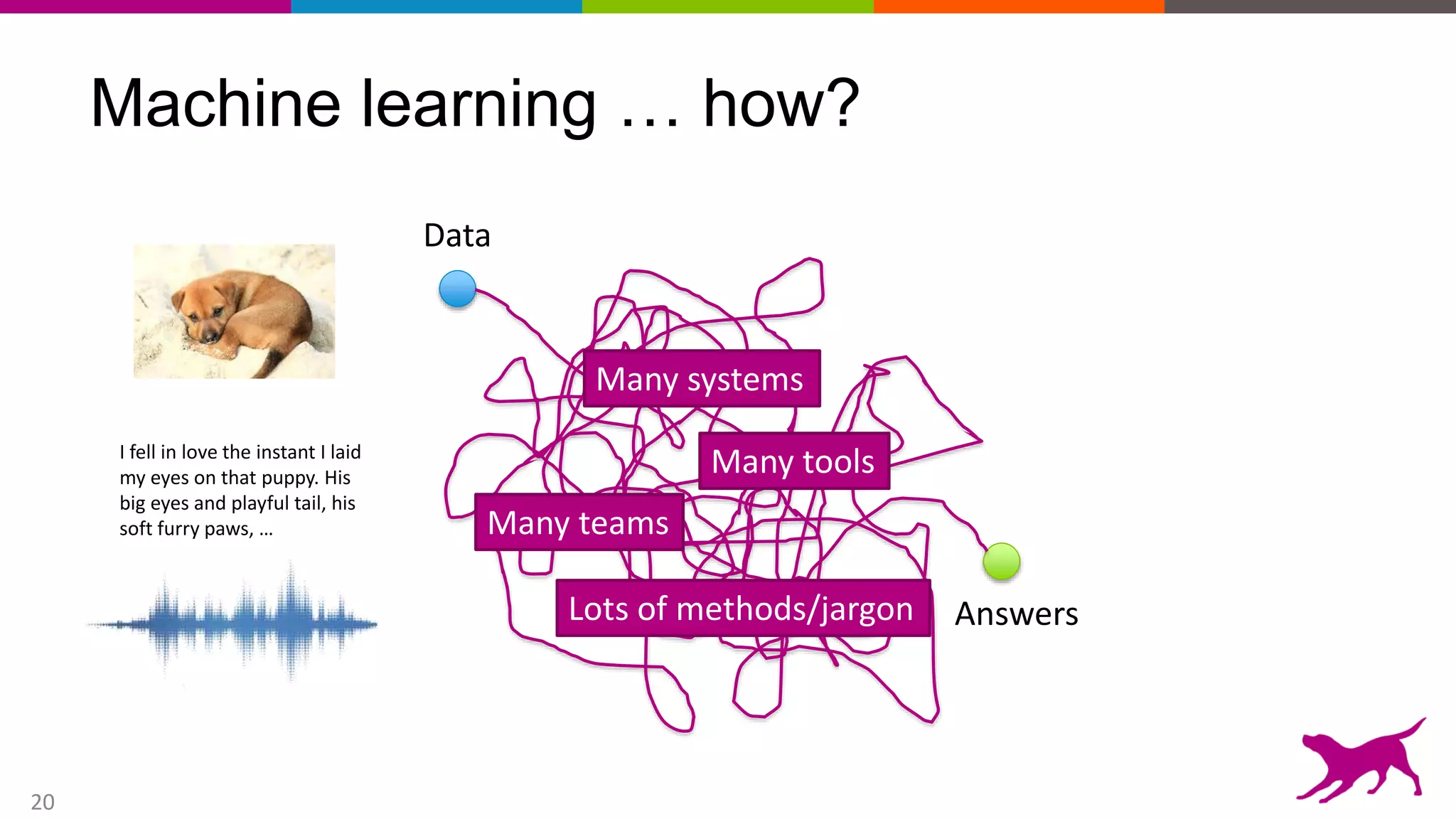

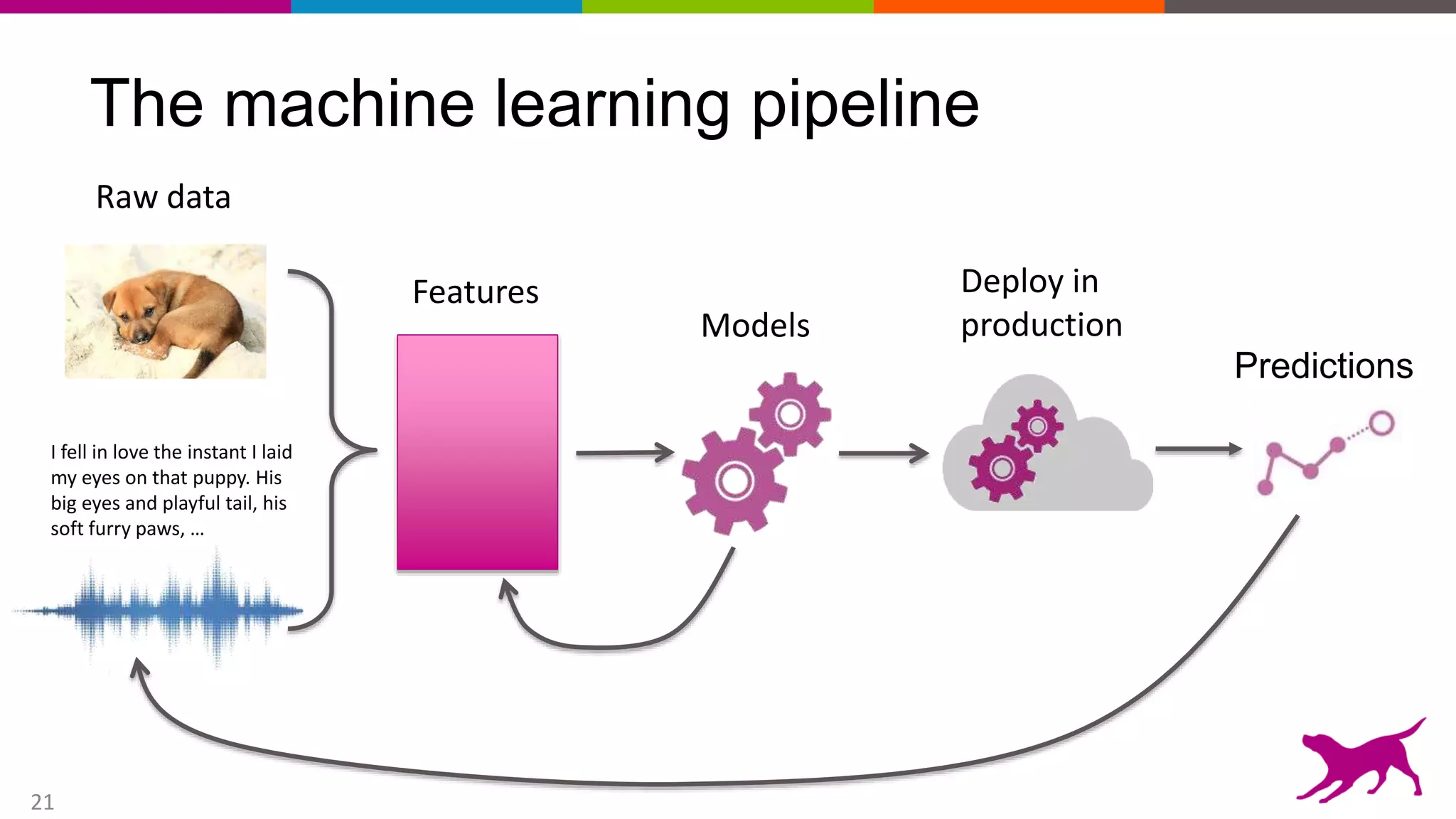

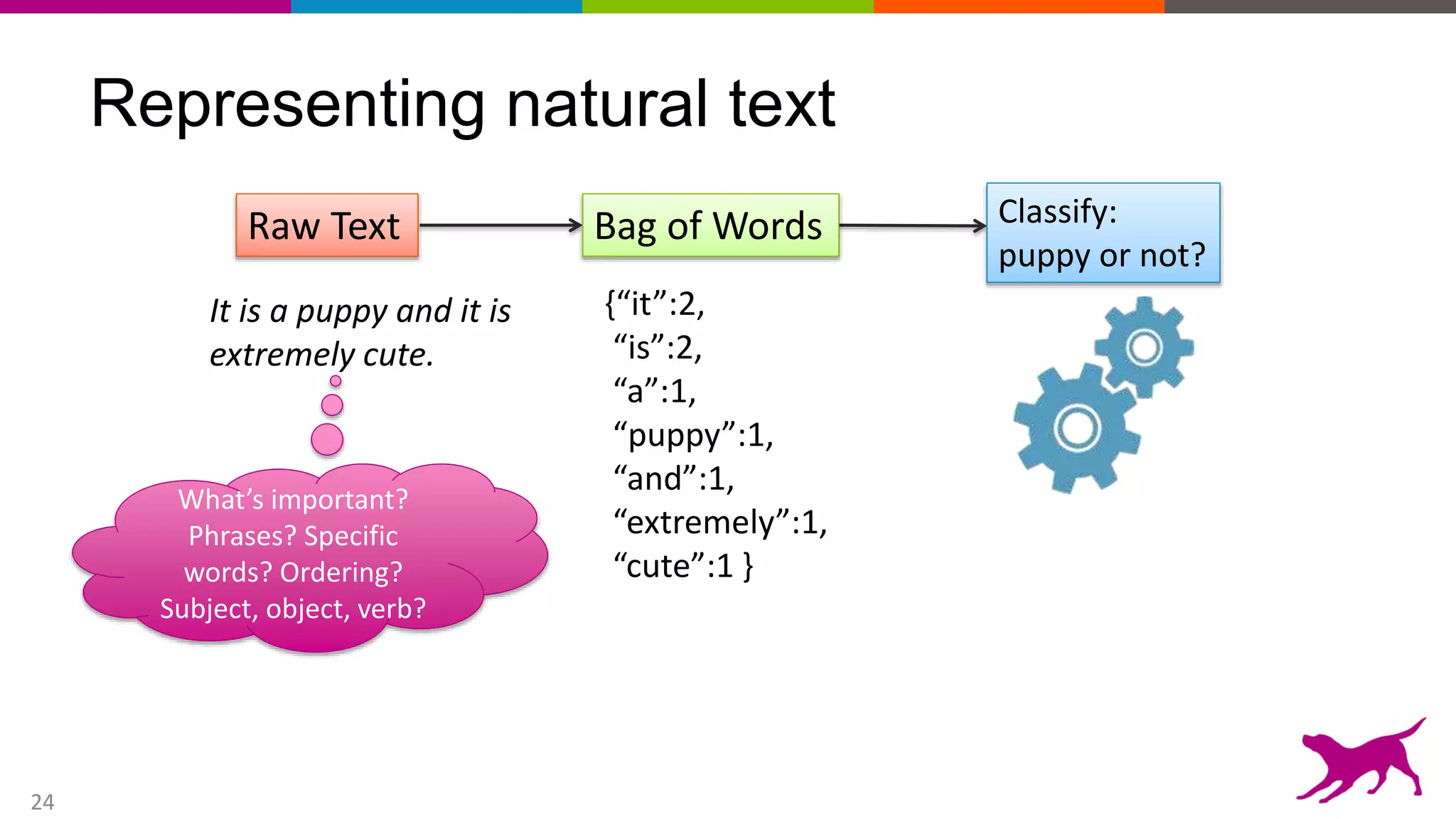

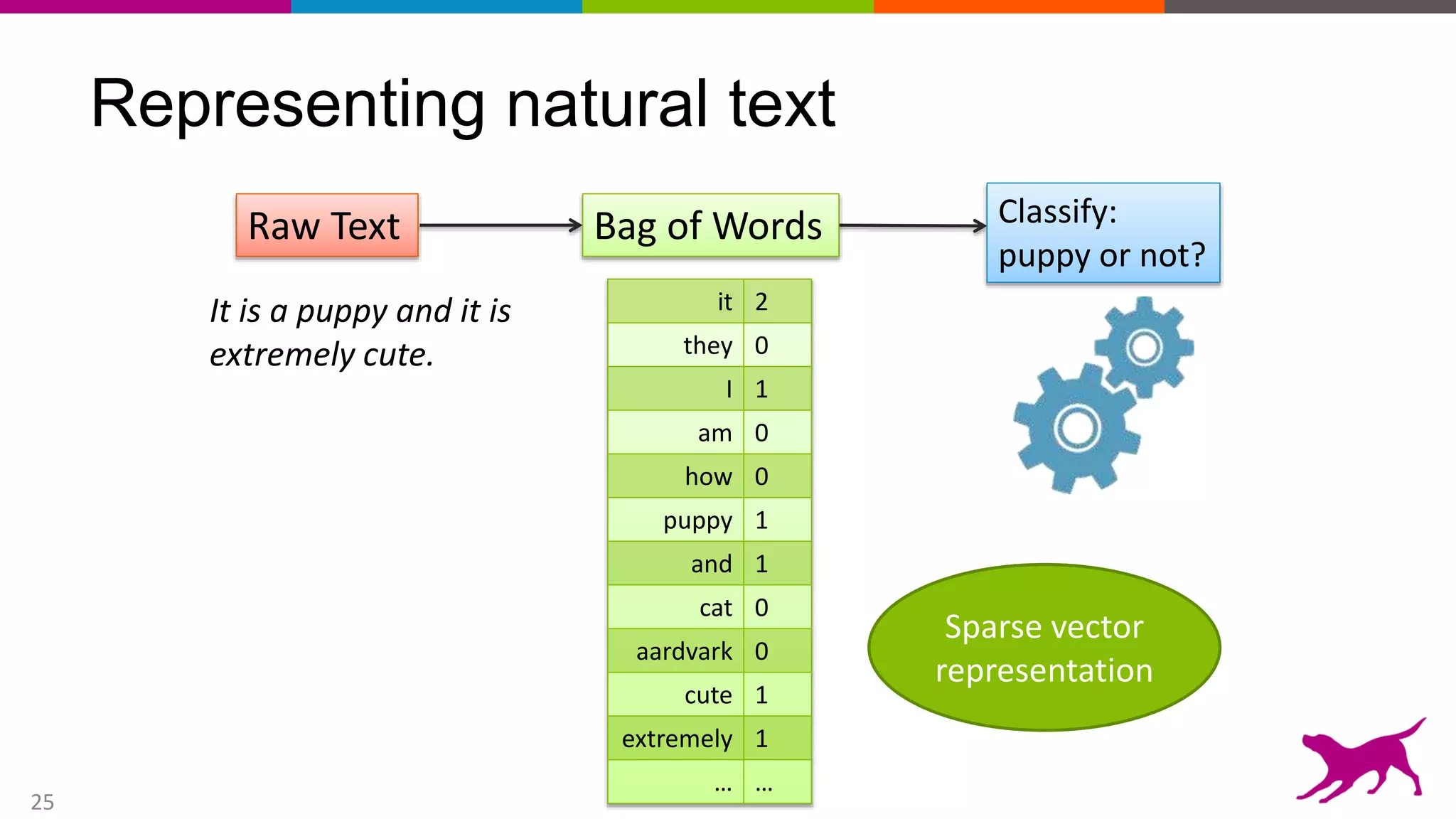

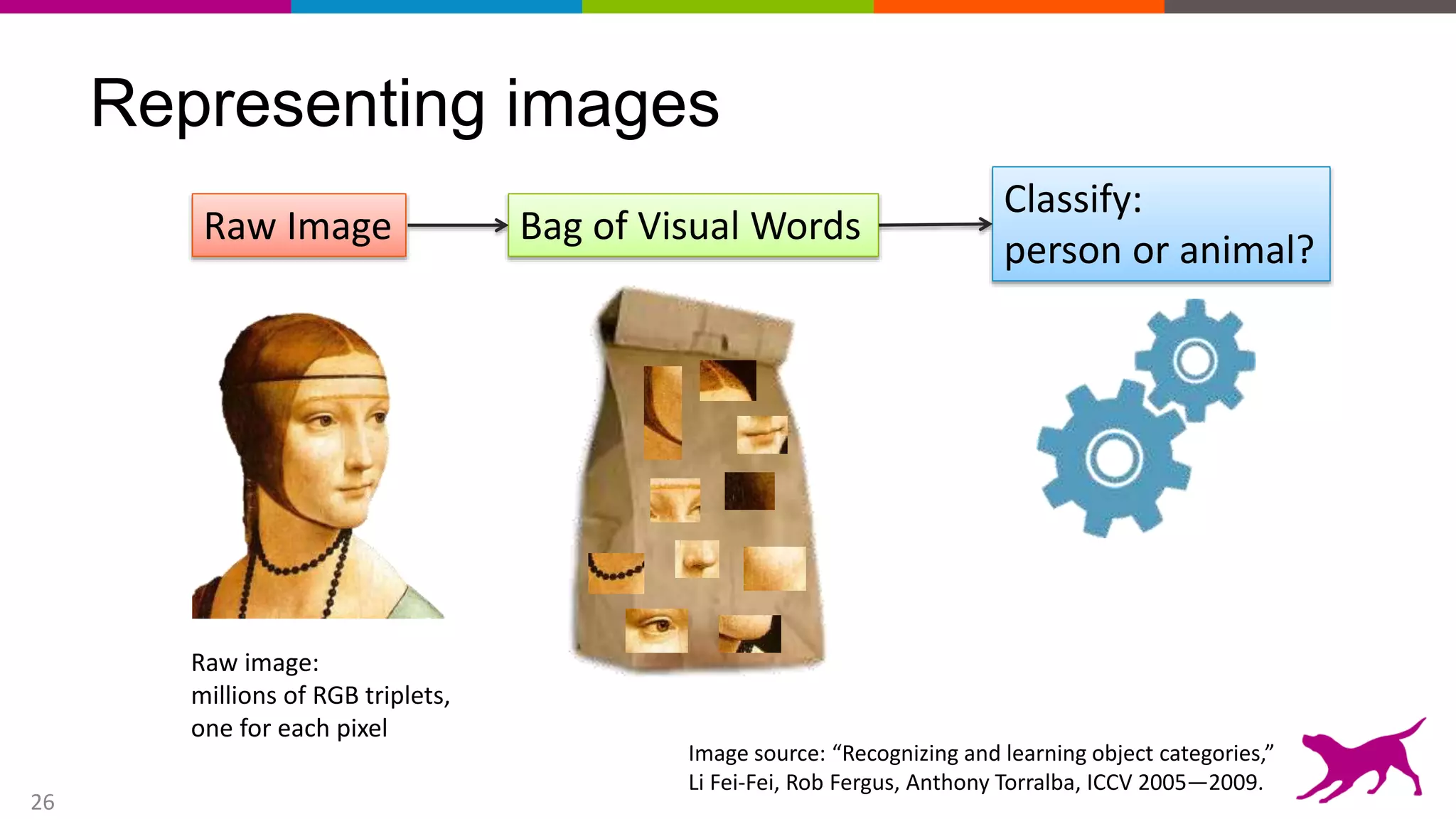

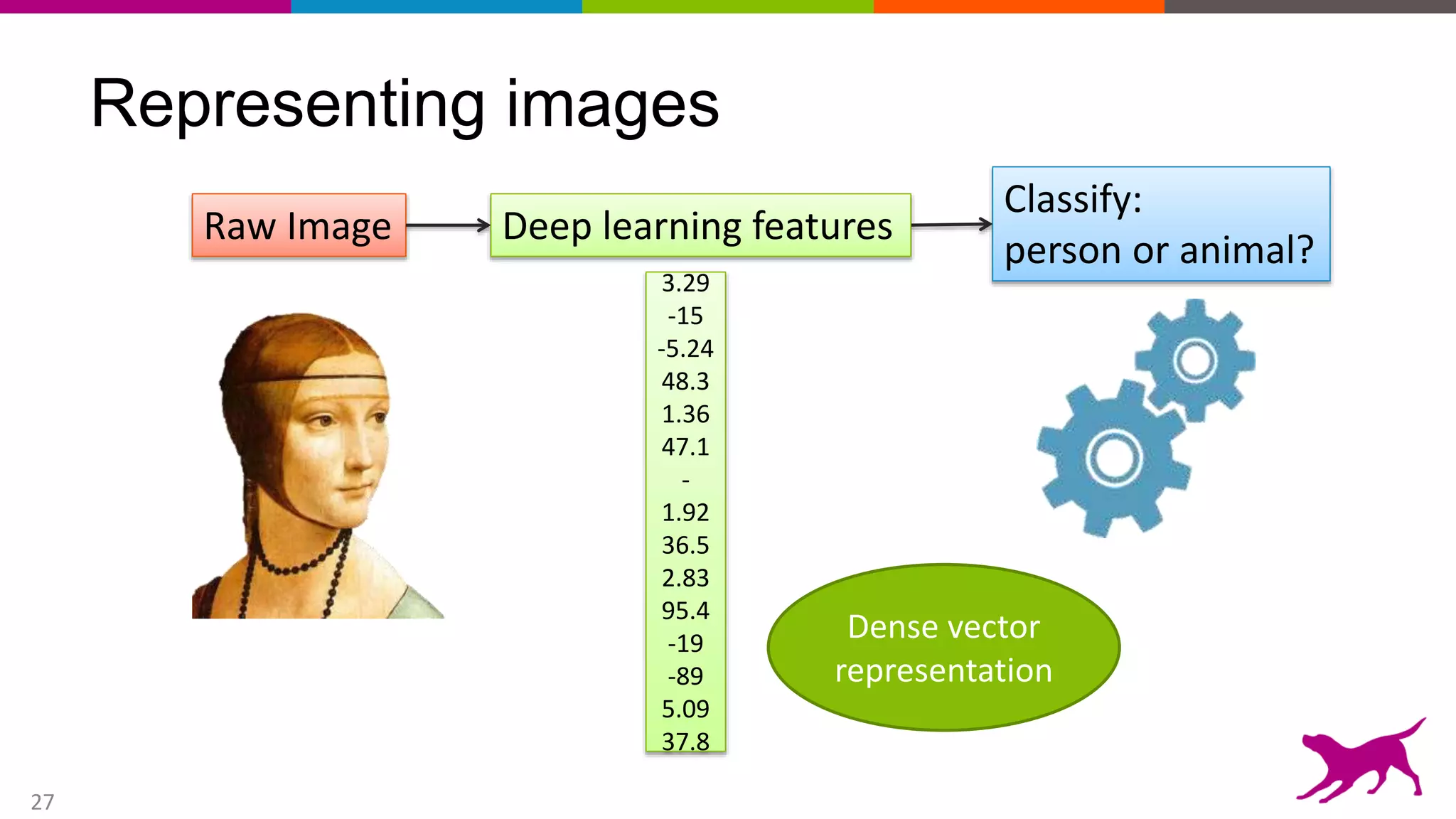

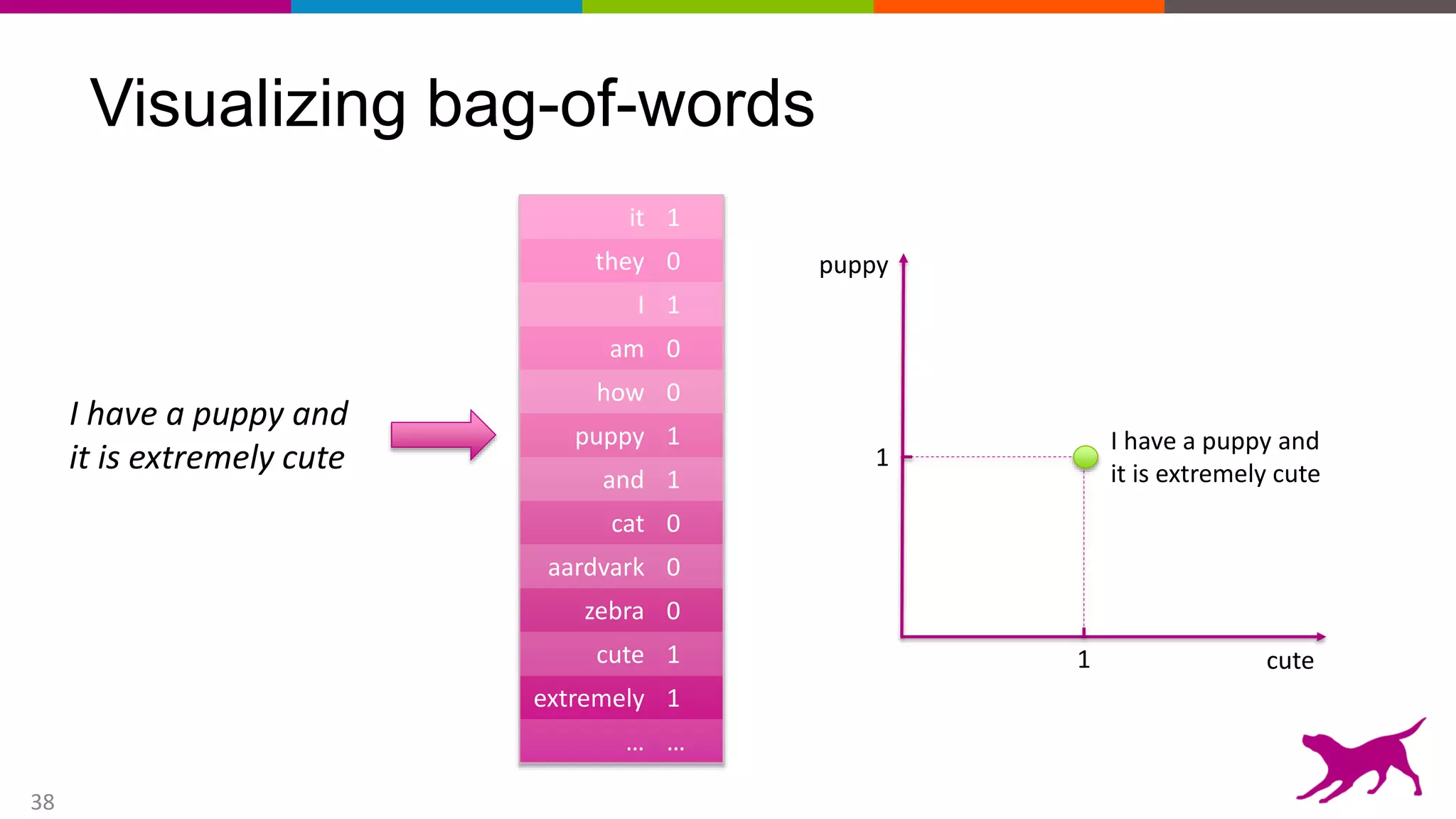

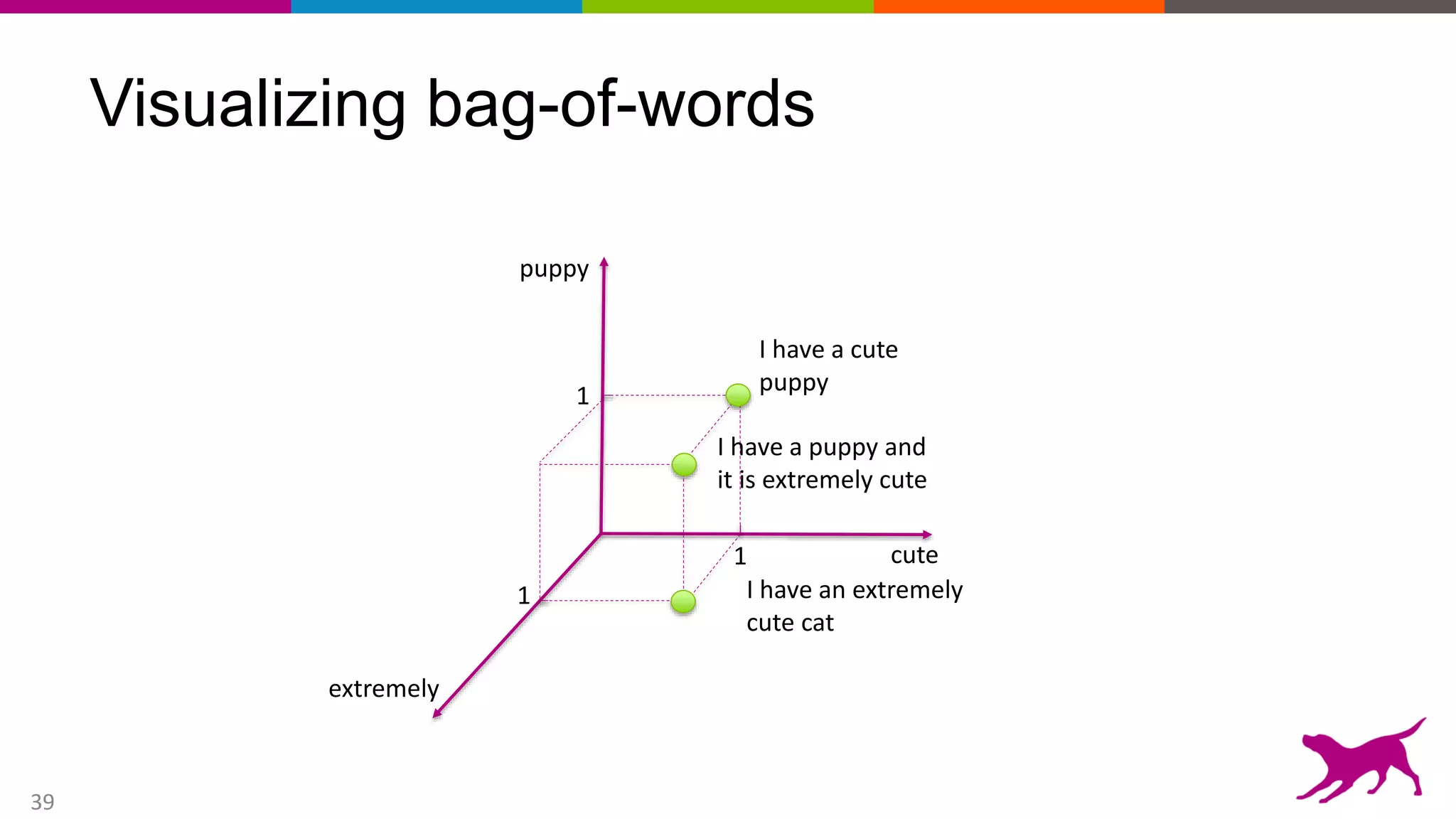

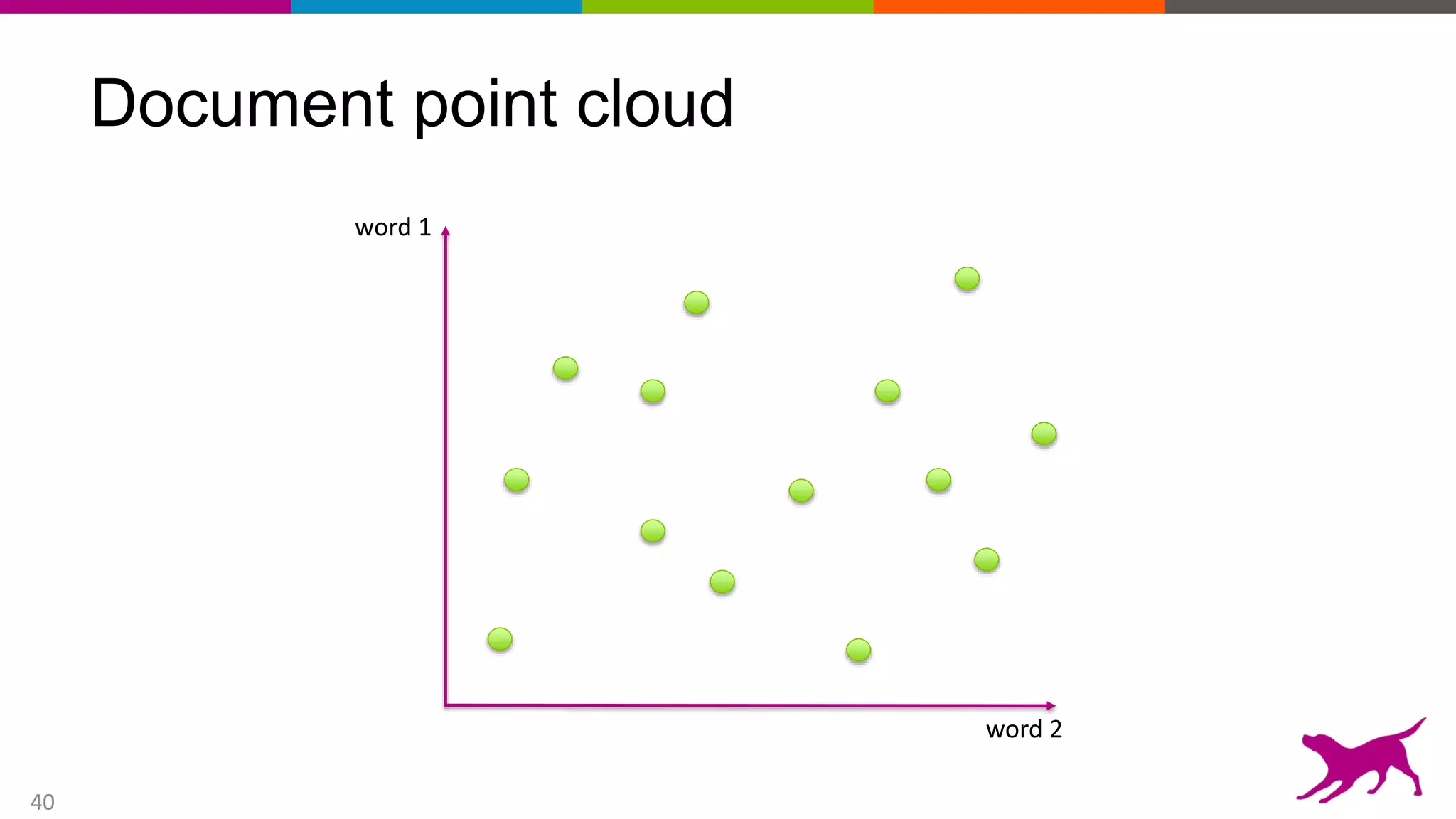

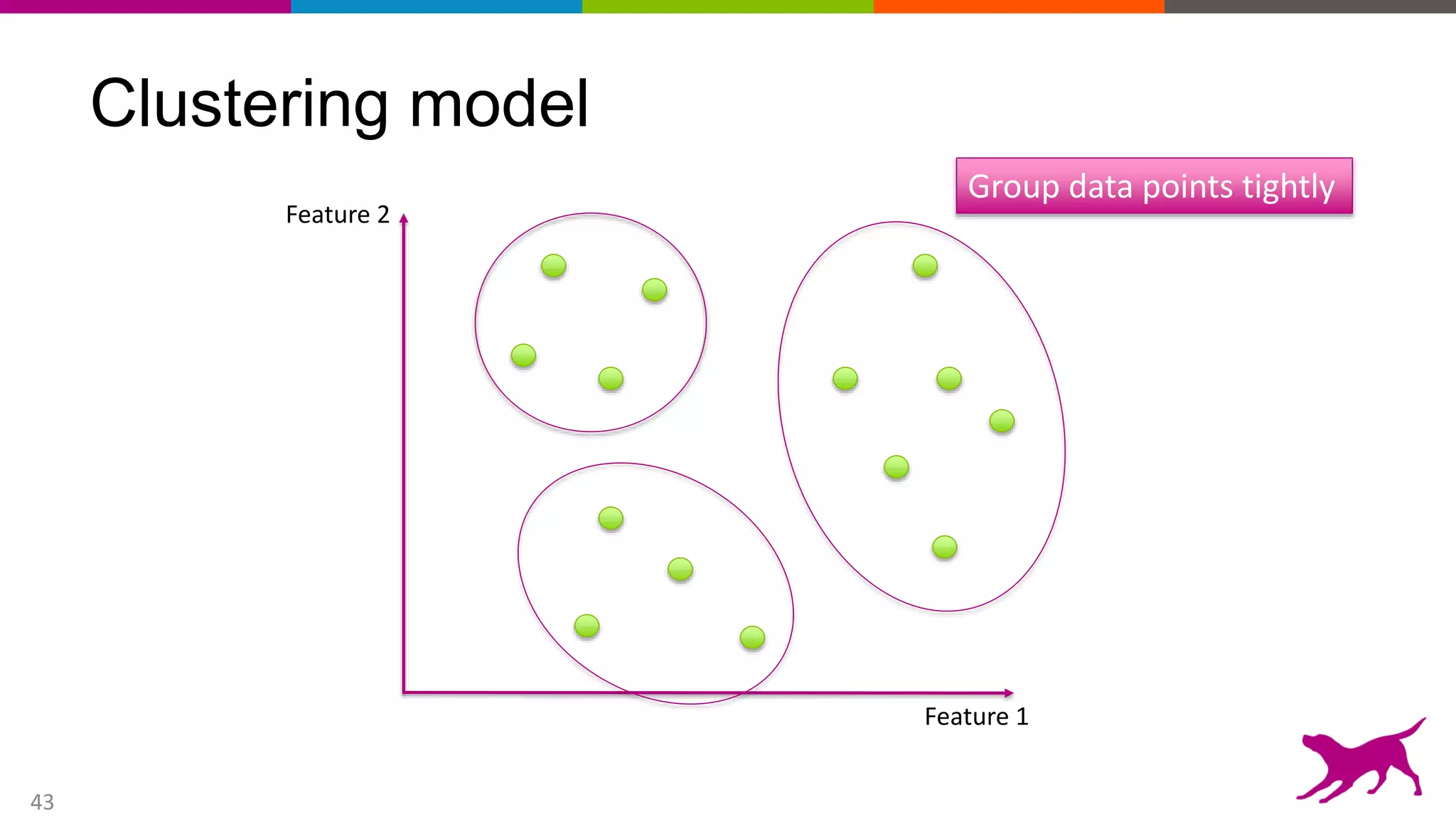

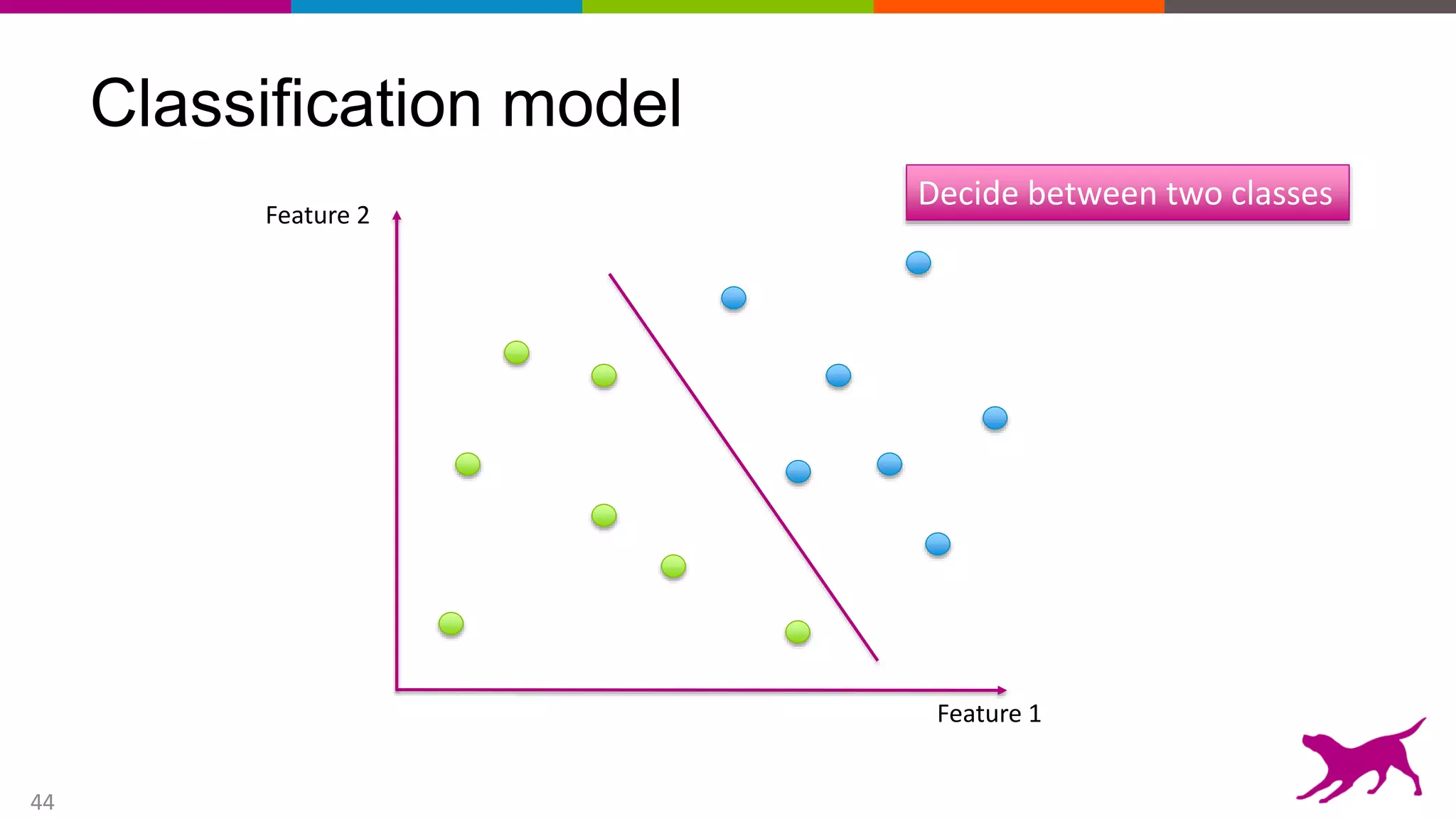

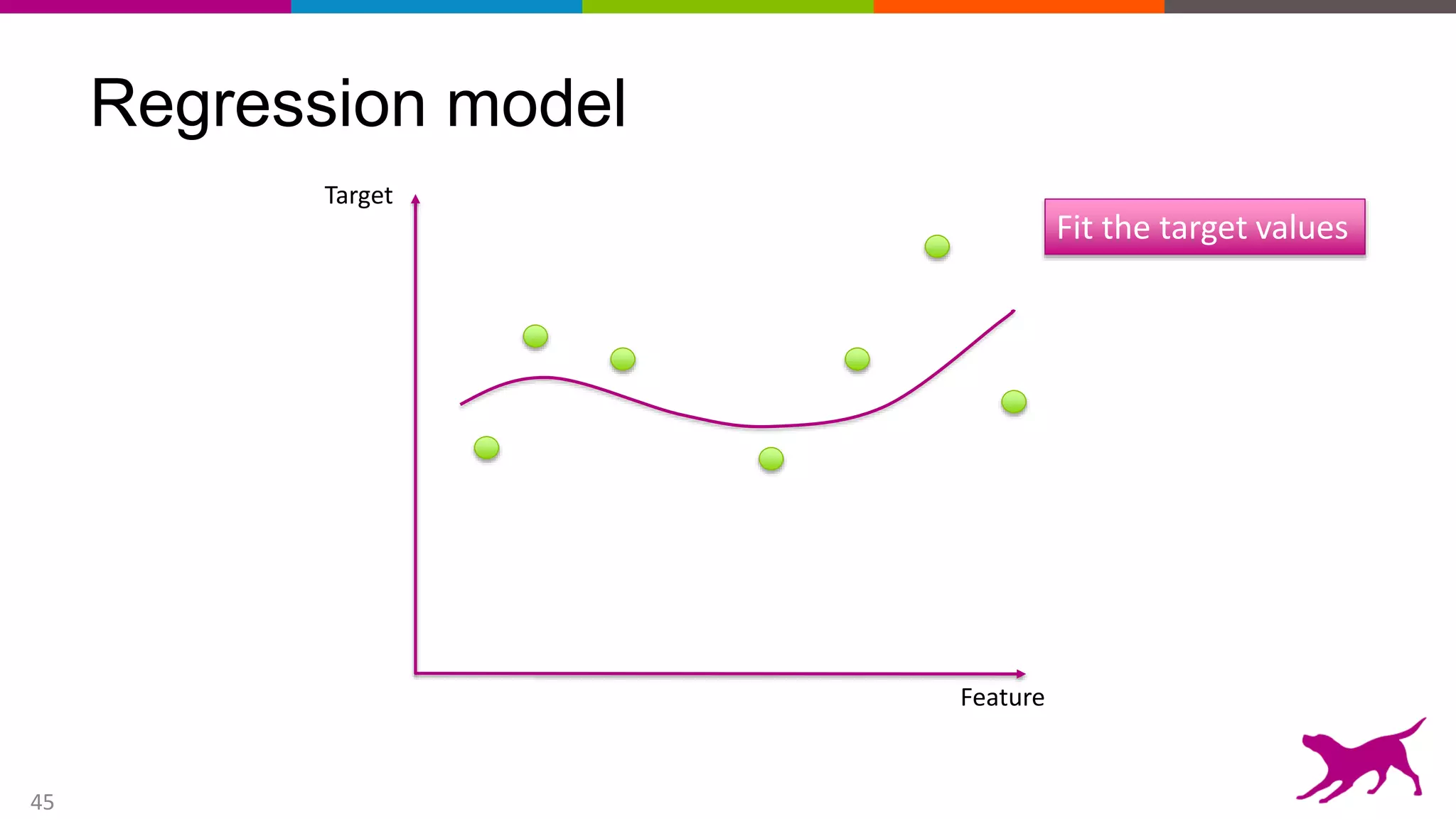

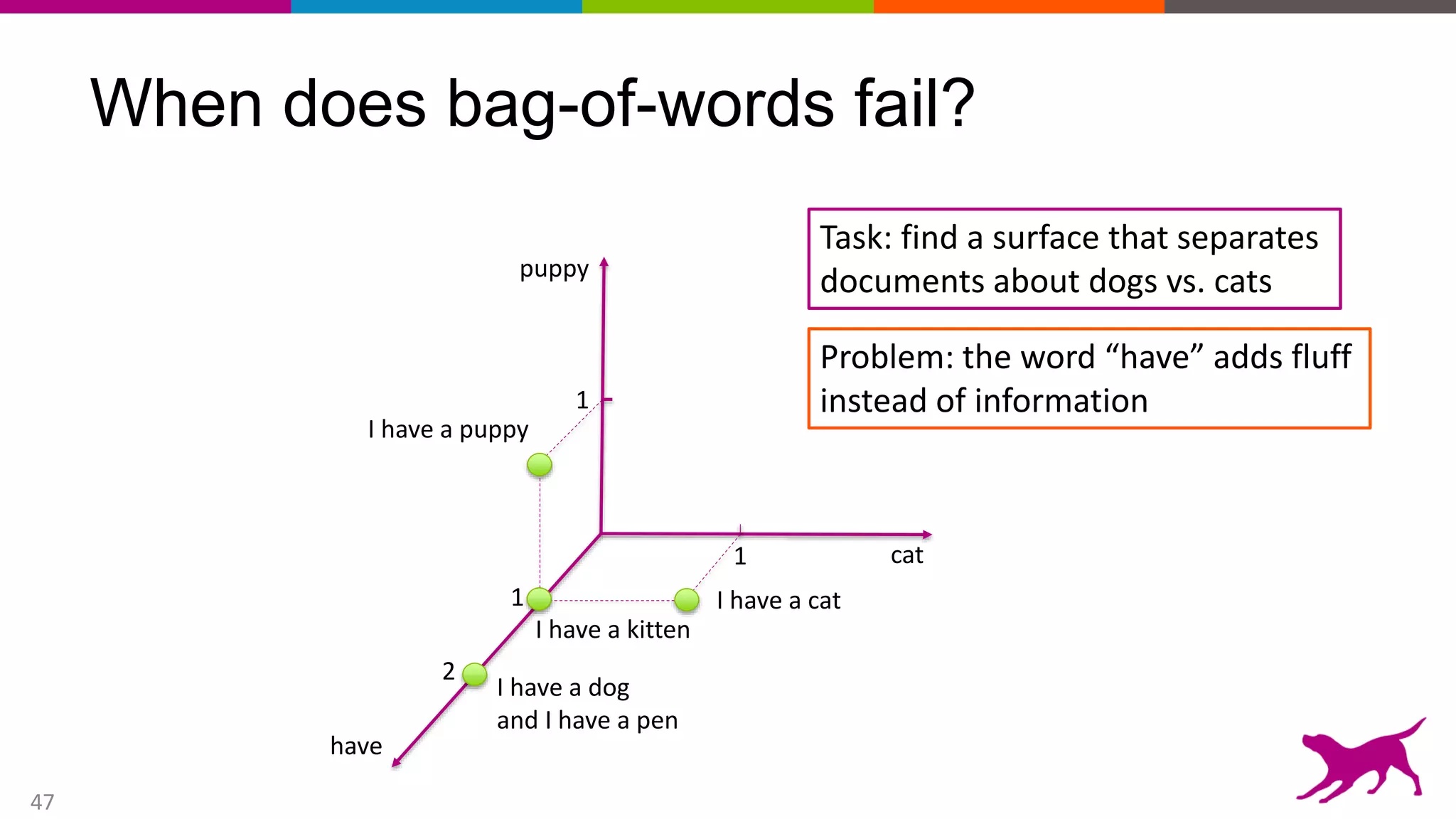

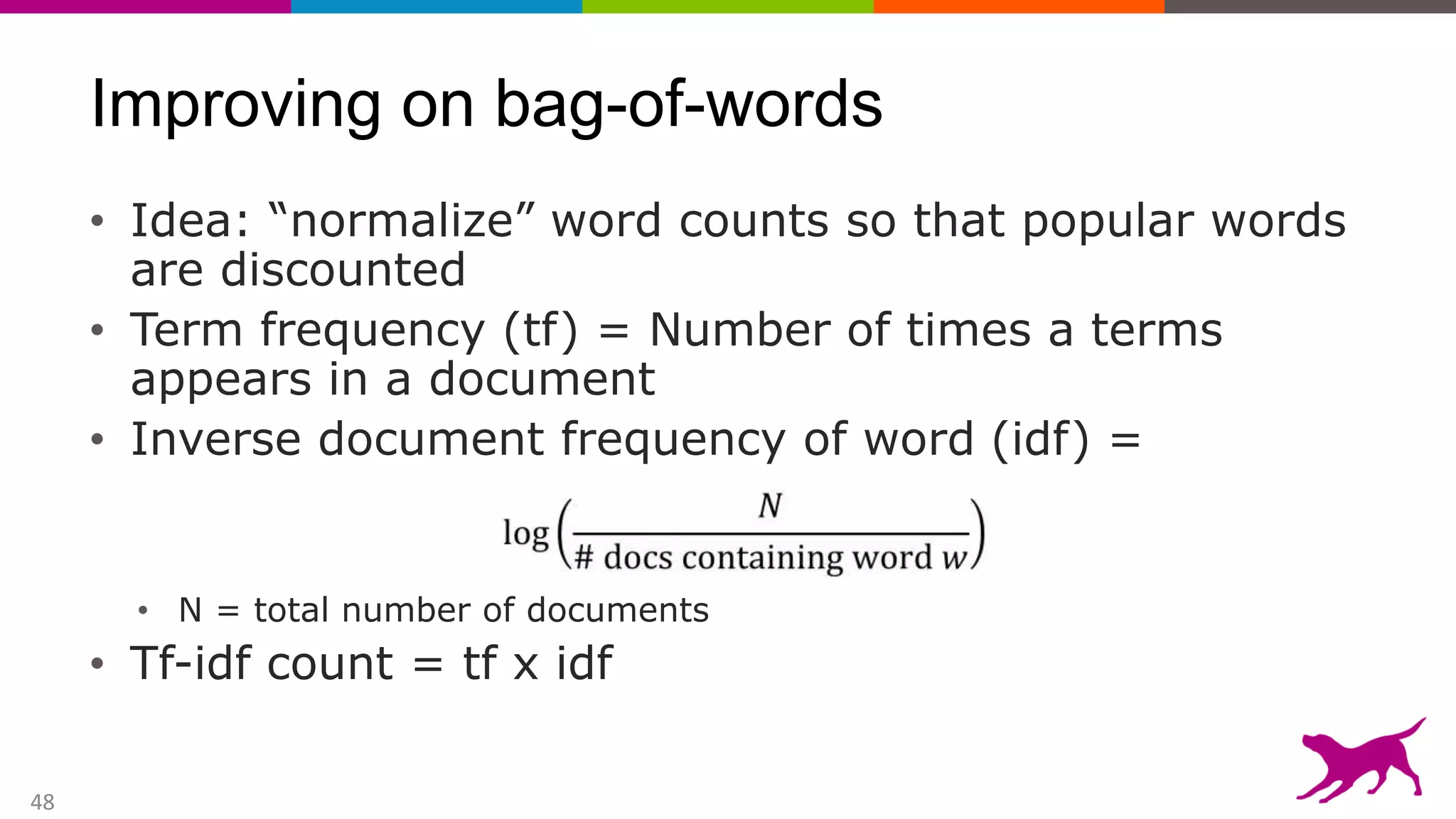

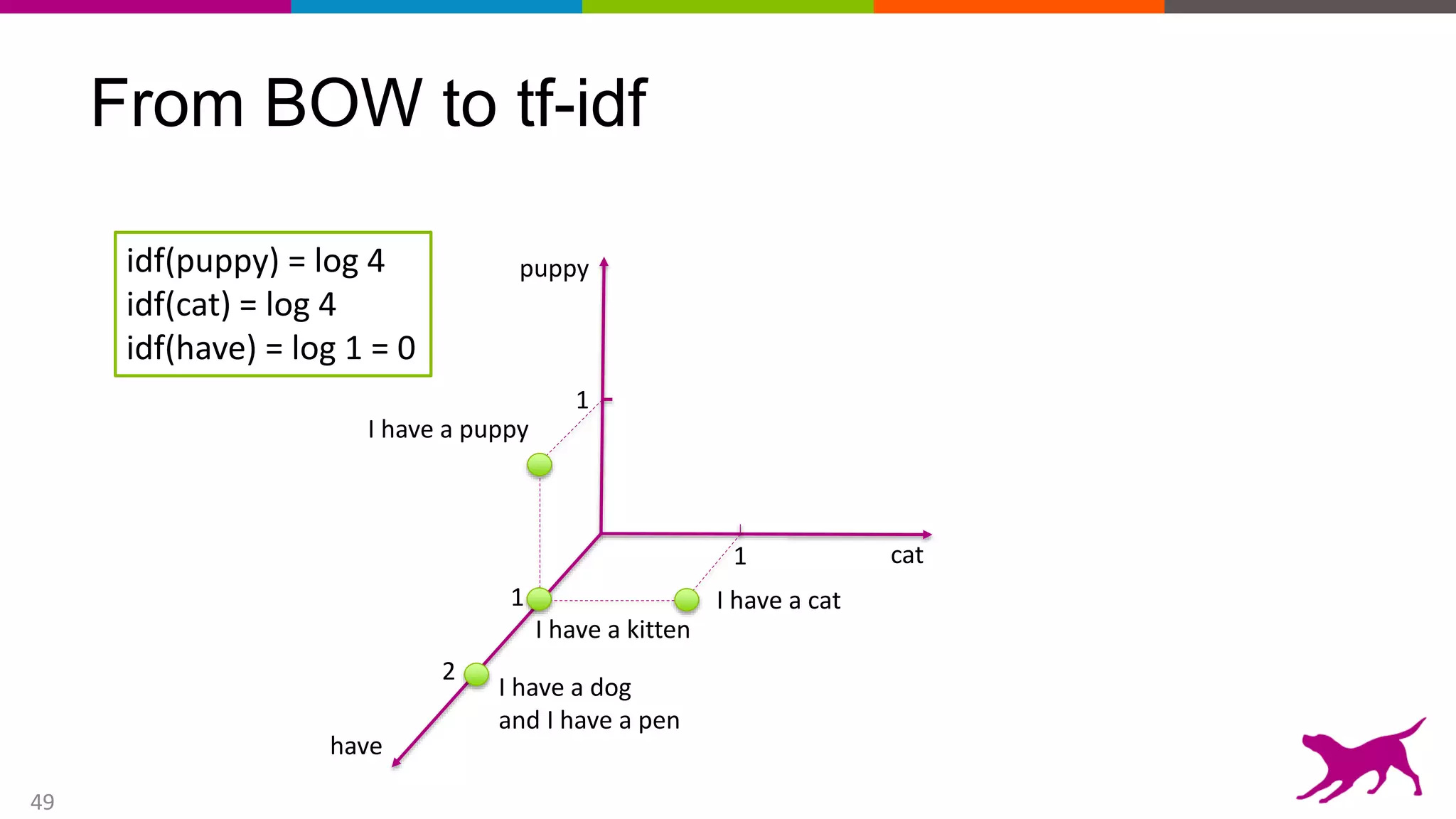

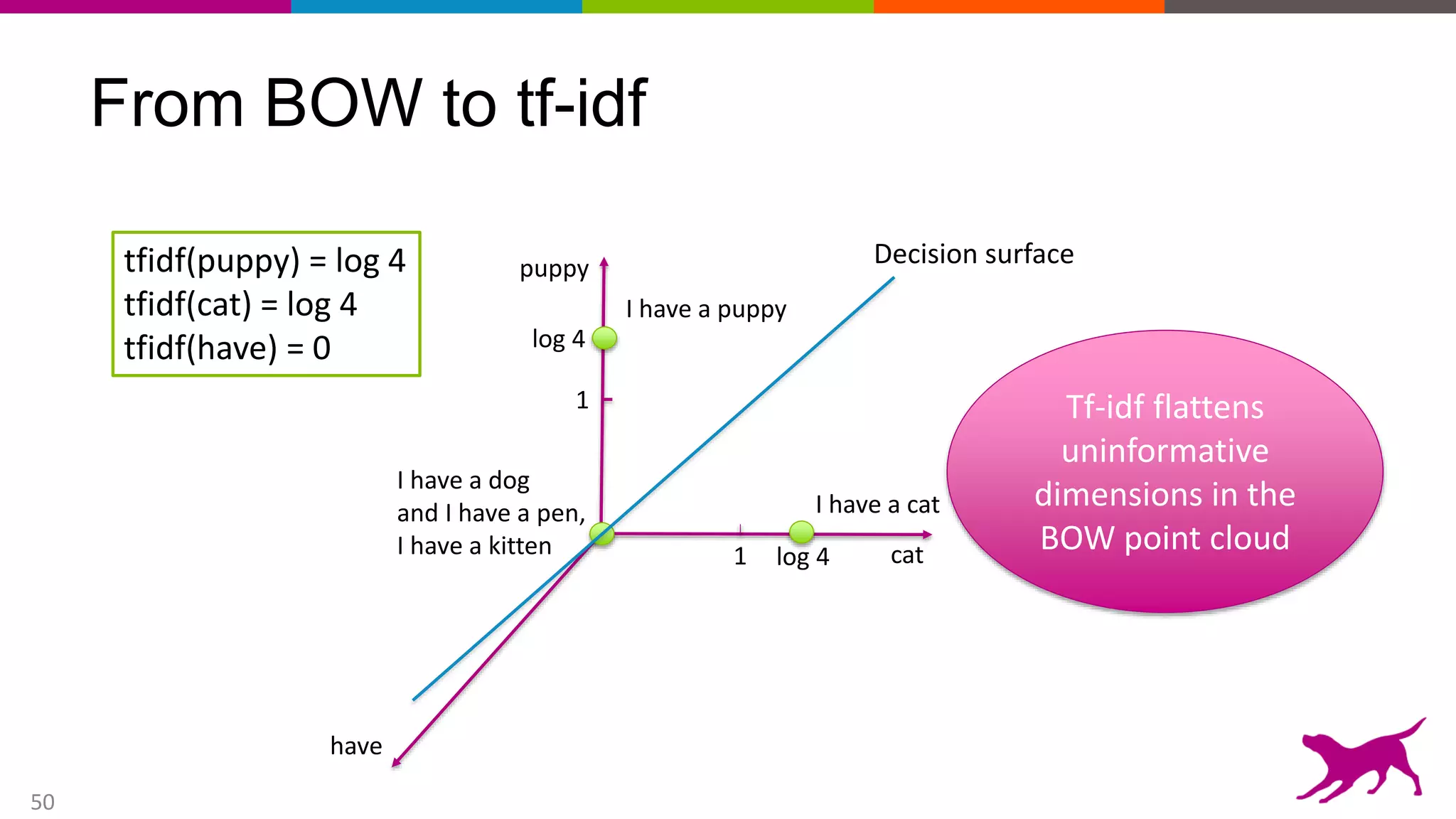

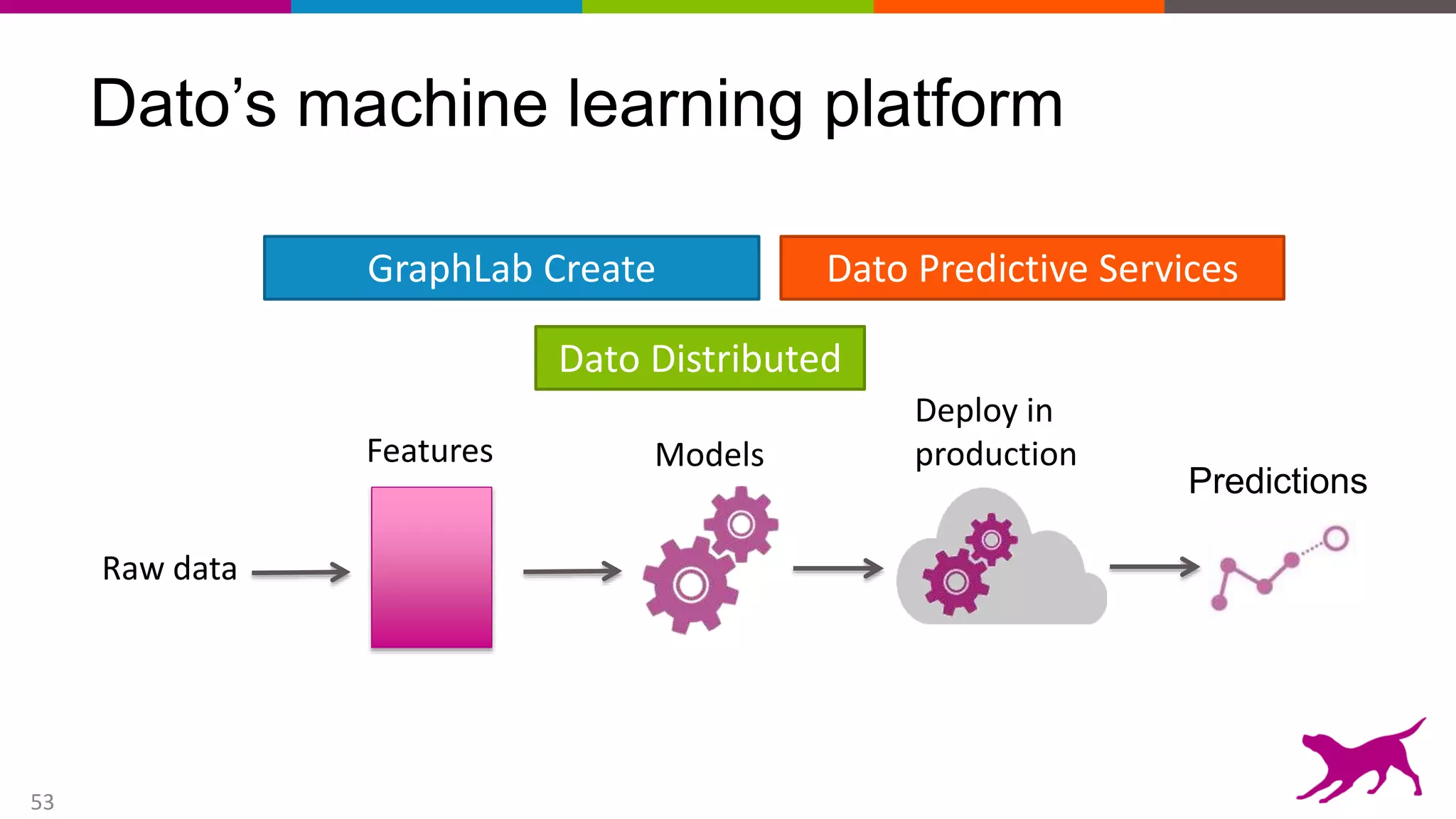

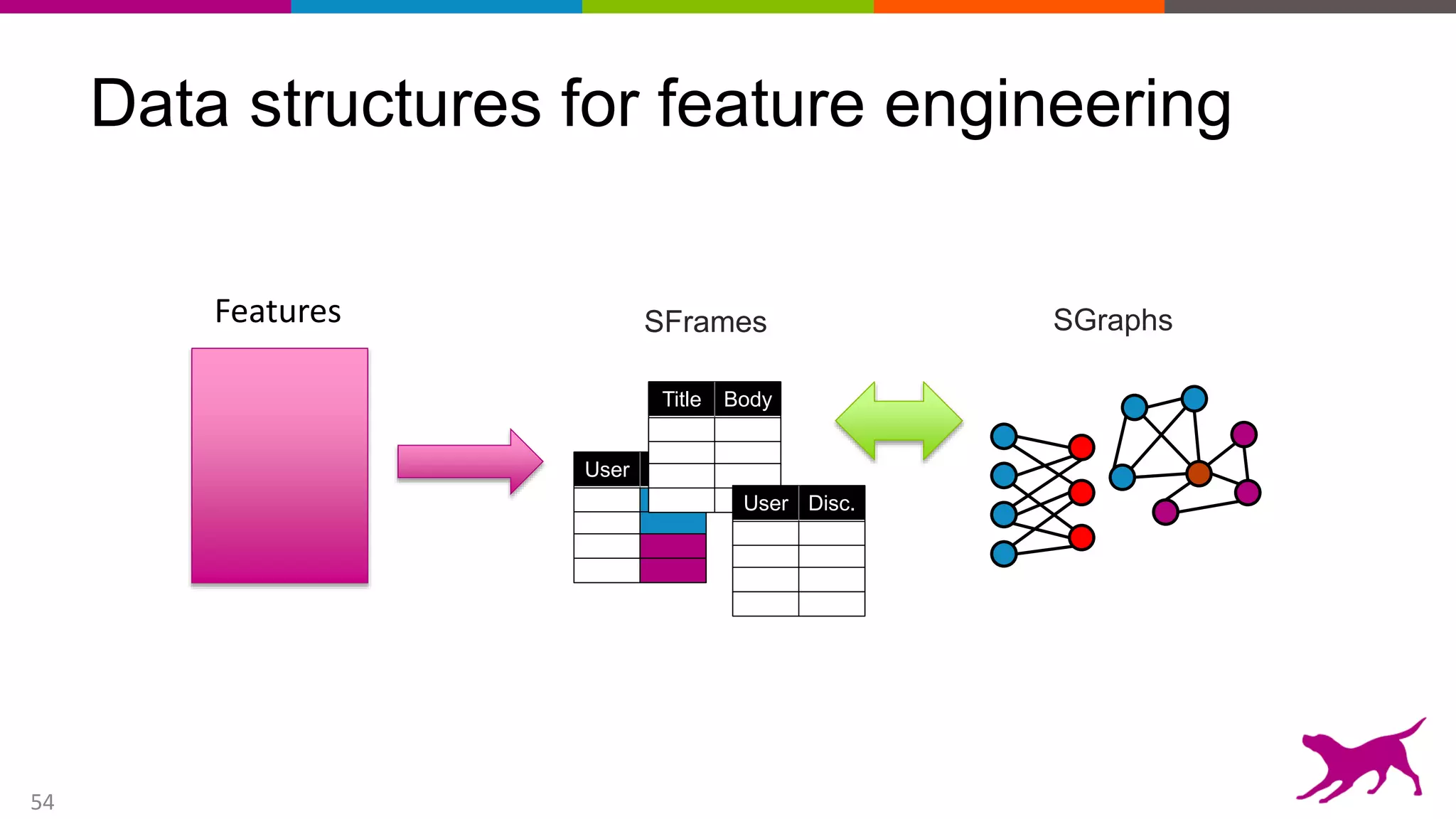

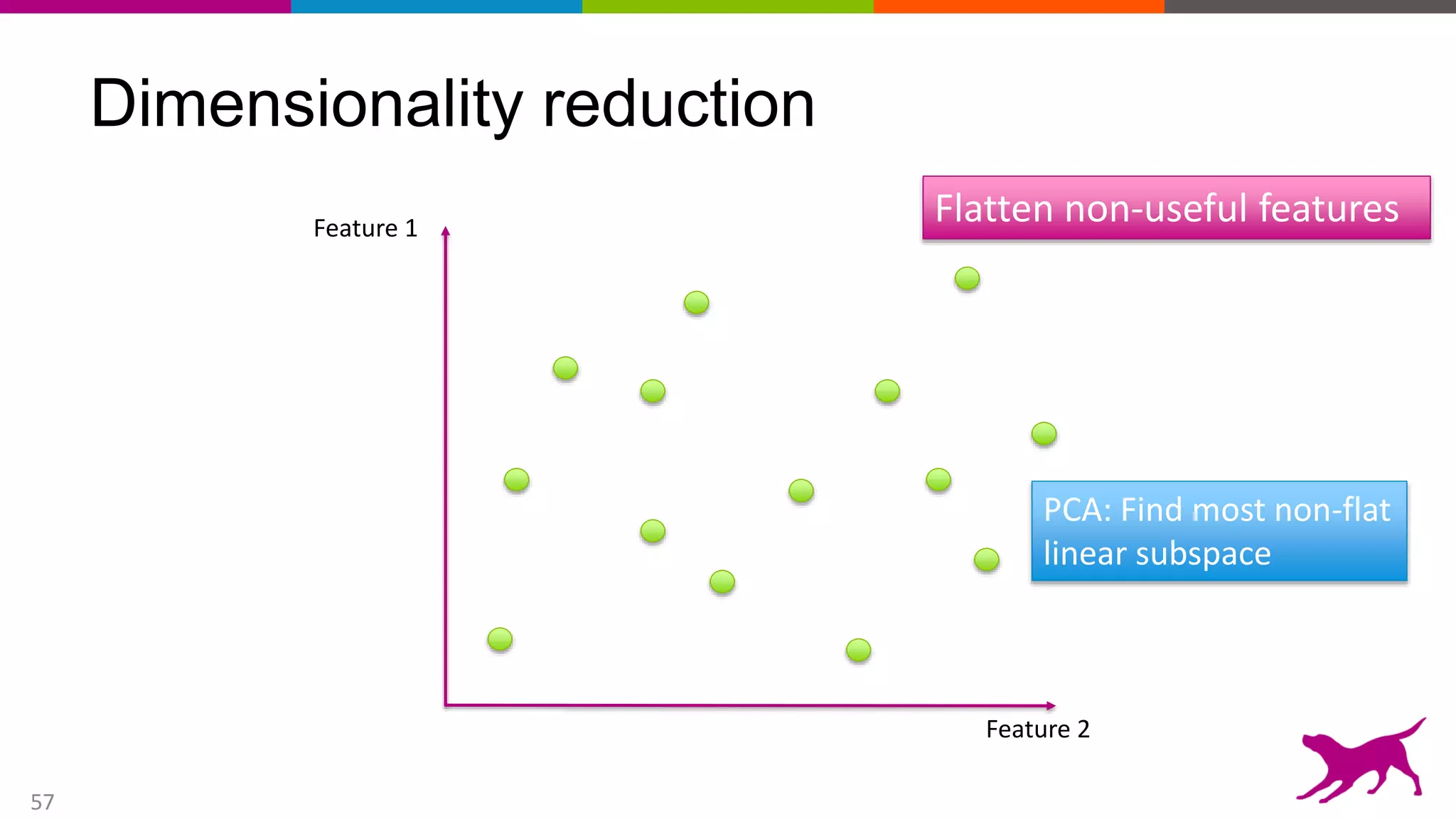

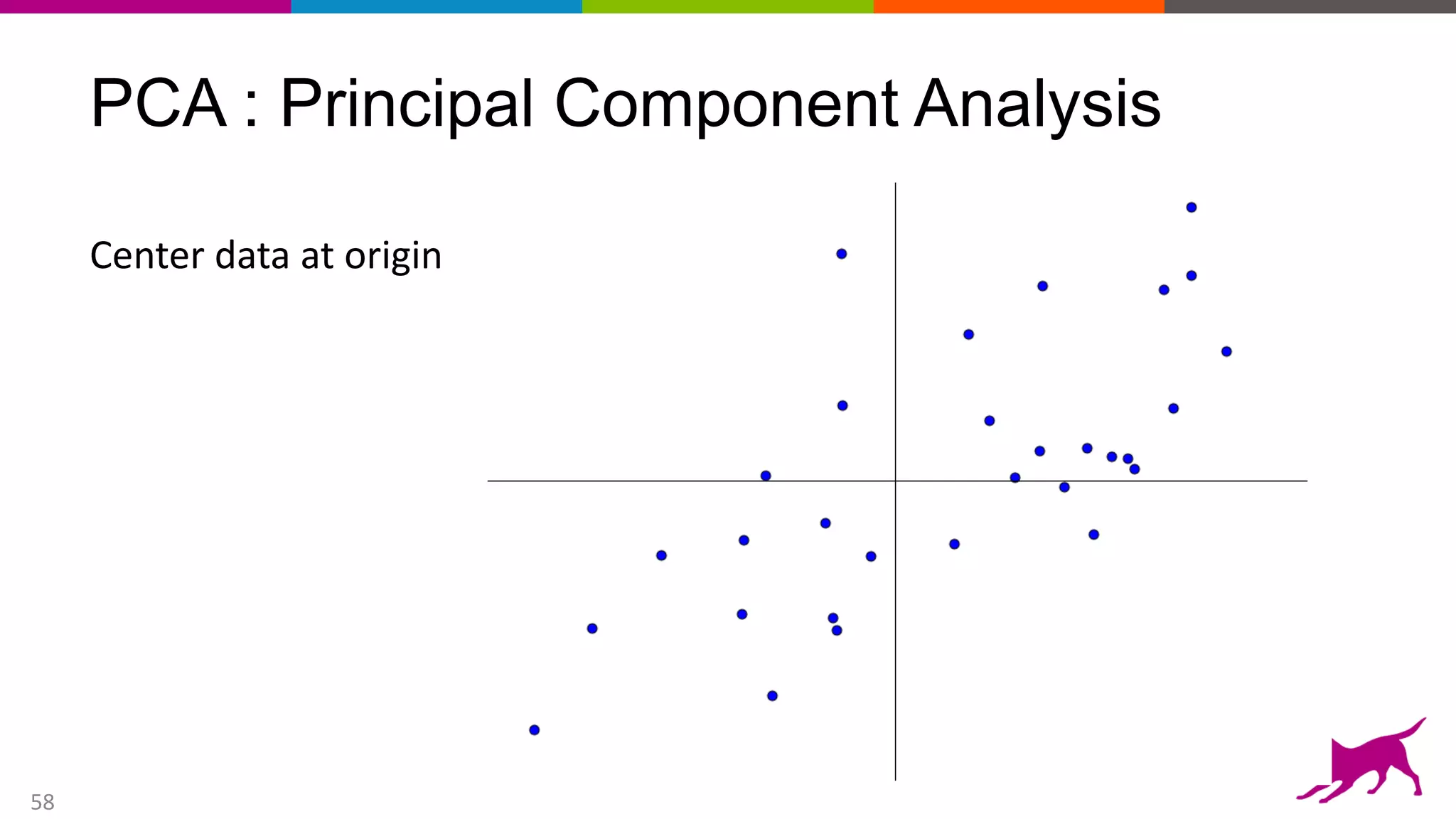

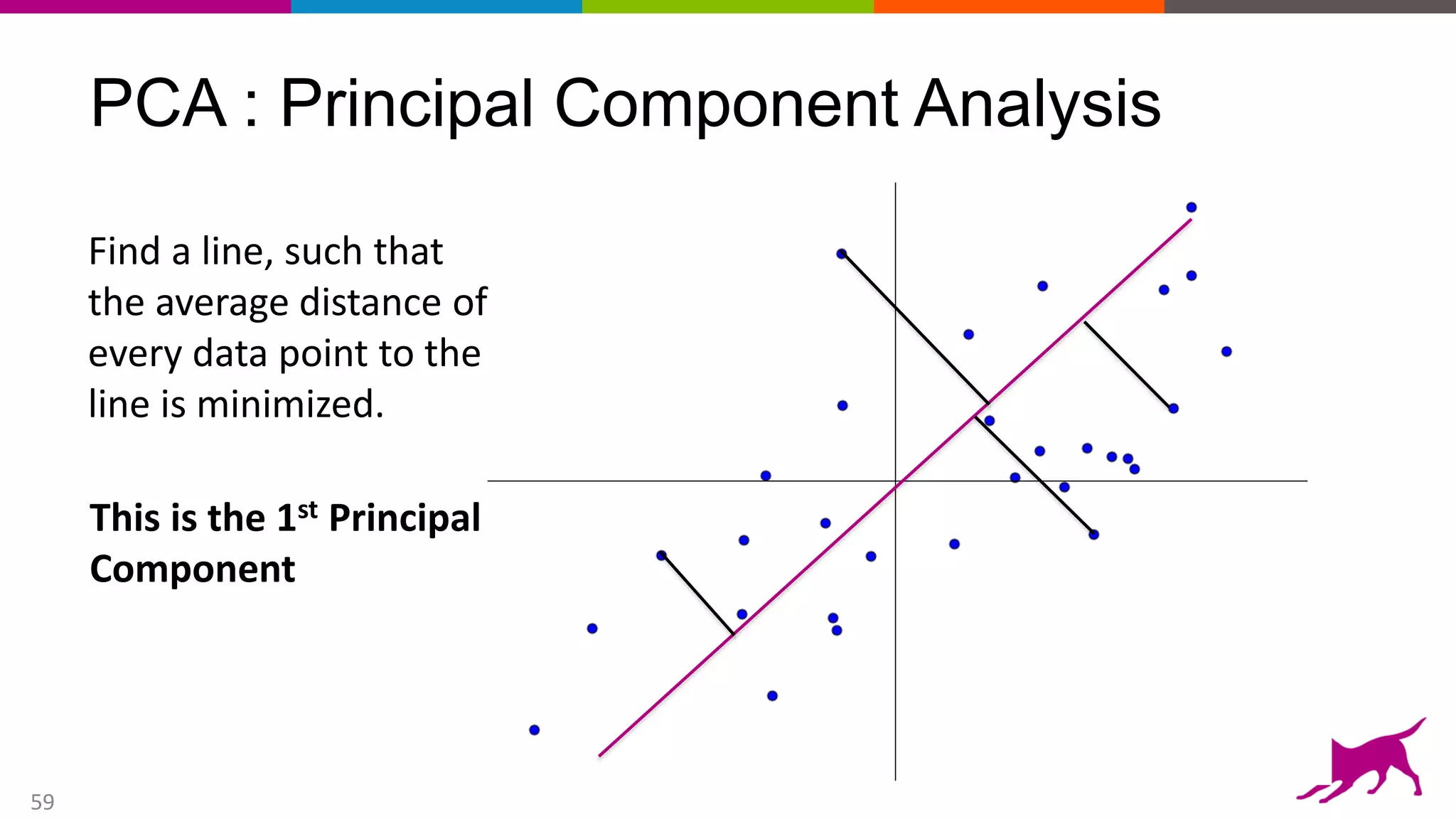

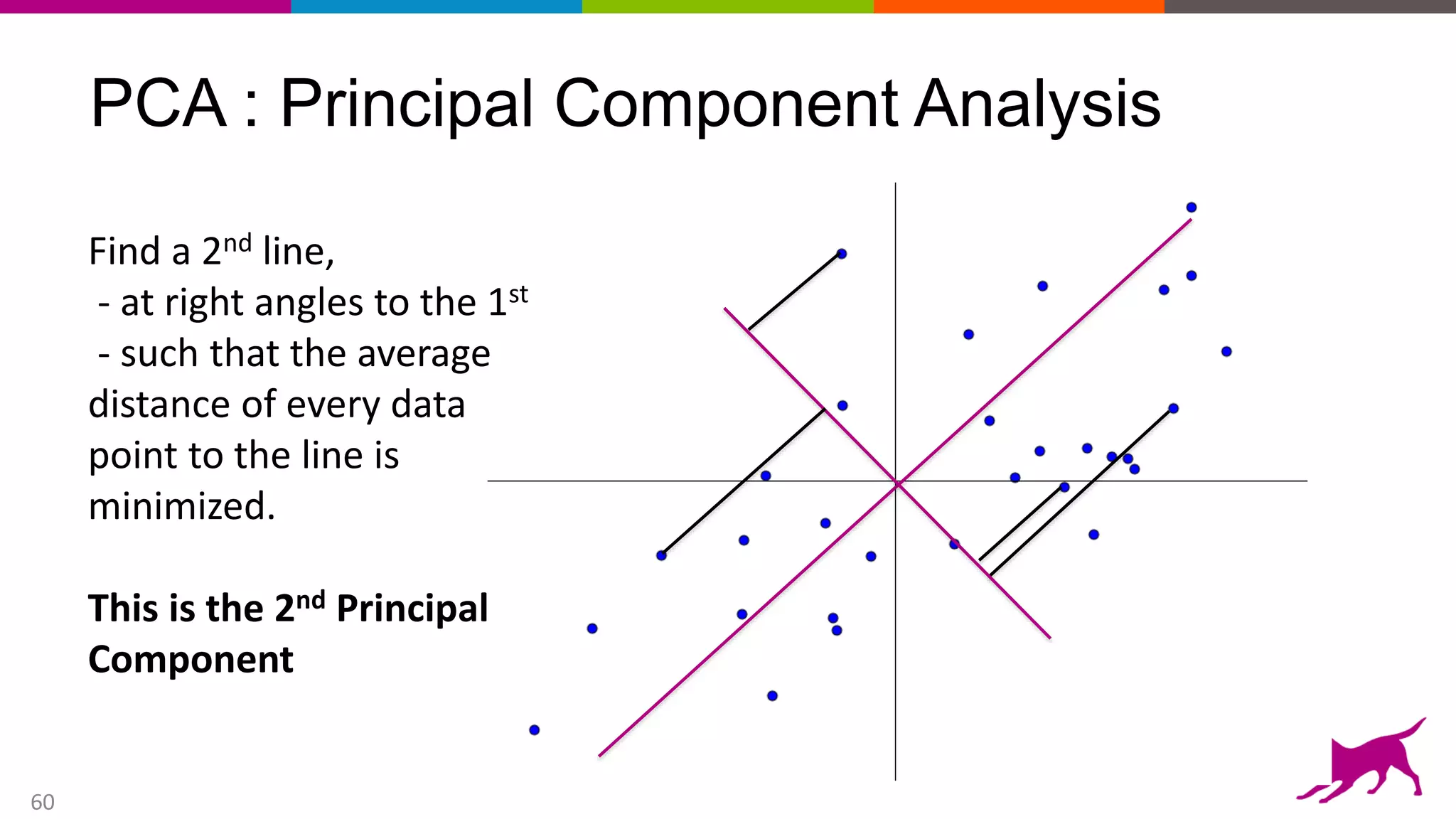

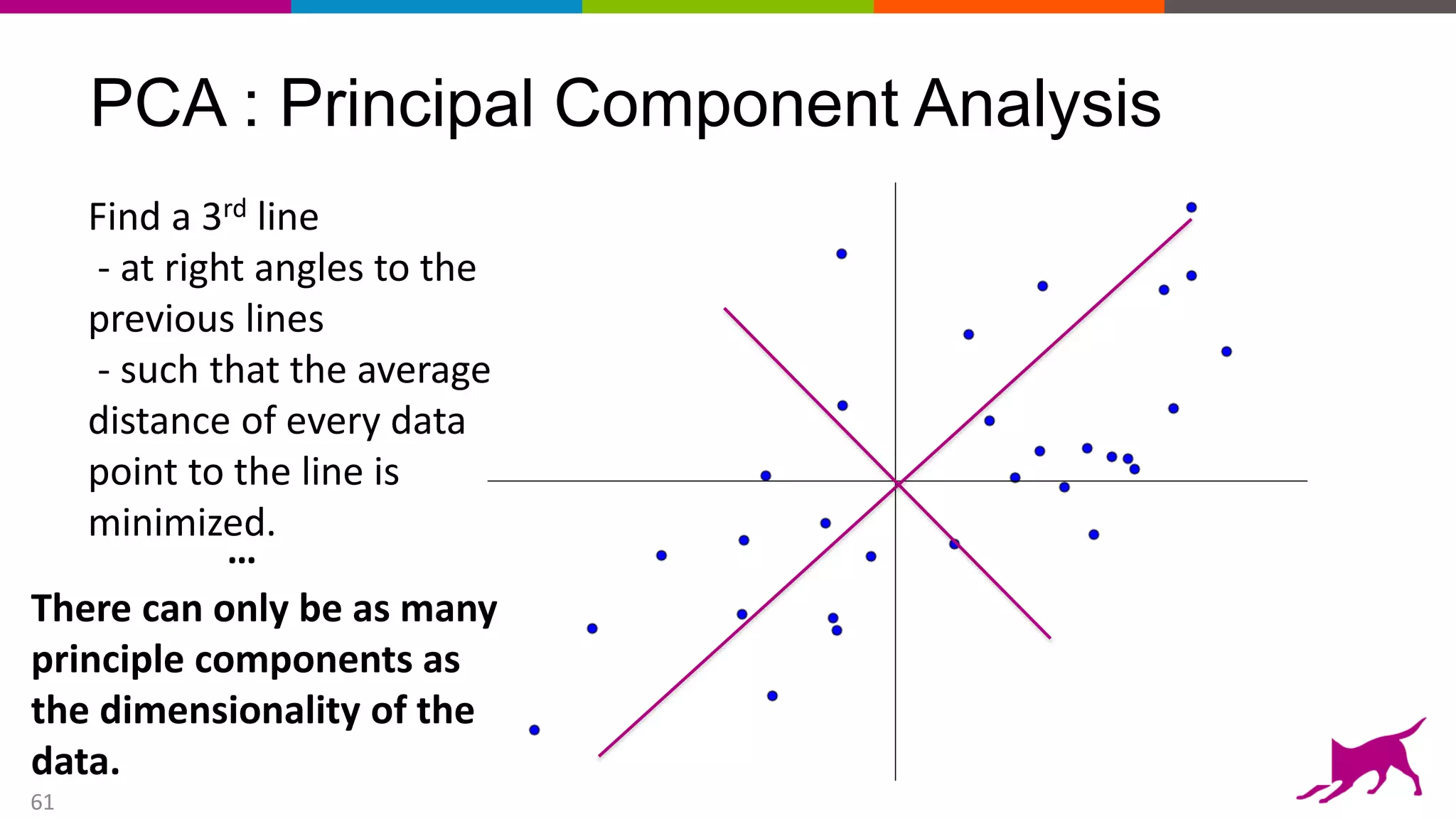

The document provides an overview of machine learning concepts, focusing on feature engineering and its importance in building intelligent applications. It covers the main learning tasks (classification, regression, and clustering) and introduces tools and frameworks for machine learning. Throughout, the presentation stresses that effective data representation and careful feature selection are key to improving model performance.
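The summary names two ideas without showing either in text: turning raw data into a numeric representation, and selecting which features to keep. The sketch below illustrates both under stated assumptions. It assumes tabular records stored as Python dicts, uses one-hot encoding as the representation step, and uses a simple filter-style selection that drops zero-variance features. The helper names `one_hot_encode` and `variance_threshold` are hypothetical and do not come from the slides. Libraries such as scikit-learn offer production versions of both steps (`OneHotEncoder` and `VarianceThreshold`).

```python
from statistics import pvariance


def one_hot_encode(records, categorical_key):
    """Hypothetical helper: replace one categorical field with 0/1 indicator features.

    All other fields are assumed to be numeric and are converted to float.
    """
    categories = sorted({r[categorical_key] for r in records})
    encoded = []
    for r in records:
        # Copy the numeric fields as floats.
        row = {k: float(v) for k, v in r.items() if k != categorical_key}
        # Add one indicator column per observed category.
        for c in categories:
            row[f"{categorical_key}={c}"] = 1.0 if r[categorical_key] == c else 0.0
        encoded.append(row)
    return encoded


def variance_threshold(rows, threshold=0.0):
    """Hypothetical helper: keep only features whose population variance exceeds threshold.

    Returns the filtered feature matrix and the names of the kept features.
    """
    features = sorted(rows[0])
    kept = [f for f in features if pvariance([row[f] for row in rows]) > threshold]
    matrix = [[row[f] for f in kept] for row in rows]
    return matrix, kept


if __name__ == "__main__":
    # Illustrative toy data: "rooms" is constant, so it carries no information.
    records = [
        {"size": 3, "rooms": 2, "city": "A"},
        {"size": 5, "rooms": 2, "city": "B"},
        {"size": 4, "rooms": 2, "city": "A"},
    ]
    X, kept = variance_threshold(one_hot_encode(records, "city"))
    print(kept)
    print(X)
```

On the toy data, the constant `rooms` column is dropped, while the two city indicators and `size` survive. A variance filter like this is only the simplest form of feature selection: it ignores the target entirely. Supervised methods that score features against the label are usually needed for real classification or regression problems.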