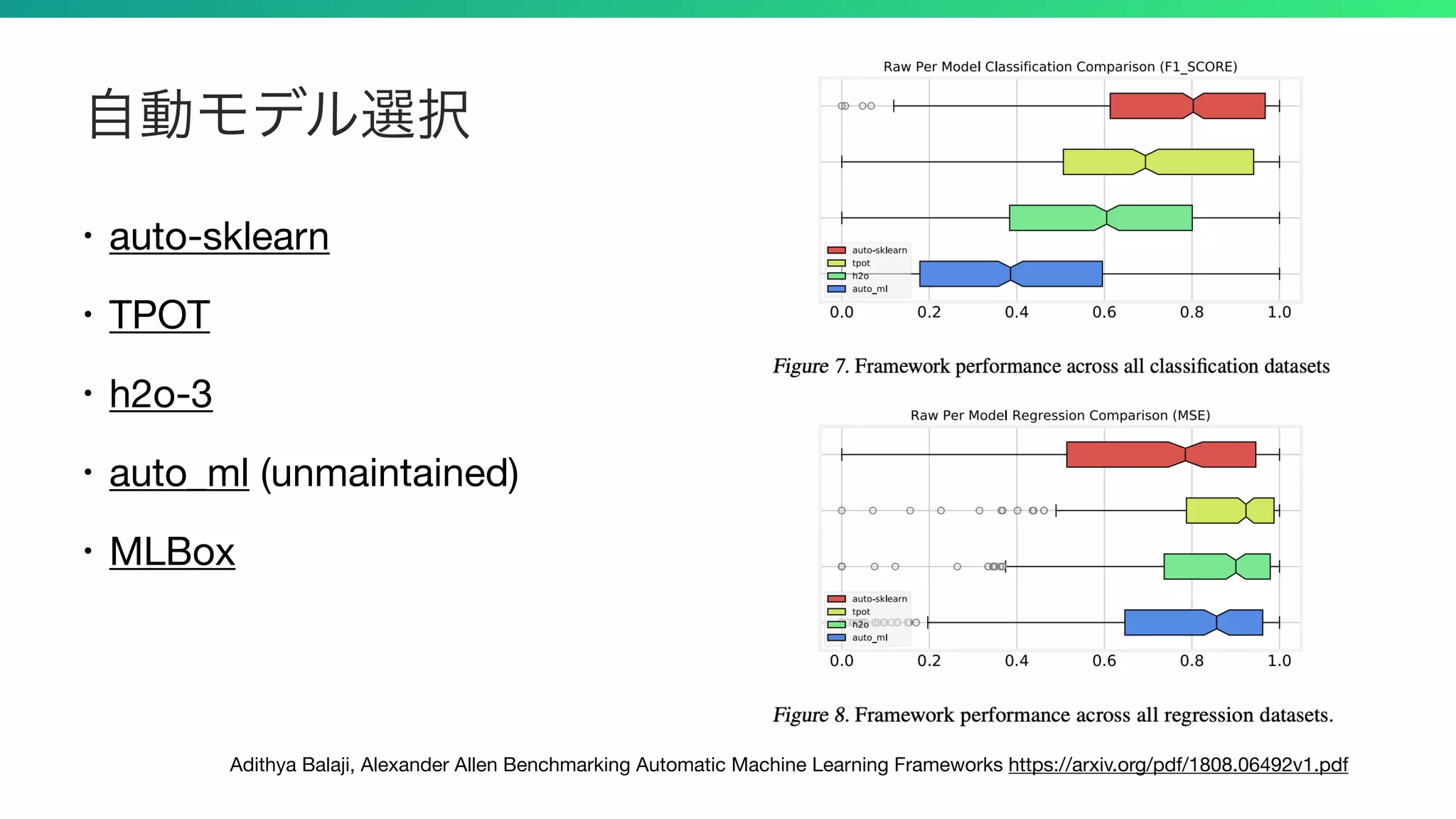

The document discusses various aspects of automated machine learning (AutoML) in Python, covering feature preprocessing, model selection, and hyperparameter optimization techniques. It references notable tools and frameworks, such as Auto-Sklearn, TPOT, Hyperopt, and Optuna, while highlighting methods for feature selection and data cleaning. Additionally, it presents insights from multiple research papers and tutorials related to the advancement of AutoML methodologies.

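![Using Optuna for CASH Problems](https://image.slidesharecdn.com/automl-190916020403/75/Python-AutoML-at-PyConJP-2019-43-2048.jpg)

Using Optuna for CASH (Combined Algorithm Selection and Hyperparameter optimization) problems. The objective below is written against Optuna v0.16.0:

    import sklearn.datasets
    import sklearn.ensemble
    import sklearn.model_selection
    import sklearn.svm


    def objective(trial):
        iris = sklearn.datasets.load_iris()
        x, y = iris.data, iris.target

        # Algorithm selection: each trial picks one of the two classifiers.
        classifier_name = trial.suggest_categorical('classifier', ['SVC', 'RandomForest'])

        # Hyperparameter optimization: only the chosen branch's parameter is sampled.
        if classifier_name == 'SVC':
            svc_c = trial.suggest_loguniform('svc_c', 1e-10, 1e10)
            classifier_obj = sklearn.svm.SVC(C=svc_c, gamma='auto')
        else:
            rf_max_depth = int(trial.suggest_loguniform('rf_max_depth', 2, 32))
            classifier_obj = sklearn.ensemble.RandomForestClassifier(
                max_depth=rf_max_depth, n_estimators=10)

        # Score the candidate with 3-fold cross-validation and return mean accuracy.
        score = sklearn.model_selection.cross_val_score(
            classifier_obj, x, y, n_jobs=-1, cv=3)
        accuracy = score.mean()
        return accuracy

Source: https://github.com/pfnet/optuna/blob/v0.16.0/examples/sklearn_simple.py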

![Optuna for CASH Problem: Algorithm Selection](https://image.slidesharecdn.com/automl-190916020403/75/Python-AutoML-at-PyConJP-2019-44-2048.jpg)

Algorithm selection: in the same `objective` as on the previous slide, `trial.suggest_categorical('classifier', ['SVC', 'RandomForest'])` chooses which classifier a trial evaluates.

![Optuna for CASH Problem: Hyperparameter optimization](https://image.slidesharecdn.com/automl-190916020403/75/Python-AutoML-at-PyConJP-2019-45-2048.jpg)

Hyperparameter optimization: in the same `objective`, the branch for the chosen classifier calls `trial.suggest_loguniform` to sample `svc_c` for the SVC or `rf_max_depth` for the random forest.
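To make the shape of the CASH search space concrete, here is a small standard-library sketch that samples the same conditional space (classifier first, then only that classifier's hyperparameter) and searches it with plain random search. Random search is swapped in purely for illustration and is not what Optuna does by default. `toy_score` is a hypothetical stand-in for cross-validated accuracy, and every function name here is invented for the example.

```python
import math
import random


def sample_config(rng):
    """Draw one configuration from the conditional search space."""
    # Algorithm selection: pick the classifier first.
    classifier = rng.choice(["SVC", "RandomForest"])
    if classifier == "SVC":
        # Log-uniform draw of C: uniform in the exponent, then exponentiate.
        return {"classifier": classifier, "svc_c": 10.0 ** rng.uniform(-10.0, 10.0)}
    # Log-uniform draw of the depth, truncated to an integer and clamped to [2, 32].
    depth = int(math.exp(rng.uniform(math.log(2), math.log(32))))
    return {"classifier": classifier, "rf_max_depth": min(32, max(2, depth))}


def toy_score(config):
    """Hypothetical stand-in for cross-validated accuracy (not real results)."""
    if config["classifier"] == "SVC":
        return 1.0 - abs(math.log10(config["svc_c"])) / 20.0
    return 0.9 - abs(config["rf_max_depth"] - 8) / 100.0


def random_search(n_trials=100, seed=0):
    """Keep the best-scoring configuration over n_trials independent draws."""
    rng = random.Random(seed)
    best_config, best_score = None, -math.inf
    for _ in range(n_trials):
        config = sample_config(rng)
        score = toy_score(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


if __name__ == "__main__":
    print(random_search())
```

Each sampled configuration contains only the hyperparameter that belongs to its classifier, which is the property the `if`/`else` branch in the slide's objective expresses.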