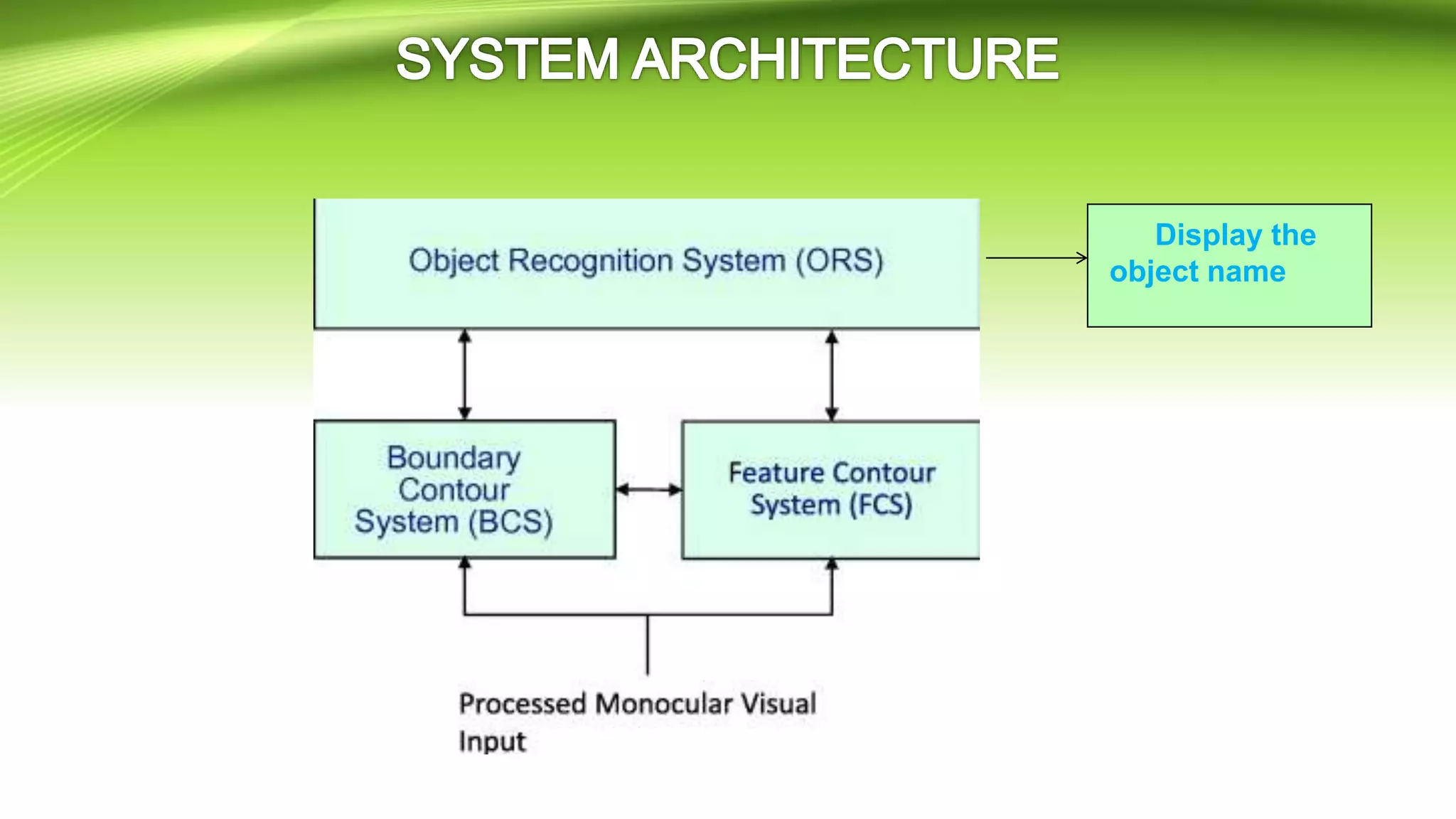

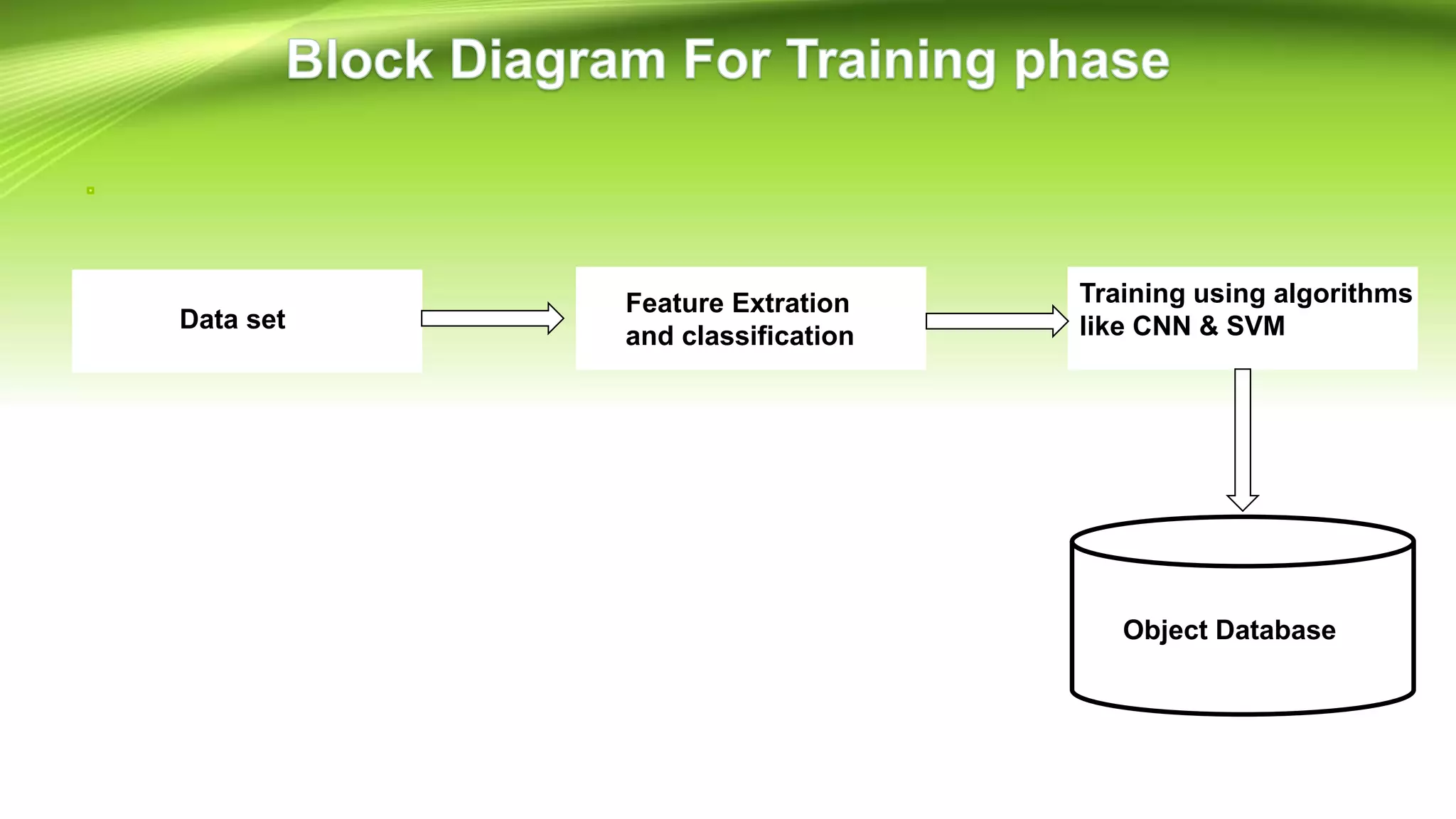

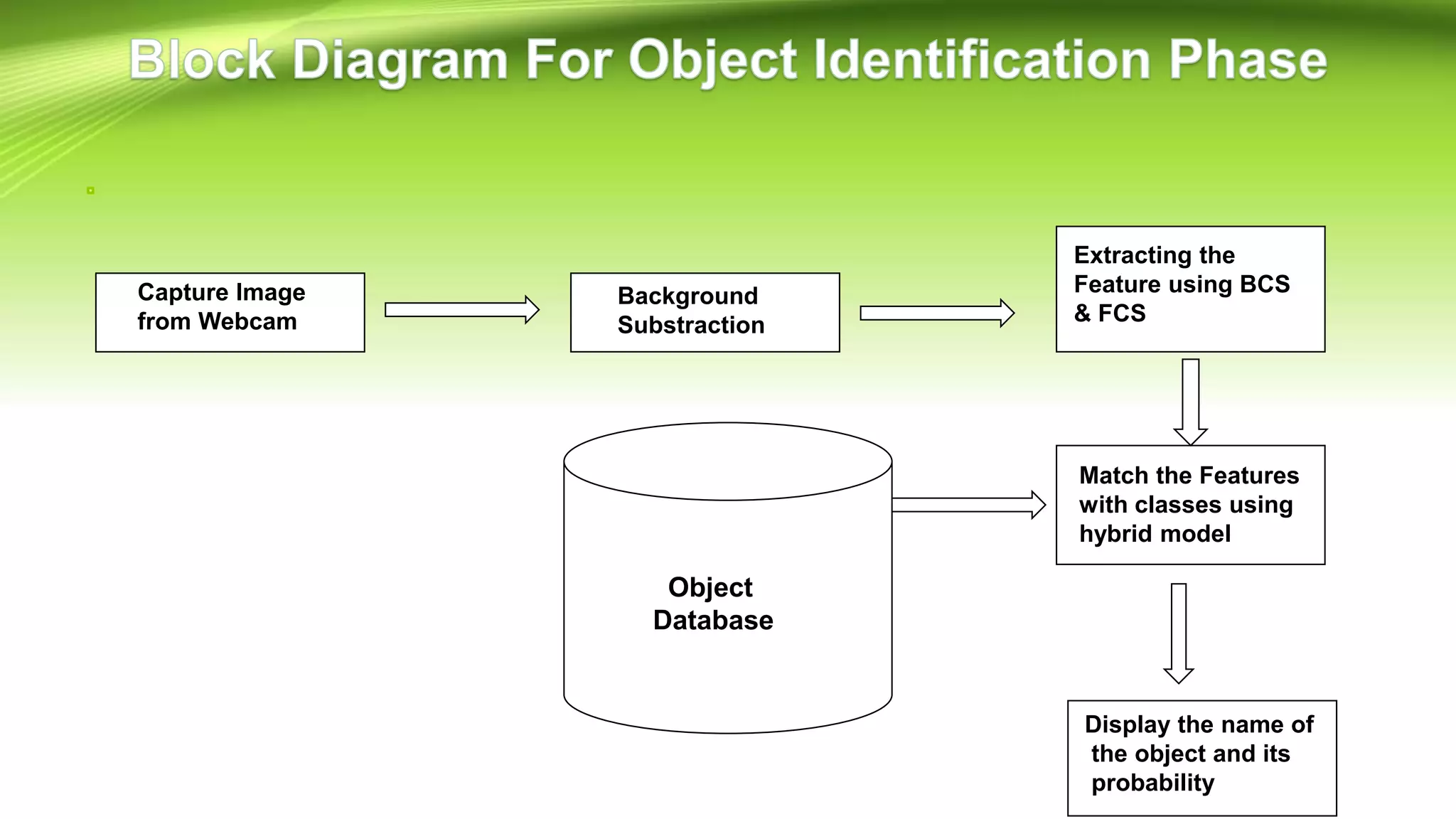

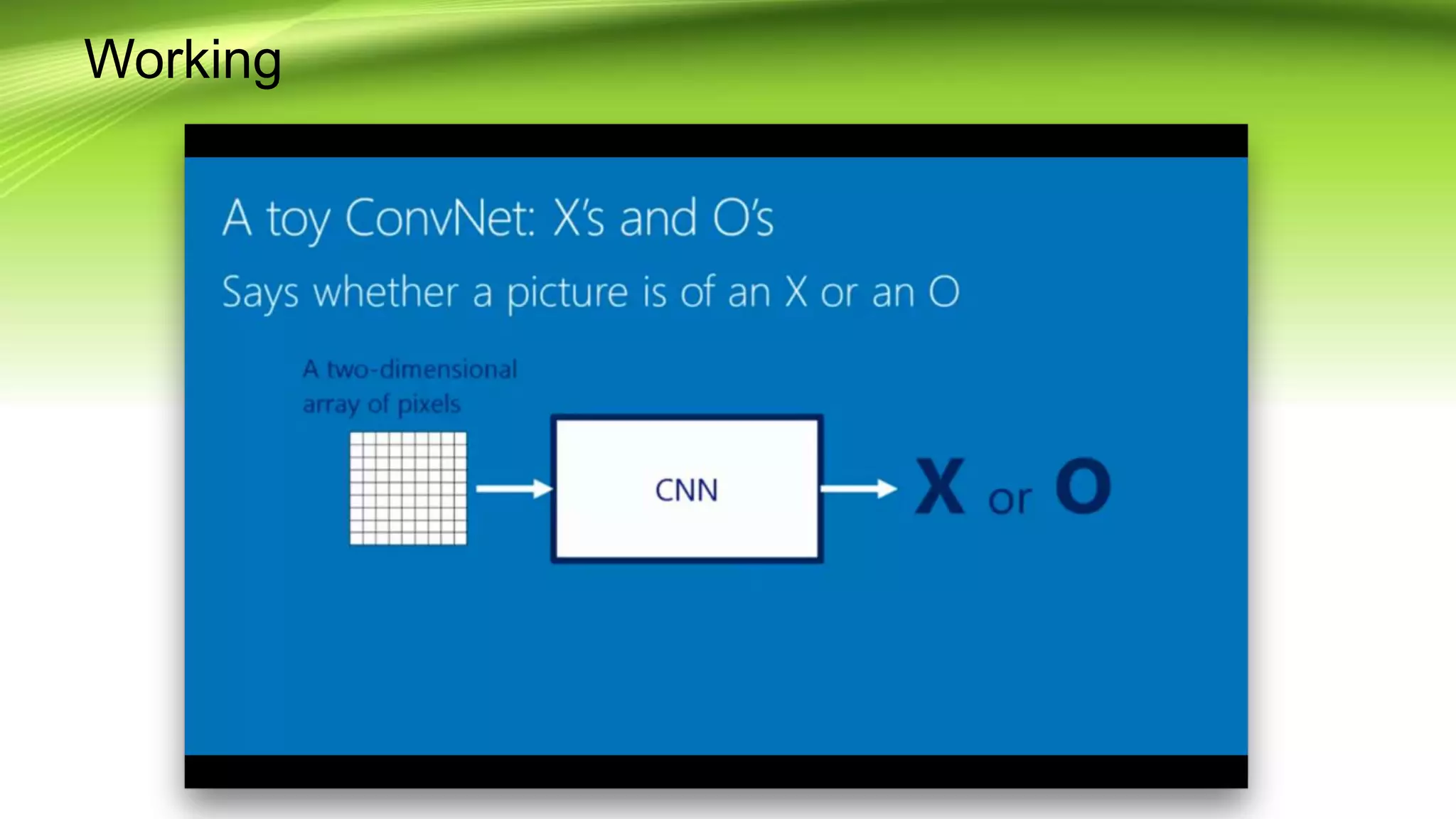

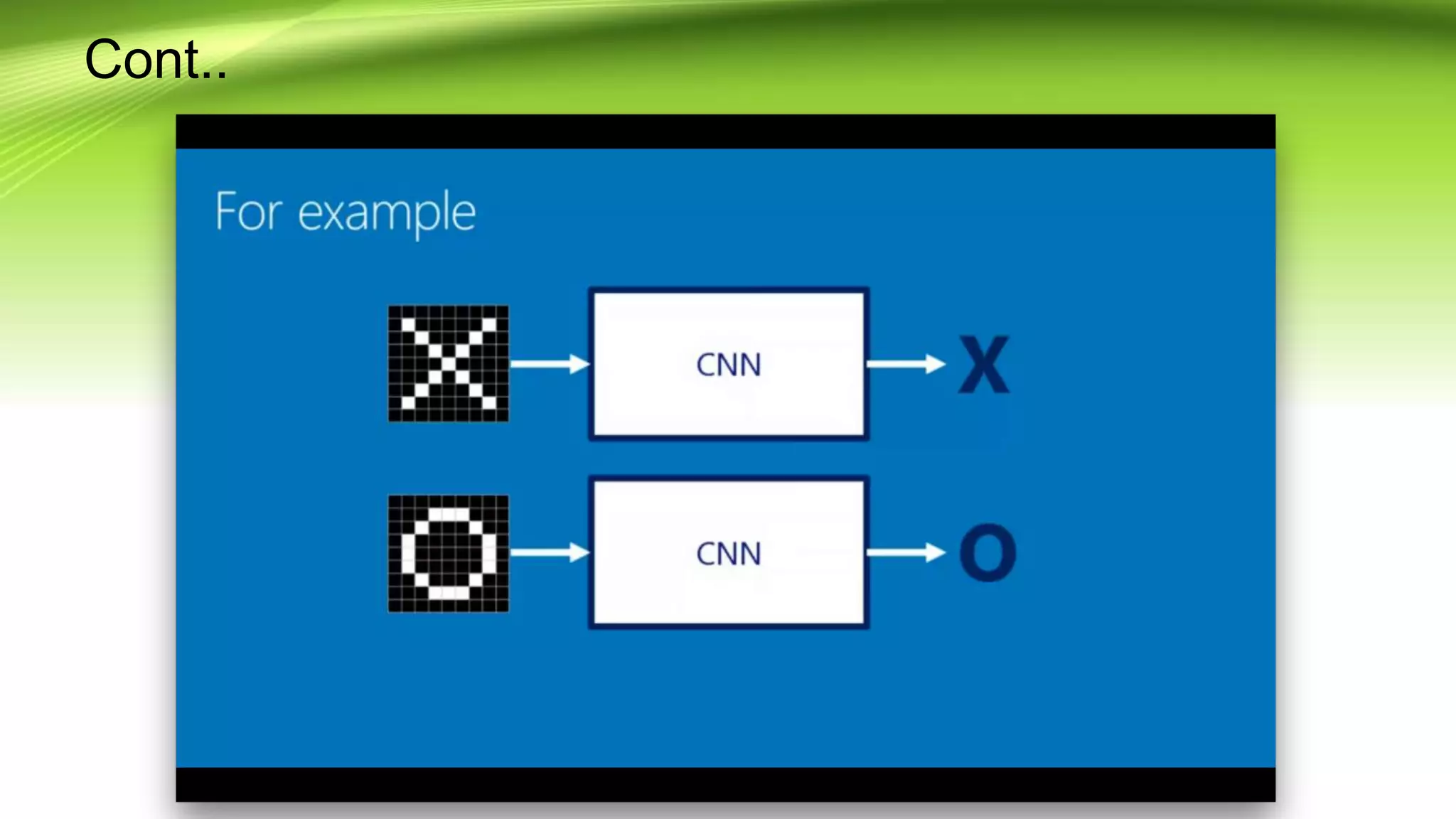

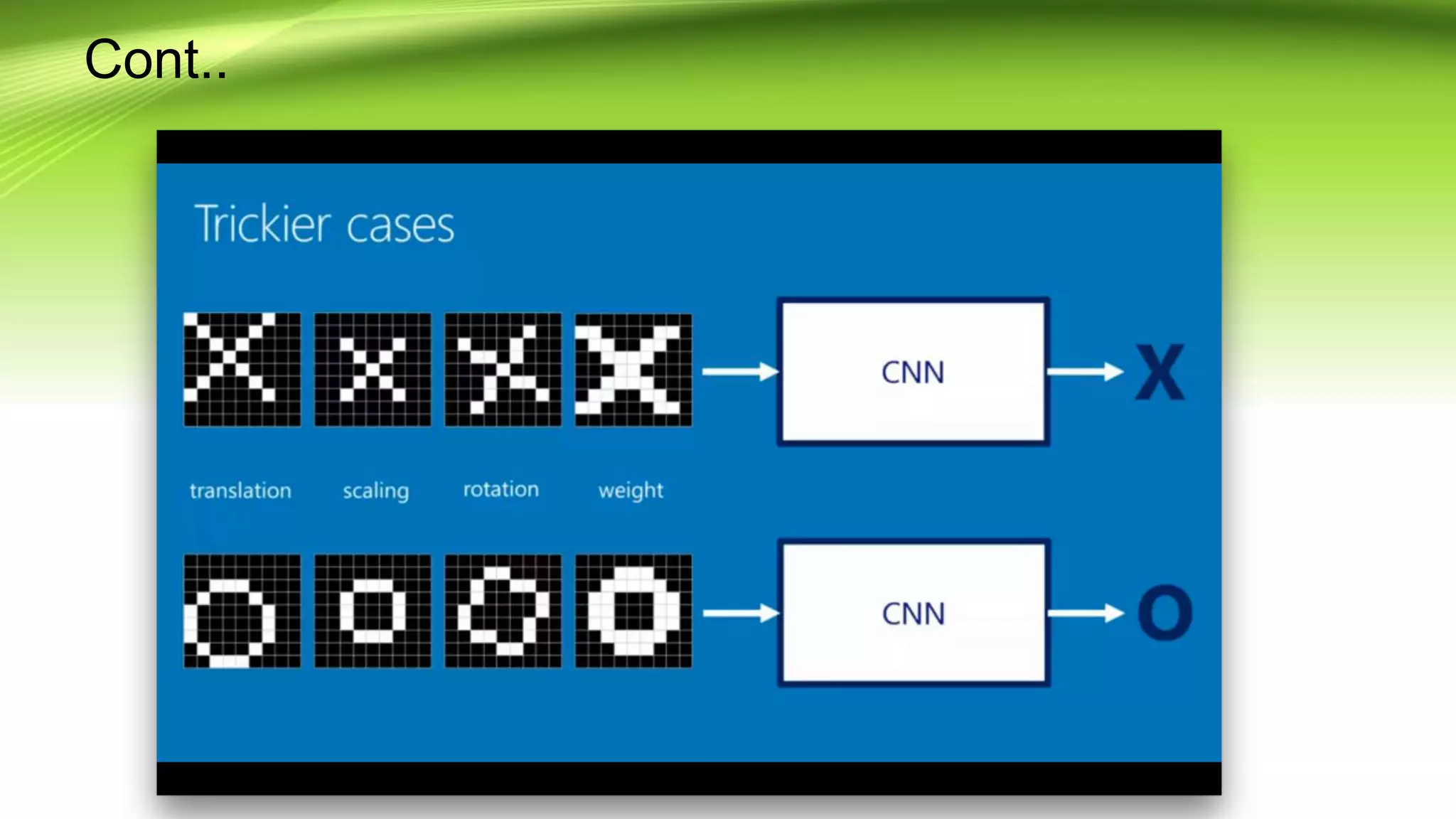

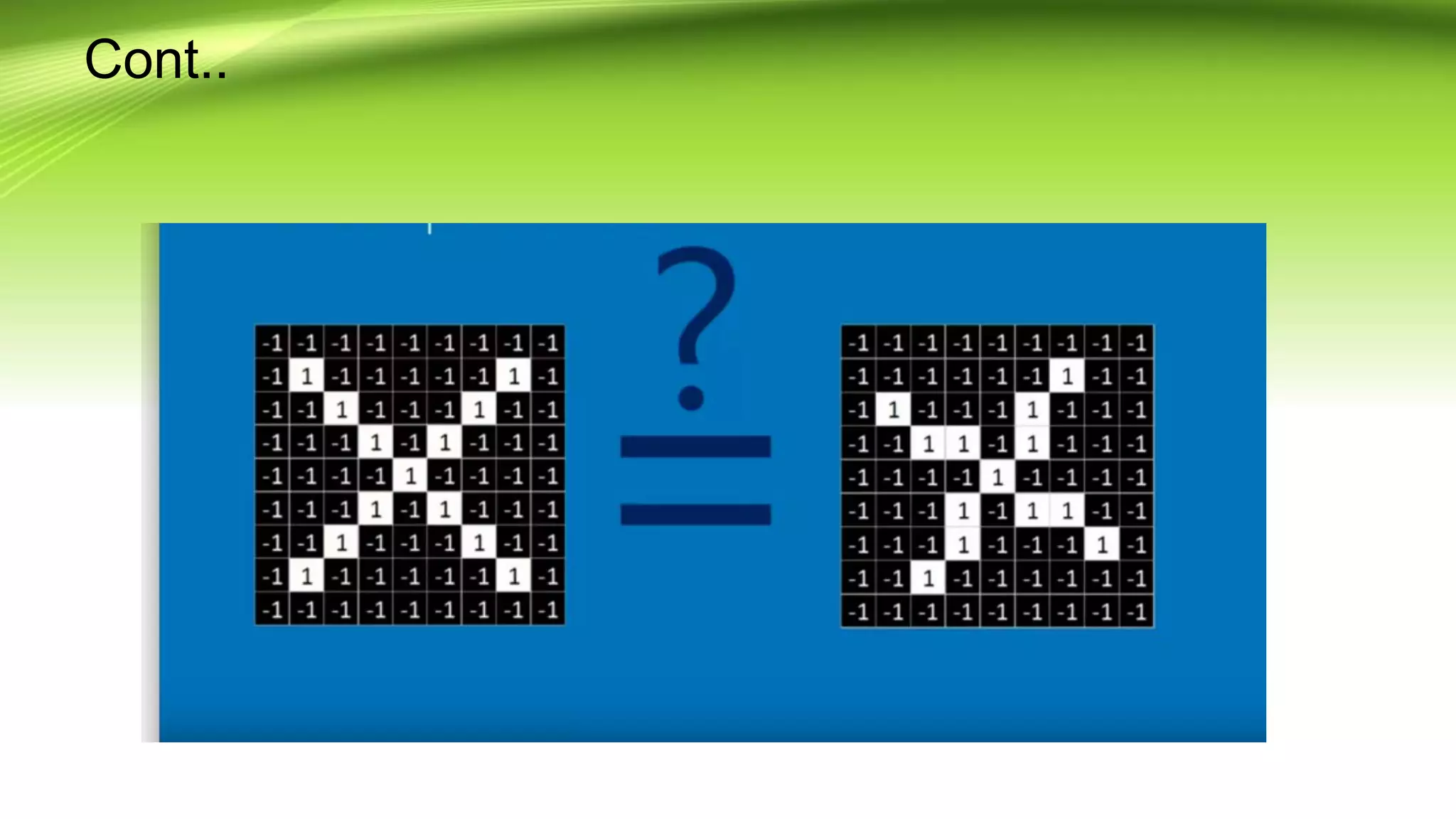

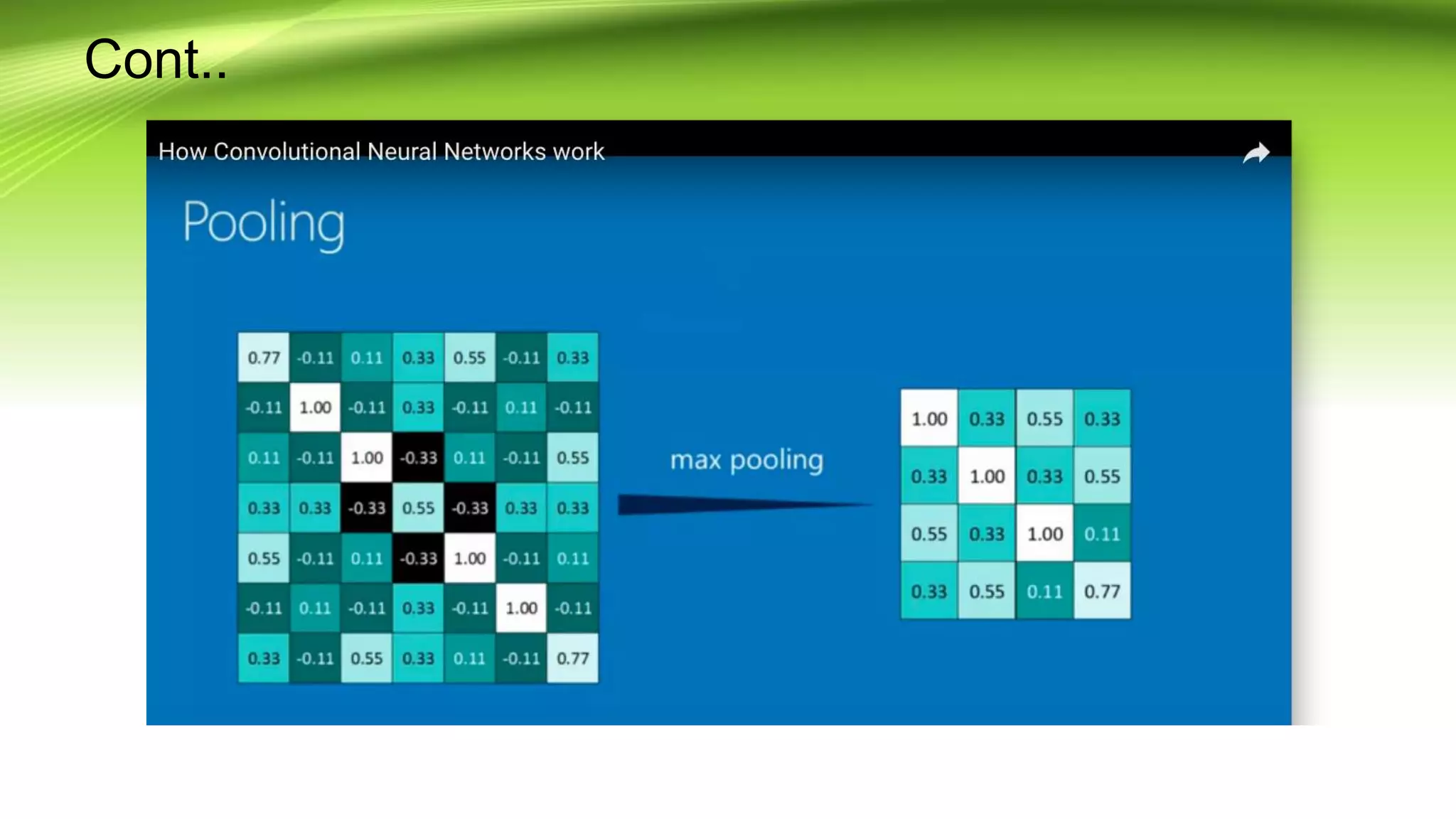

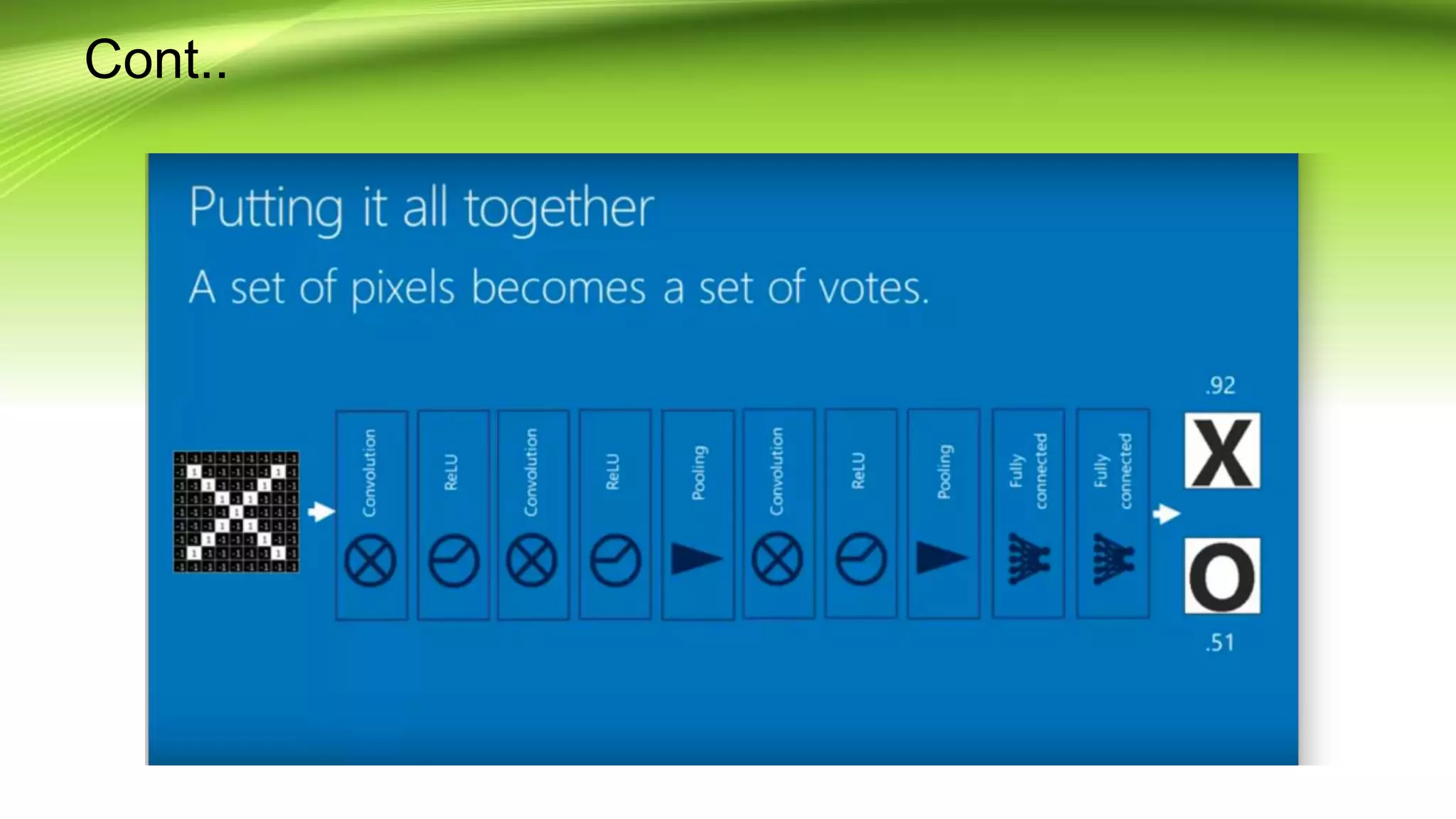

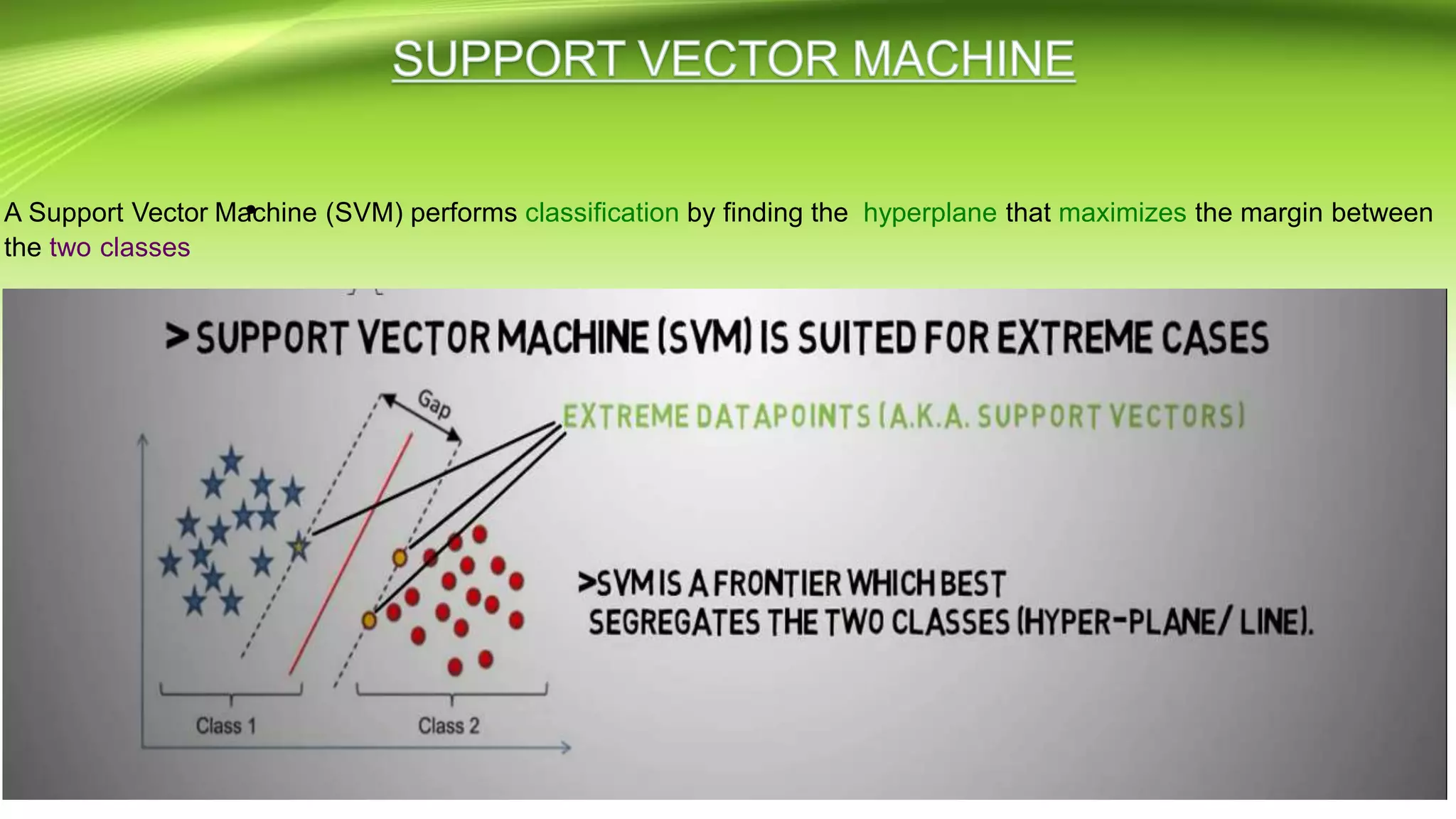

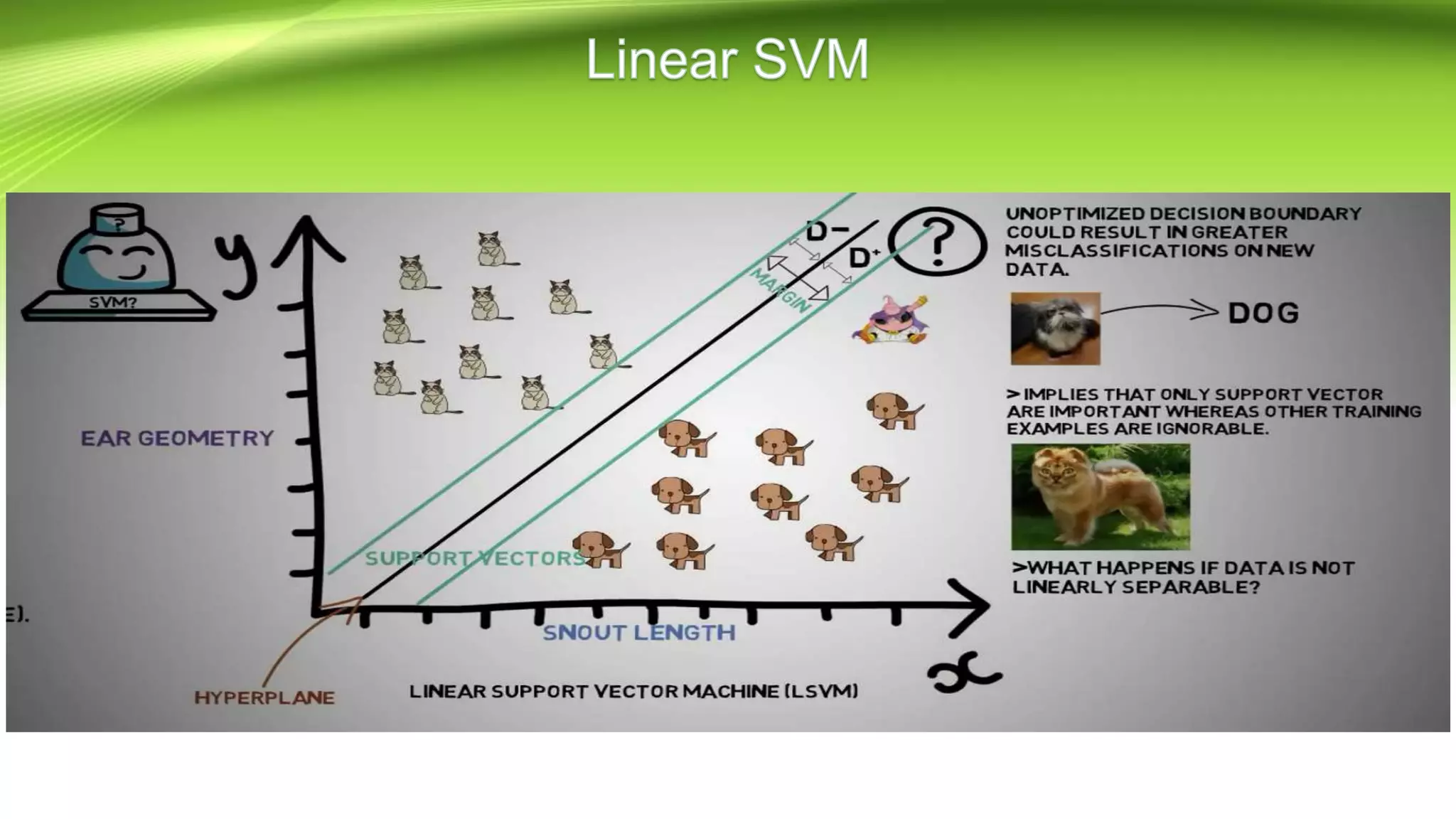

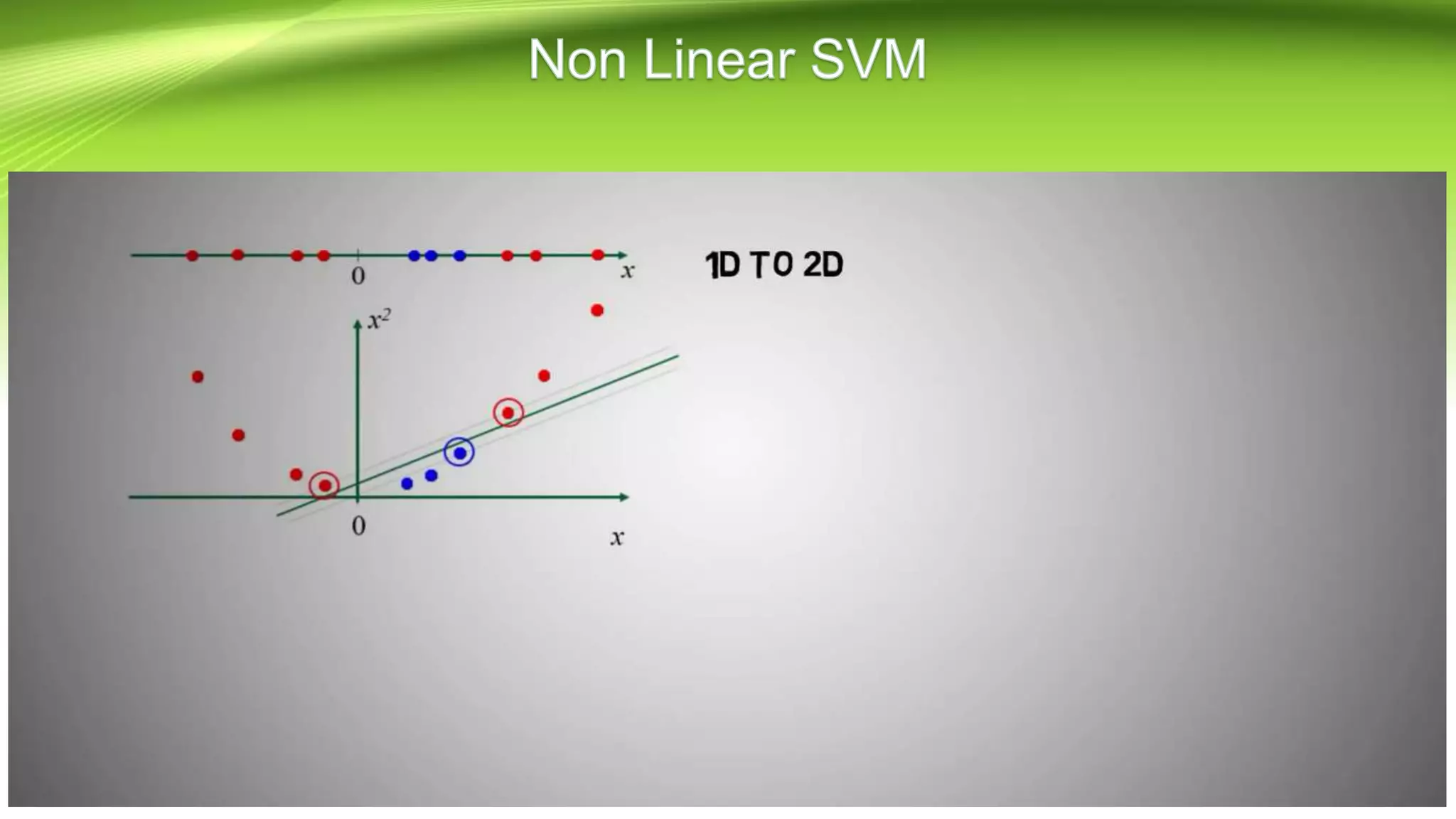

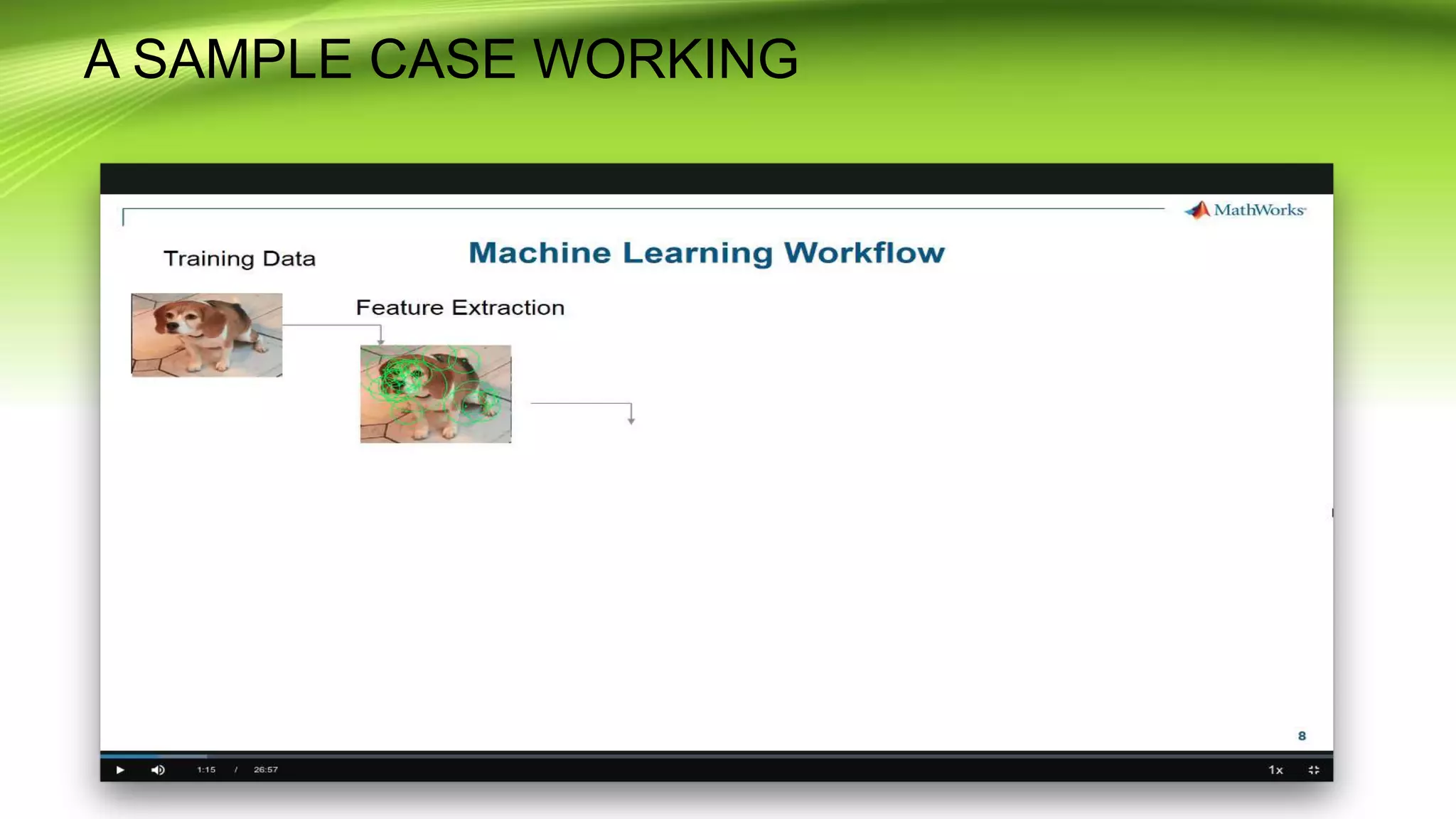

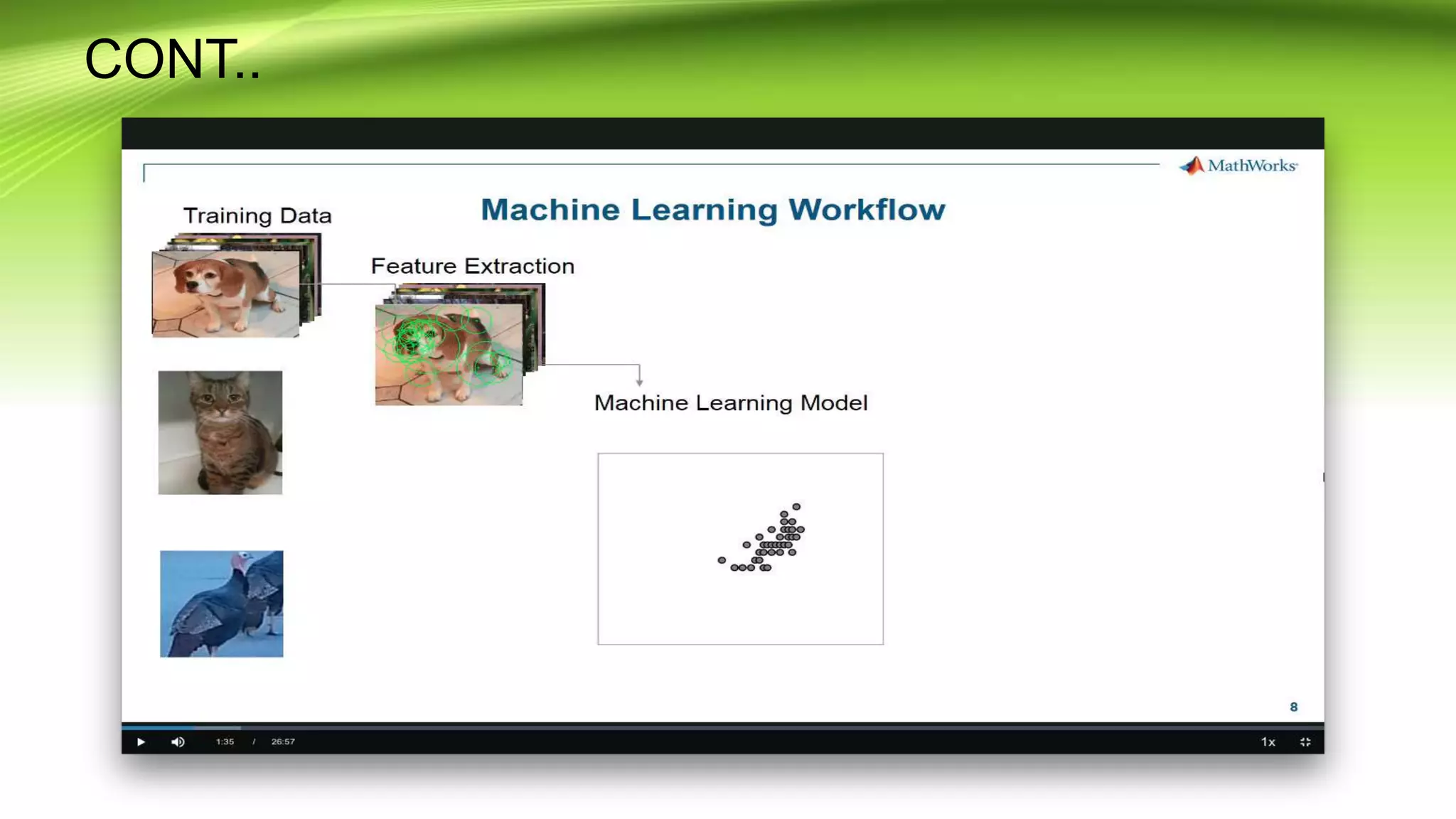

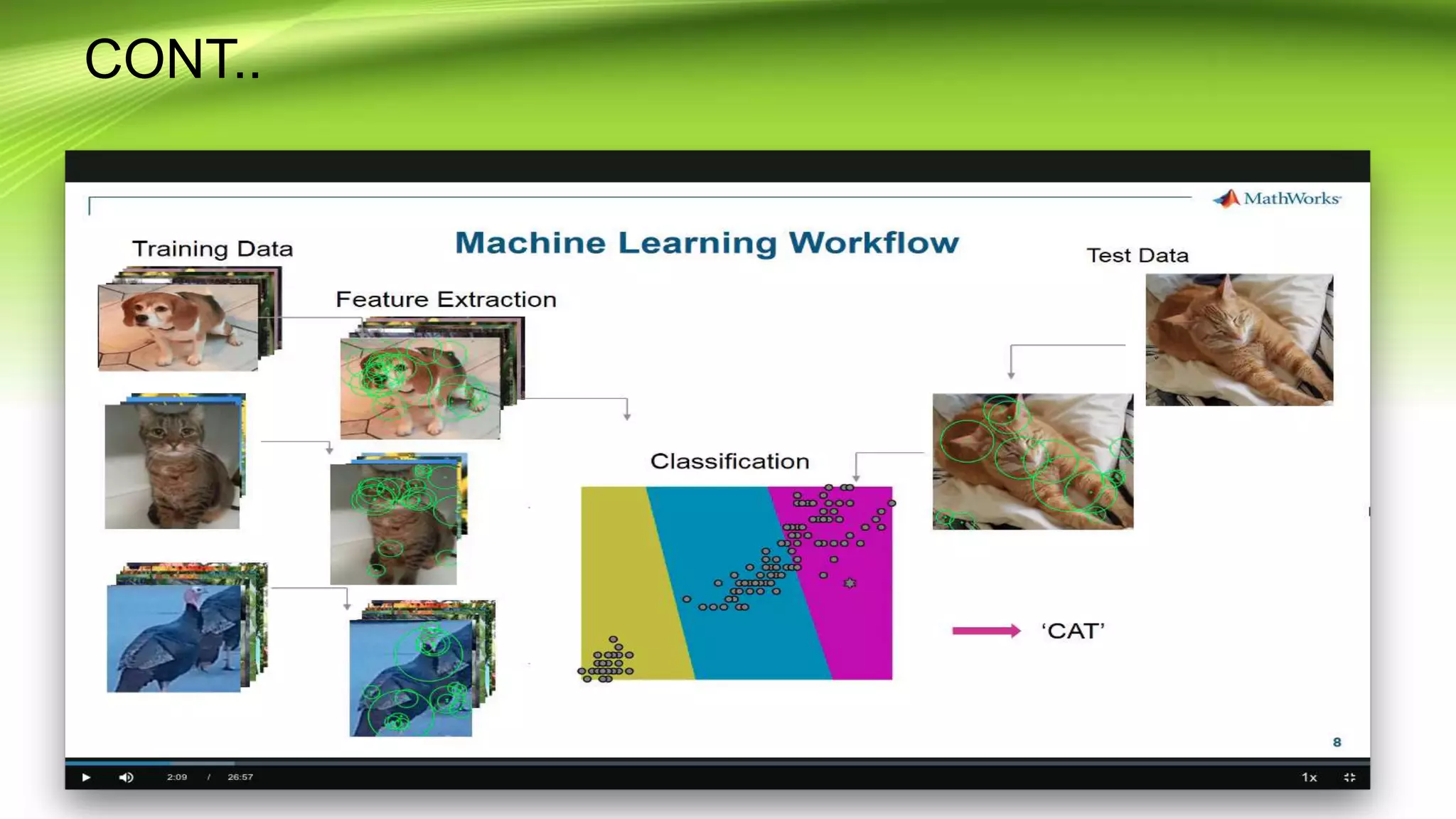

This document discusses the development of a real-time object detection system based on computer vision techniques. The system aims to recognize and label moving objects in video streams from surveillance cameras with high accuracy and low latency. It uses a hybrid model that combines convolutional neural networks (CNNs) and support vector machines (SVMs) for feature extraction and classification, assigning objects in the camera feeds to predefined classes. The system is intended to support surveillance video analysis by flagging only those clips that contain objects of interest, such as people or vehicles, which reduces wasted storage and review time.
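
The clip-flagging step described above can be illustrated with a minimal sketch. It assumes the classifier has already produced per-frame labels and confidence scores; the `Detection` record, the class names in `CLASSES_OF_INTEREST`, and the `min_confidence` and `min_frames` thresholds are all hypothetical choices made for illustration and are not taken from the document.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set

# Hypothetical class names; the document only mentions "people or vehicles".
CLASSES_OF_INTEREST: Set[str] = {"person", "vehicle"}


@dataclass(frozen=True)
class Detection:
    """One classified object in one frame (assumed record layout)."""
    frame_index: int
    label: str
    confidence: float  # assumed to lie in [0, 1]


def flag_clip(detections: Iterable[Detection],
              min_confidence: float = 0.5,
              min_frames: int = 3) -> bool:
    """Return True if an object of interest appears in enough distinct frames.

    Requiring several frames is an assumed heuristic to suppress
    one-off misclassifications; the thresholds are illustrative.
    """
    frames_with_hit = {
        d.frame_index
        for d in detections
        if d.label in CLASSES_OF_INTEREST and d.confidence >= min_confidence
    }
    return len(frames_with_hit) >= min_frames


def select_clips(clips: Dict[str, List[Detection]], **kwargs) -> List[str]:
    """Return the names of clips worth storing or reviewing."""
    return [name for name, dets in clips.items() if flag_clip(dets, **kwargs)]


# Usage: only the first clip contains a confident object of interest.
clips = {
    "cam1_0001": [Detection(i, "person", 0.9) for i in range(5)],
    "cam1_0002": [Detection(0, "tree", 0.95), Detection(1, "person", 0.2)],
}
flagged = select_clips(clips)
print(flagged)  # ['cam1_0001']
```

Counting distinct frames rather than raw detections keeps a single crowded frame from flagging a clip on its own. Whether that trade-off suits a given deployment depends on the camera frame rate and how briefly objects of interest may appear.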