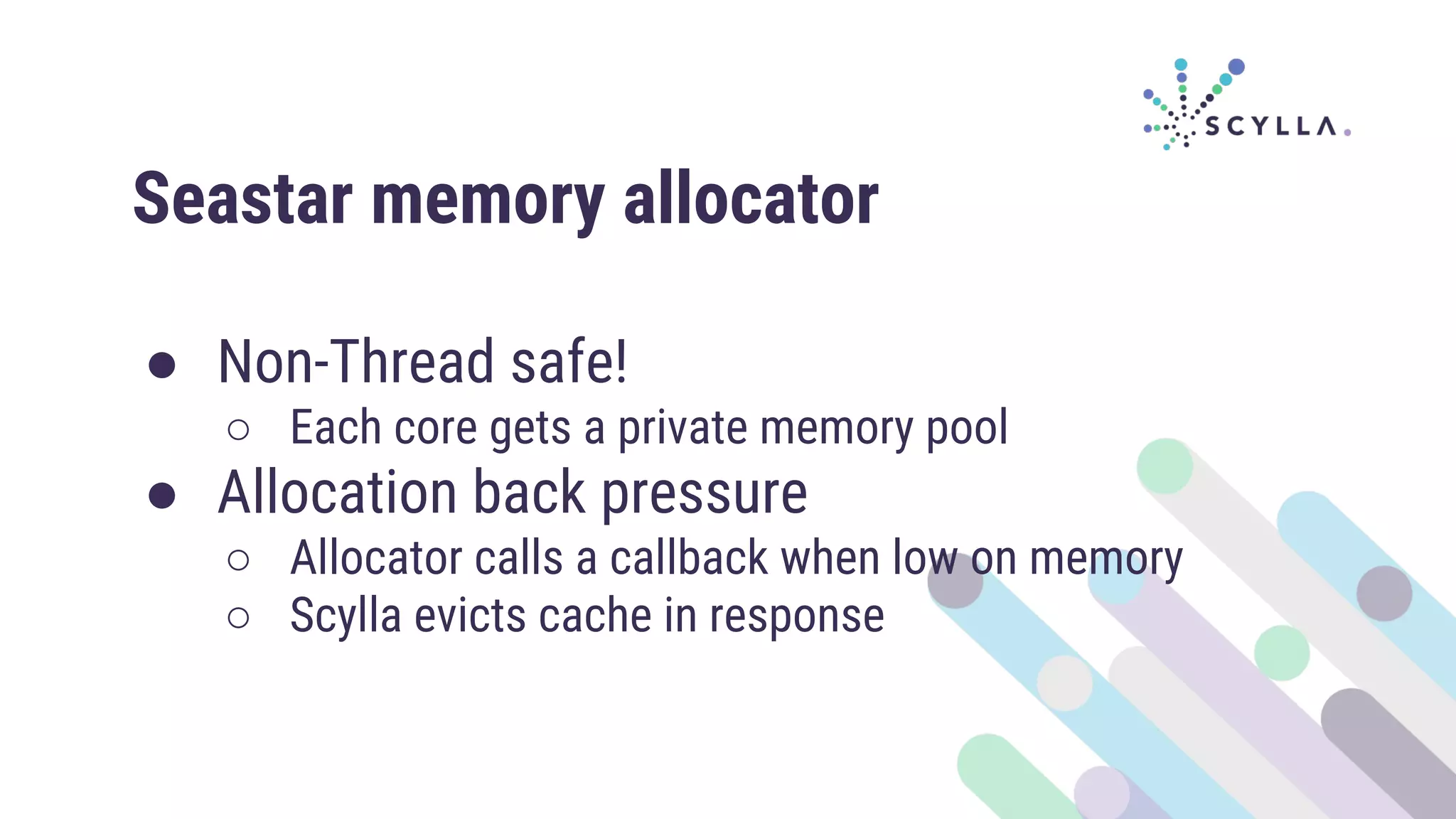

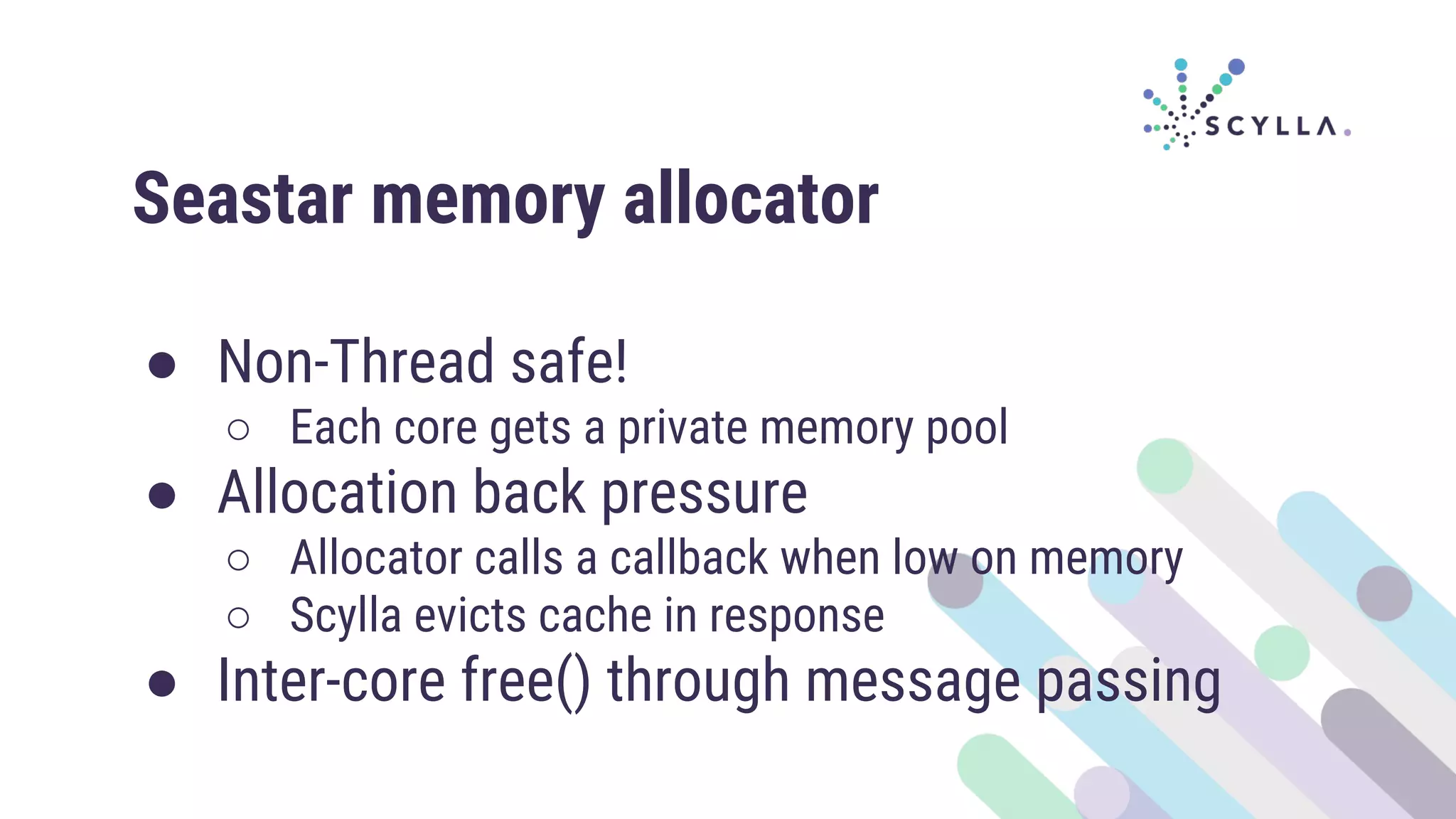

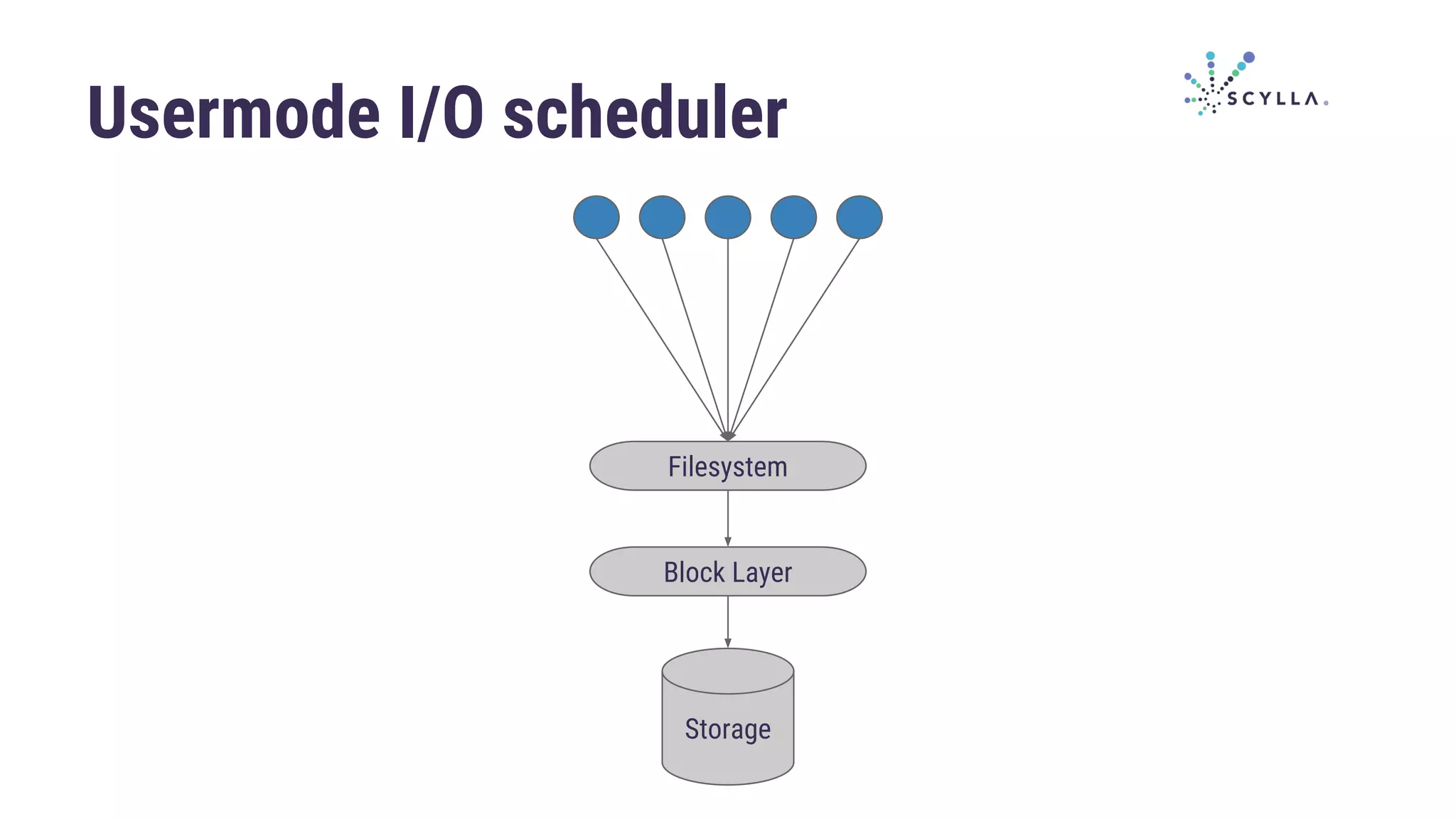

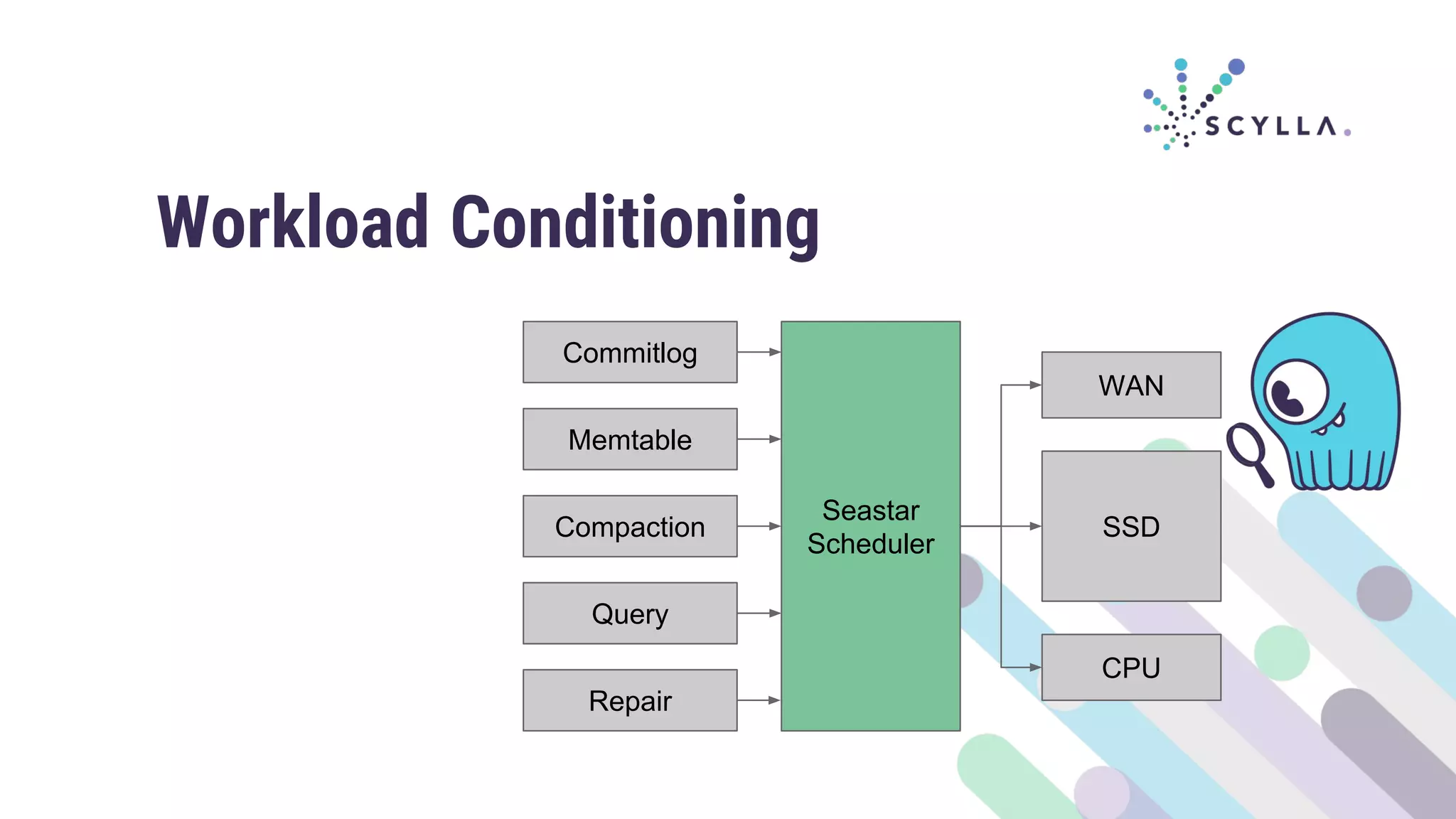

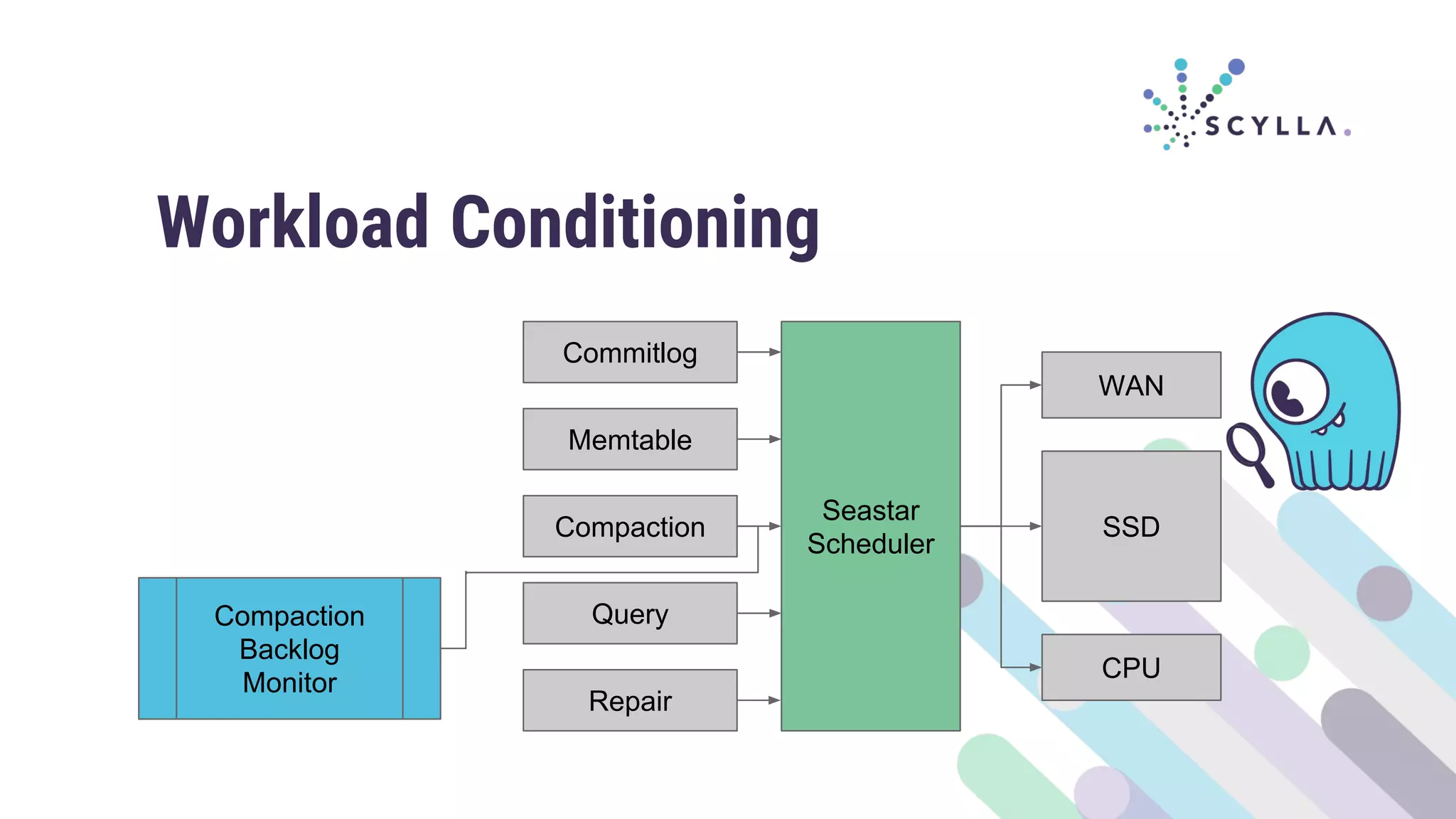

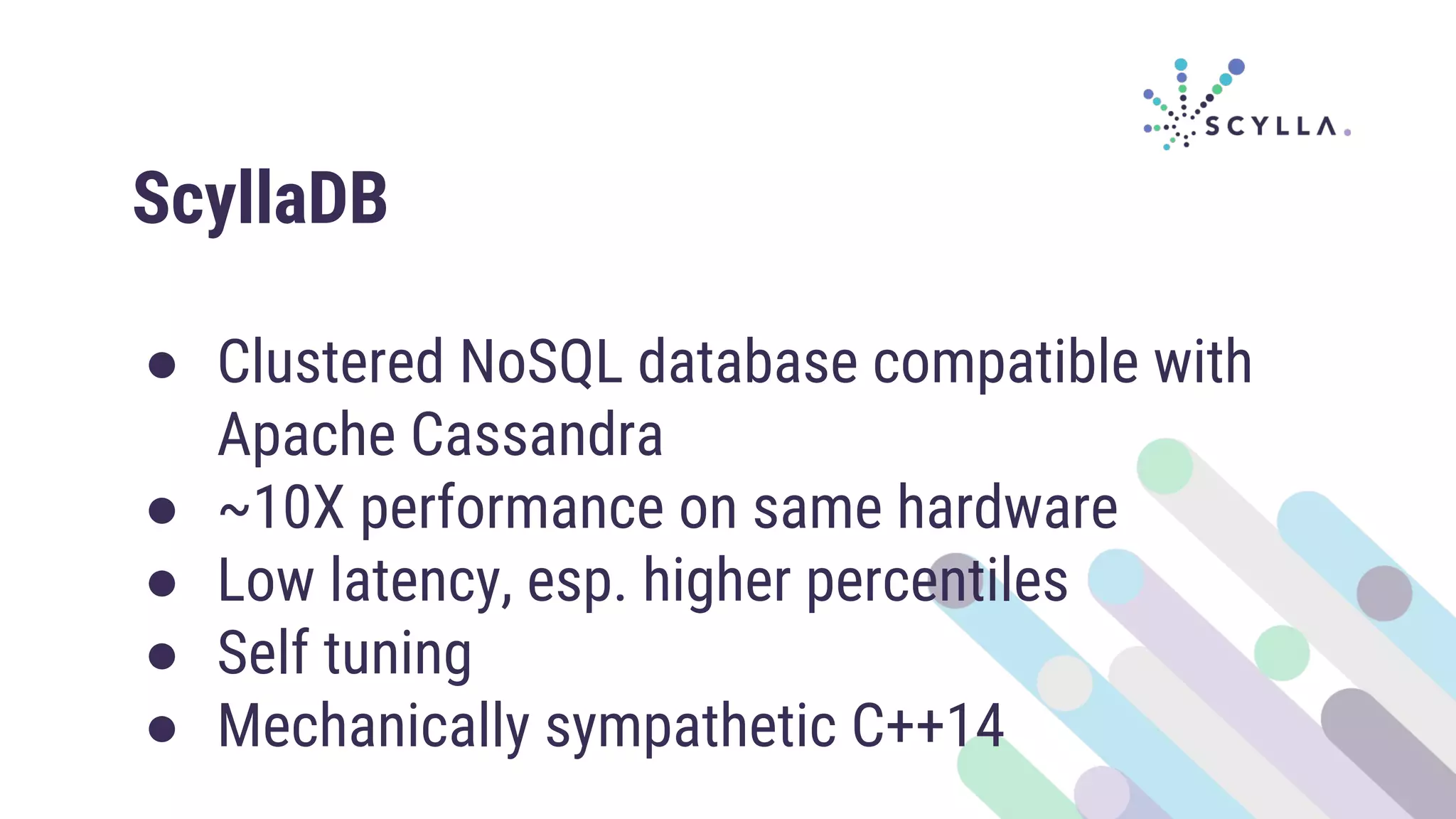

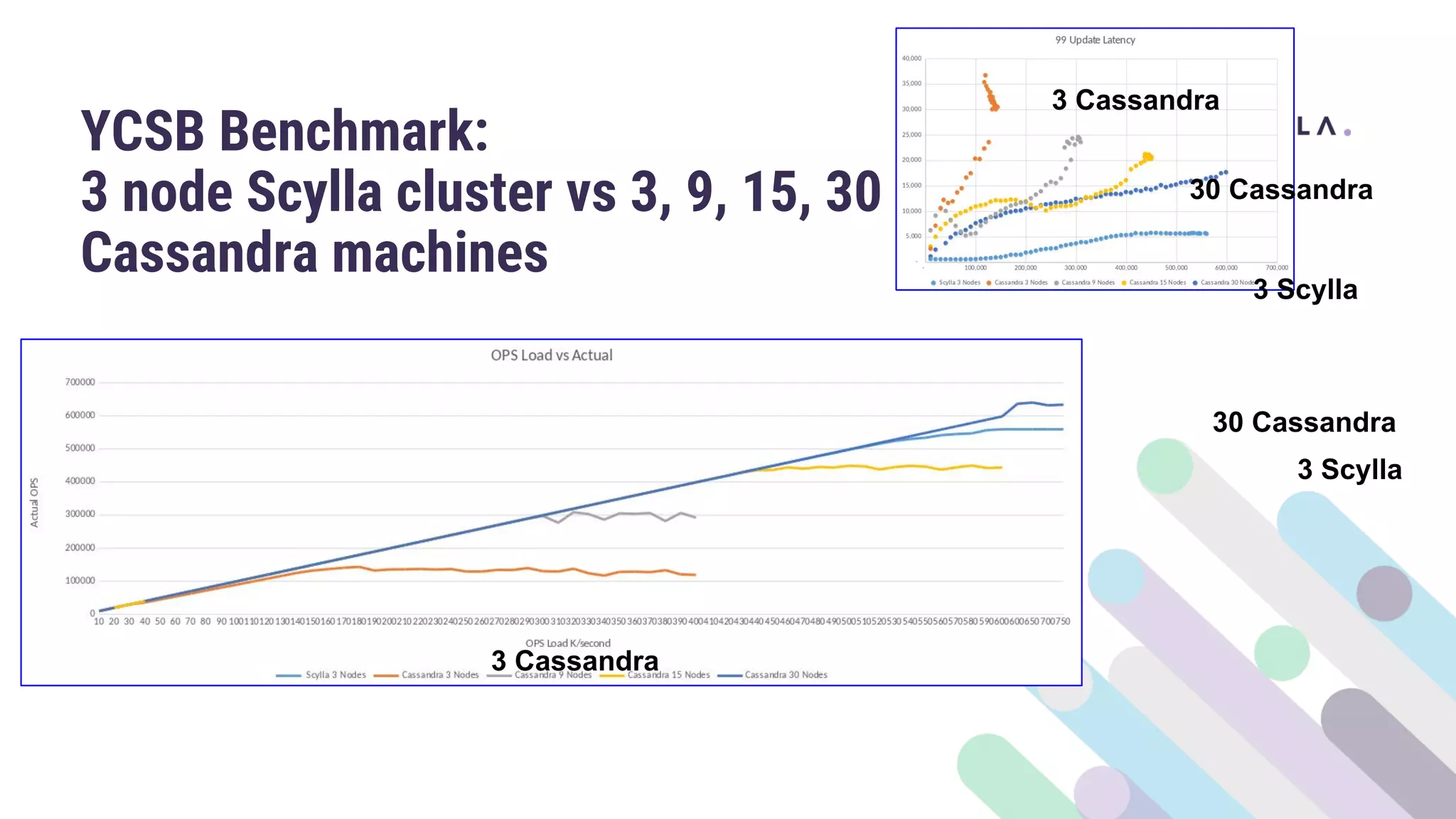

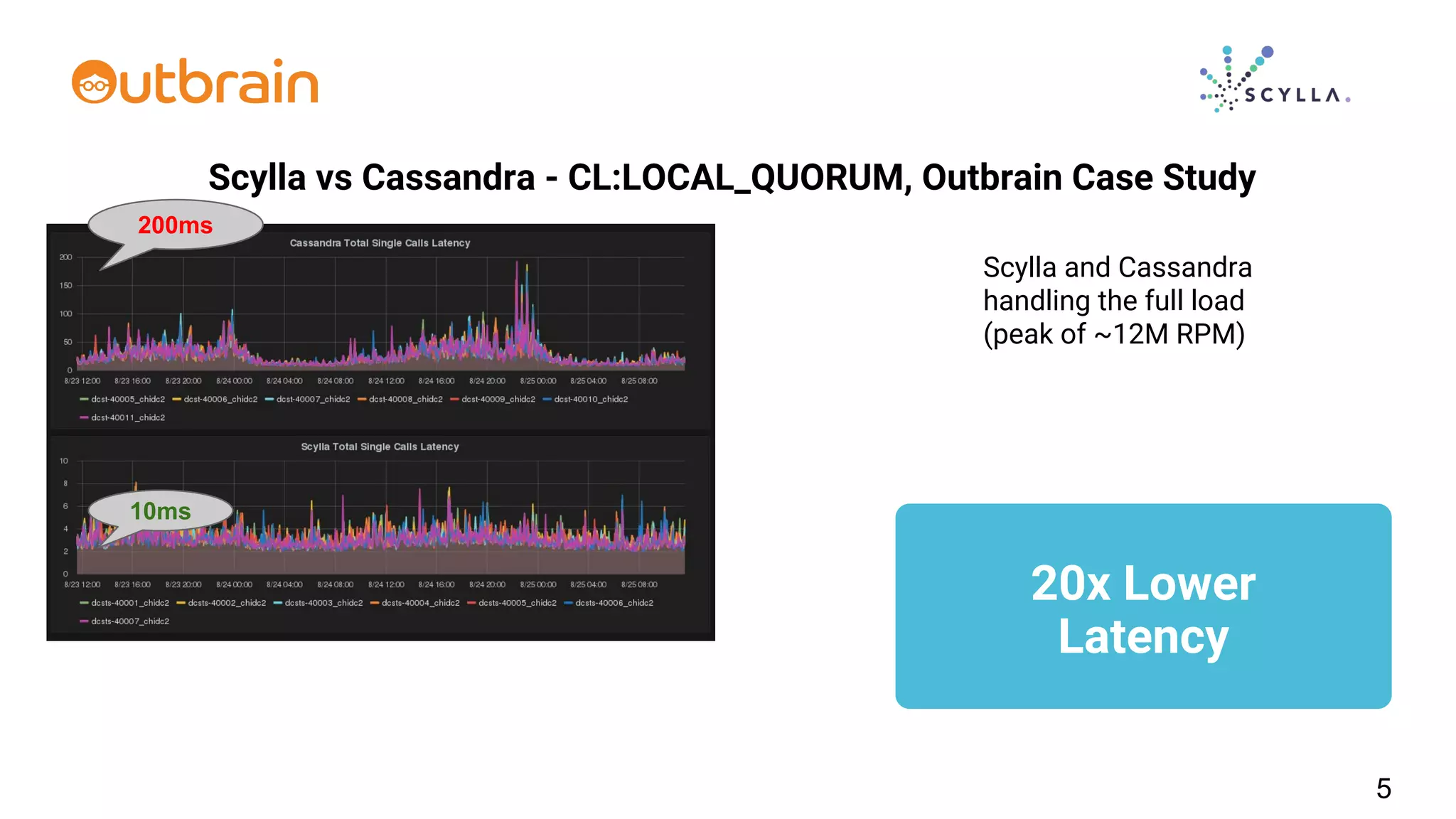

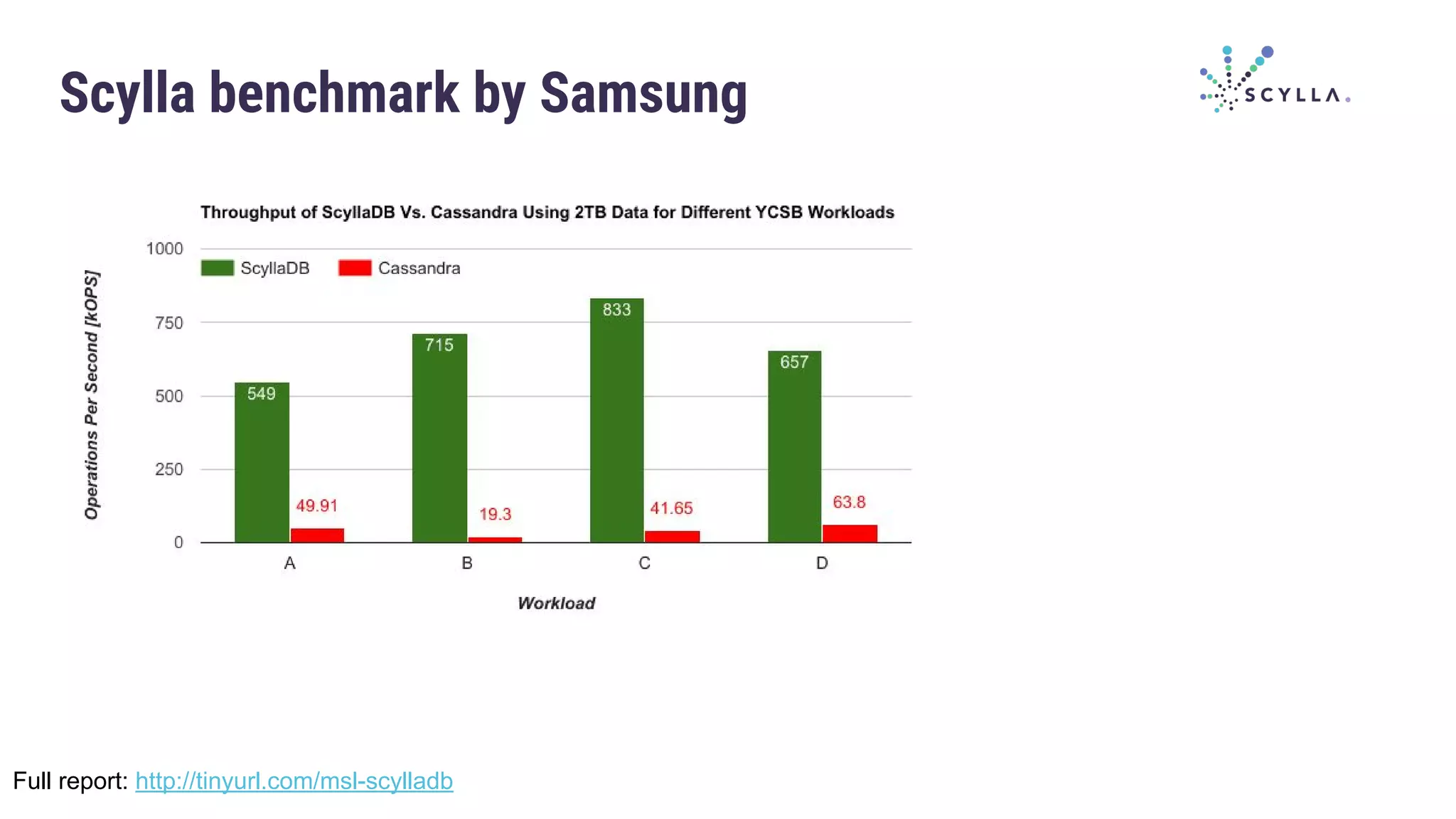

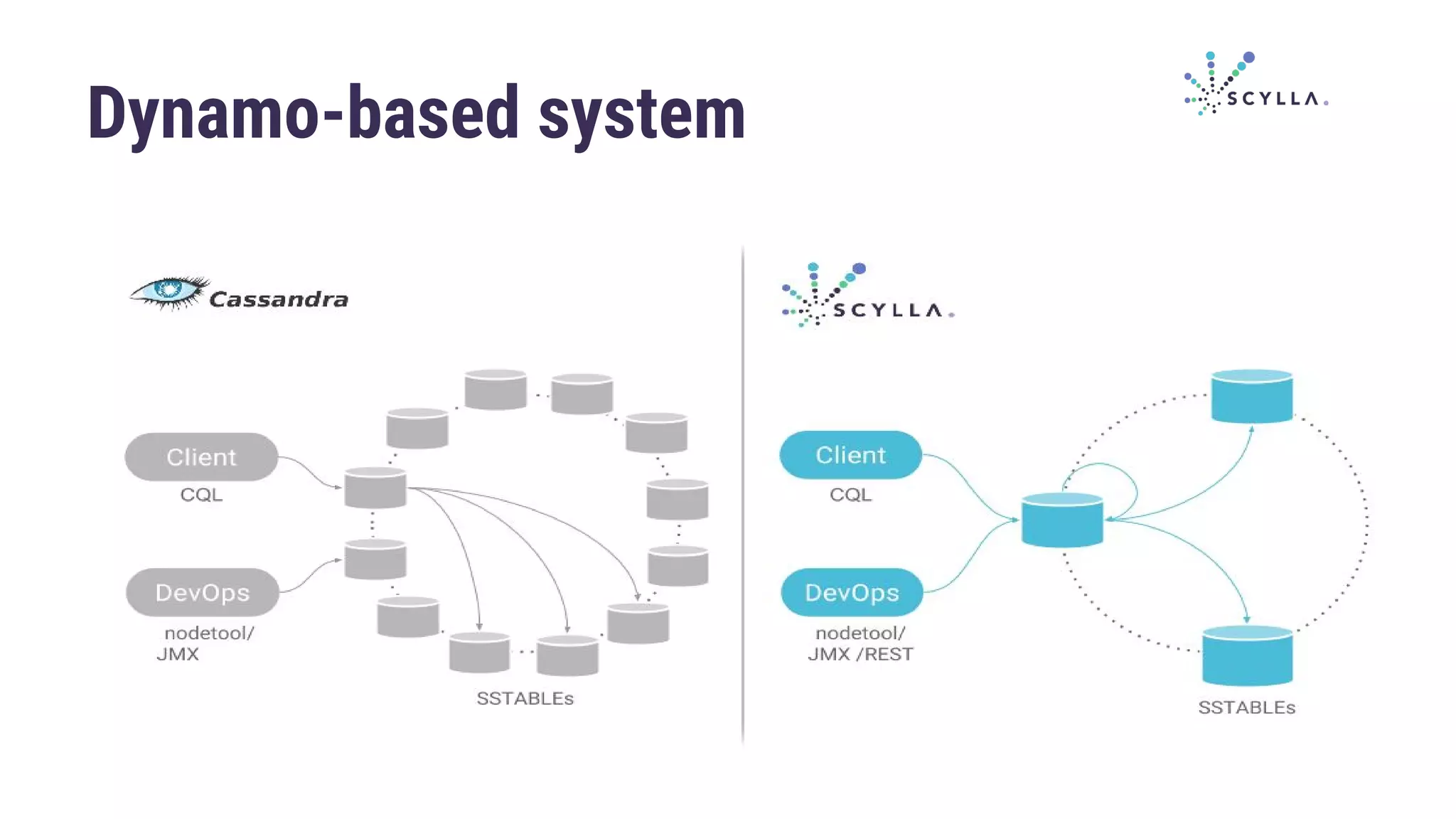

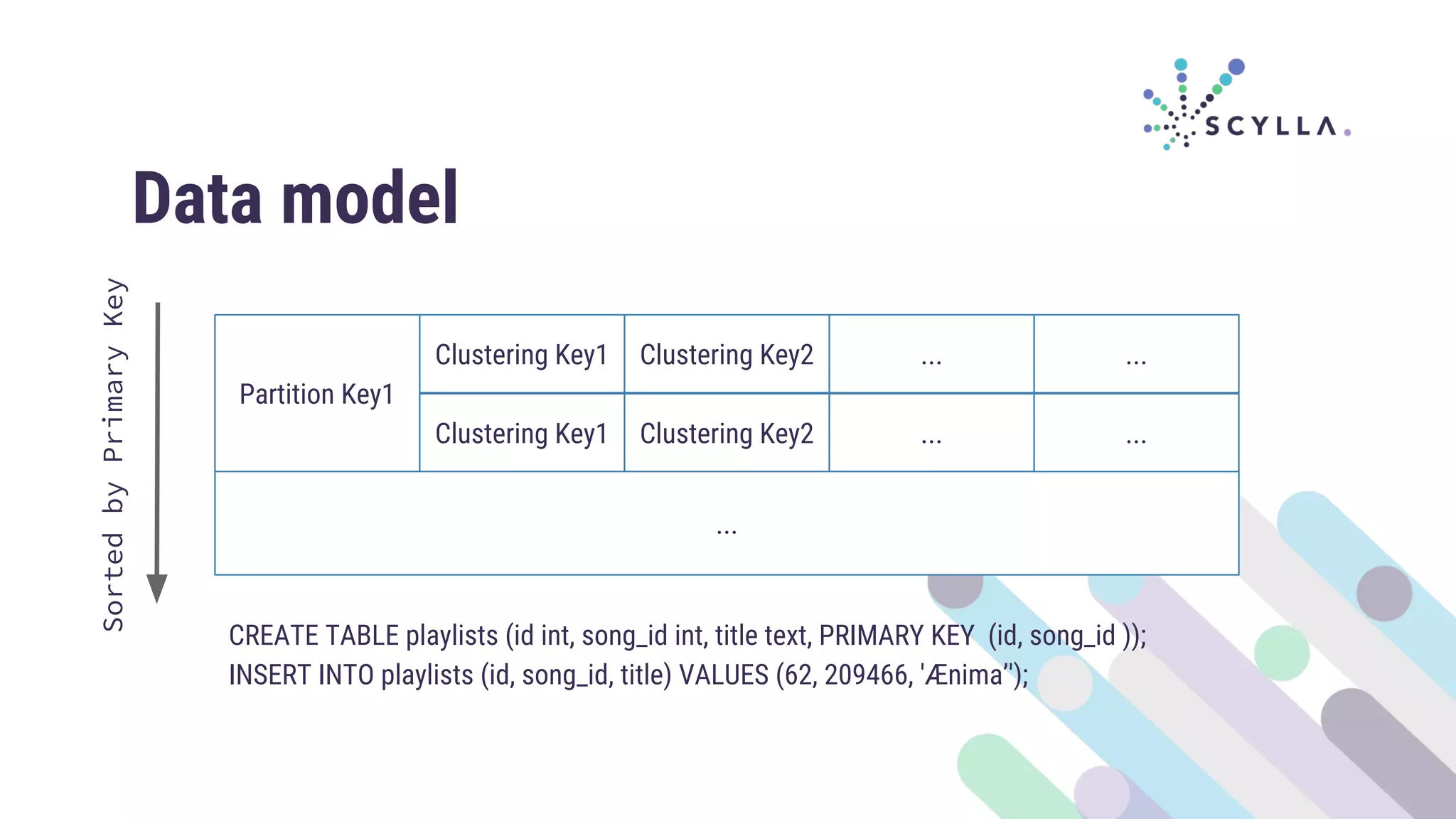

ScyllaDB is a high-performance, clustered NoSQL database compatible with Apache Cassandra. Its architecture, built on the Seastar framework for asynchronous processing, delivers significant improvements in latency and resource management. Mechanisms such as efficient memory allocation and workload conditioning let it maximize throughput under load without extensive tuning from the user. The presentation outlines ScyllaDB's advantages and technical design, and contrasts it with traditional systems such as Cassandra.

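![Futures](https://image.slidesharecdn.com/duartenunes-170608091341/75/ScyllaDB-NoSQL-at-Ludicrous-Speed-28-2048.jpg)

```cpp
// Read a 4-byte request header, route the lookup to the core that owns the id,
// then write the result back. _conn, buf_to_id() and lookup() are defined
// elsewhere in the surrounding class (not shown on the slide).
future<> f = _conn->read_exactly(4).then([this] (temporary_buffer<char> buf) {
    int id = buf_to_id(buf);
    unsigned core = id % smp::count;      // the shard that owns this id
    return smp::submit_to(core, [id] {    // run lookup() on the owning core
        return lookup(id);
    }).then([this] (sstring result) {     // continuation runs back on this core
        return _conn->write(result);
    });
});
```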
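The routing step on the Futures slide reduces to simple arithmetic: each request id is owned by exactly one core, chosen as `id % smp::count`, so the data for that id can live in memory private to that core. The standard-C++ sketch below models only that partitioning, on a single thread and without Seastar. `buf_to_id` here is a hypothetical stand-in that assumes a 4-byte big-endian id (the slide does not show its definition), and `ShardedStore` and `owner_shard` are illustrative names, not Seastar or ScyllaDB APIs.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical stand-in for the slide's buf_to_id(): decode a 4-byte id.
// Big-endian byte order is an assumption; the slide does not specify it.
std::uint32_t buf_to_id(const std::array<unsigned char, 4>& buf) {
    return (std::uint32_t(buf[0]) << 24) | (std::uint32_t(buf[1]) << 16) |
           (std::uint32_t(buf[2]) << 8) | std::uint32_t(buf[3]);
}

// Same rule as the slide: the owning shard is the id modulo the shard count.
unsigned owner_shard(std::uint32_t id, unsigned shard_count) {
    assert(shard_count > 0);
    return id % shard_count;
}

// Toy model of per-core data: one private map per shard. In Seastar each map
// would be touched only by its own core, so no locks would be needed; here
// everything runs on one thread and only the partitioning is illustrated.
class ShardedStore {
public:
    explicit ShardedStore(unsigned shard_count) : shards_(shard_count) {}

    void insert(std::uint32_t id, std::string value) {
        shards_[shard_for(id)][id] = std::move(value);
    }

    // An empty string means "not found" in this sketch.
    std::string lookup(std::uint32_t id) const {
        const auto& owned = shards_[shard_for(id)];
        auto it = owned.find(id);
        return it == owned.end() ? std::string{} : it->second;
    }

    std::size_t size_of_shard(unsigned shard) const { return shards_.at(shard).size(); }

private:
    unsigned shard_for(std::uint32_t id) const {
        return owner_shard(id, static_cast<unsigned>(shards_.size()));
    }
    std::vector<std::unordered_map<std::uint32_t, std::string>> shards_;
};
```

With four shards, ids 10 and 7 land in shards 2 and 3 respectively. Because any core can compute the owner from the id alone, the slide can forward the lookup with `smp::submit_to(core, ...)` rather than sharing one structure across cores.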

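![Unless...](https://image.slidesharecdn.com/duartenunes-170608091341/75/ScyllaDB-NoSQL-at-Ludicrous-Speed-35-2048.jpg)

```cpp
// seastar::async() runs the lambda in a seastar::thread, where get() may
// block: it suspends only this thread while the reactor keeps running.
future<> f = seastar::async([&] {
    future<> f = …;
    f.get();
});
```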