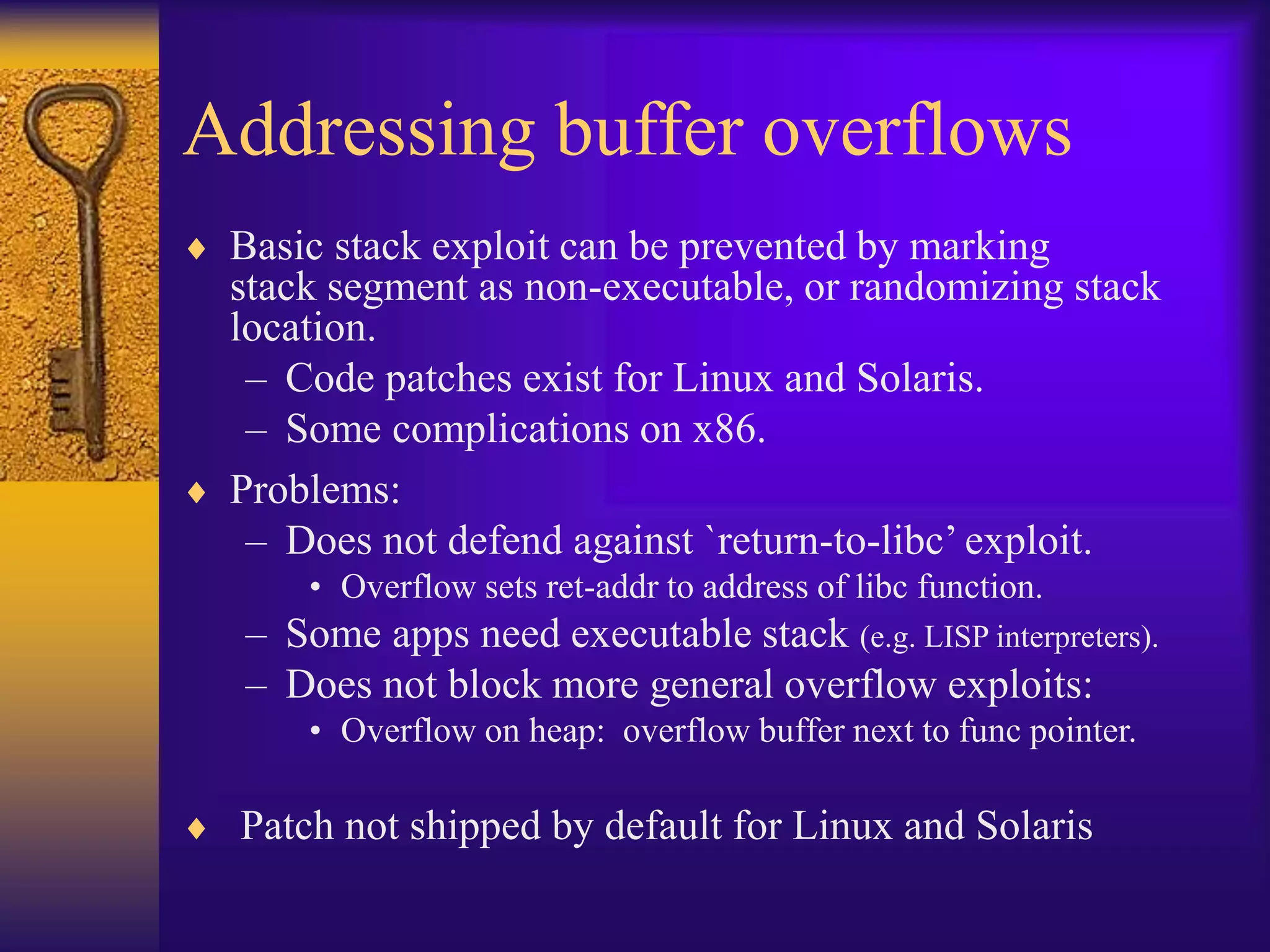

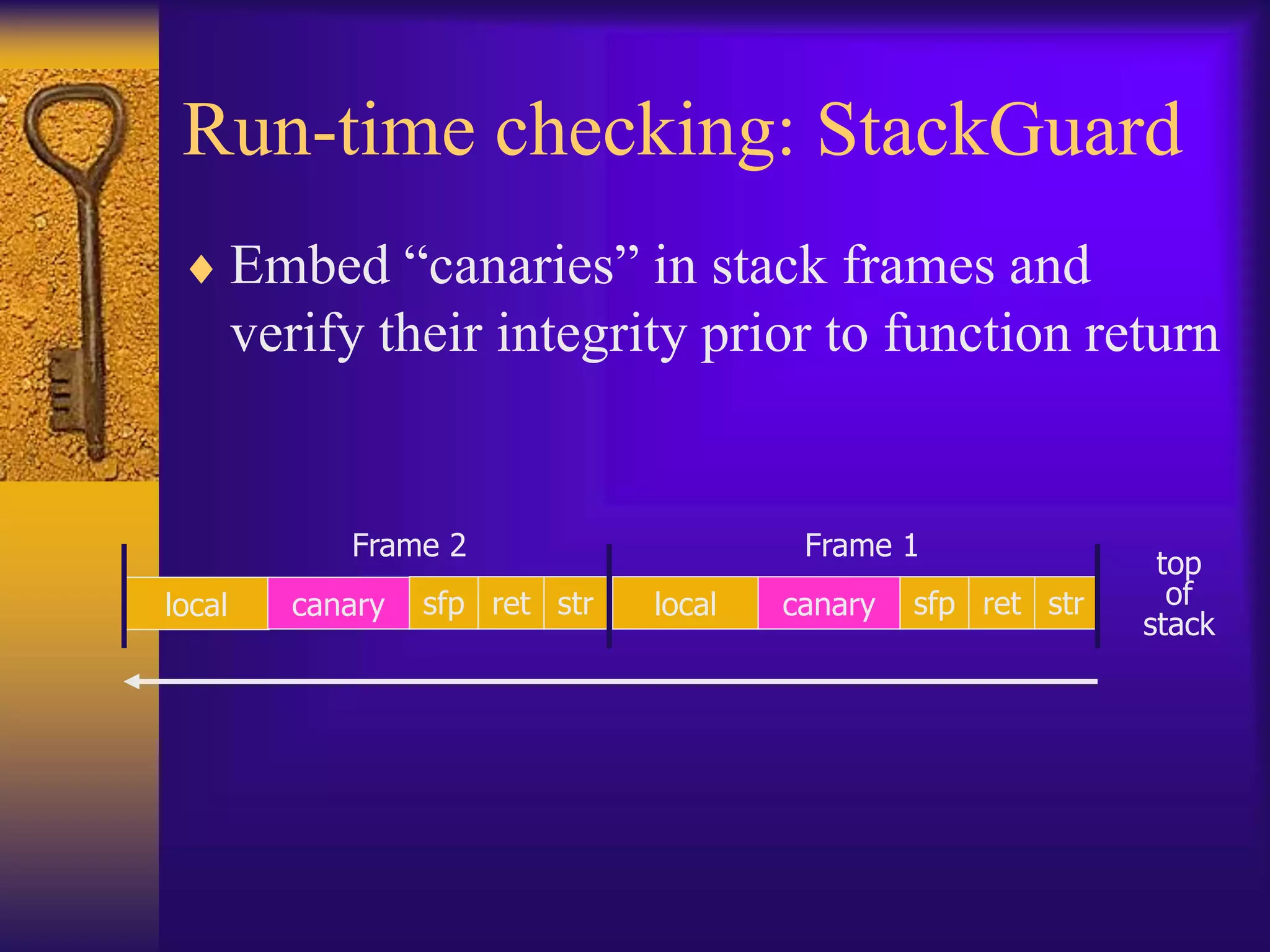

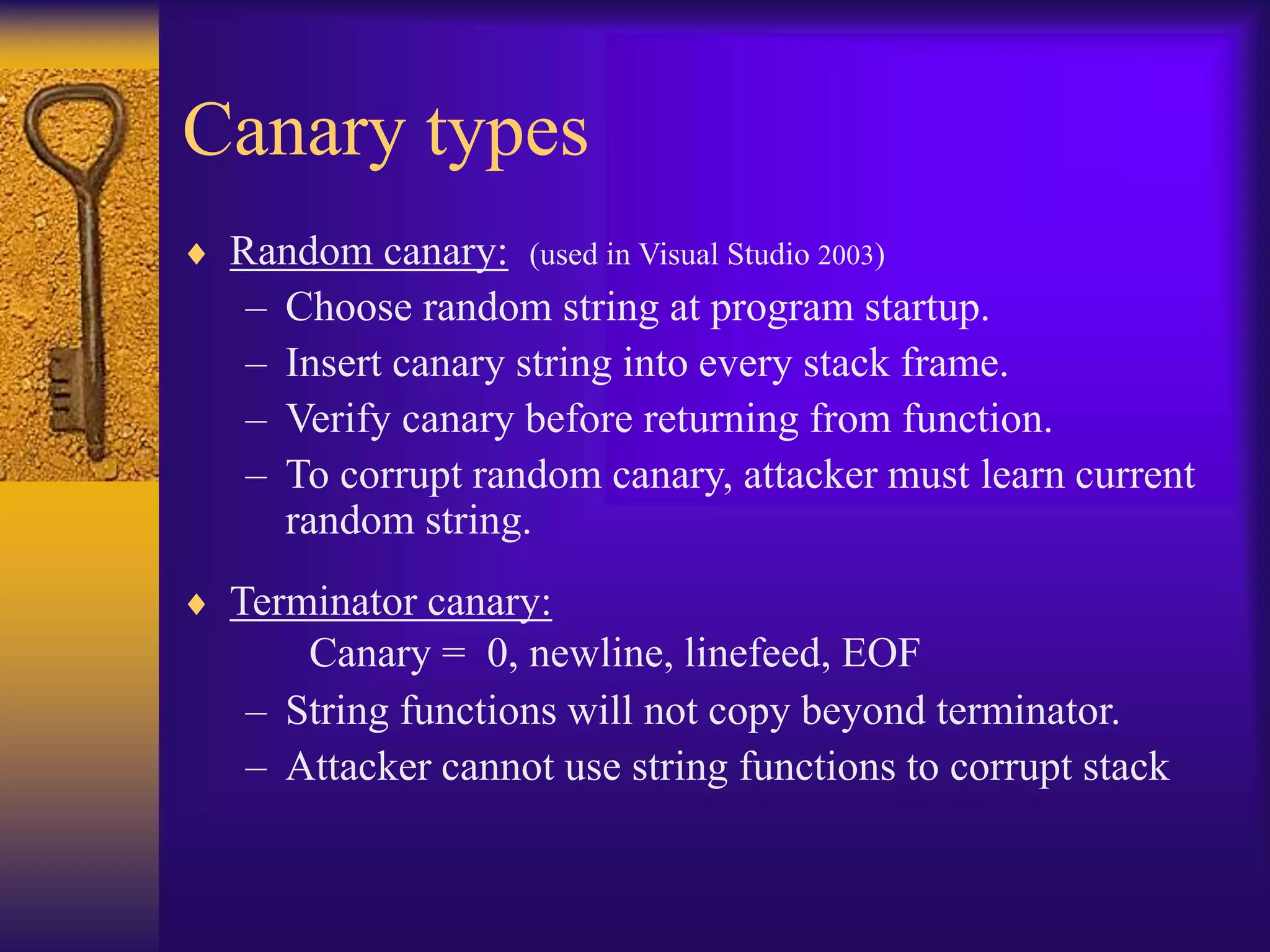

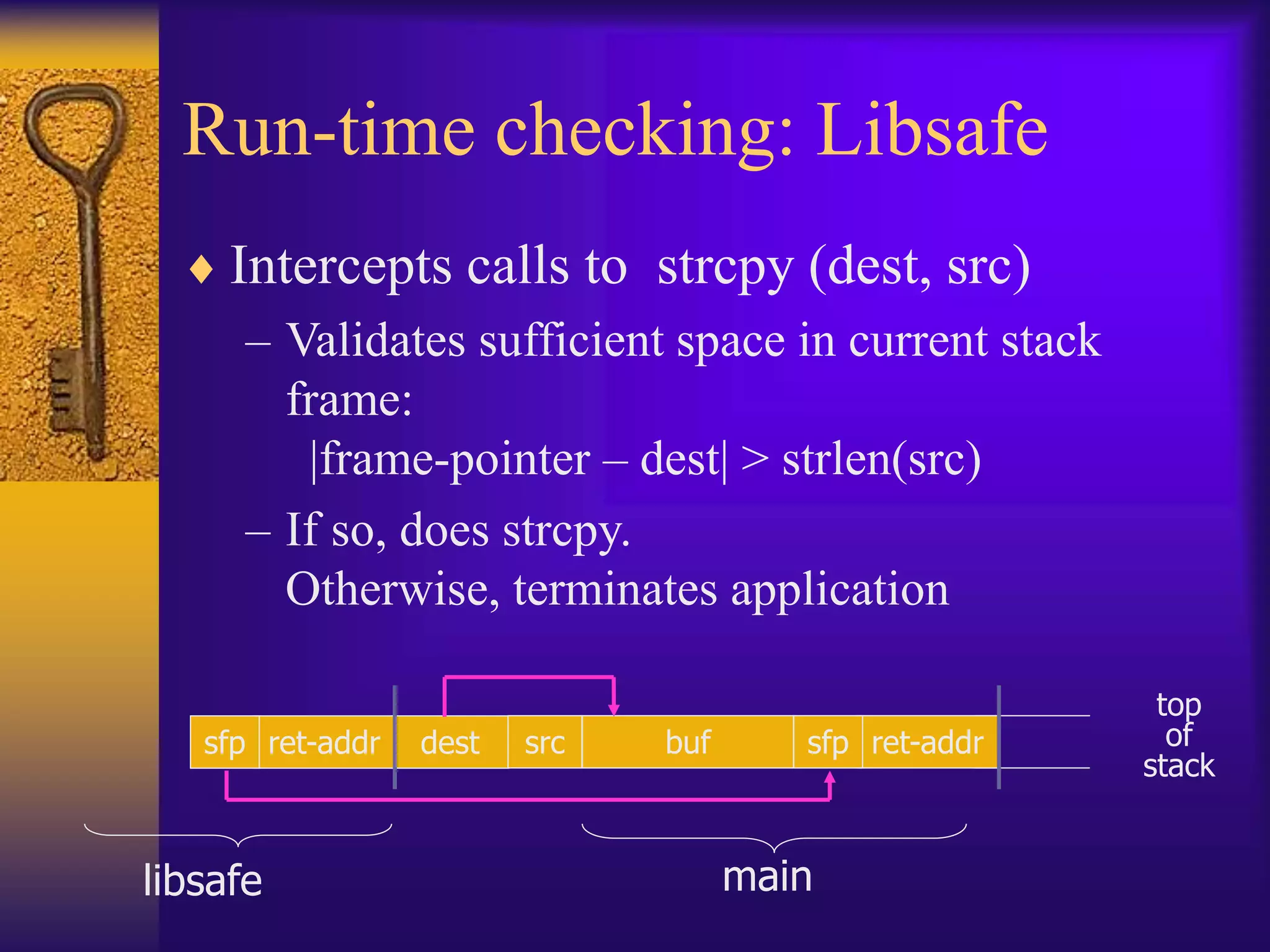

The document discusses secure programming techniques for Unix-like systems, with emphasis on validating input, avoiding buffer overflows, and following established programming practices. It surveys methods for improving security, including source code scanners, runtime checking, and sandboxing, as well as defenses against malicious code such as viruses and worms. It also highlights specific tools and approaches, including StackGuard, Libsafe, and code signing, for mitigating vulnerabilities in software applications.

![Validating input](https://image.slidesharecdn.com/lecture25-221129062045-62021ace/75/Secure-programming-Computer-and-Network-Security-5-2048.jpg)

Validating input

Filenames

– Disallow *, .., etc.

HTML, URLs, cookies

– Cf. cross-site scripting attacks

Command-line arguments

– Even argv[0]…

Config files
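The filename and HTML points on this slide can be illustrated with a minimal Python sketch. It is not taken from the slides: the helper names `is_safe_filename` and `escape_for_html`, the allow-list pattern, and the 255-character limit are all assumptions chosen for illustration. The idea is to accept only input that matches a narrow allow-list instead of trying to enumerate every dangerous character, and to escape untrusted text before placing it in HTML.

```python
import html
import re

# Hypothetical allow-list (an assumption, not from the slides): letters,
# digits, underscore, dot, and hyphen. The first character may not be a
# dot or a hyphen, so names cannot look like hidden files or options.
_SAFE_NAME = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]*")


def is_safe_filename(name: str) -> bool:
    """Return True only if name is a plain filename on the allow-list."""
    if len(name) > 255:   # assumed length limit
        return False
    if ".." in name:      # reject parent-directory sequences outright
        return False
    # fullmatch requires the whole string to match, so '*', '/',
    # whitespace, and control characters are all rejected.
    return _SAFE_NAME.fullmatch(name) is not None


def escape_for_html(untrusted: str) -> str:
    """Escape &, <, >, and quotes before inserting text into HTML."""
    return html.escape(untrusted, quote=True)
```

With this sketch, `is_safe_filename("report.txt")` is accepted, while `"../etc/passwd"`, `"*.c"`, and `"-rf"` are rejected. Escaping addresses only one part of cross-site scripting; URLs, cookies, command-line arguments, and config file contents each need their own validation rules.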