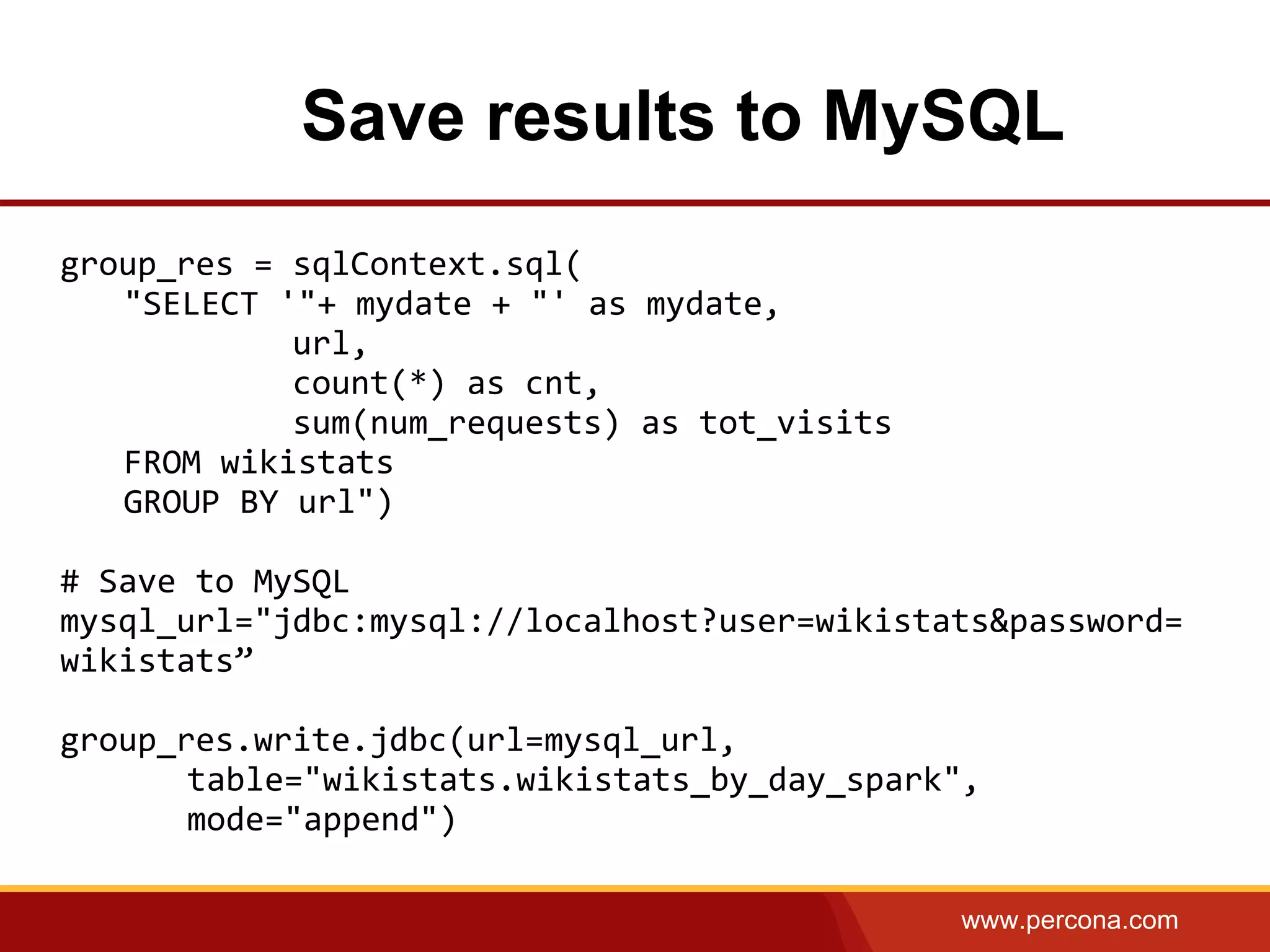

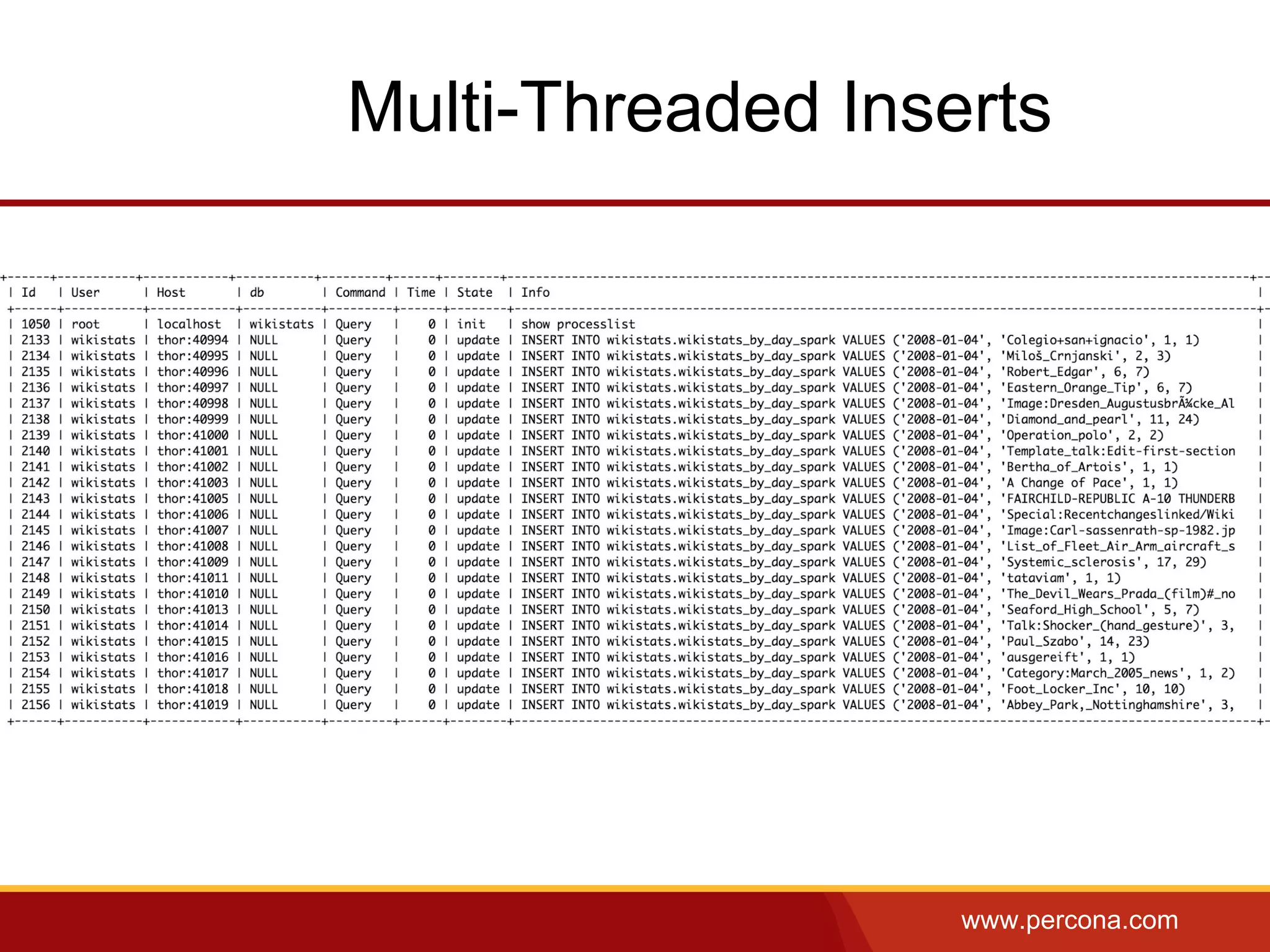

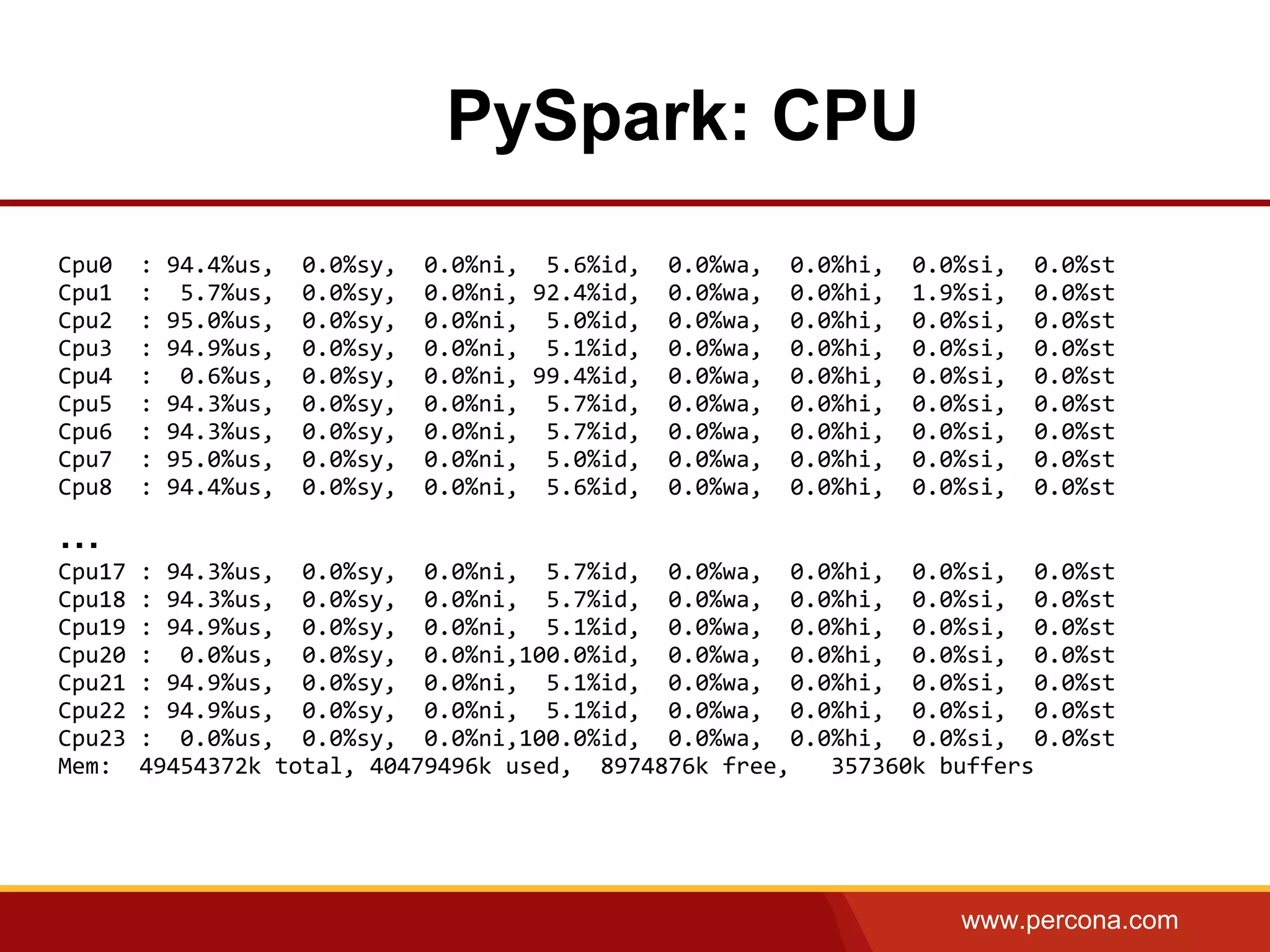

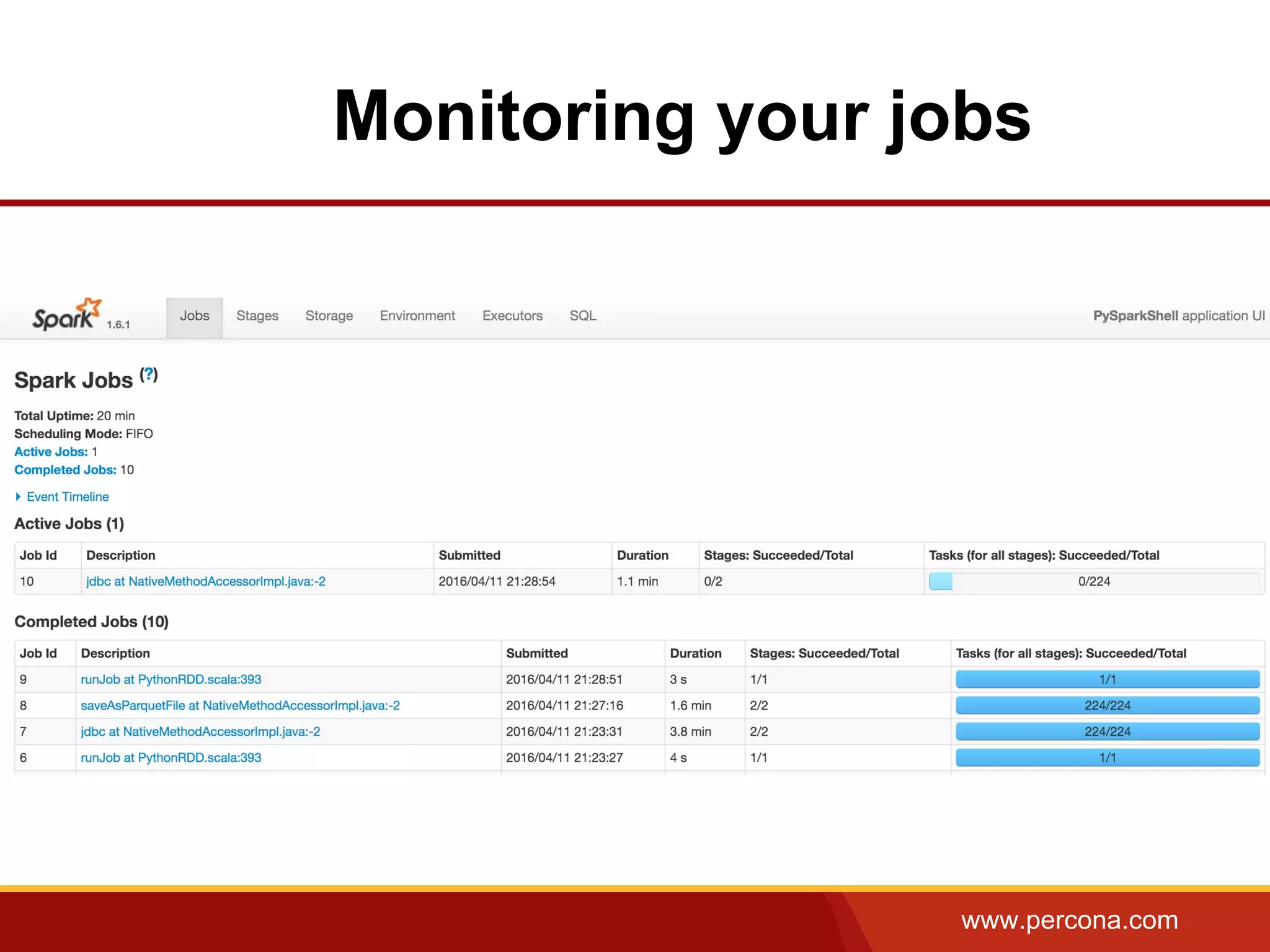

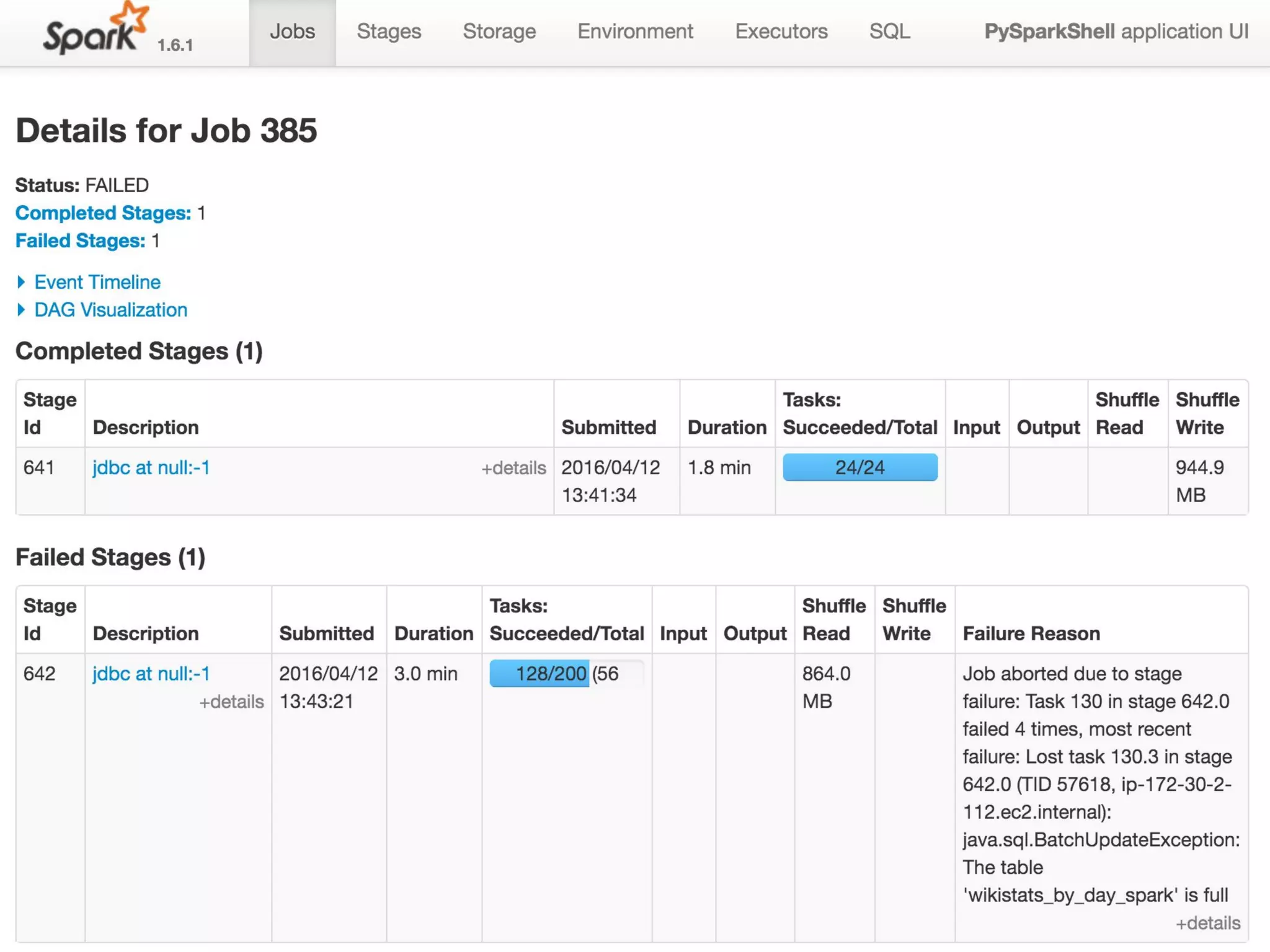

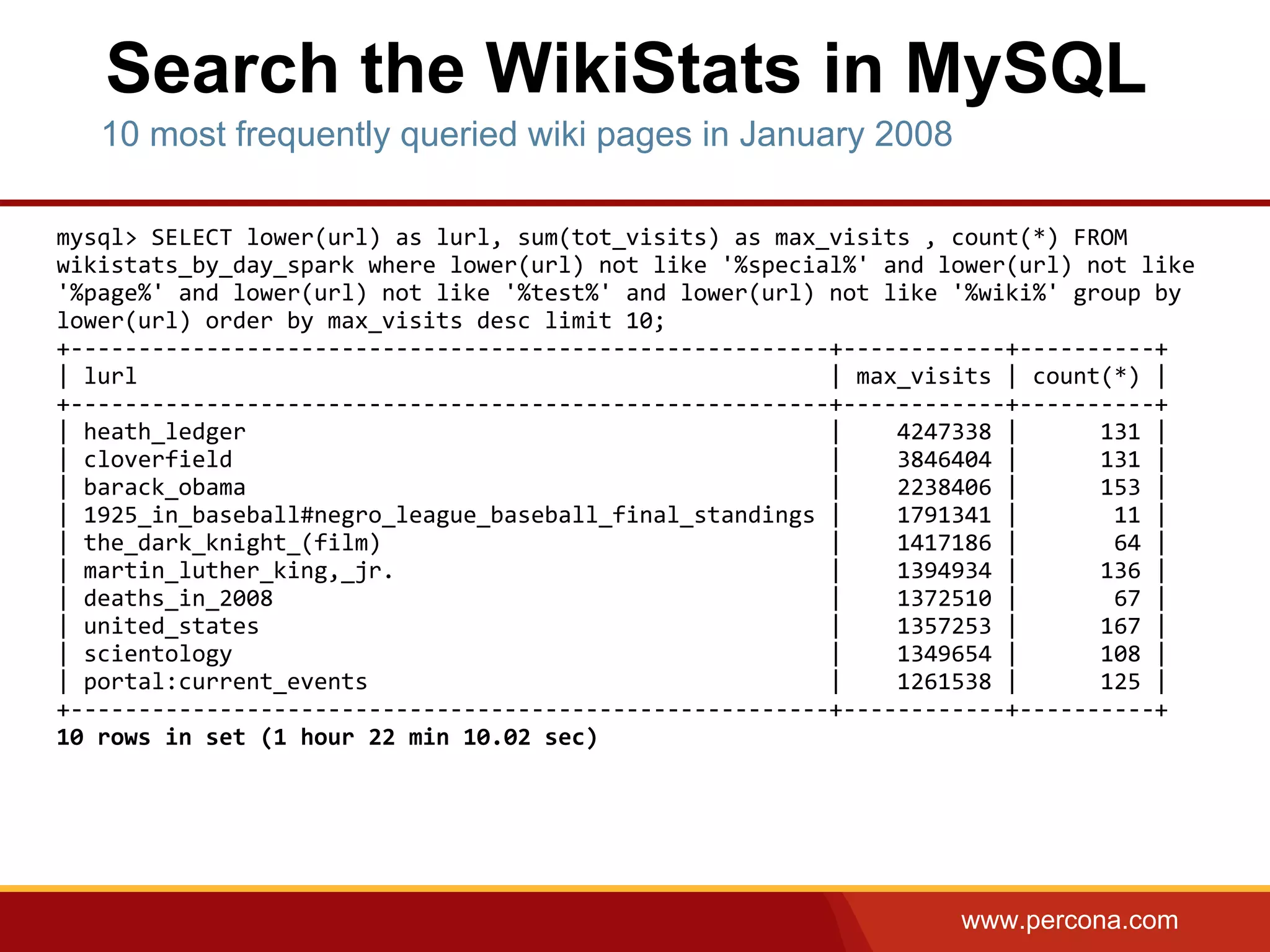

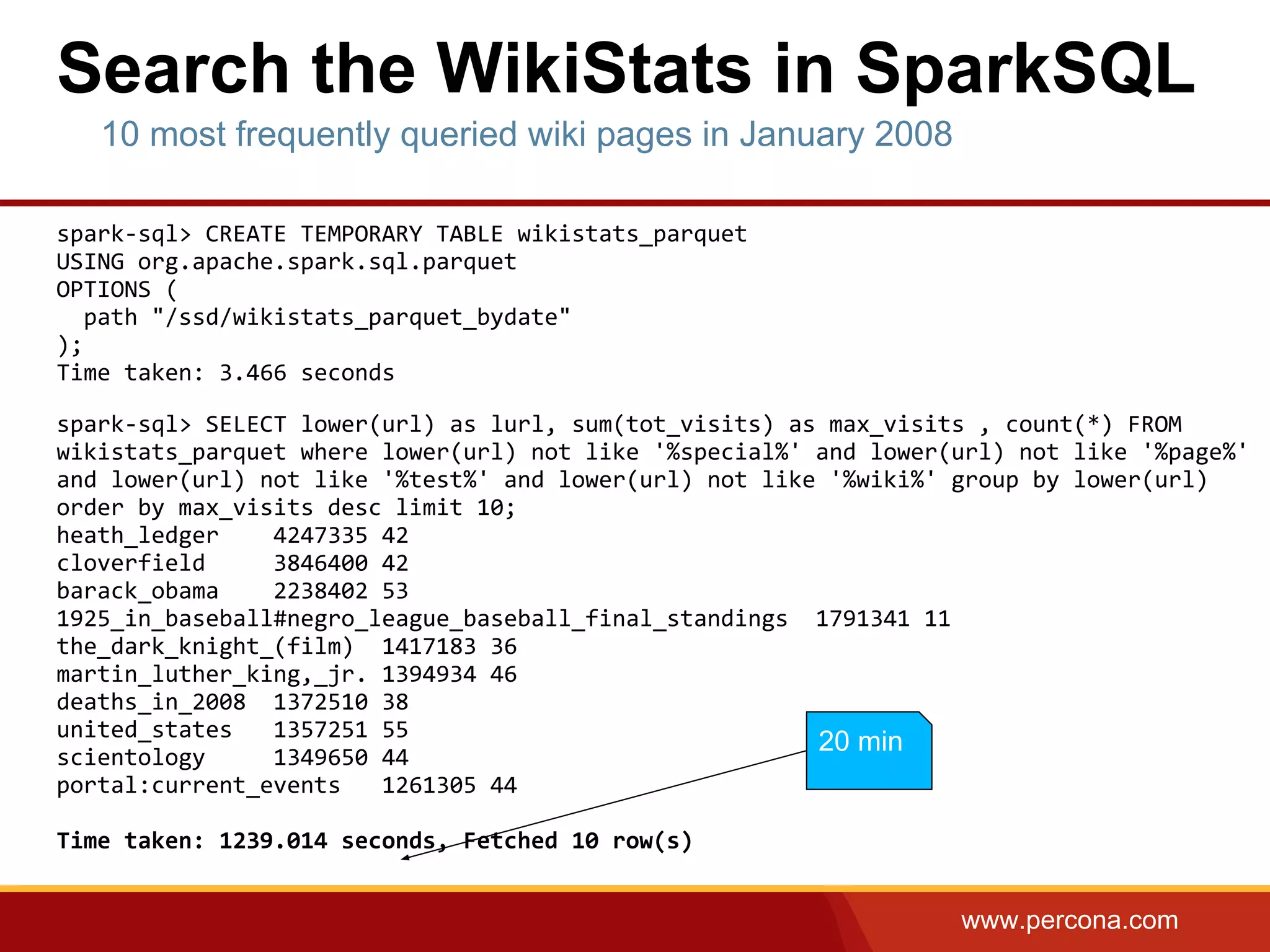

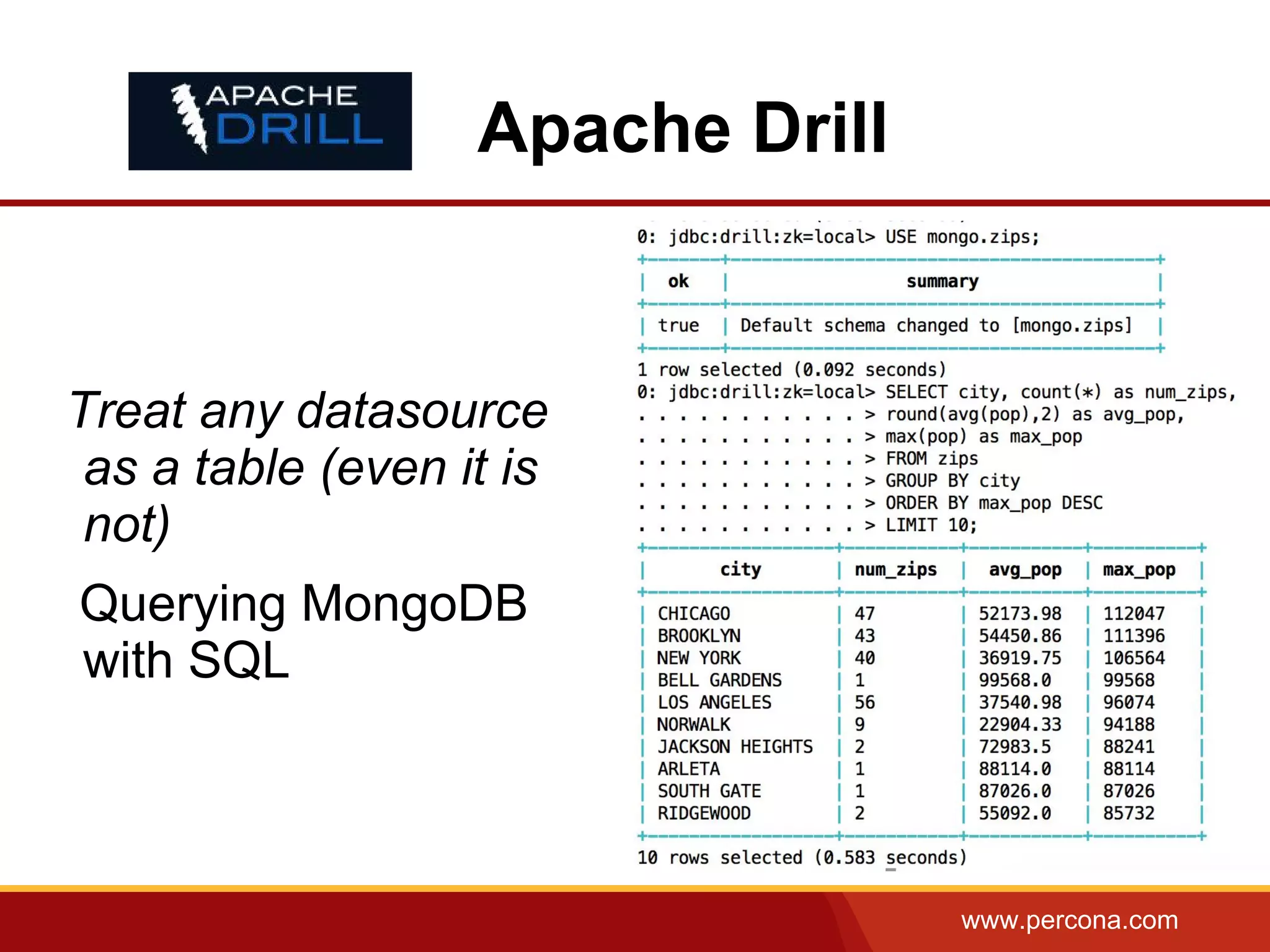

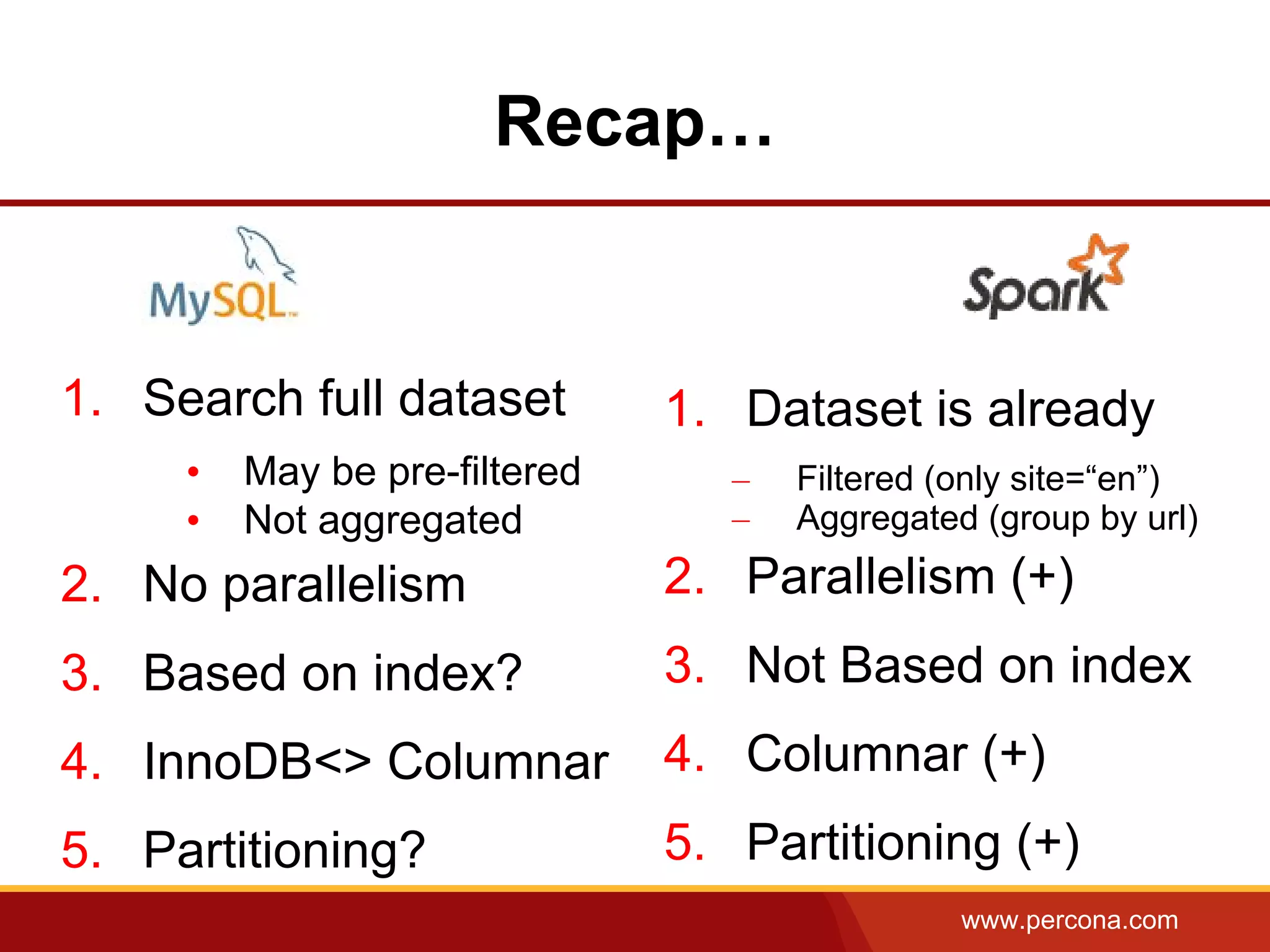

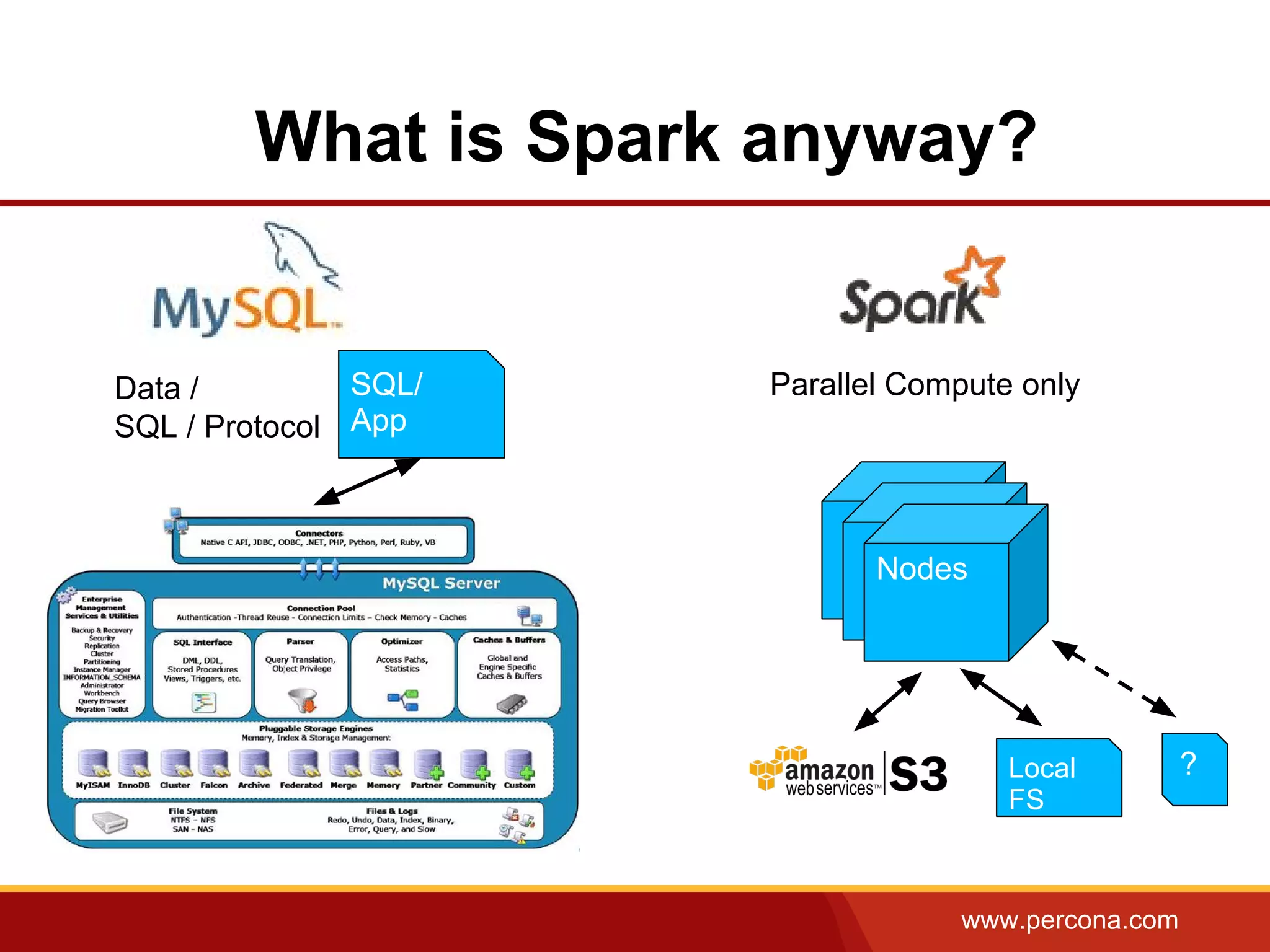

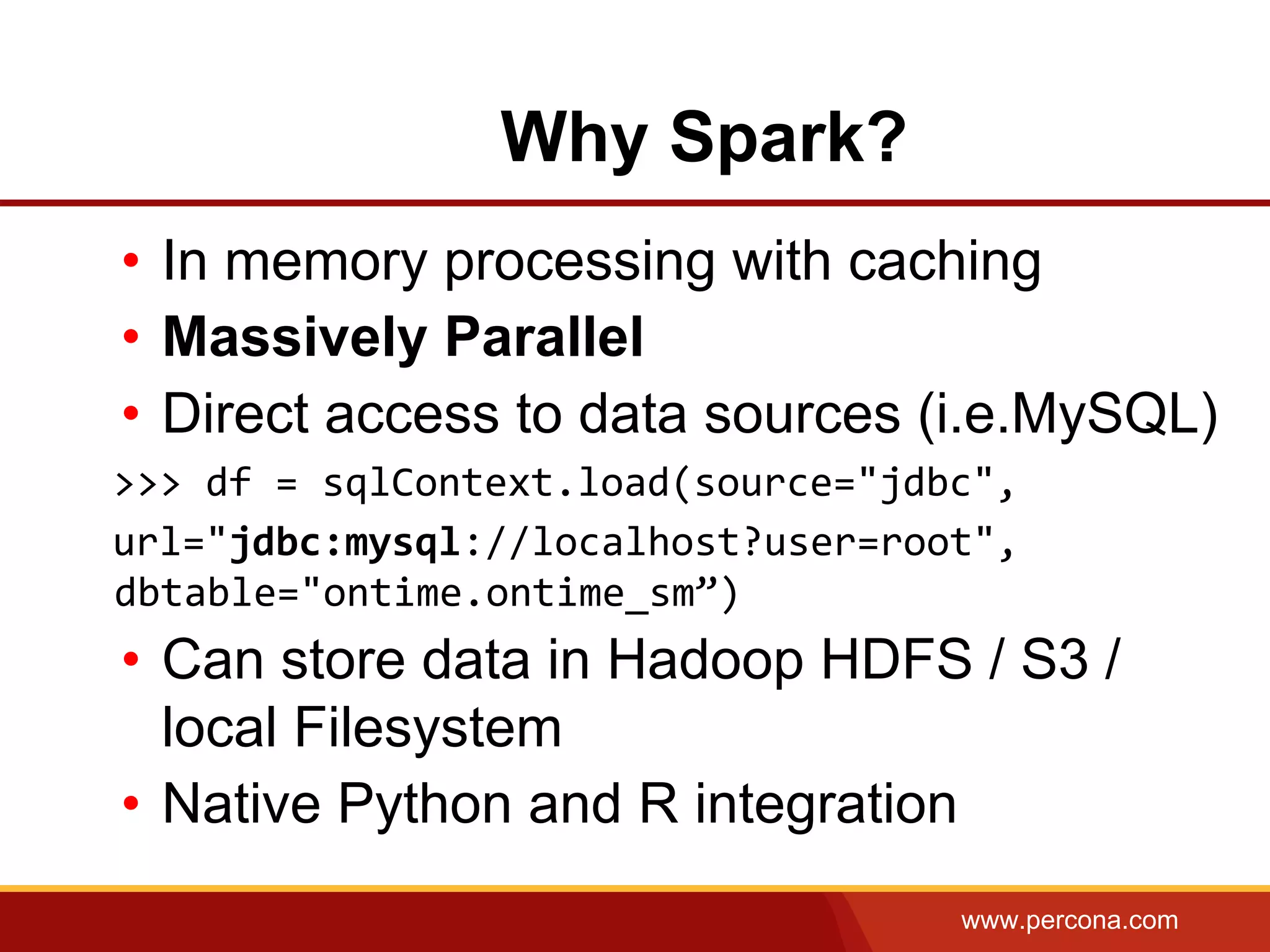

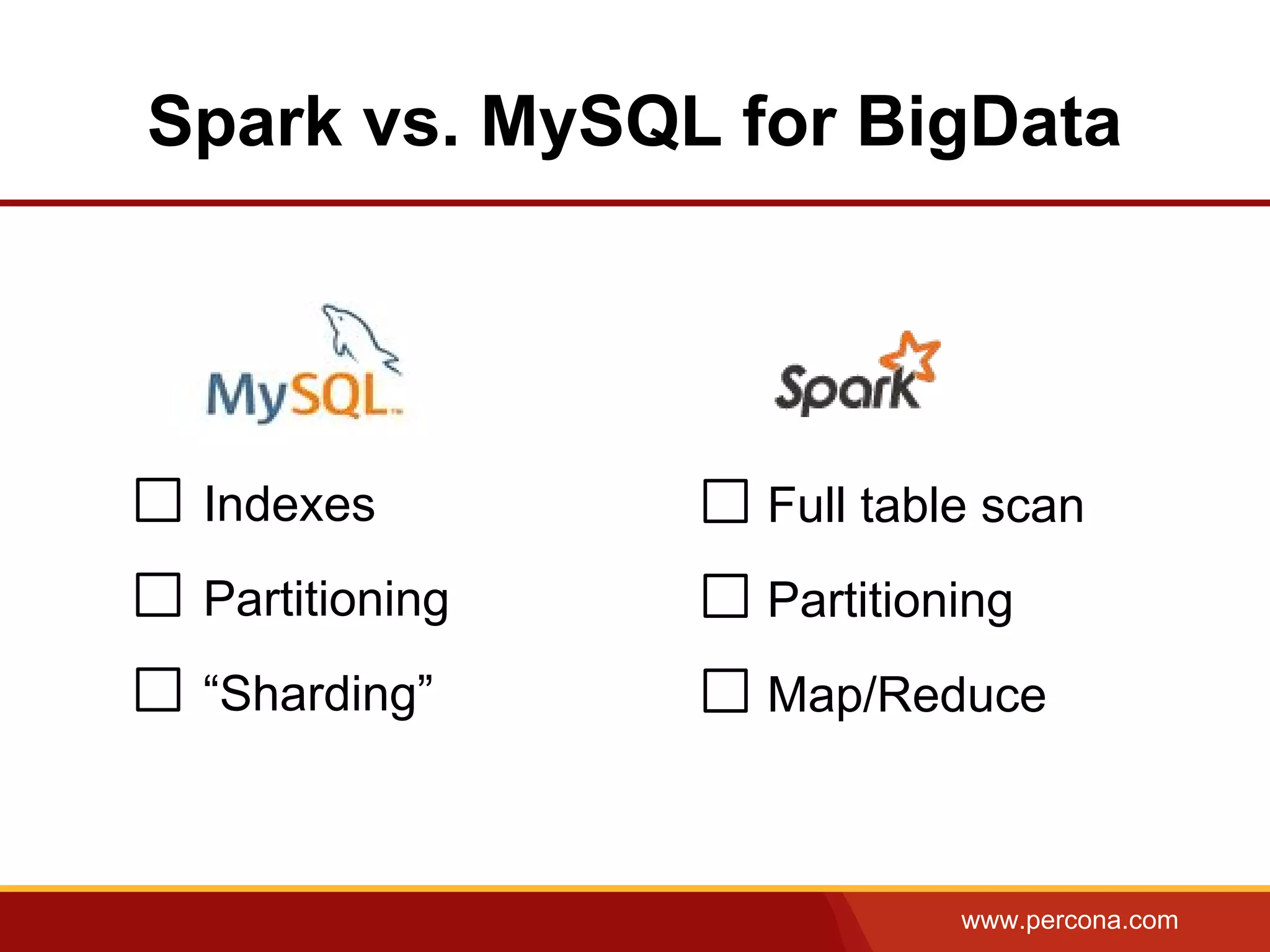

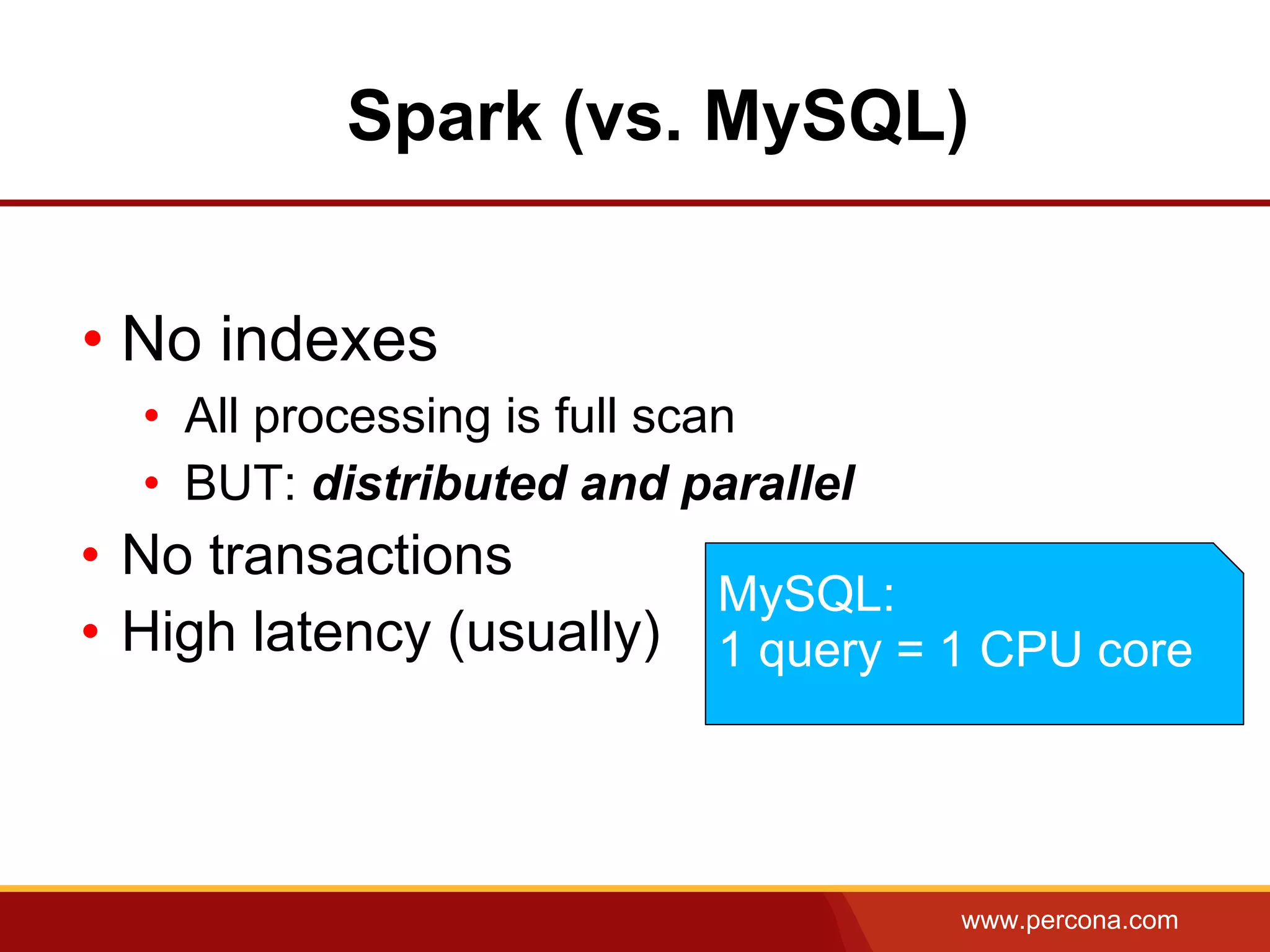

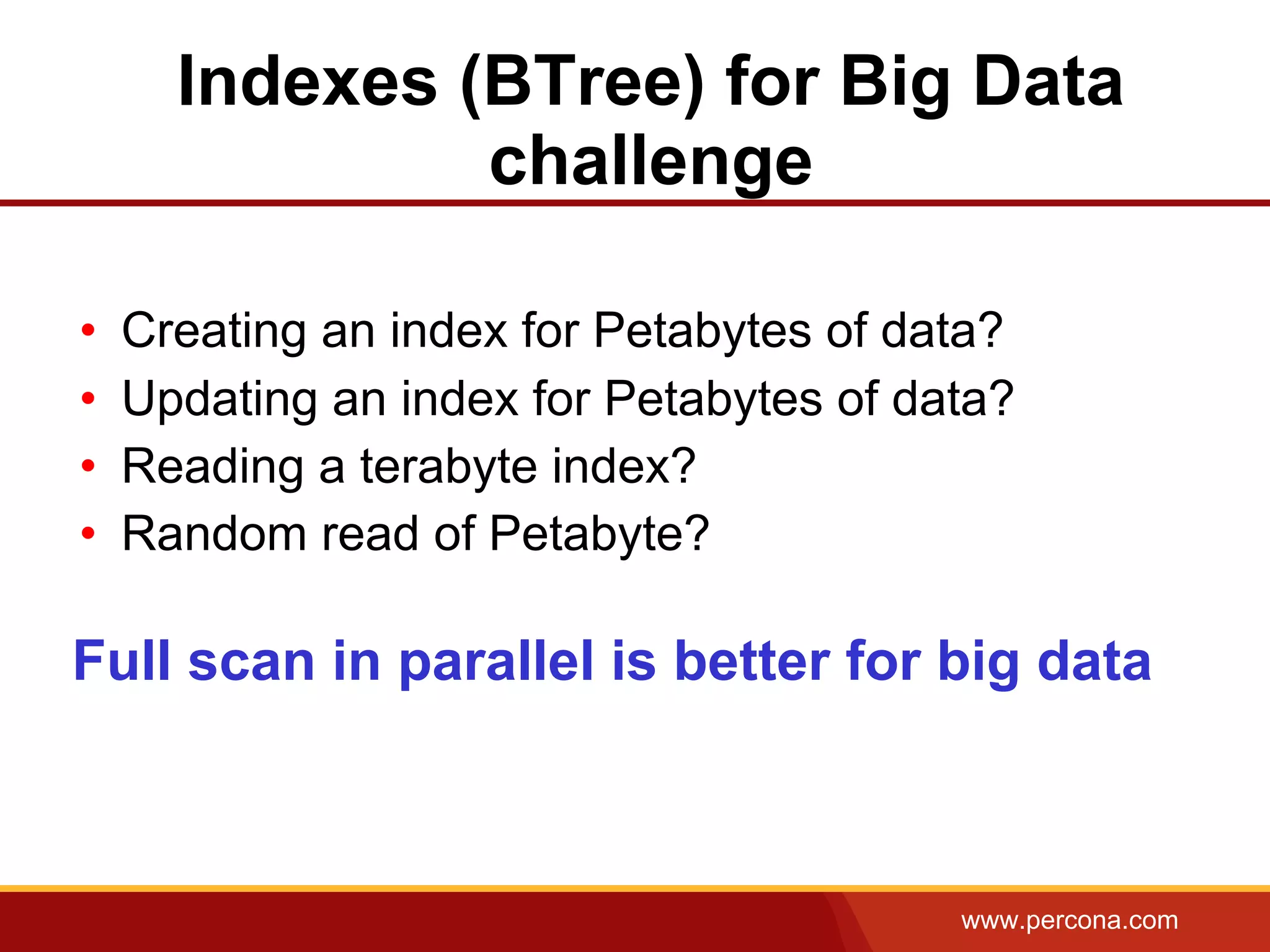

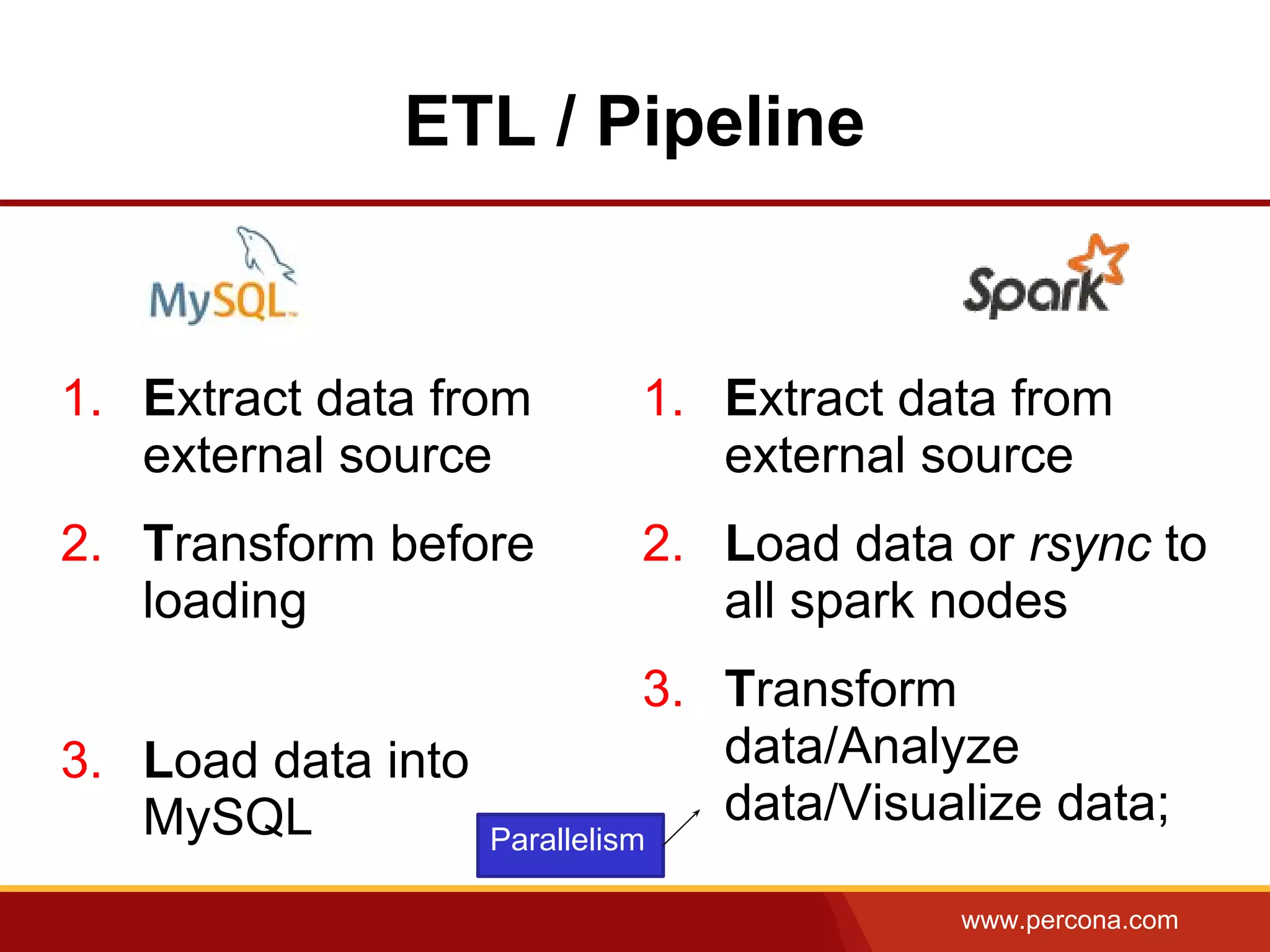

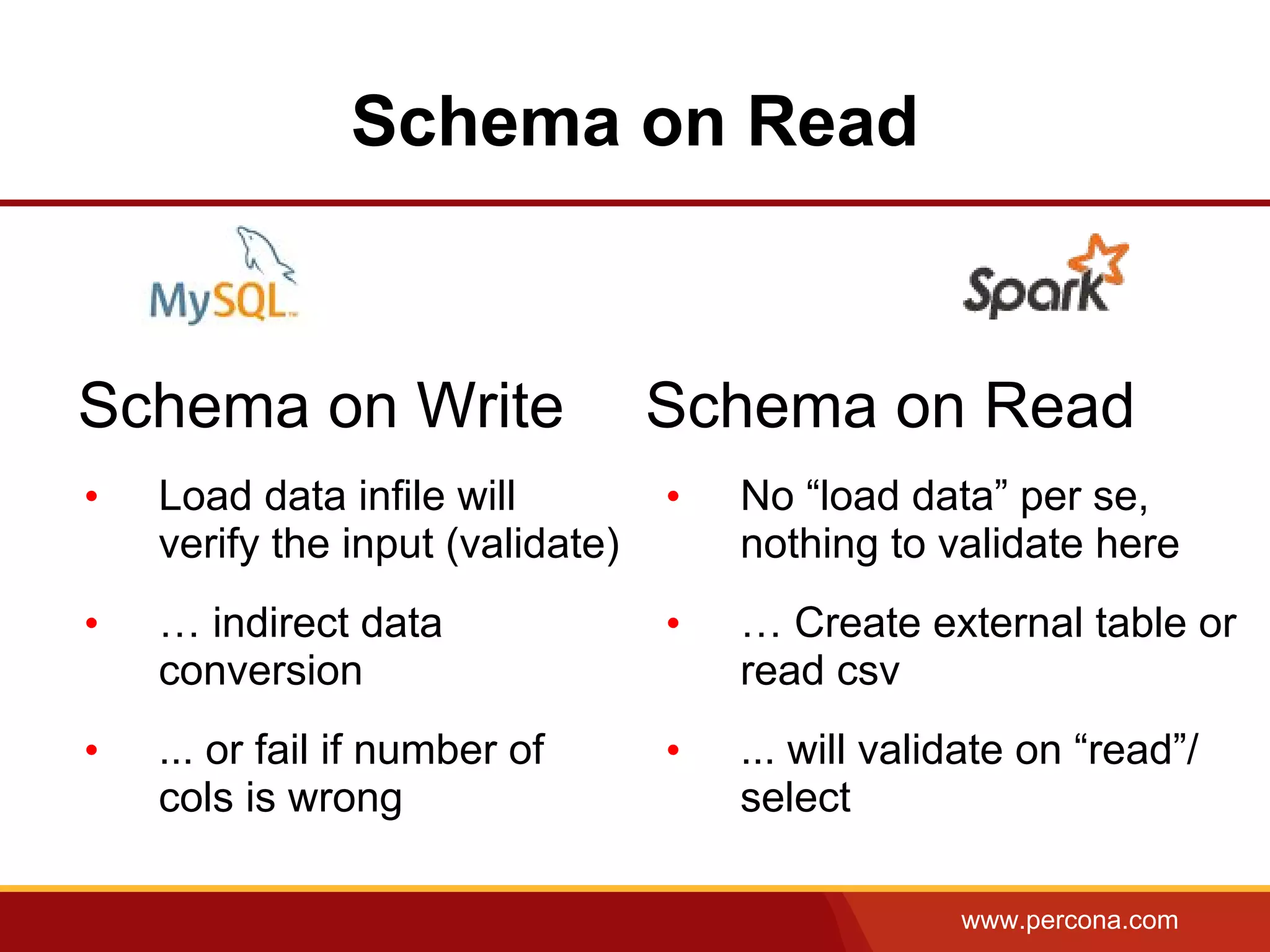

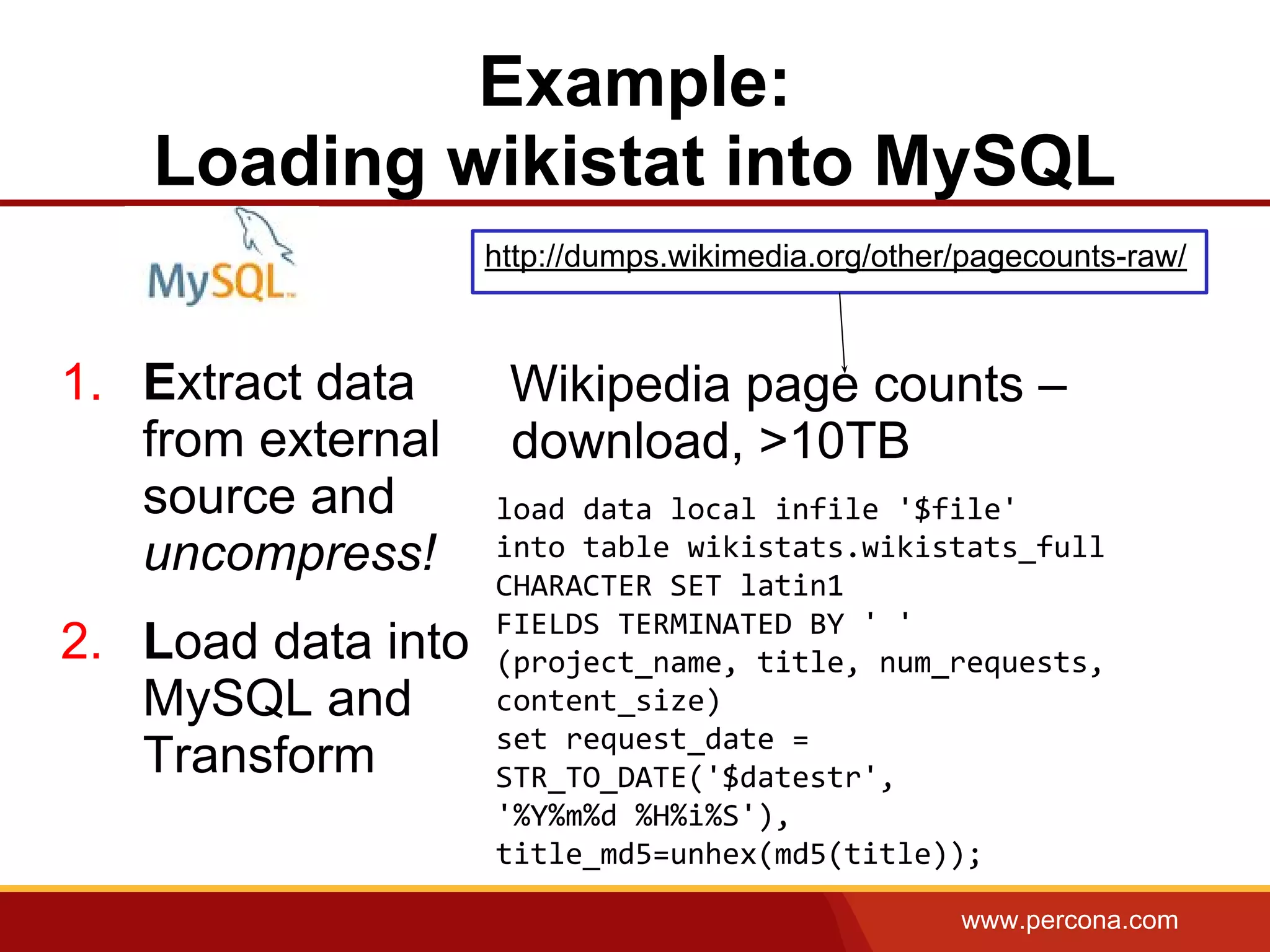

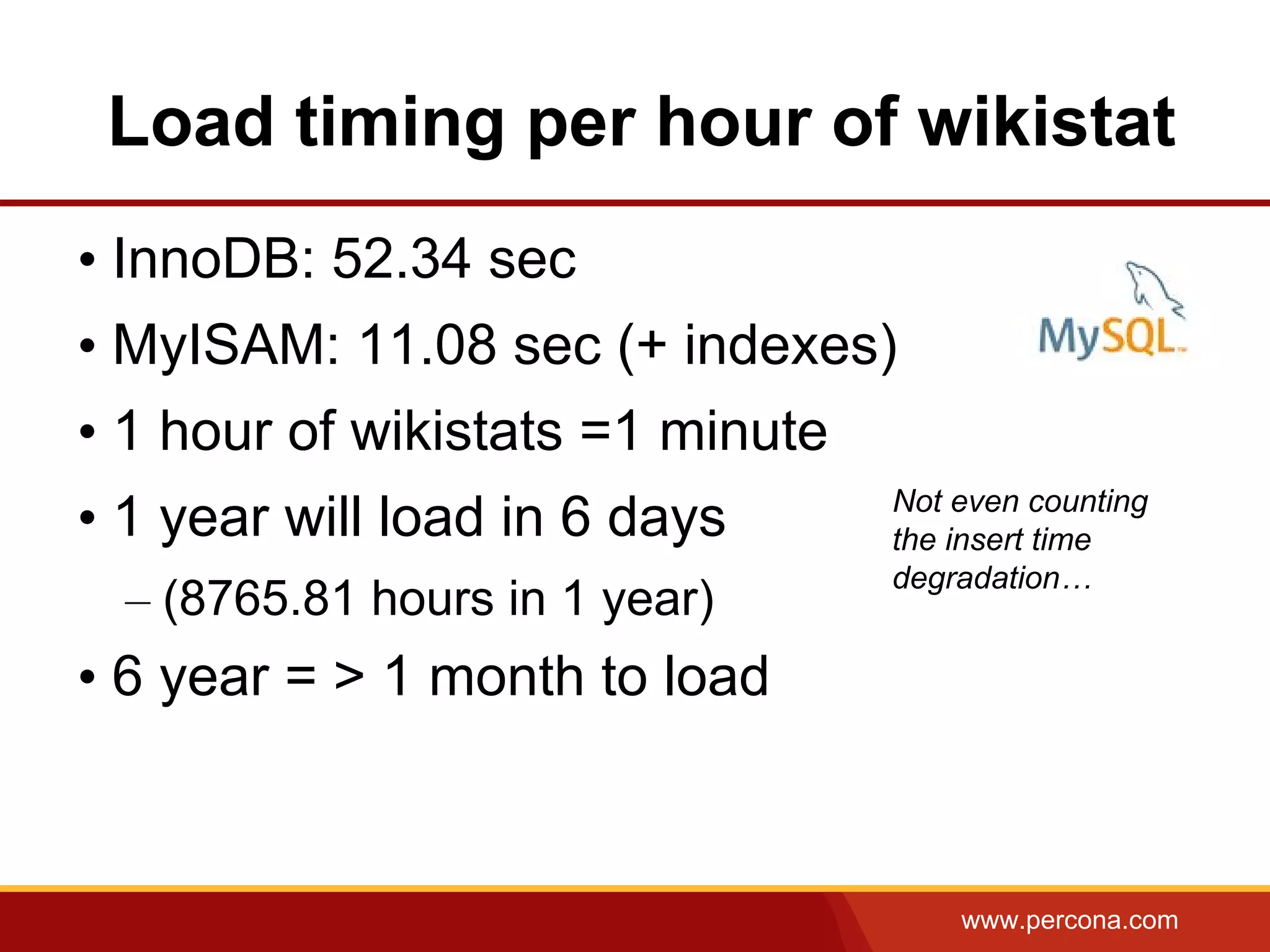

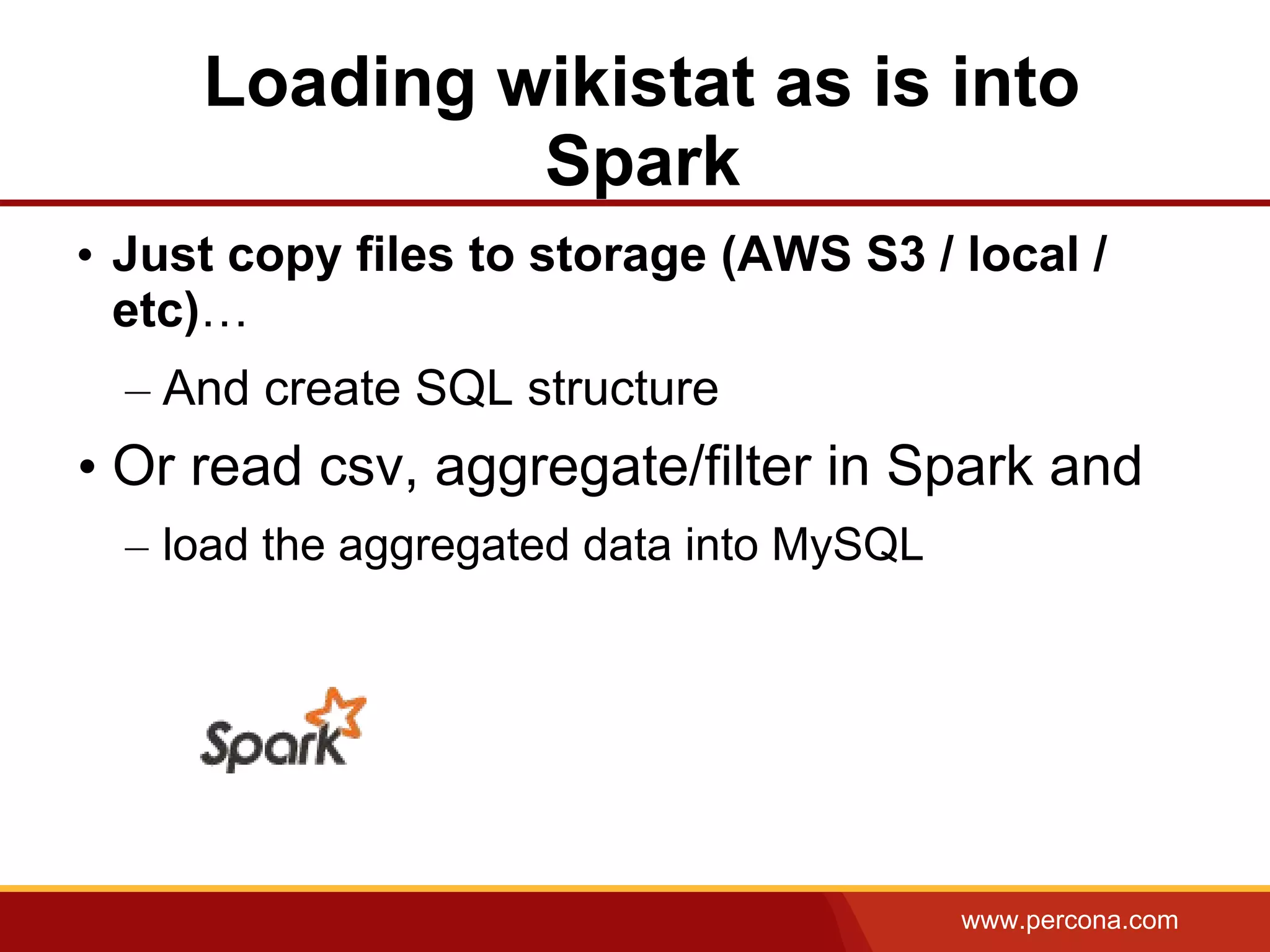

The document discusses using Apache Spark and MySQL together for data analysis, with examples of loading Wikipedia usage statistics (Wikistats) into each system. Loading the full 10+ TB Wikistats dataset into MySQL took over a month, whereas Spark scanned and analyzed the entire dataset in under an hour by distributing the work in parallel across multiple nodes. The document also compares how the two systems handle big data: for example, Spark has no indexes but can run full scans in parallel across nodes.
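The trade-off between indexes and parallel full scans can be illustrated without a cluster. The following is a hypothetical, single-machine stand-in, not Spark itself. It splits Wikistats-style lines into partitions, scans each partition completely in a thread pool, and merges the per-partition totals. This scan-then-combine pattern is what Spark applies across executors on many nodes. The function names, sample lines, and partition count are assumptions for illustration.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def scan_partition(lines):
    """Full scan of one partition: sum requests per project, no index involved."""
    totals = Counter()
    for line in lines:
        fields = line.split(" ")
        if len(fields) != 4:
            continue  # skip malformed lines instead of failing the whole scan
        project, _title, num_requests, _size = fields
        totals[project] += int(num_requests)
    return totals


def parallel_requests_by_project(lines, num_partitions=4):
    """Split input into partitions, scan them concurrently, then merge results."""
    partitions = [lines[i::num_partitions] for i in range(num_partitions)]
    merged = Counter()
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        # pool.map yields per-partition Counters in partition order
        for partial in pool.map(scan_partition, partitions):
            merged.update(partial)  # Counter.update adds counts
    return dict(merged)


# Hypothetical sample lines in the "project title requests bytes" layout
sample = [
    "en Main_Page 42 50000",
    "en Apache_Spark 7 12000",
    "de Hauptseite 15 30000",
    "en MySQL 3 8000",
]
print(parallel_requests_by_project(sample))
```

Threads are used only to keep the sketch self-contained. In Spark, each partition is processed by a separate task, and those tasks can run on different machines, which is where the speedup over a single indexed MySQL server comes from.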

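![www.percona.com](https://image.slidesharecdn.com/usingapachesparkandmysqlfordataanalysis-170205010832/75/Using-Apache-Spark-and-MySQL-for-Data-Analysis-16-2048.jpg)

Spark and WikiStats: load pipeline

```python
from pyspark.sql import Row
import urllib  # Python 2 API; on Python 3 use urllib.parse.unquote

# `parts` (an RDD of space-split Wikistats lines) and `wiki` are illustrative names
wiki = parts.map(lambda p: Row(project=p[0],
                               url=urllib.unquote(p[1]).lower(),
                               num_requests=int(p[2]),
                               content_size=int(p[3])))
```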
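The `Row` mapping in the load pipeline turns each space-separated Wikistats line into four fields. Below is a minimal pure-Python sketch of the same per-line transformation. It assumes the pagecounts layout `project page_title num_requests content_size` and Python 3, where `urllib.unquote` moved to `urllib.parse.unquote`. `WikiRow` and `parse_line` are hypothetical names, and a `namedtuple` stands in for Spark's `Row` so the example runs without a cluster.

```python
from collections import namedtuple
from urllib.parse import unquote

# Hypothetical stand-in for pyspark.sql.Row with the same four fields
WikiRow = namedtuple("WikiRow", ["project", "url", "num_requests", "content_size"])


def parse_line(line):
    """Parse one Wikistats line into a WikiRow, mirroring the slide's lambda."""
    p = line.split(" ")
    return WikiRow(project=p[0],
                   url=unquote(p[1]).lower(),  # decode %XX escapes, normalize case
                   num_requests=int(p[2]),
                   content_size=int(p[3]))


row = parse_line("en Apache%20Spark 7 12000")
print(row)
```

Lower-casing the decoded title means that differently capitalized requests for the same page share one key in later aggregations. In PySpark, a function like this could be passed to `RDD.map`, for example `sc.textFile(path).map(parse_line)`, where `path` is a placeholder for the Wikistats input location.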