Download as PDF, PPTX

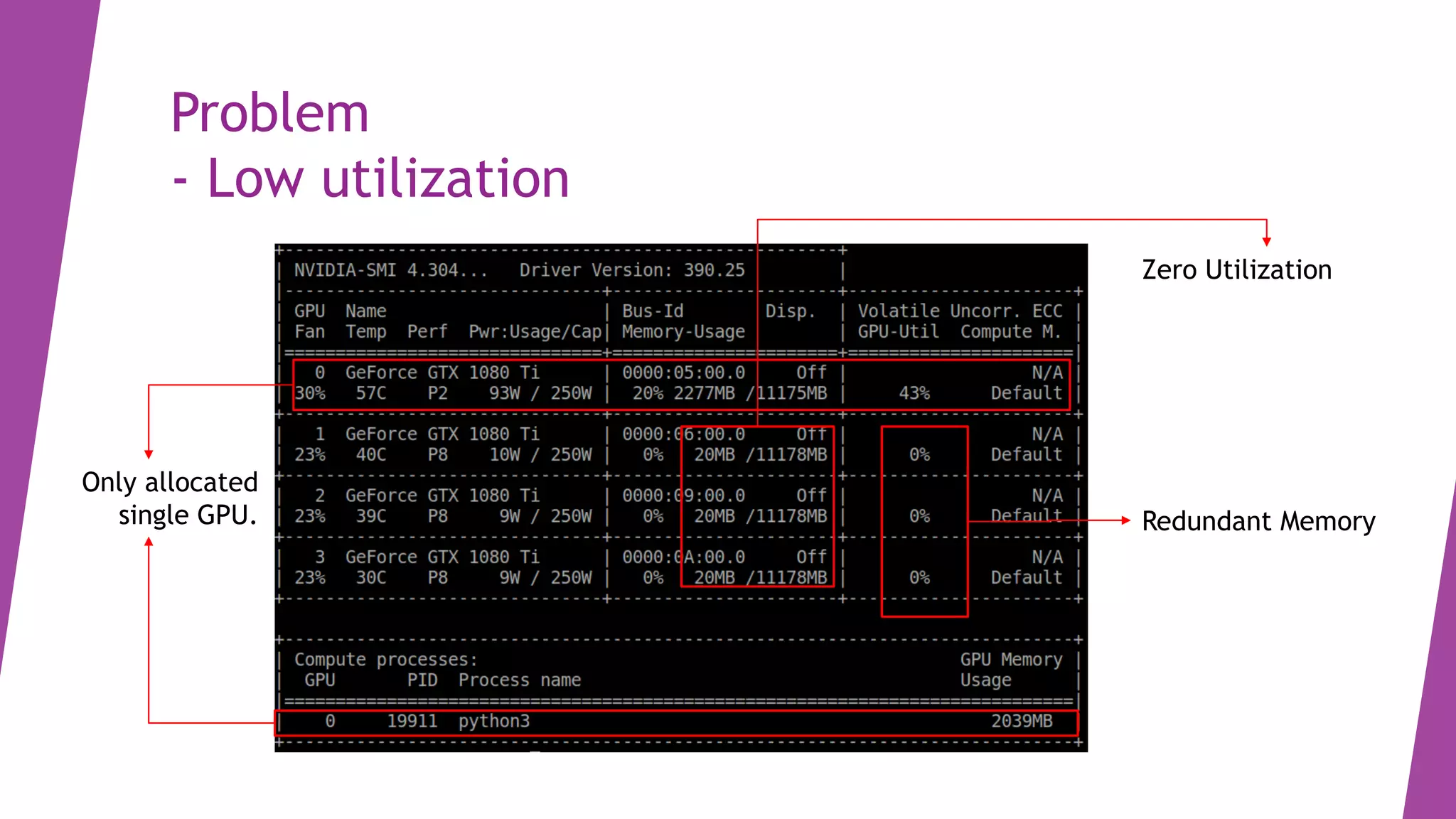

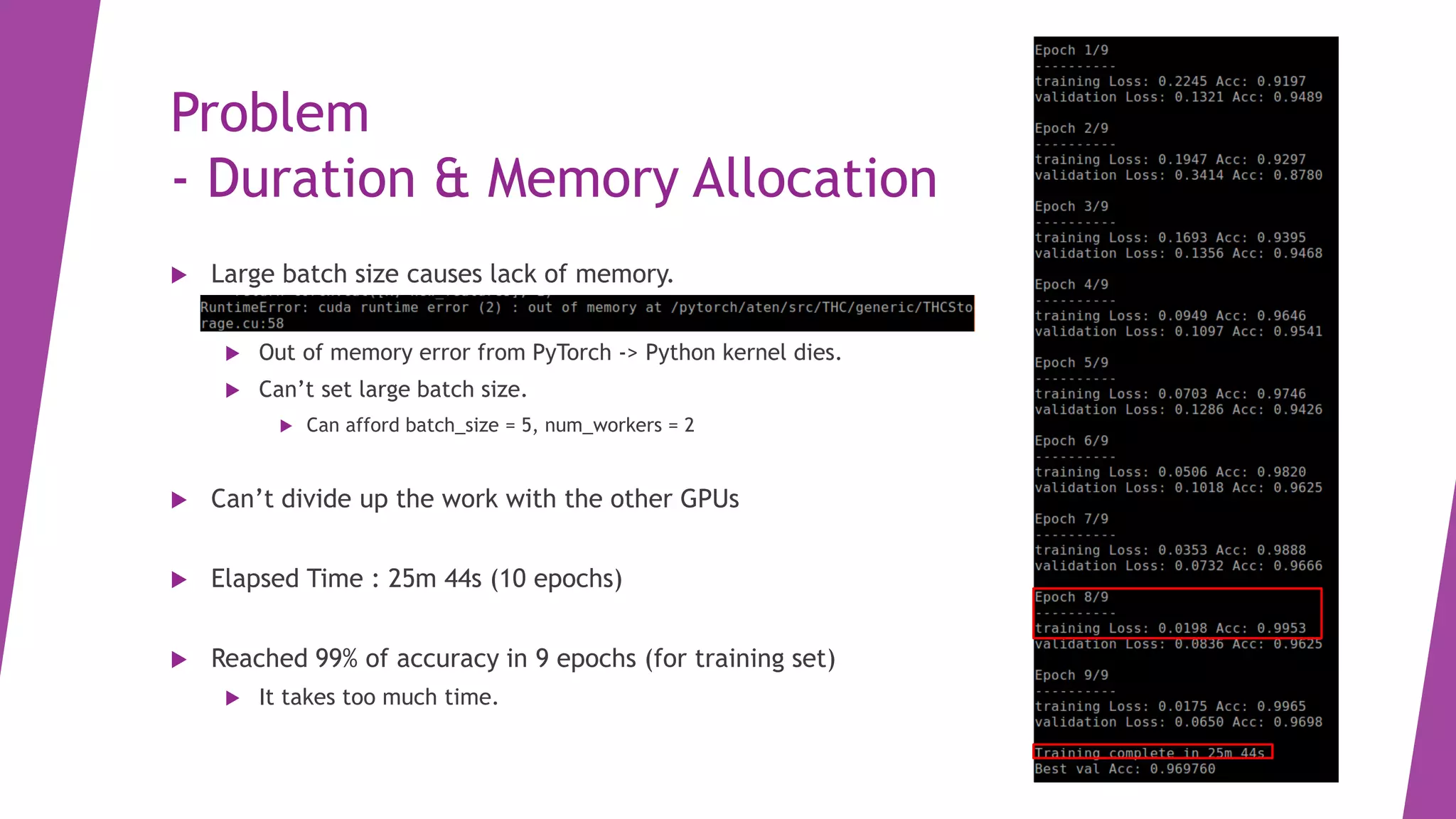

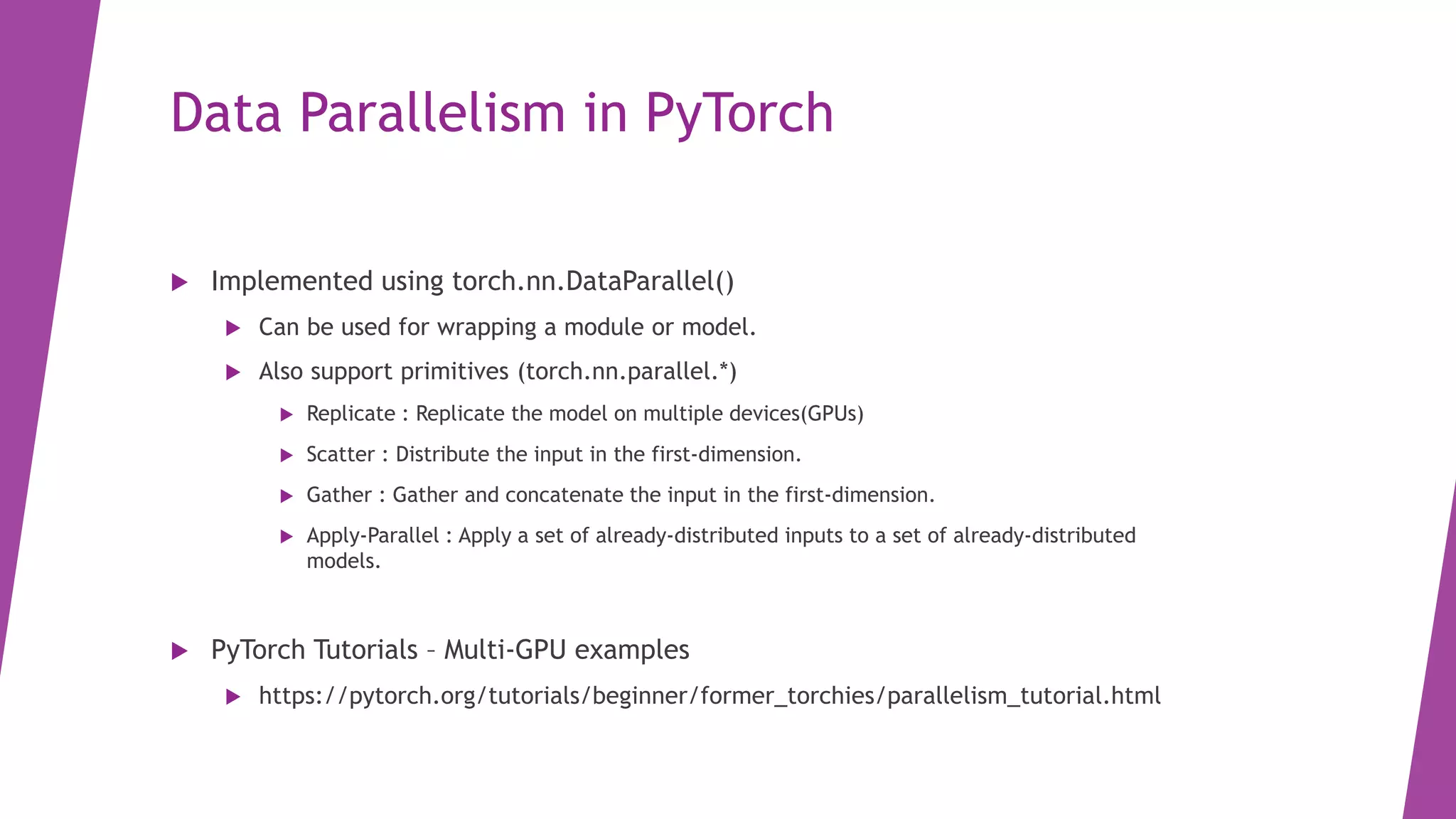

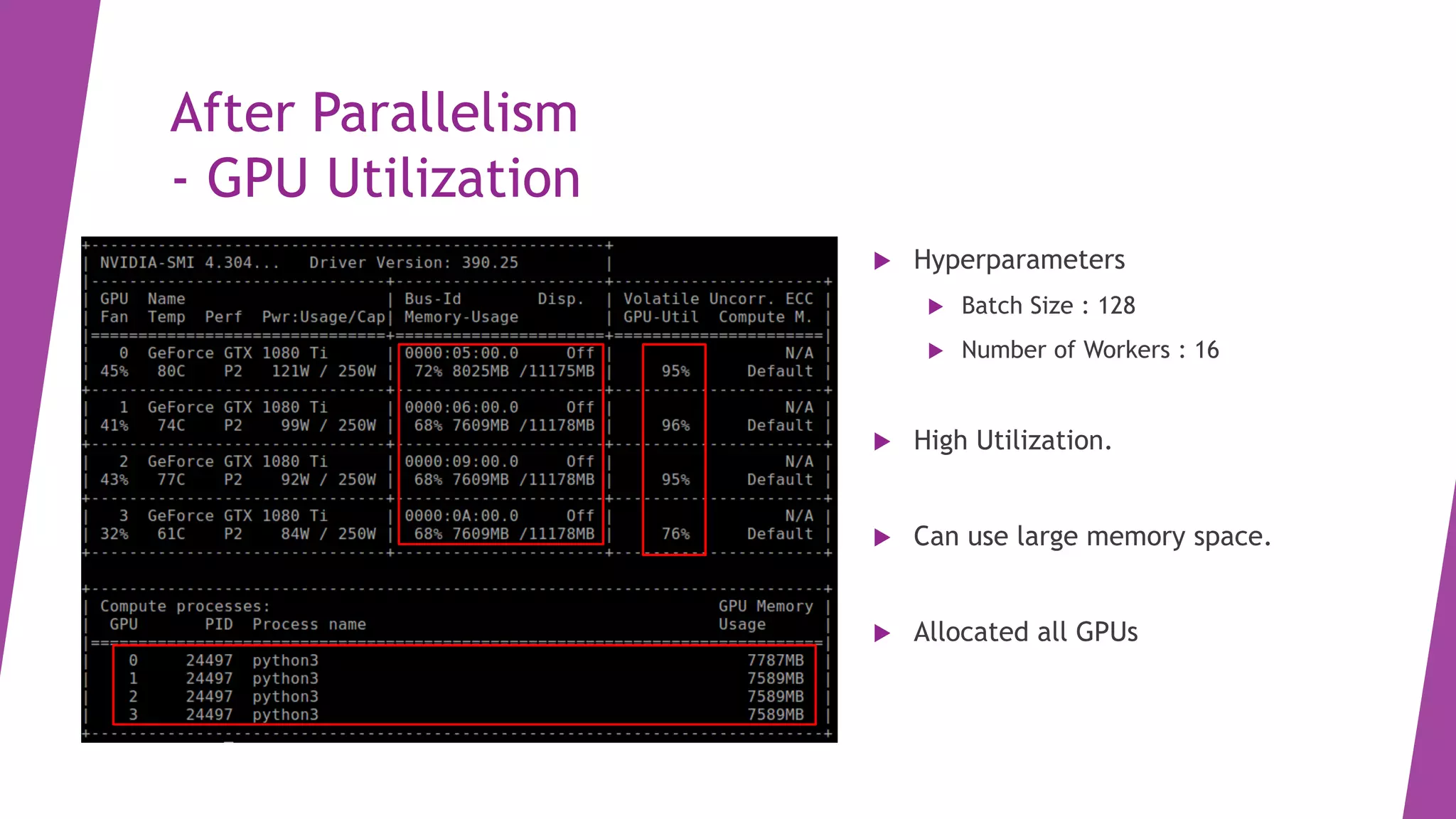

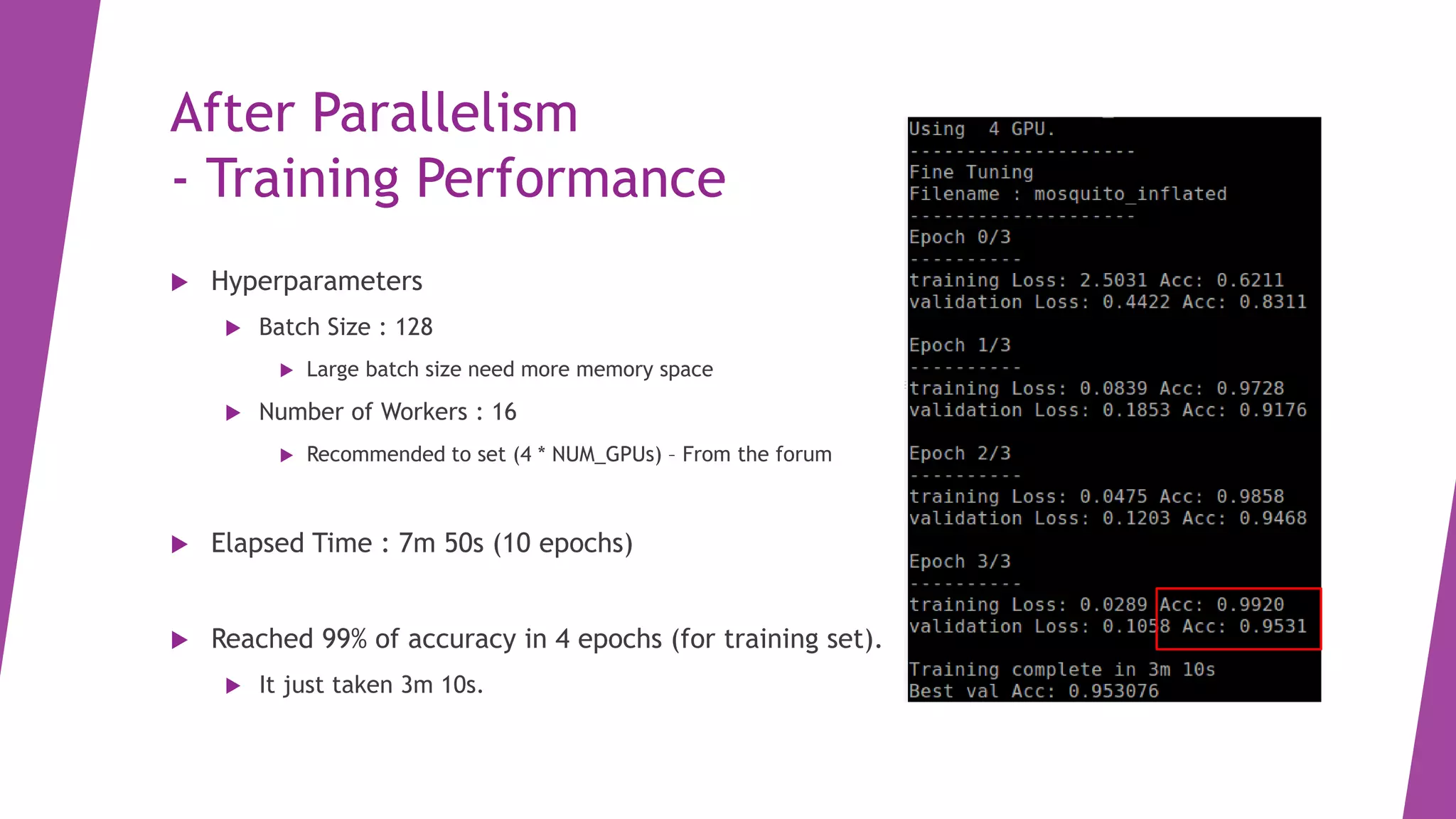

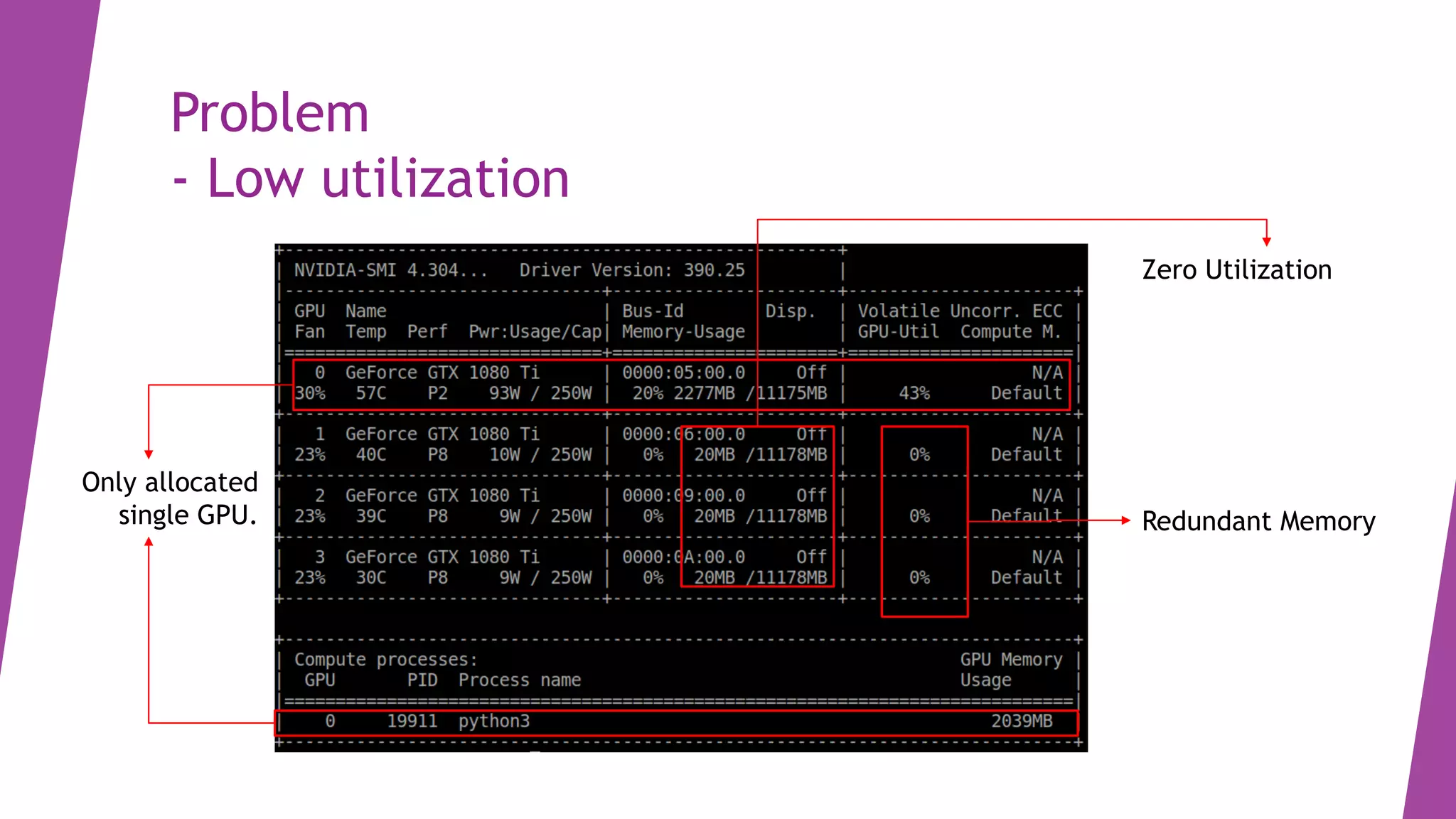

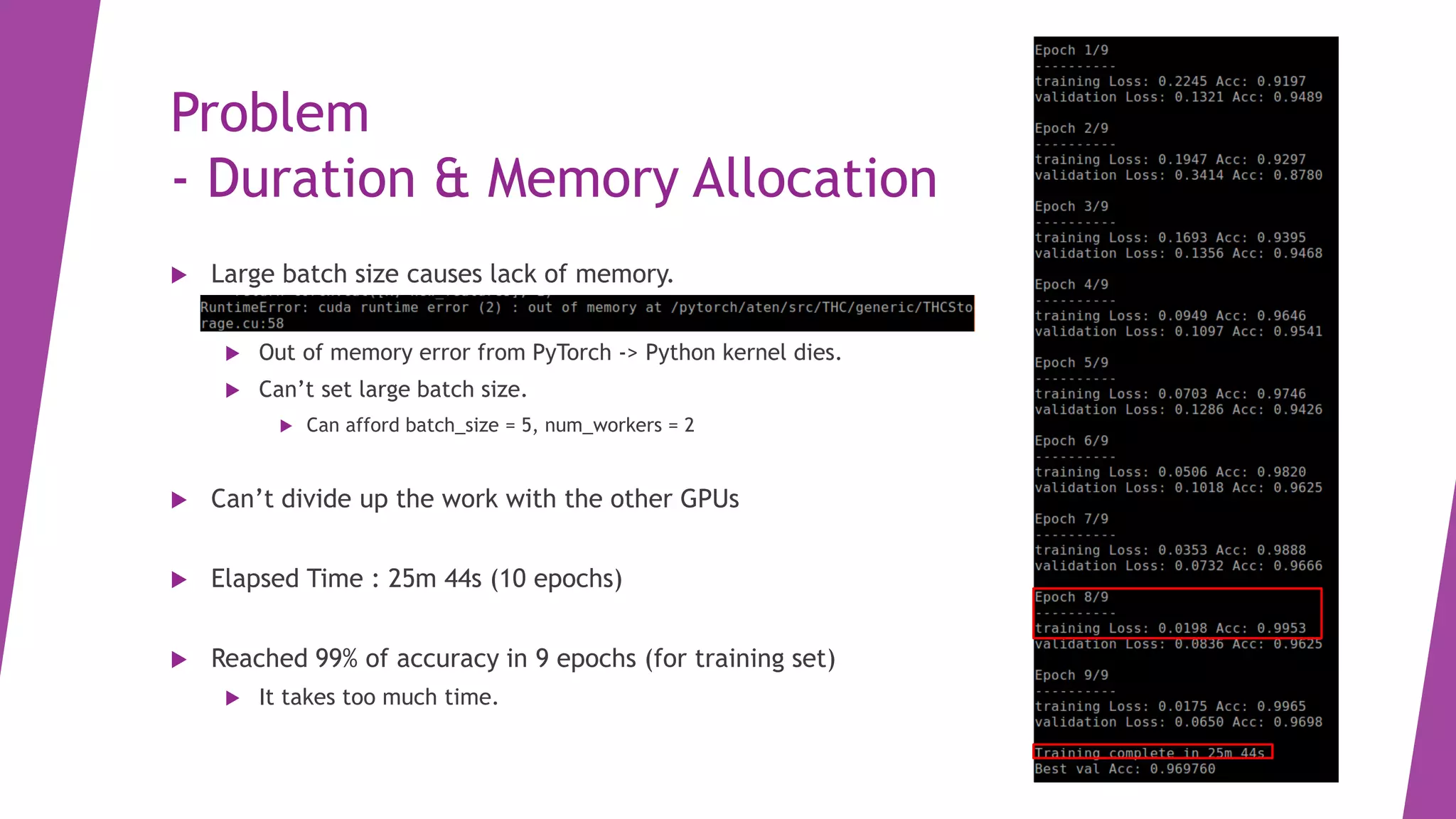

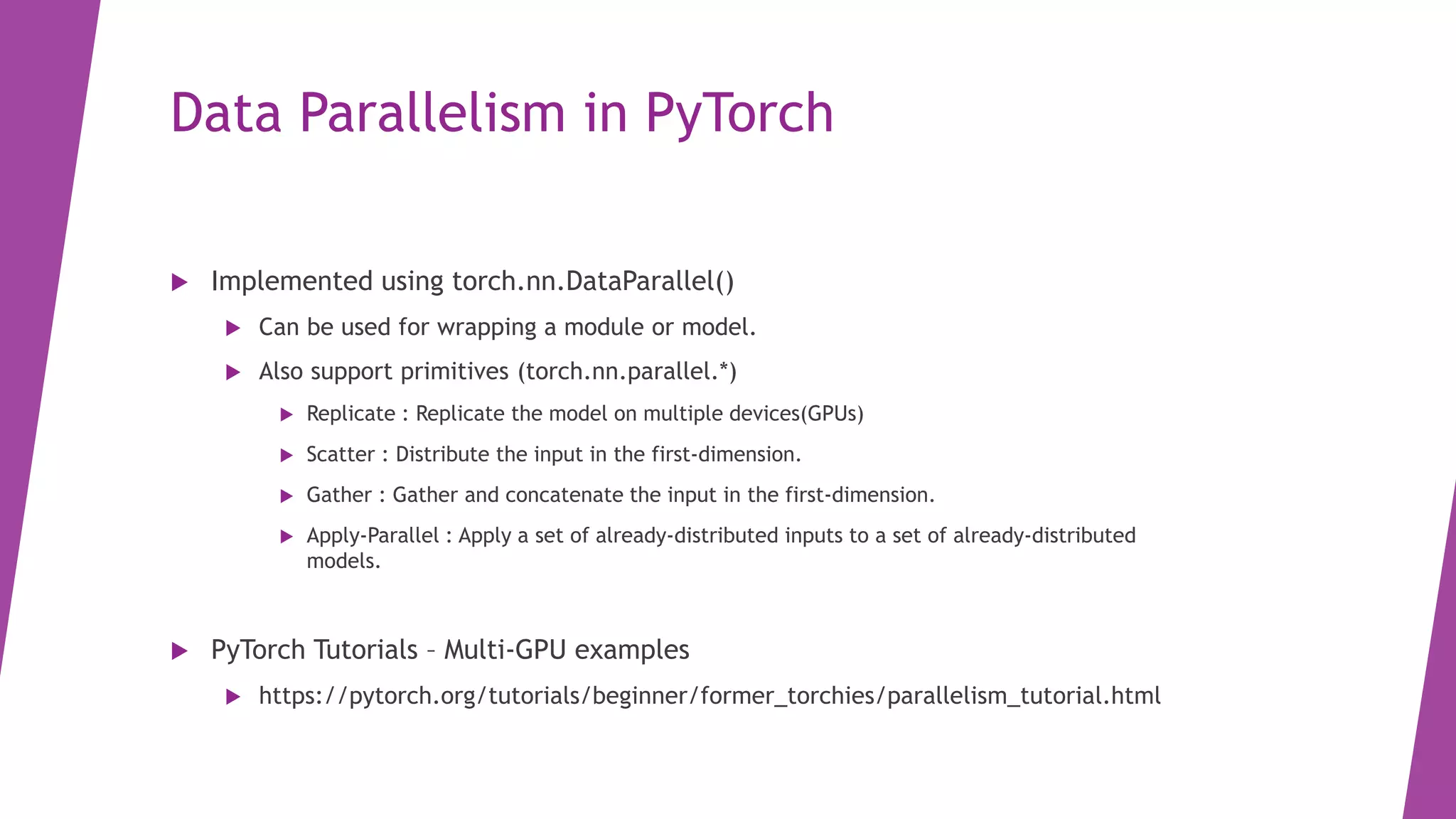

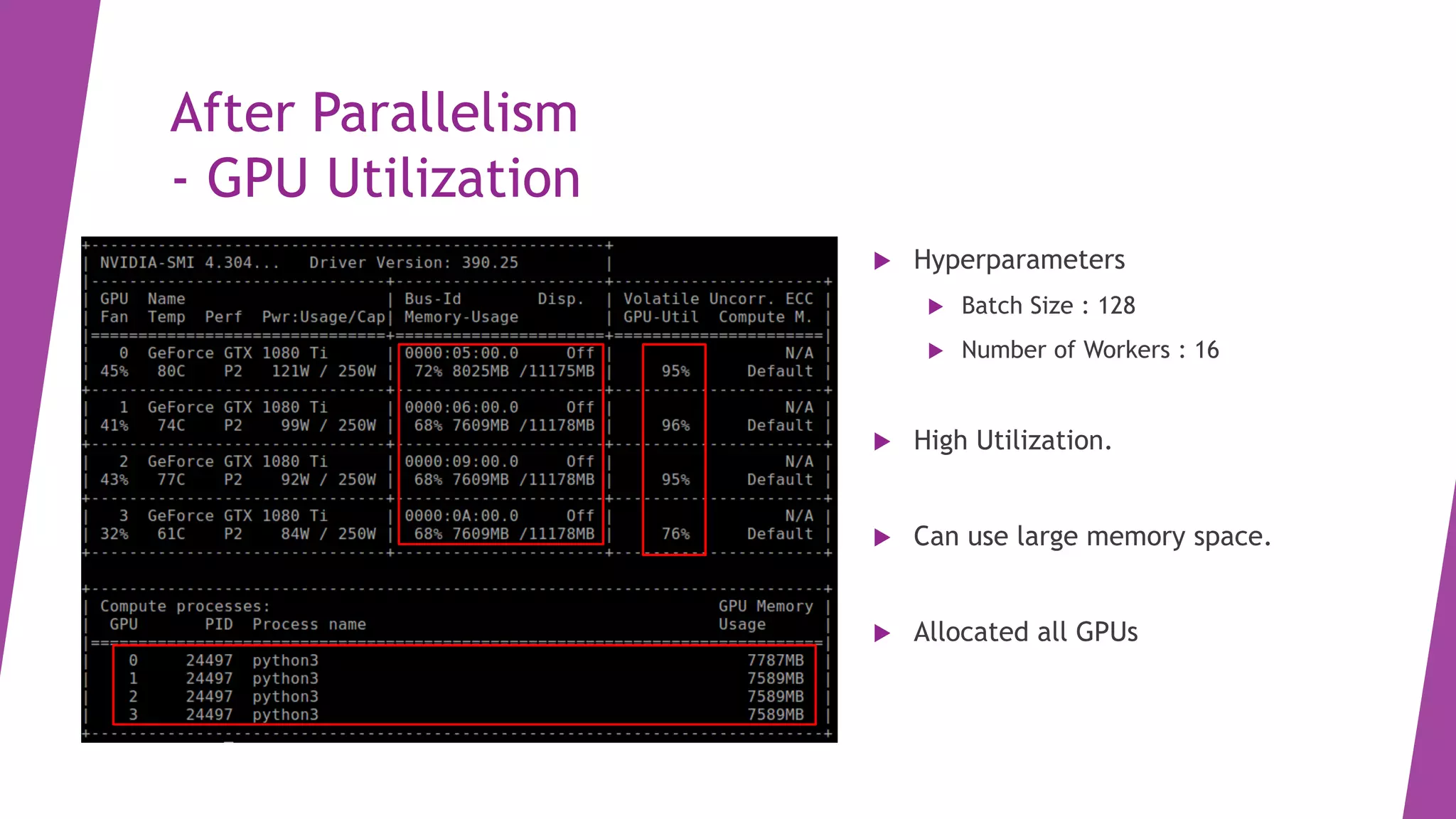

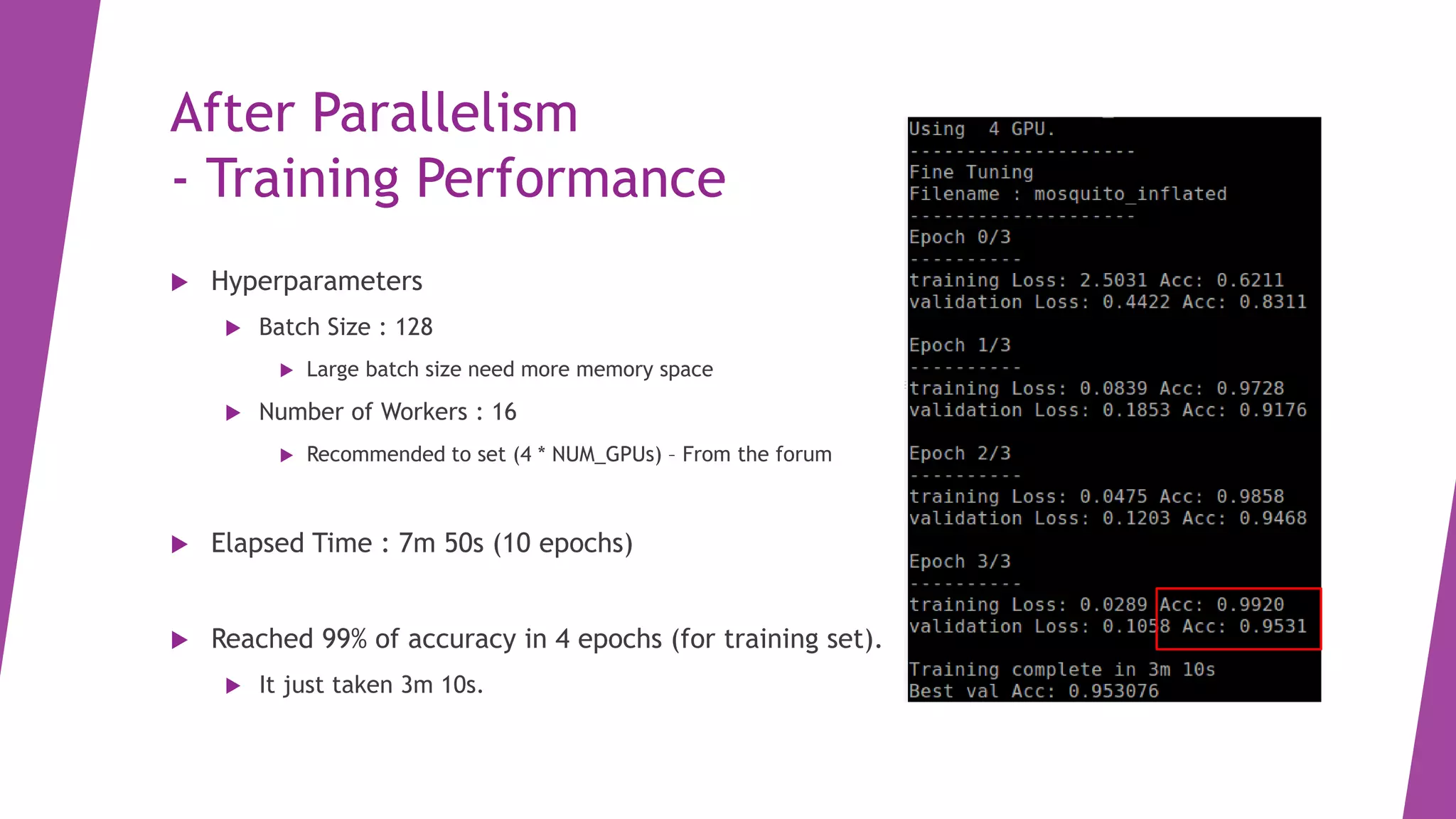

This document discusses using multiple GPUs in PyTorch for improved training performance and GPU utilization. It describes problems with single GPU usage like low utilization and memory issues. Data parallelism in PyTorch is introduced as a solution, using torch.nn.DataParallel to replicate models across devices and distribute input/output. After applying parallelism with a batch size of 128 and 16 workers, GPU utilization is high, memory usage is improved, and training time decreased significantly from 25 minutes to just 7 minutes for 10 epochs.