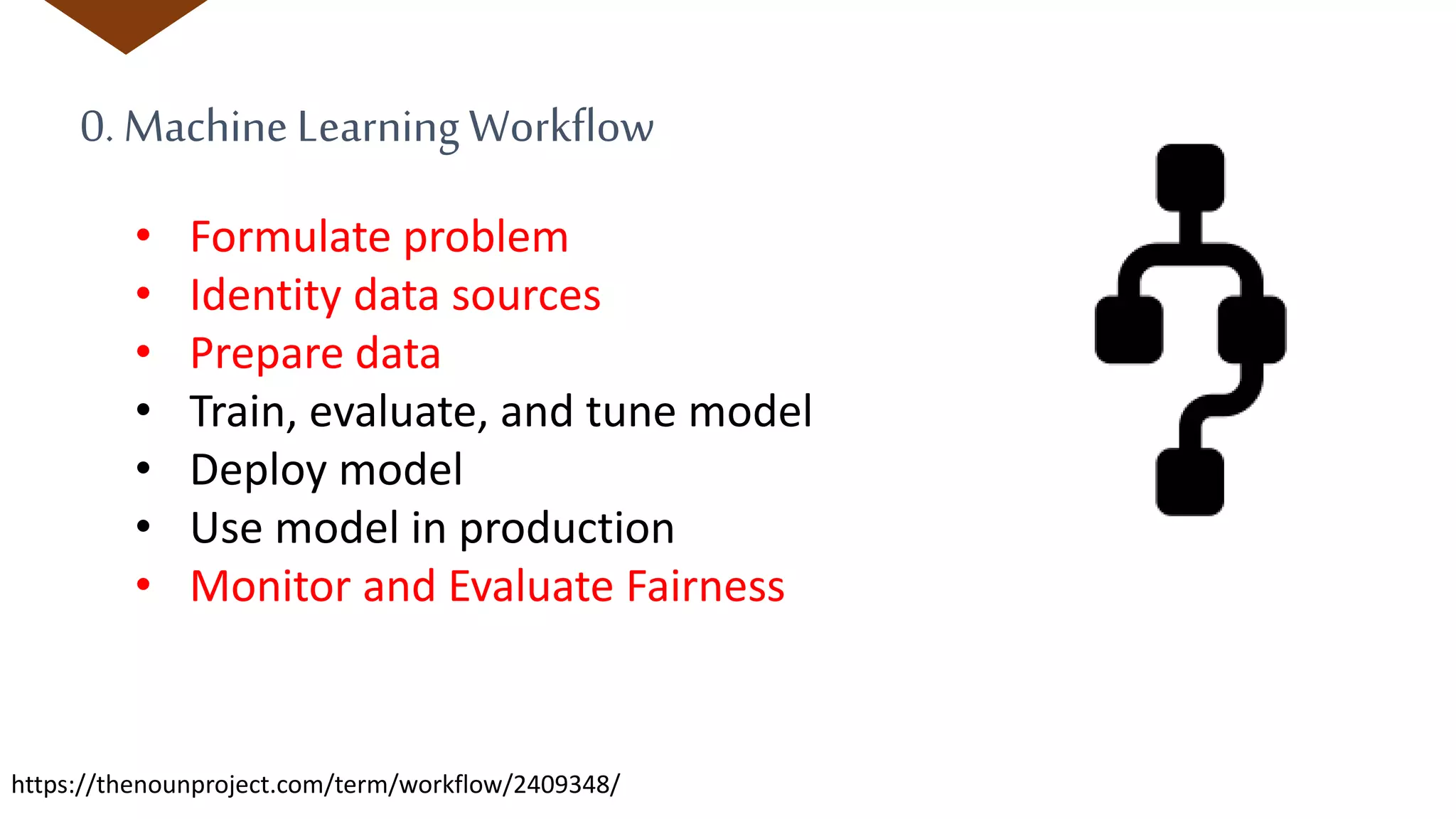

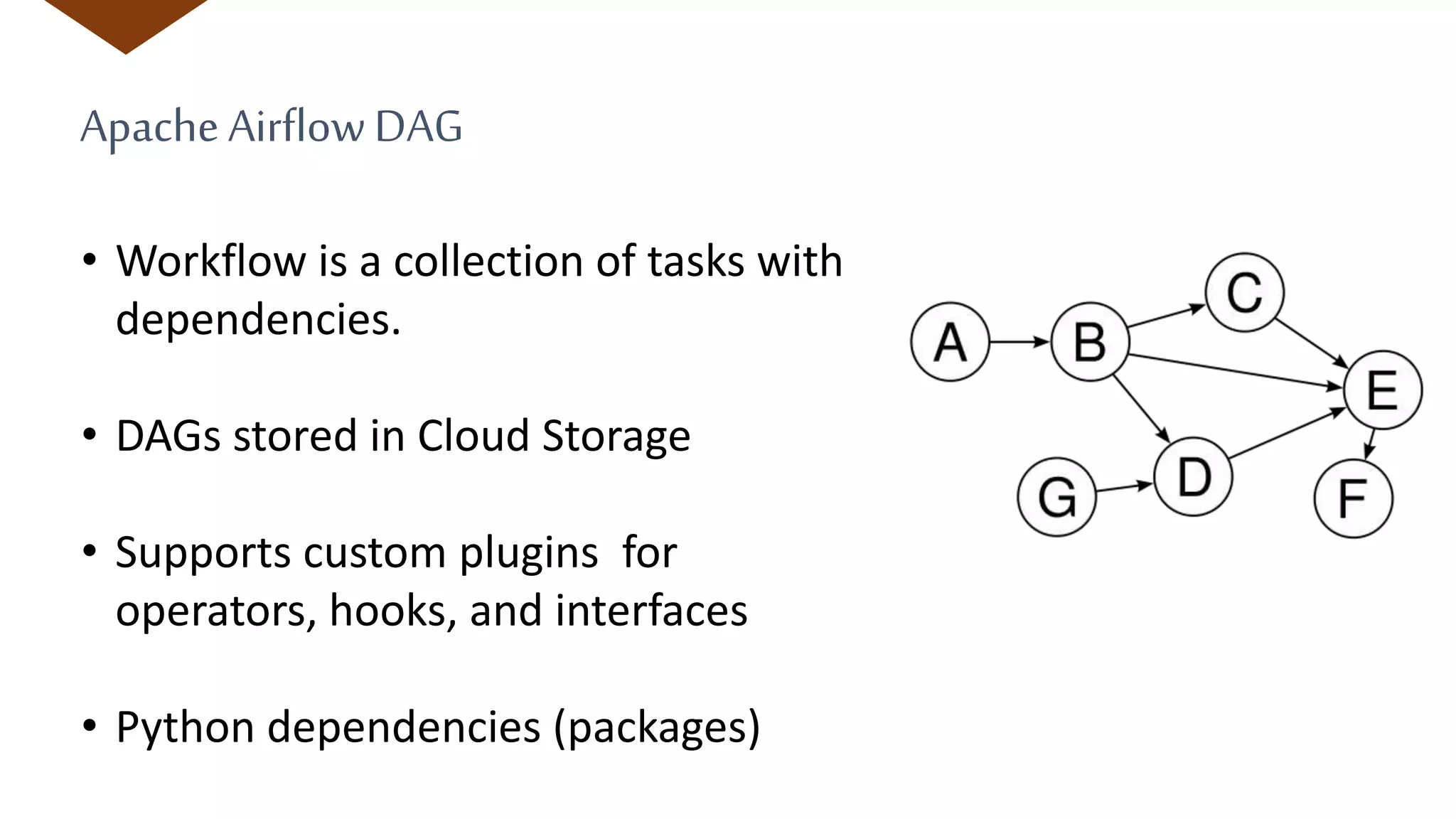

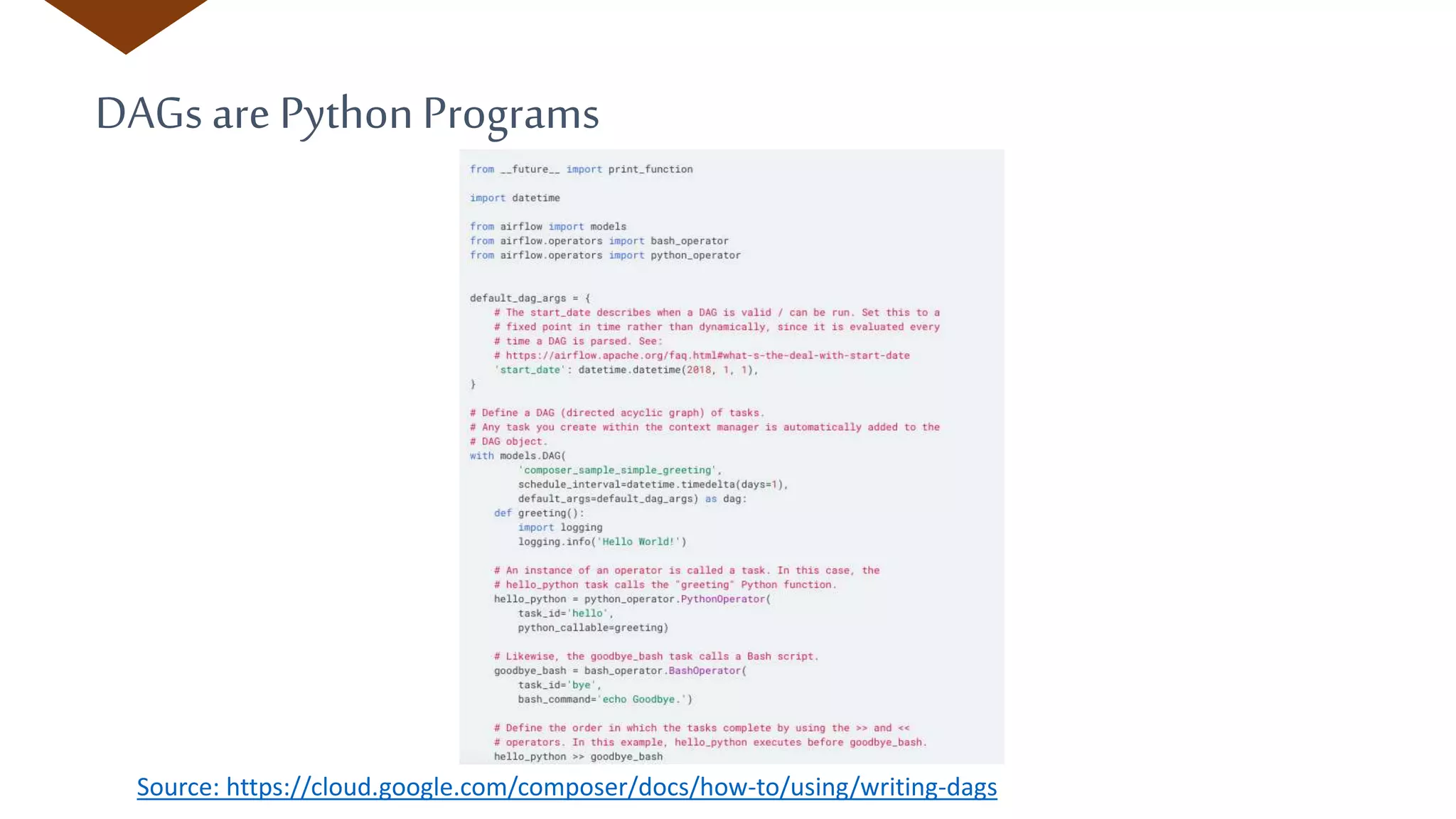

The document discusses the machine learning workflow and modern tools for building, deploying, and monitoring ML models, with a focus on Google Cloud services. It stresses the importance of defining the problem, identifying data sources, and evaluating model fairness at every stage of the ML process. It also covers services such as AutoML, AI Platform, and Cloud Composer that support various stages of ML development, and it emphasizes data quality and monitoring practices throughout.