The document introduces TensorFlow's data input pipeline, the tf.data module, which handles large datasets through operations such as filtering, mapping, and batching. It shows how to create tf.data datasets from tensors or NumPy arrays, illustrates lazy operators such as filter() and map(), and demonstrates method chaining for streamlined data processing. Later slides cover take(), zip(), text files and tokenizers, MNIST, and TFRecordDataset pipelines tuned with prefetch() and parallel calls. Code examples illustrate each concept in practice.

![What are TF Datasets](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-5-2048.jpg)

```python
import tensorflow as tf  # tf-dataset1.py
import numpy as np

x = np.array([1, 2, 3, 4, 5])
ds = tf.data.Dataset.from_tensor_slices(x)

# iterate through the elements:
for value in ds.take(len(x)):
    print(value)
```

![tf.data.Dataset.from_tensors()](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-10-2048.jpg)

```python
import tensorflow as tf

# combine the input into one element
t1 = tf.constant([[1, 2], [3, 4]])
ds1 = tf.data.Dataset.from_tensors(t1)

# output: [[1, 2], [3, 4]]
```

![tf.data.Dataset.from_tensor_slices()](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-11-2048.jpg)

```python
import tensorflow as tf

# separate element for each item
t2 = tf.constant([[1, 2], [3, 4]])
ds1 = tf.data.Dataset.from_tensor_slices(t2)

# output: [1, 2], [3, 4]
```
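The two constructors differ only in how they split their input. The minimal sketch below feeds both the same tensor and compares the element shapes and contents. It uses as_numpy_iterator() only so the elements are easy to compare as plain Python lists.

```python
import tensorflow as tf

t = tf.constant([[1, 2], [3, 4]])

# from_tensors(): the whole tensor becomes ONE element of shape (2, 2)
ds_whole = tf.data.Dataset.from_tensors(t)

# from_tensor_slices(): slices along axis 0, giving TWO elements of shape (2,)
ds_rows = tf.data.Dataset.from_tensor_slices(t)

whole = [v.tolist() for v in ds_whole.as_numpy_iterator()]
rows = [v.tolist() for v in ds_rows.as_numpy_iterator()]

print(ds_whole.element_spec)
print(ds_rows.element_spec)
print(whole)  # [[[1, 2], [3, 4]]]
print(rows)   # [[1, 2], [3, 4]]
```

As a rule of thumb, use from_tensors() when the input is a single example. Use from_tensor_slices() when the first axis indexes separate examples.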

![TF filter() operator: ex #1](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-13-2048.jpg)

```python
import tensorflow as tf  # tf2_filter1.py
import numpy as np

x = np.array([1, 2, 3, 4, 5])
ds = tf.data.Dataset.from_tensor_slices(x)

print("First iteration:")
for value in ds:
    print("value:", value)
```

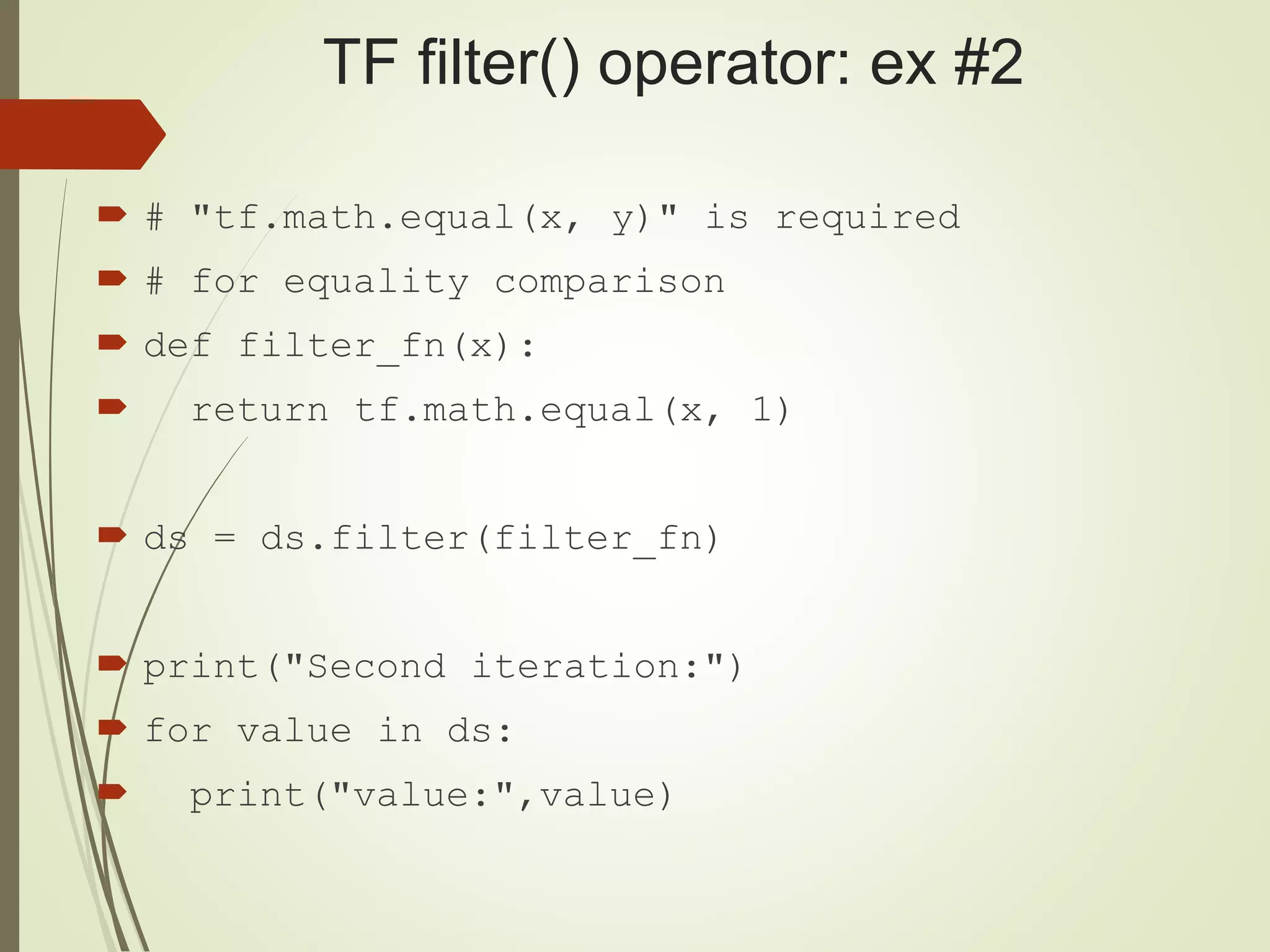

![TF filter() operator: ex #2](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-15-2048.jpg)

```python
import tensorflow as tf  # tf2_filter2.py
import numpy as np

x = np.array([1, 2, 3, 4, 5])
ds = tf.data.Dataset.from_tensor_slices(x)

print("First iteration:")
for value in ds:
    print("value:", value)
```

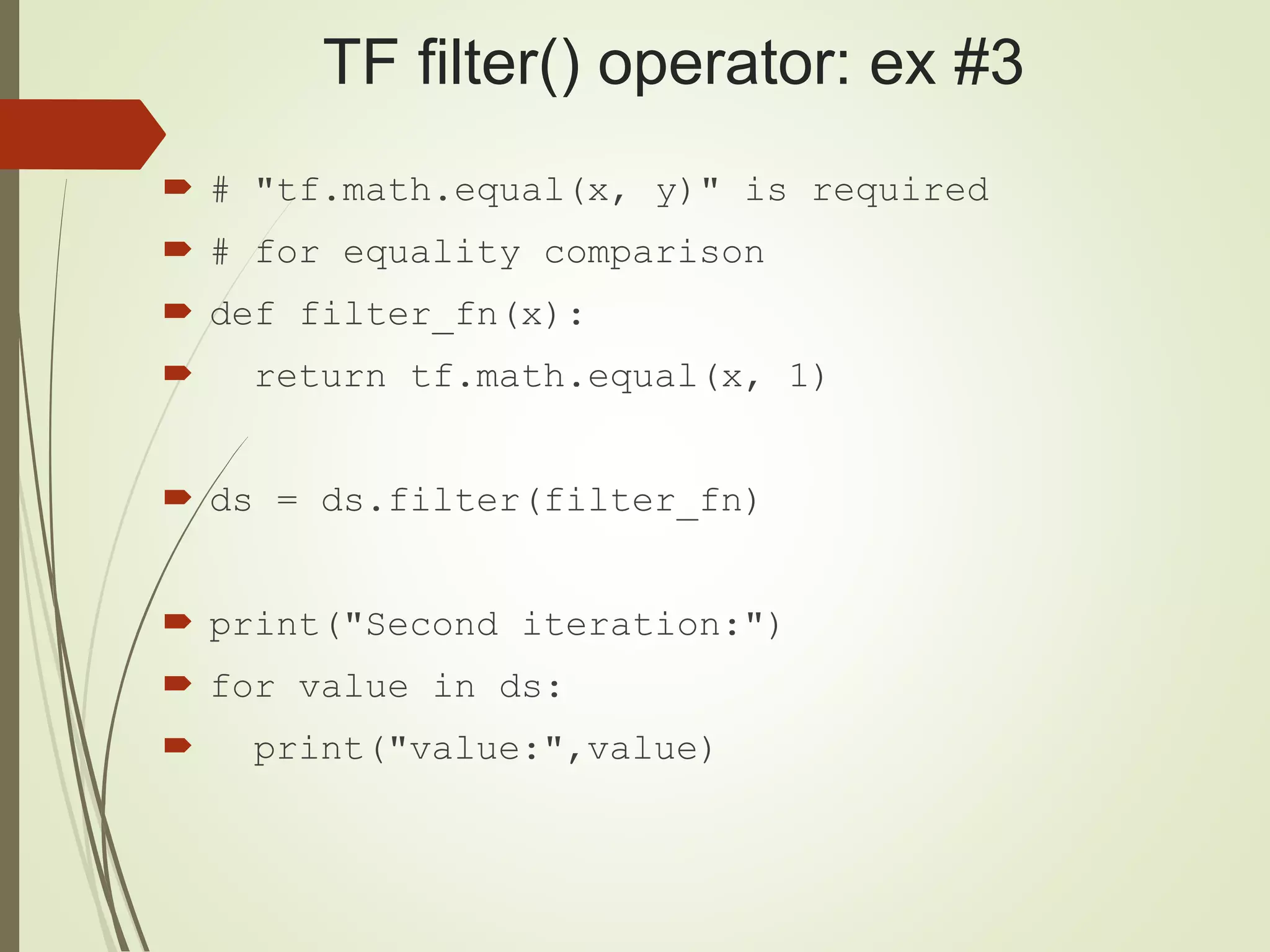

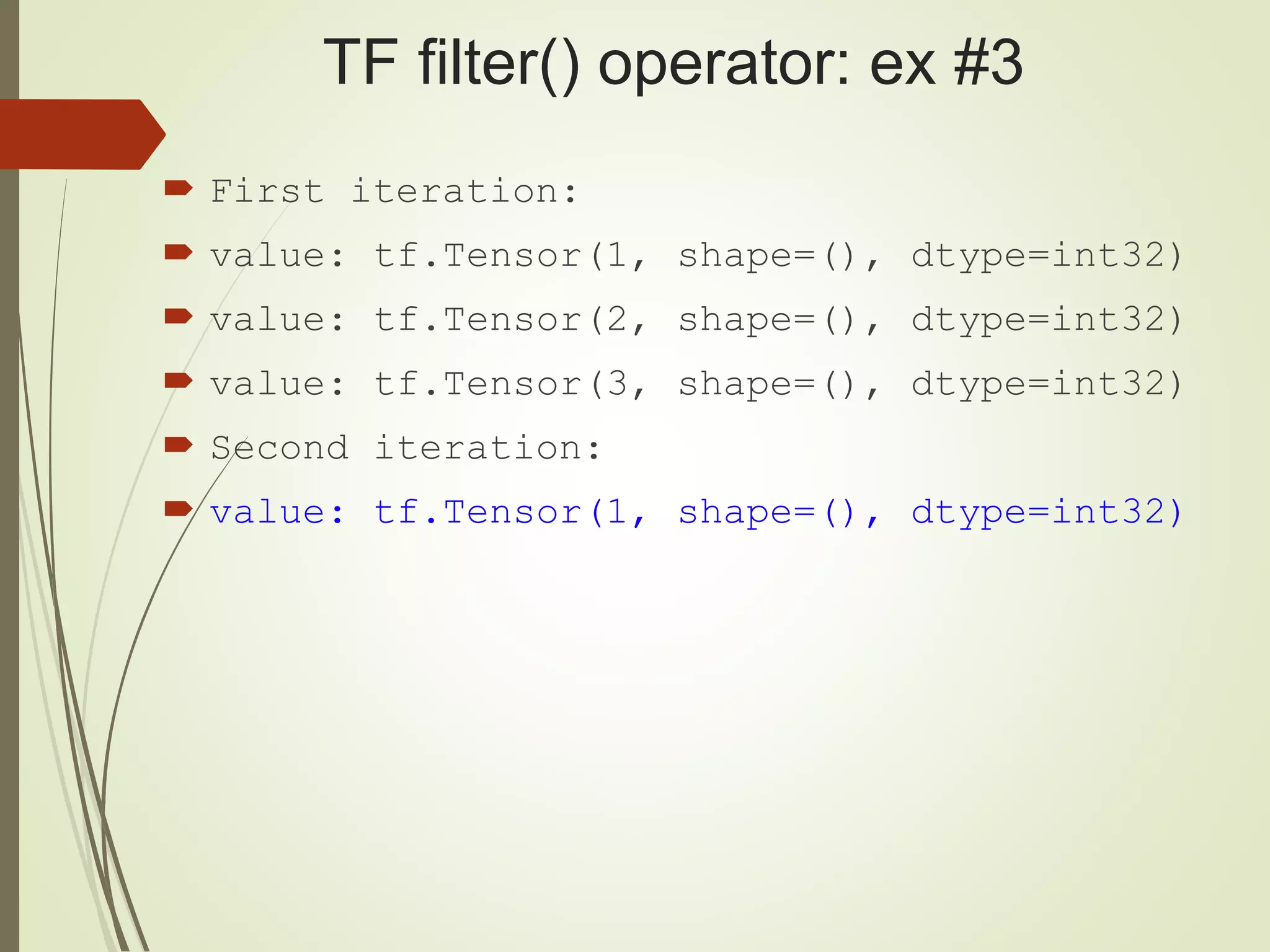

![TF filter() operator: ex #3](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-19-2048.jpg)

```python
import tensorflow as tf  # tf2_filter3.py
import numpy as np

ds = tf.data.Dataset.from_tensor_slices([1, 2, 3, 4, 5])
ds = ds.filter(lambda x: x < 4)  # [1, 2, 3]

print("First iteration:")
for value in ds:
    print("value:", value)
```

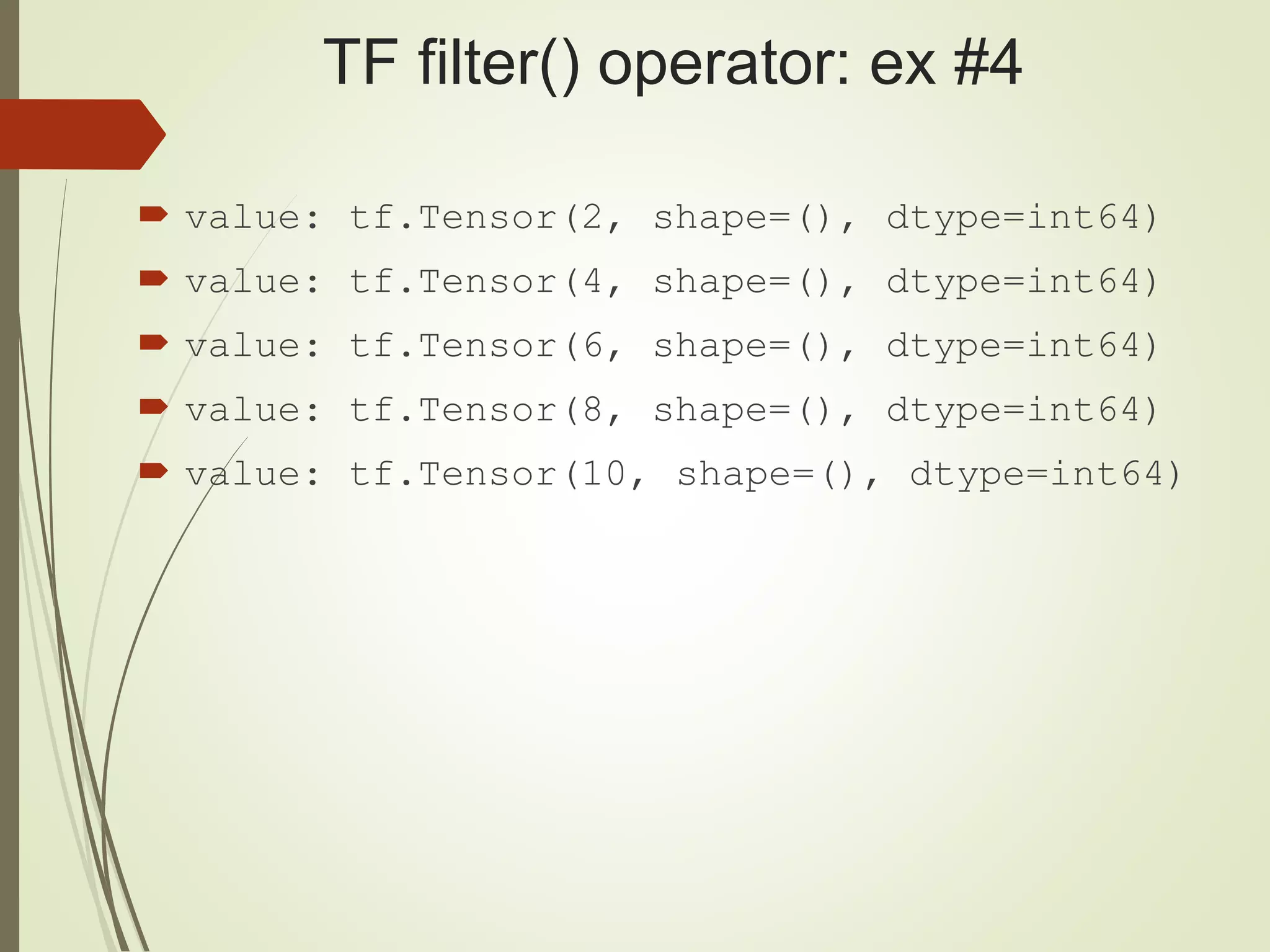

![TF filter() operator: ex #4](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-22-2048.jpg)

```python
import tensorflow as tf  # tf2_filter5.py
import numpy as np

# keep only the even values
def filter_fn(x):
    return tf.equal(x % 2, 0)

x = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
ds = tf.data.Dataset.from_tensor_slices(x)
ds = ds.filter(filter_fn)

for value in ds:
    print("value:", value)
```

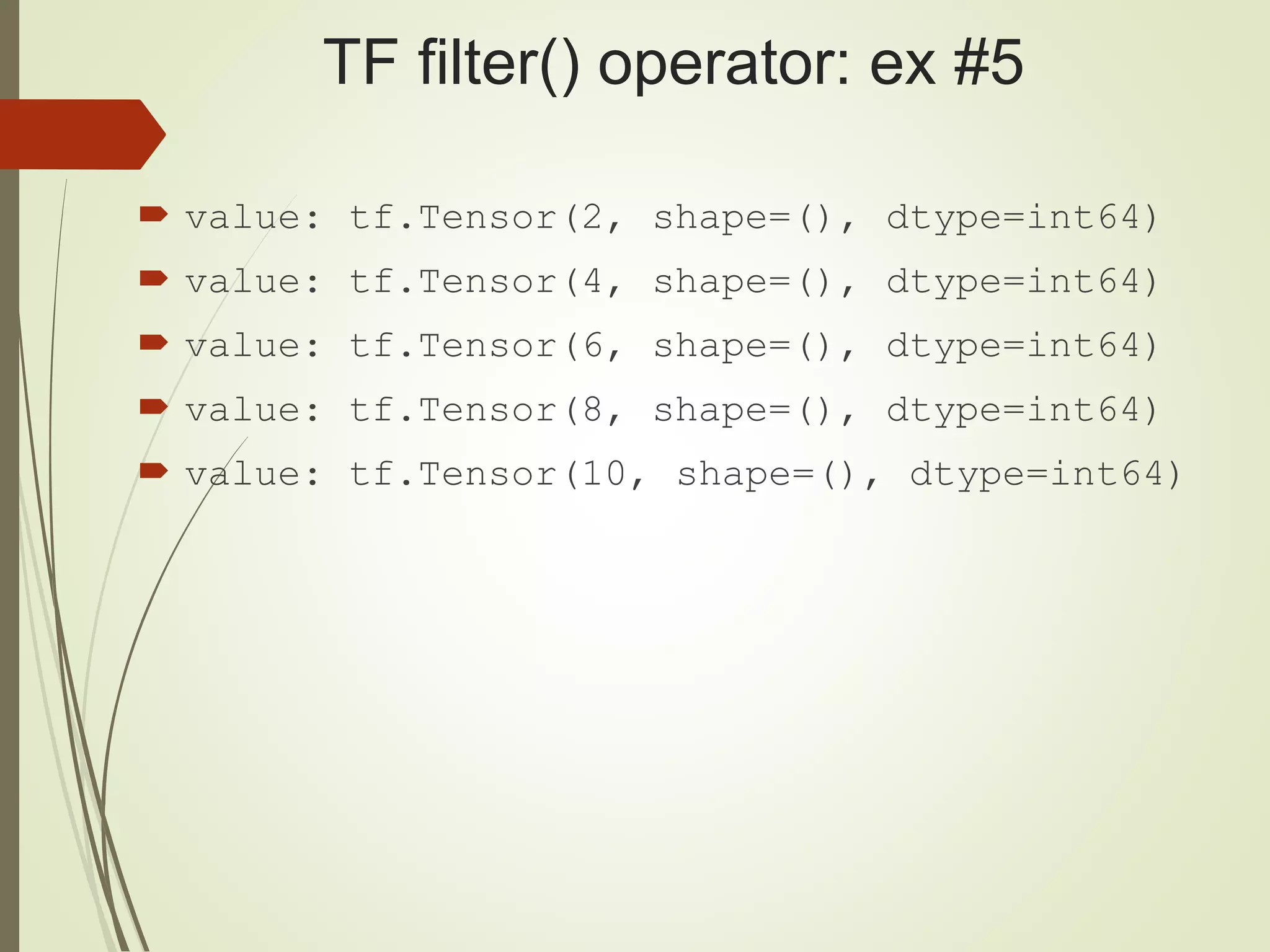

![TF filter() operator: ex #5](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-24-2048.jpg)

```python
import tensorflow as tf  # tf2_filter5.py
import numpy as np

# keep values that are NOT odd, reshaped to a scalar boolean
def filter_fn(x):
    return tf.reshape(tf.not_equal(x % 2, 1), [])

x = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
ds = tf.data.Dataset.from_tensor_slices(x)
ds = ds.filter(filter_fn)

for value in ds:
    print("value:", value)
```
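The tf.reshape(..., []) in ex #5 matters because filter() requires its predicate to return a scalar tf.bool. With the 1-D array in ex #5, each element is already a scalar, so the reshape is only a safeguard. It becomes necessary when each element has shape (1,), as with the [[1],[2],...] arrays in the map() examples; without the reshape, filter() rejects the predicate. The minimal sketch below assumes TF 2.x and uses such (1,)-shaped elements.

```python
import numpy as np
import tensorflow as tf

# Elements have shape (1,): [1], [2], ..., [10]
x = np.arange(1, 11).reshape(-1, 1)
ds = tf.data.Dataset.from_tensor_slices(x)

def keep_even(elem):
    # tf.equal on a shape-(1,) element yields a shape-(1,) bool tensor;
    # filter() needs a scalar, so reshape it to shape [] first
    return tf.reshape(tf.equal(elem % 2, 0), [])

evens = [int(v[0]) for v in ds.filter(keep_even).as_numpy_iterator()]
print(evens)  # [2, 4, 6, 8, 10]
```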

![TF map() operator: ex #1](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-26-2048.jpg)

```python
import tensorflow as tf  # tf2-map.py
import numpy as np

x = np.array([[1], [2], [3], [4]])
ds = tf.data.Dataset.from_tensor_slices(x)
ds = ds.map(lambda x: x*2)

for value in ds:
    print("value:", value)
```

![TF map() operator: ex #1](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-27-2048.jpg)

```text
value: tf.Tensor([2], shape=(1,), dtype=int64)
value: tf.Tensor([4], shape=(1,), dtype=int64)
value: tf.Tensor([6], shape=(1,), dtype=int64)
value: tf.Tensor([8], shape=(1,), dtype=int64)
```

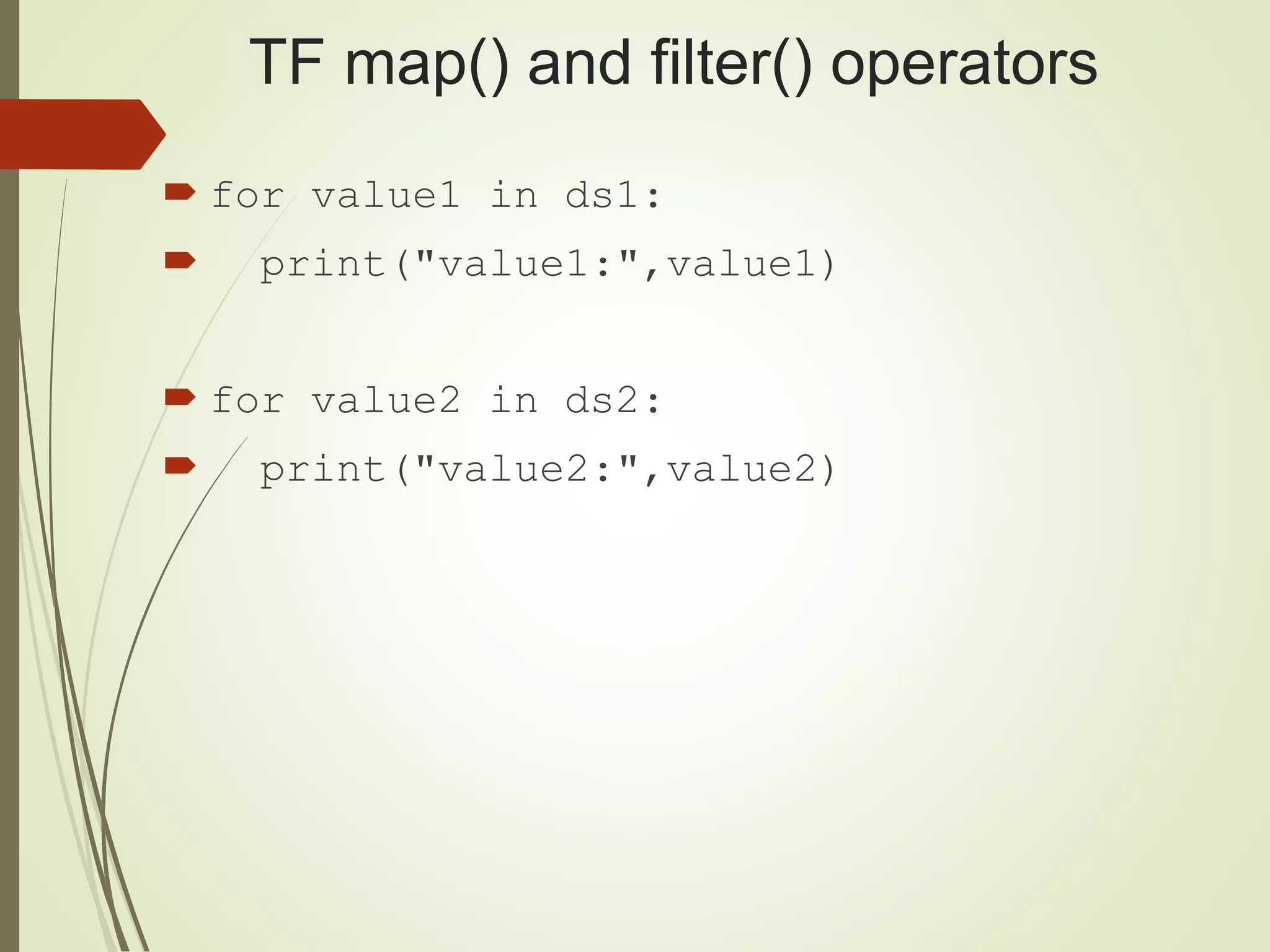

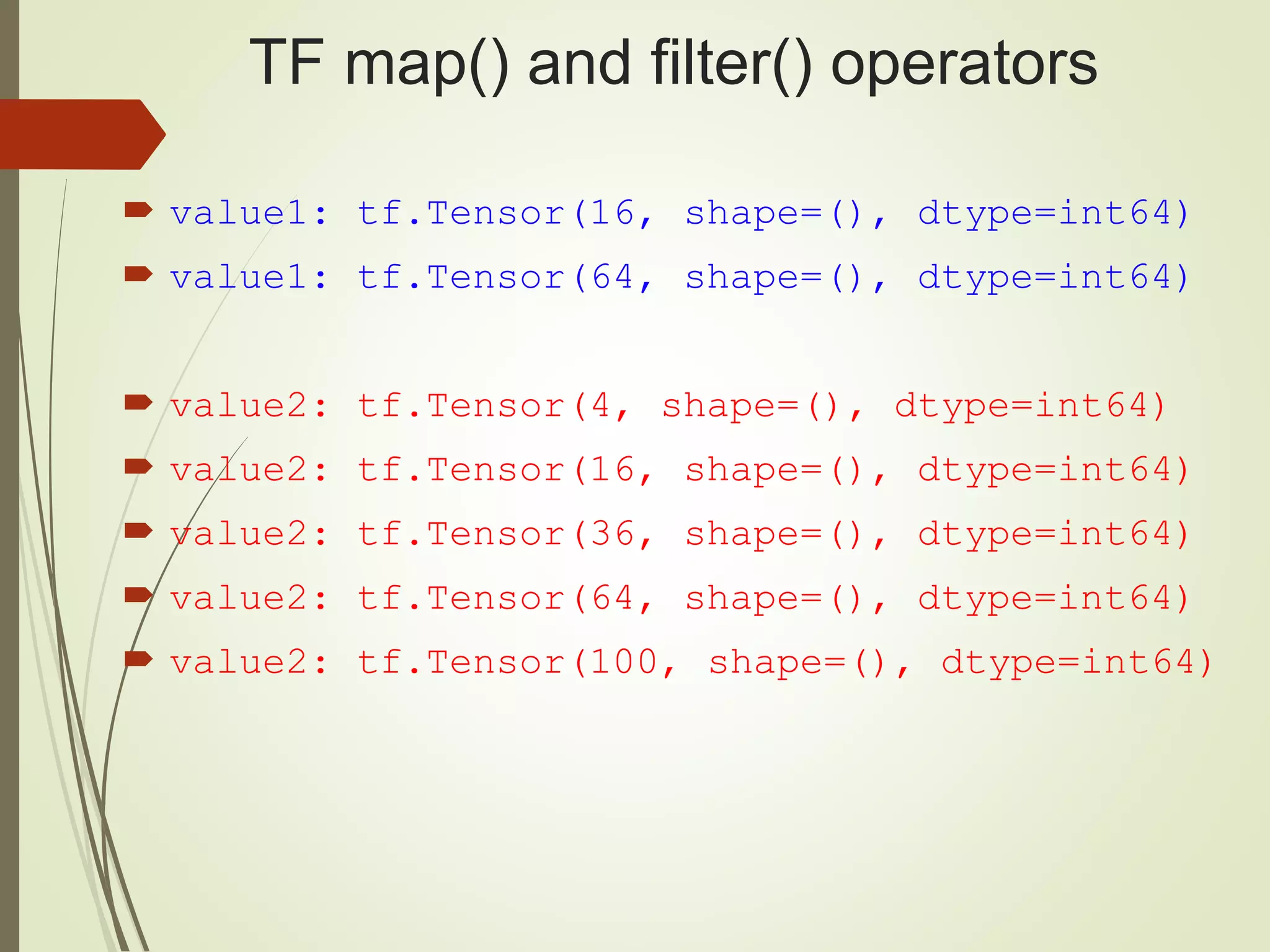

![TF map() and filter() operators](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-28-2048.jpg)

```python
import tensorflow as tf  # tf2_map_filter.py
import numpy as np

x = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
ds = tf.data.Dataset.from_tensor_slices(x)

# filter first, then map
ds1 = ds.filter(lambda x: tf.equal(x % 4, 0))
ds1 = ds1.map(lambda x: x*x)

# map first, then filter
ds2 = ds.map(lambda x: x*x)
ds2 = ds2.filter(lambda x: tf.equal(x % 4, 0))
```
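The slide builds ds1 and ds2 from the same operators in opposite order but never prints them. The minimal sketch below collects both results to show that the order of filter() and map() changes the output. The variable names r1 and r2 are illustrative.

```python
import numpy as np
import tensorflow as tf

x = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])
ds = tf.data.Dataset.from_tensor_slices(x)

# filter first: only 4 and 8 are divisible by 4, then they are squared
ds1 = ds.filter(lambda v: tf.equal(v % 4, 0)).map(lambda v: v * v)

# map first: the squares 1, 4, 9, ..., 100 are tested for divisibility by 4
ds2 = ds.map(lambda v: v * v).filter(lambda v: tf.equal(v % 4, 0))

r1 = [int(v) for v in ds1.as_numpy_iterator()]
r2 = [int(v) for v in ds2.as_numpy_iterator()]
print(r1)  # [16, 64]
print(r2)  # [4, 16, 36, 64, 100]
```

Every even number has a square divisible by 4, so map-then-filter keeps all five even inputs. Filter-then-map keeps only the multiples of 4.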

![TF map() operator: ex #2](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-31-2048.jpg)

```python
import tensorflow as tf  # tf2-map2.py
import numpy as np

x = np.array([[1], [2], [3], [4]])
ds = tf.data.Dataset.from_tensor_slices(x)

# METHOD #1: THE LONG WAY
# a lambda expression to double each value
ds = ds.map(lambda x: x*2)
# a lambda expression to add one to each value
ds = ds.map(lambda x: x+1)
# a lambda expression to cube each value
ds = ds.map(lambda x: x**3)

for value in ds:
    print("value:", value)
```

![TF map() operator: ex #2](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-33-2048.jpg)

```text
value: tf.Tensor([27], shape=(1,), dtype=int64)
value: tf.Tensor([125], shape=(1,), dtype=int64)
value: tf.Tensor([343], shape=(1,), dtype=int64)
value: tf.Tensor([729], shape=(1,), dtype=int64)
```
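The three map() calls in ex #2 compute (2x + 1)^3 for each element. The minimal sketch below checks that a single map() with the composed lambda yields the same values as the chained version. It is an illustrative equivalence check, not a claim about which form runs faster.

```python
import numpy as np
import tensorflow as tf

x = np.array([[1], [2], [3], [4]])
ds = tf.data.Dataset.from_tensor_slices(x)

# Three chained map() calls, as on the slide
chained = ds.map(lambda v: v * 2).map(lambda v: v + 1).map(lambda v: v ** 3)

# One map() applying the composed function (2v + 1)^3
composed = ds.map(lambda v: (v * 2 + 1) ** 3)

a = [v.tolist() for v in chained.as_numpy_iterator()]
b = [v.tolist() for v in composed.as_numpy_iterator()]
print(a)       # [[27], [125], [343], [729]]
print(a == b)  # True
```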

![TF take() operator: ex #2](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-36-2048.jpg)

```python
import tensorflow as tf  # tf2_take.py
import numpy as np

x = np.array([[1], [2], [3], [4]])

# make a ds from a numpy array
ds = tf.data.Dataset.from_tensor_slices(x)

# method chaining: wrap the expression in parentheses so it can span lines
ds = (ds.map(lambda x: x*2)
        .map(lambda x: x+1)
        .map(lambda x: x**3))

for value in ds.take(4):
    print("value:", value)
```

![TF 2 map() and take(): output](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-37-2048.jpg)

```text
value: tf.Tensor([27], shape=(1,), dtype=int64)
value: tf.Tensor([125], shape=(1,), dtype=int64)
value: tf.Tensor([343], shape=(1,), dtype=int64)
value: tf.Tensor([729], shape=(1,), dtype=int64)
```
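take(n) yields at most the first n elements, and its counterpart skip(n) drops them. The minimal sketch below uses tf.data.Dataset.range, which yields int64 values, to show both operators. It also shows that asking take() for more elements than exist simply returns them all.

```python
import tensorflow as tf

ds = tf.data.Dataset.range(10)  # int64 elements 0..9

first = [int(v) for v in ds.take(3).as_numpy_iterator()]
rest = [int(v) for v in ds.skip(3).as_numpy_iterator()]
# take(n) with n larger than the dataset just yields every element
everything = [int(v) for v in ds.take(100).as_numpy_iterator()]

print(first)            # [0, 1, 2]
print(rest)             # [3, 4, 5, 6, 7, 8, 9]
print(len(everything))  # 10
```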

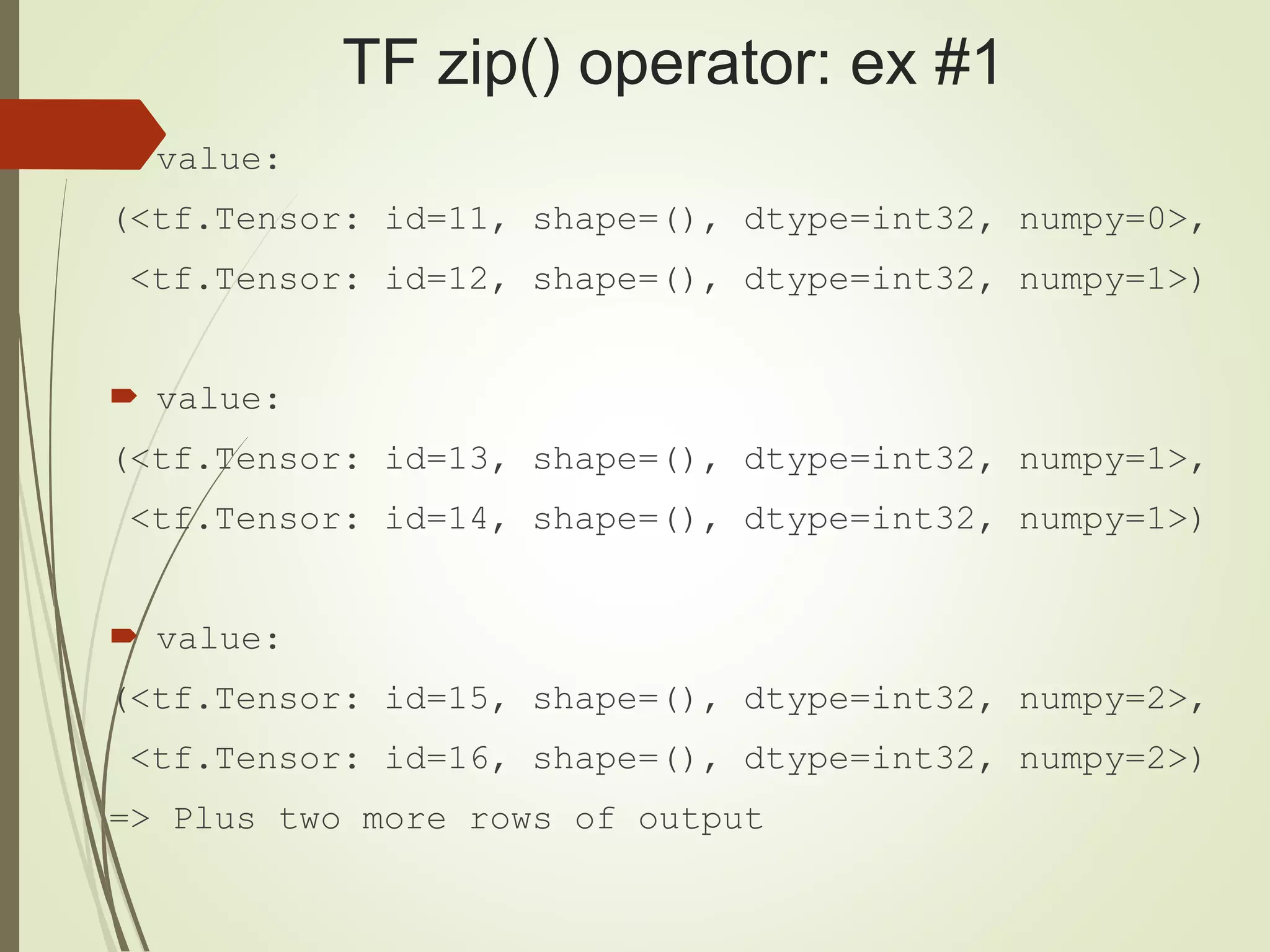

![TF zip() operator: ex #1](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-38-2048.jpg)

```python
import tensorflow as tf  # tf2_zip1.py
import numpy as np

dx = tf.data.Dataset.from_tensor_slices([0, 1, 2, 3, 4])
dy = tf.data.Dataset.from_tensor_slices([1, 1, 2, 3, 5])

# zip the two datasets together
d2 = tf.data.Dataset.zip((dx, dy))

for value in d2:
    print("value:", value)
```
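Each element of a zipped dataset is a tuple. When such a dataset is passed through map(), tf.data unpacks the tuple into separate function arguments. The minimal sketch below reuses dx and dy from ex #1 and adds each pair; the names pairs and sum_list are illustrative.

```python
import tensorflow as tf

dx = tf.data.Dataset.from_tensor_slices([0, 1, 2, 3, 4])
dy = tf.data.Dataset.from_tensor_slices([1, 1, 2, 3, 5])
d2 = tf.data.Dataset.zip((dx, dy))

# Each element is a tuple (x, y); map() receives it as two arguments
sums = d2.map(lambda x, y: x + y)

pairs = [(int(a), int(b)) for a, b in d2.as_numpy_iterator()]
sum_list = [int(v) for v in sums.as_numpy_iterator()]
print(pairs)     # [(0, 1), (1, 1), (2, 2), (3, 3), (4, 5)]
print(sum_list)  # [1, 2, 4, 6, 9]
```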

![TF zip() operator: ex #3](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-43-2048.jpg)

```text
value: (<tf.Tensor: id=21, shape=(4,), dtype=int64, numpy=array([0, 1, 2, 3])>, <tf.Tensor: id=22, shape=(4,), dtype=int64, numpy=array([ 0, -1, -2, -3])>)
value: (<tf.Tensor: id=25, shape=(4,), dtype=int64, numpy=array([4, 5, 6, 7])>, <tf.Tensor: id=26, shape=(4,), dtype=int64, numpy=array([-4, -5, -6, -7])>)
value: (<tf.Tensor: id=29, shape=(4,), dtype=int64, numpy=array([ 8, 9, 10, 11])>, <tf.Tensor: id=30, shape=(4,), dtype=int64, numpy=array([ -8, -9, -10, -11])>)
value: (<tf.Tensor: id=33, shape=(4,), dtype=int64, numpy=array([12, 13, 14, 15])>, <tf.Tensor: id=34, shape=(4,), dtype=int64, numpy=array([-12, -13, -14, -15])>)
```
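The code behind ex #3 is not in the extracted slide text, so the sketch below is a hypothetical reconstruction, not the author's code. It produces elements shaped like the output above: pairs of length-4 int64 batches in which the second batch is the negation of the first. It zips a range with its negation and batches by 4. The id= fields in printed tensors depend on the TensorFlow version and will not match.

```python
import tensorflow as tf

# Hypothetical reconstruction: the original ex #3 code is not shown
dx = tf.data.Dataset.range(16)  # 0, 1, ..., 15 (int64)
dy = dx.map(lambda v: -v)       # 0, -1, ..., -15
d3 = tf.data.Dataset.zip((dx, dy)).batch(4)

batches = [(a.tolist(), b.tolist()) for a, b in d3.as_numpy_iterator()]
for value in d3:
    print("value:", value)
```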

![Processing Text Files (2)](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-54-2048.jpg)

```python
import tensorflow as tf  # tf2_flatmap_filter.py

filenames = ["file.txt"]
ds = tf.data.Dataset.from_tensor_slices(filenames)
```

![Text Output (first two lines)](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-56-2048.jpg)

```text
('value:', <tf.Tensor: id=16, shape=(5,), dtype=string, numpy=array(['this', 'is', 'file', 'line', '#3'], dtype=object)>)
('value:', <tf.Tensor: id=18, shape=(5,), dtype=string, numpy=array(['this', 'is', 'file', 'line', '#5'], dtype=object)>)
```
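The flat_map() and filter() steps of tf2_flatmap_filter.py are not in the extracted slide text. The sketch below is a minimal version under stated assumptions. It writes a hypothetical sample file to a temporary directory, because the real file.txt contents are not shown. It then expands each filename into its lines with flat_map() and tf.data.TextLineDataset. Next, it drops empty lines with filter(); this condition is illustrative and not necessarily the slide's. Finally, it splits each line into words with tf.strings.split.

```python
import os
import tempfile
import tensorflow as tf

# Hypothetical sample file; the slide reads "file.txt", whose contents are not shown
path = os.path.join(tempfile.mkdtemp(), "file.txt")
with open(path, "w") as f:
    f.write("this is file line #1\n\nthis is file line #2\n")

ds = tf.data.Dataset.from_tensor_slices([path])

# flat_map(): each filename expands into a dataset of its lines (scalar strings)
ds = ds.flat_map(lambda filename: tf.data.TextLineDataset(filename))

# filter(): drop empty lines (an illustrative condition)
ds = ds.filter(lambda line: tf.strings.length(line) > 0)

# map(): split each scalar line on whitespace into a 1-D tensor of words
ds = ds.map(lambda line: tf.strings.split(line))

words = [v.tolist() for v in ds.as_numpy_iterator()]
for value in ds:
    print("value:", value)
```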

![Tokenizers and tf.text](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-57-2048.jpg)

```python
import tensorflow as tf  # NB: requires TF 2
import tensorflow_text as text
# pip3 install -q tensorflow-text

docs = tf.data.Dataset.from_tensor_slices(
    [['Chicago Pizza'],
     ["how are you"]])

tokenizer = text.WhitespaceTokenizer()
token_docs = docs.map(
    lambda x: tokenizer.tokenize(x))
```

![Tokenizers and tf.text](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-58-2048.jpg)

```python
iterator = iter(token_docs)
print(next(iterator).to_list())  # [[b'Chicago', b'Pizza']]
print(next(iterator).to_list())  # [[b'how', b'are', b'you']]
```
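For plain whitespace splitting of ASCII text, core TensorFlow can produce the same tokens without the tensorflow-text package. The sketch below is a swapped-in alternative to WhitespaceTokenizer and assumes a recent TF 2.x release. Applied to a shape-(1,) string element, tf.strings.split returns a RaggedTensor of shape (1, None), which to_list() turns into nested Python lists.

```python
import tensorflow as tf

docs = tf.data.Dataset.from_tensor_slices([["Chicago Pizza"], ["how are you"]])

# tf.strings.split (core TF) splits on whitespace by default;
# on a shape-(1,) input it returns a RaggedTensor of shape (1, None)
token_docs = docs.map(lambda x: tf.strings.split(x))

tokens = [t.to_list() for t in token_docs]
print(tokens[0])  # [[b'Chicago', b'Pizza']]
print(tokens[1])  # [[b'how', b'are', b'you']]
```

tensorflow-text remains the better fit when you need its other tokenizers (for example, subword tokenizers).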

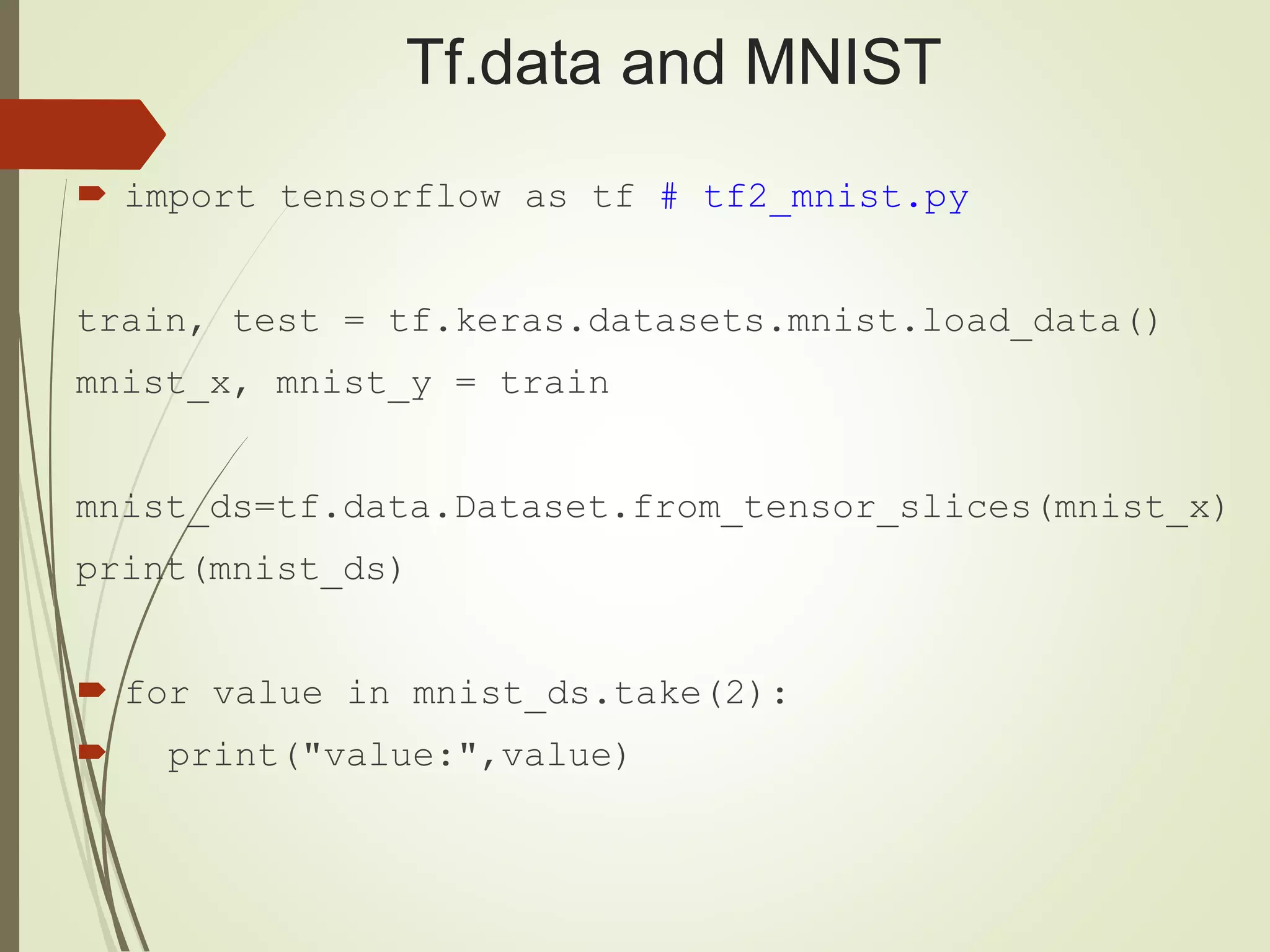

![tf.data and MNIST](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-60-2048.jpg)

```text
value: tf.Tensor(
[[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 3 18 18 18 126 136 175 26 166 255 247 127 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 30 36 94 154 170 253 253 253 253 253 225 172 253 242 195 64 0 0 0 0]
 [ 0 0 0 0 0 0 0 49 238 253 253 253 253 253 253 253 253 251 93 82 82 56 39 0 0 0 0 0]
```
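The output above shows the raw uint8 pixel rows of one MNIST image. The sketch below shows a typical pipeline built around such images; it is not taken from the slides. It scales pixels to [0, 1], shuffles, and batches. To run offline it uses a small random stand-in with MNIST's shapes; the real data comes from tf.keras.datasets.mnist.load_data(), which downloads the dataset.

```python
import numpy as np
import tensorflow as tf

# Stand-in for MNIST: real data would come from tf.keras.datasets.mnist.load_data(),
# which returns uint8 images of shape (28, 28). Random pixels keep this sketch offline.
rng = np.random.default_rng(0)
x_train = rng.integers(0, 256, size=(100, 28, 28)).astype(np.uint8)
y_train = rng.integers(0, 10, size=(100,)).astype(np.uint8)

ds = tf.data.Dataset.from_tensor_slices((x_train, y_train))

# Scale pixels from [0, 255] to [0.0, 1.0], then shuffle and batch
ds = (ds.map(lambda img, label: (tf.cast(img, tf.float32) / 255.0, label))
        .shuffle(buffer_size=100)
        .batch(32))

images, labels = next(iter(ds))
print(images.shape, images.dtype)
```

With 100 stand-in images and a batch size of 32, the pipeline yields three full batches and one batch of 4.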

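![TF 2 tf.data.TFRecordDataset](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-63-2048.jpg)

```python
import tensorflow as tf

# tf_records / a_tf_record: path(s) to TFRecord files; your_pre_processing:
# a function that parses each serialized record (placeholders on the slide)
ds = tf.data.TFRecordDataset(tf_records)
ds = ds.map(your_pre_processing)
ds = ds.batch(batch_size=32)

# OR, as a single chained expression:
ds = (tf.data.TFRecordDataset(a_tf_record)
      .map(your_pre_processing)
      .batch(batch_size=32))

model = ...  # [Keras] model, elided on the slide
model.fit(ds, epochs=20)
```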
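The slide leaves the TFRecord file and the pre-processing function as placeholders. The self-contained sketch below fills them with hypothetical stand-ins. It writes ten tf.train.Example records (each holding one int64 feature "x") to a temporary file. It then parses them with tf.io.parse_single_example inside map() and batches them. The feature name and record contents are assumptions for illustration.

```python
import os
import tempfile
import tensorflow as tf

# Write a small hypothetical TFRecord file holding ten tf.train.Example protos
path = os.path.join(tempfile.mkdtemp(), "sample.tfrecord")
with tf.io.TFRecordWriter(path) as writer:
    for i in range(10):
        example = tf.train.Example(features=tf.train.Features(feature={
            "x": tf.train.Feature(int64_list=tf.train.Int64List(value=[i])),
        }))
        writer.write(example.SerializeToString())

# Hypothetical pre-processing: parse one serialized Example and return its "x" value
feature_spec = {"x": tf.io.FixedLenFeature([], tf.int64)}

def parse_example(record):
    parsed = tf.io.parse_single_example(record, feature_spec)
    return parsed["x"]

ds = (tf.data.TFRecordDataset([path])
        .map(parse_example)
        .batch(batch_size=4))

batch_sizes = [int(b.shape[0]) for b in ds]
values = [int(v) for b in ds for v in b.numpy()]
print(batch_sizes)  # [4, 4, 2]
print(values)       # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

The final batch is smaller because 10 records do not divide evenly by 4. Passing drop_remainder=True to batch() discards it instead.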

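![Use prefetch() for Performance](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-64-2048.jpg)

```python
import tensorflow as tf

# X: how many elements (here, batches) to buffer ahead; tf.data.AUTOTUNE
# (tf.data.experimental.AUTOTUNE before TF 2.4) lets TensorFlow tune it
ds = (tf.data.TFRecordDataset(tf_records)
      .map(your_pre_processing)
      .batch(batch_size=32)
      .prefetch(buffer_size=X))

model = ...  # [Keras]
model.fit(ds, epochs=20)
```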
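prefetch() overlaps producing the next element with consuming the current one, so it affects timing, not content. Placed after batch(), its buffer counts batches rather than individual examples. The minimal sketch below, assuming TF 2.4 or later for tf.data.AUTOTUNE, confirms that a prefetched pipeline yields exactly the same batches as one without prefetch().

```python
import tensorflow as tf

AUTOTUNE = tf.data.AUTOTUNE  # TF >= 2.4; earlier 2.x: tf.data.experimental.AUTOTUNE

def make_pipeline():
    # Double each value in 0..99 and group into batches of 32
    return tf.data.Dataset.range(100).map(lambda v: v * 2).batch(32)

plain = [b.tolist() for b in make_pipeline().as_numpy_iterator()]

# prefetch() keeps up to AUTOTUNE-chosen batches ready in a background buffer
prefetched_ds = make_pipeline().prefetch(buffer_size=AUTOTUNE)
prefetched = [b.tolist() for b in prefetched_ds.as_numpy_iterator()]

print(plain == prefetched)  # True
```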

![Parallelize Data Transformations](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-65-2048.jpg)

```python
import tensorflow as tf

# Y: number of elements to pre-process in parallel (tf.data.AUTOTUNE is also accepted)
ds = (tf.data.TFRecordDataset(tf_records)
      .map(preprocess, num_parallel_calls=Y)
      .batch(batch_size=32)
      .prefetch(buffer_size=X))

model = ...  # [Keras]
model.fit(ds, epochs=20)
```

=> uses background threads & an internal buffer
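Setting num_parallel_calls runs the mapped function on several elements concurrently. With the default deterministic setting, the output order still matches the input order. The minimal sketch below, assuming TF 2.4 or later, compares a sequential and a parallel map(). The preprocess function here is a hypothetical stand-in for real work. In TF 2.2 and later, map() also accepts deterministic=False, which allows out-of-order output in exchange for possible throughput gains.

```python
import tensorflow as tf

AUTOTUNE = tf.data.AUTOTUNE  # TF >= 2.4

def preprocess(v):
    # Hypothetical stand-in for real pre-processing work
    return tf.cast(v, tf.float32) * 0.5

base = tf.data.Dataset.range(20)
sequential = base.map(preprocess)
parallel = base.map(preprocess, num_parallel_calls=AUTOTUNE)

# With the default deterministic setting, parallel map() preserves input order
seq_vals = [float(v) for v in sequential.as_numpy_iterator()]
par_vals = [float(v) for v in parallel.as_numpy_iterator()]
print(seq_vals == par_vals)  # True
```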

![Parallelize Data “Readers”](https://image.slidesharecdn.com/h2osftf2data-200219055337/75/Working-with-tf-data-TF-2-66-2048.jpg)

```python
import tensorflow as tf

# Z: number of files to read in parallel (the parameter is num_parallel_reads)
ds = (tf.data.TFRecordDataset(tf_records, num_parallel_reads=Z)
      .map(preprocess, num_parallel_calls=Y)
      .batch(batch_size=32)
      .prefetch(buffer_size=X))

model = ...  # [Keras]
model.fit(ds, epochs=20)
```

=> for sharded data (records split across several TFRecord files)
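num_parallel_reads only helps when the records are spread across several files (shards). The self-contained sketch below writes three small hypothetical shards of raw byte strings to a temporary directory and reads them back with num_parallel_reads=3. Records from different shards are interleaved, so the sketch sorts them before checking that every record arrived. The shard names and contents are assumptions for illustration.

```python
import os
import tempfile
import tensorflow as tf

# Write three hypothetical shard files, each holding four raw byte strings
shard_dir = tempfile.mkdtemp()
shards = []
for s in range(3):
    path = os.path.join(shard_dir, f"data-{s:05d}.tfrecord")
    with tf.io.TFRecordWriter(path) as writer:
        for i in range(4):
            writer.write(f"shard{s}-rec{i}".encode("utf-8"))
    shards.append(path)

# num_parallel_reads > 1 reads several files concurrently; records from
# different shards are interleaved, so the overall order is not file order
ds = tf.data.TFRecordDataset(shards, num_parallel_reads=3)

records = sorted(r.decode("utf-8") for r in ds.as_numpy_iterator())
print(len(records))  # 12
```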